ploeh blog danish software design

An abstract example of refactoring from interaction-based to property-based testing

A C# example with xUnit.net and CsCheck

This is the first comprehensive example that accompanies the article Epistemology of interaction testing. In that article, I argue that in a code base that leans toward functional programming (FP), property-based testing is a better fit than interaction-based testing. In this example, I will show how to refactor simple interaction-based tests into property-based tests.

This small article series was prompted by an email from Sergei Rogovtsev, who was kind enough to furnish example code. I'll use his code as a starting point for this example, so I've forked the repository. If you want to follow along, all my work is in a branch called no-mocks. That branch simply continues off the master branch.

Interaction-based testing #

Sergei Rogovtsev writes:

"A major thing to point out here is that I'm not following TDD here not by my own choice, but because my original question arose in a context of a legacy system devoid of tests, so I choose to present it to you in the same way. I imagine that working from tests would avoid a lot of questions."

Even when using test-driven development (TDD), most code bases I've seen make use of Stubs and Mocks (or, rather, Spies). In an object-oriented context this can make much sense. After all, a catch phrase of object-oriented programming is tell, don't ask.

If you base API design on that principle, you're modelling side effects, and it makes sense that tests use Spies to verify those side effects. The book Growing Object-Oriented Software, Guided by Tests is a good example of this approach. Thus, even if you follow established good TDD practice, you could easily arrive at a code base reminiscent of Sergei Rogovtsev's example. I've written plenty of such code bases myself.

Sergei Rogovtsev then extracts a couple of components, leaving him with a Controller class looking like this:

public string Complete(string state, string code)
{
    var knownState = _repository.GetState(state);
    try
    {
        if (_stateValidator.Validate(code, knownState))
            return _renderer.Success(knownState);
        else
            return _renderer.Failure(knownState);
    }
    catch (Exception e)
    {
        return _renderer.Error(knownState, e);
    }
}

This code snippet doesn't show the entire class, but only its solitary action method. Keep in mind that the entire repository is available on GitHub if you want to see the surrounding code.

The Complete method orchestrates three injected dependencies: _repository, _stateValidator, and _renderer. The question that Sergei Rogovtsev asks is how to test this method. You may think that it's so simple that you don't need to test it, but keep in mind that this is a minimal and self-contained example that stands in for something more complicated.

The method has a cyclomatic complexity of 3, so you need at least three test cases. That's also what Sergei Rogovtsev's code contains. I'll show each test case in turn, while I refactor them.

The overall question is still this: Both IStateValidator and IRenderer interfaces have only a single production implementation, and in both cases the implementations are pure functions. If interaction-based testing is suboptimal, is there a better way to test this code?

As I outlined in the introductory article, I consider property-based testing a good alternative. In the following, I'll refactor the tests. Since the tests already use AutoFixture, most of the preliminary work can be done without choosing a property-based testing framework. I'll postpone that decision until I need it.

State validator #

The IStateValidator interface has a single implementation:

public class StateValidator : IStateValidator
{
    public bool Validate(string code, (string expectedCode, bool isMobile, Uri redirect) knownState)
        => code == knownState.expectedCode;
}

The Validate method is a pure function, so it's completely deterministic. It means that you don't have to hide it behind an interface and replace it with a Test Double in order to control it. Rather, just feed it proper data. Still, that's not what the interaction-based tests do:

[Theory]
[AutoData]
public void HappyPath(string state, string code, (string, bool, Uri) knownState, string response)
{
    _repository.Add(state, knownState);
    _stateValidator
        .Setup(validator => validator.Validate(code, knownState))
        .Returns(true);
    _renderer
        .Setup(renderer => renderer.Success(knownState))
        .Returns(response);

    _target
        .Complete(state, code)
        .Should().Be(response);
}

These tests use AutoFixture, which will make it a bit easier to refactor them to properties. It also makes the test a bit more abstract, since you don't get to see concrete test data. In short, the [AutoData] attribute will generate a random state string, a random code string, and so on. If you want to see an example with concrete test data, the next article shows that variation.

The test uses Moq to control the behaviour of the Test Doubles. It states that the Validate method will return true when called with certain arguments. This is possible because you can redefine its behaviour, but as far as executable specifications go, this test doesn't reflect reality. There's only one Validate implementation, and it doesn't behave like that. Rather, it'll return true when code is equal to knownState.expectedCode. The test poorly communicates that behaviour.
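For contrast: because Validate is pure, a test can steer it just by choosing its inputs, with no stubbing. The sketch below is minimal. It copies the StateValidator shown above without the IStateValidator interface, so that it compiles on its own. The DerivedCodes class is a hypothetical helper and isn't part of the repository.

```csharp
using System;

// Copy of the StateValidator shown above, minus the IStateValidator
// interface, so that this sketch compiles on its own.
public class StateValidator
{
    public bool Validate(
        string code,
        (string expectedCode, bool isMobile, Uri redirect) knownState) =>
        code == knownState.expectedCode;
}

// Hypothetical helpers (not from the repository): derive a code from
// knownState instead of asking a Stub to pretend.
public static class DerivedCodes
{
    // A code the real validator accepts: the expected code itself.
    public static string Matching(
        (string expectedCode, bool isMobile, Uri redirect) knownState) =>
        knownState.expectedCode;

    // A code the real validator rejects. Appending a character always
    // yields a string that differs from expectedCode.
    public static string NonMatching(
        (string expectedCode, bool isMobile, Uri redirect) knownState) =>
        knownState.expectedCode + "1";
}
```

The test data now states the rule the production code implements: a code is valid exactly when it equals the expected code.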

Even before I replace AutoFixture with CsCheck, I'll prepare the test by making it more honest. I'll replace the code parameter with a Derived Value:

[Theory]
[AutoData]
public void HappyPath(string state, (string, bool, Uri) knownState, string response)
{
    var (expectedCode, _, _) = knownState;
    var code = expectedCode;
    // The rest of the test...

I've removed the code parameter to replace it with a variable derived from knownState. Notice how this documents the overall behaviour of the (sub-)system.

This also means that I can now replace the IStateValidator Test Double with the real, pure implementation:

[Theory]
[AutoData]
public void HappyPath(string state, (string, bool, Uri) knownState, string response)
{
    var (expectedCode, _, _) = knownState;
    var code = expectedCode;
    _repository.Add(state, knownState);
    _renderer
        .Setup(renderer => renderer.Success(knownState))
        .Returns(response);
    var sut = new Controller(_repository, new StateValidator(), _renderer.Object);

    sut
        .Complete(state, code)
        .Should().Be(response);
}

I give the Failure test case the same treatment:

[Theory]
[AutoData]
public void Failure(string state, (string, bool, Uri) knownState, string response)
{
    var (expectedCode, _, _) = knownState;
    var code = expectedCode + "1"; // Any extra string will do
    _repository.Add(state, knownState);
    _renderer
        .Setup(renderer => renderer.Failure(knownState))
        .Returns(response);
    var sut = new Controller(_repository, new StateValidator(), _renderer.Object);

    sut
        .Complete(state, code)
        .Should().Be(response);
}

The third test case is a bit more interesting.

An impossible case #

Before I make any changes to it, the third test case is this:

[Theory]
[AutoData]
public void Error(
    string state,
    string code,
    (string, bool, Uri) knownState,
    Exception e,
    string response)
{
    _repository.Add(state, knownState);
    _stateValidator
        .Setup(validator => validator.Validate(code, knownState))
        .Throws(e);
    _renderer
        .Setup(renderer => renderer.Error(knownState, e))
        .Returns(response);

    _target
        .Complete(state, code)
        .Should().Be(response);
}

This test case verifies the behaviour of the Controller class when the Validate method throws an exception. If we want to instead use the real, pure implementation, how can we get it to throw an exception? Consider it again:

public bool Validate(string code, (string expectedCode, bool isMobile, Uri redirect) knownState)
    => code == knownState.expectedCode;

As far as I can tell, there's no way to get this method to throw an exception. You might suggest passing null as the knownState parameter, but that's not possible. This is a new version of C# and the nullable reference types feature is turned on. I spent some fifteen minutes trying to convince the compiler to pass a null argument in place of knownState, but I couldn't make it work in a unit test.

That's interesting. The Error test is exercising a code path that's impossible in production. Is it redundant?

It might be, but here I think that it's more an artefact of the process. Sergei Rogovtsev has provided a minimal example, and as it sometimes happens, perhaps it's a bit too minimal. He did write, however, that he considered it essential for the example that the logic involved more than a Boolean true/false condition. In order to keep with the spirit of the example, then, I'm going to modify the Validate method so that it's also possible to make it throw an exception:

public bool Validate(string code, (string expectedCode, bool isMobile, Uri redirect) knownState)
{
    if (knownState == default)
        throw new ArgumentNullException(nameof(knownState));

    return code == knownState.expectedCode;
}

The method now throws an exception if you pass it a default value for knownState. From an implementation standpoint, there's no reason to do this, so it's only for the sake of the example. You can now test how the Controller handles an exception:

[Theory]
[AutoData]
public void Error(string state, string code, string response)
{
    _repository.Add(state, default);
    _renderer
        .Setup(renderer => renderer.Error(default, It.IsAny<Exception>()))
        .Returns(response);
    var sut = new Controller(_repository, new StateValidator(), _renderer.Object);

    sut
        .Complete(state, code)
        .Should().Be(response);
}

The test no longer has a reference to the specific Exception object that Validate is going to throw, so instead it has to use Moq's It.IsAny API to configure the _renderer. This is, however, only an interim step, since it's now time to treat that dependency in the same way as the validator.

Renderer #

The Renderer class has three methods, and they are all pure functions:

public class Renderer : IRenderer
{
    public string Success((string expectedCode, bool isMobile, Uri redirect) knownState)
    {
        if (knownState.isMobile)
            return "{\"success\": true, \"redirect\": \"" + knownState.redirect + "\"}";
        else
            return "302 Location: " + knownState.redirect;
    }

    public string Failure((string expectedCode, bool isMobile, Uri redirect) knownState)
    {
        if (knownState.isMobile)
            return "{\"success\": false, \"redirect\": \"login\"}";
        else
            return "302 Location: login";
    }

    public string Error((string expectedCode, bool isMobile, Uri redirect) knownState, Exception e)
    {
        if (knownState.isMobile)
            return "{\"error\": \"" + e.Message + "\"}";
        else
            return "500";
    }
}
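Because these three methods are pure, you can also check them directly: pass concrete inputs and compare the result with a literal expected string, with no Test Double involved. The sketch below copies the Renderer without its IRenderer interface. The RendererExamples class, the example URL, and the exception message are assumptions made for illustration.

```csharp
using System;

// Copy of the Renderer shown above, without the IRenderer interface,
// so that this sketch compiles on its own.
public class Renderer
{
    public string Success((string expectedCode, bool isMobile, Uri redirect) knownState)
    {
        if (knownState.isMobile)
            return "{\"success\": true, \"redirect\": \"" + knownState.redirect + "\"}";
        else
            return "302 Location: " + knownState.redirect;
    }

    public string Failure((string expectedCode, bool isMobile, Uri redirect) knownState)
    {
        if (knownState.isMobile)
            return "{\"success\": false, \"redirect\": \"login\"}";
        else
            return "302 Location: login";
    }

    public string Error((string expectedCode, bool isMobile, Uri redirect) knownState, Exception e)
    {
        if (knownState.isMobile)
            return "{\"error\": \"" + e.Message + "\"}";
        else
            return "500";
    }
}

// Hypothetical example-based checks: concrete arguments in, exact strings out.
public static class RendererExamples
{
    public static bool AllHold()
    {
        var renderer = new Renderer();
        var redirect = new Uri("https://example.com/home");
        var mobile = ("code", true, redirect);
        var web = ("code", false, redirect);
        var error = new InvalidOperationException("boom");

        return renderer.Success(mobile) == "{\"success\": true, \"redirect\": \"https://example.com/home\"}"
            && renderer.Success(web) == "302 Location: https://example.com/home"
            && renderer.Failure(mobile) == "{\"success\": false, \"redirect\": \"login\"}"
            && renderer.Failure(web) == "302 Location: login"
            && renderer.Error(mobile, error) == "{\"error\": \"boom\"}"
            && renderer.Error(web, error) == "500";
    }
}
```

Concatenating a string with a Uri calls Uri.ToString. The example URL already has a path, which avoids the trailing slash that Uri appends to a bare host.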

Since all three methods are deterministic, automated tests can control their behaviour simply by passing in the appropriate arguments:

[Theory]
[AutoData]
public void HappyPath(string state, (string, bool, Uri) knownState, string response)
{
    var (expectedCode, _, _) = knownState;
    var code = expectedCode;
    _repository.Add(state, knownState);
    var renderer = new Renderer();
    var sut = new Controller(_repository, renderer);

    var expected = renderer.Success(knownState);
    sut
        .Complete(state, code)
        .Should().Be(expected);
}

Instead of configuring an IRenderer Stub, the test can state the expected output: That the output is equal to the output that renderer.Success would return.

Notice that the test doesn't require that the implementation calls renderer.Success. It only requires that the output is equal to the output that renderer.Success would return. Thus, it has less of an opinion about the implementation, which means that it's marginally less coupled to it.

You might protest that the test now duplicates the implementation code. This is partially true, but no more than the previous incarnation of it. Before, the test used Moq to explicitly require that renderer.Success gets called. Now, there's still coupling, but this refactoring reduces it.

As a side note, this may partially be an artefact of the process. Here I'm refactoring tests while keeping the implementation intact. Had I started with a property, perhaps the test would have turned out differently, and less coupled to the implementation. If you're interested in a successful exercise in using property-based TDD, you may find my article Property-based testing is not the same as partition testing interesting.

Simplification #

Once you've refactored the tests to use the pure functions as dependencies, you no longer need the interfaces. The interfaces IStateValidator and IRenderer only existed to support testing. Now that the tests no longer use the interfaces, you can delete them.

Furthermore, once you've removed those interfaces, there's no reason for the classes to support instantiation. Instead, make them static:

public static class StateValidator
{
    public static bool Validate(
        string code,
        (string expectedCode, bool isMobile, Uri redirect) knownState)
    {
        if (knownState == default)
            throw new ArgumentNullException(nameof(knownState));

        return code == knownState.expectedCode;
    }
}

You can do the same for the Renderer class.

This doesn't change the overall flow of the Controller class' Complete method, although the implementation details have changed a bit:

public string Complete(string state, string code)
{
    var knownState = _repository.GetState(state);
    try
    {
        if (StateValidator.Validate(code, knownState))
            return Renderer.Success(knownState);
        else
            return Renderer.Failure(knownState);
    }
    catch (Exception e)
    {
        return Renderer.Error(knownState, e);
    }
}

StateValidator and Renderer are no longer injected dependencies, but rather 'modules' that afford pure functions.

Both the Controller class and the tests that cover it are simpler.
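Here is a minimal, self-contained sketch of the simplified design. It assumes a hypothetical dictionary-backed InMemoryRepository in place of the repository in the actual code base. HappyPathCheck approximates a property without a framework: it generates random inputs, derives the matching code, and uses no Test Doubles. Unlike a real property-based testing library, it doesn't shrink failing inputs. The default-value check uses ValueTuple's Equals method, which expresses the same condition as the `knownState == default` check above.

```csharp
using System;
using System.Collections.Generic;

// Static 'modules' mirroring the refactored StateValidator and Renderer.
public static class StateValidator
{
    public static bool Validate(
        string code, (string expectedCode, bool isMobile, Uri redirect) knownState)
    {
        if (knownState.Equals(default((string, bool, Uri))))
            throw new ArgumentNullException(nameof(knownState));
        return code == knownState.expectedCode;
    }
}

public static class Renderer
{
    public static string Success((string expectedCode, bool isMobile, Uri redirect) knownState) =>
        knownState.isMobile
            ? "{\"success\": true, \"redirect\": \"" + knownState.redirect + "\"}"
            : "302 Location: " + knownState.redirect;

    public static string Failure((string expectedCode, bool isMobile, Uri redirect) knownState) =>
        knownState.isMobile ? "{\"success\": false, \"redirect\": \"login\"}" : "302 Location: login";

    public static string Error(
        (string expectedCode, bool isMobile, Uri redirect) knownState, Exception e) =>
        knownState.isMobile ? "{\"error\": \"" + e.Message + "\"}" : "500";
}

// Hypothetical in-memory repository; the real one may look different.
public class InMemoryRepository
{
    private readonly Dictionary<string, (string expectedCode, bool isMobile, Uri redirect)> states =
        new Dictionary<string, (string expectedCode, bool isMobile, Uri redirect)>();

    public void Add(string state, (string expectedCode, bool isMobile, Uri redirect) knownState) =>
        states[state] = knownState;

    public (string expectedCode, bool isMobile, Uri redirect) GetState(string state) => states[state];
}

public class Controller
{
    private readonly InMemoryRepository _repository;

    // Only the repository is injected; validation and rendering are pure functions.
    public Controller(InMemoryRepository repository) => _repository = repository;

    public string Complete(string state, string code)
    {
        var knownState = _repository.GetState(state);
        try
        {
            if (StateValidator.Validate(code, knownState))
                return Renderer.Success(knownState);
            else
                return Renderer.Failure(knownState);
        }
        catch (Exception e)
        {
            return Renderer.Error(knownState, e);
        }
    }
}

// Framework-free approximation of the HappyPath property (no shrinking).
public static class HappyPathCheck
{
    public static bool Holds(int seed, int trials)
    {
        var random = new Random(seed);
        var urls = new[] { "https://example.com", "https://example.org" };
        for (var i = 0; i < trials; i++)
        {
            var state = "state" + random.Next();
            (string expectedCode, bool isMobile, Uri redirect) knownState =
                ("code" + random.Next(), random.Next(2) == 0, new Uri(urls[random.Next(urls.Length)]));
            var repository = new InMemoryRepository();
            repository.Add(state, knownState);
            var sut = new Controller(repository);

            var code = knownState.expectedCode; // Derived Value: the matching code
            if (sut.Complete(state, code) != Renderer.Success(knownState))
                return false;
        }
        return true;
    }
}
```

Try sabotaging Complete, for example by swapping the Success and Failure calls. Holds then returns false.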

Properties #

So far I've been able to make all these changes without introducing a property-based testing framework. This was possible because the tests already used AutoFixture, which, while not a property-based testing framework, already strongly encourages you to write tests without literal test data.

This makes it easy to make the final change to property-based testing. On the other hand, it's a bit unfortunate from a pedagogical perspective. This means that you didn't get to see how to refactor a 'traditional' unit test to a property. The next article in this series will plug that hole, as well as show a more realistic example.

It's now time to pick a property-based testing framework. On .NET you have a few choices. Since this code base is C#, you may consider a framework written in C#. I'm not convinced that this is necessarily better, but it's a worthwhile experiment. Here I've used CsCheck.

Since the tests already used randomly generated test data, the conversion to CsCheck is relatively straightforward. I'm only going to show one of the tests. You can always find the rest of the code in the Git repository.

[Fact]
public void HappyPath()
{
    (from state in Gen.String
     from expectedCode in Gen.String
     from isMobile in Gen.Bool
     let urls = new[] { "https://example.com", "https://example.org" }
     from redirect in Gen.OneOfConst(urls).Select(s => new Uri(s))
     select (state, (expectedCode, isMobile, redirect)))
    .Sample((state, knownState) =>
    {
        var (expectedCode, _, _) = knownState;
        var code = expectedCode;
        var repository = new RepositoryStub();
        repository.Add(state, knownState);
        var sut = new Controller(repository);

        var expected = Renderer.Success(knownState);
        sut
            .Complete(state, code)
            .Should().Be(expected);
    });
}

Compared to the AutoFixture version of the test, this looks more complicated. Part of it is that CsCheck (as far as I know) doesn't have the same integration with xUnit.net that AutoFixture has. That might be an issue that someone could address; after all, FsCheck has framework integration, to name an example.

Test data generators are monads, so you typically leverage whatever syntactic sugar a language offers to simplify monadic composition. In C# that syntactic sugar is query syntax, which explains that initial from block.

The test does look too top-heavy for my taste. An equivalent problem appears in the next article, where I also try to address it. In general, the better monad support a language offers, the more elegantly you can address this kind of problem. C# isn't really there yet, whereas languages like F# and Haskell offer superior alternatives.
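Why does query syntax work for generators at all? The C# compiler translates from clauses into calls to methods named Select and SelectMany, so any type that supplies those methods gets the syntax. The sketch below uses a deliberately tiny, hypothetical Gen<T> type. It is not CsCheck's Gen, whose API is much richer. The sketch shows a two-clause query next to the method calls it compiles into.

```csharp
using System;

// Hypothetical, minimal generator type: a function from a random source to a value.
public sealed class Gen<T>
{
    private readonly Func<Random, T> generate;
    public Gen(Func<Random, T> generate) => this.generate = generate;
    public T Generate(Random random) => generate(random);

    // Enables 'select' (and 'let').
    public Gen<TResult> Select<TResult>(Func<T, TResult> selector) =>
        new Gen<TResult>(r => selector(generate(r)));

    // Monadic bind with a projection; enables multiple 'from' clauses.
    public Gen<TResult> SelectMany<U, TResult>(
        Func<T, Gen<U>> bind, Func<T, U, TResult> project) =>
        new Gen<TResult>(r =>
        {
            var t = generate(r);
            var u = bind(t).Generate(r);
            return project(t, u);
        });
}

public static class Gen
{
    public static Gen<bool> Bool => new Gen<bool>(r => r.Next(2) == 0);

    public static Gen<T> OneOfConst<T>(params T[] values) =>
        new Gen<T>(r => values[r.Next(values.Length)]);
}

public static class Example
{
    // Query syntax...
    public static readonly Gen<(bool isMobile, Uri redirect)> KnownStateParts =
        from isMobile in Gen.Bool
        from url in Gen.OneOfConst("https://example.com", "https://example.org")
        select (isMobile: isMobile, redirect: new Uri(url));

    // ...and the method calls the compiler translates it into.
    public static readonly Gen<(bool isMobile, Uri redirect)> Desugared =
        Gen.Bool.SelectMany(
            isMobile => Gen.OneOfConst("https://example.com", "https://example.org"),
            (isMobile, url) => (isMobile: isMobile, redirect: new Uri(url)));
}
```

Given the same seed, both forms draw the same random numbers in the same order, so they produce the same values.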

Conclusion #

In this article I've tried to demonstrate how property-based testing is a viable alternative to using Stubs and Mocks for verification of composition. You can try to sabotage the Controller.Complete method in the no-mocks branch and see that one or more properties will fail.

While the example code base that I've used for this article has the strength of being small and self-contained, it also suffers from a few weaknesses. It's perhaps a bit too abstract to truly resonate. It also uses AutoFixture to generate test data, which already takes it halfway towards property-based testing. While that makes the refactoring easier, it also means that it may not fully demonstrate how to refactor an example-based test to a property. I'll try to address these shortcomings in the next article.

Next: A restaurant example of refactoring from example-based to property-based testing.

More functional pits of success

FAQ: What are the other pits of success of functional programming?

People who have seen my presentation Functional architecture: the pits of success occasionally write to ask: What are the other pits?

The talk is about some of the design goals that we often struggle with in object-oriented programming, but which tend to happen automatically in functional programming (FP). In the presentation I cover three pits of success, but I also mention that there are more. In a one-hour conference presentation, I simply didn't have time to discuss more than three.

It's a natural question, then: What are the pits of success that I don't cover in the talk?

I've been digging through my notes and found the following:

- Parallelism

- Ports and adapters

- Services, Entities, Value Objects

- Testability

- Composition

- Package and component principles

- CQS

- Encapsulation

Finding a lost list like this, more than six years after I jotted it down, presents a bit of a puzzle to me, too. In this post, I'll see if I can reconstruct some of the points.

Parallelism #

When most things are immutable you don't have to worry about multiple threads updating the same shared resource. Much has already been said and written about this in the context of functional programming, to the degree that for some people, it's the main (or only?) reason to adopt FP.

Even so, I had (and still have) a version of the presentation that included this advantage. When I realised that I had to cut some content for time, it was easy to cut this topic in favour of other benefits. After all, this one was already well known in 2016.

Ports and adapters #

This was one of the three benefits I kept in the talk. I've also covered it on my blog in the article Functional architecture is Ports and Adapters.

Services, Entities, Value Objects #

This was the second topic I included in the talk. I don't think that there's an explicit article here on the blog that deals with this particular subject matter, so if you want the details, you'll have to view the recording.

In short, though, what I had in mind was that Domain-Driven Design explicitly distinguishes between Services, Entities, and Value Objects, which often seems to pull in the opposite direction of the object-oriented notion of data with behaviour. In FP, on the contrary, it's natural to separate data from behaviour. Since behaviour often implements business logic, and since business logic tends to change at a different rate than data, it's a good idea to keep them apart.

Testability #

The third pit of success I covered in the talk was testability. I've also covered this here on the blog: Functional design is intrinsically testable. Pure functions are trivial to test: Supply some input and verify the output.

Composition #

Pure functions compose. In the simplest case, use the return value from one function as input for another function. In more complex cases, you may need various combinators in order to be able to 'click' functions together.

I don't have a single article about this. Rather, I have scores: From design patterns to category theory.
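In the simplest case, composition is just applying one function after another. Below is a minimal C# sketch. The Compose extension method and the example functions are hypothetical illustrations and aren't taken from the articles mentioned above.

```csharp
using System;

public static class Composition
{
    // The simplest way to 'click' two pure functions together:
    // feed the return value of f into g.
    public static Func<A, C> Compose<A, B, C>(this Func<A, B> f, Func<B, C> g) =>
        x => g(f(x));
}

// Hypothetical example functions, chosen only to illustrate composition.
public static class Examples
{
    public static readonly Func<string, int> Length = s => s.Length;
    public static readonly Func<int, bool> IsEven = n => n % 2 == 0;

    // string -> int -> bool, built from the two functions above.
    public static readonly Func<string, bool> HasEvenLength = Length.Compose(IsEven);
}
```

Because both functions are pure, the composed function is pure too, and you can test it the same way: supply input, verify output.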

Package and component principles #

When it comes to this one, I admit, I no longer remember what I had in mind. Perhaps I was thinking about Scott Wlaschin's studies of cycles and modularity. Perhaps I did, again, have my article about Ports and Adapters in mind, or perhaps it was my later articles on dependency rejection that already stirred.

CQS #

The Command Query Separation principle states that an operation (i.e. a method) should be either a Command or a Query, but not both. In most programming languages, the onus is on you to maintain that discipline. It's not a design principle that comes easy to most object-oriented programmers.

Functional programming, on the other hand, emphasises pure functions, which are all Queries. Commands have side effects, and pure functions can't have side effects. Haskell even makes sure to type-check that pure functions don't perform side effects. If you're interested in a C# explanation of how that works, see The IO Container.

What impure actions remain after you've implemented most of your code base as pure functions can violate or follow CQS as you see fit. I usually still follow that principle, but since the impure parts of a functional code base tend to be fairly small and isolated to the edges of the application, even if you decide to violate CQS there, it probably makes little difference.

Encapsulation #

Functional programmers don't talk much about encapsulation, but you'll often hear them say that we should make illegal states unrepresentable. I recently wrote an article that explains that this tends to originate from the same motivation: Encapsulation in Functional Programming.

In languages like F# and Haskell, most type definitions require a single line of code, in contrast to object-oriented programming where types are normally classes, which take up a whole file each. This makes it much easier and more succinct to define constructive data in proper FP languages.
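C# has no one-line sum types, but a closed class hierarchy roughly approximates making illegal states unrepresentable. The sketch below uses a hypothetical ContactInfo type that must carry an email address, a postal address, or both. Its private constructor means that only the nested classes can derive from it, so a 'no contact information' case can't be constructed. The sketch doesn't guard against null strings. Compare its length with the one-line type definitions mentioned above.

```csharp
using System;

// Hypothetical type: email only, postal only, or both, but never neither.
public abstract class ContactInfo
{
    // Private: only the nested classes below can derive from ContactInfo.
    private ContactInfo() { }

    public sealed class EmailOnly : ContactInfo
    {
        public EmailOnly(string email) => Email = email;
        public string Email { get; }
    }

    public sealed class PostalOnly : ContactInfo
    {
        public PostalOnly(string postal) => Postal = postal;
        public string Postal { get; }
    }

    public sealed class EmailAndPostal : ContactInfo
    {
        public EmailAndPostal(string email, string postal)
        {
            Email = email;
            Postal = postal;
        }
        public string Email { get; }
        public string Postal { get; }
    }

    // Consumers handle each of the three legal cases explicitly.
    public string Describe() => this switch
    {
        EmailOnly e => "email: " + e.Email,
        PostalOnly p => "post: " + p.Postal,
        EmailAndPostal b => "email: " + b.Email + ", post: " + b.Postal,
        _ => throw new InvalidOperationException("Unreachable: the hierarchy is closed.")
    };
}
```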

Furthermore, perhaps most importantly, pure functions are referentially transparent, and referential transparency fits in your head.

Conclusion #

In a recording of the talk titled Functional architecture: the pits of success I explain that the presentation only discusses three pits of success, but that there are more. Consulting my notes, I found five more that I didn't cover. I've now tried to remedy this lapse.

I don't, however, believe that this list is exhaustive. Why should it be?

On trust in software development

Can you trust your colleagues to write good code? Can you trust yourself?

I've recently noticed a trend among some agile thought leaders. They talk about trust and gatekeeping. It goes something like this:

Why put up barriers to prevent people from committing code? Don't you trust your colleagues?

Gated check-ins, pull requests, reviews are a sign of a dysfunctional organisation.

I'm deliberately paraphrasing. While I could cite multiple examples, I wish to engage with the idea rather than the people who propose it. Thus, I apologise for the seeming use of weasel words in the above paragraph, but my agenda is the opposite of appealing to anonymous authority.

If someone asks me: "Don't you trust your colleagues?", my answer is:

No, I don't trust my colleagues, as I don't trust myself.

Framing #

I don't trust myself to write defect-free code. I don't trust that I've always correctly understood the requirements. I don't trust that I've written the code in the best possible way. Why should I trust my colleagues to be superhumanly perfect?

The trust framing is powerful because few people like to be labeled as mistrusting. When asked "don't you trust your colleagues?" you don't want to answer in the affirmative. You don't want to come across as suspicious or paranoid. You want to belong.

The need to belong is fundamental to human nature. When asked if you trust your colleagues, saying "no" implicitly disassociates you from the group.

Sometimes the trust framing goes one step further and labels processes such as code reviews or pull requests as gatekeeping. This is still the same framing, but now turns the group dynamics around. Now the question isn't whether you belong, but whether you're excluding others from the group. Most people (me included) want to be nice people, and excluding other people is bullying. Since you don't want to be a bully, you don't want to be a gatekeeper.

Framing a discussion about software engineering as one of trust and belonging is powerful and seductive. You're inclined to accept arguments made from that position, and you may not discover the sleight of hand. It's subliminal.

Most likely, it's such a fundamental and subconscious part of human psychology that the thought leaders who make the argument don't realise what they are doing. Many of them are professionals that I highly respect; people with more merit, experience, and education than I have. I don't think they're deliberately trying to put one over you.

I do think, on the other hand, that this is an argument to be challenged.

Two kinds of trust #

On the surface, the trust framing seems to be about belonging, or its opposite, exclusion. It implies that if you don't trust your co-workers, you suspect them of malign intent. Organisational dysfunction, it follows, is a Hobbesian state of nature where everyone is out for themselves: Expect your colleague to be a back-stabbing liar out to get you.

Indeed, the word trust implies that, too, but that's usually not the reason to introduce guardrails and checks to a software engineering process.

Rather, another fundamental human characteristic is fallibility. We make mistakes in all sorts of ways, and we don't make them from malign intent. We make them because we're human.

Do we trust our colleagues to make no mistakes? Do we trust that our colleagues have perfect knowledge of requirements, goals, architecture, coding standards, and so on? I don't, just as I don't trust myself to have those qualities.

This interpretation of trust is, I believe, better aligned with software engineering. If we institute formal sign-offs, code reviews, and other guardrails, it's not that we suspect co-workers of ill intent. Rather, we're trying to prevent mistakes.

Two wrongs... #

That's not to say that all guardrails are necessary all of the time. The thought leaders I so vaguely refer to will often present alternatives: Pair programming instead of pull requests. Indeed, that can be an efficient and confidence-inducing way to work, in certain contexts. I describe advantages as well as disadvantages in my book Code That Fits in Your Head.

I've warned about the trust framing, but that doesn't mean that pull requests, code reviews, gated check-ins, or feature branches are always a good idea. Just because one argument is flawed, it doesn't mean that the message is wrong. It could be correct for other reasons.

I agree with the likes of Martin Fowler and Dave Farley that feature branching is a bad idea, and that you should adopt Continuous Delivery. Accelerate strongly suggests that.

I also agree that pull requests and formal reviews with sign-offs, as they're usually practised, are at odds with even Continuous Integration. Again, be aware of common pitfalls in logic. Just because one way to do reviews is counter-productive, it doesn't follow that all reviews are bad.

As I have outlined in another article, under the right circumstances, agile pull requests are possible. I've had good results with pull requests like that. Reviewing was everyone's job, and we integrated multiple times a day.

Is that way to work always possible? Is it always the best way to work? No, of course not. Context matters. I've worked with another team where it was evident that that process had little chance of working. On the other hand, that team wasn't keen on pair programming either. Then what do you do?

Mistakes were made #

I rarely have reason to believe that co-workers have malign intent. When we are working together towards a common goal, I trust that they have as much interest in reaching that goal as I have.

Does that trust mean that everyone is free to do whatever they want? Of course not. Even with the best of intentions, we make mistakes, there are misunderstandings, or we have incomplete information.

This is one among several reasons I practice test-driven development (TDD). Writing a test before implementation code catches many mistakes early in the process. In this context, the point is that I don't trust myself to be perfect.

Even with TDD and the best of intentions, there are other reasons to look at other people's work.

Last year, I did some freelance programming for a customer, and sometimes I would receive feedback that a function I'd included in a pull request already existed in the code base. I didn't have that knowledge, but the review caught it.

Could we have caught that with pair or ensemble programming? Yes, that would work too. There's more than one way to make things work, and they tend to be context-dependent.

If everyone on a team has the luxury of being able to work together, then pair or ensemble programming is an efficient way to coordinate work. Little extra process may be required, because everyone is already on the same page.

If team members are less fortunate, or have different preferences, they may need to rely on the flexibility offered by asynchrony. This doesn't mean that you can't do Continuous Delivery, even with pull requests and code reviews, but the trade-offs are different.

Conclusion #

There are many good reasons to be critical of code reviews, pull requests, and other processes that seem to slow things down. The lack of trust in co-workers is, however, not one of them.

You can easily be swayed by that argument because it touches something deep in our psyche. We want to be trusted, and we want to trust our colleagues. We want to belong.

The argument is visceral, but it misrepresents the motivation for process. We don't review code because we believe that all co-workers are really North Korean agents looking to sneak in security breaches if we look away.

We look at each other's work because it's human to make mistakes. If we can't all be in the same office at the same time, fast but asynchronous reviews also work.

Comments

This reminds me of Hanlon's razor.

Never attribute to malice that which is adequately explained by stupidity.

Too much technical attribution is still being applied, on the whole, to a field which is far more of a social and psychological construct. What's even worse is that the evidence around organisation and team capabilities is pretty clear about what makes a good team and organisation.

The technical solutions for reaching trust or belongingness are somewhat a means to an end. They can't stand alone, because it only takes two humans to sidetrack them.

Therefore I still absolutely believe that the technical parts of software engineering are by far the less demanding. Technical work is still most often done as individual contributions based on loose requirements, equally loose leadership, often non-existent enforcement from tooling, and scattered human ownership. Even worse, subjective perceptions.

That, on the other hand, I believe has everything to do with trust, gatekeeping, belonging, and other human psychological needs.

I like to look at it from the other side - I ask my colleagues to review my code because I trust them. I trust they can help me make it better, and it's in all our interests to make it better - as soon as the code gets into the main branch, it's not my code anymore - it's the team's code.

Also, not tightly connected to the topic of code reviews and pull requests, but still about trust in software development, I had this general thought about trusting yourself in software programming:

Don't trust your past self. Do trust your future self.

Don't trust your past self, in the sense of: don't hesitate to refactor your code. If you wrote the code a while ago, even with the best intentions of making it readable, as you normally have, but now you read it and don't understand it, don't put too much effort into trying to understand it. If you don't understand it, others won't either. Rewrite it to make it better.

Do trust your future self, in the sense of: don't try to be smarter than you are now. If you don't know all the requirements or answers to all the questions, don't guess them when you don't have to. Don't create an abstraction from the one use case you have. In the future you will have more use cases, and you will be able to create a better abstraction. Trust me, or actually, trust yourself. I guess it's just YAGNI rephrased ;)

Jakub, thank you for writing. I don't think that I have much to add. More people should use refactoring as a tool for enhancing understanding when they run into incomprehensible code. Regardless of who originally wrote that code.

Confidence from Facade Tests

Recycling an old neologism of mine, I try to illustrate a point about the epistemology of testing function composition.

This article continues the introduction of a series on the epistemology of interaction testing. In the first article, I attempted to explain how to test the composition of functions. Despite my best efforts, I felt that that article somehow fell short of its potential. Particularly, I felt that I ought to have been able to provide some illustrations.

After publishing the first article, I finally found a way to illustrate what I'd been trying to communicate. That's this article. Better late than never.

Previously, on epistemology of interaction testing #

A brief summary of the previous article may be in order. The question this article series tries to address is how to unit test composition of functions - particularly pure functions.

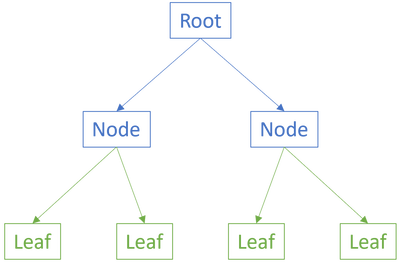

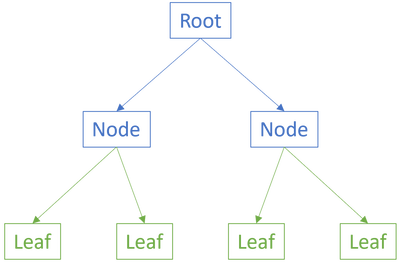

Consider the illustration from the previous article, repeated here for your convenience:

When the leaves are pure functions they are intrinsically testable. That's not the hard part, but how do we test the internal nodes or the root?

While most people would reach for Stubs and Spies, those kinds of Test Doubles tend to break encapsulation.

What are the alternatives?

An alternative I find useful is to test groups of functions composed together. Particularly when they are pure functions, you have no problem with non-deterministic behaviour. On the other hand, this approach seems to run afoul of the problem with combinatorial explosion of integration testing so eloquently explained by J.B. Rainsberger.

What I suggest, however, isn't quite integration testing.

Neologism #

If it isn't integration testing, then what is it? What do we call it?

I'm going to resurrect and recycle an old term of mine: Facade Tests. Ten years ago I had a more narrow view of a term like 'unit test' than I do today, but the overall idea seems apt in this new context. A Facade Test is a test that exercises a Facade.

These days, I don't find it productive to distinguish narrowly between different kinds of tests. At least not to the degree that I wish to fight over terminology. On the other hand, occasionally it's useful to have a name for a thing, in order to be able to differentiate it from some other thing.

The term Facade Tests is my attempt at a neologism. I hope it helps.

Code coverage as a proxy for confidence #

The question I'm trying to address is how to test functions that compose other functions - the internal nodes or the root in the above graph. As I tried to explain in the previous article, you need to build confidence that various parts of the composition work. How do you gain confidence in the leaves?

One way is to test each leaf individually.

The first test or two may exercise a tiny slice of the System Under Test (SUT):

The next few tests may exercise another part of the SUT:

Keep adding more tests:

Stop when you have good confidence that the SUT works as intended:

If you're now thinking of code coverage, I can't blame you. To be clear, I haven't changed my position about code coverage. Code coverage is a useless target measure. On the other hand, there's no harm in having a high degree of code coverage. It still might give you confidence that the SUT works as intended.

You may think of the amount of green in the above diagrams as a proxy for confidence. The more green, the more confident you are in the SUT.

None of the arguments here hinge on code coverage per se. What matters is confidence.

Facade testing confidence #

With all the leaves covered, you can move on to the internal nodes. This is the actual problem that I'm trying to address. We would like to test an internal node, but it has dependencies. Fortunately, the context of this article is that the dependencies are pure functions, so we don't have a problem with non-deterministic behaviour. No need for Test Doubles.

It's really simple, then. Just test the internal node until you're confident that it works:

The goal is to build confidence in the internal node, the new SUT. While it has dependencies, covering those with tests is no longer the goal. This is the key difference between Facade Testing and Integration Testing. You're not trying to cover all combinations of code paths in the integrated set of components. You're still just trying to test the new SUT.

Whether or not these tests exercise the leaves is irrelevant. The leaves are already covered by other tests. What 'coverage' you get of the leaves is incidental.
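To make the idea a little more concrete, here's a minimal sketch. The functions NormalizeWhitespace, CountWords, and WordCount are hypothetical, invented for this illustration: two pure leaves and an internal node that composes them. Because the leaves are pure, a Facade Test can call WordCount directly, without Stubs or Spies. Plain checks stand in for xUnit.net assertions so that the sketch has no package dependencies.

```csharp
using System;

// Hypothetical pure functions, invented to illustrate Facade Tests.
public static class WordStats
{
    // Leaf: collapse any run of whitespace into a single space and trim the ends.
    public static string NormalizeWhitespace(string text) =>
        string.Join(" ", text.Split(Array.Empty<char>(), StringSplitOptions.RemoveEmptyEntries));

    // Leaf: count the words in text that is already normalized.
    public static int CountWords(string normalized) =>
        normalized.Length == 0 ? 0 : normalized.Split(' ').Length;

    // Internal node: composes the two leaves. Both are pure, so a test can
    // exercise WordCount directly, with no Test Doubles.
    public static int WordCount(string text) => CountWords(NormalizeWhitespace(text));
}

// A Facade Test targets WordCount's behaviour; exercising the leaves is incidental.
public static class WordStatsFacadeTests
{
    public static void Run()
    {
        Check(WordStats.WordCount("") == 0);
        Check(WordStats.WordCount("  hello   world ") == 2);
        Check(WordStats.WordCount("one\ttwo\nthree") == 3);
    }

    private static void Check(bool condition)
    {
        if (!condition)
            throw new InvalidOperationException("Facade test failed.");
    }
}
```

Whether those three checks give enough confidence in WordCount is a judgement call. The point is only that none of them needs to verify how WordCount calls its leaves.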

Once you've built confidence in internal nodes, you can repeat the process with the root node:

The test covers enough of the root node to give you confidence in it. Some of the dependencies are also partially exercised by the tests, but this is still secondary. The way I've drawn the diagram, the left internal node is exercised in such a way that its dependencies (the leaves) are partially exercised. The test apparently also exercises the right internal node, but none of that activity makes it interact with the leaves.

These aren't integration tests, so they avoid the problem of combinatorial explosion.

Conclusion #

This article was an attempt to illustrate the prose in the previous article. You can unit test functions that compose other functions by first unit testing the leaf functions and then the compositions. While these tests exercise an 'integration' of components, the purpose is not to test the integration. Thus, they aren't integration tests. They're facade tests.

Next: An abstract example of refactoring from interaction-based to property-based testing.

Comments

I really appreciate that you are writing about testing compositions of pure functions. As an F# dev who tries to adhere to the impureim sandwich (which, indeed, you helped me with before), this is something I have also been struggling with, and failing to find good answers to.

But following your suggestion, aren’t we testing implementation details?

Using the terminology in this article, I often have a root function that is public, which composes and delegates work to private helper functions. Compared to having all the logic directly in the root function, the code is, unsurprisingly, easier to read and maintain this way. However, all the private helper functions (internal nodes and leaves) as well as the particularities of how the root and the internal nodes compose their “children”, are very much just implementation details of the root function.

I occasionally need to change such code in a way that does not change the public API (at least not significantly enough to cause excessive test maintenance), but which significantly restructures the internal helpers. If I were to test as suggested in this article, I would have many broken tests on my hands. These would be tests of the internal nodes and leaves (which may not exist at all after the refactor, having been replaced with completely different functions) as well as tests of how the root node composes the other functions (which, presumably, would still pass but may not actually test anything useful anymore).

In short, testing in the way suggested here would act as a force to avoid refactoring, which seems counter-productive.

One would also need to use InternalsVisibleTo or similar in order to test those helpers. I’m not very concerned about that on its own (though I’d like to keep the helpers private), but it always smells of testing implementation details, which, as I argue, is what I think we’re doing. (One could alternatively make the helpers public – they’re pure, after all, so presumably no harm done – but that would expose a public API that no-one should actually use, and doesn’t avoid the main problem anyway.)

As a motivating example from my world, consider a system for sending email notifications. The root function accepts a list of notifications that should be sent, together with any auxiliary data (names and other data from all users referenced by the notifications; translated strings; any environment-specific data such as base URLs for links; etc.), and returns the email HTML (or at least a structure that maps trivially to HTML). In doing this, the code has to group notifications in several levels, sort them in various ways, merge similar consecutive notifications in non-trivial ways, hide notifications that the user has not asked to receive (but which must still be passed to the root function since they are needed for other logic), and so on. All in all, I have almost 600 lines of pure code that does this. (In addition, I have 150 lines that fetch everything from the DB and create necessary lookup maps of auxiliary data to pass to the root function. I consider this code “too boring to fail”.)

The pure part of the code was recently significantly revamped. Had I had tests for private/internal helpers, the refactor would likely have been much more painful.

I expect there is no perfect way to make the code both testable and easy to refactor. But I am still eager to hear your thoughts on my concern: Following your suggestion, aren’t we testing implementation details?

Christer, thank you for writing. The short answer is: yes.

Isn't this a separate problem, though? If you're using Stubs and Spies to test interaction, and other tests to verify your implementations, then isn't that a similar problem?

I'm going to graze this topic in the future article in this series tentatively titled Refactoring pure function composition without breaking existing tests, but I should probably write another article more specifically about this topic...

Christer (and everyone who may be interested), I've long been wanting to expand on my previous answer, and I finally found the time to write an article that discusses the implementation-detail question.

Apart from that I also want to remind readers that the article Refactoring pure function composition without breaking existing tests has been available since May 1st, 2023. It shows one example of using the Strangler pattern to refactor pure Facade Tests without breaking them.

This doesn't imply that one should irresponsibly make every pure function public. These days, I make things internal by default, but make them public if I think they'd be good seams. Particularly when following test-driven development, it's possible to unit test private helpers via a public API. This does, indeed, have the benefit that you're free to refactor those helpers without impacting test code.

The point of this article series isn't that you should make pure functions public and test interactions with property-based testing. The point is that if you already have pure functions and you wish to test how they interact, then property-based testing is a good way to achieve that goal.

If, on the other hand, you have a pure function for composing emails, and you can keep all helper functions private, still cover it enough to be confident that it works, and do that by only exercising a single public root function (mail-slot testing) then that's preferable. That's what I would aim for as well.

Warnings-as-errors friction

TDD friction. Surely that's a bad thing(?)

Paul Wilson recently wrote on Mastodon:

Software development opinion (warnings as errors)

Just seen this via Elixir Radar, https://curiosum.com/til/warnings-as-errors-elixir-mix-compile on on treating warnings as errors, and yeah don't integrate code with warnings. But ....

Having worked on projects with this switched on in dev, it's an annoying bit of friction when Test Driving code. Yes, have it switched on in CI, but don't make me fix all the warnings before I can run my failing test.

(Using an env variable for the switch is a good compromise here, imo).

This made me reflect on similar experiences I've had. I thought perhaps I should write them down.

To be clear, this article is not an attack on Paul Wilson. He's right, but since he got me thinking, I only find it honest and respectful to acknowledge that.

The remark does, I think, invite more reflection.

Test friction example #

An example would be handy right about now.

As I was writing the example code base for Code That Fits in Your Head, I was following the advice of the book:

- Turn on Nullable reference types (only relevant for C#)

- Turn on static code analysis or linters

- Treat warnings as errors. Yes, also the warnings produced by the two above steps

As Paul Wilson points out, this tends to create friction with test-driven development (TDD). When I started the code base, this was the first TDD test I wrote:

```csharp
[Fact]
public async Task PostValidReservation()
{
    var response = await PostReservation(new {
        date = "2023-03-10 19:00",
        email = "katinka@example.com",
        name = "Katinka Ingabogovinanana",
        quantity = 2 });
    Assert.True(
        response.IsSuccessStatusCode,
        $"Actual status code: {response.StatusCode}.");
}
```

Looks good so far, doesn't it? Are any of the warnings-as-errors settings causing friction? Not directly, but now regard the PostReservation helper method:

```csharp
[SuppressMessage(
    "Usage",
    "CA2234:Pass system uri objects instead of strings",
    Justification = "URL isn't passed as variable, but as literal.")]
private async Task<HttpResponseMessage> PostReservation(
    object reservation)
{
    using var factory = new WebApplicationFactory<Startup>();
    var client = factory.CreateClient();

    string json = JsonSerializer.Serialize(reservation);
    using var content = new StringContent(json);
    content.Headers.ContentType.MediaType = "application/json";
    return await client.PostAsync("reservations", content);
}
```

Notice the [SuppressMessage] attribute. Without it, the compiler emits this error:

error CA2234: Modify 'ReservationsTests.PostReservation(object)' to call 'HttpClient.PostAsync(Uri, HttpContent)' instead of 'HttpClient.PostAsync(string, HttpContent)'.

That's an example of friction in TDD. I could have fixed the problem by changing the last line to:

```csharp
return await client.PostAsync(new Uri("reservations", UriKind.Relative), content);
```

This makes the actual code more obscure, which is the reason I didn't like that option. Instead, I chose to add the [SuppressMessage] attribute and write a Justification. It is, perhaps, not much of an explanation, but my position is that, in general, I consider CA2234 a good and proper rule. It's a specific example of favouring stronger types over stringly typed code. I'm all for it.

If you grok the motivation for the rule (which, evidently, the documentation code-example writer didn't) you also know when to safely ignore it. Types are useful because they enable you to encapsulate knowledge and guarantees about data in a way that strings and ints typically don't. Indeed, if you are passing URLs around, pass them around as Uri objects rather than strings. This prevents simple bugs, such as accidentally swapping the place of two variables because they're both strings.

In the above example, however, a URL isn't being passed around as a variable. The value is hard-coded right there in the code. Wrapping it in a Uri object doesn't change that.
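As a small, hypothetical illustration of the kind of bug that a Uri parameter prevents when URLs do travel around as variables, consider two otherwise identical methods, one stringly typed and one taking a Uri. The Endpoints class and its methods are invented for this sketch and aren't from the book's code base.

```csharp
using System;

// Hypothetical methods, invented to illustrate the motivation behind CA2234.
public static class Endpoints
{
    // Stringly typed: DescribeStringly("{}", "reservations") compiles,
    // even though the arguments are swapped.
    public static string DescribeStringly(string url, string json) => $"POST {url} {json}";

    // Strongly typed: passing the JSON string as the first argument is a
    // compile-time error, because string doesn't implicitly convert to Uri.
    public static string Describe(Uri url, string json) => $"POST {url} {json}";
}
```

Calling Endpoints.Describe(new Uri("reservations", UriKind.Relative), "{}") produces the same text as the stringly typed version with the arguments in the right order; the difference is only in what the compiler lets you get wrong.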

But I digress...

This is an example of friction in TDD. Instead of being able to just plough through, I had to stop and deal with a Code Analysis rule.

SUT friction example #

But wait! There's more.

To pass the test, I had to add this class:

```csharp
[Route("[controller]")]
public class ReservationsController
{
#pragma warning disable CA1822 // Mark members as static
    public void Post() { }
#pragma warning restore CA1822 // Mark members as static
}
```

I had to suppress CA1822 as well, because it generated this error:

error CA1822: Member Post does not access instance data and can be marked as static (Shared in VisualBasic)

Keep in mind that because of my settings, it's an error. The code doesn't compile.

You can try to fix it by making the method static, but this then triggers another error:

error CA1052: Type 'ReservationsController' is a static holder type but is neither static nor NotInheritable

In other words, the class should be static as well:

```csharp
[Route("[controller]")]
public static class ReservationsController
{
    public static void Post() { }
}
```

This compiles. What's not to like? Those Code Analysis rules are there for a reason, aren't they? Yes, but they are general rules that can't predict every corner case. While the code compiles, the test fails.

Out of the box, that's just not how that version of ASP.NET works. The MVC model of ASP.NET expects action methods to be instance members.

(I'm sure that there's a way to tweak ASP.NET so that it allows static HTTP handlers as well, but I wasn't interested in researching that option. After all, the above code only represents an interim stage during a longer TDD session. Subsequent tests would prompt me to give the Post method some proper behaviour that would make it an instance method anyway.)

So I kept the method as an instance method and suppressed the Code Analysis rule.

Friction? Demonstrably.

Opt in #

Is there a way to avoid the friction? Paul Wilson mentions a couple of options: Using an environment variable, or only turning warnings into errors in your deployment pipeline. A variation on using an environment variable is to only turn on errors for Release builds (for languages where that distinction exists).

In general, if you have a useful tool that unfortunately takes a long time to run, making it a scheduled or opt-in tool may be the way to go. A mutation testing tool like Stryker can easily run for hours, so it's not something you want to do for every change you make.

Another example is dependency analysis. One of my recent clients had a tool that scanned their code dependencies (NuGet, npm) for versions with known vulnerabilities. This tool would also take its time before delivering a verdict.

Making tools opt-in is definitely an option.

You may be concerned that this requires discipline that perhaps not all developers have. If a tool is opt-in, will anyone remember to run it?

As I also describe in Code That Fits in Your Head, you could address that issue with a checklist.

Yeah, but do we then need a checklist to remind us to look at the checklist? Right, quis custodiet ipsos custodes? Is it going to be turtles all the way down?

Well, if no-one in your organisation can be trusted to follow any commonly-agreed-on rules on a regular basis, you're in trouble anyway.

Good friction? #

So far, I've spent some time describing the problem. When encountering resistance your natural reaction is to find it disagreeable. You want to accomplish something, and then this rule/technique/tool gets in the way!

Despite this, is it possible that this particular kind of friction is beneficial?

By (subconsciously, I'm sure) picking a word like 'friction', you've already chosen sides. That word, in general, has a negative connotation. Is it the only word that describes the situation? What if we talked about it instead in terms of safety, assistance, or predictability?

Ironically, friction was a main complaint about TDD when it was first introduced.

"What do you mean? I have to write a test before I write the implementation? That's going to slow me down!"

The TDD and agile movement developed a whole set of standard responses to such objections. Brakes enable you to go faster. If it hurts, do it more often.

Try those on for size, only now applied to warnings as errors. Friction is what makes brakes work.

Additive mindset #

As I age, I'm becoming increasingly aware of a tendency in the software industry. Let's call it the additive mindset.

It's a reflex to consider addition a good thing. An API with a wide array of options is better than a narrow API. Software with more features is better than software with few features. More log data provides better insight.

More code is better than less code.

Obviously, that's not true, but we. keep. behaving as though it is. Just look at the recent hubbub about ChatGPT, or GitHub Copilot, which I recently wrote about. Everyone reflexively views them as productivity tools because they can help us produce more code faster.

I had a cup of coffee with my wife as I took a break from writing this article, and I told her about it. Her immediate reaction, when told about friction, was that it's a benefit. She's a doctor, and naturally views procedure, practice, regulation, etcetera, as occasionally annoying, but essential to the practice of medicine. Without procedures, patients would die from preventable mistakes and doctors would prescribe morphine to themselves. Checking boxes and signing off on decisions slow you down, and that's half the point. Making you slow down can give you the opportunity to realise that you're about to do something stupid.

Worried that TDD will slow down your programmers? Don't. They probably need slowing down.

But if TDD is already being touted as a process to make us slow down and think, is it a good idea, then, to slow down TDD with warnings as errors? Are we not interfering with a beneficial and essential process?

Alternatives to TDD #

I don't have a confident answer to that question. What follows is tentative. I've been doing TDD since 2003 and while I was also an early critic, it's still central to how I write code.

When I began doing TDD with all the errors dialled to 11 I was concerned about the friction, too. While I also believe in linters, the two seem to work at cross purposes. The rule about static members in the above example seems clearly counterproductive. After all, a few commits later I'd written enough code for the Post method that it had to be an instance method after all. The degenerate state was temporary, an artefact of the TDD process, but the rule triggered anyway.

What should I think of that?

I don't like having to deal with such false positives. The question is whether treating warnings as errors is a net positive or a net negative.

It may help to recall why TDD is a useful practice. A major reason is that it provides rapid feedback. There are, however, other ways to produce rapid feedback. Static types, compiler warnings, and static code analysis are other ways.

I don't think of these as alternatives to TDD, but rather as complementary. Tests can produce feedback about some implementation details. Constructive data is another option. Compiler warnings and linters enter that mix as well.

Here I again speak with some hesitation, but it looks to me as though the TDD practice originated in dynamically typed tradition (Smalltalk), and even though some Java programmers were early adopters as well, from my perspective it's always looked stronger among the dynamic languages than the compiled languages. The unadulterated TDD tradition still seems to largely ignore the existence of other forms of feedback. Everything must be tested.

At the risk of repeating myself, I find TDD invaluable, but I'm happy to receive rapid feedback from heterogeneous sources: Tests, type checkers, compilers, linters, fellow ensemble programmers.

This suggests that TDD isn't the only game in town. This may also imply that the friction to TDD caused by treating warnings as errors may not be as costly as first perceived. After all, slowing down something that you rely on 75% of the time isn't quite as bad as slowing down something you rely on 100% of the time.

While it's a cost, perhaps it went down...

Simplicity #

As always, circumstances matter. Is it always a good idea to treat warnings as errors?

Not really. To be honest, treating warnings as errors is another case of treating a symptom. The reason I recommend it is that I've seen enough code bases where compiler warnings (not errors) have accumulated. In a setting where that happens, treating (new) warnings as errors can help get the situation under control.

When I work alone, I don't allow warnings to build up. I rarely tell the compiler to treat warnings as errors in my personal code bases. There's no need. I have zero tolerance for compiler warnings, and I do spot them.

If you have a team that never allows compiler warnings to accumulate, is there any reason to treat them as errors? Probably not.

This underlines an important point about productivity: A good team without strict process can outperform a poor team with a clearly defined process. Mindset beats tooling. Sometimes.

Which mindset is that? Not the additive mindset. Rather, I believe in focusing on simplicity. The alternative to adding things isn't to blindly remove things. You can't add features to a program only by deleting code. Rather, add code, but keep it simple. Decouple to delete.

perfection is attained not when there is nothing more to add, but when there is nothing more to remove.

Simple code. Simple tests. Be warned, however, that code simplicity does not imply naive code understandable by everyone. I'll refer you to Rich Hickey's wonderful talk Simple Made Easy and remind you that this was the line of thinking that led to Clojure.

Along the same lines, I tend to consider Haskell to be a vehicle for expressing my thoughts in a simpler way than I can do in F#, which again enables simplicity not available in C#. Simpler, not easier.

Conclusion #

Does treating warnings as errors imply TDD friction? It certainly looks that way.

Is it worth it, nonetheless? Possibly. It depends on why you need to turn warnings into errors in the first place. In some settings, the benefits of treating warnings as errors may be greater than the cost. If that's the only way you can keep compiler warnings down, then do treat warnings as errors. Such a situation, however, is likely to be a symptom of a more fundamental mindset problem.

This almost sounds like a moral judgement, I realise, but that's not my intent. Mindset is shaped by personal preference, but also by organisational and peer pressure, as well as knowledge. If you only know of one way to achieve a goal, you have no choice. Only if you know of more than one way can you choose.

Choose the way that leaves the code simpler than the other.

Test Data Generator monad

With examples in C# and F#.

This article is an instalment in an article series about monads. In other related series, previous articles described Test Data Generator as a functor, as well as Test Data Generator as an applicative functor. As is the case with many (but not all) functors, this one also forms a monad.

This article expands on the code from the above-mentioned articles about Test Data Generators. Keep in mind that the code is a simplified version of what you'll find in a real property-based testing framework. It lacks shrinking and referentially transparent (pseudo-)random value generation. Probably more things than that, too.

SelectMany #

A monad must define either a bind or join function. In C#, monadic bind is called SelectMany. For the Generator<T> class, you can implement it as an instance method like this:

```csharp
public Generator<TResult> SelectMany<TResult>(Func<T, Generator<TResult>> selector)
{
    Func<Random, TResult> newGenerator = r =>
    {
        Generator<TResult> g = selector(generate(r));
        return g.Generate(r);
    };
    return new Generator<TResult>(newGenerator);
}
```

SelectMany enables you to chain generators together. You'll see an example later in the article.

Query syntax #

As the monad article explains, you can enable C# query syntax by adding a special SelectMany overload:

```csharp
public Generator<TResult> SelectMany<U, TResult>(
    Func<T, Generator<U>> k,
    Func<T, U, TResult> s)
{
    return SelectMany(x => k(x).Select(y => s(x, y)));
}
```

The implementation body always looks the same; only the method signature varies from monad to monad. Again, I'll show you an example of using query syntax later in the article.
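The following sketch shows SelectMany chaining generators through query syntax. So that it compiles on its own, it includes a minimal stand-in for Generator<T>, reduced to what the example needs; that class is an assumption, not the article series' exact code, and the example generators are hypothetical. The interesting part is that the second generator uses max, the value produced by the first, which is something Select alone can't express.

```csharp
using System;

// Minimal stand-in for the article series' Generator<T>, assumed here only so
// the example compiles on its own. No shrinking; it draws from System.Random.
public sealed class Generator<T>
{
    private readonly Func<Random, T> generate;

    public Generator(Func<Random, T> generate) => this.generate = generate;

    public T Generate(Random random) => generate(random);

    public Generator<TResult> Select<TResult>(Func<T, TResult> selector) =>
        new Generator<TResult>(r => selector(generate(r)));

    // Monadic bind: the value produced by this generator selects the next
    // generator, and both draw from the same Random.
    public Generator<TResult> SelectMany<TResult>(Func<T, Generator<TResult>> selector) =>
        new Generator<TResult>(r => selector(generate(r)).Generate(r));

    // The overload that C# query syntax needs.
    public Generator<TResult> SelectMany<U, TResult>(
        Func<T, Generator<U>> k,
        Func<T, U, TResult> s) =>
        SelectMany(x => k(x).Select(y => s(x, y)));
}

public static class Generator
{
    public static Generator<T> Return<T>(T value) => new Generator<T>(_ => value);
}

// Hypothetical example generators, not from the article series.
public static class GeneratorExamples
{
    // An upper bound between 0 and 9, inclusive.
    public static readonly Generator<int> Bound = new Generator<int>(r => r.Next(10));

    // The second generator depends on max, the value produced by the first.
    // That dependency is what requires monadic, rather than applicative, composition.
    public static Generator<(int, string)> BoundedText() =>
        from max in Bound
        from text in new Generator<string>(r => new string('x', r.Next(max + 1)))
        select (max, text);
}
```

For any seed, the generated text is never longer than the bound generated before it, and Generator.Return(42) always produces 42, regardless of the Random it receives.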

Flatten #

In the introduction you learned that if you have a Flatten or Join function, you can implement SelectMany, and the other way around. Since we've already defined SelectMany for Generator<T>, we can use that to implement Flatten. In this article I use the name Flatten rather than Join. This is an arbitrary choice that doesn't impact behaviour. Perhaps you find it confusing that I'm inconsistent, but I do it in order to demonstrate that the behaviour is the same even if the name is different.

```csharp
public static Generator<T> Flatten<T>(this Generator<Generator<T>> generator)
{
    return generator.SelectMany(x => x);
}
```

As you can tell, this function has to be an extension method, since we can't have a class typed Generator<Generator<T>>. As usual, when you already have SelectMany, the body of Flatten (or Join) is always the same.
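Here's a self-contained sketch of Flatten at work. It repeats a minimal stand-in for Generator<T> (an assumption, trimmed to the members Flatten needs) and uses hypothetical example generators: an outer generator picks one of two inner generators, and Flatten turns the resulting Generator<Generator<int>> into a Generator<int>.

```csharp
using System;

// Minimal stand-in for the article series' Generator<T>, assumed so that the
// example is self-contained; only the members Flatten needs are included.
public sealed class Generator<T>
{
    private readonly Func<Random, T> generate;

    public Generator(Func<Random, T> generate) => this.generate = generate;

    public T Generate(Random random) => generate(random);

    public Generator<TResult> SelectMany<TResult>(Func<T, Generator<TResult>> selector) =>
        new Generator<TResult>(r => selector(generate(r)).Generate(r));
}

public static class GeneratorExtensions
{
    // Same body as in the article: bind with the identity function.
    public static Generator<T> Flatten<T>(this Generator<Generator<T>> generator) =>
        generator.SelectMany(x => x);
}

// Hypothetical example: first pick one of two generators at random, then run it.
public static class FlattenExample
{
    private static readonly Generator<int> Small = new Generator<int>(r => r.Next(10));       // 0 to 9
    private static readonly Generator<int> Large = new Generator<int>(r => 100 + r.Next(10)); // 100 to 109

    public static readonly Generator<Generator<int>> Choice =
        new Generator<Generator<int>>(r => r.Next(2) == 0 ? Small : Large);

    public static readonly Generator<int> SmallOrLarge = Choice.Flatten();
}
```

Every value from SmallOrLarge lies either between 0 and 9 or between 100 and 109, depending on which inner generator the outer one picked for a given Random.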

Return #

Apart from monadic bind, a monad must also define a way to put a normal value into the monad. Conceptually, I call this function return (because that's the name that Haskell uses):

```csharp
public static Generator<T> Return<T>(T value)
{
    return new Generator<T>(_ => value);
}
```

This function ignores the random number generator and always returns value.

Left identity #

We needed to identify the return function in order to examine the monad laws. Let's see what they look like for the Test Data Generator monad, starting with the left identity law.

```csharp
[Theory]
[InlineData(17, 0)]
[InlineData(17, 8)]
[InlineData(42, 0)]
[InlineData(42, 1)]
public void LeftIdentityLaw(int x, int seed)
{
    Func<int, Generator<string>> h = i => new Generator<string>(r => r.Next(i).ToString());

    Assert.Equal(
        Generator.Return(x).SelectMany(h).Generate(new Random(seed)),
        h(x).Generate(new Random(seed)));
}
```

Notice that the test can't directly compare the two generators, because equality isn't clearly defined for that class. Instead, the test has to call Generate in order to produce comparable values; in this case, strings.

Since Generate is non-deterministic, the test has to seed the random number generator argument in order to get reproducible results. It can't even declare one Random object and share it across both method calls, since generating values changes the state of the object. Instead, the test has to generate two separate Random objects, one for each call to Generate, but with the same seed.
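The reason for two identically seeded Random objects can be demonstrated with System.Random alone. The SeedDemo class below is a hypothetical helper for this illustration: two fresh objects with the same seed start from the same value, whereas a shared object advances its state, so the second call returns the next value in the sequence rather than repeating the first.

```csharp
using System;

// Hypothetical helper illustrating why each Generate call in the law tests
// gets its own, identically seeded Random.
public static class SeedDemo
{
    // Two Random objects with the same seed produce the same sequence,
    // so their first values are equal.
    public static (int, int) FreshPair(int seed) =>
        (new Random(seed).Next(100), new Random(seed).Next(100));

    // One shared Random advances its state: the second call returns the
    // second value of the sequence, not the first value again.
    public static (int, int) SharedPair(int seed)
    {
        var shared = new Random(seed);
        return (shared.Next(100), shared.Next(100));
    }
}
```

Comparing generators that share a single Random would thus compare values from different positions in the sequence, which is why the law tests instead pass new Random(seed) to each call.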

Right identity #

In a manner similar to above, we can showcase the right identity law as a test.

```csharp
[Theory]
[InlineData('a', 0)]
[InlineData('a', 8)]
[InlineData('j', 0)]
[InlineData('j', 5)]
public void RightIdentityLaw(char letter, int seed)
{
    Func<char, Generator<string>> f = c => new Generator<string>(r => new string(c, r.Next(100)));
    Generator<string> m = f(letter);

    Assert.Equal(
        m.SelectMany(Generator.Return).Generate(new Random(seed)),
        m.Generate(new Random(seed)));
}
```

As always, even a parametrised test constitutes no proof that the law holds. I show the tests to illustrate what the laws look like in 'real' code.

Associativity #

The last monad law is the associativity law that describes how (at least) three functions compose. We're going to need three functions. For the demonstration test I'm going to conjure three nonsense functions. While they may be less intuitive, they also avoid the noise that more realistic code tends to produce. Later in the article you'll see a more realistic example.

[Theory]
[InlineData('t', 0)]
[InlineData('t', 28)]
[InlineData('u', 0)]
[InlineData('u', 98)]
public void AssociativityLaw(char a, int seed)
{
    Func<char, Generator<string>> f = c => new Generator<string>(r => new string(c, r.Next(100)));
    Func<string, Generator<int>> g = s => new Generator<int>(r => r.Next(s.Length));
    Func<int, Generator<TimeSpan>> h = i => new Generator<TimeSpan>(r => TimeSpan.FromDays(r.Next(i)));
    Generator<string> m = f(a);
    Assert.Equal(
        m.SelectMany(g).SelectMany(h).Generate(new Random(seed)),
        m.SelectMany(x => g(x).SelectMany(h)).Generate(new Random(seed)));
}

All tests pass.
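Four InlineData cases per law are only a handful of examples, so one might sample many more seeds. This sketch reuses the three nonsense functions from the tests above, but models generators as Func<Random, T> with hypothetical Return and Bind local functions instead of Generator<T>. It's still no proof, only more evidence.

```csharp
using System;
using System.Linq;

// Hypothetical stand-ins for Generator.Return and SelectMany.
static Func<Random, T> Return<T>(T value) => _ => value;

static Func<Random, TResult> Bind<T, TResult>(
    Func<Random, T> source, Func<T, Func<Random, TResult>> binder) =>
    rng => binder(source(rng))(rng);

// The nonsense functions from the law tests, translated to Func<Random, T>.
Func<char, Func<Random, string>> f = c => rng => new string(c, rng.Next(100));
Func<string, Func<Random, int>> g = s => rng => rng.Next(s.Length);
Func<int, Func<Random, TimeSpan>> h = i => rng => TimeSpan.FromDays(rng.Next(i));

// Each side of each law gets its own, equally seeded Random object.
bool LawsHold(char letter, int seed)
{
    var m = f(letter);
    bool leftIdentity =
        Bind(Return(letter), f)(new Random(seed)) == f(letter)(new Random(seed));
    bool rightIdentity =
        Bind(m, s => Return(s))(new Random(seed)) == m(new Random(seed));
    bool associativity =
        Bind(Bind(m, g), h)(new Random(seed)) ==
        Bind(m, x => Bind(g(x), h))(new Random(seed));
    return leftIdentity && rightIdentity && associativity;
}

// Check every combination of four letters and a thousand seeds.
int failures = Enumerable.Range(0, 1_000)
    .SelectMany(seed => "ajtu".Select(letter => (letter, seed)))
    .Count(t => !LawsHold(t.letter, t.seed));
Console.WriteLine(failures); // 0
```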

CPR example #

Formalities out of the way, let's look at a more realistic example. In the article about the Test Data Generator applicative functor you saw an example of parsing a Danish personal identification number, in Danish called CPR-nummer (CPR number) for Central Person Register. (It's not a register of central persons, but rather the central register of persons. Danish works slightly differently than English.)

CPR numbers have a simple format: DDMMYY-SSSS, where the first six digits indicate a person's birth date, and the last four digits are a sequence number. An example could be 010203-1234, which indicates a woman born February 1, 1903.
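To make the DDMMYY-SSSS format concrete, here's a minimal sketch that splits the example number into its fields. ParseCpr is a hypothetical helper, not part of the CprNumber class. Notice that the two-digit year alone doesn't determine the century.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: split a DDMMYY-SSSS string into its four numeric fields.
static (int Day, int Month, int Year, int SequenceNumber) ParseCpr(string cpr)
{
    if (cpr.Length != 11 || cpr[6] != '-')
        throw new FormatException("Expected the format DDMMYY-SSSS.");

    // NumberStyles.None accepts only digits: no signs, no whitespace.
    int Field(int start, int length) => int.Parse(
        cpr.Substring(start, length), NumberStyles.None, CultureInfo.InvariantCulture);

    return (Field(0, 2), Field(2, 2), Field(4, 2), Field(7, 4));
}

var (day, month, twoDigitYear, sequenceNumber) = ParseCpr("010203-1234");
Console.WriteLine($"Day {day}, month {month}, year {twoDigitYear:00}, sequence {sequenceNumber}");
// Day 1, month 2, year 03, sequence 1234

// The last digit of the sequence number is even for women and odd for men.
Console.WriteLine(sequenceNumber % 2 == 0 ? "Woman" : "Man"); // Woman
```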

In C# you might model a CPR number as a class with a constructor like this:

public CprNumber(int day, int month, int year, int sequenceNumber)
{
    if (year < 0 || 99 < year)
        throw new ArgumentOutOfRangeException(
            nameof(year),
            "Year must be between 0 and 99, inclusive.");
    if (month < 1 || 12 < month)
        throw new ArgumentOutOfRangeException(
            nameof(month),
            "Month must be between 1 and 12, inclusive.");
    if (sequenceNumber < 0 || 9999 < sequenceNumber)
        throw new ArgumentOutOfRangeException(
            nameof(sequenceNumber),
            "Sequence number must be between 0 and 9999, inclusive.");
    var fourDigitYear = CalculateFourDigitYear(year, sequenceNumber);
    var daysInMonth = DateTime.DaysInMonth(fourDigitYear, month);
    if (day < 1 || daysInMonth < day)
        throw new ArgumentOutOfRangeException(
            nameof(day),
            $"Day must be between 1 and {daysInMonth}, inclusive.");
    this.day = day;
    this.month = month;
    this.year = year;
    this.sequenceNumber = sequenceNumber;
}

The system has been around since 1968, so it clearly suffers from a Y2K problem, as years are encoded with only two digits. The workaround for this is that the most significant digit of the sequence number encodes the century. At the time I'm writing this, the Danish-language Wikipedia entry for CPR-nummer still includes a table that shows how one can derive the century from the sequence number. This enables the CPR system to handle birth dates between 1858 and 2057.

The CprNumber constructor has to consult that table in order to determine the century. It uses the CalculateFourDigitYear function for that. Once it has the four-digit year, it can use the DateTime.DaysInMonth method to determine the number of days in the given month. This is used to validate the day parameter.
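As far as I can tell, the table boils down to a few cases keyed on the first digit of the sequence number. The following is a hypothetical C# reconstruction of a CalculateFourDigitYear function based on my reading of that table, not the code in the repository, so treat the exact cut-offs as an assumption to verify against the source. It also shows why the century matters when validating the day.

```csharp
using System;

// Hypothetical reconstruction of the century table: the first digit of the
// sequence number, together with the two-digit year, determines the century.
static int CalculateFourDigitYear(int year, int sequenceNumber)
{
    int centuryDigit = sequenceNumber / 1000;
    if (centuryDigit <= 3)
        return 1900 + year;
    if (centuryDigit == 4 || centuryDigit == 9)
        return year <= 36 ? 2000 + year : 1900 + year;
    // Digits 5 through 8.
    return year <= 57 ? 2000 + year : 1800 + year;
}

// The century matters for validation: 29 February exists in 2000, but not in 1900.
Console.WriteLine(DateTime.DaysInMonth(CalculateFourDigitYear(0, 4000), 2)); // 29
Console.WriteLine(DateTime.DaysInMonth(CalculateFourDigitYear(0, 1000), 2)); // 28
```

With these cut-offs, the earliest possible year is 1858 and the latest is 2057, which matches the range quoted above.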

The previous article showed a test that made use of a Test Data Generator for the CprNumber class. The generator was referenced as Gen.CprNumber, but how do you define such a generator?

CPR number generator #

The constructor arguments for month, year, and sequenceNumber are easy to generate. You need a basic generator that produces values between two boundaries. Both QuickCheck and FsCheck call it choose, so I'll reuse that name:

public static Generator<int> Choose(int min, int max)
{
    return new Generator<int>(r => r.Next(min, max + 1));
}

The choose functions of QuickCheck and FsCheck consider both boundaries to be inclusive, so I've done the same. That explains the + 1, since Random.Next excludes the upper boundary.
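One way to convince yourself that the + 1 is necessary is to sample a choose function many times and look at the extremes. This sketch models the generator as a hypothetical Func<Random, int> rather than Generator<int>.

```csharp
using System;
using System.Linq;

// Both boundaries are inclusive, so the exclusive upper bound passed to
// Random.Next is max + 1. This sketch assumes max < int.MaxValue to avoid overflow.
static Func<Random, int> Choose(int min, int max) => rng => rng.Next(min, max + 1);

var rnd = new Random(1);
var samples = Enumerable.Range(0, 10_000).Select(_ => Choose(1, 3)(rnd)).ToList();
Console.WriteLine($"{samples.Min()}..{samples.Max()}"); // 1..3
```

Without the + 1, Random.Next(1, 3) would only ever produce 1 or 2.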

You can now combine choose with DateTime.DaysInMonth to generate a valid day:

public static Generator<int> Day(int year, int month)
{
    var daysInMonth = DateTime.DaysInMonth(year, month);
    return Gen.Choose(1, daysInMonth);
}
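Here's the same Day logic as a sketch over the hypothetical Func<Random, int> model, showing that the range of generated values depends on the year and month arguments.

```csharp
using System;
using System.Linq;

static Func<Random, int> Choose(int min, int max) => rng => rng.Next(min, max + 1);

// The upper boundary depends on the year and month arguments.
static Func<Random, int> Day(int year, int month) =>
    Choose(1, DateTime.DaysInMonth(year, month));

var rnd = new Random(7);
var leapFebruary = Enumerable.Range(0, 1_000).Select(_ => Day(2024, 2)(rnd)).ToList();
var plainFebruary = Enumerable.Range(0, 1_000).Select(_ => Day(2023, 2)(rnd)).ToList();
Console.WriteLine(leapFebruary.Max()); // 29
Console.WriteLine(plainFebruary.Max()); // 28
```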

Let's pause and consider the implications. The point of this example is to demonstrate why it's practically useful that Test Data Generators are monads. Keep in mind that monads are functors you can flatten. When do you need to flatten a functor? Specifically, when do you need to flatten a Test Data Generator? Right now, as it turns out.

The Day method returns a Generator<int>, but where do the year and month arguments come from? They'll typically be produced by another Test Data Generator such as choose. Thus, if you only map (Select) over previous Test Data Generators, you'll produce a Generator<Generator<int>>:

Generator<int> genYear = Gen.Choose(1970, 2050);
Generator<int> genMonth = Gen.Choose(1, 12);
Generator<(int, int)> genYearAndMonth = genYear.Apply(genMonth);
Generator<Generator<int>> genDay =
    genYearAndMonth.Select(t => Gen.Choose(1, DateTime.DaysInMonth(t.Item1, t.Item2)));

This example uses an Apply overload to combine genYear and genMonth. As long as the two generators are independent of each other, you can use the applicative functor capability to combine them. When, however, you need to produce a new generator from a value produced by a previous generator, the functor or applicative functor capabilities are insufficient. If you try to use Select, as in the above example, you'll produce a nested generator.

Since it's a monad, however, you can Flatten it:

Generator<int> flattened = genDay.Flatten();
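To see what flattening means operationally, here's a sketch with hypothetical Select and Flatten local functions over the Func<Random, T> model. The pairing of year and month is a simplified stand-in for the Apply overload, which isn't shown here.

```csharp
using System;

static Func<Random, int> Choose(int min, int max) => rng => rng.Next(min, max + 1);

// Hypothetical functor map over the Func<Random, T> model.
static Func<Random, TResult> Select<T, TResult>(
    Func<Random, T> source, Func<T, TResult> selector) =>
    rng => selector(source(rng));

// Flatten runs the outer generator to obtain an inner generator, then runs
// that inner generator with the same Random object.
static Func<Random, T> Flatten<T>(Func<Random, Func<Random, T>> nested) =>
    rng => nested(rng)(rng);

// Two independent generators combined into a pair (standing in for Apply).
Func<Random, (int, int)> genYearAndMonth =
    rng => (Choose(1970, 2050)(rng), Choose(1, 12)(rng));

// Mapping produces a nested generator...
Func<Random, Func<Random, int>> genDay =
    Select(genYearAndMonth, t => Choose(1, DateTime.DaysInMonth(t.Item1, t.Item2)));

// ...which Flatten turns back into a plain generator of int.
Func<Random, int> flattened = Flatten(genDay);

var rnd = new Random(5);
Console.WriteLine(flattened(rnd));
```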

Or you can use SelectMany (monadic bind) to flatten as you go. The CprNumber generator does that, although it uses query syntax as syntactic sugar to make the code more readable:

public static Generator<CprNumber> CprNumber =>
    from sequenceNumber in Gen.Choose(0, 9999)
    from year in Gen.Choose(0, 99)
    from month in Gen.Choose(1, 12)
    let fourDigitYear =
        TestDataBuilderFunctor.CprNumber.CalculateFourDigitYear(year, sequenceNumber)
    from day in Gen.Day(fourDigitYear, month)
    select new CprNumber(day, month, year, sequenceNumber);

The expression first uses Gen.Choose to produce three independent int values: sequenceNumber, year, and month. It then uses the CalculateFourDigitYear function to look up the proper century based on the two-digit year and the sequenceNumber. With that information it can call Gen.Day, and since the expression uses monadic composition, it's flattening as it goes. Thus day is an int value rather than a generator.

Finally, the entire expression can compose the four int values into a valid CprNumber object.
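For readers who'd like to see the flattening without query syntax, here's a sketch of the same generator written as explicit nested Bind calls over the hypothetical Func<Random, T> model. It produces plain tuples instead of CprNumber objects, and its CalculateFourDigitYear is my own reconstruction of the century table, not the repository's implementation.

```csharp
using System;

static Func<Random, int> Choose(int min, int max) => rng => rng.Next(min, max + 1);

static Func<Random, T> Return<T>(T value) => _ => value;

static Func<Random, TResult> Bind<T, TResult>(
    Func<Random, T> source, Func<T, Func<Random, TResult>> binder) =>
    rng => binder(source(rng))(rng);

// Hypothetical reconstruction of the century table.
static int CalculateFourDigitYear(int year, int sequenceNumber)
{
    int centuryDigit = sequenceNumber / 1000;
    if (centuryDigit <= 3) return 1900 + year;
    if (centuryDigit == 4 || centuryDigit == 9)
        return year <= 36 ? 2000 + year : 1900 + year;
    return year <= 57 ? 2000 + year : 1800 + year;
}

// The same dependencies as the query expression, written as nested Bind calls.
// Because each Bind flattens as it goes, day is an int rather than a generator.
var genCpr =
    Bind(Choose(0, 9999), sequenceNumber =>
    Bind(Choose(0, 99), year =>
    Bind(Choose(1, 12), month =>
    Bind(Choose(1, DateTime.DaysInMonth(CalculateFourDigitYear(year, sequenceNumber), month)), day =>
    Return((day, month, year, sequenceNumber))))));

var rnd = new Random(2023);
var (sampleDay, sampleMonth, sampleYear, sampleSequence) = genCpr(rnd);
Console.WriteLine($"{sampleDay:00}{sampleMonth:00}{sampleYear:00}-{sampleSequence:0000}");
```

The query expression expresses the same dependencies more readably; the C# compiler translates its from clauses into SelectMany calls.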

You can consult the previous article to see Gen.CprNumber in use.

Hedgehog CPR generator #

You can reproduce the CPR example in F# using one of several property-based testing frameworks. Here, I'll continue the example from the previous article as well as the article Danish CPR numbers in F#. You can see a couple of tests in those articles. The tests use the cprNumber generator, but the articles never show its code.

In all the property-based testing frameworks I've seen, generators are called Gen. This is also the case for Hedgehog. The Gen container is a monad, and there's a gen computation expression that supplies syntactic sugar.

You can translate the above example to a Hedgehog Gen value like this:

let cprNumber =
    gen {
        let! sequenceNumber = Range.linear 0 9999 |> Gen.int32
        let! year = Range.linear 0 99 |> Gen.int32
        let! month = Range.linear 1 12 |> Gen.int32
        let fourDigitYear = Cpr.calculateFourDigitYear year sequenceNumber
        let daysInMonth = DateTime.DaysInMonth (fourDigitYear, month)
        let! day = Range.linear 1 daysInMonth |> Gen.int32
        return Cpr.tryCreate day month year sequenceNumber }
    |> Gen.some

To keep the example simple, I haven't defined an explicit day generator, but instead just inlined DateTime.DaysInMonth.

Consult the articles that I linked above to see the Gen.cprNumber generator in use.

Conclusion #

Test Data Generators form monads. This is useful when you need to generate test data that depend on other generated test data. Monadic bind (SelectMany in C#) can flatten the generator functor as you go. This article showed examples in both C# and F#.

The same abstraction also exists in the Haskell QuickCheck library, but I haven't shown any Haskell examples. If you've taken the trouble to learn Haskell (which you should), you already know what a monad is.

Next: Functor relationships.

Comments

CsCheck is a full implementation of something along these lines. It uses the same random sample generation in the shrinking step, always reducing a Size measure. It turns out to be a better way of shrinking than the QuickCheck way.

Anthony, thank you for writing. You'll be pleased to learn, I take it, that the next article in the series about the epistemology of interaction testing uses CsCheck as the example framework.

A thought on workplace flexibility and asynchrony

Is an inclusive workplace one that enables people to work at different hours?

In the early noughties I worked for Microsoft Consulting Service in Denmark. In some sense it was quite the competitive working environment with an unhealthy focus on billable hours, customer satisfaction surveys, and stack ranking. On the other hand, since I was mostly on my own on customer engagements, my managers didn't care when and how I worked. As long as I billed and customers were happy, they were happy.

That sometimes allowed me great flexibility.

At one time I was on a project for a customer in another part of Denmark, and while Denmark isn't that big, it was still understood that I would do most of my work remotely. The main deliverable was the code base for a software system, and while I might email and otherwise communicate with the customer and a few colleagues during the day, we didn't have any fixed schedules. In other words, I could work whenever I wanted, as long as I got the work done.

My daughter was a toddler at the time, and as is the norm in Denmark, already in day nursery. My wife is a doctor and was, at that time, working in hospitals - some of the most inflexible workplaces I can think of. She had to leave early in the morning because the hospitals run on fixed schedules.

I'd get up to have breakfast with her. After she left for work, I'd work until my daughter woke up. She typically woke up between 8 and 9, so I'd already be 1-2 hours into my work day. I'd stop working, make her breakfast, and take her to day care. We'd typically arrive between 10 and 11 in good spirits. I'd then bicycle home and work until my wife came home with our daughter. Perhaps I'd get a few more hours of work done in the evening.

I worked odd hours, and I loved the flexibility. My customers expected me to deliver iterations of the software and generally stay in touch, but they were perfectly happy with mostly asynchronous communication. Back then, it mostly meant email.

During the normal work day, I might be unavailable for hours, taking care of my daughter, exercising, grocery shopping, etc. Yet, I still billed more hours than most of my colleagues, and ultimately received an award for my work.

In the decades that followed, I haven't always had such flexibility, but that early experience gave me a strong appreciation for asynchronous work.

Lockdown work wasn't flexible #

When COVID-19 hit and most countries went into lockdown, many office workers got their first taste of remote work. Many struggled, for a variety of reasons. Some of those reasons are quite real. If you don't have a well-equipped home office, spending eight hours a day on a kitchen chair hardly makes for ideal working conditions. And no, the sofa isn't a good long-term solution either.

Another problem during lockdown is that your entire family may be home, too. If you have kids, you'll have to attend to them. To be clear, if you've only experienced working from home during COVID-19 lockdown, you may have suffered from many of these problems without realising the benefits of flexibility.

To add spite to injury, many workplaces tried to carry on as if nothing had changed, apart from the physical location of people. Office hours were still in effect, and work now took place over video calls. If you spend eight hours a day on Teams or Zoom, those aren't flexible working conditions. Rather, it's the worst of both worlds. The only benefit is that you avoid the commute.

Remote compared to asynchronous work #

As outlined above, remote work isn't necessarily flexible. Flexibility comes from asynchronous work processes more than physical location. The flexibility is a result of the freedom to choose when to work, more than where to work.

Based on my decades of experience working asynchronously from home, I published an article about the trade-off between latency and throughput, comparing working together in an office with working asynchronously from home. The point is that you can make both work, but the way you organise work matters. In-office work is efficient if everyone is at the office at the same time. Remote work is efficient if people can work asynchronously.

As is usually the case, there are trade-offs. The disadvantage of working together is that you must all be present simultaneously. Thus, you don't get the flexibility of choosing when to work. The benefit of working asynchronously is exactly that flexibility, but on the other hand, you lose the advantage of the efficient, high-bandwidth communication that comes from being physically in the same room as others.

Inclusion through flexibility? #