Compose object graphs with confidence by Mark Seemann

The main principle behind the Register Resolve Release pattern is that loosely coupled object graphs should be composed as a single action in the entry point of the application (the Composition Root). For request-based applications (web sites and services), we use a variation where we compose once per request.

It seems to me that a lot of people are apprehensive when they first hear about this concept. It may sound reasonable from an architectural point of view, but isn't it horribly inefficient? A well-known example of such a concern is Jeffrey Palermo's blog post Constructor over-injection anti-pattern. Is it really a good idea to compose a complete object graph in one go? What if we don't need part of the graph, or only need it later? Doesn't it adversely affect response times?

Normally it doesn't, and if it does, there are elegant ways to address the issue.

In the rest of this blog post I will expand on this topic. To keep the discussion as simple as possible, I'll restrict my analysis to object trees instead of full graphs. This is quite a reasonable simplification as we should strive to avoid circular dependencies, but even in the case of full graphs the arguments and techniques put forward below hold.

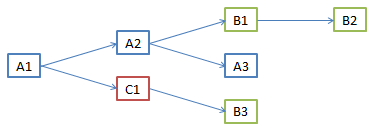

Consider a simple tree composed of classes from three different assemblies:

All the A classes (blue) are defined in the A assembly, B classes (green) in the B assembly, and the C1 class (red) in the C assembly. In code we create the tree with Constructor Injection like this:

    var t =
        new A1(
            new A2(
                new B1(
                    new B2()),
                new A3()),
            new C1(
                new B3()));

Given the tree above, we can now address the most common concerns about composing object trees in one go.

Will it be slow?

Most likely not. Keep in mind that Injection Constructors should be very simple, so not a lot of work is going on during composition. Obviously just creating new object instances takes a bit of time in itself, but we create object instances all the time in .NET code, and it's often a very fast operation.

Even when using DI Containers, which perform a lot of (necessary) extra work when creating objects, we can create tens of thousands of trees per second. Creation of objects simply isn't that big a deal.
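To get a feel for this on your own machine, here is a minimal sketch that composes the example tree by hand and times it. The classes are hypothetical stand-ins for the nodes above (the property names `Left`, `Right` and `Child` are my assumptions), and each Injection Constructor does nothing but a null check and an assignment. Note that this measures hand-wired composition, not a DI Container, and the numbers will vary by machine and runtime.

```csharp
using System;
using System.Diagnostics;

// Hypothetical stand-ins for the tree nodes; all in one file for brevity.
public sealed class B2 { }
public sealed class B3 { }
public sealed class A3 { }

public sealed class B1
{
    public B1(B2 child) { this.Child = child ?? throw new ArgumentNullException(nameof(child)); }
    public B2 Child { get; }
}

public sealed class C1
{
    public C1(B3 child) { this.Child = child ?? throw new ArgumentNullException(nameof(child)); }
    public B3 Child { get; }
}

public sealed class A2
{
    public A2(B1 left, A3 right)
    {
        this.Left = left ?? throw new ArgumentNullException(nameof(left));
        this.Right = right ?? throw new ArgumentNullException(nameof(right));
    }
    public B1 Left { get; }
    public A3 Right { get; }
}

public sealed class A1
{
    public A1(A2 left, C1 right)
    {
        this.Left = left ?? throw new ArgumentNullException(nameof(left));
        this.Right = right ?? throw new ArgumentNullException(nameof(right));
    }
    public A2 Left { get; }
    public C1 Right { get; }
}

public static class CompositionTiming
{
    // Composes the whole tree in one go, without a DI Container.
    public static A1 Compose() =>
        new A1(
            new A2(
                new B1(new B2()),
                new A3()),
            new C1(new B3()));

    public static void Main()
    {
        const int count = 100000;
        var watch = Stopwatch.StartNew();
        for (var i = 0; i < count; i++)
        {
            Compose();
        }
        watch.Stop();

        // The elapsed time depends on hardware and runtime; measure, don't guess.
        Console.WriteLine($"Composed {count} trees in {watch.ElapsedMilliseconds} ms");
    }
}
```

If a constructor in your own code base does more than this (I/O, heavy computation), that is what shows up in such a measurement, not the object creation itself.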

But still: what about assembly loading?

I glossed over an important point in the above argument. While object creation is fast, it sometimes takes a bit of time to load an assembly. The tree above uses classes from three different assemblies, so to create the tree all three assemblies must be loaded.

In many cases that's a performance hit you'll have to take because you need those classes anyway, but sometimes you might be concerned with taking this performance hit too early. However, I make the claim that in the vast majority of cases, this concern is irrelevant.

In this particular context there are two different types of applications: Request-based applications (web) and all the rest (desktop apps, daemons, batch-jobs, etc.).

Request-based applications

For request-based applications such as web sites and REST services, an object tree must be composed for each request. However, all requests are served by the same AppDomain, so once an assembly is loaded, it sticks around to be available for all subsequent requests. Thus, the first few requests will suffer a performance penalty from having to load all assemblies, but after that there will be no performance impact.

In short, in request-based applications, you can compose object trees with confidence. In only extremely rare cases should you have performance issues from composing the entire tree in one go.

Long-running applications

For long-running applications the entire object tree must be composed at start-up. For background services such as daemons and batch processes the start-up time probably doesn't matter much, but for desktop applications it can be of great importance.

In some cases the application requires the entire tree to be immediately available, in which case there's not a lot you can do. Still, once all assemblies have been loaded, actually creating the tree will be very fast.

In other cases an entire branch of the tree may not be immediately required. As an example, if the C1 node in the above graph isn't needed right away, we could improve start-up time if we could somehow defer creating that branch, because this would also defer loading of the entire C assembly.

Deferred branches

Since object creation is fast, the only case where it makes sense to defer loading of a branch is when creation of that branch causes an assembly to be loaded. If we can defer creation of such a branch, we can also defer loading of the assembly, thus improving the time it takes to compose the initial tree.

Imagine that we wish to defer creation of the C1 branch of the above tree. It will prevent the C assembly from being loaded because that assembly is not used in any other place in the tree. However, it will not prevent the B assembly from being loaded, since that assembly is also being used by the A2 node.

Still, in those rare situations where it makes sense to defer creation of a branch, we can make that cut into a part of the infrastructure of the tree. I originally described this technique as a reaction to the above-mentioned post by Jeffrey Palermo, but here's a restatement in the current context.

We can defer creating the C1 node by wrapping it in a lazy implementation of the same interface. The C1 node implements an interface called ISolo<IMarker>, so we can wrap it in a Virtual Proxy that defers creation of C1 until it's needed:

    public class LazySoloMarker : ISolo<IMarker>
    {
        private readonly Lazy<ISolo<IMarker>> lazy;

        public LazySoloMarker(Lazy<ISolo<IMarker>> lazy)
        {
            if (lazy == null)
            {
                throw new ArgumentNullException("lazy");
            }

            this.lazy = lazy;
        }

        #region ISolo<IMarker> Members

        public IMarker Item
        {
            get { return this.lazy.Value.Item; }
        }

        #endregion
    }

This Virtual Proxy takes a Lazy<ISolo<IMarker>> as input and defers to it to implement the members of the interface. The wrapped object is only created when the Value property is first accessed - which may be long after the LazySoloMarker instance was created.

The tree can now be composed like this:

    var t =
        new A1(
            new A2(
                new B1(
                    new B2()),
                new A3()),
            new LazySoloMarker(
                new Lazy<ISolo<IMarker>>(() => new C1(
                    new B3()))));

This retains all the behavior of the original tree, but defers creation of the C1 node until it's needed for the first time.
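The deferral is easy to observe in isolation. The following is a minimal, self-contained sketch rather than the real classes: `Marker` and the `InstancesCreated` counter are hypothetical additions for the demonstration, and this stand-in `C1` has no `B3` dependency, to keep the example short.

```csharp
using System;

public interface IMarker { }
public interface ISolo<T> { T Item { get; } }

// Hypothetical marker implementation, only needed to make the demo compile.
public sealed class Marker : IMarker { }

// Hypothetical stand-in for C1 that counts how often it is constructed.
public sealed class C1 : ISolo<IMarker>
{
    public static int InstancesCreated; // demo-only counter, not thread-safe

    public C1() { InstancesCreated++; }

    public IMarker Item { get; } = new Marker();
}

// The Virtual Proxy: same interface, creation deferred to first use.
public sealed class LazySoloMarker : ISolo<IMarker>
{
    private readonly Lazy<ISolo<IMarker>> lazy;

    public LazySoloMarker(Lazy<ISolo<IMarker>> lazy)
    {
        this.lazy = lazy ?? throw new ArgumentNullException(nameof(lazy));
    }

    public IMarker Item => this.lazy.Value.Item;
}

public static class DeferredBranchDemo
{
    public static void Main()
    {
        ISolo<IMarker> proxy =
            new LazySoloMarker(new Lazy<ISolo<IMarker>>(() => new C1()));
        Console.WriteLine(C1.InstancesCreated); // 0: the proxy exists, C1 does not

        var first = proxy.Item;
        Console.WriteLine(C1.InstancesCreated); // 1: created on first access

        var second = proxy.Item;
        Console.WriteLine(C1.InstancesCreated); // 1: Lazy<T> caches the instance
        Console.WriteLine(ReferenceEquals(first, second)); // True
    }
}
```

Consumers only ever see `ISolo<IMarker>`, so nothing else in the tree has to know that the branch is deferred.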

The bottom line is this: you can compose the entire object graph with confidence. It's not going to be a performance bottleneck.

Update (2013-08-19 08:09 UTC): For a more detailed treatment of this topic, watch my NDC 2013 talk Big Object Graphs Up Front.

Comments

The viewpoint however seems skewed towards request-based apps and I think really trivializes the innate lazy exploration nature of object graphs in rich-client apps (let's not call them desktop, as mobile is the exact same scenario - only difference is resources and processing power are a lot more limited and thus our architectures have to be better designed to account for it).

The main premise here is that in a rich-client app the trees you mention above should ALWAYS be lazily loaded, since the "branches" of the component tree are usually screens or their various sub-components (a dialog in some sub-screen for example). The branches are also more like "chains" as screens can have multiple follow-on screens that slowly load more content as you dive in deeper (while content you have visited higher up the chain may get unloaded - "lazy unloading" shall we say?). You never want to load screens that are not yet visible at app startup. In a desktop, fully-local app this results in a chuggy-performing or needlessly resource-wasteful app; in mobile it is simply impossible within the memory constraints. And of course... a majority of rich-client screens are hydrated with data pulled from the Internet and that data depends on either user input or server state (think accessing a venue listing on Yelp - you need the user to say which venue they want to view and the venue data is stored and updated server-side in a crowd-sourced manner not locally available to the user). So even if you had unlimited client-side memory you still couldn't pre-load the whole component object graph for the application up front.

I completely agree with your Composition Root article (/2011/07/28/CompositionRoot) and I think it describes things very beautifully. But what it also blissfully points out is that rich-client apps are poorly suited to a Composition Root and thus Dependency Injection approach.

DI does lazy-loading very poorly (just look at the amount of wrapper/plumbing you have to write for one component above). Now picture doing that for every screen in a mobile app (ours has ~30 screens and it's quite a trivial, minimal app for our problem domain). That's not to even mention whether you actually WANT to pull all the lifetime management into a single component - what could easily be seen as a break in Cohesion for logic belonging to the various sub-components. In web this doesn't really matter as the lifetime is usually all or nothing - it's either a singleton/per-request or per-instance. The request provides the full scoping needed for most processing. But in rich-client the lifetimes need to be finely managed based on screen navigation, user action and caching or memory constraints. Sub-components need to get loaded in and out dynamically in a lot more complex a manner than singletons or per-user-session. Again pulling this fine-tuned logic into a shared, centralized component is highly questionable - even if it was easily doable, which it's not.

I won't go into alternatives here (perhaps that's the subject of a post I should write up), but service location and manual instantiation end up being a much preferable approach in these kinds of long-running application scenarios. Testability can be achieved in other, possibly simpler ways (http://unitbox.codeplex.com) if that's the driving concern.

Thus I think the key driving differentiator comes down to object graph composition: are you able to feasibly and desirably load the whole thing at once (such as in web scenarios) or not? In rich-client apps (desktop and mobile) this is a striking NO. You need components to load sub-components at will and not be bound and dependent on a centralized component (Composition Root) to do so. The alternative is passing a dependency container around to every component in the system so that the components can resolve sub-components using the container - but we all know how major an anti-pattern that is (oh Android!...)

Would love to see the community start differentiating between the scenarios where DI makes sense and where it ends up being an anti-productive burden. And I think rich-client apps are evidently one of those places.

Thank you for your insightful comment. When it comes to the analysis of the needs of desktop and mobile applications, I completely agree that many nodes of the object graph would need to be lazy for exactly the reasons you so clearly explain.

However, I don't agree with your conclusions. Yes, there's a bit of plumbing involved with defining a Virtual Proxy over an expensive dependency, but I don't think it's a particularly problematic issue. There are a number of reasons for that:

- First of all, let's consider your example of an application with 30 screens. Obviously, you don't want to load all 30 screens up-front, but that doesn't mean that you'll need to write 30 custom Virtual Proxies. Hopefully, you have a single abstraction that loads screens, so that's only a single Virtual Proxy you'll need to write.

- As you point out, you'll also want to postpone loading of data for each screen. I completely agree, but you don't need a Service Locator for this. The most common approach for this is to inject a strongly typed Query service (think: Repository) into your Controllers (or whatever you use to load data). This would essentially be a stateless service object without much (if any) read-only state, so even in a mobile app, I doubt it would take up many resources. Even so, you can still lazy-load it if you need to - but do measure before jumping to conclusions.

- In the end, you may need to proxy more than a single service, but if you find yourself in a situation where you need to proxy 30+ services, that's more likely to indicate a violation of the Reused Abstractions Principle than a failure of DI and the Composition Root pattern.

- Finally, while it may seem like an overhead to create the plumbing code, it's likely to be very robust. Once you've created a Virtual Proxy for an interface, the only reason it has to change is if you change the interface itself. If you stick to Role Interfaces that shouldn't happen very often. Thus, while you may be concerned that creating Virtual Proxies will require extra effort, it'll abstract away an application concern that will tend to be very robust and have a very low maintenance overhead. I consider this far superior to the brittle approach of a Service Locator. In the end, you'll spend less time maintaining that code than if you go for a Service Locator. It's a classic case of a bigger up-front investment that pays huge dividends over time - just like TDD.
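To make the first point in the list above concrete, here is one possible sketch of a single Virtual Proxy over a screen-loading abstraction. Everything in it is hypothetical: `IScreen`, `IScreenLoader`, `ScreenLoader` and the screen name are names I made up for illustration, and a real application would load actual views instead of returning a title.

```csharp
using System;

public interface IScreen { string Title { get; } }

// Hypothetical single abstraction through which every screen is obtained.
public interface IScreenLoader { IScreen Load(string name); }

public sealed class Screen : IScreen
{
    public Screen(string title) { this.Title = title; }
    public string Title { get; }
}

// Hypothetical stand-in for the real, expensive loader.
public sealed class ScreenLoader : IScreenLoader
{
    public IScreen Load(string name) => new Screen(name);
}

// One Virtual Proxy covers every screen, because it wraps the
// abstraction rather than each individual screen.
public sealed class LazyScreenLoader : IScreenLoader
{
    private readonly Lazy<IScreenLoader> lazy;

    public LazyScreenLoader(Lazy<IScreenLoader> lazy)
    {
        this.lazy = lazy ?? throw new ArgumentNullException(nameof(lazy));
    }

    public IScreen Load(string name) => this.lazy.Value.Load(name);
}

public static class ScreenDemo
{
    public static void Main()
    {
        var real = new Lazy<IScreenLoader>(() => new ScreenLoader());
        IScreenLoader loader = new LazyScreenLoader(real);
        Console.WriteLine(real.IsValueCreated); // False: nothing loaded at start-up

        var screen = loader.Load("VenueDetails");
        Console.WriteLine(real.IsValueCreated); // True: created on first navigation
        Console.WriteLine(screen.Title);        // VenueDetails
    }
}
```

The proxy would be wired up once in the Composition Root; adding a thirty-first screen changes the real loader, not the proxy.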

Just found your blog while doing additional research for a conference talk I'm about to give. The content here is pure gold! I'll make sure to read all of it.

Your response to Marcel above is exactly what I was thinking of when I read his comment. I'm a professional Android developer and I confirm that the scenario described in Marcel's comment is best handled in a way you suggested.

I would like to know your expert opinion on this statement of mine: "When using DI, if a requirement for lazy loading of an injected service(s) arises, it should be treated as 'code smell'. Most probably, this requirement is due to a service(s) that does too much work upon construction. If this service can be refactored, then you should favor refactoring over lazy loading. If the service can't be refactored (e.g. part of the framework), then you should check whether the client can be refactored in a way that eliminates a need for lazy loading. Use lazy loading of injected services only as a last resort in order to compensate for an unfortunate design that you can't change".

Does the above statement make sense in your opinion?

Vasiliy, thank you for writing. That statement sounds reasonable. FWIW, objects that do too much work during construction violate Nikola Malovic's 4th law of IoC. Unfortunately, the original links to Nikola Malovic's laws of IoC no longer point at the original material, but I described the fourth law in an article called Injection Constructors should be simple.

If you want to give some examples of possible refactorings, you may want to take a look at the Decoraptor pattern. Additionally, if you're dealing with a third-party component, you can often create an Adapter that behaves nicely during construction.

Mark, thank you for such a quick response and additional information and references! I would vote for Decoraptor to be included in the next edition of GOF's book.

BTW, I think I found Nikola's article that you mentioned here.