ploeh blog danish software design

When properties are easier than examples

Sometimes, describing the properties of a function is easier than coming up with examples.

Instead of the term test-driven development you may occasionally encounter the phrase example-driven development. The idea is that each test is an example of how the system under test ought to behave. As you add more tests, you add more examples.

I've noticed that beginners often find it difficult to come up with good examples. This is the reason I've developed the Devil's advocate technique. It's meant as a heuristic that may help you identify the next good example. It's particularly effective if you combine it with the Transformation Priority Premise (TPP) and equivalence partitioning.

I've noticed, however, that translating concrete examples into code is not always straightforward. In the following, I'll describe an experience I had in 2020 while developing an online restaurant reservation system.

The code shown here is part of the sample code base that accompanies my book Code That Fits in Your Head.

Problem outline

I'm going to start by explaining what it was that I was trying to do. I wanted to present the maître d' (or other restaurant staff) with a schedule of a day's reservations. It should take the form of a list of time entries, one entry for every time one or more new reservations would start. I also wanted to list, for each entry, all reservations that were currently ongoing, or would soon start. Here's a simple example, represented as JSON:

    {
      "date": "2023-08-23",
      "entries": [
        {
          "time": "20:00:00",
          "reservations": [
            {
              "id": "af5feb35f62f475cb02df2a281948829",
              "at": "2023-08-23T20:00:00.0000000",
              "email": "crystalmeth@example.net",
              "name": "Crystal Metheney",
              "quantity": 3
            },
            {
              "id": "eae39bc5b3a7408eb2049373b2661e32",
              "at": "2023-08-23T20:30:00.0000000",
              "email": "x.benedict@example.org",
              "name": "Benedict Xavier",
              "quantity": 4
            }
          ]
        },
        {
          "time": "20:30:00",
          "reservations": [
            {
              "id": "af5feb35f62f475cb02df2a281948829",
              "at": "2023-08-23T20:00:00.0000000",
              "email": "crystalmeth@example.net",
              "name": "Crystal Metheney",
              "quantity": 3
            },
            {
              "id": "eae39bc5b3a7408eb2049373b2661e32",
              "at": "2023-08-23T20:30:00.0000000",
              "email": "x.benedict@example.org",
              "name": "Benedict Xavier",
              "quantity": 4
            }
          ]
        }
      ]
    }

To keep the example simple, there are only two reservations for that particular day: one for 20:00 and one for 20:30. Since something happens at both of these times, both times have an entry. My intent isn't necessarily that a user interface should show the data in this way, but I wanted to make the relevant data available so that a user interface could show it if it needed to.

The first entry for 20:00 shows both reservations. It shows the reservation for 20:00 for obvious reasons, and it shows the reservation for 20:30 to indicate that the staff can expect a party of four at 20:30. Since this restaurant runs with a single seating per evening, this effectively means that although the reservation hasn't started yet, it still reserves a table. This gives a user interface an opportunity to show the state of the restaurant at that time. The table for the 20:30 party isn't active yet, but it's effectively reserved.

For restaurants with shorter seating durations, the schedule should reflect that. If the seating duration is, say, two hours, and someone has a reservation for 20:00, you can sell that table to another party at 18:00, but not at 18:30. I wanted the functionality to take such things into account.
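To make that arithmetic concrete, here's a minimal sketch of an overlap check. It's an illustration only: `SeatingSketch` and its `Overlaps` method are hypothetical names, not the types from the code base, and the sketch assumes that each seating occupies the half-open interval from its start time until its start time plus the seating duration.

```csharp
using System;

// Hypothetical helper for illustration; not part of the sample code base.
public static class SeatingSketch
{
    // Assumes each seating occupies the half-open interval
    // [start, start + duration). Two seatings overlap when each one
    // starts before the other one ends.
    public static bool Overlaps(DateTime a, DateTime b, TimeSpan duration)
    {
        return a < b + duration && b < a + duration;
    }
}
```

With a two-hour duration and an existing reservation at 20:00, a candidate at 18:00 ends exactly when the existing one starts, so `Overlaps` returns false. A candidate at 18:30 would run until 20:30, so `Overlaps` returns true.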

The other entry in the above example is for 20:30. Again, both reservations are shown because one is ongoing (and takes up a table) and the other is just starting.

Desired API

A major benefit of test-driven development (TDD) is that you get fast feedback on the API you intend for the system under test (SUT). You write a test against the intended API, and besides a pass-or-fail result, you also learn something about the interaction between client code and the SUT. You often learn that the original design you had in mind isn't going to work well once it meets the harsh realities of an actual programming language.

In TDD, you often have to revise the design multiple times during the process.

This doesn't mean that you can't have a plan. You can't write the initial test if you have no inkling of what the API should look like. For the schedule feature, I did have a plan. It turned out to hold, more or less. I wanted the API to be a method on a class called MaitreD, which already had these four properties and the constructors to support them:

    public TimeOfDay OpensAt { get; }
    public TimeOfDay LastSeating { get; }
    public TimeSpan SeatingDuration { get; }
    public IEnumerable<Table> Tables { get; }

I planned to implement the new feature as a new instance method on that class:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)

This plan turned out to hold in general, although I ultimately decided to simplify the return type by getting rid of the Occurrence container. It's going to be present throughout this article, however, so I need to briefly introduce it. I meant to use it as a generic container of anything, but with a time-stamp associated with the value:

    public sealed class Occurrence<T>
    {
        public Occurrence(DateTime at, T value)
        {
            At = at;
            Value = value;
        }

        public DateTime At { get; }
        public T Value { get; }

        public Occurrence<TResult> Select<TResult>(Func<T, TResult> selector)
        {
            if (selector is null)
                throw new ArgumentNullException(nameof(selector));

            return new Occurrence<TResult>(At, selector(Value));
        }

        public override bool Equals(object? obj)
        {
            return obj is Occurrence<T> occurrence &&
                   At == occurrence.At &&
                   EqualityComparer<T>.Default.Equals(Value, occurrence.Value);
        }

        public override int GetHashCode()
        {
            return HashCode.Combine(At, Value);
        }
    }

You may notice that due to the presence of the Select method this is a functor.
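As a small illustration of what that means in practice, here's a sketch that maps the value inside an occurrence while leaving the time-stamp alone. The class is an abbreviated copy of the one above (I've left out the equality members), and `OccurrenceDemo` and `Describe` are hypothetical names that I've made up for the occasion.

```csharp
using System;

// Abbreviated copy of Occurrence<T>; equality members omitted.
public sealed class Occurrence<T>
{
    public Occurrence(DateTime at, T value)
    {
        At = at;
        Value = value;
    }

    public DateTime At { get; }
    public T Value { get; }

    public Occurrence<TResult> Select<TResult>(Func<T, TResult> selector)
    {
        if (selector is null)
            throw new ArgumentNullException(nameof(selector));

        return new Occurrence<TResult>(At, selector(Value));
    }
}

// Hypothetical demo class; not part of the sample code base.
public static class OccurrenceDemo
{
    // Maps a party size to a label. Only the value changes;
    // Select carries the At time-stamp over unchanged.
    public static Occurrence<string> Describe(Occurrence<int> quantity)
    {
        return quantity.Select(q => $"party of {q}");
    }
}
```

Calling `Describe` with an occurrence of the number 4 at 20:00 produces an occurrence of the string "party of 4" with the same time-stamp. Because the method is named Select, C# query syntax (`from q in quantity select ...`) also works on the type.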

There's also a little extension method that we may later encounter:

    public static Occurrence<T> At<T>(this T value, DateTime at)
    {
        return new Occurrence<T>(at, value);
    }

The plan, then, is to return a collection of occurrences, each of which may contain a collection of tables that are relevant to include at that time entry.

Examples

When I embarked on developing this feature, I thought that it was a good fit for example-driven development. Since the input for Schedule requires a collection of Reservation objects, each of which comes with some data, I expected the test cases to become verbose. So I decided to bite the bullet right away and define test cases using xUnit.net's [ClassData] feature. I wrote this test:

    [Theory, ClassData(typeof(ScheduleTestCases))]
    public void Schedule(
        MaitreD sut,
        IEnumerable<Reservation> reservations,
        IEnumerable<Occurrence<Table[]>> expected)
    {
        var actual = sut.Schedule(reservations);
        Assert.Equal(
            expected.Select(o => o.Select(ts => ts.AsEnumerable())),
            actual);
    }

This is almost as simple as it can be: Call the method and verify that expected is equal to actual. The only slightly complicated piece is the nested projection of expected from IEnumerable<Occurrence<Table[]>> to IEnumerable<Occurrence<IEnumerable<Table>>>. There are ugly reasons for this that I don't want to discuss here, since they have no bearing on the actual topic, which is coming up with tests.

I also added the ScheduleTestCases class and a single test case:

    private class ScheduleTestCases :
        TheoryData<MaitreD, IEnumerable<Reservation>, IEnumerable<Occurrence<Table[]>>>
    {
        public ScheduleTestCases()
        {
            // No reservations, so no occurrences:
            Add(new MaitreD(
                    TimeSpan.FromHours(18),
                    TimeSpan.FromHours(21),
                    TimeSpan.FromHours(6),
                    Table.Communal(12)),
                Array.Empty<Reservation>(),
                Array.Empty<Occurrence<Table[]>>());
        }
    }

The simplest implementation that passed that test was this:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        yield break;
    }

Okay, hardly rocket science, but this was just a test case to get started. So I added another one:

    private void SingleReservationCommunalTable()
    {
        var table = Table.Communal(12);
        var r = Some.Reservation;
        Add(new MaitreD(
                TimeSpan.FromHours(18),
                TimeSpan.FromHours(21),
                TimeSpan.FromHours(6),
                table),
            new[] { r },
            new[] { new[] { table.Reserve(r) }.At(r.At) });
    }

This test case adds a single reservation to a restaurant with a single communal table. The expected result is now a single occurrence with that reservation. In true TDD fashion, this new test case caused a test failure, and I now had to adjust the Schedule method to pass all tests:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        if (reservations.Any())
        {
            var r = reservations.First();
            yield return new[] { Table.Communal(12).Reserve(r) }.AsEnumerable().At(r.At);
        }
        yield break;
    }

You might have wanted to jump to something prettier right away, but I wanted to proceed according to the Devil's advocate technique. I was concerned that I was going to mess up the implementation if I moved too fast.

And that was when I basically hit a wall.

Property-based testing to the rescue

I couldn't figure out how to proceed from there. Which test case ought to be the next? I wanted to follow the spirit of the TPP and pick a test case that would cause another incremental step in the right direction. The sheer number of possible combinations overwhelmed me, though. Should I adjust the reservations? The table configuration for the MaitreD class? The SeatingDuration?

It's possible that you'd be able to conjure up the perfect next test case, but I couldn't. I actually let it stew for a couple of days before I decided to give up on the example-driven approach. While I couldn't see a clear path forward with concrete examples, I had a vivid vision of how to proceed with property-based testing.

I left the above tests in place and instead added a new test class to my code base. Its only purpose: to test the Schedule method. The test method itself is only a composition of various data definitions and the actual test code:

    [Property]
    public Property Schedule()
    {
        return Prop.ForAll(
            GenReservation.ArrayOf().ToArbitrary(),
            ScheduleImp);
    }

This uses FsCheck 2.14.3, which is written in F# and composes better if you also write the tests in F#. In order to make things a little more palatable for C# developers, I decided to implement the building blocks for the property using methods and class properties.

The ScheduleImp method, for example, actually implements the test. This method runs a hundred times (FsCheck's default value) with randomly generated input values:

    private static void ScheduleImp(Reservation[] reservations)
    {
        // Create a table for each reservation, to ensure that all
        // reservations can be allotted a table.
        var tables = reservations.Select(r => Table.Standard(r.Quantity));
        var sut = new MaitreD(
            TimeSpan.FromHours(18),
            TimeSpan.FromHours(21),
            TimeSpan.FromHours(6),
            tables);

        var actual = sut.Schedule(reservations);

        Assert.Equal(
            reservations.Select(r => r.At).Distinct().Count(),
            actual.Count());
    }

The step you see in the first line of code is an example of a trick that I find myself doing often with property-based testing: instead of trying to find some good test values for a particular set of circumstances, I create a set of circumstances that fits the randomly generated test values. As the code comment explains, given a set of Reservation values, it creates a table that fits each reservation. In that way I ensure that all the reservations can be allocated a table.

I'll soon return to how those random Reservation values are generated, but first let's discuss the rest of the test body. Given a valid MaitreD object, it calls the Schedule method. In the assertion phase, it so far only verifies that there are as many time entries in actual as there are distinct At values in reservations.

That's hardly a comprehensive description of the SUT, but it's a start. The following implementation passes the new property as well as the two examples above:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        return
            from r in reservations
            group Table.Communal(12).Reserve(r) by r.At into g
            select g.AsEnumerable().At(g.Key);
    }

I know that many C# programmers don't like query syntax, but I've always had a soft spot for it. I liked it, but wasn't sure that I'd be able to keep it up as I added more constraints to the property.

Generators

Before we get to that, though, I promised to show you how the random reservations are generated. FsCheck has an API for that, and it's also query-syntax-friendly:

    private static Gen<Email> GenEmail =>
        from s in Arb.Default.NonWhiteSpaceString().Generator
        select new Email(s.Item);

    private static Gen<Name> GenName =>
        from s in Arb.Default.StringWithoutNullChars().Generator
        select new Name(s.Item);

    private static Gen<Reservation> GenReservation =>
        from id in Arb.Default.Guid().Generator
        from d in Arb.Default.DateTime().Generator
        from e in GenEmail
        from n in GenName
        from q in Arb.Default.PositiveInt().Generator
        select new Reservation(id, d, e, n, q.Item);

GenReservation is a generator of Reservation values (for a simplified explanation of how such a generator might work, see The Test Data Generator functor). It's composed from smaller generators, among these GenEmail and GenName. The rest of the generators are general-purpose generators defined by FsCheck.

If you refer back to the Schedule property above, you'll see that it uses GenReservation to produce an array generator. This is another general-purpose combinator provided by FsCheck. It turns any single-object generator into a generator of arrays containing such objects. Some of these arrays will be empty, which is often desirable, because it means that you'll automatically get coverage of that edge case.

Iterative development

As I already discovered in 2015, some problems are just much better suited for property-based development than example-driven development. As I expected, this one turned out to be just such a problem. (Recently, Hillel Wayne identified a set of problems with no clear properties as rho problems. I wonder if we should pick another Greek letter for this type of problem that almost oozes properties. Sigma problems? Maybe we should just call them describable problems...)

For the next step, I didn't have to write a completely new property. I only had to add a new assertion, thereby strengthening the postconditions of the Schedule method:

    Assert.Equal(
        actual.Select(o => o.At).OrderBy(d => d),
        actual.Select(o => o.At));

I added the above assertion to ScheduleImp after the previous assertion. It simply states that actual should be sorted in ascending order.
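The trick of comparing a sequence to a sorted copy of itself works on any sequence of comparable values. Here's a minimal stand-alone sketch of the same idea, using only LINQ rather than xUnit.net; `OrderingSketch` and `IsAscending` are hypothetical names for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for illustration; not part of the sample code base.
public static class OrderingSketch
{
    // True when the sequence equals a sorted copy of itself,
    // which is the same comparison the assertion makes.
    public static bool IsAscending(IEnumerable<DateTime> times)
    {
        var list = times.ToList();
        return list.SequenceEqual(list.OrderBy(t => t));
    }
}
```

An empty sequence and a single-element sequence both trivially pass this check, so the new assertion doesn't conflict with the degenerate test cases.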

To pass this new requirement I added an ordering clause to the implementation:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        return
            from r in reservations
            group Table.Communal(12).Reserve(r) by r.At into g
            orderby g.Key
            select g.AsEnumerable().At(g.Key);
    }

It passes all tests. Commit to Git. Next.

Table configuration

If you consider the current implementation, there's much not to like. The worst offence, I think, is that it conjures a hard-coded communal table out of thin air. The method ought to use the table configuration passed to the MaitreD object. This seems like an obvious flaw to address. I therefore added this to the property:

        Assert.All(actual, o => AssertTables(tables, o.Value));
    }

    private static void AssertTables(
        IEnumerable<Table> expected,
        IEnumerable<Table> actual)
    {
        Assert.Equal(expected.Count(), actual.Count());
    }

It's just another assertion that uses the helper assertion also shown. As a first pass, it's not enough to cheat the Devil, but it sets me up for my next move. The plan is to assert that no tables are generated out of thin air. Currently, AssertTables only verifies that the actual count of tables in each occurrence matches the expected count.

The Devil easily foils that plan by generating a table for each reservation:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        var tables = reservations.Select(r => Table.Communal(12).Reserve(r));
        return
            from r in reservations
            group r by r.At into g
            orderby g.Key
            select tables.At(g.Key);
    }

This (unfortunately) passes all tests, so commit to Git and move on.

The next move I made was to add an assertion to AssertTables:

    Assert.Equal(
        expected.Sum(t => t.Capacity),
        actual.Sum(t => t.Capacity));

This new requirement states that the total capacity of the actual tables should be equal to the total capacity of the allocated tables. It doesn't prevent the Devil from generating tables out of thin air, but it makes it harder. At least, it makes it so hard that I found it more reasonable to use the supplied table configuration:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        var tables = reservations.Zip(Tables, (r, t) => t.Reserve(r));
        return
            from r in reservations
            group r by r.At into g
            orderby g.Key
            select tables.At(g.Key);
    }

The implementation of Schedule still cheats because it 'knows' that no tests (except for the degenerate test where there are no reservations) have surplus tables in the configuration. It takes advantage of that knowledge to zip the two collections, which is really not appropriate.
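To see why zipping is fragile, consider this small sketch. It uses plain integers as stand-ins for reservation quantities and table capacities; `ZipSketch` and `Pair` are hypothetical names that aren't part of the code base. The standard `Enumerable.Zip` method pairs elements purely by position and stops when the shorter sequence runs out.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for illustration; integers stand in for
// reservation quantities and table capacities.
public static class ZipSketch
{
    // Pairs each party size with a table capacity by position only.
    // Zip stops at the end of the shorter sequence, and nothing
    // checks whether the party actually fits the table.
    public static IReadOnlyList<(int quantity, int capacity)> Pair(
        IEnumerable<int> quantities,
        IEnumerable<int> capacities)
    {
        return quantities
            .Zip(capacities, (q, c) => (quantity: q, capacity: c))
            .ToList();
    }
}
```

Given quantities 2 and 4 and capacities 4, 2, and 6, `Pair` returns only two pairs: the surplus table silently disappears, and the party of four is matched with the table for two. The zip only works as long as the tables line up one-to-one with the reservations, which is exactly what the test data has guaranteed so far.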

Still, it seems that things are moving in the right direction.

Generated SUT

Until now, ScheduleImp has been using a hard-coded sut. It's time to change that.

To keep my steps as small as possible, I decided to start with the SeatingDuration since it was currently not being used by the implementation. This meant that I could start randomising it without affecting the SUT. Since this was a code change of middling complexity in the test code, I found it most prudent to move in such a way that I didn't have to change the SUT as well.

I completely extracted the initialisation of the sut to a method argument of the ScheduleImp method, and adjusted it accordingly:

    private static void ScheduleImp(MaitreD sut, Reservation[] reservations)
    {
        var actual = sut.Schedule(reservations);

        Assert.Equal(
            reservations.Select(r => r.At).Distinct().Count(),
            actual.Count());
        Assert.Equal(
            actual.Select(o => o.At).OrderBy(d => d),
            actual.Select(o => o.At));
        Assert.All(actual, o => AssertTables(sut.Tables, o.Value));
    }

This meant that I also had to adjust the calling property:

    public Property Schedule()
    {
        return Prop.ForAll(
            GenReservation
                .ArrayOf()
                .SelectMany(rs => GenMaitreD(rs).Select(m => (m, rs)))
                .ToArbitrary(),
            t => ScheduleImp(t.m, t.rs));
    }

You've already seen GenReservation, but GenMaitreD is new:

    private static Gen<MaitreD> GenMaitreD(IEnumerable<Reservation> reservations)
    {
        // Create a table for each reservation, to ensure that all
        // reservations can be allotted a table.
        var tables = reservations.Select(r => Table.Standard(r.Quantity));
        return
            from seatingDuration in Gen.Choose(1, 6)
            select new MaitreD(
                TimeSpan.FromHours(18),
                TimeSpan.FromHours(21),
                TimeSpan.FromHours(seatingDuration),
                tables);
    }

The only difference from before is that the new MaitreD object is now initialised from within a generator expression. The duration is randomly picked from the range of one to six hours (those numbers are my arbitrary choices).

Notice that it's possible to base one generator on values randomly generated by another generator. Here, reservations are randomly produced by GenReservation and merged to a tuple with SelectMany, as you can see above.

This in itself didn't impact the SUT, but set up the code for my next move, which was to generate more tables than reservations, so that there'd be some free tables left after the schedule allocation. I first added a more complex table generator:

    /// <summary>
    /// Generate a table configuration that can at minimum accommodate all
    /// reservations.
    /// </summary>
    /// <param name="reservations">The reservations to accommodate</param>
    /// <returns>A generator of valid table configurations.</returns>
    private static Gen<IEnumerable<Table>> GenTables(IEnumerable<Reservation> reservations)
    {
        // Create a table for each reservation, to ensure that all
        // reservations can be allotted a table.
        var tables = reservations.Select(r => Table.Standard(r.Quantity));
        return
            from moreTables in Gen.Choose(1, 12).Select(Table.Standard).ArrayOf()
            from allTables in Gen.Shuffle(tables.Concat(moreTables))
            select allTables.AsEnumerable();
    }

This function first creates standard tables that exactly accommodate each reservation. It then generates an array of moreTables, each fitting between one and twelve people. It then mixes those tables together with the ones that fit a reservation and returns the sequence. Since moreTables can be empty, it's possible that the entire sequence of tables only just accommodates the reservations.

I then modified GenMaitreD to use GenTables:

    private static Gen<MaitreD> GenMaitreD(IEnumerable<Reservation> reservations)
    {
        return
            from seatingDuration in Gen.Choose(1, 6)
            from tables in GenTables(reservations)
            select new MaitreD(
                TimeSpan.FromHours(18),
                TimeSpan.FromHours(21),
                TimeSpan.FromHours(seatingDuration),
                tables);
    }

This provoked a change in the SUT:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        return
            from r in reservations
            group r by r.At into g
            orderby g.Key
            select Allocate(g).At(g.Key);
    }

The Schedule method now calls a private helper method called Allocate. This method already existed, since it supports the algorithm used to decide whether or not to accept a reservation request.

Rinse and repeat

I hope that a pattern starts to emerge. I kept adding more and more randomisation to the data generators, while I also added more and more assertions to the property. Here's what it looked like after a few more iterations:

    private static void ScheduleImp(MaitreD sut, Reservation[] reservations)
    {
        var actual = sut.Schedule(reservations);

        Assert.Equal(
            reservations.Select(r => r.At).Distinct().Count(),
            actual.Count());
        Assert.Equal(
            actual.Select(o => o.At).OrderBy(d => d),
            actual.Select(o => o.At));
        Assert.All(actual, o => AssertTables(sut.Tables, o.Value));
        Assert.All(
            actual,
            o => AssertRelevance(reservations, sut.SeatingDuration, o));
    }

While AssertTables didn't change further, I added another helper assertion called AssertRelevance. I'm not going to show it here, but it checks that each occurrence only contains reservations that overlap that point in time, give or take the SeatingDuration.

I also made the reservation generator more sophisticated. If you consider the one defined above, one flaw is that it generates reservations at random dates. The chance that it'll generate two reservations that are actually adjacent in time is minimal. To counter this problem, I added a function that would return a generator of adjacent reservations:

    /// <summary>
    /// Generate an adjacent reservation with a 25% chance.
    /// </summary>
    /// <param name="reservation">The candidate reservation</param>
    /// <returns>
    /// A generator of an array of reservations. The generated array is
    /// either a singleton or a pair. In 75% of the cases, the input
    /// <paramref name="reservation" /> is returned as a singleton array.
    /// In 25% of the cases, the array contains two reservations: the input
    /// reservation as well as another reservation adjacent to it.
    /// </returns>
    private static Gen<Reservation[]> GenAdjacentReservations(Reservation reservation)
    {
        return
            from adjacent in GenReservationAdjacentTo(reservation)
            from useAdjacent in Gen.Frequency(
                new WeightAndValue<Gen<bool>>(3, Gen.Constant(false)),
                new WeightAndValue<Gen<bool>>(1, Gen.Constant(true)))
            let rs = useAdjacent ?
                new[] { reservation, adjacent } :
                new[] { reservation }
            select rs;
    }

    private static Gen<Reservation> GenReservationAdjacentTo(Reservation reservation)
    {
        return
            from minutes in Gen.Choose(-6 * 4, 6 * 4) // 4: quarters/h
            from r in GenReservation
            select r.WithDate(
                reservation.At + TimeSpan.FromMinutes(minutes));
    }

Now that I look at it again, I wonder whether I could have expressed this in a simpler way... It gets the job done, though.

I then defined a generator that would create either entirely random reservations, or random reservations with some adjacent ones mixed in:

    private static Gen<Reservation[]> GenReservations
    {
        get
        {
            var normalArrayGen = GenReservation.ArrayOf();
            var adjacentReservationsGen = GenReservation.ArrayOf()
                .SelectMany(rs => Gen
                    .Sequence(rs.Select(GenAdjacentReservations))
                    .SelectMany(rss => Gen.Shuffle(
                        rss.SelectMany(rs => rs))));
            return Gen.OneOf(normalArrayGen, adjacentReservationsGen);
        }
    }

I changed the property to use this generator instead:

    [Property]
    public Property Schedule()
    {
        return Prop.ForAll(
            GenReservations
                .SelectMany(rs => GenMaitreD(rs).Select(m => (m, rs)))
                .ToArbitrary(),
            t => ScheduleImp(t.m, t.rs));
    }

I could have kept at it longer, but this turned out to be good enough to bring about the change in the SUT that I was looking for.

Implementation

These incremental changes iteratively brought me closer and closer to an implementation that I think has the correct behaviour:

    public IEnumerable<Occurrence<IEnumerable<Table>>> Schedule(
        IEnumerable<Reservation> reservations)
    {
        return
            from r in reservations
            group r by r.At into g
            orderby g.Key
            let seating = new Seating(SeatingDuration, g.Key)
            let overlapping = reservations.Where(seating.Overlaps)
            select Allocate(overlapping).At(g.Key);
    }

Contrary to my initial expectations, I managed to keep the implementation to a single query expression all the way through.

Conclusion

This was a problem that I was stuck on for a couple of days. I could describe the properties I wanted the function to have, but I had a hard time coming up with a good set of examples for unit tests.

You may think that using property-based testing looks even more complicated, and I admit that it's far from trivial. The problem itself, however, isn't easy, and while the property-based approach may look daunting, it turned an intractable problem into a manageable one. That's a win in my book.

It's also worth noting that this would all have looked more elegant in F#. There's an object-oriented tax to be paid when using FsCheck from C#.

Accountability and free speech

A most likely naive suggestion.

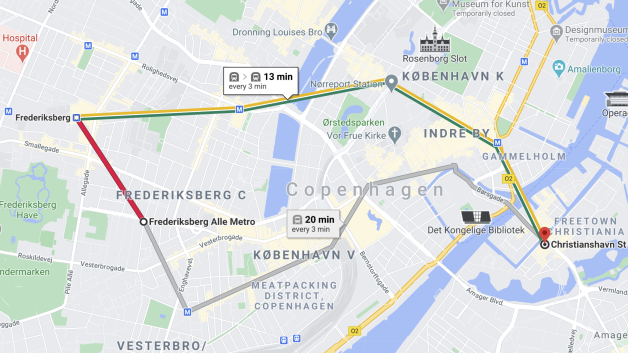

A few years ago, my mother (born 1940) went to Paris on vacation with her older sister. She was a little concerned about whether she'd be able to navigate the Métro, so she asked me to print out all sorts of maps in advance. I wasn't keen on another encounter with my nemesis, the printer, so I instead showed her how she could use Google Maps to get on-demand routes. Google Maps now includes timetables and line information for many metropolitan subway lines around the world. I've successfully used it to find my way around London, Paris, New York, Tokyo, Sydney, and Melbourne.

It even works in my backwater home-town:

It's rapidly turning into indispensable digital infrastructure, and that's beginning to trouble me.

You can still get paper maps of the various rapid transit systems around the world, but for how long?

Digital infrastructure

If you're old enough, you may remember phone books. In the days of land lines and analogue phones, you'd look up a number and then dial it. As the internet became increasingly ubiquitous, phone directories went online as well. Paper phone books were seen as waste, and ultimately disappeared from everyday life.

You can think of paper phone books as a piece of infrastructure that's now gone. I don't miss them, but it's worth reflecting on what impact their disappearance has had. Today, if I need to find a phone number, Google is often the place I go. The physical infrastructure is gone, replaced by a digital infrastructure.

Google, in particular, now provides important infrastructure for modern society. Not only web search, but also maps, video sharing, translation, emails, and much more.

Other companies offer other services that verge on being infrastructure. Facebook is more than just updates to friends and families. Many organisations, including schools and universities, coordinate activities via Facebook, and the political discourse increasingly happens there and on Twitter.

We've come to rely on these 'free' services to a degree that resembles our reliance on physical infrastructure like the power grid, running water, roads, telephones, etcetera.

TANSTAAFL

Robert A. Heinlein popularised the phrase There ain't no such thing as a free lunch (TANSTAAFL) in his excellent book The Moon is a Harsh Mistress. Indeed, the digital infrastructure isn't free.

Recent events have magnified some of the cost we're paying. In January, Facebook and Twitter suspended the then-president of the United States from the platforms. I've little sympathy for Donald Trump, who strikes me as an uncouth narcissist, but since I'm a Danish citizen living in Denmark, I don't think that I ought to have much of an opinion about American politics.

I do think, on the other hand, that the suspension sets an uncomfortable precedent.

Should we let a handful of tech billionaires control essential infrastructure? This time, the victim was someone half the world was glad to see go, but who's next? Might these companies suspend the accounts of politicians who work against them?

Never in the history of the world has decision power over so many people been so concentrated. I'll remind my readers that Facebook, Twitter, Google, etcetera are in worldwide use. The decisions of a handful of majority shareholders can now affect billions of people.

If you're suspended from one of these platforms, you may lose your ability to participate in school activities, to find your way through a foreign city, or to take part in the democratic discussion.

The case for regulation

In this article, I mainly want to focus on the free-speech issue related mostly to Facebook and Twitter. These companies make money from ads. The longer you stay on the platform, the more ads they can show you, and they've discovered that nothing pulls you in like anger. These incentives are incredibly counter-productive for society.

Users are implicitly encouraged to create or spread anger, yet few are held accountable. One reason is that you can easily create new accounts without disclosing your real-world identity. This makes it difficult to hold users accountable for their utterances.

Instead of clear rules, users are suspended for inexplicable and arbitrary reasons. It seems that these are mostly the verdicts of algorithms, but as we've seen in the case of Donald Trump, it can also be the result of an undemocratic, but political decision.

Everyone can lose their opportunities for self-expression for arbitrary reasons. This is a free-speech issue.

Yes, free speech.

I'm well aware that Facebook and Twitter are private companies, and no-one has any right to an account on those platforms. That's why I started this essay by discussing how these services are turning into infrastructure.

More than a century ago, the telephone was new technology operated by private companies. I know the Danish history best, but it serves well as an example. KTAS, one of the world's first telephone companies, was a private company. Via mergers and acquisitions, it still is, but as it grew and became the backbone of the country's electronic communications network, the government introduced legislation. For decades, it and its sister companies were regional monopolies.

The government allowed the monopoly in exchange for various obligations. Even when the monopoly ended in 1996, the original monopoly companies inherited these legal obligations. For instance, every Danish citizen has the right to get a land-line installed, even if that installation by itself is a commercial loss for the company. This could be the case on certain remote islands or other rural areas. The Danish state compensates the telephone operator for this potential loss.

Many other utility companies run in a similar fashion. Some are semi-public, some are private, but common to water, electricity, garbage disposal, heating, and other operators is that they are regulated by government.

When a utility becomes important to the functioning of society, a sensible government steps in to regulate it to ensure the further smooth functioning of society.

I think that the services offered by Google, Facebook, and Twitter are fast approaching a level of significance that ought to trigger government regulation.

I don't say that lightly. I'm actually quite libertarian in my views, but I'm no anarcho-libertarian. I do acknowledge that a state offers essential services such as protection of property rights, a judicial system, and so on.

Accountability #

How should we regulate social media? I think we should start by exchanging accountability for the right to post.

Let's take another step back for a moment. For generations, it's been possible to send a letter to the editor of a regular newspaper. If the editor deems it worthy of publication, it'll be printed in the next issue. You don't have any right to get your letter published; this happens at the discretion of the editor.

Why does it work that way? It works that way because the editor is accountable for what's printed in the paper. Ultimately, he or she can go to jail for what's printed.

Freedom of speech is more complicated than it looks at first glance. For instance, censorship in Denmark was abolished with the 1849 constitution. This doesn't mean that you can freely say whatever you'd like; it only means that government has no right to prevent you from saying or writing something. You can still be prosecuted after the fact if you say or write something libellous, or if you incite violence, or if you disclose secrets that you've agreed to keep, etcetera.

This is accountability. It works when the person making the utterance is known and within reach of the law.

Notice, particularly, that an editor-in-chief is accountable for a newspaper's contents. Why isn't Facebook or Twitter accountable for content?

These companies have managed to spin a story that they're platforms rather than publishers. This argument might have had some legs ten years ago. When I started using Twitter in 2009, the algorithm was easy to understand: My feed showed the tweets from the accounts that I followed, in the order that they were published. This was easy to understand, and Twitter didn't, as far as I could tell, edit the feed. Since no editing took place, I find the platform argument applicable.

Later, the social networks began editing the feeds. No humans were directly involved, but today, some posts are amplified while others get little attention. Since this is done by 'algorithms' rather than editors, the companies have managed to convince lawmakers that they're still only platforms. Let's be real, though. Apparently, the public needs a programmer to say the following, and I'm a programmer.

Programmers, employees of those companies, wrote the algorithms. They experimented and tweaked those algorithms to maximise 'engagement'. Humans are continually involved in this process. Editing takes place.

I think it's time to stop treating social media networks as platforms, and start treating them as publishers.

Practicality #

Facebook and Twitter will protest that it's practically impossible for them to 'edit' what happens on the networks. I find this argument unconvincing, since editing already takes place.

I'd like to suggest a partial way out, though. This brings us back to regulation.

I think that each country or jurisdiction should make it possible for users to opt in to being accountable. Users should be able to verify their accounts as real, legal persons. If they do that, their activity on the platform should be governed by the jurisdiction in which they reside, instead of by the arbitrary and ill-defined 'community guidelines' offered by the networks. You'd be accountable for your utterances according to local law where you live. The advantage, though, is that this accountability buys you the right to be present on the platform. You can be sued for your posts, but you can't be kicked off.

I'm aware that not everyone lives in a benign welfare state such as Denmark. This is why I suggest this as an option. Even if you live in a state that regulates social media as outlined above, the option to stay anonymous should remain. This is how it already works, and I imagine that it should continue to work like that for this group of users. The cost of staying anonymous, though, is that you submit to the arbitrary and despotic rules of those networks. For many people, including minorities and citizens of oppressive states, this is likely to remain a better trade-off.

Problems #

This suggestion is in no way perfect. I can already identify one problem with it.

A country could grant its citizens the right to conduct infowar on an adversary; think troll armies and the like. If these citizens are verified and 'accountable' to their local government, but this government encourages rather than punishes incitement to violence in a foreign country, then how do we prevent that?

I have a few half-baked ideas, but I'd rather leave the problem here in the hope that it might inspire other people to start thinking about it, too.

Conclusion #

This is a post that I've waited for a long time for someone else to write. If someone already did, I'm not aware of it. I can think of three reasons:

- It's a really stupid idea.

- It's a common idea, but no-one talks about it because of pluralistic ignorance.

- It's an original idea that no-one else has had.

The idea is, in short, to make it optional for users to 'buy' the right to stay on a social network for the price of being held legally accountable. This requires some national as well as international regulation of the digital infrastructure.

Could it work? I don't know, but isn't it worth discussing?

ASP.NET POCO Controllers: an experience report

Controllers don't have to derive from a base class.

In most tutorials about ASP.NET web APIs you'll be told to let Controller classes derive from a base class. It may be convenient if you believe that productivity is measured by how fast you can get an initial version of the software into production. Granted, sometimes that's the case, but usually there's a price to be paid. Did you produce legacy code in the process?

One common definition of legacy code is that it's code without tests. With ASP.NET I've repeatedly found that when Controllers derive from ControllerBase they become harder to unit test. It may be convenient to have access to the Url and User properties, but this tends to make the arrange phase of a unit test much more complex. Not impossible; just more complex than I like. In short, inheriting from ControllerBase just to get access to, say, Url or User violates the Interface Segregation Principle. That ControllerBase is too big a dependency for my taste.

I already use self-hosting in integration tests so that I can interact with my REST APIs via HTTP. When I want to test how my API reacts to various HTTP-specific circumstances, I do that via integration tests. So, in a recent code base I decided to see if I could write an entire REST API in ASP.NET Core without inheriting from ControllerBase.

The short answer is that, yes, this is possible, and I'd do it again, but you have to jump through some hoops. I consider that hoop-jumping a fine price to pay for the benefits of simpler unit tests and (it turns out) better separation of concerns.

In this article, I'll share what I've learned. The code shown here is part of the sample code base that accompanies my book Code That Fits in Your Head.

POCO Controllers #

Just so that we're on the same page: A POCO Controller is a Controller class that doesn't inherit from any base class. In my code base, they're defined like this:

[Route("")]
public sealed class HomeController

or

[Authorize(Roles = "MaitreD")]
public class ScheduleController

As you can tell, they don't inherit from ControllerBase or any other base class. They are annotated with attributes, like [Route], [Authorize], or [ApiController]. Strictly speaking, that may disqualify them as true POCOs, but in practice I've found that those attributes don't impact the sustainability of the code base in a negative manner.

Dependency Injection is still possible, and in use:

[ApiController]
public class ReservationsController
{
    public ReservationsController(
        IClock clock,
        IRestaurantDatabase restaurantDatabase,
        IReservationsRepository repository)
    {
        Clock = clock;
        RestaurantDatabase = restaurantDatabase;
        Repository = repository;
    }
    public IClock Clock { get; }
    public IRestaurantDatabase RestaurantDatabase { get; }
    public IReservationsRepository Repository { get; }
    // Controller actions and other members go here...

I consider Dependency Injection nothing but an application of polymorphism, so I think that still fits the POCO label. After all, Dependency Injection is just argument-passing.

HTTP responses and status codes #

The first thing I had to figure out was how to return various HTTP status codes. If you just return some model object, the default status code is 200 OK. That's fine in many situations, but if you're implementing a proper REST API, you should be using headers and status codes to communicate with the client.

The ControllerBase class defines many helper methods to return various other types of responses, such as BadRequest or NotFound. I had to figure out how to return such responses without access to the base class.

Fortunately, ASP.NET Core is now open source, so it isn't too hard to look at the source code for those helper methods to see what they do. It turns out that they are just discoverable methods that create and return various result objects. So, instead of calling the BadRequest helper method, I can just return a BadRequestResult:

return new BadRequestResult();

Some of the responses were a little more involved, so I created my own domain-specific helper methods for them:

private static ActionResult Reservation201Created(int restaurantId, Reservation r)
{
    return new CreatedAtActionResult(
        actionName: nameof(Get),
        controllerName: null,
        routeValues: new { restaurantId, id = r.Id.ToString("N") },
        value: r.ToDto());
}

Apparently, the controllerName argument isn't required, so should be null. I had to experiment with various combinations to get it right, but I have self-hosted integration tests that cover this result. If it doesn't work as intended, tests will fail.

Returning this CreatedAtActionResult object produces an HTTP response like this:

HTTP/1.1 201 Created
Content-Type: application/json; charset=utf-8
Location: https://example.net:443/restaurants/2112/reservations/276d124f20cf4cc3b502f57b89433f80

{
  "id": "276d124f20cf4cc3b502f57b89433f80",
  "at": "2022-01-14T19:45:00.0000000",
  "email": "donkeyman@example.org",
  "name": "Don Keyman",
  "quantity": 4
}

I've edited and coloured the response for readability. The Location URL actually also includes a digital signature, which I've removed here just to make the example look a little prettier.

In any case, returning various HTTP headers and status codes is less discoverable when you don't have a base class with all the helper methods, but once you've figured out the objects to return, it's straightforward, and the code is simple.

Generating links #

A true (level 3) REST API uses hypermedia as the engine of application state; that is, links. This means that an HTTP response will typically include a JSON or XML representation with several links. This article includes several examples.

ASP.NET provides IUrlHelper for exactly that purpose, and if you inherit from ControllerBase the above-mentioned Url property gives you convenient access to just such an object.

When a class doesn't inherit from ControllerBase, you don't have convenient access to an IUrlHelper. Then what?

It's possible to get an IUrlHelper via the framework's built-in Dependency Injection engine, but if you add such a dependency to a POCO Controller, you'll have to jump through all sorts of hoops to configure it in unit tests. That was exactly the situation I wanted to avoid, so that got me thinking about design alternatives.

That was the real, underlying reason I came up with the idea to instead add REST links as a cross-cutting concern. The Controllers are wonderfully free of that concern, which helps keep the complexity of the unit tests down.

I still have test coverage of the links, but I prefer testing HTTP-related behaviour via the HTTP API instead of relying on implementation details:

[Theory]
[InlineData("Hipgnosta")]
[InlineData("Nono")]
[InlineData("The Vatican Cellar")]
public async Task RestaurantReturnsCorrectLinks(string name)
{
    using var api = new SelfHostedApi();
    var client = api.CreateClient();
    var response = await client.GetRestaurant(name);
    var expected = new HashSet<string?>(new[]
    {
        "urn:reservations",
        "urn:year",
        "urn:month",
        "urn:day"
    });
    var actual = await response.ParseJsonContent<RestaurantDto>();
    var actualRels = actual.Links.Select(l => l.Rel).ToHashSet();
    Assert.Superset(expected, actualRels);
    Assert.All(actual.Links, AssertHrefAbsoluteUrl);
}

This test verifies that the representation returned in response contains four labelled links. Granted, this particular test only verifies that each link contains an absolute URL (which could, in theory, be http://www.example.com), but the whole point of fit URLs is that they should be opaque. I've other tests that follow links to verify that the API affords the desired behaviour.

Authorisation #

The final kind of behaviour that caused me a bit of trouble was authorisation. It's easy enough to annotate a Controller with an [Authorize] attribute, which is fine as long as all you need is role-based authorisation.

I did, however, run into one situation where I needed something closer to an access control list. The system that includes all these code examples is a multi-tenant restaurant reservation system. There's one protected resource: a day's schedule, intended for use by the restaurant's staff. Here's a simplified example:

GET /restaurants/2112/schedule/2021/2/23 HTTP/1.1
Authorization: Bearer eyJhbGciOiJIUzI1NiIsInCI6IkpXVCJ9.eyJ...

HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8

{
  "name": "Nono",
  "year": 2021,
  "month": 2,
  "day": 23,
  "days": [
    {
      "date": "2021-02-23",
      "entries": [
        {
          "time": "19:45:00",
          "reservations": [
            {
              "id": "2c7ace4bbee94553950afd60a86c530c",
              "at": "2021-02-23T19:45:00.0000000",
              "email": "anarchi@example.net",
              "name": "Ann Archie",
              "quantity": 2
            }
          ]
        }
      ]
    }
  ]
}

Since this resource contains personally identifiable information (email addresses) it's protected. You have to present a valid JSON Web Token with required claims. Role claims alone, however, aren't enough. As a minimum, the token must contain a sufficient role claim like "MaitreD" shown above. This is, after all, a multi-tenant system, and we don't want one restaurant's MaitreD to be able to see another restaurant's schedule.

If ASP.NET can address that kind of problem with annotations, I haven't figured out how. The Controller needs to check an access control list against the resource being accessed. The above-mentioned User property of ControllerBase would be really convenient here.

Again, there are ways to inject an entire ClaimsPrincipal class into the Controller that needs it, but once more I felt that that would violate the Interface Segregation Principle. I didn't need an entire ClaimsPrincipal; I just needed a list of restaurant IDs that a particular JSON Web Token allows access to.

As is often my modus operandi in such situations, I started by writing the code I wished to use, and then figured out how to make it work:

[HttpGet("restaurants/{restaurantId}/schedule/{year}/{month}/{day}")]
public async Task<ActionResult> Get(int restaurantId, int year, int month, int day)
{
    if (!AccessControlList.Authorize(restaurantId))
        return new ForbidResult();
    // Do the real work here...

AccessControlList is a Concrete Dependency. It's just a wrapper around a collection of IDs:

public sealed class AccessControlList
{
    private readonly IReadOnlyCollection<int> restaurantIds;

I had to create a new class in order to keep the built-in ASP.NET DI Container happy. Had I been doing Pure DI I could have just injected IReadOnlyCollection<int> directly into the Controller, but in this code base I used the built-in DI Container, which I had to configure like this:

services.AddHttpContextAccessor();
services.AddTransient(sp => AccessControlList.FromUser(
    sp.GetService<IHttpContextAccessor>().HttpContext.User));
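To make the wrapper idea a little more concrete, here's a minimal sketch of what such a class could look like. It isn't necessarily how the real code base implements it: the body of Authorize, the params constructor, and in particular the "restaurant" claim type read by FromUser are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Security.Claims;

public sealed class AccessControlList
{
    private readonly IReadOnlyCollection<int> restaurantIds;

    public AccessControlList(IReadOnlyCollection<int> restaurantIds)
    {
        this.restaurantIds = restaurantIds;
    }

    // Convenience overload so that a test can write new AccessControlList(3).
    public AccessControlList(params int[] restaurantIds) :
        this(restaurantIds.ToList())
    {
    }

    // True if the list grants access to the restaurant in question.
    public bool Authorize(int restaurantId)
    {
        return restaurantIds.Contains(restaurantId);
    }

    // Assumption: each accessible restaurant ID is carried in its own
    // claim of the (hypothetical) type "restaurant". Claims that can't
    // be parsed as integers are ignored.
    public static AccessControlList FromUser(ClaimsPrincipal user)
    {
        var ids = new List<int>();
        foreach (var claim in user.FindAll("restaurant"))
        {
            var parsed = int.TryParse(
                claim.Value,
                NumberStyles.Integer,
                CultureInfo.InvariantCulture,
                out var id);
            if (parsed)
                ids.Add(id);
        }
        return new AccessControlList(ids);
    }
}
```

With a shape like this, the Controller only ever sees a yes-or-no answer for a given restaurant ID, and never has to know about claims or tokens.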

Apart from having to wrap IReadOnlyCollection<int> in a new class, I found such an implementation preferable to inheriting from ControllerBase. The Controller in question only depends on the services it needs:

public ScheduleController(
    IRestaurantDatabase restaurantDatabase,
    IReservationsRepository repository,
    AccessControlList accessControlList)

The ScheduleController uses its restaurantDatabase dependency to look up a specific restaurant based on the restaurantId, the repository to read the schedule, and accessControlList to implement authorisation. That's what it needs, so that's its dependencies. It follows the Interface Segregation Principle.

The ScheduleController class is easy to unit test, since a test can just create a new AccessControlList object whenever it needs to:

[Fact]
public async Task GetScheduleForAbsentRestaurant()
{
    var sut = new ScheduleController(
        new InMemoryRestaurantDatabase(Some.Restaurant.WithId(2)),
        new FakeDatabase(),
        new AccessControlList(3));
    var actual = await sut.Get(3, 2089, 12, 9);
    Assert.IsAssignableFrom<NotFoundResult>(actual);
}

This test requests the schedule for a restaurant with the ID 3, and the access control list does include that ID. The restaurant, however, doesn't exist, so despite correct permissions, the expected result is 404 Not Found.

Conclusion #

ASP.NET has supported POCO Controllers for some time now, but it's clearly not a mainstream scenario. The documentation and Visual Studio tooling assume that your Controllers inherit from one of the framework base classes.

You do, therefore, have to jump through a few hoops to make POCO Controllers work. The result, however, is more lightweight Controllers and better separation of concerns. I find the jumping worthwhile.

Comments

Hi Mark, as I read the part about BadRequest and NotFound being less discoverable, I wondered if you had considered creating a ControllerHelper (terrible name I know) class with BadRequest, etc?

Then by adding using static Resteraunt.ControllerHelper as a little bit of boilerplate code to the top of each POCO controller, you would be able to do return BadRequest(); again.

Dave, thank you for writing. I hadn't thought of that, but it's a useful idea. Someone would have to figure out how to write such a helper class, but once it exists, it would offer the same degree of discoverability. I'd suggest a name like Result or HttpResult, so that you could write e.g. Result.BadRequest().

Wouldn't adding a static import defy the purpose, though? As I see it, C# discoverability is enabled by 'dot-driven development'. Don't you lose that by a static import?

Self-hosted integration tests in ASP.NET

A way to self-host a REST API and test it through HTTP.

In 2020 I developed a sizeable code base for an online restaurant REST API. In the spirit of outside-in TDD, I found it best to test the HTTP behaviour of the API by actually interacting with it via HTTP.

Sometimes ASP.NET offers more than one way to achieve the same end result. For example, to return 200 OK, you can use both OkObjectResult and ObjectResult. I don't want my tests to be coupled to such implementation details, so by testing an API via HTTP instead of using the ASP.NET object model, I decouple the two.

You can easily self-host an ASP.NET web API and test it using an HttpClient. In this article, I'll show you how I went about it.

The code shown here is part of the sample code base that accompanies my book Code That Fits in Your Head.

Reserving a table #

In true outside-in fashion, I'll first show you the test. Then I'll break it down to show you how it works.

[Fact]
public async Task ReserveTableAtNono()
{
    using var api = new SelfHostedApi();
    var client = api.CreateClient();
    var at = DateTime.Today.AddDays(434).At(20, 15);
    var dto = Some.Reservation.WithDate(at).WithQuantity(6).ToDto();
    var response = await client.PostReservation("Nono", dto);
    await AssertRemainingCapacity(client, at, "Nono", 4);
    await AssertRemainingCapacity(client, at, "Hipgnosta", 10);
}

This test uses xUnit.net 2.4.1 to make a reservation at the restaurant named Nono. The first line that creates the api variable spins up a self-hosted instance of the REST API. The next line creates an HttpClient configured to communicate with the self-hosted instance.

The test proceeds to create a Data Transfer Object that it posts to the Nono restaurant. It then asserts that the remaining capacities at the Nono and Hipgnosta restaurants are as expected.

You'll see the implementation details soon, but I first want to discuss this high-level test. As is the case with most of my code, it's far from perfect. If you're not familiar with this code base, you may have plenty of questions:

- Why does it make a reservation 434 days in the future? Why not 433, or 211, or 1?

- Is there anything significant about the quantity 6?

- Why is the expected remaining capacity at Nono 4?

- Why is the expected remaining capacity at Hipgnosta 10? Why does it even verify that?

The three other questions are all related. A bit of background is in order. I wrote this test during a process where I turned the system into a multi-tenant system. Before that change, there was only one restaurant, which was Hipgnosta. I wanted to verify that if you make a reservation at another restaurant (here, Nono) it changes the observable state of that restaurant, and not of Hipgnosta.

The way these two restaurants are configured, Hipgnosta has a single communal table that seats ten guests. This explains why the expected capacity of Hipgnosta is 10. Making a reservation at Nono shouldn't affect Hipgnosta.

Nono has a more complex table configuration. It has both standard and communal tables, but the largest table is a six-person communal table. There's only one table of that size. The next-largest tables are four-person tables. Thus, a reservation for six people reserves the largest table that day, after which only four-person and two-person tables are available. Therefore the remaining capacity ought to be 4.
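The arithmetic behind the two expected numbers can be sketched in a few lines. This isn't the reservation system's actual allocation logic. It's a simplified illustration that treats every table as a standard table, seats each party at the smallest free table that fits, and assumes a hypothetical Nono configuration of one six-person table, two four-person tables and two two-person tables (the article only states the sizes, not the counts).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class Capacity
{
    // Returns the largest party size that still fits after seating the
    // given parties, each at the smallest free table that can hold it.
    // Parties that fit nowhere are simply skipped in this sketch.
    public static int MaximumPartySize(
        IEnumerable<int> tableSizes,
        IEnumerable<int> partySizes)
    {
        var free = tableSizes.OrderBy(s => s).ToList();
        foreach (var party in partySizes)
        {
            var index = free.FindIndex(s => party <= s);
            if (0 <= index)
                free.RemoveAt(index);
        }
        // With no free tables left, no party can be accepted.
        return free.DefaultIfEmpty(0).Max();
    }
}
```

Under those assumptions, Capacity.MaximumPartySize(new[] { 6, 4, 4, 2, 2 }, new[] { 6 }) evaluates to 4, while Hipgnosta's single ten-person table with no reservations, Capacity.MaximumPartySize(new[] { 10 }, new int[0]), stays at 10.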

The above test knows all this. You are welcome to criticise such hard-coded knowledge. There's a real risk that it might make it more difficult to maintain the test suite in the future.

Certainly, had this been a unit test, and not an integration test, I wouldn't have accepted so much implicit knowledge - particularly because I mostly apply functional architecture, and pure functions should have isolation. Functions shouldn't depend on implicit global state; they should return a value based on input arguments. That's a bit of digression, though.

These are integration tests, which I mostly use for smoke tests and to verify HTTP-specific behaviour. I have unit tests for fine-grained testing of edge cases and variations of input. While I wouldn't accept so much implicit knowledge from a unit test, I find that it so far works well with integration tests.

Self-hosting #

It only takes a WebApplicationFactory to self-host an ASP.NET API. You can use it directly, but if you want to modify the hosted service in some way, you can also inherit from it.

I want my self-hosted integration tests to run as state-based tests that use an in-memory database instead of SQL Server. I've defined SelfHostedApi for that purpose:

internal sealed class SelfHostedApi : WebApplicationFactory<Startup>
{
    protected override void ConfigureWebHost(IWebHostBuilder builder)
    {
        builder.ConfigureServices(services =>
        {
            services.RemoveAll<IReservationsRepository>();
            services.AddSingleton<IReservationsRepository>(new FakeDatabase());
        });
    }
}

The way that WebApplicationFactory works, its ConfigureWebHost method runs after the Startup class' ConfigureServices method. Thus, when ConfigureWebHost runs, the services collection is already configured to use SQL Server. As Julius H so kindly pointed out to me, the RemoveAll extension method removes all existing registrations of a service. I use it to remove the SQL Server dependency from the system, after which I replace it with a test-specific in-memory implementation.

Since the in-memory database is configured with Singleton lifetime, that instance is going to be around for the lifetime of the service. While it's only keeping track of things in memory, it'll keep state until the service shuts down, which happens when the above api variable goes out of scope.

Notice that I named the class by the role it plays rather than which base class it derives from.

Posting a reservation #

The PostReservation method is an extension method on HttpClient:

internal static async Task<HttpResponseMessage> PostReservation(
    this HttpClient client,
    string name,
    object reservation)
{
    string json = JsonSerializer.Serialize(reservation);
    using var content = new StringContent(json);
    content.Headers.ContentType.MediaType = "application/json";
    var resp = await client.GetRestaurant(name);
    resp.EnsureSuccessStatusCode();
    var rest = await resp.ParseJsonContent<RestaurantDto>();
    var address = rest.Links.FindAddress("urn:reservations");
    return await client.PostAsync(address, content);
}

It's part of a larger set of methods that enables an HttpClient to interact with the REST API. Three of those methods are visible here: GetRestaurant, ParseJsonContent, and FindAddress. These, and many other, methods form a client API for interacting with the REST API. While this is currently test code, it's ripe for being extracted to a reusable client SDK library.

I'm not going to show all of them, but here's GetRestaurant to give you a sense of what's going on:

internal static async Task<HttpResponseMessage> GetRestaurant(this HttpClient client, string name)
{
    var homeResponse = await client.GetAsync(new Uri("", UriKind.Relative));
    homeResponse.EnsureSuccessStatusCode();
    var homeRepresentation = await homeResponse.ParseJsonContent<HomeDto>();
    var restaurant = homeRepresentation.Restaurants.First(r => r.Name == name);
    var address = restaurant.Links.FindAddress("urn:restaurant");
    return await client.GetAsync(address);
}

The REST API has only a single documented address, which is the 'home' resource at the relative URL ""; i.e. the root of the API. In this incarnation of the API, the home resource responds with a JSON array of restaurants. The GetRestaurant method finds the restaurant with the desired name and finds its address. It then issues another GET request against that address, and returns the response.
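The FindAddress method isn't shown in this article, but the idea of following a link by its relationship type is simple enough to sketch. The following is an assumption about how such a helper might look, not the code base's actual implementation; the LinkDto shape with Rel and Href properties is inferred from the tests shown elsewhere in these articles.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical link representation: a relationship type and an address.
public sealed class LinkDto
{
    public string Rel { get; set; } = "";
    public string Href { get; set; } = "";
}

public static class LinkExtensions
{
    // Finds the single link with the requested relationship type and
    // returns its address. Throws if there's no such link, or more
    // than one, which is a reasonable way for a test to fail.
    public static Uri FindAddress(this IEnumerable<LinkDto> links, string rel)
    {
        var link = links.Single(l => l.Rel == rel);
        return new Uri(link.Href);
    }
}
```

The point of a helper like this is that client code only needs to know relationship types such as "urn:reservations"; the URLs themselves stay opaque.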

Verifying state #

The verification phase of the above test calls a private helper method:

private static async Task AssertRemainingCapacity(
    HttpClient client,
    DateTime date,
    string name,
    int expected)
{
    var response = await client.GetDay(name, date.Year, date.Month, date.Day);
    var day = await response.ParseJsonContent<CalendarDto>();
    Assert.All(
        day.Days.Single().Entries,
        e => Assert.Equal(expected, e.MaximumPartySize));
}

It uses another of the above-mentioned client API extension methods, GetDay, to inspect the REST API's calendar entry for the restaurant and day in question. Each calendar contains a series of time entries that lists the largest party size the restaurant can accept at that time slot. The two restaurants in question only have single seatings, so once you've booked a six-person table, you have it for the entire evening.

Notice that verification is done by interacting with the system itself. No Back Door Manipulation is required. I favour this if at all possible, since I believe that it offers better confidence that the system behaves as it should.

Conclusion #

It's been possible to self-host .NET REST APIs for testing purposes at least since 2012, but it's only become easier over the years. All you need to get started is the WebApplicationFactory<TEntryPoint> class, although you're probably going to need a derived class to override some of the system configuration.

From there, you can interact with the self-hosted system using the standard HttpClient class.

Since I configure these tests to run on an in-memory database, the execution time is comparable to 'normal' unit tests. I admit that I haven't measured it, but that's because I haven't felt the need to do so.

Comments

Hi Mark, 2 questions from me:

- What kind of integration does this test verify? The integration between the HTTP request processing part and the service logic? If so, why fake the database only and not the whole logic?

- If you fake the database here, then would you have a separate test for testing integration with DB (e.g. some kind of "adapter test", as described in the GOOS book)?

Grzegorz, thank you for writing.

1. As I already hinted at, the motivation for this test was that I was expanding the code from a single-tenant to a multi-tenant system. I did this in small steps, using tests to drive the augmented behaviour. Before I added the above test, I'd introduced the concept of a restaurant ID to identify each restaurant, and initially added a method overload to my data access interface that required such an ID:

Task Create(int restaurantId, Reservation reservation);

I was using the Strangler pattern to be able to move in small steps. One step was to add overloads like the above. Subsequent steps would be to move existing callers from the 'legacy' overload to the new overload, and then finally delete the 'legacy' overload.

When moving from a single-tenant system to a multi-tenant system, I had to grandfather in the existing restaurant, which I arbitrarily chose to give the restaurant ID 1. During this process, I kept the existing system working so as to not break existing clients.

I gradually introduced method overloads that took a restaurant ID as a parameter (as above), and then deleted the legacy methods that didn't take a restaurant ID. In order to not break anything, I'd initially be calling the new methods with the hard-coded restaurant ID 1.
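As a rough illustration of that intermediate state, here's a sketch in which both overloads coexist and the 'legacy' one delegates to the new one with the grandfathered ID. The Reservation class, the InMemoryReservations class and its ReadAll method are simplified, hypothetical stand-ins, not the types from the real code base.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Simplified, hypothetical stand-in for the real Reservation type.
public sealed class Reservation
{
    public Reservation(string name, int quantity)
    {
        Name = name;
        Quantity = quantity;
    }

    public string Name { get; }
    public int Quantity { get; }
}

public interface IReservationsRepository
{
    // 'Legacy' single-tenant overload. Delete once no callers remain.
    Task Create(Reservation reservation);

    // New multi-tenant overload.
    Task Create(int restaurantId, Reservation reservation);
}

public sealed class InMemoryReservations : IReservationsRepository
{
    // The existing restaurant was grandfathered in with the ID 1.
    private const int GrandfatheredRestaurantId = 1;

    private readonly Dictionary<int, List<Reservation>> storage =
        new Dictionary<int, List<Reservation>>();

    public Task Create(Reservation reservation)
    {
        return Create(GrandfatheredRestaurantId, reservation);
    }

    public Task Create(int restaurantId, Reservation reservation)
    {
        if (!storage.TryGetValue(restaurantId, out var reservations))
        {
            reservations = new List<Reservation>();
            storage.Add(restaurantId, reservations);
        }
        reservations.Add(reservation);
        return Task.CompletedTask;
    }

    // Hypothetical query used here only to observe the stored state.
    public IReadOnlyCollection<Reservation> ReadAll(int restaurantId)
    {
        return storage.TryGetValue(restaurantId, out var reservations)
            ? reservations
            : new List<Reservation>();
    }
}
```

As long as a caller still uses the legacy overload, or passes a hard-coded 1, every reservation ends up with the grandfathered restaurant, which is exactly the kind of leftover a multi-tenancy test can expose.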

The above test was one in a series of tests that followed. Their purpose was to verify that the system had become truly multi-tenant. Before I added that test, an attempt to make a reservation at Nono would result in a call to Create(1, reservation) because there were still hard-coded restaurant IDs left in the code base.

(To be honest, the above is a simplified account of events. It was actually more complex than that, since it involved hypermedia controls. I also, for reasons that I may divulge in the future, didn't perform this work behind a feature flag, which under other circumstances would have been appropriate.)

What the above test verifies, then, is that the reservation gets associated with the correct restaurant, instead of the restaurant that was grandfathered in (Hipgnosta). An in-memory (Fake) database was sufficient to demonstrate that.

Why didn't I use the real, relational database? Read on.

2. As I've recently discussed, integration tests that involve SQL Server are certainly possible. They tend to be orders of magnitude slower than unit tests that run entirely in memory, so I only add them if I deem them necessary.

I interact with databases according to the Dependency Inversion Principle. The above Create method is an example of that. The method is defined by the needs of the client code rather than by implementation details.

The actual implementation of the data access interface is, as you imply, an Adapter. It adapts the SQL Server SDK (i.e. ADO.NET) to my data access interface. As long as I can keep such Adapters Humble Objects I don't cover them by tests.

Such Adapters are often just mappings: This class field maps to that table column, that field to that other column, and so on. If there's no logic, there isn't much to test.
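To illustrate what such a logic-free mapping could look like, here's a minimal sketch. It is not the Adapter from the code base: the `ReservationMapping` class, the simplified `Reservation` record, and the column names are hypothetical, and it works against the general `IDataRecord` interface rather than any SQL Server-specific type:

```csharp
using System;
using System.Data;

// Hypothetical, simplified reservation type (fields mirror the JSON shown earlier).
public sealed record Reservation(
    Guid Id, DateTime At, string Email, string Name, int Quantity);

public static class ReservationMapping
{
    // Pure mapping: each column goes to exactly one field.
    // The column names are assumptions, not the real schema.
    public static Reservation ToReservation(IDataRecord record)
    {
        return new Reservation(
            (Guid)record["Id"],
            (DateTime)record["At"],
            (string)record["Email"],
            (string)record["Name"],
            (int)record["Quantity"]);
    }
}
```

There are no branches and no calculations here, which is what makes it reasonable to treat code like this as a Humble Object.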

This doesn't mean that bugs can't appear in database Adapters. If that happens, I may introduce regression tests that involve the database. I prefer to do this in a separate test suite, however, since such tests tend to be slow, and TDD test suites should be fast.

Hi Mark, I have been a fan of "integration" style testing since I found Andrew Lock's post on the topic. In fact, I personally find that it makes sense to take that idea to one extreme.

As far as I can see, your test assumes something like "Given that no reservations have been made". Did you consider making that "Given" explicitly recognizable in your test?

I am asking because this is a major pet topic of mine and I would love to hear your thoughts. I am not suggesting writing Gherkin instead of C# code, just if and how you would make the "Given" assumption explicit.

A more practical comment is on your use of services.RemoveAll<IReservationsRepository>(); It seems extreme to remove all, as all you want to achieve is to overwrite a few service declarations. Andrew Lock demonstrates how to keep all the ASP.NET middleware intact by keeping most of the service registrations.

I usually use ConfigureTestServices on WebApplicationFactory, something like,

factory.WithWebHostBuilder(builder => builder.ConfigureTestServices(MyTestCompositionRoot.Initialize));

Brian, thank you for writing. You're correct that one implicit assumption is that no prior reservations have been made at Nono on that date. The reality is, as you suggest, that there are no reservations at all, but the test doesn't require that. It only relies on the weaker assumption that neither Nono nor Hipgnosta has any prior reservations on the date in question.

I often find it tricky to make 'negative' assumptions explicit. How much should one highlight? Is it also relevant to highlight that SelfHostedApi isn't going to send notification emails? That it doesn't write to a persistent log? That it doesn't have the capability to launch missiles?

I'm not saying that it's irrelevant to highlight certain assumptions, but I'm not sure that I have a clear heuristic for it. After all, it depends on what the reader cares about, and how clear the assumptions are.

If it became clear to me that the assumption of 'no prior reservations' was important to the reader, I might consider embedding that in the name of the test, or perhaps adding a comment. Another option is to hide the initialisation of SelfHostedApi behind a required factory method so that you'd have to initialise it as using var api = SelfHostedApi.StartEmpty();

In this particular code base, though, that SelfHostedApi is used repeatedly. A programmer who regularly works with that code base will quickly internalise the assumptions embedded in that self-hosted service.

Granted, it can be a dangerous strategy to rely too much on implicit assumptions, because it can make it more difficult to change things if those assumptions change in the future. I do, after all, favour the wisdom from the Zen of Python: Explicit is better than implicit.

Making such things as we discuss here explicit does, however, tend to make the tests more verbose. Pragmatically, then, there's a balance to strike. I'm not claiming that the test shown here is perfect. In a living code base, this would be a concern that I would keep a constant eye on. I'd fiddle with it, trying out making things more explicit, less explicit, and so on, to see what works best in context.

Your other point about services.RemoveAll<IReservationsRepository>() I don't understand. Why is that extreme? It's literally the most minimal, precise change I can make. It removes only the IReservationsRepository, of which there's exactly one Singleton instance:

var connStr = Configuration.GetConnectionString("Restaurant");
services.AddSingleton<IReservationsRepository>(sp =>
{
    var logger = sp.GetService<ILogger<LoggingReservationsRepository>>();
    var postOffice = sp.GetService<IPostOffice>();
    return new EmailingReservationsRepository(
        postOffice,
        new LoggingReservationsRepository(
            logger,
            new SqlReservationsRepository(connStr)));
});

The integration test throws away that Singleton object graph, but nothing else.

Hi Mark, thanks for a thorough answer.

Alas, my second question was not thought through - I somehow thought you threw away all service registrations, which you obviously do not. In fact, I do exactly what you do, usually with Replace, and thereby implicitly assume that there is only one registration. My bad.

We very much agree on the importance of favouring explicit assumptions.

The solution to stating 'negative' assumptions, as long as we talk about the 'initial context' (the 'Given') is actually quite simple if your readers know that you always state assumptions explicitly. If none are stated, it's the same as saying 'the world is empty' - at least when it comes to the bounded context of whatever you are testing. I find that the process of identifying a minimal set of assumptions per test is a worthwhile exercise.

So, IMHO it boils down to consistently stating all assumptions and making the relevant bounded context clear.

Brian, thank you for writing. I agree that it's a good rule of thumb to expect the world to be empty unless explicitly stated otherwise. This is the reason that I love functional programming.

One question, however, is whether a statement is sufficiently explicit. The integration test shown here is a good example. What's actually happening is that SelfHostedApi sets up a self-hosted service configured in a certain way. It contains three restaurants, of which two are relevant in this particular test. Each restaurant comes with quite a bit of configuration: opening time, closing time, seating duration, and the configuration of tables. Just consider the configuration of Nono:

{
  "Id": 2112,
  "Name": "Nono",
  "OpensAt": "18:00",
  "LastSeating": "21:00",
  "SeatingDuration": "6:00",
  "Tables": [
    {
      "TableType": "Communal",
      "Seats": 6
    },
    {
      "TableType": "Communal",
      "Seats": 4
    },
    {
      "TableType": "Standard",
      "Seats": 2
    },
    {
      "TableType": "Standard",
      "Seats": 2
    },
    {
      "TableType": "Standard",
      "Seats": 4
    },
    {
      "TableType": "Standard",
      "Seats": 4
    }
  ]
}

The code base also enables a test writer to express the above configuration as code instead of JSON, but the point I'm trying to make is that you probably don't want that amount of detail to appear in the Arrange phase of each test, regardless of how explicit it'd be.

To work around an issue like this, you might instead define an immutable test data variable called nono. Then, in each test that uses these restaurants, you might include something like AddRestaurant(nono) or AddRestaurant(hipgnosta) in the Arrange phase (hypothetical API). That's not quite as explicit, because it hides the actual values away, but is probably still good enough.

Then you find yourself always adding the same restaurants, so you say to yourself: It'd be nice if I had a helper method like AddStandardRestaurants. So, you may add such a helper method.

But you find that for the integration tests, you always call that helper method, so ultimately, you decide to just roll it into the definition of SelfHostedApi. That's basically what's going on here.
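The progression just described could be sketched like this. Everything here is hypothetical, as the text already notes for AddRestaurant: the `Restaurant` and `Table` records, the `TestRestaurants` class, and the `TestApiBuilder` are stand-ins, not types from the code base. The Nono values mirror the configuration above, while the Hipgnosta values (apart from the restaurant ID 1) are placeholders, and only the two restaurants relevant to the test are included:

```csharp
using System;
using System.Collections.Generic;

public enum TableType { Standard, Communal }

public sealed record Table(TableType TableType, int Seats);

public sealed record Restaurant(
    int Id,
    string Name,
    TimeSpan OpensAt,
    TimeSpan LastSeating,
    TimeSpan SeatingDuration,
    IReadOnlyList<Table> Tables);

// Step 1: immutable test data variables.
public static class TestRestaurants
{
    // Mirrors the Nono configuration shown above.
    public static readonly Restaurant Nono = new Restaurant(
        2112,
        "Nono",
        new TimeSpan(18, 0, 0),
        new TimeSpan(21, 0, 0),
        new TimeSpan(6, 0, 0), // "6:00" in the JSON
        new[]
        {
            new Table(TableType.Communal, 6),
            new Table(TableType.Communal, 4),
            new Table(TableType.Standard, 2),
            new Table(TableType.Standard, 2),
            new Table(TableType.Standard, 4),
            new Table(TableType.Standard, 4)
        });

    // PLACEHOLDER values: only the ID (1) is taken from the text.
    public static readonly Restaurant Hipgnosta = new Restaurant(
        1,
        "Hipgnosta",
        new TimeSpan(18, 0, 0),
        new TimeSpan(21, 0, 0),
        new TimeSpan(6, 0, 0),
        new[] { new Table(TableType.Communal, 10) });
}

// Hypothetical stand-in for the Arrange-phase API.
public sealed class TestApiBuilder
{
    private readonly List<Restaurant> restaurants = new List<Restaurant>();

    public IReadOnlyList<Restaurant> Restaurants => restaurants;

    // Step 2: each test adds the restaurants it needs.
    public TestApiBuilder AddRestaurant(Restaurant restaurant)
    {
        restaurants.Add(restaurant);
        return this;
    }

    // Step 3: a helper for the restaurants that tests always add.
    public TestApiBuilder AddStandardRestaurants()
    {
        return AddRestaurant(TestRestaurants.Hipgnosta)
            .AddRestaurant(TestRestaurants.Nono);
    }
}
```

The final step, rolling the helper into the fixture itself, would amount to calling something like AddStandardRestaurants from the fixture's constructor, at which point the restaurants no longer appear in the Arrange phase at all.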

I don't recall whether I explicitly stated the following in the article, but I use integration tests consistent with the test pyramid. The purpose of the integration tests is to verify that components integrate correctly, and that the HTTP API behaves according to contract. In my experience, I can let the 'givens' be somewhat implicit and still keep the code maintainable. For integration tests, that is.

On the unit level, I favour pure functions, which are intrinsically testable. Due to isolation, everything that affects the behaviour of a pure function must be explicitly passed as arguments, and as a by-product, they become explicit as part of a test's Arrange phase.

But, again, let me reiterate that I agree with you. I don't claim that the code shown here is perfect. It's so implicit that it makes me uncomfortable too, but on the other hand, I think that a test like the above communicates intent well. The more explicit details one adds, the more they may drown out the intent. It's a difficult balance to strike.

Hi Mark, I'm happy that we agree. Still, this is a pet topic of mine so please allow me one additional comment.

I wish all code had at least a functional core, as that would make testing so much easier. You could say that explicitly stating initial context resembles testing pure functions.

I do believe that it is possible to state initial context without being overwhelmed by details. It can by no means be fully automated - this is where the mind of a tester must be used. Also, it will never be perfect, but at least it can be documented somewhat systematically and kept under version control, so the reasoning can be challenged.

In your example, I would not add Nono or Hipgnosta or a standard restaurant, as those names say nothing about what makes my test pass.

Rather, I would add e.g. a "restaurant with a 6 and a 4 person table", as this seems to be what makes your test pass, if I understood it correctly. In another test I might have "restaurant which is open from 6 PM with last seating at 9 PM" if I want to test time aspects of booking. It may be the same Json file used in those two tests, because what's most important is that you document what makes each individual test pass.

However, if it's not too much trouble (it sometimes is) I would even try to minimize the initial context. For example, I would test timing aspects with a minimal restaurant with a single table. Unless timing and tables interact - maybe late bookings are OK if the restaurant is starved for customers? This is where the "tester mind" comes in handy.

In your restaurant example, that would mean having quite a few restaurant definitions, but only definitions which include the needed context for each test. My experience is that minimizing the initial context will teach you a lot about the code. This is similar to unit tests which focus on a small part of code, but "minimizing initial context" allows for less fragile tests than when "minimizing code".

We started using this approach because our tests did not give sufficient confidence. Tests were flaky and it was difficult to see what was tested and why. I can safely say that this is much better now.

My examples of initial context above may appear like they could grow out of control, but our experience so far is that it is possible to find a relatively small set of equivalence classes of initial context items, even for fairly complex functionality.

Brian, I agree that in unit testing, being able to reduce test cases to minimal examples is useful. Once you have those minimal examples, being explicit about all preconditions is more attainable. This is why, when I coach teams, I try to teach them about equivalence class partitioning. If, within each equivalence class, you can impose some sort of (partial) ordering, you may be able to pick a canonical representation that constitutes the minimal example.

This is basically what the shrinking process of property-based testing frameworks attempts to do.

Thus, when my testing goal is to explore the boundaries of the system under test, I go to great lengths to ensure that the arrange phase is as explicit as possible. I recently published an example of how I use property-based testing to such an end.

The goal of the integration test in the present article, however, is not to explore boundary cases or verify the correctness of algorithms in question. I have unit tests for that. The goal is to examine whether things may be correctly 'clicked together'.

Expressing an explicit minimal context for the above test is more involved than you outline. Having two restaurants, with two differently sized tables, is only the beginning. You must also ensure that they are open at the same time, so that an attempted reservation would fit both. Furthermore, you must then pick the date and time of the reservation to fit within that time window. This is still not hopelessly difficult, and might make for a good exercise, but it could easily add 4-5 extra lines of code to the test.

And again: had this been a unit test, I'd happily have added those extra lines, but for an integration test, I felt that it was less important.

Parametrised test primitive obsession code smell

Watch out for this code smell with some unit testing frameworks.

In a previous article you saw this state-based integration test: