ploeh blog danish software design

Feedback on ASP.NET vNext Dependency Injection

ASP.NET vNext includes a Dependency Injection API. This post offers feedback on the currently available code.

As part of Microsoft's new openness, the ASP.NET team have made the next version of ASP.NET available on GitHub. Obviously, it's not yet done, but I suppose that the reason for this move is to get early feedback, as well as perhaps take contributions. This is an extremely positive move for the ASP.NET team, and I'm very grateful that they have done this, because it enables me to provide early feedback, and offer my help.

It looks like one of the proposed new features of the next version of ASP.NET is a library or API simply titled Dependency Injection. In this post, I will provide feedback to the team on that particular sub-project, in the form of an open blog post. The contents of this blog post are also cross-posted to the official ASP.NET vNext forum.

Dependency Injection support #

The details on the motivation for the Dependency Injection library are sparse, but I assume that the purpose is to provide 'Dependency Injection support' to ASP.NET. If so, that motivation is laudable, because Dependency Injection (DI) is the proper way to write loosely coupled code when using Object-Oriented Design.

Some parts of the ASP.NET family already have DI support; personally, I'm most familiar with ASP.NET MVC and ASP.NET Web API. Other parts have proven rather hostile towards DI - most notably ASP.NET Web Forms. The problem with Web Forms is that Constructor Injection is impossible, because the Web Forms framework doesn't provide a hook for creating new Page objects.

My interpretation #

As far as I can tell, the current ASP.NET Dependency Injection code defines an interface for creating objects:

    public interface ITypeActivator
    {
        object CreateInstance(
            IServiceProvider services,
            Type instanceType,
            params object[] parameters);
    }

In addition to this central interface, there are other interfaces that enable you to configure a 'service collection', and then there are Adapters for

- Autofac

- Ninject

- StructureMap

- Unity

- Castle Windsor

My recommendations #

It's an excellent idea to add 'Dependency Injection support' to ASP.NET, for the few places where it's not already present. However, as I've previously explained, a Conforming Container isn't the right solution. The right solution is to put the necessary extensibility points into the framework:

- ASP.NET MVC already has a good extensibility point in the IControllerFactory interface. I recommend keeping this interface, and other interfaces in MVC that play a similar role.

- ASP.NET Web API already has a good extensibility point in the IHttpControllerActivator interface. I recommend keeping this interface, and other interfaces in Web API that play a similar role.

- ASP.NET Web Forms have no extensibility point that enables you to create custom Page objects. I recommend adding an IPageFactory interface, as described in my article about DI-Friendly frameworks. Other object types related to Web Forms, such as Object Data Sources, suffer from the same shortcoming, and should have similar factory interfaces.

- There may be other parts of ASP.NET with which I'm not particularly familiar (SignalR?), but they should all follow the same pattern of defining Abstract Factories for user classes, in the cases where these don't already exist.

"perfection is attained not when there is nothing more to add, but when there is nothing more to remove." - Antoine de Saint Exupéry

These are my general recommendations to the team, but if desired, I'd also like to offer my assistance with any particular issues the team might encounter.

DI-Friendly Framework

How to create a Dependency Injection-friendly software framework.

It seems to me that when a development organisation wants to add 'Dependency Injection support' to a framework, all too often, the result is a Conforming Container. In this article I wish to describe good alternatives to this anti-pattern.

In a previous article I covered how to design a Dependency Injection-friendly library; in this article, I will deal with frameworks. The distinction I usually make is:

- A Library is a reusable set of types or functions you can use from a wide variety of applications. The application code initiates communication with the library and invokes it.

- A Framework consists of one or more libraries, but the difference is that Inversion of Control applies. The application registers with the framework (often by implementing one or more interfaces), and the framework calls into the application, which may call back into the framework. A framework often exists to address a particular general-purpose Domain (such as web applications, mobile apps, workflows, etc.).

The composition challenge #

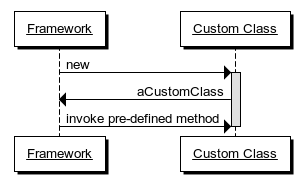

One of the most challenging aspects of writing a framework is that the framework designers can't predict what users will want to do. Often, a framework defines a way for you to interact with it:

- Implement an interface

- Derive from a base class

- Adorn your classes or methods with a particular attribute

- Name your classes according to some naming convention

Once the framework has an instance of the custom user class, it can easily start using it by invoking methods defined by the interface the class implements, etc. The difficult part is creating the instance. By default, most frameworks require that a custom class has a default (parameterless) constructor, but that may be a design smell, and doesn't fit with the Constructor Injection pattern. Such a requirement is a sensible default, but isn't Dependency Injection-friendly; in fact, it's an example of the Constrained Construction anti-pattern, which you can read about in my book.

Most framework designers realize this and resolve to add Dependency Injection support to the framework. Often, in the first few iterations, they get it right!

Abstractions and ownership #

If you examine the sequence diagram above, you should realize one thing: the framework is the client of the custom user code; the custom user code provides the services for the framework. In most cases, the custom user code exposes itself as a service to the framework. Some examples may be in order:

- In ASP.NET MVC, user code implements the IController interface, although this is most commonly done by deriving from the abstract Controller base class.

- In ASP.NET Web API, user code implements the IHttpController interface, although this is most commonly done by deriving from the abstract ApiController class.

- In Windows Presentation Foundation, user code derives from the Window class.

There's an extremely important point hidden here: although it looks like a framework has to deal with the unknown, all the requirements of the framework are known:

- The framework defines the interface or base class

- The framework creates instances of the custom user classes

- The framework invokes methods on the custom user objects

"clients [...] own the abstract interfaces"

This is a quote from chapter 11, which is about the Dependency Inversion Principle, so it all fits.

Notice what the framework does in the list above. Not only does it use the custom user objects, it also creates instances of the custom user classes. This is the tricky part, where many framework designers have a hard time seeing past the fact that the custom user code is unknown. However, from the perspective of the framework, the concrete type of a custom user class is irrelevant; it just needs to create an instance of it, but treat it as the well-known interface it implements.

- The client owns the interface

- The framework is the client

- The framework knows what it needs, not what user code needs

- Thus, framework interfaces should be defined by what the framework needs, not as a general-purpose interface to deal with user code

- Users know much better what user code needs than the framework can ever hope to do

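All the framework needs is an Abstract Factory that creates instances of the custom user classes:

    public interface IFrameworkControllerFactory
    {
        IFrameworkController Create(Type controllerType);
    }

This assumes that the interface that the user code must implement is called IFrameworkController.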

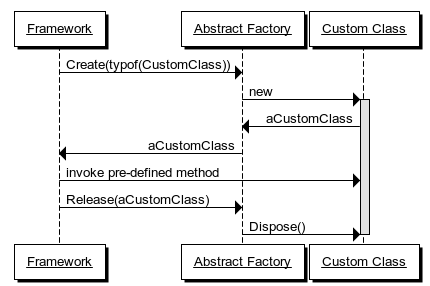

The custom user class may contain one or more disposable objects, so in order to prevent resource leaks, the framework must also provide a hook for decommissioning:

    public interface IFrameworkControllerFactory
    {
        IFrameworkController Create(Type controllerType);

        void Release(IFrameworkController controller);
    }

In this expanded iteration of the Abstract Factory, the contract is that the framework will invoke the Release method when it's finished with a particular IFrameworkController instance.

Some framework designers attempt to introduce a 'more sophisticated' lifetime model, but there's no reason for that. This Create/Release design is simple, easy to understand, works very well, and fits perfectly into the Register Resolve Release pattern, since it provides hooks for the Resolve and Release phases.
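To make the Create/Release contract concrete, here's a minimal sketch of how the framework side might honour it. Everything in it is hypothetical: the Handle method, DefaultFrameworkControllerFactory, FrameworkRequestPipeline, and EchoController are names I've made up for illustration, and a real framework would do considerably more.

```csharp
using System;

// Stand-in for the interface that user code implements (hypothetical member).
public interface IFrameworkController
{
    string Handle(string request);
}

public interface IFrameworkControllerFactory
{
    IFrameworkController Create(Type controllerType);

    void Release(IFrameworkController controller);
}

// Hypothetical default factory: it can only create classes with a
// parameterless constructor, and it disposes them when they are released.
public sealed class DefaultFrameworkControllerFactory : IFrameworkControllerFactory
{
    public IFrameworkController Create(Type controllerType)
    {
        return (IFrameworkController)Activator.CreateInstance(controllerType);
    }

    public void Release(IFrameworkController controller)
    {
        var disposable = controller as IDisposable;
        if (disposable != null)
        {
            disposable.Dispose();
        }
    }
}

// Hypothetical framework internals: resolve, use, and always release.
public sealed class FrameworkRequestPipeline
{
    private readonly IFrameworkControllerFactory factory;

    public FrameworkRequestPipeline(IFrameworkControllerFactory factory)
    {
        if (factory == null)
        {
            throw new ArgumentNullException("factory");
        }
        this.factory = factory;
    }

    public string Process(Type controllerType, string request)
    {
        var controller = this.factory.Create(controllerType);
        try
        {
            return controller.Handle(request);
        }
        finally
        {
            // The contract: Release is invoked once the framework is finished.
            this.factory.Release(controller);
        }
    }
}

// Example user class, also hypothetical.
public sealed class EchoController : IFrameworkController, IDisposable
{
    public bool IsDisposed { get; private set; }

    public string Handle(string request)
    {
        return request;
    }

    public void Dispose()
    {
        this.IsDisposed = true;
    }
}
```

The try/finally block is the important part: whichever factory a user plugs in, it gets a chance to clean up, even when the user code throws.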

ASP.NET MVC 1 and 2 provided flawless examples of such Abstract Factories in the form of the IControllerFactory interface:

    public interface IControllerFactory
    {
        IController CreateController(
            RequestContext requestContext,
            string controllerName);

        void ReleaseController(IController controller);
    }

Unfortunately, in ASP.NET MVC 3, a completely unrelated third method was added to that interface; it's still useful, but not as clean as before.

Framework designers ought to stop here. With such an Abstract Factory, they have perfect Dependency Injection support. If a user wants to hand-code the composition, he or she can implement the Abstract Factory interface. Here's an ASP.NET MVC 1 example:

    public class PoorMansCompositionRoot : DefaultControllerFactory
    {
        private readonly Dictionary<IController, IEnumerable<IDisposable>> disposables;
        private readonly object syncRoot;

        public PoorMansCompositionRoot()
        {
            this.syncRoot = new object();
            this.disposables =
                new Dictionary<IController, IEnumerable<IDisposable>>();
        }

        protected override IController GetControllerInstance(
            RequestContext requestContext, Type controllerType)
        {
            if (controllerType == typeof(HomeController))
            {
                var connStr = ConfigurationManager
                    .ConnectionStrings["postings"].ConnectionString;
                var ctx = new PostingContext(connStr);

                var sqlChannel = new SqlPostingChannel(ctx);
                var sqlReader = new SqlPostingReader(ctx);

                var validator = new DefaultPostingValidator();
                var validatingChannel = new ValidatingPostingChannel(
                    validator, sqlChannel);

                var controller = new HomeController(sqlReader, validatingChannel);

                // Remember the disposable dependencies until the controller is released.
                lock (this.syncRoot)
                {
                    this.disposables.Add(controller,
                        new IDisposable[] { sqlChannel, sqlReader });
                }

                return controller;
            }
            return base.GetControllerInstance(requestContext, controllerType);
        }

        public override void ReleaseController(IController controller)
        {
            lock (this.syncRoot)
            {
                // Controllers created by the base class are not tracked here.
                IEnumerable<IDisposable> tracked;
                if (this.disposables.TryGetValue(controller, out tracked))
                {
                    foreach (var d in tracked)
                    {
                        d.Dispose();
                    }
                    // Forget the released controller, so the dictionary doesn't keep growing.
                    this.disposables.Remove(controller);
                }
            }
        }
    }

In this example, I derive from DefaultControllerFactory, which implements the IControllerFactory interface - it's a little bit easier than implementing the interface directly.

In this example, the Composition Root only handles a single user Controller type (HomeController), but I'm sure you can extrapolate from the example.

If a developer would rather use a DI Container, that's also perfectly possible with the Abstract Factory approach. Here's another ASP.NET MVC 1 example, this time with Castle Windsor:

    public class WindsorCompositionRoot : DefaultControllerFactory
    {
        private readonly IWindsorContainer container;

        public WindsorCompositionRoot(IWindsorContainer container)
        {
            if (container == null)
            {
                throw new ArgumentNullException("container");
            }

            this.container = container;
        }

        protected override IController GetControllerInstance(
            RequestContext requestContext, Type controllerType)
        {
            return (IController)this.container.Resolve(controllerType);
        }

        public override void ReleaseController(IController controller)
        {
            this.container.Release(controller);
        }
    }

Notice how seamless the framework's Dependency Injection support is: the framework has no knowledge of Castle Windsor, and Castle Windsor has no knowledge of the framework. The small WindsorCompositionRoot class acts as an Adapter between the two.

Resist the urge to generalize #

If frameworks would only come with the appropriate hooks in the form of Abstract Factories with Release methods, they'd be perfect.

Unfortunately, as a framework becomes successful and grows, more and more types are added to it. Not only (say) Controllers, but Filters, Formatters, Handlers, and whatnot. A hypothetical XYZ framework would have to define Abstract Factories for each of these extensibility points:

    public interface IXyzControllerFactory
    {
        IXyzController Create(Type controllerType);

        void Release(IXyzController controller);
    }

    public interface IXyzFilterFactory
    {
        IXyzFilter Create(Type filterType);

        void Release(IXyzFilter filter);
    }

    // etc.

Clearly, that seems repetitive, so it's no wonder that framework designers look at that repetition and wonder if they can generalize. The appropriate responses to this urge are, in prioritised order:

- Resist the urge to generalise, and define each Abstract Factory as a separate interface. That design is easy to understand, and users can implement as many or as few of these Abstract Factories as they want. In the end, frameworks are designed for the framework users, not for the framework developers.

- If absolutely unavoidable, define a generic Abstract Factory.

Many distinct, but similar Abstract Factory interfaces may be repetitive, but that's unlikely to hurt the user. A good framework provides optional extensibility points - it doesn't force users to relate to all of them at once. As an example, I'm a fairly satisfied user of the ASP.NET Web API, but while I create lots of Controllers, and the occasional Exception Filter, I've yet to write my first custom Formatter. I only add a custom IHttpControllerActivator for my Controllers. Although (unfortunately) ASP.NET Web API has had a Conforming Container in the form of the IDependencyResolver interface since version 1, I've never used it. In a properly designed framework, a Conforming Container is utterly redundant.

If the framework must address the apparent DRY violation of multiple similar Abstract Factory definitions, an acceptable solution is a generic interface:

    public interface IFactory<T>
    {
        T Create(Type itemType);

        void Release(T item);
    }

This type of generic Factory is generally benign, although it may hurt discoverability, because a generic type looks as though you can use anything for the type argument T, where, in fact, the framework only needs a finite set of Abstract Factories, like

- IFactory<IXyzController>

- IFactory<IXyzFilter>

- IFactory<IXyzFormatter>

- IFactory<IXyzHandler>

In the end, though, users will need to inform the framework about their custom factories, so this discoverability issue can be addressed. A framework usually defines an extensibility point where users can tell it about their custom extensions. An example of that is ASP.NET MVC's ControllerBuilder class. Although I'm not too happy about the use of a Singleton, it's hard to do something wrong:

    var controllerFactory = new PoorMansCompositionRoot();
    ControllerBuilder.Current.SetControllerFactory(controllerFactory);

Unfortunately, some frameworks attempt to generalize this extensibility point. As an example, in ASP.NET Web API, you'll have to use ServicesContainer.Replace:

public void Replace(Type serviceType, object service)

Although it's easy enough to use:

    configuration.Services.Replace(
        typeof(IHttpControllerActivator),
        new CompositionRoot(this.eventStore, this.eventStream, this.imageStore));

It's not particularly discoverable, because you'll have to resort to the documentation, or trawl through the (fortunately open source) code base, in order to discover that there's an IHttpControllerActivator interface you'd like to replace. The Replace method gives the impression that you can replace any Type, but in practice, it only makes sense to replace a few well-known interfaces, like IHttpControllerActivator.

Even with a generic Abstract Factory, a much more discoverable option would be to expose all extensible services as strongly-typed members of a configuration object. As an example, the hypothetical XYZ framework could define its configuration API like this:

    public class XyzConfiguration
    {
        public IFactory<IXyzController> ControllerFactory { get; set; }

        public IFactory<IXyzFilter> FilterFactory { get; set; }

        // etc.
    }

Such use of Property Injection enables users to override only those Abstract Factories they care about, and leave the rest at their defaults. Additionally, it's easy to enumerate all extensibility options, because the XyzConfiguration class provides a one-stop place for all extensibility points in the framework.
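As a rough sketch of what 'leave the rest at their defaults' could look like, the following variation gives each property a default factory and guards against null. DefaultFactory<T>, HomeController, and HandCodedControllerFactory are names I've invented for this illustration; the XYZ framework itself is hypothetical.

```csharp
using System;

public interface IXyzController { }
public interface IXyzFilter { }

public interface IFactory<T>
{
    T Create(Type itemType);

    void Release(T item);
}

// Hypothetical default: requires a parameterless constructor.
public sealed class DefaultFactory<T> : IFactory<T>
{
    public T Create(Type itemType)
    {
        return (T)Activator.CreateInstance(itemType);
    }

    public void Release(T item)
    {
        var disposable = item as IDisposable;
        if (disposable != null)
        {
            disposable.Dispose();
        }
    }
}

// Property Injection with good defaults: every factory can be replaced,
// but none of them has to be.
public class XyzConfiguration
{
    private IFactory<IXyzController> controllerFactory =
        new DefaultFactory<IXyzController>();
    private IFactory<IXyzFilter> filterFactory =
        new DefaultFactory<IXyzFilter>();

    public IFactory<IXyzController> ControllerFactory
    {
        get { return this.controllerFactory; }
        set
        {
            if (value == null)
            {
                throw new ArgumentNullException("value");
            }
            this.controllerFactory = value;
        }
    }

    public IFactory<IXyzFilter> FilterFactory
    {
        get { return this.filterFactory; }
        set
        {
            if (value == null)
            {
                throw new ArgumentNullException("value");
            }
            this.filterFactory = value;
        }
    }
}

// Hypothetical user code: a Controller that needs a constructor argument,
// and a hand-coded factory that knows how to supply it.
public sealed class HomeController : IXyzController
{
    private readonly string greeting;

    public HomeController(string greeting)
    {
        this.greeting = greeting;
    }

    public string Greeting
    {
        get { return this.greeting; }
    }
}

public sealed class HandCodedControllerFactory : IFactory<IXyzController>
{
    public IXyzController Create(Type itemType)
    {
        if (itemType == typeof(HomeController))
        {
            return new HomeController("hello");
        }
        throw new ArgumentException("Unknown controller type.", "itemType");
    }

    public void Release(IXyzController item)
    {
        // Nothing to clean up in this small example.
    }
}
```

A user who only cares about Controllers would assign a HandCodedControllerFactory to the ControllerFactory property and never touch FilterFactory.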

Define attributes without behaviour #

Some frameworks provide extensibility points in the form of attributes. ASP.NET MVC, for example, defines various Filter attributes, such as [Authorize], [HandleError], [OutputCache], etc. Some of these attributes contain behaviour, because they implement interfaces such as IAuthorizationFilter, IExceptionFilter, and so on.

Attributes with behaviour are a bad idea. Due to compiler limitations (at least in both C# and F#), you can only provide constants and literals to an attribute. That effectively rules out Dependency Injection, but if an attribute contains behaviour, it's guaranteed that some user comes by and wants to add some custom behaviour in an attribute. The only way to add 'Dependency Injection support' to attributes is through a static Service Locator - an exceptionally toxic design. Attribute designers should avoid this. This is not Dependency Injection support; it's Service Locator support. There's no reason to bake in Service Locator support in a framework. People who deliberately want to hurt themselves can always add a static Service Locator by themselves.

Instead, attributes should be designed without behaviour. Instead of putting the behaviour in the attribute itself, a custom attribute should only provide metadata - after all, that's the original raison d'être of attributes.

Attributes with metadata can then be detected and handled by normal services, which enable normal Dependency Injection. See this Stack Overflow answer for an ASP.NET MVC example, or my article on Passive Attributes for a Web API example.
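The following is only a small, framework-neutral sketch of that idea, not the code from the linked articles. The attribute carries nothing but metadata, while an ordinary class with Constructor Injection reads the metadata and supplies the behaviour. All the names (RequiresRoleAttribute, IRoleChecker, RoleAuthorizer, SingleAdminRoleChecker, ReportController) are made up for the occasion.

```csharp
using System;
using System.Reflection;

// Passive attribute: metadata only, no behaviour.
[AttributeUsage(AttributeTargets.Method)]
public sealed class RequiresRoleAttribute : Attribute
{
    private readonly string role;

    public RequiresRoleAttribute(string role)
    {
        this.role = role;
    }

    public string Role
    {
        get { return this.role; }
    }
}

// Hypothetical dependency that the behaviour needs.
public interface IRoleChecker
{
    bool IsInRole(string userName, string role);
}

// Ordinary service: detects the attribute and handles it, with its
// dependency supplied through the constructor.
public sealed class RoleAuthorizer
{
    private readonly IRoleChecker roleChecker;

    public RoleAuthorizer(IRoleChecker roleChecker)
    {
        if (roleChecker == null)
        {
            throw new ArgumentNullException("roleChecker");
        }
        this.roleChecker = roleChecker;
    }

    public bool IsAllowed(MethodInfo action, string userName)
    {
        var attribute = (RequiresRoleAttribute)Attribute.GetCustomAttribute(
            action, typeof(RequiresRoleAttribute));
        if (attribute == null)
        {
            // No metadata means no restriction.
            return true;
        }
        return this.roleChecker.IsInRole(userName, attribute.Role);
    }
}

// Deliberately naive implementation, for demonstration only.
public sealed class SingleAdminRoleChecker : IRoleChecker
{
    private readonly string adminName;

    public SingleAdminRoleChecker(string adminName)
    {
        this.adminName = adminName;
    }

    public bool IsInRole(string userName, string role)
    {
        return role == "admin" && userName == this.adminName;
    }
}

// Example user class adorned with the passive attribute.
public class ReportController
{
    public string List()
    {
        return "list";
    }

    [RequiresRole("admin")]
    public string Delete()
    {
        return "deleted";
    }
}
```

Since RoleAuthorizer is a normal class, it can be composed in the Composition Root like any other service; no static Service Locator is required.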

Summary #

A framework must expose appropriate extensibility points in order to be useful. The best way to support Dependency Injection is to provide an Abstract Factory with a corresponding Release method for each custom type users are expected to create. This is the simplest solution. It's extremely versatile. It has few moving parts. It's easy to understand. It enables gradual customisation.

Framework users who don't care about Dependency Injection at all can simply ignore the whole issue and use the framework with its default services. Framework users who prefer to hand-code object composition, can implement the appropriate Abstract Factories by writing custom code. Framework users who prefer to use their DI Container of choice can implement the appropriate Abstract Factories as Adapters over the container.

That's all. There's no reason to make it more complicated than that. There's particularly no reason to force a Conforming Container upon the users.

DI-Friendly Library

How to create a Dependency Injection-friendly software library.

In my book, I go to great lengths to explain how to develop loosely coupled applications using various Dependency Injection (DI) patterns, including the Composition Root pattern. With the great emphasis on applications, I didn't particularly go into details about making DI-friendly libraries. Partly this was because I didn't think it was necessary, but since one of my highest voted Stack Overflow answers deals with this question, it may be worth expanding on.

In this article, I will cover libraries, and in a later article I will deal with frameworks. The distinction I usually make is:

- A Library is a reusable set of types or functions you can use from a wide variety of applications. The application code initiates communication with the library and invokes it.

- A Framework consists of one or more libraries, but the difference is that Inversion of Control applies. The application registers with the framework (often by implementing one or more interfaces), and the framework calls into the application, which may call back into the framework. A framework often exists to address a particular general-purpose Domain (such as web applications, mobile apps, workflows, etc.).

Most well-designed libraries are already DI-friendly - particularly if they follow the SOLID principles, because the Dependency Inversion Principle (the D in SOLID) is the guiding principle behind DI.

Still, it may be valuable to distil a few recommendations.

Program to an interface, not an implementation #

If your library consists of several collaborating classes, define proper interfaces between these collaborators. This enables clients to redefine part of your library's behaviour, or to slide cross-cutting concerns in between two collaborators, using a Decorator.

Be sure to define these interfaces as Role Interfaces.

An example of a small library that follows this principle is Hyprlinkr, which defines two interfaces used by the main RouteLinker class:

    public interface IRouteValuesQuery
    {
        IDictionary<string, object> GetRouteValues(
            MethodCallExpression methodCallExpression);
    }

and

    public interface IRouteDispatcher
    {
        Rouple Dispatch(
            MethodCallExpression method,
            IDictionary<string, object> routeValues);
    }

This not only makes it easier to develop and maintain the library itself, but also makes it more flexible for users.
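To illustrate how an interface between two collaborators lets a client slide a cross-cutting concern in between them, here's a small sketch that doesn't depend on Hyprlinkr. The interface and classes (IGreetingFormatter, DefaultGreetingFormatter, AuditingGreetingFormatter, Greeter) are hypothetical and exist only for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical Role Interface between two collaborators.
public interface IGreetingFormatter
{
    string Format(string name);
}

// The library's built-in implementation.
public sealed class DefaultGreetingFormatter : IGreetingFormatter
{
    public string Format(string name)
    {
        return "Hello, " + name + "!";
    }
}

// Decorator: adds a cross-cutting concern (here, a simple audit trail)
// and then delegates to the decorated instance.
public sealed class AuditingGreetingFormatter : IGreetingFormatter
{
    private readonly IGreetingFormatter inner;
    private readonly IList<string> log;

    public AuditingGreetingFormatter(IGreetingFormatter inner, IList<string> log)
    {
        if (inner == null)
        {
            throw new ArgumentNullException("inner");
        }
        if (log == null)
        {
            throw new ArgumentNullException("log");
        }
        this.inner = inner;
        this.log = log;
    }

    public string Format(string name)
    {
        this.log.Add("Format called with: " + name);
        return this.inner.Format(name);
    }
}

// The consuming collaborator only knows the interface.
public sealed class Greeter
{
    private readonly IGreetingFormatter formatter;

    public Greeter(IGreetingFormatter formatter)
    {
        if (formatter == null)
        {
            throw new ArgumentNullException("formatter");
        }
        this.formatter = formatter;
    }

    public string Greet(string name)
    {
        return this.formatter.Format(name);
    }
}
```

Neither Greeter nor DefaultGreetingFormatter has to change in order to add the audit trail; a client composes new Greeter(new AuditingGreetingFormatter(new DefaultGreetingFormatter(), log)) and that's it.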

Use Constructor Injection #

Favour the Constructor Injection pattern over other injection patterns, because of its simplicity and degree of encapsulation.

As an example, Hyprlinkr's main class, RouteLinker, has this primary constructor:

    private readonly HttpRequestMessage request;
    private readonly IRouteValuesQuery valuesQuery;
    private readonly IRouteDispatcher dispatcher;

    public RouteLinker(
        HttpRequestMessage request,
        IRouteValuesQuery routeValuesQuery,
        IRouteDispatcher dispatcher)
    {
        if (request == null)
            throw new ArgumentNullException("request");
        if (routeValuesQuery == null)
            throw new ArgumentNullException("routeValuesQuery");
        if (dispatcher == null)
            throw new ArgumentNullException("dispatcher");

        this.request = request;
        this.valuesQuery = routeValuesQuery;
        this.dispatcher = dispatcher;
    }

Notice that it follows Nikola Malovic's 4th law of IoC that Injection Constructors should be simple.

Although not strictly required in order to make a library DI-friendly, expose every injected dependency as an Inspection Property - it will make the library easier to use when composed in one place, but used in another place. Again, Hyprlinkr does that:

    public IRouteValuesQuery RouteValuesQuery
    {
        get { return this.valuesQuery; }
    }

and so on for its other dependencies, too.

Consider an Abstract Factory for short-lived objects #

Sometimes, your library must create short-lived objects in order to do its work. Other times, the library can only create a required object at run-time, because only at run-time is all required information available. You can use an Abstract Factory for that.

The Abstract Factory doesn't always have to be named XyzFactory; in fact, Hyprlinkr's IRouteDispatcher interface is an Abstract Factory, although it's in disguise because it has a different name.

    public interface IRouteDispatcher
    {
        Rouple Dispatch(
            MethodCallExpression method,
            IDictionary<string, object> routeValues);
    }

Notice that the return value of an Abstract Factory doesn't have to be another interface instance; in this case, it's an instance of the concrete class Rouple, which is a data structure without behaviour.

Consider a Facade #

If some objects are difficult to construct, because their classes have complex constructors, consider supplying a Facade with a good default combination of appropriate dependencies. Often, a simple alternative to a Facade is Constructor Chaining:

    public RouteLinker(HttpRequestMessage request)
        : this(request, new DefaultRouteDispatcher())
    {
    }

    public RouteLinker(HttpRequestMessage request, IRouteValuesQuery routeValuesQuery)
        : this(request, routeValuesQuery, new DefaultRouteDispatcher())
    {
    }

    public RouteLinker(HttpRequestMessage request, IRouteDispatcher dispatcher)
        : this(request, new ScalarRouteValuesQuery(), dispatcher)
    {
    }

    public RouteLinker(
        HttpRequestMessage request,
        IRouteValuesQuery routeValuesQuery,
        IRouteDispatcher dispatcher)
    {
        if (request == null)
            throw new ArgumentNullException("request");
        if (routeValuesQuery == null)
            throw new ArgumentNullException("routeValuesQuery");
        if (dispatcher == null)
            throw new ArgumentNullException("dispatcher");

        this.request = request;
        this.valuesQuery = routeValuesQuery;
        this.dispatcher = dispatcher;
    }

Notice how the RouteLinker class provides appropriate default values for those dependencies it can.

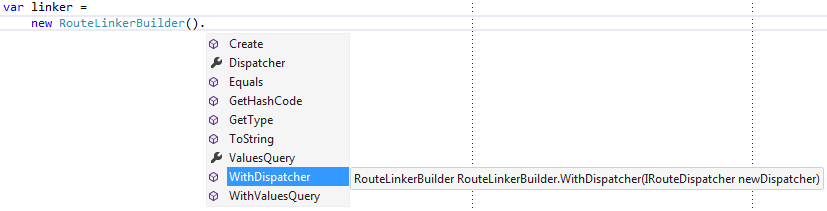

However, a Library with a more complicated API could potentially benefit from a proper Facade. One way to make the API's extensibility points discoverable is by implementing the Facade as a Fluent Builder. The following RouteLinkerBuilder isn't part of Hyprlinkr, because I consider the Constructor Chaining alternative simpler, but it could look like this:

    public class RouteLinkerBuilder
    {
        private readonly IRouteValuesQuery valuesQuery;
        private readonly IRouteDispatcher dispatcher;

        public RouteLinkerBuilder()
            : this(new ScalarRouteValuesQuery(), new DefaultRouteDispatcher())
        {
        }

        private RouteLinkerBuilder(
            IRouteValuesQuery valuesQuery,
            IRouteDispatcher dispatcher)
        {
            this.valuesQuery = valuesQuery;
            this.dispatcher = dispatcher;
        }

        public RouteLinkerBuilder WithValuesQuery(IRouteValuesQuery newValuesQuery)
        {
            return new RouteLinkerBuilder(newValuesQuery, this.dispatcher);
        }

        public RouteLinkerBuilder WithDispatcher(IRouteDispatcher newDispatcher)
        {
            return new RouteLinkerBuilder(this.valuesQuery, newDispatcher);
        }

        public RouteLinker Create(HttpRequestMessage request)
        {
            return new RouteLinker(request, this.valuesQuery, this.dispatcher);
        }

        public IRouteValuesQuery ValuesQuery
        {
            get { return this.valuesQuery; }
        }

        public IRouteDispatcher Dispatcher
        {
            get { return this.dispatcher; }
        }
    }

This has the advantage that it's easy to get started with the library:

var linker = new RouteLinkerBuilder().Create(request);

This API is also discoverable, because IntelliSense helps users discover how to deviate from the default values.

It enables users to override only those values they care about:

var linker = new RouteLinkerBuilder().WithDispatcher(customDispatcher).Create(request);

If I had wanted to force users of Hyprlinkr to use the (hypothetical) RouteLinkerBuilder, I could make the RouteLinker constructor internal, but I don't much care for that option; I prefer to empower my users, not constrain them.

Composition #

Any application that uses your library can compose objects from it in its Composition Root. Here's a hand-coded example from one of Grean's code bases:

    private static RouteLinker CreateDefaultRouteLinker(HttpRequestMessage request)
    {
        return new RouteLinker(
            request,
            new ModelFilterRouteDispatcher(
                new DefaultRouteDispatcher()
            )
        );
    }

This example is just a small helper method in the Composition Root, but as you can see, it composes a RouteLinker instance using our custom ModelFilterRouteDispatcher class as a Decorator for Hyprlinkr's built-in DefaultRouteDispatcher.

However, it would also be easy to configure a DI Container to do this instead.

Summary #

If you follow SOLID, and normal rules for encapsulation, your library is likely to be DI-friendly. No special infrastructure is required to add 'DI support' to a library.

Comments

I found great library for in process messaging made by Jimmy Bogard - MediatR, but it uses service locator. Implemented mediator uses service locator to lookup for handlers matching message type registered in container. Source.

What would be best approach to eliminate service locator in this case? Would it be better to pass all handler instances in mediator constructor and then lookup for matching one?

Maris, thank you for writing. Hopefully, this article answers your question.

Conforming Container

A Dependency Injection anti-pattern.

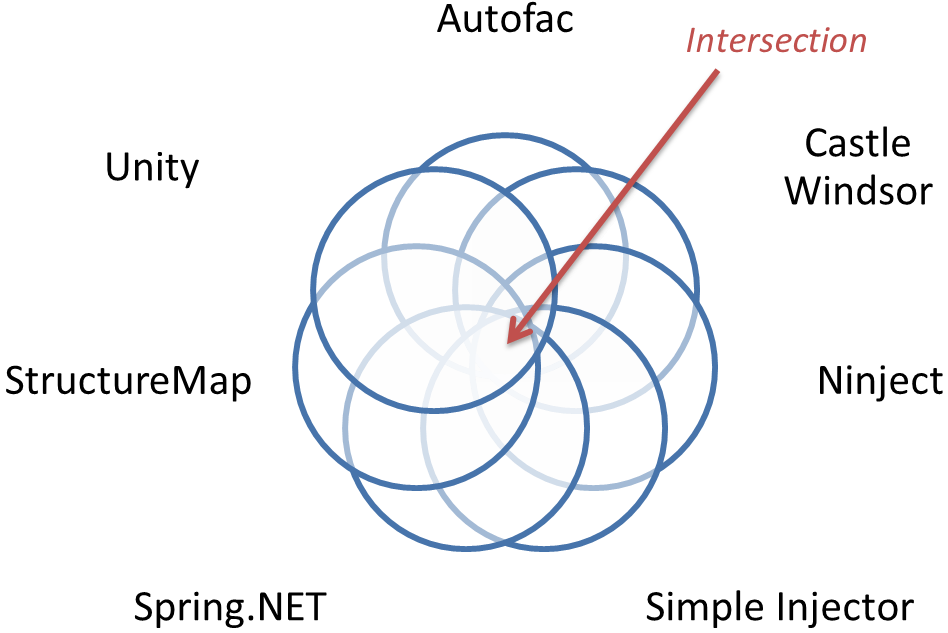

Once in a while, someone comes up with the idea that it would be great to introduce a common abstraction over various DI Containers in .NET. My guess is that part of the reason for this is that there are so many DI Containers to choose from on .NET:

- Autofac

- Castle Windsor

- Managed Extensibility Framework

- Ninject

- Simple Injector

- Spring.NET

- StructureMap

- Unity

General form #

At its core, a Conforming Container introduces a central interface, often called IContainer, IServiceLocator, IServiceProvider, ITypeActivator, IServiceFactory, or something in that vein. The interface defines one or more methods called Resolve, Create, GetInstance, or similar:

    public interface IContainer
    {
        object Resolve(Type type);

        object Resolve(Type type, params object[] arguments);

        T Resolve<T>();

        T Resolve<T>(params object[] arguments);

        IEnumerable<T> ResolveAll<T>();

        // etc.
    }

Sometimes, the interface defines only a single of those methods; sometimes, it defines even more variations of methods to create objects based on a Type.

Some Conforming Containers stop at this point, so that the interface only exposes Queries, which means that they only cover the Resolve phase of the Register Resolve Release pattern. Other efforts attempt to address Register phase too:

    public interface IContainer
    {
        void AddService(Type serviceType, Type implementationType);

        void AddService<TService, TImplementation>();

        // etc.
    }

The intent is to enable configuration of the container using some sort of metadata. Sometimes, the methods have more advanced configuration parameters that also enable you to specify the lifestyle of the service, etc.

Finally, a part of a typical Conforming Container ecosystem is various published Adapters to concrete DI Containers. A hypothetical Confainer project may publish the following Adapter packages:

- Confainer.Autofac

- Confainer.Windsor

- Confainer.Ninject

- Confainer.Unity

Symptoms and consequences #

A Conforming Container is an anti-pattern, because it's "a commonly occurring solution to a problem that generates decidedly negative consequences", such as:

- Calls to the Conforming Container are likely to be sprinkled liberally over an entire code base.

- It pushes novice users towards the Service Locator anti-pattern. Most people encountering Dependency Injection for the first time mistake it for the Service Locator anti-pattern, despite the entirely opposite natures of these two approaches to loose coupling.

- It attempts to relieve symptoms of bad design, instead of addressing the underlying problem. Too many 'loosely coupled' designs attempt to rely on the Service Locator anti-pattern, which, by default, introduces a dependency to a concrete Service Locator throughout a code base. However, exclusively using the Constructor Injection and Composition Root design patterns eliminates the problem altogether, resulting in a simpler design with fewer moving parts.

- It pulls in the direction of the lowest common denominator.

- It stifles innovation, because new, creative, but radical ideas may not fit into the narrow view of the world a Conforming Container defines.

- It makes it more difficult to avoid using a DI Container. A DI Container can be useful in certain scenarios, but often, hand-coded composition is better than using a DI Container. However, if a library or framework depends on a Conforming Container, it may be difficult to harvest the benefits of hand-coded composition.

- It may introduce versioning hell. Imagine that you need to use a library that depends on Confainer 1.3.7 in an application that also uses a framework that depends on Confainer 2.1.7. Since a Conforming Container is intended as an infrastructure component, this is likely to happen, and to cause much grief.

- A Conforming Container is often a product of Speculative Generality, instead of a product of need. As such, the API is likely to be poorly suited to address real-world scenarios, be difficult to extend, and may exhibit churn in the form of frequent breaking changes.

- If Adapters are supplied by contributors (often the DI Container maintainers themselves), the Adapters may have varying quality levels, and may not support the latest version of the Conforming Container.

A code base using a Conforming Container may have code like this all over the place:

    var foo = container.Resolve<IFoo>();
    // ... use foo for something...

    var bar = container.Resolve<IBar>();
    // ... use bar for something else...

    var baz = container.Resolve<IBaz>();
    // ... use baz for something else again...

This breaks encapsulation, because it's impossible to identify a class' collaborators without reading its entire code base.

Additionally, concrete DI Containers have distinct feature sets. Although likely to be out of date by now, this feature comparison chart from my book illustrates this point:

| | Castle Windsor | StructureMap | Spring.NET | Autofac | Unity | MEF |

|---|---|---|---|---|---|---|

| Code as Configuration | x | x | x | x | ||

| Auto-registration | x | x | x | |||

| XML configuration | x | x | x | x | x | |

| Modular configuration | x | x | x | x | x | x |

| Custom lifetimes | x | x | (x) | x | ||

| Decommissioning | x | x | (x) | x | ||

| Interception | x | x | x |

This is only a simple chart that plots the most common features of DI Containers. Each DI Container has dozens of features - many of them unique to that particular DI Container. A Conforming Container can either support an intersection or union of all those features.

A Conforming Container that targets only the intersection of all features will be able to support only a small fraction of all available features, diminishing the value of the Conforming Container to the point where it becomes gratuitous.

A Conforming Container that targets the union of all features is guaranteed to consist mostly of a multitude of NotImplementedExceptions, or, put another way, to massively violate the Liskov Substitution Principle.
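To make the union case concrete, here's a minimal sketch. It assumes a hypothetical IConfainer interface that exposes both resolution and interception, and a hypothetical SimpleAdapter over a container that has no interception feature. None of these types come from a real library; they only illustrate the shape of the problem.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical 'union' Conforming Container: it exposes every feature
// that any of the adapted containers might offer.
public interface IConfainer
{
    void Register<TService>(Func<TService> factory);
    TService Resolve<TService>();
    void Intercept<TService>(Func<TService, TService> decorate);
}

// Hypothetical Adapter over a container without interception support.
public class SimpleAdapter : IConfainer
{
    private readonly Dictionary<Type, Func<object>> factories =
        new Dictionary<Type, Func<object>>();

    public void Register<TService>(Func<TService> factory)
    {
        this.factories[typeof(TService)] = () => factory();
    }

    public TService Resolve<TService>()
    {
        return (TService)this.factories[typeof(TService)]();
    }

    public void Intercept<TService>(Func<TService, TService> decorate)
    {
        // The interface promises interception, but this Adapter can't
        // deliver it, so one IConfainer can't safely stand in for another.
        throw new NotImplementedException();
    }
}
```

A client that calls Intercept works with some Adapters and fails at run time with others, which is exactly the kind of substitutability problem the Liskov Substitution Principle warns against.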

Typical causes #

The typical causes of the Conforming Container anti-pattern are:

- Lack of understanding of Dependency Injection. Dependency Injection is a set of patterns driven by the Dependency Inversion Principle. A DI Container is an optional library, not a required part.

- A fear of letting an entire code base depend on a concrete DI Container, in case that container turns out to be a poor choice. Few programmers have thoroughly surveyed all available DI Containers before picking one for a project, so architects desire to have the ability to replace e.g. StructureMap with Ninject.

- Library designers mistakenly thinking that Dependency Injection support involves defining a Conforming Container.

- Framework designers mistakenly thinking that Dependency Injection support involves defining a Conforming Container.

Known exceptions #

There are no cases known to me where a Conforming Container is a good solution to the problem at hand. There's always a better and simpler solution.

Refactored solution #

Instead of relying on the Service Locator anti-pattern, all collaborating classes should rely on the Constructor Injection pattern:

    public class CorrectClient
    {
        private readonly IFoo foo;
        private readonly IBar bar;
        private readonly IBaz baz;

        public CorrectClient(IFoo foo, IBar bar, IBaz baz)
        {
            this.foo = foo;
            this.bar = bar;
            this.baz = baz;
        }

        public void DoSomething()
        {
            // ... use this.foo for something...
            // ... use this.bar for something else...
            // ... use this.baz for something else again...
        }
    }

This leaves all options open for any code consuming the CorrectClient class. The only exception to relying on Constructor Injection is when you need to compose all these collaborating classes. The Composition Root has the single responsibility of composing all the objects into a working object graph:

    public class CompositionRoot
    {
        public CorrectClient ComposeClient()
        {
            return new CorrectClient(
                new RealFoo(),
                new RealBar(),
                new RealBaz());
        }
    }

In this example, the final graph is rather shallow, but it can be as complex and deep as necessary. This Composition Root uses hand-coded composition, but if you want to use a DI Container, the Composition Root is where you put it:

    public class WindsorCompositionRoot
    {
        private readonly WindsorContainer container;

        public WindsorCompositionRoot()
        {
            this.container = new WindsorContainer();
            // Configure the container here,
            // or better yet: use a WindsorInstaller
        }

        public CorrectClient ComposeClient()
        {
            return this.container.Resolve<CorrectClient>();
        }
    }

This class (and perhaps a few auxiliary classes, such as a Windsor Installer) is the only class that uses a concrete DI Container. This is the Hollywood Principle in action. There's no reason to hide the DI Container behind an interface, because it has no clients. The DI Container knows about the application; the application knows nothing about the DI Container.

In all but the most trivial of applications, the Composition Root is only an extremely small part of the entire application.

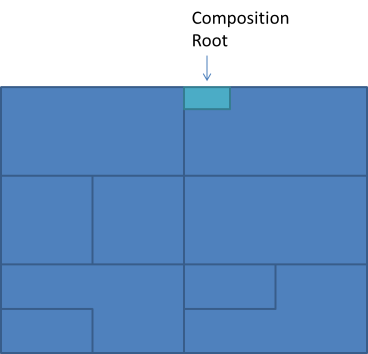

(The above picture is meant to illustrate an arbitrary application architecture; it could be layered, onion, hexagonal, or something else - it doesn't really matter.) If you want to replace one DI Container with another DI Container, you only replace the Composition Root; the rest of the application will never notice the difference.

Notice that only applications should have Composition Roots. Libraries and frameworks should not.

- Library classes should be defined with Constructor Injection throughout. If the library object model is very complex, a few Facades can be supplied to make it easier for library users to get started. See my article on DI-friendly libraries for more details.

- Frameworks should have appropriate hooks built in. These hooks should not be designed as Service Locators, but rather as Abstract Factories. See my article on DI-friendly frameworks for more details.
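As a rough sketch of the framework guidance above, here's what an Abstract Factory hook might look like. All the types are hypothetical and invented for this example: IHandler and IHandlerFactory play the role of framework types, while the remaining classes play the role of application code.

```csharp
using System;

// Hypothetical framework types, for illustration only.
public interface IHandler
{
    string Handle(string request);
}

// The framework's hook: an Abstract Factory with one specific return
// type, as opposed to a Service Locator that can return anything.
public interface IHandlerFactory
{
    IHandler Create(string route);
}

// Application code, written with Constructor Injection throughout.
public interface IGreeter
{
    string Greet(string name);
}

public class Greeter : IGreeter
{
    public string Greet(string name)
    {
        return "Hello, " + name;
    }
}

public class GreetingHandler : IHandler
{
    private readonly IGreeter greeter;

    public GreetingHandler(IGreeter greeter)
    {
        this.greeter = greeter;
    }

    public string Handle(string request)
    {
        return this.greeter.Greet(request);
    }
}

// The application's Composition Root implements the framework's hook,
// here with hand-coded composition.
public class HandlerCompositionRoot : IHandlerFactory
{
    public IHandler Create(string route)
    {
        if (route == "greetings")
            return new GreetingHandler(new Greeter());

        throw new ArgumentException("Unknown route: " + route, "route");
    }
}
```

The framework only ever asks for an IHandler, so it never needs to know whether the application composes its object graphs by hand or with a DI Container.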

Variations #

Sometimes the Conforming Container only defines a Service Locator-like API, and sometimes it also defines a configuration API. That configuration API may include various axes of configurability, most notably lifetime management and decommissioning.

Decommissioning is often designed around the concept of a disposable 'context' scope, but as I explain in my book, that's not an extensible pattern.

Known examples #

There are various known examples of Conforming Containers for .NET:

- Common Service Locator

- ASP.NET MVC Dependency Resolver

- ASP.NET Web API Dependency Resolver

- NServiceBus IContainer

- Rebus container adapters

Configuring Azure Web Jobs

It's easy to configure Azure Web Jobs written in .NET.

Azure Web Jobs is a nice feature for Azure Web Sites, because it enables you to bundle a background worker, scheduled batch job, etc. together with your Web Site. It turns out that this feature works pretty well, but it's not particularly well-documented, so I wanted to share a few nice features I've discovered while using them.

You can write a Web Job as a simple Command Line executable, but if you can supply command-line arguments to it, I have yet to discover how to do that. A good alternative is an app.config file with configuration settings, but it can be a hassle to deal with various configuration settings across different deployment environments. There's a simple solution to that.

CloudConfigurationManager #

If you use CloudConfigurationManager.GetSetting, configuration settings are read using various fallback mechanisms. The CloudConfigurationManager class is poorly documented, and I couldn't find documentation for the current version, but one documentation page about a deprecated version sums it up well enough:

"The GetSetting method reads the configuration setting value from the appropriate configuration store. If the application is running as a .NET Web application, the GetSetting method will return the setting value from the Web.config or app.config file. If the application is running in Windows Azure Cloud Service or in a Windows Azure Website, the GetSetting will return the setting value from the ServiceConfiguration.cscfg."

That is probably still true, but I've found that it actually does more than that. As far as I can tell, it attempts to read configuration settings in this prioritized order:

- Try to find the configuration value in the Web Site's online configuration (see below).

- Try to find the configuration value in the .cscfg file.

- Try to find the configuration value in the app.config file or web.config file.

(It's possible that, under the hood, this UI actually maintains an auto-generated .cscfg file, in which case the first two bullet points above turn out to be one and the same.)

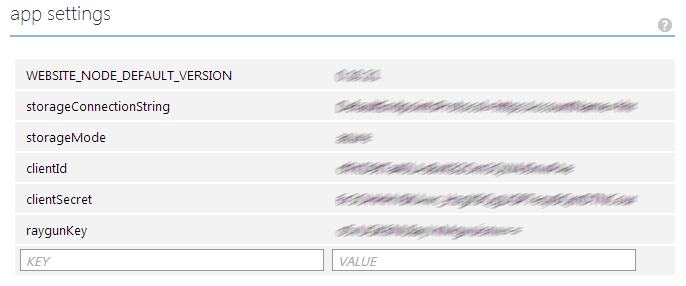

This is a really nice feature, because it means that you can push your deployments directly from your source control system (I use Git), and leave your configuration files empty in source control:

    <appSettings>
      <add key="timeout" value="0:01:00" />
      <add key="estimatedDuration" value="0:00:02" />
      <add key="toleranceFactor" value="2" />
      <add key="idleTime" value="0:00:05" />
      <add key="storageMode" value="files" />
      <add key="storageConnectionString" value="" />
      <add key="raygunKey" value="" />
    </appSettings>

Instead of having to figure out how to manage or merge those super-secret keys in the build system, you can simply shortcut the whole issue by not involving those keys in your build system; they're only stored in Azure - where you can't avoid having them anyway, because your system needs them in order to work.

Usage #

It's easy to use CloudConfigurationManager: instead of getting your configuration values with ConfigurationManager.AppSettings, you use CloudConfigurationManager.GetSetting:

let clientId = CloudConfigurationManager.GetSetting "clientId"

The CloudConfigurationManager class isn't part of the .NET Base Class Library, but you can easily add it from NuGet; it's called Microsoft.WindowsAzure.ConfigurationManager. The Azure SDK isn't required - it's just a stand-alone library with no dependencies, so I happily add it to my Composition Root when I know I'm going to deploy to an Azure Web Site.

Web Jobs #

Although I haven't found any documentation to that effect yet, a .NET console application running as an Azure Web Job will pick up configuration settings in the way described above. In other words, it shares configuration values with the web site that it's part of. That's darn useful.

Comments

Howard, thank you for writing. You should always keep your secrets out of source control. In some projects, I've used web.config transforms for that purpose. Leave your checked-in .config files empty, and have local (not checked-in) .config files on development machines, production servers, and so on.

As far as I know, on most other platforms, people simply use environment variables instead of configuration files. To me, that sounds like a simple solution to the problem.

Service Locator violates SOLID

Yet another reason to avoid the Service Locator anti-pattern is that it violates the principles of Object-Oriented Design.

Years ago, I wrote an article about Service Locator. Regular readers of this blog may already know that I consider Service Locator an anti-pattern. That hasn't changed, but I recently realized that there's another way to explain why Service Locator is the inverse of good Object-Oriented Design (OOD). My original article didn't include that perspective at all, so perhaps this is a clearer way of explaining it.

In this article, I'll assume that you're familiar with the SOLID principles (also known as the Principles of OOD), and that you accept them as generally correct. It's not because I wish to argue by an appeal to authority, but rather because there's already a large body of work that explains why these principles are beneficial to software design.

In short, Service Locator violates SOLID because it violates the Interface Segregation Principle (ISP). That's because a Service Locator effectively has infinitely many members.

Service Locator deconstructed #

In order to understand why a Service Locator has infinitely many members, you'll need to understand what a Service Locator is. Often, it's a class or interface with various members, but it all boils down to a single member:

T Create<T>();

Sometimes the method takes one or more parameters, but that doesn't change the conclusion, so I'm leaving out those input parameters to keep things simple.

A common variation is the untyped, non-generic variation:

object Create(Type type);

Since my overall argument relies on the generic version, first I'll need to show you why those two methods are equivalent. If you imagine that all you have is the non-generic version, you can easily write a generic extension method for it:

    public static class ServiceLocatorEnvy
    {
        public static T Create<T>(this IServiceLocator serviceLocator)
        {
            return (T)serviceLocator.Create(typeof(T));
        }
    }

As you can see, this extension method has exactly the same signature as the generic version; you can always create a generic Service Locator based on a non-generic Service Locator. Thus, while my main argument (coming up next) is based on a generic Service Locator, it also applies to non-generic Service Locators.

Infinite methods #

From a client's perspective, there's no limit to how many variations of the Create method it can invoke:

    var foo = serviceLocator.Create<IFoo>();
    var bar = serviceLocator.Create<IBar>();
    var baz = serviceLocator.Create<IBaz>();
    // etc.

Again, from the client's perspective, that's equivalent to multiple method definitions like:

    IFoo CreateFoo();
    IBar CreateBar();
    IBaz CreateBaz();
    // etc.

However, the client can keep coming up with new types to request, so effectively, the number of Create methods is infinite!

Relation to the Interface Segregation Principle #

By now, you understand that a Service Locator is an interface or class with effectively an infinite number of methods. That violates the ISP, which states:

"Clients should not be forced to depend on methods they do not use."

However, since a Service Locator exposes an infinite number of methods, any client using it is forced to depend on infinitely many methods it doesn't use.
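A small sketch may make the difference tangible. It assumes a hypothetical IFoo service and a hypothetical dictionary-backed locator, both invented for this example. The two clients do the same work, but only one of them depends on more than it uses.

```csharp
using System;
using System.Collections.Generic;

public interface IFoo
{
    string Name { get; }
}

public class Foo : IFoo
{
    public string Name { get { return "foo"; } }
}

public interface IServiceLocator
{
    T Create<T>();
}

// Hypothetical minimal locator, only here to make the sketch runnable.
public class DictionaryLocator : IServiceLocator
{
    private readonly Dictionary<Type, object> services =
        new Dictionary<Type, object>();

    public void Add<T>(T service)
    {
        this.services[typeof(T)] = service;
    }

    public T Create<T>()
    {
        return (T)this.services[typeof(T)];
    }
}

// Depends on every conceivable Create<T>, although it only uses one.
public class LocatorClient
{
    private readonly IServiceLocator locator;

    public LocatorClient(IServiceLocator locator)
    {
        this.locator = locator;
    }

    public string Describe()
    {
        return this.locator.Create<IFoo>().Name;
    }
}

// Depends only on the single member it actually uses.
public class InjectedClient
{
    private readonly IFoo foo;

    public InjectedClient(IFoo foo)
    {
        this.foo = foo;
    }

    public string Describe()
    {
        return this.foo.Name;
    }
}
```

Nothing in LocatorClient's constructor signature tells you that it needs an IFoo, whereas InjectedClient states its needs up front.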

Quod Erat Demonstrandum #

The Service Locator anti-pattern violates the ISP, and thus it also violates SOLID as a whole. SOLID is also known as the Principles of OOD. Therefore, Service Locator is bad Object-Oriented Design.

Update 2015-10-26: The fundamental problem with Service Locator is that it violates encapsulation.

Comments

Nelson, thank you for writing. First, it's important to realize that this overall argument applies to methods with 'free' generic type parameters; that is, a method where the type in itself isn't generic, but the method is. One example of the difference I mean is that a generic Abstract Factory is benign, whereas a Service Locator isn't.

Second, that still leaves the case where you may have a generic parameter that determines the return type of the method. The LINQ Select method is an example of such a method. These tend not to be problematic, but I had some trouble explaining why that is until James Jensen explained it to me.
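To illustrate the first point, here's a minimal sketch of a generic Abstract Factory. The names (IFactory, Widget, and so on) are hypothetical and only serve the example. The type is generic, but the Create method has no 'free' type parameter, so a client that receives an IFactory of Widget can only ever ask for a Widget.

```csharp
using System;

// Generic Abstract Factory: the interface is generic, the method isn't.
public interface IFactory<T>
{
    T Create();
}

public class Widget
{
    public string Label { get { return "widget"; } }
}

public class WidgetFactory : IFactory<Widget>
{
    public Widget Create()
    {
        return new Widget();
    }
}

// The constructor signature states exactly which single 'Create method'
// this client depends on.
public class WidgetConsumer
{
    private readonly IFactory<Widget> factory;

    public WidgetConsumer(IFactory<Widget> factory)
    {
        this.factory = factory;
    }

    public string Describe()
    {
        return this.factory.Create().Label;
    }
}
```

Compare that with a locator exposing T Create<T>(), where the client picks T on every call.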

Dear Mark,

I totally agree with your advice on Service Locator being an anti-pattern, specifically if it is used within the core logic. However, I don't think that your argumentation in this post is correct. I think that you apply Object-Oriented Programming Principles to Metaprogramming, which should not be done, but I'm not quite sure if my argument is completely reasonable.

All .NET DI Containers that I know of use the Reflection API to solve the problem of dynamically composing an object graph. The very essence of this API is its ability to inspect and call members of any .NET type, even the ones that the code was not compiled against. Thus you do not use Strong Object-Oriented Typing any longer, but access the members of e.g. a class indirectly using a model that relies on the Type class and its associated types. And this is the gist of it: code is treated as data, this is Metaprogramming, as we all know.

Without these capabilities, DI containers wouldn't be able to do their job because they couldn't e.g. analyze the arguments of a class's constructor to further instantiate other objects needed. Thus we can say that DI containers are just an abstraction over the Metaprogramming API of .NET.

And of course, these containers offer an API that is shaped by their Metaprogramming foundation. This can be seen in your post: although you discuss the generic variation T Create<T>(), this is just syntactic sugar for the actual important method: object Create(Type type).

Metaprogramming in C# is totally resolved at runtime, and therefore one shouldn't apply the Interface Segregation Principle to APIs that are formed by it. These are designed to help you improve the Object-Oriented APIs which particularly incorporate Static Typing enforced by the compiler. A DI container does not have an unlimited number of Create methods, it has a single one and it receives a Type argument - the generic version just creates the Type object for you. And the parameter has to be as "weak" as Type, because we cannot use Static Typing - this technically allows the client to pass in types that the container is not configured for, but you cannot prevent this using the compiler because of the dynamic nature of the Reflection API.

What is your opinion on that?

Kenny, thank you for writing. The point that this post is making is mainly that Service Locator violates the Interface Segregation Principle (ISP). The appropriate perspective on ISP (and LSP and DIP as well) is from a client. The client of a Service Locator effectively sees an API with infinitely many methods. That's where the damage is done.

How the Service Locator is implemented isn't important to ISP, LSP, or DIP. (The SRP and OCP, on the other hand, relate to implementations.) You may notice that this article doesn't use the word container a single time.

Dear Mark,

I get the point that you are talking from the client's perspective - but even so, a client programming against a Service Locator should be aware that it is programming against an abstraction of a Metaprogramming API (and not an Object-Oriented API). If you think about the call to the Create method, then you basically say "Give me an object graph with the specified type as the object graph root" as a client - how do you implement this with the possibilities that OOP provides? You can't model this with classes, interfaces, and the means of Procedural and Structural Programming that are integrated in OOP - because these techniques do not allow you to treat code as data.

And again, your argument is based on the generic version of the Create method, but that shouldn't be the point of focus. It is the non-generic version object Create (Type type) which clearly indicates that it is a Metaprogramming API because of the Type parameter and the object return type - Type is the entry point to .NET Reflection and object is the only type the Service Locator can guarantee as the object graph is dynamically resolved at runtime - no Strong Typing involved. The existence of the generic Create variation is merely justified because software developers are lazy - they don't want to manually downcast the returned object to the type they actually need. Well, one could argue that this comforts the Single Point of Truth / Don't repeat yourself principles, too, as all information to create the object graph and to downcast the root object are derived from the generic type argument, but that doesn't change the fact that Service Locator is a Metaprogramming API.

And that's why I solely used the term DI container throughout my previous comment, because Service Locator is just the part of the API of a DI container that is concerned with resolving object graphs (and the Metainformation to create these object graphs was registered beforehand). Sure, you can implement Service Locators as a hard-wired registry of Singleton objects (or even Factories that create objects on the fly) to circumvent the use of the Reflection API (although one probably had to use some sort of Type ID in this case, maybe in form of a string or GUID). But honestly, these are half-baked solutions that do not solve the problem in a reusable way. A reusable Service Locator must treat code as data, especially if you want additional features like lifetime management.

Another point: take for example the MembershipProvider class - this polymorphic abstraction is truly a violation of the Interface Segregation Principle because it offers way too many members that a client probably won't need. But notice that each of these members has a different meaning, which is not the case with the Create methods of the Service Locator. The generic Create method is just another abstraction over the non-generic version to simplify the access to the Service Locator.

Long story short: Service Locator is a Metaprogramming API, the SOLID principles target Object-Oriented APIs, thus the latter shouldn't be used to assess the former. There is no real way to hide the fact that clients need to be aware that they are calling a Metaprogramming API if they directly reference a Service Locator (which shouldn't be done in core logic).

Kenny, thank you for writing. While I don't agree with everything you wrote, your arguments are well made, and I have no problems following them. If we disagree, I think we disagree about semantics, because the way I read your comment, I think it eventually leads you to the same conclusions that I have arrived at.

Ultimately, you also state that Service Locator isn't an Object-Oriented Design, and in that, I entirely agree. The SOLID principles are also known as the principles of Object-Oriented Design, so when I'm stating that I think that Service Locator violates SOLID, my more general point is that Service Locator isn't Object-Oriented because it violates a fundamental principle of OOD. You seem to have arrived at the same conclusion, although via a different route. I always like when that happens, because it confirms that the conclusion may be true.

To be honest, though, I don't consider the arguments I put forth in the present article as my strongest ever. Sometimes, I write articles on topics that I've thought about for years, but I also often write articles that are half-baked ideas; I put these articles out in order to start a discussion, so I appreciate your comments.

I'm much happier with the article that argues that Service Locator violates Encapsulation.

AutoFixture conventions with Albedo

You can use Albedo with AutoFixture to build custom conventions.

In a question to one of my previous posts, Jeff Soper asks about using custom, string-based conventions for AutoFixture:

"I always wince when testing for the ParameterType.Name value [...] It seems like it makes a test that would use this implementation very brittle."

Jeff's concern is that when you're explicitly looking for a parameter (or property or field) with a particular name (like "currencyCode"), the unit test suite may become brittle, because if you change the parameter name, the string may retain the old name, and the Customization no longer works.

Jeff goes on to say:

"This makes me think that I shouldn't need to be doing this, and that a design refactoring of my SUT would be a better option."

His concerns can be addressed on several different levels, but in this post, I'll show you how you can leverage Albedo to address some of them.

If you often find yourself in a situation where you're writing an AutoFixture Customization based on string matching of parameters, properties or fields, you should ask yourself if you're targeting one specific class, or if you're writing a convention? If you often target individual specific classes, you probably need to rethink your strategy, but you can easily run into situations where you need to introduce true conventions in your code base. This can be beneficial, because it'll make your code more consistent.

Here's an example from the code base in which I'm currently working. It's a REST service written in F#. To model the JSON going in and out, I've defined some Data Transfer Records, and some of them contain dates. However, JSON doesn't deal particularly well with dates, so they're treated as strings. Here's a JSON representation of a comment:

    {
        "author": {
            "id": "1234",
            "name": "Mark Seemann",
            "email": "1234@ploeh.dk"
        },
        "createdDate": "2014-04-30T18:14:08.1051775+00:00",
        "text": "Is this a comment?"
    }

The record is defined like this:

    type CommentRendition = {
        Author : PersonRendition
        CreatedDate : string
        Text : string }

This is a problem for AutoFixture, because it sees CreatedDate as a string, and populates it with an anonymous string. However, much of the code base expects the CreatedDate to be a proper date and time value, which can be parsed into a DateTimeOffset value. This would cause many tests to fail if I didn't change the behaviour.

Instead of explicitly targeting the CreatedDate property on the CommentRendition record, I defined a convention: any parameter, field, or property that ends with "date" and has the type string should be populated with a valid string representation of a date and time.

This is easy to write as a one-off Customization, but then it turned out that I needed an almost similar Customization for IDs: any parameter, field, or property that ends with "id" and has the type string, should be populated with a valid GUID string formatted in a certain way.

Because ParameterInfo, PropertyInfo, and FieldInfo share little polymorphic behaviour, it's time to pull out Albedo, which was created for situations like this. Here's a reusable convention which can check any parameter, property, or field for a given name suffix:

    type TextEndsWithConvention(value, found) =
        inherit ReflectionVisitor<bool>()

        let proceed x =
            TextEndsWithConvention (value, x || found) :> IReflectionVisitor<bool>

        let isMatch t (name : string) =
            t = typeof<string>
            && name.EndsWith(value, StringComparison.OrdinalIgnoreCase)

        override this.Value = found

        override this.Visit (pie : ParameterInfoElement) =
            let pi = pie.ParameterInfo
            isMatch pi.ParameterType pi.Name |> proceed

        override this.Visit (pie : PropertyInfoElement) =
            let pi = pie.PropertyInfo
            isMatch pi.PropertyType pi.Name |> proceed

        override this.Visit (fie : FieldInfoElement) =
            let fi = fie.FieldInfo
            isMatch fi.FieldType fi.Name |> proceed

        static member Matches value request =
            let refraction =
                CompositeReflectionElementRefraction<obj>(
                    [|
                        ParameterInfoElementRefraction<obj>() :> IReflectionElementRefraction<obj>
                        PropertyInfoElementRefraction<obj>() :> IReflectionElementRefraction<obj>
                        FieldInfoElementRefraction<obj>() :> IReflectionElementRefraction<obj>
                    |])
            let r = refraction.Refract [request]
            r.Accept(TextEndsWithConvention(value, false)).Value

It simply aggregates a boolean value (found), based on the name and type of the various properties, fields, and parameters that come its way. If there's a match, found will be true; otherwise, it'll be false.

The date convention is now trivial:

    type DateStringCustomization() =
        let builder = {
            new ISpecimenBuilder with
                member this.Create(request, context) =
                    if request |> TextEndsWithConvention.Matches "date"
                    then box ((context.Resolve typeof<DateTimeOffset>).ToString())
                    else NoSpecimen request |> box }

        interface ICustomization with
            member this.Customize fixture = fixture.Customizations.Add builder

The ID convention is very similar:

    type IdStringCustomization() =
        let builder = {
            new ISpecimenBuilder with
                member this.Create(request, context) =
                    if request |> TextEndsWithConvention.Matches "id"
                    then box ((context.Resolve typeof<Guid> :?> Guid).ToString "N")
                    else NoSpecimen request |> box }

        interface ICustomization with
            member this.Customize fixture = fixture.Customizations.Add builder

With these conventions in place in my entire test suite, I can simply follow them and get correct values. What happens if I refactor one of my fields so that it no longer has the correct suffix? That's likely to break my tests, but that's a good thing, because it alerts me that I deviated from the conventions, and (inadvertently, I should hope) made the production code less consistent.

Single Writer Web Jobs on Azure

How to ensure a Single Writer in load-balanced Azure deployments

In my Functional Architecture with F# Pluralsight course, I describe how using the Actor model (F# Agents) can make a concurrent system much simpler to implement, because the Agent can ensure that the system only has a Single Writer. Having a Single Writer eliminates much complexity, because while the writer decides what to write (if at all), nothing changes. Multiple readers can still read data, but as long as the Single Writer can keep up with input, this is a much simpler way to deal with concurrency than the alternatives.

However, the problem is that while F# Agents work well on a single machine, they don't (currently) scale. This is particularly notable on Azure, because in order to get the guaranteed SLA, you'll need to deploy your application to two or more nodes. If you have an F# Agent running on both nodes, obviously you no longer have a Single Writer, and everything just becomes much more difficult. If only there was a way to ensure a Single Writer in a distributed environment...

Fortunately, it looks like the (in-preview) Azure feature Web Jobs (inadvertently) solves this major problem for us. Web Jobs come in three flavours:

- On demand

- Continuously running

- Scheduled

A continuously running Web Job turns out not to be a particularly useful option, because

"If your website runs on more than one instance, a continuously running task will run on all of your instances."

On the other hand

"On demand and scheduled tasks run on a single instance selected for load balancing by Microsoft Azure."

It sounds like Scheduled Web Jobs is just what we need!

There's just one concern that we need to address: what happens if a Scheduled Web Job takes so long to run that it hasn't completed when it's time to start it again? For example, what if you run a Scheduled Web Job every minute, but it sometimes takes 90 seconds to complete? If a new process starts executing while the first one is running, you would no longer have a Single Writer.

Reading the documentation, I couldn't find any information about how Azure handles this scenario, so I decided to perform some tests.

The Qaiain email micro-service proved to be a fine tool for the experiment. I slightly modified the code to wait for 90 seconds before exiting:

    [<EntryPoint>]
    let main argv =
        match queue |> AzureQ.dequeue with
        | Some(msg) ->
            msg.AsString |> Mail.deserializeMailData |> send
            queue.DeleteMessage msg
        | _ -> ()

        Async.Sleep 90000 |> Async.RunSynchronously

        match queue |> AzureQ.dequeue with
        | Some(msg) ->
            msg.AsString |> Mail.deserializeMailData |> send
            queue.DeleteMessage msg
        | _ -> ()

        0 // return an integer exit code

In addition to that, I also changed how the subject of the email that I would receive would look, in order to capture the process ID of the running application, as well as the time it sent the email:

    smtpMsg.Subject <-
        sprintf
            "Process ID: %i, Time: %O"
            (Process.GetCurrentProcess().Id)
            DateTimeOffset.Now

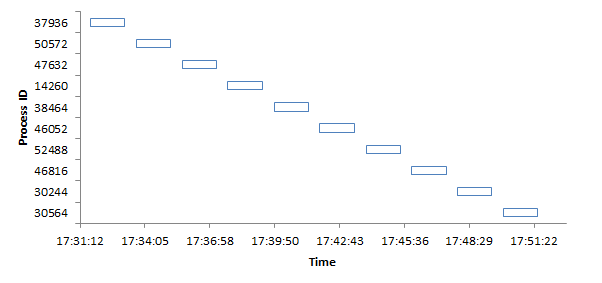

My hypothesis was that if Scheduled Web Jobs are well-behaved, a new job wouldn't start if an existing job was already running. Here are the results:

| Time | Process ID |
|---|---|
| 17:31:39 | 37936 |
| 17:33:10 | 37936 |
| 17:33:43 | 50572 |
| 17:35:14 | 50572 |
| 17:35:44 | 47632 |
| 17:37:15 | 47632 |
| 17:37:46 | 14260 |
| 17:39:17 | 14260 |
| 17:39:50 | 38464 |
| 17:41:21 | 38464 |
| 17:41:51 | 46052 |
| 17:43:22 | 46052 |
| 17:43:54 | 52488 |
| 17:45:25 | 52488 |
| 17:45:56 | 46816 |
| 17:47:27 | 46816 |
| 17:47:58 | 30244 |
| 17:49:29 | 30244 |
| 17:50:00 | 30564 |
| 17:51:31 | 30564 |

This looks great, but it's easier to see if I visualize it:

As you can see, processes do not overlap in time. This is a highly desirable result, because it seems to guarantee that we can have a Single Writer running in a distributed, load-balanced system.
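The same conclusion can be checked mechanically. The following is only a minimal sketch, not part of the original experiment: it copies the observations from the table, treats the first and last email from each process as an approximation of that process' lifetime (an assumption, since the two emails merely bracket the 90-second sleep), and looks for any process whose first email arrives before the previous process' last email. The names observations, lifetimes and overlaps are made up for this illustration.

```fsharp
open System

// Observations copied from the table: (time of email, process ID).
let observations =
    [ "17:31:39", 37936; "17:33:10", 37936
      "17:33:43", 50572; "17:35:14", 50572
      "17:35:44", 47632; "17:37:15", 47632
      "17:37:46", 14260; "17:39:17", 14260
      "17:39:50", 38464; "17:41:21", 38464
      "17:41:51", 46052; "17:43:22", 46052
      "17:43:54", 52488; "17:45:25", 52488
      "17:45:56", 46816; "17:47:27", 46816
      "17:47:58", 30244; "17:49:29", 30244
      "17:50:00", 30564; "17:51:31", 30564 ]

// Approximate each process' lifetime by its first and last email,
// then order the processes by the time of their first email.
let lifetimes =
    observations
    |> List.map (fun (time, pid) -> pid, TimeSpan.Parse time)
    |> List.groupBy fst
    |> List.map (fun (pid, xs) ->
        let times = xs |> List.map snd
        pid, List.min times, List.max times)
    |> List.sortBy (fun (_, first, _) -> first)

// Two consecutive processes overlap if the next one sends its first
// email no later than the previous one sends its last email.
let overlaps =
    lifetimes
    |> List.pairwise
    |> List.filter (fun ((_, _, previousLast), (_, nextFirst, _)) ->
        nextFirst <= previousLast)

printfn "Overlapping processes: %i" (List.length overlaps)
```

With the data from the table, the list of overlaps is empty, which agrees with the observation above. It says nothing about what Azure guarantees; it only summarises this particular test run.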

Azure Web Jobs are currently in preview, so let's hope the Azure team preserve this functionality in the final version. If you care about this, please let the team know.

Composed assertions with Unquote

With F# and Unquote, you can write customized, composable assertions.

Yesterday, I wrote this unit test:

    [<Theory; UnitTestConventions>]
    let PostReturnsCorrectResult (sut : TasksController) (task : TaskRendition) =
        let result : IHttpActionResult = sut.Post task

        verify <@ result :? Results.StatusCodeResult @>
        verify <@ HttpStatusCode.Accepted = (result :?> Results.StatusCodeResult).StatusCode @>

For the record, here's the SUT:

    type TasksController() =
        inherit ApiController()
        member this.Post(task : TaskRendition) =
            this.StatusCode HttpStatusCode.Accepted :> IHttpActionResult

There's not much to look at yet, because at that time, I was just getting started, and as always, I was using Test-Driven Development. The TasksController class is an ASP.NET Web API 2 Controller. In this incarnation, it merely accepts an HTTP POST, ignores the input, and returns 202 (Accepted).

The unit test uses AutoFixture.Xunit to create an instance of the SUT and a DTO record, but that's not important in this context. It also uses Unquote for assertions, although I've aliased the test function to verify. Although Unquote is an extremely versatile assertion module, I wasn't happy with the assertions I wrote.

What's the problem? #

The problem is the duplication of logic. First, it verifies that result is, indeed, an instance of StatusCodeResult. Second, if that's the case, it casts result to StatusCodeResult in order to access its concrete StatusCode property; it feels like I'm almost doing the same thing twice.

You may say that this isn't a big deal in a test like this, but in my experience, this is a smell. The example looks innocuous, but soon, I'll find myself writing slightly more complicated assertions, where I need to type check and cast more than once. This can rapidly lead to Assertion Roulette.

The xUnit.net approach #

For a minute there, I caught myself missing xUnit.net's Assert.IsAssignableFrom<T> method, because it returns a value of type T if the conversion is possible. That would have enabled me to write something like:

    let scr = Assert.IsAssignableFrom<Results.StatusCodeResult> result
    Assert.Equal(HttpStatusCode.Accepted, scr.StatusCode)

It seems a little nicer, although in my experience, this quickly turns to spaghetti, too. Still, I found myself wondering if I could do something similar with Unquote.

A design digression #

At this point, you are welcome to pull GOOS at me and quote: listen to your tests! If the tests are difficult to write, you should reconsider your design; I agree, but I can't change the API of ASP.NET Web API. In Web API 1, my preferred return type for Controller actions was HttpResponseMessage, but it was actually a bit inconvenient to work with in unit tests. Web API 2 introduces various IHttpActionResult implementations that are easier to unit test. Perhaps this could be better, but it seems like a step in the right direction.

In any case, I can't change the API, so coming up with a better way to express the above assertion is warranted.

Composed assertions #

To overcome this little obstacle, I wrote this function:

    let convertsTo<'a> candidate =
        match box candidate with
        | :? 'a as converted -> Some converted
        | _ -> None

(You have to love a language that lets you write match box! There's also a hint of such nice over Some converted...)

The convertsTo function takes any object as input, and returns an Option containing the converted value, if the conversion is possible; otherwise, it returns None. In other words, the signature of the convertsTo function is obj -> 'a option.
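To make that behaviour concrete, here is a small, self-contained sketch that exercises the function with plain .NET values instead of Web API types. The candidate parameter is explicitly annotated as obj here, to match the signature stated above, and the value names are invented for the illustration.

```fsharp
// Same idea as the function above; the parameter is annotated as obj
// so that the signature is unambiguously obj -> 'a option.
let convertsTo<'a> (candidate : obj) =
    match box candidate with
    | :? 'a as converted -> Some converted
    | _ -> None

// A boxed string converts to string...
let stringAsString = convertsTo<string> (box "foo")

// ...but not to int.
let stringAsInt = convertsTo<int> (box "foo")

// A boxed int converts to int.
let intAsInt = convertsTo<int> (box 42)

printfn "%A" stringAsString // Some "foo"
printfn "%A" stringAsInt    // None
printfn "%A" intAsInt       // Some 42
```

Because the result is an ordinary option value, everything in the Option module is available for the next step of an assertion.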

This enables me to write the following Unquote assertion:

    [<Theory; UnitTestConventions>]
    let PostReturnsCorrectResult (sut : TasksController) (task : TaskRendition) =
        let result : IHttpActionResult = sut.Post task

        verify <@ result
                  |> convertsTo<Results.StatusCodeResult>
                  |> Option.map (fun x -> x.StatusCode)
                  |> Option.exists ((=) HttpStatusCode.Accepted) @>

While this looks more verbose than my two original assertions, this approach is more composable.
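One way to see the composability is that the pipeline can be lifted into a named, reusable function. The sketch below is an illustration only: StatusResult and OkResult are hypothetical stand-ins for the Web API result types, the status codes are plain integers, and hasStatusCode is a made-up helper name. The candidate parameter of convertsTo is annotated as obj to keep the example unambiguous.

```fsharp
let convertsTo<'a> (candidate : obj) =
    match box candidate with
    | :? 'a as converted -> Some converted
    | _ -> None

// Hypothetical stand-ins for StatusCodeResult and OkResult.
type StatusResult = { StatusCode : int }
type OkResult = OkResult

// The three pipeline steps from the test, packaged as one predicate:
// type check, projection, and comparison.
let hasStatusCode expected candidate =
    candidate
    |> convertsTo<StatusResult>
    |> Option.map (fun x -> x.StatusCode)
    |> Option.exists ((=) expected)

let accepted = hasStatusCode 202 (box { StatusCode = 202 })
let wrongCode = hasStatusCode 202 (box { StatusCode = 200 })
let wrongType = hasStatusCode 202 (box OkResult)

printfn "%b %b %b" accepted wrongCode wrongType // true false false
```

A wrong type and a wrong status code both end up as false, without any separate type check or cast in the calling code.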

The really beautiful part of this is that Unquote can still tell me what goes wrong, if the test doesn't pass. As an example, if I change the SUT to:

    type TasksController() =
        inherit ApiController()
        member this.Post(task : TaskRendition) =
            this.Ok() :> IHttpActionResult

The assertion message is:

    System.Web.Http.Results.OkResult |> Dsl.convertsTo |> Option.map (fun x -> x.StatusCode) |> Option.exists ((=) Accepted)
    None |> Option.map (fun x -> x.StatusCode) |> Option.exists ((=) Accepted)
    None |> Option.exists ((=) Accepted)
    false

Notice how, in a series of reductions, Unquote breaks down for me exactly what went wrong. The top line is my original expression. The next line shows me the result of evaluating System.Web.Http.Results.OkResult |> Dsl.convertsTo; the result is None. Already at this point, it should be quite evident what the problem is, but in the next line again, it shows the result of evaluating None |> Option.map (fun x -> x.StatusCode); again, the result is None. Finally, it shows the result of evaluating None |> Option.exists ((=) Accepted), which is false.

Here's another example. Assume that I change the SUT to this:

    type TasksController() =
        inherit ApiController()
        member this.Post(task : TaskRendition) =
            this.StatusCode HttpStatusCode.OK :> IHttpActionResult

In this example, instead of returning the wrong implementation of IHttpActionResult, the SUT does return a StatusCodeResult instance, but with the wrong status code. Unquote is still very helpful:

    System.Web.Http.Results.StatusCodeResult |> Dsl.convertsTo |> Option.map (fun x -> x.StatusCode) |> Option.exists ((=) Accepted)
    Some System.Web.Http.Results.StatusCodeResult |> Option.map (fun x -> x.StatusCode) |> Option.exists ((=) Accepted)
    Some OK |> Option.exists ((=) Accepted)
    false

Notice that it still uses a series of reductions to show how it arrives at its conclusion. Again, the first line is the original expression. The next line shows the result of evaluating System.Web.Http.Results.StatusCodeResult |> Dsl.convertsTo, which is Some System.Web.Http.Results.StatusCodeResult. So far so good; this is as required. The third line shows the result of evaluating Some System.Web.Http.Results.StatusCodeResult |> Option.map (fun x -> x.StatusCode), which is Some OK. Still good. Finally, it shows the result of evaluating Some OK |> Option.exists ((=) Accepted), which is false. The value in the option was HttpStatusCode.OK, but should have been HttpStatusCode.Accepted.

Summary #

Unquote is a delight to work with. As the project site explains, it's not an API or a DSL. It just evaluates and reports on the expressions you write. If you already know F#, you already know how to use Unquote, and you can write your assertion expressions as expressive and complex as you want.

Exude

Announcing Exude, an extension to xUnit.net providing test cases as first-class, programmatic citizens.

Sometimes, when writing Parameterized Tests with xUnit.net, you need to provide parameters that don't easily lend themselves to being defined as constants in [InlineData] attributes.

In Grean, we've let ourselves be inspired by Mauricio Scheffer's blog post First-class tests in MbUnit, but ported the concept to xUnit.net and released it as open source.

It's called Exude and is available on GitHub and on NuGet.

Here's a small example:

    [FirstClassTests]
    public static IEnumerable<ITestCase> YieldFirstClassTests()
    {
        yield return new TestCase(_ => Assert.Equal(1, 1));
        yield return new TestCase(_ => Assert.Equal(2, 2));
        yield return new TestCase(_ => Assert.Equal(3, 3));
    }

More examples and information are available on the project site.

Comments

1. A framework generally must have some sort of initializer, particularly if it must do something like add route values to MVC (which must be done during a particular point in the application lifecycle). This startup code must be placed in the composition root of the application. Considering that the framework should have no knowledge of the composition root of the application, how best can this requirement be met? The only thing I have come up with is to add a static method that must be in the application startup code and using WebActivator to get it running.

2. Sort of related to the first issue, how would it be possible to address the extension point where abstract factories can be injected without providing a static method? I am considering expanding the static method from #1 to include an overload that accepts an Action<IConfiguration> as a parameter. The developer can then use that overload to create a method Configure(IConfiguration configuration) in their application to set the various abstract factories (in the IConfiguration instance, of course). The IConfiguration interface would contain well named members to set specific factories, so it is easy to discover what factory types can be provided. Could this idea be improved upon?

3. Considering that my framework relies on the .NET garbage collector to dispose of objects that were created by a given abstract factory, what pattern can I adapt to ensure the framework always calls Release() at the right time? A concrete example would seem to be in order.

Shad, thank you for writing. From your questions it's a bit unclear to me whether you're writing a framework or a library. Although you write framework, your questions sound like it's a library... or at least, if you're writing a framework, it sounds like you're writing a sub-framework for another framework (MVC). Is that correct?

Re: 1. It's true that a framework needs some sort of initializer. In the ideal world, it would look something like new MyFrameworkRunner().Run();, and you would put this single line of code in the entry point of your application (its Main method). Unfortunately, ASP.NET doesn't work that way, so we have to work with the cards we're dealt. Here, the entry point is Application_Start, so if you need to initialise something, this is where you do it.

The initialisation method can be a static or instance method.

Re: 2. That sounds reasonable, but it depends upon where your framework stores the custom configuration. If you add a method overload to a static method, it almost indicates to me that the framework's configuration is stored in shared state, which is never attractive. An alternative is to utilise the Dependency Inversion Principle, and instead inject any custom configuration into the root of the framework itself:

    new MyFrameworkRunner(someCustomConfiguration).Run();

Re: 3. A framework is responsible for the lifetime of the objects it creates. If it creates objects, it must also provide an extensibility point for decommissioning them after use. This is the reason I strongly recommend (in this very article) that an Abstract Factory for a framework must always have a Release method in addition to the Create method.

A concrete example is difficult to provide when the question is abstract...

If you want to take a look, the framework I am referring to is called MvcSiteMapProvider. I would definitely categorize it as a sub-framework of MVC because it can rely on the host application to provide service instances (although it doesn't have to). It has a static entry point to launch its composition root (primarily because WebActivator requires there to be a static method, and WebActivator can launch the application without the need for the NuGet package to modify the Global.asax file directly), but the inner workings rely (almost) entirely on instances and constructor injection. There is still some refactoring to be done on the HTML helpers to put all of the logic into replaceable instances, which I plan to do in a future major version.

Since it is about 90% of the way there already, my plan is to modify the internal poor-man's DI container to accept injected factory instances to provide the alternate implementations. A set of default factories will be created during initialization, and then it will pass these instances (through the IConfiguration variable) out to the host application where it can replace or wrap the factories. After the host does what it needs to, the services will be wired up in the poor man's DI container and then it's off to the races. I think this can be done without dropping support for the existing Conforming Container, meaning I don't need to wait for a major release to implement it.

Anyway, you have adequately answered my 2 questions about initialization and I think I am now on the right track. You also gave me some food for thought as to how to accomplish this without making it static (although ultimately some wrapper method will need to be static in order to make it work with WebActivator).