Single Writer Web Jobs on Azure by Mark Seemann

How to ensure a Single Writer in load-balanced Azure deployments

In my Functional Architecture with F# Pluralsight course, I describe how using the Actor model (F# Agents) can make a concurrent system much simpler to implement, because the Agent can ensure that the system only has a Single Writer. Having a Single Writer eliminates much complexity, because while the writer decides what to write (if at all), nothing changes. Multiple readers can still read data, but as long as the Single Writer can keep up with input, this is a much simpler way to deal with concurrency than the alternatives.
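As a minimal sketch of the Single Writer idea on one machine, an F# Agent (`MailboxProcessor`) can own the state and process messages one at a time. The `CounterMessage` type and the `counter` agent are hypothetical names invented for this illustration; they are not taken from the course.

```fsharp
// Hypothetical message type, for illustration only.
type CounterMessage =
    | Increment of int
    | Get of AsyncReplyChannel<int>

// The agent owns the state. Messages are dequeued one at a time,
// so this loop is the only place where the state is ever "written".
let counter =
    MailboxProcessor.Start(fun inbox ->
        let rec loop total =
            async {
                let! msg = inbox.Receive()
                match msg with
                | Increment n -> return! loop (total + n)
                | Get reply ->
                    reply.Reply total
                    return! loop total
            }
        loop 0)

// Many concurrent callers may post; the agent still applies
// their messages sequentially.
[ 1 .. 100 ]
|> List.map (fun i -> async { counter.Post(Increment i) })
|> Async.Parallel
|> Async.RunSynchronously
|> ignore

// Posted after all increments, so it is answered after all of them.
let total = counter.PostAndReply Get
printfn "Total: %i" total
```

Callers never touch the state directly; they only post messages, which is what makes the agent the sole writer.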

However, the problem is that while F# Agents work well on a single machine, they don't (currently) scale. This is particularly notable on Azure, because in order to get the guaranteed SLA, you'll need to deploy your application to two or more nodes. If you have an F# Agent running on both nodes, obviously you no longer have a Single Writer, and everything just becomes much more difficult. If only there was a way to ensure a Single Writer in a distributed environment...

Fortunately, it looks like the (in-preview) Azure feature Web Jobs (inadvertently) solves this major problem for us. Web Jobs come in three flavours:

- On demand

- Continuously running

- Scheduled

The Continuously running option turns out not to be particularly useful for this purpose, because

"If your website runs on more than one instance, a continuously running task will run on all of your instances."

On the other hand

"On demand and scheduled tasks run on a single instance selected for load balancing by Microsoft Azure."

It sounds like Scheduled Web Jobs is just what we need!

There's just one concern that we need to address: what happens if a Scheduled Web Job takes so long to run that it hasn't completed when it's time to start it again? For example, what if you run a Scheduled Web Job every minute, but it sometimes takes 90 seconds to complete? If a new process starts executing while the first one is still running, you would no longer have a Single Writer.

Reading the documentation, I couldn't find any information about how Azure handles this scenario, so I decided to perform some tests.

The Qaiain email micro-service proved to be a fine tool for the experiment. I slightly modified the code to wait for 90 seconds before exiting:

    [<EntryPoint>]
    let main argv =
        match queue |> AzureQ.dequeue with
        | Some(msg) ->
            msg.AsString |> Mail.deserializeMailData |> send
            queue.DeleteMessage msg
        | _ -> ()

        Async.Sleep 90000 |> Async.RunSynchronously

        match queue |> AzureQ.dequeue with
        | Some(msg) ->
            msg.AsString |> Mail.deserializeMailData |> send
            queue.DeleteMessage msg
        | _ -> ()

        0 // return an integer exit code

In addition to that, I also changed how the subject of the email that I would receive would look, in order to capture the process ID of the running application, as well as the time it sent the email:

    smtpMsg.Subject <-
        sprintf
            "Process ID: %i, Time: %O"
            (Process.GetCurrentProcess().Id)
            DateTimeOffset.Now

My hypothesis was that if Scheduled Web Jobs are well-behaved, a new job wouldn't start if an existing job was already running. Here are the results:

| Time | Process ID |
|---|---|
| 17:31:39 | 37936 |
| 17:33:10 | 37936 |
| 17:33:43 | 50572 |
| 17:35:14 | 50572 |
| 17:35:44 | 47632 |
| 17:37:15 | 47632 |
| 17:37:46 | 14260 |
| 17:39:17 | 14260 |
| 17:39:50 | 38464 |
| 17:41:21 | 38464 |
| 17:41:51 | 46052 |
| 17:43:22 | 46052 |
| 17:43:54 | 52488 |
| 17:45:25 | 52488 |
| 17:45:56 | 46816 |
| 17:47:27 | 46816 |
| 17:47:58 | 30244 |
| 17:49:29 | 30244 |
| 17:50:00 | 30564 |
| 17:51:31 | 30564 |

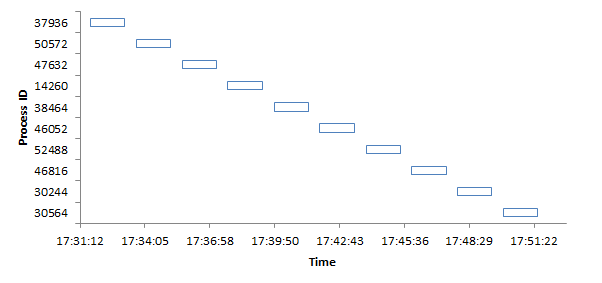

This looks great, but it's easier to see if I visualize it:

As you can see, processes do not overlap in time. This is a highly desirable result, because it seems to guarantee that we can have a Single Writer running in a distributed, load-balanced system.
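The same conclusion can be checked directly against the timestamps in the table. The following is only a sketch: it treats the first and second email from each process as a rough stand-in for when that process started and finished, which is an assumption, and the names `runs` and `overlaps` are made up for this example.

```fsharp
open System

// (first email, second email, process ID), copied from the table.
let runs =
    [ ("17:31:39", "17:33:10", 37936)
      ("17:33:43", "17:35:14", 50572)
      ("17:35:44", "17:37:15", 47632)
      ("17:37:46", "17:39:17", 14260)
      ("17:39:50", "17:41:21", 38464)
      ("17:41:51", "17:43:22", 46052)
      ("17:43:54", "17:45:25", 52488)
      ("17:45:56", "17:47:27", 46816)
      ("17:47:58", "17:49:29", 30244)
      ("17:50:00", "17:51:31", 30564) ]
    |> List.map (fun (first, second, pid) ->
        TimeSpan.Parse first, TimeSpan.Parse second, pid)

// A run overlaps its successor if its last email is later than
// the successor's first email.
let overlaps =
    runs
    |> List.pairwise
    |> List.filter (fun ((_, prevEnd, _), (nextStart, _, _)) ->
        prevEnd > nextStart)

printfn "Overlapping runs: %i" (List.length overlaps)
```

For the data above, every process sends its second email before the next process sends its first, so the list of overlaps is empty.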

Azure Web Jobs are currently in preview, so let's hope the Azure team preserve this functionality in the final version. If you care about this, please let the team know.