Testing Container Configurations by Mark Seemann

Here's a question I often get:

“Should I test my DI Container configuration?”

The motivation for asking mostly seems to be that people want to know whether or not their applications are correctly wired. That makes sense.

A related question I also often get is whether or not a particular container has a self-test feature. In this post I'll attempt to answer both questions.

Container Self-testing

Some DI Containers have a method you can invoke to make them perform a consistency check on themselves. For example, StructureMap has the AssertConfigurationIsValid method that, according to its documentation, does “a full environment test of the configuration of [the] container.” It will “try to create every configured instance [...]”.

Calling the method is really easy:

```csharp
container.AssertConfigurationIsValid();
```

Such a self-test can often be an expensive operation (not only for StructureMap, but in general) because it's basically attempting to create an instance of each and every Service registered in the container. If the configuration is large, it's going to take some time, but it's still going to be faster than performing a manual test of the application.

Two questions remain: Is it worth invoking this method? Why don't all containers have such a method?

The quick answer is that such a method is close to worthless, which also explains why many containers don't include one.

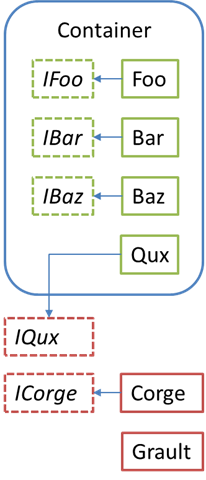

To understand the answer, consider the set of all components contained in the container in this figure:

The container contains the set of components IFoo, IBar, IBaz, Foo, Bar, Baz, and Qux, so a self-test will try to create a single instance of each of these seven types. If all seven instances can be created, the test succeeds.

All this accomplishes is verifying that the configuration is internally consistent. Even so, an application could require instances of the ICorge, Corge, or Grault types, which are completely external to the configuration, in which case resolution would fail.

Even more subtly, resolution would also fail whenever the container is queried for an instance of IQux, since this interface isn't part of the configuration, even though it's related to the concrete Qux type which is registered in the container. A self-test only verifies that the concrete Qux class can be resolved, but it never attempts to create an instance of the IQux interface.
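To make the gap concrete, here is a minimal sketch in C#. `ToyContainer` is a hypothetical, deliberately tiny container written only for this illustration; it is not StructureMap, and its `AssertConfigurationIsValid` merely mimics the idea of a self-test by resolving every registered type once. The seven registrations mirror the figure, and `Qux` implements an `IQux` interface that is never registered.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Types from the figure. Qux implements IQux, but only Qux itself is registered.
public interface IFoo { }
public interface IBar { }
public interface IBaz { }
public interface IQux { }
public class Baz : IBaz { }
public class Bar : IBar { public Bar(IBaz baz) { } }
public class Foo : IFoo { public Foo(IBar bar) { } }
public class Qux : IQux { }

// Hypothetical toy container: maps a requested type to a concrete type and
// auto-wires constructor parameters by resolving them recursively.
public class ToyContainer
{
    private readonly Dictionary<Type, Type> registrations = new Dictionary<Type, Type>();

    public void Register<TService, TImpl>() where TImpl : TService =>
        registrations[typeof(TService)] = typeof(TImpl);

    public void Register<TImpl>() => registrations[typeof(TImpl)] = typeof(TImpl);

    public object Resolve(Type service)
    {
        if (!registrations.TryGetValue(service, out var impl))
            throw new InvalidOperationException($"No registration for {service.Name}.");
        var ctor = impl.GetConstructors().Single();
        var args = ctor.GetParameters().Select(p => Resolve(p.ParameterType)).ToArray();
        return ctor.Invoke(args);
    }

    // "Self-test": create one instance of every registered type, and nothing else.
    public void AssertConfigurationIsValid()
    {
        foreach (var service in registrations.Keys)
            Resolve(service);
    }

    // The configuration from the figure: IFoo, IBar, IBaz, Foo, Bar, Baz and Qux.
    public static ToyContainer FromFigure()
    {
        var c = new ToyContainer();
        c.Register<IFoo, Foo>();
        c.Register<IBar, Bar>();
        c.Register<IBaz, Baz>();
        c.Register<Foo>();
        c.Register<Bar>();
        c.Register<Baz>();
        c.Register<Qux>();
        return c;
    }
}
```

With this configuration, `AssertConfigurationIsValid()` completes without error, yet `Resolve(typeof(IQux))` throws, because the self-test only ever asks for types that are already registered.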

In short, the fact that a container's configuration is internally consistent doesn't guarantee that all services required by an application can be served.

Still, you may think that at least a self-test can constitute an early warning system: if the self-test fails, surely it must mean that the configuration is invalid? Unfortunately, that's not true either.

If a container is being configured using Auto-registration/Convention over Configuration to scan one or more assemblies and register certain types contained within, chances are that ‘too many’ types will end up being registered - particularly if one or more of these assemblies are reusable libraries (as opposed to application-specific assemblies). Often, the number of redundant types added is negligible, but they may make the configuration internally inconsistent. However, if the inconsistency only affects the redundant types, it doesn't matter. The container will still be able to resolve everything the current application requires.
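The opposite failure can be sketched as well. Below, a hypothetical `ScanningContainer` registers every concrete class against each interface it implements, a much simplified stand-in for Auto-registration. The list of types plays the role of the scanned assemblies: `SmtpMailer` represents a redundant type from a reusable library whose `ISmtpSettings` dependency is never registered. All names are invented for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Application type that the current application actually needs.
public interface IGreeter { string Greet(string name); }
public class Greeter : IGreeter { public string Greet(string name) => "Hello, " + name; }

// Redundant library type picked up by the scan; ISmtpSettings is never registered.
public interface ISmtpSettings { }
public interface IMailer { }
public class SmtpMailer : IMailer { public SmtpMailer(ISmtpSettings settings) { } }

public class ScanningContainer
{
    private readonly Dictionary<Type, Type> map = new Dictionary<Type, Type>();

    // Convention: register every concrete class against each interface it implements.
    public void RegisterByConvention(IEnumerable<Type> types)
    {
        foreach (var impl in types.Where(t => t.IsClass && !t.IsAbstract))
            foreach (var service in impl.GetInterfaces())
                map[service] = impl;
    }

    public object Resolve(Type service)
    {
        if (!map.TryGetValue(service, out var impl))
            throw new InvalidOperationException($"No registration for {service.Name}.");
        var ctor = impl.GetConstructors().Single();
        return ctor.Invoke(ctor.GetParameters().Select(p => Resolve(p.ParameterType)).ToArray());
    }

    // Self-test in the same spirit: try every registered service once.
    public bool SelfTestPasses()
    {
        try
        {
            foreach (var service in map.Keys) Resolve(service);
            return true;
        }
        catch (InvalidOperationException)
        {
            return false;
        }
    }
}
```

Here the self-test reports failure because of `SmtpMailer`, even though `IGreeter`, the only service this application asks for, resolves and works.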

Thus, a container self-test method is worthless.

Then how can the container configuration be tested?

Explicit Testing of Container Configuration

Since a container self-test doesn't achieve the desired goal, how can we ensure that an application can be composed correctly?

One option is to write an automated integration test (not a unit test) for each service that the application requires. Still, if done manually, you run the risk of forgetting to write a test for a specific service. A better option is to come up with a convention so that you can identify all the services required by a specific application, and then write a convention-based test to verify that the container can resolve them all.
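A convention-based test could look roughly like the following sketch. It assumes, purely for illustration, that the application's roots are the concrete classes whose names end in `Controller`; in a real test suite the candidate types would typically come from something like `typeof(SomeType).Assembly.GetTypes()`, and `resolve` would delegate to the real container. `CompositionConventionTest` and the sample types are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Sample roots, invented for this illustration.
public interface IOrderRepository { }
public class HomeController { }
public class OrderController { public OrderController(IOrderRepository repository) { } }

public static class CompositionConventionTest
{
    // Hypothetical convention: every concrete class whose name ends in
    // "Controller" is a root the application will ask the container for.
    public static IEnumerable<Type> FindRequiredRoots(IEnumerable<Type> candidateTypes) =>
        candidateTypes.Where(t => t.IsClass && !t.IsAbstract
                                  && t.Name.EndsWith("Controller", StringComparison.Ordinal));

    // Tries to resolve every root. An empty result means each root *can* be
    // composed; it says nothing about whether the composition is correct.
    public static List<string> FindUnresolvableRoots(
        IEnumerable<Type> candidateTypes, Func<Type, object> resolve)
    {
        var failures = new List<string>();
        foreach (var root in FindRequiredRoots(candidateTypes))
        {
            try
            {
                if (resolve(root) == null) failures.Add($"{root.Name}: resolved to null");
            }
            catch (Exception e)
            {
                failures.Add($"{root.Name}: {e.Message}");
            }
        }
        return failures;
    }
}
```

An integration test would then simply assert that the returned list is empty; listing every failure, rather than stopping at the first, makes a red test easier to diagnose.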

Will this guarantee that the application can be correctly composed?

No, it only guarantees that it can be composed, not that this composition is correct.

Even when a composed instance can be created for each and every service, many things may still be wrong:

- Composition is just plain wrong:
  - Decorators may be missing
  - Decorators may have been added in the wrong order
  - The wrong services are injected into consumers (this is more likely to happen when you follow the Reused Abstractions Principle, since there will be multiple concrete implementations of each interface)

- Configuration values such as connection strings are incorrect: for example, a connection string may be supplied to a constructor but not contain the correct values.

- Even if everything is correctly composed, the run-time environment may prevent the application from working. As an example, even if an injected connection string is correct, there may not be any connection to the database due to network or security misconfiguration.
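The point about Decorator order is easy to underestimate, so here is a small sketch of two compositions that both build without error but behave differently. The report service and its Decorators are hypothetical; the idea is that a caching Decorator placed outside an authorizing Decorator can hand a cached, privileged result to an unauthorized user, which no resolution test will detect.

```csharp
using System;

public interface IReportService { string GetReport(string user); }

public class ReportService : IReportService
{
    public string GetReport(string user) => "secret report";
}

// Decorator: only lets "admin" through to the inner service.
public class AuthorizingReportService : IReportService
{
    private readonly IReportService inner;
    public AuthorizingReportService(IReportService inner) { this.inner = inner; }
    public string GetReport(string user) =>
        user == "admin" ? inner.GetReport(user) : "access denied";
}

// Decorator: caches the first result it sees, regardless of the user.
public class CachingReportService : IReportService
{
    private readonly IReportService inner;
    private string cached;
    public CachingReportService(IReportService inner) { this.inner = inner; }
    public string GetReport(string user) => cached ?? (cached = inner.GetReport(user));
}

public static class ReportComposition
{
    // Authorization is checked before the cache is consulted.
    public static IReportService Correct() =>
        new AuthorizingReportService(new CachingReportService(new ReportService()));

    // Same components, Decorators in the wrong order.
    public static IReportService WrongOrder() =>
        new CachingReportService(new AuthorizingReportService(new ReportService()));
}
```

Both compositions are built from the same types and neither throws, so only a test that exercises behavior reveals that `WrongOrder()` returns the admin's cached report to a guest.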

In short, a Subcutaneous Test or full System Test is the only way to verify that everything is correctly wired. However, if you have good test coverage at the unit test level, a series of Smoke Tests is all that you need at the System Test level because in general you have good reason to believe that all behavior is correct. The remaining question is whether all this correct behavior can be correctly connected, and that tends to be an all-or-nothing proposition.

Conclusion

While it would be possible to write a set of convention-based integration tests to verify the configuration of a DI Container, the return on investment is too low, since it doesn't remove the need for a set of Smoke Tests at the System Test level.

Comments

A failing smoke test won't always tell you exactly what went wrong (while that's a failing of the test, it's not always that easy to fix). Rather than investigating, I'd prefer to have a failing integration test that means the smoke test won't even be run.

I find the most value comes from tests that try to resolve the root of the object graph from the bootstrapped container, i.e. anything I (or a framework) explicitly try to resolve at runtime.

Their being green doesn't necessarily mean the application is fine, but their being red means it's definitely broken. It is a duplication of testing, but so are the smoke tests and some of the unit tests (hopefully!).

The correct wiring is already tested by the DI Container itself. An error-prone configuration will become obvious in the first developer test, or at least in the first user test.

So, is this kind of test overhead, useful or even necessary?

I probably wouldn't do these kinds of tests.

Would it be a worthwhile strategy to unit test my container's configuration by mocking the container's resolver? I'd like to be able to run my registration on a container, then assert that the mocked resolver received the correct "Resolve" messages in the correct order. Currently I'm validating this with an integration test, but I was thinking that this would be much simpler, if my container supports it.

I'll go back and think a bit more about how I can test the resulting behaviour instead of the implementation.

Nice post! We have a set of automated developer acceptance tests which start the system in a machine.specifications test, execute business commands, shut down the system and make business assertions on the external subsystems, which are stubbed away depending on the feature under test. With that we have an implicit container test: the system boots and behaves as expected. If someone adds new components for that feature which are not properly registered, the tests will fail.

Daniel

While I agree with the principle, one question came to mind regarding the first part. I don't understand why resolving IQux is an issue: even at runtime, isn't it neither required nor registered?

Thanks

Thomas

So, if an application code base requires IQux, the application is going to fail even when the container self-test succeeds.

You have made a very basic and primitive argument to sell a complex but feasible process as pointless:

and without a working example of a better way you explain why you are right. I am an avid reader of your posts and often reference them but IMO this particular argument is not well reasoned.

Your opening argument explains why you may have an issue when using the Service Locator anti-pattern:

Assertions such as the following would ideally be specified in a set of automated tests regardless of the method of composition


And I fail to see how Pure DI would make the following into lesser issues


My response was prompted by a statement you made on Stack Overflow. You are most highly regarded when it comes to .NET and DI, and I feel statements such as "Some people hope that you can ask a DI Container to self-diagnose, but you can't." are very dangerous when they are out of date.

Simple Injector will diagnose a number of issues beyond "do I have a registration for X". I'm not claiming that these tests alone are foolproof validation of your configuration, but they are a set of built-in tests for issues that can occur in any configuration (including a Pure DI configuration), and these combined tests are far from worthless ...

IDisposable

Your claim that "such a method is close to worthless" may be true for the majority of the available .NET DI Containers, but it does not take Simple Injector's diagnostic services into consideration.