Against consistency by Mark Seemann

A one-sided argument against imposing a uniform coding style.

I want to credit Nat Pryce for planting the seed for the following line of thinking at GOTO Copenhagen 2012. I'd also like to relieve him of any responsibility for what follows. The blame is all mine.

I'd also like to point out that I'm not categorically against consistency in code. There are plenty of good arguments for having a consistent coding style, but as regular readers may have observed, I have a contrarian streak to my personality. If you're only aware of one side of an argument, I believe that you're unequipped to make informed decisions. Thus, I make the following case against imposing coding styles, not because I'm dead-set opposed to consistent code, but because I believe you should be aware of the disadvantages.

TL;DR #

In this essay, I use the term coding style to indicate a set of rules that governs how code should be formatted. This may include rules about where you put brackets, whether to use tabs or spaces, which naming conventions to use, maximum line width, in C# whether you should use the var keyword or explicit variable declaration, and so on.

As already stated, I can appreciate consistency in code as much as the next programmer. I've seen more than one code base, however, where a formal coding style contributed to ossification.

I've consulted a few development organisations with an eye to improving processes and code quality. Sometimes my suggestions are met with hesitation. When I investigate what causes developers to resist, it turns out that my advice goes against 'how things are done around here.' It might even go against the company's formal coding style guidelines.

Coding styles may impede progress.

Below, I'll discuss a few examples.

Class fields #

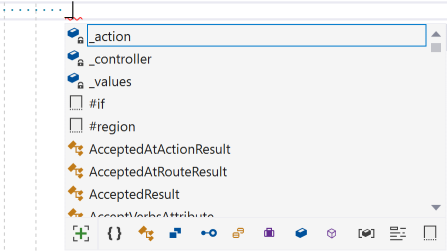

A typical example of a coding style regulates naming of class fields. While it seems to be on retreat now, at one time many C# developers would name class fields with a leading underscore:

private readonly string? _action;
private readonly string? _controller;
private readonly object? _values;

I never liked that naming convention because it meant that I always had to type an underscore and then at least one other letter before I could make good use of my IDE. For example, in order to take advantage of auto-completion when using the _action field, I'd have to type _a, instead of just a.

Yes, I know that typing isn't a bottleneck, but it still annoyed me because it seemed redundant.

A variation of this coding style is to mandate an m_ prefix, which only exacerbates the problem.

Many years ago, I came across a 'solution': Put the underscore after the field name, instead of in front of it:

private readonly string? action_;
private readonly string? controller_;
private readonly object? values_;

Problem solved - I thought for some years.

Then someone pointed out to me that if distinguishing a class field from a local variable is the goal, you can use the this qualifier. That made sense to me. Why invent some controversial naming rule when you can use a language keyword instead?

So, for years, I'd always interact with class fields like this.action, this.controller, and so on.

Then someone else pointed out to me that this ostensible need to be able to distinguish class fields from local variables, or static from instance fields, was really a symptom of either poor naming or too big classes. While that hurt a bit, I couldn't really defend against the argument.

This was all many years ago. These days, I name class fields like I name variables, and I don't qualify access.
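To make that end state concrete, here's a minimal sketch of a small immutable class whose fields are named like ordinary variables. The RouteBuilder class and all of its members are hypothetical, invented for this illustration. Note that the constructor still needs the this qualifier, because its parameters shadow the fields; everywhere else, access is unqualified.

```csharp
#nullable enable

// Hypothetical class for illustration only; not taken from a real code base.
public sealed class RouteBuilder
{
    // Fields named like ordinary variables: no underscore, no m_ prefix.
    private readonly string? action;
    private readonly string? controller;

    public RouteBuilder()
    {
    }

    private RouteBuilder(string? action, string? controller)
    {
        // The parameters shadow the fields here, so this is the one
        // place where qualification is required.
        this.action = action;
        this.controller = controller;
    }

    public RouteBuilder WithAction(string newAction)
    {
        // Unqualified field access. In a class this small, there is
        // little else that 'controller' could be confused with.
        return new RouteBuilder(newAction, controller);
    }

    public RouteBuilder WithController(string newController)
    {
        return new RouteBuilder(action, newController);
    }

    public override string ToString()
    {
        return $"{controller}/{action}";
    }
}
```

If unqualified names like these become hard to follow, that may be a hint that the class has grown too big, which is the argument made above.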

The point of this little story is to highlight how you can institute a naming convention with the best of intentions. As experience accumulates, however, you may realise that you've become wiser. Perhaps that naming convention wasn't such a good idea after all.

When that happens, change the convention. Don't worry that this is going to make the code base inconsistent. An improvement is an improvement, while consistency might only imply that the code base is consistently bad.

Explicit variable declaration versus var #

In late 2007, more than a decade ago, C# 3 introduced the var keyword to the language. This tells the compiler to automatically infer the static type of a variable. Before that, you'd have to explicitly declare the type of all variables:

string href = new UrlBuilder()
    .WithAction(nameof(CalendarController.Get))
    .WithController(nameof(CalendarController))
    .WithValues(new { year = DateTime.Now.Year })
    .BuildAbsolute(Url);

In the above example, the variable href is explicitly declared as a string. With the var keyword you can alternatively write the expression like this:

var href = new UrlBuilder()
    .WithAction(nameof(CalendarController.Get))
    .WithController(nameof(CalendarController))
    .WithValues(new { year = DateTime.Now.Year })
    .BuildAbsolute(Url);

The href variable is still statically typed as a string. The compiler figures that out for you, in this case because the BuildAbsolute method returns a string:

public string BuildAbsolute(IUrlHelper url)

These two alternatives are interchangeable. They compile to the same IL code.
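If you want to convince yourself that var changes nothing about static typing, a small generic helper can report the type the compiler inferred. This is only a sketch: VarDemo and StaticTypeOf are hypothetical names, and the string literal stands in for the result of BuildAbsolute.

```csharp
using System;

// Hypothetical helper for illustration only.
public static class VarDemo
{
    // T is resolved at compile time, so typeof(T) reports the static
    // type of the argument expression, not its run-time type.
    public static Type StaticTypeOf<T>(T value) => typeof(T);

    public static bool SameStaticType()
    {
        string explicitHref = "https://example.com/calendar/2020";
        var inferredHref = "https://example.com/calendar/2020";

        // Both variables are statically typed as string.
        return StaticTypeOf(explicitHref) == StaticTypeOf(inferredHref);
    }
}
```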

When C# introduced this language feature, a year-long controversy erupted. Opponents felt that using var made code less readable. This isn't an entirely unreasonable argument, but most C# programmers subsequently figured that the advantages of using var outweigh the disadvantages.

A major advantage is that using var better facilitates refactoring. Sometimes, for example, you decide to change the return type of a method. What happens if you change the return type of UrlBuilder's BuildAbsolute method?

public Uri BuildAbsolute(IUrlHelper url)

If you've used the var keyword, the compiler just infers a different type. If, on the other hand, you've explicitly declared href as a string, that piece of code no longer compiles.

Using the var keyword makes refactoring easier. You'll still need to edit some call sites when you make a change like this, because Uri affords a different API than string. The point, however, is that when you use var, the cost of making a change is lower. Less ceremony means that you can spend your effort where it matters.
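Here's a minimal sketch of that effect, under stated assumptions: Links, its simplified BuildAbsolute(string) signature, Render, and the example.com address are all hypothetical stand-ins, not the real UrlBuilder API. The method already returns Uri, as it would after the refactoring.

```csharp
using System;

// Hypothetical stand-in for illustration only.
public static class Links
{
    // Imagine this method used to return string and now returns Uri.
    public static Uri BuildAbsolute(string path)
    {
        return new Uri(new Uri("https://example.com/"), path);
    }

    public static string Render()
    {
        // With var, this declaration compiles unchanged whether
        // BuildAbsolute returns string or Uri.
        var href = BuildAbsolute("calendar/2020");

        // The explicit alternative stops compiling after the change,
        // because there is no implicit conversion from Uri to string:
        // string href = BuildAbsolute("calendar/2020");

        return $"<a href=\"{href}\">Calendar</a>";
    }
}
```

Because string interpolation works with either type, the Render method needs no edits at all. Call sites that rely on string-specific members would still have to change.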

In the context of coding styles, I still, more than a decade after the var keyword was introduced, encounter code bases that use explicit variable declaration.

When I explain the advantages of using the var keyword to the team responsible for the code base, they may agree in principle, but still oppose using it in practice. The reason? Using var would make the code base inconsistent.

Aiming for a consistent coding style is fine, but only as long as it doesn't prohibit improvements. Don't let it stand in the way of progress.

Habitability #

I don't mind consistency; in fact, I find it quite attractive. It must not, however, become a barrier to improvement.

I've met programmers who so strongly favour consistency that they feel that, in order to change coding style, they'd have to go through the entire code base and retroactively update it all to fit the new rule. This is obviously prohibitively expensive, so in practice it prevents change.

Consistency is fine, but learn to accept inconsistency. As Nat Pryce said, we should learn to love the mess, to adopt a philosophy akin to wabi-sabi.

I think this view on inconsistent code helped me come to grips with my own desire for neatness. An inconsistent code base looks inhabited. I don't mind looking around in a code base and immediately being able to tell: oh, Anna wrote this, or Nader is the author of this method.

What's more important is that the code is comprehensible.

Conclusion #

Consistency in code isn't a bad thing. Coding styles can help encourage a degree of consistency. I think that's fine.

On the other hand, consistency shouldn't be the highest goal of a code base. If improving the code makes a code base inconsistent, I think that the improvement should trump consistency every time.

Let the old code be as it is, until you need to work with it. When you do, you can apply Robert C. Martin's boy scout rule: Always leave the code cleaner than you found it. Code perfection is like eventual consistency; it's something that you should constantly move towards, yet may never attain.

Learn to appreciate the 'lived-in' quality of an active code base.