At the boundaries, static types are illusory by Mark Seemann

Static types are useful, but have limitations.

Regular readers of this blog may have noticed that I like static type systems. Not the kind of static types offered by C, which strikes me as mostly being able to distinguish between way too many types of integers and pointers. A good type system is more than just numbers on steroids. A type system like C#'s is workable, but verbose. The kind of type system I find most useful is one with algebraic data types and good type inference. The examples that I know best are the type systems of F# and Haskell.

As great as static type systems can be, they have limitations. Hillel Wayne has already outlined one kind of distinction, but here I'd like to focus on another constraint.

Application boundaries

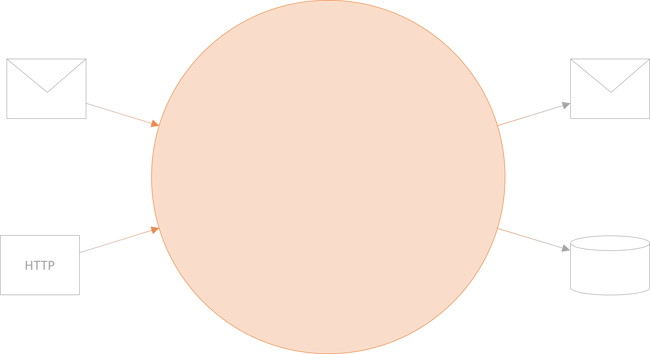

Any piece of software interacts with the 'rest of the world'; effectively everything outside its own process. Sometimes (but increasingly rarely) such interaction is exclusively by way of some user interface, but more and more, an application interacts with other software in some way.

Here I've drawn the application as an opaque disc in order to emphasise that what happens inside the process isn't pertinent to the following discussion. The diagram also includes some common kinds of traffic. Many applications rely on some kind of database or send messages (email, SMS, Commands, Events, etc.). We can think of such traffic as the interactions that the application initiates, but many systems also receive and react to incoming data: HTTP traffic or messages that arrive on a queue, and so on.

When I talk about application boundaries, I have in mind what goes on in that interface layer.

An application can talk to the outside world in multiple ways: It may read or write a file, access shared memory, call operating-system APIs, send or receive network packets, etc. Usually you get to program against higher-level abstractions, but ultimately the application is dealing with various binary protocols.

Protocols

The bottom line is that at a sufficiently low level of abstraction, what goes in and out of your application has no static type stronger than an array of bytes.

You may counter-argue that higher-level APIs deal with that to present the input and output as static types. When you interact with a text file, you'll typically deal with a list of strings: One for each line in the file. Or you may manipulate JSON, XML, Protocol Buffers, or another wire format using a serializer/deserializer API. Sometimes, as is often the case with CSV, you may need to write a very simple parser yourself. Or perhaps something slightly more involved.

To demonstrate what I mean, there's no shortage of APIs like JsonSerializer.Deserialize, which enables you to write code like this:

let n = JsonSerializer.Deserialize<Name> (json, opts)

and you may say: n is statically typed, and its type is Name! Hooray! But you do realise that that's only half a truth, don't you?

An interaction at the application boundary is expected to follow some kind of protocol. This is even true if you're reading a text file. In these modern times, you may expect a text file to contain Unicode, but have you ever received a file from a legacy system and had to deal with its EBCDIC encoding? Or an ASCII file with a code page different from the one you expect? Or even just a file written on a Unix system, if you're on Windows, or vice versa?

In order to correctly interpret or transmit such data, you need to follow a protocol.
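As a small illustration of why even 'plain text' implies a protocol, here's a C# sketch that decodes the same two bytes under two different assumptions about the encoding. The byte values and the EncodingDemo class are made up for this example; they don't come from any real system.

```csharp
using System;
using System.Text;

public static class EncodingDemo
{
    // Two bytes, as they might arrive from a file or a socket.
    // The bytes alone don't say which encoding produced them.
    public static readonly byte[] Payload = { 0xC3, 0xA9 };

    // Interpreted as UTF-8, the two bytes form a single character: é
    public static string AsUtf8() => Encoding.UTF8.GetString(Payload);

    // Interpreted as ISO-8859-1 (Latin-1), each byte is its own character: Ã©
    public static string AsLatin1() =>
        Encoding.GetEncoding("iso-8859-1").GetString(Payload);
}
```

Both results are perfectly good string values as far as the compiler is concerned. Which one is correct depends entirely on an agreement that exists outside the type system.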

Such a protocol can be low-level, as the character-encoding examples I just listed, but it may also be much more high-level. You may, for example, consider an HTTP request like this:

    POST /restaurants/90125/reservations?sig=aco7VV%2Bh5sA3RBtrN8zI8Y9kLKGC60Gm3SioZGosXVE%3D HTTP/1.1
    Content-Type: application/json

    {
      "at": "2021-12-08 20:30",
      "email": "snomob@example.com",
      "name": "Snow Moe Beal",
      "quantity": 1
    }

Such an interaction implies a protocol. Part of such a protocol is that the HTTP request's body is a valid JSON document, that it has an at property, that that property encodes a valid date and time, that quantity is a natural number, that email is present, and so on.

You can model the expected input as a Data Transfer Object (DTO):

    public sealed class ReservationDto
    {
        public string? At { get; set; }
        public string? Email { get; set; }
        public string? Name { get; set; }
        public int Quantity { get; set; }
    }

and even set up your 'protocol handlers' (here, an ASP.NET Core action method) to use such a DTO:

public Task<ActionResult> Post(ReservationDto dto)

While this may look statically typed, it assumes a particular protocol. What happens when the bytes on the wire don't follow the protocol?

Well, we've already been around that block more than once.

The point is that there's always an implied protocol at the application boundary, and you can choose to model it more or less explicitly.

Types as short-hands for protocols

In the above example, I've relied on some static typing to deal with the problem. After all, I did define a DTO to model the expected shape of input. I could have chosen other alternatives: Perhaps I could have used a JSON parser to explicitly use the JSON DOM, or even more low-level used Utf8JsonReader. Ultimately, I could have decided to write my own JSON parser.

I'd rarely (or never?) choose to implement a JSON parser from scratch, so that's not what I'm advocating. Rather, my point is that you can leverage existing APIs to deal with input and output, and some of those APIs offer a convincing illusion that what happens at the boundary is statically typed.

This illusion is partly API-specific, and partly language-specific. In .NET, for example, JsonSerializer.Deserialize looks like it'll always deserialize any JSON string into the desired model. Obviously, that's a lie, because the function will throw an exception if the operation is impossible (i.e. when the input is malformed). In .NET (and many other languages or platforms), you can't tell from an API's type what the failure modes might be. In contrast, aeson's fromJSON function returns a type that explicitly indicates that deserialization may fail. Even in Haskell, however, this is mostly an idiomatic convention, because Haskell also 'supports' exceptions.
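One way to make the failure mode visible in C# is to wrap the call in a Try-style method, in the spirit of int.TryParse. The following is only a sketch: NameDto, its two properties, and the Boundary class are hypothetical names invented for this example, and it uses default serializer options rather than the opts value shown earlier.

```csharp
using System.Text.Json;

// Hypothetical DTO; the property names are assumptions made for this sketch.
public sealed class NameDto
{
    public string? First { get; set; }
    public string? Last { get; set; }
}

public static class Boundary
{
    // Returns true and a DTO only if the text was well-formed JSON that
    // could be mapped to NameDto. The bool return value puts the
    // possibility of failure into the method signature.
    public static bool TryDeserialize(string json, out NameDto? dto)
    {
        try
        {
            // Deserialize returns null when the input is the JSON literal null.
            dto = JsonSerializer.Deserialize<NameDto>(json);
            return dto is not null;
        }
        catch (JsonException)
        {
            // Malformed JSON, or JSON of the wrong shape (e.g. an array).
            dto = null;
            return false;
        }
    }
}
```

Notice that an empty JSON object still deserializes successfully; every property is just null. The wrapper only reports whether the text could be mapped to the DTO, not whether the result follows the rest of the protocol.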

At the boundary, a static type can be a useful shorthand for a protocol. You declare a static type (e.g. a DTO) and rely on built-in machinery to handle malformed input. You give up some fine-grained control in exchange for a more declarative model.

I often choose to do that because I find such a trade-off beneficial, but I'm under no illusion that my static types fully model what goes 'on the wire'.

Reversed roles

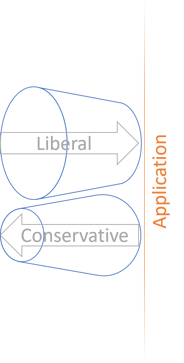

So far, I've mostly discussed input validation. Can types replace validation? No, but they can make most common validation scenarios easier. What happens when you return data?

You may decide to return a statically typed value. A serializer can faithfully convert such a value to a proper wire format (JSON, XML, or similar). The recipient may not care about that type. After all, you may return a Haskell value, but the system receiving the data is written in Python. Or you return a C# object, but the recipient is JavaScript.

Should we conclude, then, that there's no reason to model return data with static types? Not at all, because by modelling output with static types, you are being conservative with what you send, as Postel's law recommends. Since static types are typically more rigid than 'just code', there may be corner cases that a type can't easily express. While this may pose a problem when it comes to input, it's only a benefit when it comes to output. This means that you're narrowing the output funnel and thus making your system easier to work with.
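To make the idea of a narrow output funnel a little more concrete, here's a minimal sketch of a typed response. ReservationResponse and Output are hypothetical names chosen for this example, and representing the date as a string is a simplification.

```csharp
using System.Text.Json;

// Hypothetical output DTO: these three properties are everything
// that can ever be sent through this part of the API.
public sealed record ReservationResponse(string At, string Name, int Quantity);

public static class Output
{
    // Serializes with default options, so the JSON property names
    // mirror the record's property names.
    public static string ToJson(ReservationResponse response) =>
        JsonSerializer.Serialize(response);
}
```

A call such as Output.ToJson(new ReservationResponse("2021-12-08T20:30:00", "Snow Moe Beal", 1)) can only ever produce a JSON object with those three properties. The recipient may not know or care about the record type, but it benefits from the rigidity all the same.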

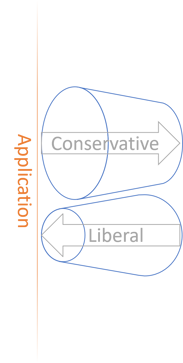

Now consider another role-reversal: When your application initiates an interaction, it starts by producing output and receives input as a result. This includes any database interaction. When you create, update, or delete a row in a database, you send data, and receive a response.

Should you not consider Postel's law in that case?

Most people don't, particularly if they rely on object-relational mappers (ORMs). After all, if you have a static type (class) that models a database row, what's the harm using that when updating the database?

Probably none. After all, based on what I've just written, using a static type is a good way to be conservative with what you send. Here's an example using Entity Framework:

    using var db = new RestaurantsContext(ConnectionString);
    var dbReservation = new Reservation
    {
        PublicId = reservation.Id,
        RestaurantId = restaurantId,
        At = reservation.At,
        Name = reservation.Name.ToString(),
        Email = reservation.Email.ToString(),
        Quantity = reservation.Quantity
    };
    await db.Reservations.AddAsync(dbReservation);
    await db.SaveChangesAsync();

Here we send a statically typed Reservation 'Entity' to the database, and since we use a static type, we're being conservative with what we send. That's only good.

What happens when we query a database? Here's a typical example:

    public async Task<Restaurants.Reservation?> ReadReservation(int restaurantId, Guid id)
    {
        using var db = new RestaurantsContext(ConnectionString);

        var r = await db.Reservations.FirstOrDefaultAsync(x => x.PublicId == id);
        if (r is null)
            return null;

        return new Restaurants.Reservation(
            r.PublicId,
            r.At,
            new Email(r.Email),
            new Name(r.Name),
            r.Quantity);
    }

Here I read a database row r and unquestioningly translate it to my domain model. Should I do that? What if the database schema has diverged from my application code?

I suspect that much grief and trouble with relational databases, and particularly with ORMs, stem from the illusion that an ORM 'Entity' is a statically-typed view of the database schema. Typically, you can either use an ORM like Entity Framework in a code-first or a database-first fashion, but regardless of what you choose, you have two competing 'truths' about the database: The database schema and the Entity Classes.

You need to be disciplined to keep those two views in synch, and I'm not asserting that it's impossible. I'm only suggesting that it may pay to explicitly acknowledge that static types may not represent any truth about what's actually on the other side of the application boundary.

Types are an illusion

Given that I usually find myself firmly in the static-types-are-great camp, it may seem odd that I now spend an entire article trashing them. Perhaps it looks as though I've had a revelation and made an about-face, but that's not the case. Rather, I'm fond of making the implicit explicit. This often helps improve understanding, because it helps delineate conceptual boundaries.

This, too, is the case here. All models are wrong, but some models are useful. So are static types, I believe.

A static type system is a useful tool that enables you to model how your application should behave. The types don't really exist at run time. Even though .NET code (just to point out an example) compiles to a binary representation that includes type information, once it runs, it JITs to machine code. In the end, it's just registers and memory addresses, or, if you want to be even more nihilistic, electrons moving around on a circuit board.

Even at a higher level of abstraction, you may say: But at least, a static type system can help you encapsulate rules and assumptions. In a language like C#, for example, consider a predicative type like this NaturalNumber class:

    public struct NaturalNumber : IEquatable<NaturalNumber>
    {
        private readonly int i;

        public NaturalNumber(int candidate)
        {
            if (candidate < 1)
                throw new ArgumentOutOfRangeException(
                    nameof(candidate),
                    $"The value must be a positive (non-zero) number, but was: {candidate}.");

            this.i = candidate;
        }

        // Various other members follow...

Such a class effectively protects the invariant that a natural number is always a positive integer. Yes, that works well until someone does this:

var n = (NaturalNumber)FormatterServices.GetUninitializedObject(typeof(NaturalNumber));

This n value has the internal value 0. Yes, FormatterServices.GetUninitializedObject bypasses the constructor. This thing is evil, but it exists, and at least in the current discussion serves to illustrate the point that types are illusions.
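If you'd like to see the effect for yourself, the following self-contained sketch reproduces it. It makes two assumptions that differ from the code above: the NaturalNumber here is a pared-down stand-in with a Value property added so that the wrapped integer can be observed, and it calls RuntimeHelpers.GetUninitializedObject instead of FormatterServices.GetUninitializedObject, since the latter is marked obsolete on recent versions of .NET.

```csharp
using System;
using System.Runtime.CompilerServices;

// Pared-down stand-in for the NaturalNumber type above. The Value
// property exists only so that this demo can inspect the result.
public readonly struct NaturalNumber
{
    public NaturalNumber(int candidate)
    {
        if (candidate < 1)
            throw new ArgumentOutOfRangeException(nameof(candidate));

        Value = candidate;
    }

    public int Value { get; }
}

public static class Backdoor
{
    // Creates a zeroed instance without ever running the constructor,
    // so the guard clause never gets a chance to reject the value.
    public static NaturalNumber Uninitialized() =>
        (NaturalNumber)RuntimeHelpers.GetUninitializedObject(typeof(NaturalNumber));
}
```

Calling new NaturalNumber(0) throws, but Backdoor.Uninitialized().Value is 0. Since the type is declared as a struct, default(NaturalNumber) yields the same zero value without any reflection at all, which only underscores the point.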

This isn't just a flaw in C#. Other languages have similar backdoors. One of the most famously statically-typed languages, Haskell, comes with unsafePerformIO, which enables you to pretend that nothing untoward is going on even if you've written some impure code.

You may (and should) institute policies to not use such backdoors in your normal code bases. You don't need them.

Types are useful models

All this may seem like an argument that types are useless. That would, however, be to draw the wrong conclusion. Types don't exist at run time to the same degree that Python objects or JavaScript functions don't exist at run time. Any language (except assembler) is an abstraction: A way to model computer instructions so that programming becomes easier (one would hope, but then...). This is true even for C, as low-level and detail-oriented as it may seem.

If you grant that high-level programming languages (i.e. any language that is not machine code or assembler) are useful, you must also grant that you can't rule out the usefulness of types. Notice that this argument is one of logic, rather than of preference. The only claim I make here is that programming is based on useful illusions. That the abstractions are illusions doesn't prevent them from being useful.

In statically typed languages, we effectively need to pretend that the type system is good enough, strong enough, generally trustworthy enough that it's safe to ignore the underlying reality. We work with, if you will, a provisional truth that serves as a user interface to the computer.

Even though a computer program eventually executes on a processor where types don't exist, a good compiler can still check that our models look sensible. We say that it type-checks. I find that indispensable when modelling the internal behaviour of a program. Even in a large code base, a compiler can type-check whether all the various components look like they may compose correctly. That a program compiles is no guarantee that it works correctly, but if it doesn't type-check, it's strong evidence that the code's model is internally inconsistent.

In other words, that a statically-typed program type-checks is a necessary, but not a sufficient condition for it to work.

This holds as long as we're considering program internals. Some language platforms allow us to take this notion further, because we can link software components together and still type-check them. The .NET platform is a good example of this, since the IL code retains type information. This means that the C#, F#, or Visual Basic .NET compiler can type-check your code against the APIs exposed by external libraries.

On the other hand, you can't extend that line of reasoning to the boundary of an application. What happens at the boundary is ultimately untyped.

Are types useless at the boundary, then? Not at all. Alexis King has already dealt with this topic better than I could, but the point is that types remain an effective way to capture the result of parsing input. You can view receiving, handling, parsing, or validating input as implementing a protocol, as I've already discussed above. Such protocols are application-specific or domain-specific rather than general-purpose protocols, but they are still protocols.

When I decide to write input validation for my restaurant sample code base as a set of composable parsers, I'm implementing a protocol. My starting point isn't raw bits, but rather a loose static type: A DTO. In other cases, I may decide to use a different level of abstraction.
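As a rough sketch of what it means to treat validation as a protocol implementation, consider the following parser. It isn't the code from the restaurant code base: the flat Reservation record, the ReservationParser class, the error messages, and the decision to treat a missing name as an empty string are all assumptions made for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public sealed class ReservationDto
{
    public string? At { get; set; }
    public string? Email { get; set; }
    public string? Name { get; set; }
    public int Quantity { get; set; }
}

// Hypothetical, simplified domain type for this sketch.
public sealed record Reservation(DateTime At, string Email, string Name, int Quantity);

public static class ReservationParser
{
    // Returns the parsed reservation, or null together with a message
    // for every rule of the protocol that the input broke.
    public static Reservation? TryParse(ReservationDto dto, out IReadOnlyList<string> errors)
    {
        var problems = new List<string>();

        if (!DateTime.TryParse(dto.At, CultureInfo.InvariantCulture, DateTimeStyles.None, out var at))
            problems.Add("at: not a valid date and time");
        if (string.IsNullOrWhiteSpace(dto.Email))
            problems.Add("email: missing");
        if (dto.Quantity < 1)
            problems.Add("quantity: must be a natural number");

        errors = problems;
        if (problems.Count > 0)
            return null;

        // Assumption: the name is optional, so a missing name becomes "".
        return new Reservation(at, dto.Email!, dto.Name ?? "", dto.Quantity);
    }
}
```

The DTO is the loose type that the framework hands over, and the Reservation is what comes out only if every rule holds. Everything in between is the protocol, written down as ordinary code.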

One of the (many) reasons I have for finding ORMs unhelpful is exactly because they insist on an illusion past its usefulness. Rather, I prefer implementing the protocol that talks to my database with a lower-level API, such as ADO.NET:

    private static Reservation ReadReservationRow(SqlDataReader rdr)
    {
        return new Reservation(
            (Guid)rdr["PublicId"],
            (DateTime)rdr["At"],
            new Email((string)rdr["Email"]),
            new Name((string)rdr["Name"]),
            new NaturalNumber((int)rdr["Quantity"]));
    }

This actually isn't a particularly good protocol implementation, because it fails to take Postel's law into account. Really, this code should be a Tolerant Reader. In practice, not that much input contravariance is possible, but perhaps, at least, this code ought to gracefully handle a missing Name field.

The point of this particular example isn't that it's perfect, because it's not, but rather that it's possible to drop down to a lower level of abstraction, and sometimes, this may be a more honest representation of reality.
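For what it's worth, here's one way a slightly more tolerant version of ReadReservationRow might look. Treat it as a sketch under a few assumptions: it's written against the IDataRecord interface (which SqlDataReader implements) so that it can run without a database, ReservationRow is a hypothetical flat stand-in for the domain model, and substituting an empty string for a missing name is my choice for this example, not a rule from the original code.

```csharp
using System;
using System.Data;

// Hypothetical flat row type; the real domain model wraps these values
// in Email, Name, and NaturalNumber.
public sealed record ReservationRow(
    Guid PublicId, DateTime At, string Email, string Name, int Quantity);

public static class TolerantReader
{
    public static ReservationRow Read(IDataRecord rdr)
    {
        return new ReservationRow(
            (Guid)rdr["PublicId"],
            (DateTime)rdr["At"],
            (string)rdr["Email"],
            ReadOptionalString(rdr, "Name"),
            (int)rdr["Quantity"]);
    }

    // Returns "" when the column is absent from the result set or holds NULL,
    // instead of throwing as an indexer lookup followed by a cast would.
    private static string ReadOptionalString(IDataRecord rdr, string column)
    {
        for (var i = 0; i < rdr.FieldCount; i++)
        {
            if (string.Equals(rdr.GetName(i), column, StringComparison.OrdinalIgnoreCase))
                return rdr.IsDBNull(i) ? "" : rdr.GetString(i);
        }

        return "";
    }
}
```

The required columns still fail loudly if they're missing, which seems reasonable: there's no sensible reservation without an identifier or a date. Only the field that the application can live without is read tolerantly.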

Conclusion

It may be helpful to acknowledge that static types don't really exist. Even so, internally in a code base, a static type system can be a powerful tool. A good type system enables a compiler to check whether various parts of your code look internally consistent. Are you calling a procedure with the correct arguments? Have you implemented all methods defined by an interface? Have you handled all cases defined by a sum type? Have you correctly initialized an object?

As useful as type systems are for this kind of work, you should also be aware of their limitations. A compiler can check whether a code base's internal model makes sense, but it can't verify what happens at run time.

As long as one part of your code base sends data to another part of your code base, your type system can still perform a helpful sanity check, but for data that enters (or leaves) your application at run time, bets are off. You may attempt to model what input should look like, and it may even be useful to do that, but it's important to acknowledge that reality may not look like your model.

You can write statically-typed, composable parsers. Some of them are quite elegant, but the good ones explicitly model that parsing of input is error-prone. When input is well-formed, the result may be a nicely encapsulated, statically-typed value, but when it's malformed, the result is one or more error values.

Perhaps the most important message is that databases, other web services, file systems, etc. involve input and output, too. Even if you write code that initiates a database query, or a web service request, should you implicitly trust the data that comes back?

This question of trust doesn't have to imply security concerns. Rather, systems evolve and errors happen. Every time you interact with an external system, there's a risk that it has become misaligned with yours. Static types can't protect you against that.