Greyscale-box test-driven development by Mark Seemann

Is TDD white-box testing or black-box testing?

Surely you're aware of the terms black-box testing and white-box testing, but have you ever wondered where test-driven development (TDD) fits in that picture?

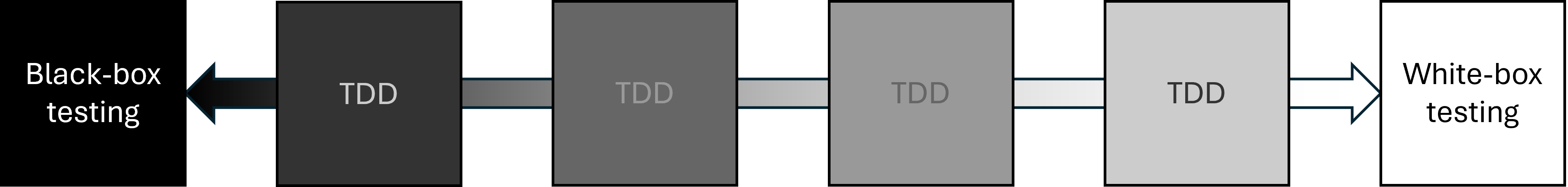

The short answer is that TDD as a software development practice sits somewhere between the two. It really isn't black and white, and exactly where TDD sits on the spectrum changes with circumstances.

If the above diagram indicates that TDD can't occupy the space of undiluted black- or white-box testing, that's not my intent. In my experience, however, you rarely do either when you engage with the TDD process. Rather, you find yourself somewhere in-between.

In the following, I'll examine the two extremes in order to explain why TDD rarely leads to either, starting with black-box testing.

Compartmentalization of knowledge

If you follow the usual red-green-refactor checklist, you write test code and production code in tight loops. You write some test code, some production code, more test code, then more production code, and so on.

If you're working by yourself, at least, that makes it almost impossible to treat the System Under Test (SUT) as a black box. After all, you're also the one who writes the production code.

You can try to 'forget' the production code you just wrote whenever you circle back to writing another test, but in practice, you can't. Even so, it may still be a useful exercise. I call this technique Gollum style (originally introduced in the Pluralsight course Outside-In Test-Driven Development as a variation on the Devil's advocate technique). The idea is to assume two roles, and to explicitly distinguish the goals of the tester from the aims of the implementer.

Still, while this can be an illuminating exercise, I don't pretend that this is truly black-box testing.

Pair programming

If you pair-program, you have better options. You could have one person write a test, and another person implement the code to pass the test. I could imagine a setup where the tester can't see the production code. Although I've never seen or heard about anyone doing that, this would get close to true black-box TDD.

To demonstrate, imagine a team doing the FizzBuzz kata in this way. The tester writes the first test:

    [Fact]
    public void One()
    {
        var actual = FizzBuzzer.Convert(1);
        Assert.Equal("1", actual);
    }

Either the implementer is allowed to see the test, or the specification is communicated to him or her in some other way. In any case, the natural response to the first test is an implementation like this:

    public static string Convert(int number)
    {
        return "1";
    }

In TDD, this is expected. This is the simplest implementation that passes all tests. We imagine that the tester already knows this, and therefore adds this test next:

    [Fact]
    public void Two()
    {
        var actual = FizzBuzzer.Convert(2);
        Assert.Equal("2", actual);
    }

The implementer's response is this:

    public static string Convert(int number)
    {
        if (number == 1)
            return "1";
        return "2";
    }

The tester can't see the implementation, so may believe that the implementation is now 'appropriate'. Even if he or she wants to be 'more sure', a few more test cases (for, say, 4, 7, or 38) could be added; it doesn't make any difference for the following argument.

Next, incrementally, the tester may add a few test cases that cover the "Fizz" behaviour:

    [Fact]
    public void Three()
    {
        var actual = FizzBuzzer.Convert(3);
        Assert.Equal("Fizz", actual);
    }

    [Fact]
    public void Six()
    {
        var actual = FizzBuzzer.Convert(6);
        Assert.Equal("Fizz", actual);
    }

Similar test cases cover the "Buzz" and "FizzBuzz" behaviours. For this example, I wrote eight test cases in total, but a more sceptical tester might write twelve or even sixteen before feeling confident that the test suite sufficiently describes the desired behaviour of the system. Even so, a sufficiently adversarial implementer might (given eight test cases) deliver this implementation:

    public static string Convert(int number)
    {
        switch (number)
        {
            case 1:
                return "1";
            case 2:
                return "2";
            case 5:
            case 10:
                return "Buzz";
            case 15:
            case 30:
                return "FizzBuzz";
            default:
                return "Fizz";
        }
    }

To be clear, it's not that I expect real-world programmers to be either obtuse or nefarious. In real life, on the other hand, requirements are more complicated, and may be introduced piecemeal in a fashion that may lead to buggy, overly-complicated implementations.

Under-determination

Remarkably, black-box testing may work better as an ex-post technique, compared to TDD. If we imagine that an implementer has made an effort to correctly implement a system according to specification, a tester may use black-box testing to poke at the SUT, using both randomly selected test cases, and by explicitly exercising the SUT at boundary cases.

Even so, black-box testing in reality tends to run into the problem of under-determination, also known from philosophy of science. As I outlined in Code That Fits in Your Head, software testing has many similarities with empirical science. We use experiments (tests) to corroborate hypotheses that we have about software: Typically either that it doesn't pass tests, or that it does pass all tests, depending on where in the red-green-refactor cycle we are.

Similar to science, we are faced with the basic epistemological problem that we have a finite number of tests, but usually an infinite (or at least extremely big) state space. Thus, as pointed out by the problem of under-determination, more than one 'reality' fits the available observations (i.e. test cases). The above FizzBuzz implementation is an example of this.
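To make the under-determination concrete, the following sketch compares the adversarial implementation above with a conventional one. The set of eight tested inputs is an assumption inferred from the `switch` cases (the article only lists the tests for 1, 2, 3, and 6), and the class and method names are made up for this illustration.

```csharp
using System;
using System.Linq;

public static class UnderDetermination
{
    // Assumed test inputs: two per behaviour, inferred from the switch cases.
    public static readonly int[] TestedInputs = { 1, 2, 3, 6, 5, 10, 15, 30 };

    // The adversarial implementation shown above.
    public static string Adversarial(int number)
    {
        switch (number)
        {
            case 1: return "1";
            case 2: return "2";
            case 5:
            case 10: return "Buzz";
            case 15:
            case 30: return "FizzBuzz";
            default: return "Fizz";
        }
    }

    // A conventional implementation, included only for comparison.
    public static string Conventional(int number)
    {
        if (number % 15 == 0) return "FizzBuzz";
        if (number % 3 == 0) return "Fizz";
        if (number % 5 == 0) return "Buzz";
        return number.ToString();
    }

    // True if both implementations return the same value for every tested input.
    public static bool AgreeOnTestedInputs() =>
        TestedInputs.All(i => Adversarial(i) == Conventional(i));

    // The smallest input in 1..100 for which the two implementations differ.
    public static int FirstDisagreement() =>
        Enumerable.Range(1, 100).First(i => Adversarial(i) != Conventional(i));

    public static void Main()
    {
        Console.WriteLine(AgreeOnTestedInputs()); // True
        Console.WriteLine(FirstDisagreement());   // 4
    }
}
```

Both 'realities' fit all eight observations, yet they already part ways at the input 4, which none of the assumed tests exercises.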

As an aside, certain problems actually have images that are sufficiently small that you can cover everything in total. In its most common description, the FizzBuzz kata, too, falls into this category.

"Write a program that prints the numbers from 1 to 100."

This means that you can, in fact, write 100 test cases and thereby specify the problem in its totality. What you still can't do with black-box testing, however, is impose a particular implementation. An adversarial implementer could write the Convert function as one big switch statement, just like I did with the Tennis kata, another kata with a small state space.
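As a rough illustration of that last point, here is a sketch in which a table-driven function stands in for the 'one big switch statement'. It is compared against a conventional implementation over all 100 inputs. Both functions and all names are hypothetical, written only for this comparison.

```csharp
using System;
using System.Linq;

public static class TotalSpecification
{
    // A conventional, arithmetic implementation.
    public static string Conventional(int number)
    {
        if (number % 15 == 0) return "FizzBuzz";
        if (number % 3 == 0) return "Fizz";
        if (number % 5 == 0) return "Buzz";
        return number.ToString();
    }

    // Words indexed by number % 15; an empty string means 'echo the number'.
    private static readonly string[] Pattern =
    {
        "FizzBuzz", "", "", "Fizz", "", "Buzz", "Fizz", "",
        "", "Fizz", "Buzz", "", "Fizz", "", ""
    };

    // A table-driven implementation with a very different internal structure.
    public static string TableDriven(int number)
    {
        var word = Pattern[number % 15];
        return word.Length == 0 ? number.ToString() : word;
    }

    // Exhaustively compares the two implementations over the kata's inputs.
    public static bool IndistinguishableFrom1To100() =>
        Enumerable.Range(1, 100).All(i => TableDriven(i) == Conventional(i));

    public static void Main()
    {
        Console.WriteLine(IndistinguishableFrom1To100()); // True
    }
}
```

Even a suite of 100 exhaustive test cases cannot tell these two apart, so it cannot favour one design over the other.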

This, however, rarely happens in the real world. Example-driven testing is under-determined. And no, property-based testing doesn't fundamentally change that conclusion. It behoves you to look critically at the actual implementation code, and not rely exclusively on testing.

Working with implementation code

It's hardly a surprise that TDD isn't black-box testing. Is it white-box testing, then? Since the red-green-refactor cycle dictates a tight loop between test and production code, you always have the implementation code at hand. In that sense, the SUT is a white box.

That said, the common view on white-box testing is that you work with knowledge about the internal implementation of an already-written system, and use that to design test cases. Typically, looking at the code should enable a tester to identify weak spots that warrant testing.

This isn't always the case with TDD. If you follow the red-green-refactor checklist, each cycle should leave you with a SUT that passes all tests in the simplest way that could possibly work. Consider the first incarnation of Convert, above (the one that always returns "1"). It passes all tests, and from a white-box-testing perspective, it has no weak spots. You can't identify a test case that'll make it crash.

If you consider the test suite as an executable specification, that degenerate implementation is correct, since it passes all tests. Of course, according to the kata description, it's wrong. Looking at the SUT code will tell you that in a heartbeat. It should prompt you to add another test case. The question is, though, whether that qualifies as white-box testing, or whether it's rather reminiscent of the transformation priority premise. Not that that's necessarily a dichotomy.

Overspecified software

Perhaps a more common problem with white-box testing in relation to TDD is the tendency to take a given implementation for granted. Of course, working according to the red-green-refactor cycle, there's no implementation before the test, but a common technique is to use Mock Objects to let tests specify how the SUT should be implemented. This leads to the familiar problem of Overspecified Software.

Here's an example.

Finding values in an interval

In the code base that accompanies Code That Fits in Your Head, the code that handles a new restaurant reservation contains this code snippet:

    var reservations = await Repository
        .ReadReservations(restaurant.Id, reservation.At)
        .ConfigureAwait(false);
    var now = Clock.GetCurrentDateTime();
    if (!restaurant.MaitreD.WillAccept(now, reservations, reservation))
        return NoTables500InternalServerError();

    await Repository.Create(restaurant.Id, reservation)
        .ConfigureAwait(false);

The ReadReservations method is of particular interest in this context. It turns out to be a small extension method on a more general interface method:

    internal static Task<IReadOnlyCollection<Reservation>> ReadReservations(
        this IReservationsRepository repository,
        int restaurantId,
        DateTime date)
    {
        var min = date.Date;
        var max = min.AddDays(1).AddTicks(-1);
        return repository.ReadReservations(restaurantId, min, max);
    }

The IReservationsRepository interface doesn't have a method that allows a client to search for all reservations on a given date. Rather, it defines a more general method that enables clients to search for reservations in a given interval:

    Task<IReadOnlyCollection<Reservation>> ReadReservations(
        int restaurantId, DateTime min, DateTime max);

As the parameter names imply, the method finds and returns all the reservations for a given restaurant between the min and max values. A previous article already covers this method in much detail.

I think I've stated this more than once before: Code is never perfect. Although I made a genuine attempt to write quality code for the book's examples, now that I revisit this API, I realize that there's room for improvement. The most obvious problem with that method definition is that it's not clear whether the range includes, or excludes, the boundary values. Would it improve encapsulation if the method instead took a Range<DateTime> parameter?

At the very least, I could have named the parameters inclusiveMin and inclusiveMax. That's how the system is implemented, and you can see an artefact of that in the above extension method. It searches from midnight of date to the tick just before midnight on the next day.
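To see what those inclusive bounds look like in practice, this small sketch repeats the calculation from the extension method for an arbitrarily chosen date. The `InclusiveBounds` helper and the sample date are assumptions made for illustration. They are not part of the book's code base.

```csharp
using System;

public static class InclusiveDay
{
    // Same calculation as the extension method: midnight of the date up to
    // and including the last tick before the following midnight.
    public static (DateTime Min, DateTime Max) InclusiveBounds(DateTime date)
    {
        var min = date.Date;
        var max = min.AddDays(1).AddTicks(-1);
        return (min, max);
    }

    public static void Main()
    {
        // Arbitrary sample date and time.
        var (min, max) = InclusiveBounds(new DateTime(2023, 9, 14, 20, 15, 0));
        Console.WriteLine(min.ToString("o")); // 2023-09-14T00:00:00.0000000
        Console.WriteLine(max.ToString("o")); // 2023-09-14T23:59:59.9999999
    }
}
```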

The SQL implementation reflects that contract, too.

    SELECT [PublicId], [At], [Name], [Email], [Quantity]
    FROM [dbo].[Reservations]
    WHERE [RestaurantId] = @RestaurantId AND
          @Min <= [At] AND [At] <= @Max

Here, @RestaurantId, @Min, and @Max are query parameters. Notice that the query uses the <= relation for both @Min and @Max, making both endpoints inclusive.

Interactive white-box testing

Since I'm aware of the problem of overspecified software, I test-drove the entire code base using state-based testing. Imagine, however, that I'd instead used a dynamic mock library. If so, a test could have looked like this:

    [Fact]
    public async Task PostUsingMoq()
    {
        var now = DateTime.Now;
        var reservation = Some.Reservation.WithDate(now.AddDays(2).At(20, 15));
        var repoTD = new Mock<IReservationsRepository>();
        repoTD
            .Setup(r => r.ReadReservations(
                Some.Restaurant.Id,
                reservation.At.Date,
                reservation.At.Date.AddDays(1).AddTicks(-1)))
            .ReturnsAsync(new Collection<Reservation>());
        var sut = new ReservationsController(
            new SystemClock(),
            new InMemoryRestaurantDatabase(Some.Restaurant),
            repoTD.Object);

        var ar = await sut.Post(Some.Restaurant.Id, reservation.ToDto());

        Assert.IsAssignableFrom<CreatedAtActionResult>(ar);
        // More assertions could go here.
    }

This test uses Moq, but the example doesn't hinge on that. I rarely use dynamic mock libraries these days, but when I do, I still prefer Moq.

Notice how the Setup reproduces the implementation of the ReadReservations extension method. The implication is that if you change the implementation code, you break the test.

Even so, we may consider this an example of a test-driven white-box test. While, according to the red-green-refactor cycle, you're supposed to write the test before the implementation, this style of TDD only works if you, the test writer, have an exact plan for how the SUT is going to look.

An innocent refactoring?

Don't you find that min.AddDays(1).AddTicks(-1) expression a bit odd? Wouldn't the code be cleaner if you could avoid the AddTicks(-1) part?

Well, you can.

A tick is the smallest unit of measurement of DateTime values. Since ticks are discrete, the range defined by the extension method would be equivalent to a right-open interval, where the minimum value is still included, but the maximum is not. If you made that change, the extension method would be simpler:
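The claimed equivalence can be checked with a few samples around the day's boundaries. This is a minimal sketch: the `InClosed` and `InRightOpen` predicates and the choice of sample points are assumptions made for illustration, mirroring the two ways of computing `max`.

```csharp
using System;
using System.Linq;

public static class IntervalEquivalence
{
    // Closed interval: max is the last tick of the day, and is included.
    public static bool InClosed(DateTime at, DateTime date)
    {
        var min = date.Date;
        var max = min.AddDays(1).AddTicks(-1);
        return min <= at && at <= max;
    }

    // Right-open interval: max is the following midnight, and is excluded.
    public static bool InRightOpen(DateTime at, DateTime date)
    {
        var min = date.Date;
        var max = min.AddDays(1);
        return min <= at && at < max;
    }

    // Compares both predicates one tick before, at, and one tick after each
    // boundary, plus one time in the middle of the day.
    public static bool AgreeAroundBoundaries(DateTime date)
    {
        var start = date.Date;
        var end = start.AddDays(1);
        var samples = new[]
        {
            start.AddTicks(-1), start, start.AddTicks(1),
            start.AddHours(20).AddMinutes(15),
            end.AddTicks(-1), end, end.AddTicks(1)
        };
        return samples.All(at => InClosed(at, date) == InRightOpen(at, date));
    }

    public static void Main()
    {
        Console.WriteLine(AgreeAroundBoundaries(new DateTime(2023, 9, 14))); // True
    }
}
```

Because no `DateTime` value exists between the last tick of one day and midnight of the next, the two predicates select exactly the same values.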

    internal static Task<IReadOnlyCollection<Reservation>> ReadReservations(
        this IReservationsRepository repository,
        int restaurantId,
        DateTime date)
    {
        var min = date.Date;
        var max = min.AddDays(1);
        return repository.ReadReservations(restaurantId, min, max);
    }

In order to offset that change, you also change the SQL accordingly:

    SELECT [PublicId], [At], [Name], [Email], [Quantity]
    FROM [dbo].[Reservations]
    WHERE [RestaurantId] = @RestaurantId AND
          @Min <= [At] AND [At] < @Max

Notice that the query now compares [At] with @Max using the < relation.

While this is formally a breaking change of the interface, it's entirely internal to the application code base. No external systems or libraries depend on IReservationsRepository. Thus, this change is a true refactoring: It improves the code without changing the observable behaviour of the system.

Even so, this change breaks the PostUsingMoq test.

To make the test pass, you'll need to repeat the change you made to the SUT:

    [Fact]
    public async Task PostUsingMoq()
    {
        var now = DateTime.Now;
        var reservation = Some.Reservation.WithDate(now.AddDays(2).At(20, 15));
        var repoTD = new Mock<IReservationsRepository>();
        repoTD
            .Setup(r => r.ReadReservations(
                Some.Restaurant.Id,
                reservation.At.Date,
                reservation.At.Date.AddDays(1)))
            .ReturnsAsync(new Collection<Reservation>());
        var sut = new ReservationsController(
            new SystemClock(),
            new InMemoryRestaurantDatabase(Some.Restaurant),
            repoTD.Object);

        var ar = await sut.Post(Some.Restaurant.Id, reservation.ToDto());

        Assert.IsAssignableFrom<CreatedAtActionResult>(ar);
        // More assertions could go here.
    }

If it's only one test, you can probably live with that, but it's the opposite of a robust test; it's a Fragile Test.

"to refactor, the essential precondition is [...] solid tests"

A common problem with interaction-based testing is that even small refactorings break many tests. We might see that as a symptom of having too much knowledge of implementation details. We might view this as related to white-box testing.

To be clear, the tests in the code base that accompanies Code That Fits in Your Head are all state-based, so contrary to the PostUsingMoq test, all 161 tests easily survive the above refactoring.
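For contrast, here is a heavily simplified sketch of what a state-based test might look like. Every type below (`Reservation`, the synchronous `IReservationsRepository`, `FakeReservations`, `ReservationService`) is a hypothetical stand-in invented for this example, and none of it is the code from the book. The point is only that the test checks the resulting state through a Fake and never mentions how the SUT computes `min` and `max`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified stand-ins; not the types from the book's code base.
public sealed record Reservation(DateTime At, string Name, int Quantity);

public interface IReservationsRepository
{
    IReadOnlyCollection<Reservation> ReadReservations(DateTime min, DateTime max);
    void Create(Reservation reservation);
}

// A Fake: a working in-memory implementation that treats max as exclusive.
public sealed class FakeReservations : IReservationsRepository
{
    private readonly List<Reservation> items = new List<Reservation>();

    public IReadOnlyCollection<Reservation> ReadReservations(DateTime min, DateTime max) =>
        items.Where(r => min <= r.At && r.At < max).ToList();

    public void Create(Reservation reservation) => items.Add(reservation);
}

// A toy SUT: accepts a reservation if the day's total stays within capacity.
public sealed class ReservationService
{
    private readonly IReservationsRepository repository;
    private readonly int capacity;

    public ReservationService(IReservationsRepository repository, int capacity)
    {
        this.repository = repository;
        this.capacity = capacity;
    }

    public bool TryReserve(Reservation reservation)
    {
        var min = reservation.At.Date;
        var max = min.AddDays(1);
        var existing = repository.ReadReservations(min, max);
        if (existing.Sum(r => r.Quantity) + reservation.Quantity > capacity)
            return false;
        repository.Create(reservation);
        return true;
    }
}

public static class StateBasedExample
{
    // Returns true if an accepted reservation ends up in the repository.
    public static bool AcceptedReservationIsStored()
    {
        var repository = new FakeReservations();
        var sut = new ReservationService(repository, capacity: 10);
        var reservation = new Reservation(new DateTime(2023, 9, 14, 20, 15, 0), "Ada", 4);

        var accepted = sut.TryReserve(reservation);

        // Verify the outcome by inspecting state, not by checking which
        // repository calls were made or with which arguments.
        var stored = repository.ReadReservations(DateTime.MinValue, DateTime.MaxValue);
        return accepted && stored.Contains(reservation);
    }

    public static void Main()
    {
        Console.WriteLine(AcceptedReservationIsStored()); // True
    }
}
```

If `TryReserve` instead computed `max` as `min.AddDays(1).AddTicks(-1)`, this particular check, with its reservation at 20:15, would still pass, because the test body contains no copy of the interval calculation.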

Greyscale-box TDD

It's not too hard to argue that TDD isn't black-box testing, but it's harder to argue that it's not white-box testing. Naturally, as you follow the red-green-refactor cycle, you know all about the implementation. Still, the danger of being too aware of the SUT code is being trapped in an implementation mindset.

While there's nothing wrong with getting the implementation right, many maintainability problems originate in insufficient encapsulation. Deliberately treating the SUT as a grey box helps in discovering a SUT's contract. That's why I recommend techniques like Devil's Advocate. Pretending to view the SUT from the outside can shed valuable light on usability and maintainability issues.

Conclusion

The notions of white-box and black-box testing have been around for decades. So has TDD. Even so, it's not always clear to practitioners whether TDD is one or the other. The reason is, I believe, that TDD is neither. Good TDD practice sits somewhere between white-box testing and black-box testing.

Exactly where on that greyscale spectrum TDD belongs depends on context. The more important encapsulation is, the closer you should move towards black-box testing. The more important correctness or algorithm performance is, the closer to white-box testing you should move.

You can, however, move position on the spectrum even in the same code base. Perhaps you want to start close to white-box testing as you focus on getting the implementation right. Once the SUT works as intended, you may then decide to shift your focus towards encapsulation, in which case moving closer to black-box testing could prove beneficial.