Redirect legacy URLs by Mark Seemann

Evolving REST API URLs when cool URIs don't change.

More than one reader reacted to my article on fit URLs by asking about bookmarks and original URLs. Daniel Sklenitzka's question is a good example:

"I see how signing the URLs prevents clients from retro-engineering the URL templates, but how does it help preventing breaking changes? If the client stores the whole URL instead of just the ID and later the URL changes because a restaurant ID is added, the original URL is still broken, isn't it?"

While I answered the question on the same page, I think that it's worthwhile to expand it.

The rules of HTTP #

I agree with the implicit assumption that clients are allowed to bookmark links. It seems, then, like a breaking change if you later change your internal URL scheme. That seems to imply that the bookmarked URL is gone, breaking a tenet of the HTTP protocol: Cool URIs don't change.

REST APIs are supposed to play by the rules of HTTP, so it'd seem that once you've published a URL, you can never retire it. You can, on the other hand, change its behaviour.

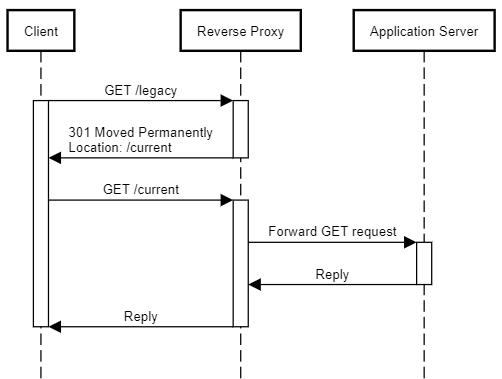

Let's call such URLs legacy URLs. Keep them around, but change them to return 301 Moved Permanently responses.

The rules of REST go both ways. The API is expected to play by the rules of HTTP, and so are the clients. Clients are not only expected to follow links, but also redirects. If a legacy URL starts returning a 301 Moved Permanently response, a well-behaved client doesn't break.
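To make the client's side of that contract concrete, here is a minimal sketch of how a client might react to a 301 Moved Permanently response by replacing its stored bookmark. The names BookmarkClient, ResolveBookmark, and RefreshBookmarkAsync are hypothetical and not part of the article's code base. The sketch assumes that the HttpClient was created with automatic redirects turned off (for example via HttpClientHandler.AllowAutoRedirect = false), since otherwise the client follows the redirect silently and never sees the 301.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical client-side helper, for illustration only.
public static class BookmarkClient
{
    // Decides which address the client should keep after seeing a response.
    public static Uri ResolveBookmark(Uri bookmark, HttpResponseMessage response)
    {
        var location = response.Headers.Location;
        if (response.StatusCode != HttpStatusCode.MovedPermanently || location is null)
            return bookmark; // Nothing moved; keep the address as it is.

        // A Location header may be relative, so resolve it against the old address.
        return location.IsAbsoluteUri ? location : new Uri(bookmark, location);
    }

    // Assumes 'client' does not follow redirects automatically,
    // so that the 301 response is observable here.
    public static async Task<Uri> RefreshBookmarkAsync(HttpClient client, Uri bookmark)
    {
        using var response = await client.GetAsync(bookmark);
        return ResolveBookmark(bookmark, response);
    }
}
```

A client that leaves automatic redirects on gets the same end result without any extra code; the explicit version is only useful if the client wants to update what it has stored.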

Reverse proxy #

As I've previously described, one of the many benefits of HTTP-based services is that you can put a reverse proxy in front of your application servers. I've no idea how to configure or operate NGINX or Varnish, but from talking to people who do know, I get the impression that they're quite scriptable.

Since the above ideas about legacy URLs are independent of any actual service implementation or behaviour, redirecting them is a generic problem that you should seek to address with general-purpose software.

Imagine that a reverse proxy is configured with a set of rules that detects legacy URLs and knows how to forward them. Clearly, the reverse proxy must know of the REST API's current URL scheme to be able to do that. You might think that this would entail leaking an implementation detail, but just as I consider any database used by the API as part of the overall system, I'd consider the reverse proxy as just another part.
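As a rough illustration of what such a rule amounts to, the following sketch expresses one as a pure function from a legacy path to a current path. It's written in C# only to stay consistent with the rest of this article; a real reverse proxy would express the same mapping in its own configuration language. The names LegacyUrlRules and TryRewrite are hypothetical, and the restaurant ID value 1 is an assumption. The sketch also ignores the sig query parameter, so it leaves open how a proxy would obtain a valid signature for the new address.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical rule set, for illustration only.
public static class LegacyUrlRules
{
    // Assumed ID of the original, grandfathered restaurant.
    private const int GrandfatherId = 1;

    // Matches the legacy calendar/{year}/{month} scheme and nothing else.
    private static readonly Regex LegacyCalendar =
        new Regex("^/calendar/(?<year>[0-9]+)/(?<month>[0-9]+)$");

    // Returns true and the current path if 'legacyPath' is a known legacy URL;
    // otherwise returns false, and the request should pass through unchanged.
    public static bool TryRewrite(string legacyPath, out string currentPath)
    {
        var match = LegacyCalendar.Match(legacyPath);
        if (!match.Success)
        {
            currentPath = string.Empty;
            return false;
        }

        var year = match.Groups["year"].Value;
        var month = match.Groups["month"].Value;
        currentPath = $"/restaurants/{GrandfatherId}/calendar/{year}/{month}";
        return true;
    }
}
```

Whatever evaluates the rule would respond with 301 Moved Permanently and the rewritten path in the Location header whenever TryRewrite returns true.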

Redirecting with ASP.NET #

If you don't have a reverse proxy, you can also implement redirects in code. It'd be better to use something like a reverse proxy, because that would mean that you get to delete code from your code base, but sometimes that's not possible.

The code shown here is part of the sample code base that accompanies my book Code That Fits in Your Head.

In ASP.NET, you can return 301 Moved Permanently responses just like any other kind of HTTP response:

    [Obsolete("Use Get method with restaurant ID.")]
    [HttpGet("calendar/{year}/{month}")]
    public ActionResult LegacyGet(int year, int month)
    {
        return new RedirectToActionResult(
            nameof(Get),
            null,
            new { restaurantId = Grandfather.Id, year, month },
            permanent: true);
    }

This LegacyGet method redirects to the current Controller action called Get by supplying the arguments that the new method requires. The Get method has this signature:

    [HttpGet("restaurants/{restaurantId}/calendar/{year}/{month}")]
    public async Task<ActionResult> Get(int restaurantId, int year, int month)

When I expanded the API from a single restaurant to a multi-tenant system, I had to grandfather in the original restaurant. I gave it a restaurantId, but in order to not put magic constants in the code, I defined it as the named constant Grandfather.Id.

Notice that I also adorned the LegacyGet method with an [Obsolete] attribute to make it clear to maintenance programmers that this is legacy code. You might argue that the Legacy prefix already does that, but the [Obsolete] attribute will make the compiler emit a warning, which is even better feedback.

Regression test #

Legacy URLs may be just that, legacy, but it still matters whether they work. You may want to add regression tests.

If you implement redirects in code (as opposed to a reverse proxy), you should also add automated tests that verify that the redirects work:

    [Theory]
    [InlineData("http://localhost/calendar/2020?sig=ePBoUg5gDw2RKMVWz8KIVzF%2Fgq74RL6ynECiPpDwVks%3D")]
    [InlineData("http://localhost/calendar/2020/9?sig=ZgxaZqg5ubDp0Z7IUx4dkqTzS%2Fyjv6veDUc2swdysDU%3D")]
    public async Task BookmarksStillWork(string bookmarkedAddress)
    {
        using var api = new LegacyApi();

        // CreateDefaultClient doesn't follow redirects, so the 301 is visible.
        var actual = await api.CreateDefaultClient().GetAsync(new Uri(bookmarkedAddress));
        Assert.Equal(HttpStatusCode.MovedPermanently, actual.StatusCode);

        // Request the new address and verify that it responds successfully.
        var follow = await api.CreateClient().GetAsync(actual.Headers.Location);
        follow.EnsureSuccessStatusCode();
    }

This test interacts with a self-hosted service at the HTTP level. LegacyApi is a test-specific helper class that derives from WebApplicationFactory<Startup>.

The test uses URLs that I 'bookmarked' before I evolved the URLs to a multi-tenant system. As you can tell from the host name (localhost), these are bookmarks against the self-hosted service. The test first verifies that the response is 301 Moved Permanently. It then requests the new address and uses EnsureSuccessStatusCode as an assertion.

Conclusion #

Evolving fit URLs could break clients that have bookmarked legacy URLs. Consider leaving 301 Moved Permanently responses at those addresses.