Good names are skin-deep by Mark Seemann

Good names are important, but insufficient, for code maintainability.

You should give the building blocks of your code bases descriptive names. It's easier to understand the purpose of a library, module, class, method, function, etcetera if the name contains a clue about the artefact's purpose. This is hardly controversial, and while naming is hard, most teams I visit agree that names are important.

Still, despite good intentions and efforts to name things well, code bases deteriorate into unmaintainable clutter.

Clearly, good names aren't enough.

Tenuousness of names #

A good name is tenuous. First, naming is hard, so while you may have spent some effort coming up with a good name, other people may misinterpret it. Because they originate from natural language, names are as ambiguous as language. (Terse operators, on the other hand...)

Another maintainability problem with names is that implementation may change over time, but the names remain constant. Granted, modern IDEs make it easy to rename methods, but developers rarely adjust names when they adjust behaviour. Even the best names may become misleading over time.

These weakness aren't the worst, though. In my experience, a more fundamental problem is that all it takes is one badly named 'wrapper object' before the information in a good name is lost.

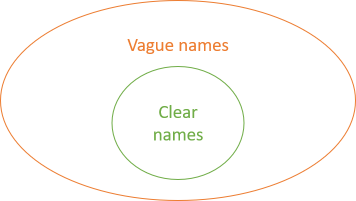

In the figure, the inner object is well-named. It has a clear name and descriptive method names. All it takes before this information is lost, however, is another object with vague names to 'encapsulate' it.

An attempt at a descriptive method name #

Here's an example. Imagine an online restaurant reservation system. One of the features of this system is to take reservations and save them in the database.

A restaurant, however, is a finite resource. It can only accommodate a certain number of guests at the same time. Whenever the system receives a reservation request, it'll have to retrieve the existing reservations for that time and make a decision. Can it accept the reservation? Only if it can should it save the reservation.

How do you model such an interaction? How about a descriptive name? How about TrySave? Here's a possible implementation:

public async Task<bool> TrySave(Reservation reservation) { if (reservation is null) throw new ArgumentNullException(nameof(reservation)); var reservations = await Repository .ReadReservations( reservation.At, reservation.At + SeatingDuration) .ConfigureAwait(false); var availableTables = Allocate(reservations); if (!availableTables.Any(t => reservation.Quantity <= t.Seats)) return false; await Repository.Create(reservation).ConfigureAwait(false); return true; }

There's an implicit naming convention in .NET that methods with the Try prefix indicate an operation that may or may not succeed. The return value of such methods is either true or false, and they may also have out parameters if they optionally produce a value. That's not the case here, but I think one could make the case that TrySave succinctly describes what's going on.

All is good, then?

A vague wrapper #

After our conscientious programmer meticulously designed and named the above TrySave method, it turns out that it doesn't meet all requirements. Users of the system file a bug: the system accepts reservations outside the restaurant's opening hours.

The original programmer has moved on to greener pastures, so fixing the bug falls on a poor maintenance developer with too much to do. Having recently learned about the open-closed principle, our new protagonist decides to wrap the existing TrySave in a new method:

public async Task<bool> Check(Reservation reservation) { if (reservation is null) throw new ArgumentNullException(nameof(reservation)); if (reservation.At < DateTime.Now) return false; if (reservation.At.TimeOfDay < OpensAt) return false; if (LastSeating < reservation.At.TimeOfDay) return false; return await Manager.TrySave(reservation).ConfigureAwait(false); }

This new method first checks whether the reservation is within opening hours and in the future. If that's not the case, it returns false. Only if these preconditions are fulfilled does it delegate the decision to that TrySave method.

Notice, however, the name. The bug was urgent, and our poor programmer didn't have time to think of a good name, so Check it is.

Caller's perspective #

How does this look from the perspective of calling code? Here's the Controller action that handles the pertinent HTTP request:

public async Task<ActionResult> Post(ReservationDto dto) { if (dto is null) throw new ArgumentNullException(nameof(dto)); Reservation? r = dto.Validate(); if (r is null) return new BadRequestResult(); var isOk = await Manager.Check(r).ConfigureAwait(false); if (!isOk) return new StatusCodeResult(StatusCodes.Status500InternalServerError); return new NoContentResult(); }

Try to forget the code you've just seen and imagine that you're looking at this code first. You'd be excused if you miss what's going on. It looks as though the method just does a bit of validation and then checks 'something' concerning the reservation.

There's no hint that the Check method might perform the significant side effect of saving the reservation in the database.

You'll only learn that if you read the implementation details of Check. As I argue in my Humane Code video, if you have to read the source code of an object, encapsulation is broken.

Such code doesn't fit in your brain. You'll struggle as you try keep track of all the things that are going on in the code, all the way from the outer boundary of the application to implementation details that relate to databases, third-party services, etcetera.

Straw man? #

You may think that this is a straw man argument. After all, wouldn't it be better to edit the original TrySave method?

Perhaps, but it would make that class more complex. The TrySave method has a cyclomatic complexity of only 3, while the Check method has a complexity of 5. Combining them might easily take them over some threshold.

Additionally, each of these two classes have different dependencies. As the TrySave method implies, it relies on both Repository and SeatingDuration, and the Allocate helper method (not shown) uses a third dependency: the restaurant's table configuration.

Likewise, the Check method relies on OpensAt and LastSeating. If you find it better to edit the original TrySave method, you'd have to combine these dependencies as well. Each time you do that, the class grows until it becomes a God object.

It's rational to attempt to separate things in multiple classes. It also, on the surface, seems to make unit testing easier. For example, here's a test that verifies that the Check method rejects reservations before the restaurant's opening time:

[Fact] public async Task RejectReservationBeforeOpeningTime() { var r = new Reservation( DateTime.Now.AddDays(10).Date.AddHours(17), "colaera@example.com", "Cole Aera", 1); var mgrTD = new Mock<IReservationsManager>(); mgrTD.Setup(mgr => mgr.TrySave(r)).ReturnsAsync(true); var sut = new RestaurantManager( TimeSpan.FromHours(18), TimeSpan.FromHours(21), mgrTD.Object); var actual = await sut.Check(r); Assert.False(actual); }

By replacing the TrySave method by a test double, you've ostensibly decoupled the Check method from all the complexity of the TrySave method.

To be clear, this style of programming, with lots of nested interfaces and tests with mocks and stubs is far from ideal, but I still find it better than a big ball of mud.

Alternative #

A better alternative is Functional Core, Imperative Shell, AKA impureim sandwich. Move all impure actions to the edge of the system, leaving only referentially transparent functions as the main implementers of logic. It could look like this:

[HttpPost] public async Task<ActionResult> Post(ReservationDto dto) { if (dto is null) throw new ArgumentNullException(nameof(dto)); var id = dto.ParseId() ?? Guid.NewGuid(); Reservation? r = dto.Validate(id); if (r is null) return new BadRequestResult(); var reservations = await Repository.ReadReservations(r.At).ConfigureAwait(false); if (!MaitreD.WillAccept(DateTime.Now, reservations, r)) return NoTables500InternalServerError(); await Repository.Create(r).ConfigureAwait(false); return Reservation201Created(r); }

Nothing is swept under the rug here. WillAccept is a pure function, and while it encapsulates significant complexity, the only thing you need to understand when you're trying to understand the above Post code is that it returns either true or false.

Another advantage of pure functions is that they are intrinsically testable. That makes unit testing and test-driven development easier.

Even with a functional core, you'll also have an imperative shell. You can still test that, too, such as the Post method. It isn't referentially transparent, so you might be inclined to use mocks and stubs, but I instead recommend state-based testing with a Fake database.

Conclusion #

Good names are important, but don't let good names, alone, lull you into a false sense of security. All it takes is one vaguely named wrapper object, and all the information in your meticulously named methods is lost.

This is one of many reasons I try to design with static types instead of names. Not that I dismiss the value of good names. After all, you'll have to give your types good names as well.

Types are more robust in the face of inadvertent changes; or, rather, they tend to resist when we try to do something stupid. I suppose that's what lovers of dynamically typed languages feel as 'friction'. In my mind, it's entirely opposite. Types keep me honest.

Unfortunately, most type systems don't offer an adequate degree of safety. Even in F#, which has a great type system, you can introduce impure actions into what you thought was a pure function, and you'd be none the wiser. That's one of the reasons I find Haskell so interesting. Because of the way IO works, you can't inadvertently sweep surprises under the rug.

Comments

I find the idea of the impure/pure/impure sandwich rather interesting and I agree with the benefits that it yields. However, I was wondering about where to move synchronization logic, i.e. the reservation system should avoid double bookings. With the initial TrySave approach it would be clear for me where to put this logic: the synchonrization mechanism should be part of the TrySave method. With the impure/pure/impure sandwich, it will move out to the most outer layern (HTTP Controller) - at least this is how I'd see it. My feelings tells me that this is a bit smelly, but I can't really pin point why I think so. Can you give some advice on this? How would you solve that?

Johannes, thank you for writing. There are several ways to address that issue, depending on what sort of trade-off you're looking for. There's always a trade-off.

You can address the issue with a lock-free architecture. This typically involves expressing the desired action as a Command and putting it on a durable queue. If you combine that with a single-threaded, single-instance Actor that pulls Commands off the queue, you need no further transaction processing, because the architecture itself serialises writes. You can find plenty of examples of such an architecture on the internet, including (IIRC) my Pluralsight course Functional Architecture with F#.

Another option is to simply surround the impureim sandwich with a TransactionScope (if you're on .NET, that is).