Epistemology of interaction testing by Mark Seemann

How do we know that components interact correctly?

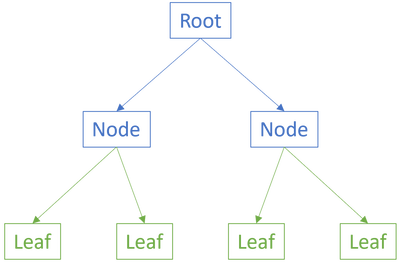

Most software systems are composed as a graph of components. To be clear, I use the word component loosely to mean a collection of functionality - it may be an object, a module, a function, a data type, or perhaps something else I haven't thought of. Some components deal with the bigger picture and will typically coordinate other components that perform more specific tasks. If we think of a component graph as a tree, then some components are leaves.

Leaf components, being self-contained and without dependencies, are typically the easiest to test. Most test-driven development (TDD) katas focus on these kinds of components: Tennis, bowling, diamond, Roman numerals, gossiping bus drivers, and so on. Even the legacy security manager kata is simple and quite self-contained. There's nothing wrong with that, and there's good reason to keep such exercises simple. After all, you want to be able to complete a kata in a few hours. You can hardly do that if the exercise is to develop an entire web site with user interface, persistent data storage, security, data validation, business logic, third-party integration, emails, instrumentation and logging, and so on.

This means that even if you get good at TDD against 'leaf' functionality, you may be struggling when it comes to higher-level components. How does one unit test code that has dependencies?

Interaction-based testing #

A common solution is to invert the dependencies. You can, for example, use Dependency Injection to inject Test Doubles into the System Under Test (SUT). This enables you to control the behaviour of the dependencies and to verify that the SUT behaves as expected. Not only that, but you can also verify that the SUT interacts with the dependencies as expected. This is called interaction-based testing. It is, perhaps, the most common form of unit testing in the industry, and exemplary explained in Growing Object-Oriented Software, Guided by Tests.

The kinds of Test Doubles most useful with interaction-based testing are Stubs and Mocks. They are, however, problematic because they break encapsulation. And encapsulation, to be clear, is also a concern in functional programming.

I have already described how to move from interaction-based to state-based testing, and why functional programming is intrinsically more testable.

How to test composition of pure functions? #

When you adopt functional programming (FP) you'll sooner or later need to compose or orchestrate pure functions. How do you test that the composition of pure functions is correct? That's what you can test with a Mock or Spy.

You've developed component A, perhaps as a higher-order function, that depends on another component B. You want to test that A correctly interacts with B, but if interaction-based testing is no longer 'allowed' (because it breaks encapsulation), then what do you do?

For a long time, I pondered that question myself, while I was busy enjoying FP making most things easier. It took me some time to understand that the answer, as is often the case, is mu. I'll get back to that later.

I'm not the only one struggling with this question. Sergei Rogovtcev writes and asks what I interpret as the same question:

"I do have a component A, which is, frankly, some controller doing some checks and processing around a fairly complex state. This process can have several outcomes, let's call them Success, Fail, and Missing (the actual states are not important, but I'd like to have more than two). Then we have a component B, which is responsible for the rendering of the result. Of course, three different states lead to three different renderings, but the renderings are also influenced by state (let's say we have browser, mobile and native clients, and we need to provide different renderings). Originally the components are objects, B having three separate methods, but I can express them as pure functions, at least for the purpose of this discussion - A, and then BSuccess, BFail and BMissing. I can easily test each part of B in isolation; the problem comes when I need to test A, which calls different parts of B. If I use mocks, the solution is simple - I inject a mock of B to A, and then verify that A calls appropriate parts according to the process result. This requires knowing the innards of A, but otherwise it is a well-known and well-understood approach. But if I want to avoid mocks, what do I do? I cannot test A without relying on some code path in B, and this to me means that I'm losing the benefits of unit testing and entering the realm of integration testing."

In his email Sergei Rogovtcev has explicitly given me permission to quote him and engage with this question. As I've outlined, I've grappled with that question myself, so I find the question worthwhile. I can't, however, work with it without questioning the premise. This is not an attack on Sergei Rogovtcev; after all, I had that question myself, so any critique I make is directed as much at my former self as at him.

Axiomatic versus scientific knowledge #

It may be helpful to elevate the discussion. How do we know that software (or a subsystem thereof) works? You could say that one answer to that is: Passing tests. If all tests are passing, we may have high confidence that the system works.

In the parlance of Sergei Rogovtcev, we can easily unit test component B because it's composed from pure functions.

How do we unit test component A, though? With Mocks and Stubs, you can prove that the interaction works as intended. The keyword here is prove. If you assume that component B works correctly, 'all' you have to do is to demonstrate that component A correctly interacts with component B. I used to do that all the time and called it data-flow verification or structural inspection. The idea was that if you could demonstrate that component A correctly interacts with any LSP-compliant implementation of component B, and then also demonstrate that in reality (when composed in the Composition Root) component A is composed with a component B that has also been demonstrated to work correctly, then the (sub-)system works correctly.

This is almost like a mathematical proof. First prove lemma B, then prove theorem A using lemma B. Finally, state corollary C: b is a special case handled by lemma B, so therefore a is covered by theorem A. Q.E.D.

It's a logical and deductive approach to the problem of verifying the composition of the whole from verified parts. It's almost mathematical in the sense that it tries to erect an axiomatic system.

It's also fundamentally flawed.

I didn't understand that a decade ago, and in practice, the method worked well enough - apart from all the problems stemming from poor encapsulation. The problem with that approach is that an axiomatic system is only as strong as its axioms. What are the axioms in this system? The axioms, or premises, are that each of the components (A and B) are already correct. Based on these premises, this testing approach then proves that the composition is also correct.

How do we know that the components work correctly?

In this context, the answer is that they pass all tests. This, however, doesn't constitute any kind of proof. Rather, this is experimental knowledge, more reminiscent of science than of mathematics.

Why are we trying to prove, then, that composition works correctly? Why not just test it?

This observation cuts to the heart of the epistemology of testing. How do we know that software works? Typically not by proving it correct, but by subjecting it to experiments. As I've also outlined in Code That Fits in Your Head, we can regard automated tests as scientific experiments that we repeat over and over.

Integration testing #

To outline the argument so far: While you can use Mocks and Spies to verify that a component correctly interacts with another component, this may be overkill. You're essentially trying to prove a conjecture based on doubtful evidence.

Does it really matter that two components interact correctly? Aren't the components implementation details? Do users care?

Users and other stakeholders care about the behaviour of the software system. Why not test that?

This is, unfortunately, easier said than done. Sergei Rogovtcev strongly implies that he isn't keen on integration testing. While he doesn't explicitly state why, there are good reasons to be wary of integration testing. As J.B. Rainsberger eloquently explained, a major problem with integration testing is the combinatorial explosion of test cases. If you ought to write 53,000 test cases to cover all combinations of pathways through integrated components, which test cases do you write? Surely not all 53,000.

J.B. Rainsberger's argument is that if you're going to write no more than a dozen unit tests, you're unlikely to cover enough test cases to be confident that the system works.

What if, however, you could write hundreds or thousands of test cases?

Property-based testing #

You may recall that the premise of this article is functional programming (FP), where property-based testing is a common testing technique. While you can, to a degree, also use this technique in object-oriented programming (OOP), it's often difficult because of side effects and non-deterministic behaviour.

When you write a property-based test, you write a single piece of code that evaluates a property of the SUT. The property looks like a parametrised unit test; the difference is that the input is generated randomly, but in a fashion you can control. This enables you to write hundreds or thousands of test cases without having to write them explicitly.

Thus, epistemologically, you can use property-based testing with integrated components to produce confidence that the (sub-)system works. In practice, I find that the confidence I get from this technique is at least as high as the one I used to get from unit testing with Stubs and Spies.

Examples #

All of this is abstract and theoretical, I realise. An example would be handy right about now. Such examples, however, are complex enough to warrant their own articles:

- Confidence from Facade Tests

- An abstract example of refactoring from interaction-based to property-based testing

- A restaurant example of refactoring from example-based to property-based testing

- Refactoring pure function composition without breaking existing tests

- When is an implementation detail an implementation detail?

Sergei Rogovtcev was kind enough to furnish a rather abstract, but minimal and self-contained, example. I'll go through that first, and then follow up with a more realistic example.

Conclusion #

How do you know that a software system works correctly? Ultimately, if it behaves in the way it's supposed to, it works correctly. Testing an entire system from the outside, however, is rarely viable in itself. The number of possible test cases is just too large.

You can partially address that problem by decomposing the system into components. You can then test the components individually, and verify that they interact correctly. This last part is the topic of this article. A common way to to address this problem is to use Mocks and Spies to prove interactions correct. It does solve the problem of correctness quite neatly, but has the undesirable side effect of making the tests brittle.

An alternative is to use property-based testing to verify that the components integrate correctly. Rather than something that looks like a proof, this is a question of numbers. Throw enough random test cases at the system, and you'll be confident that it works. How many? Enough.

Next: Confidence from Facade Tests.

Comments

First of all, let me thank you for taking time and effort to discuss this.

There's a minor point about integration testing:

The situation is somewhat more complicated: in fact, I tend to have at least a few integration tests for a feature I'm involved with, starting the coverage from the happy paths (the minimum requirement being to verify that we've wired correctly as many components as can be verified), and then, if possible, extending to error paths, edge cases and so on. Even the code from my email originally had integration tests covering all the outcomes for a single rendering (browser). The problem that I've faced then, and which prompted my question, was exactly the one that you quote from J.B. Rainsberger: combinatorial explosion. As soon as I decided to cover a second rendering (mobile), I saw that I needed to replicate the setups for outcomes (success/fail/missing), but modify the asserts for their rendering. And then again the same for the native client. Unit tests, even with their ungainly break in encapsulation, gave the simple appeal of writing less code...

Hopefully, this seem to be the very same premise that you explore towards the end of your post, leading to the property-based testing - which I was trying to incorporate into my toolset for quite some time, but was always somewhat baffled at how it should work and integrate into object-oriented (and C#-based) code. So I'm very much looking forward for your next installment in this series.

And again, thank you for exploring these matters.

Sergei, thank you for writing. I hope that this small series of articles will be able to at least give you some ideas. I am, however, concerned that I may miss the mark.

When discussing problems like this, there's always a risk that the examples we look at are too simple; that they don't adequately represent the real world. For instance, we may look at the example code in the next few articles and calculate how well we've covered all combinations.

Perhaps we may find that the combinatorial 'explosion' is only in the ten-thousands, which is within reasonable reach of well-written properties.

Then, when we come back to our 'real' problems, the combinatorial explosion may be orders of magnitudes larger. You can easily ask a property-based framework to run a property millions of time, but it'll take time. Perhaps this makes the tests so slow that it's not a practical solution.

All that said, I think that not all is lost. Part of the solution, however, may be found elsewhere.

The more I learn about functional programming (FP), the more I'm amazed at the alternative mindset it offers. Solutions that look in one way in object-oriented programming (OOP) may look completely different in FP. You've probably noticed this yourself. Often, you have to turn a problem on its head to see it 'the FP way'.

The following is something that I've not yet thought through rigorously, so perhaps there are flaws in my thinking. I offer it for peer review here.

OOP composition tends to be 'deep'. If we think of object composition as a directed (acyclic, hopefully!) graph, typical OOP composition might resemble a graph where each node has only few children, but the distance from the root to each leaf is great. Since, every time you compose two objects, you have to multiply the number of pathways, this gives you this combinatorial explosion we've discussed. The deeper the graph, the worse it is.

In FP I typically find myself composing functions in a more shallow fashion. Instead of having functions that call other functions that call other functions, etc. I tend to have functions that return values that I then pass to other functions, and so on. This produces a shallower and wider composition graph. Doesn't it also reduce the combinations that we need to consider for testing?

I haven't subjected this idea to a more formal analysis yet, so this may be wrong. If I'm right, though, this could mean that property-based testing is still a viable solution to the problem.

Identifying useful properties is another problem that you also bring up, particularly in the context of OOP. So far, property-based testing is more prevalent in FP, and perhaps there's a reason for that.

It seems to me that there's a connection between property-based testing and encapsulation. Essentially, a property is an executable description of some invariant, or pre- or post-condition. Most real-world object-oriented code I've seen, however, isn't encapsulated. If you have poor encapsulation, it's no wonder that it's hard to identify useful properties.

Even so, Identifying good properties is a skill that you have to learn. It's fairly easy to construct properties that, in a sense, 'reproduce the implementation'. The challenge is to avoid that, and that's not always easy. As an example, it took me years before I found a good way to express properties of FizzBuzz without repeating the implementation.

Intuitively I'd say that it shouldn't (reduce), because in the end the number of combinations that we consider for testing is the number states our SUT can be in, which is defined as a combination of all its inputs. But I may, of course, miss something important here.

My own opinion on this, coming from a short-ish brush with FP, is that FP, or, more precisely, more expressive type systems, reduce the number of combinations by reducing the number of possible inputs by the virtue of more expressive types. My favorite example is that even less expressive type system, one with simple int and string instead of all-encompassing var/object, allows us to get rid off all the tests where we pass "foo" to a function that only works on numbers. Explicit nullability gets rid of all the null-related test-cases (and we get an indication where we lack such cases for null-accepting functions). This can be continued by adding more and more cases until we arrive at the (in)famous "if it compiles, is works".

I don't remember whether I've included this guard case in my original email, but I definitely remember thinking of mentioning that I'm confined to a less-expressive type system of C#. Even comparing to F# (as I remember it from my side studies), I can see how some tests can be made redundant by, for example, introducing a sum type and then relying on compiler to check for exhaustive match. Sometimes I wonder what would a more expressive type system do to these problems...

Sergei, thank you for writing. A more expressive type system certainly does reduce the amount of testing required. While I prefer F#, the good news is that most of what F# can do, C# can do, too. Everything is just more verbose in C#. The main stumbling block that people usually complain about is the lack of sum types, but you can use Visitors as sum types. You get the same benefits as with F# discriminated unions, except with much more ceremony.