ploeh blog danish software design

Partial Type Name Role Hint

This article describes how object roles can be indicated by parts of a type name.

In my overview article on Role Hints I described how making object roles explicit can help make code more object-oriented. One way code can convey information about the role played by an object is to let a part of a class' name convey that information. This is often very useful when using Convention over Configuration.

While a class can have an elaborate and concise name, a part of that name can communicate a particular role. If the name is complex enough, it can hint at multiple roles.

Example: Selecting a shipping Strategy

As an example, consider the shipping Strategy selection problem from a previous post. Does attribute-based metadata like this really add any value?

    [HandlesShippingMethod(ShippingMethod.Express)]
    public class ExpressShippingCostCalculator : IBasketCalculator

Notice how the term Express appears twice on two lines of code. You could successfully argue that the DRY principle is being violated here. This becomes even more apparent when considering the detached metadata example. Here's a static way to populate the map (in F# just because I can):

    let map = Dictionary<ShippingMethod, IBasketCalculator>()
    map.Add(ShippingMethod.Standard,
                           StandardShippingCostCalculator())
    map.Add(ShippingMethod.Express,
                           ExpressShippingCostCalculator())
    map.Add(ShippingMethod.PriceSaver,
                           PriceSaverShippingCostCalculator())

This code snippet uses some slightly unorthodox (but still valid) formatting to highlight the problem. Instead of a single statement per shipping method, you could just as well write an algorithm that populates this map based on the first part of each calculator's name. Follow that train of thought to its logical conclusion, and you don't even need the map:

    public class ShippingCostCalculatorFactory : IShippingCostCalculatorFactory
    {
        private readonly IEnumerable<IBasketCalculator> candidates;

        public ShippingCostCalculatorFactory(
            IEnumerable<IBasketCalculator> candidates)
        {
            this.candidates = candidates;
        }

        public IBasketCalculator GetCalculator(ShippingMethod shippingMethod)
        {
            return (from c in this.candidates
                    let t = c.GetType()
                    where t.Name.StartsWith(shippingMethod.ToString())
                    select c).First();
        }
    }

This implementation uses the start of the type name of each candidate as a Role Hint. The ExpressShippingCostCalculator already effectively indicates that it calculates shipping cost for the Express shipping method.
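The intermediate step mentioned earlier, an algorithm that populates the map from the first part of each calculator's name, might look roughly like the following sketch. The `ConventionMap` class and its `Create` method are hypothetical names introduced for illustration, and `ShoppingBasket`, `IBasketCalculator` and the calculators are minimal stand-ins for the types used in this series.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum ShippingMethod { Standard = 0, Express, PriceSaver }

// Minimal stand-ins for the types used in the article.
public class ShoppingBasket { }

public interface IBasketCalculator
{
    int CalculatePrice(ShoppingBasket basket);
}

public class StandardShippingCostCalculator : IBasketCalculator
{
    public int CalculatePrice(ShoppingBasket basket) { return 42; }
}

public class ExpressShippingCostCalculator : IBasketCalculator
{
    public int CalculatePrice(ShoppingBasket basket) { return 1337; }
}

// Hypothetical helper; not part of the original example.
public static class ConventionMap
{
    public static IDictionary<ShippingMethod, IBasketCalculator> Create(
        IEnumerable<IBasketCalculator> candidates)
    {
        var map = new Dictionary<ShippingMethod, IBasketCalculator>();
        foreach (ShippingMethod method in Enum.GetValues(typeof(ShippingMethod)))
        {
            // The start of the type name is the Role Hint.
            var match = candidates.FirstOrDefault(
                c => c.GetType().Name.StartsWith(method.ToString()));
            if (match != null)
                map.Add(method, match);
        }
        return map;
    }
}
```

A shipping method without a matching candidate is simply left out of the map in this sketch. Note also that a prefix match becomes ambiguous if one enum member name is itself the start of another.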

This is an example of Convention over Configuration. Follow a simple naming convention, and things just work. This isn't an irreversible decision. If, in the future, you discover that you need a more elaborate selection algorithm, you can always modify the ShippingCostCalculatorFactory class (or, if you wish to adhere to the Open/Closed Principle, add an alternative implementation of IShippingCostCalculatorFactory).

Example: ASP.NET MVC Controllers

The default routing algorithm in ASP.NET MVC works this way. An incoming request to /basket/1234 is handled by a BasketController instance, /product/3457 by a ProductController instance, and so on.

The ASP.NET Web API works the same way, too.
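To make the naming convention concrete, here is a minimal sketch of how a URL segment could be matched to a type by name. It is not the framework's actual implementation; `ControllerNameConvention`, `FindControllerType` and the two empty controller classes are hypothetical and exist only for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical controllers; in a real application they would derive
// from the framework's controller base class.
public class BasketController { }
public class ProductController { }

// Hypothetical helper that illustrates the convention only.
public static class ControllerNameConvention
{
    // Maps the first URL segment to a type named "<Segment>Controller".
    public static Type FindControllerType(
        string path, IEnumerable<Type> candidates)
    {
        var segment = path
            .Split(new[] { '/' }, StringSplitOptions.RemoveEmptyEntries)
            .FirstOrDefault();
        if (segment == null)
            return null;

        return candidates.FirstOrDefault(t => string.Equals(
            t.Name,
            segment + "Controller",
            StringComparison.OrdinalIgnoreCase));
    }
}
```

The `Controller` suffix is the part of the type name that hints at the role, while the prefix selects the specific instance.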

Summary

Using a part of a type name as a Role Hint is very common when using Convention over Configuration. Many developers react strongly against this approach because they feel that the loss of type safety and the use of Reflection makes this a bit 'too magical' for their tastes. However, even when using attributes, you can easily forget to add an attribute to a class, so in the end you must rely on testing to be sure that everything works. The type safety of attributes is often an illusion.

The great benefit of Convention over Configuration is that it significantly cuts down on the number of moving parts. It also 'forces' you (and your team) to write more consistent code, because the overall application is simply not going to work if you don't follow the conventions.

Role Interface Role Hint

This article describes how object roles can be indicated by the use of Role Interfaces.

In my overview article on Role Hints I described how making object roles explicit can help make code more object-oriented. One way code can convey information about the role played by an object is by implementing one or more Role Interfaces. As the name implies, a Role Interface describes a role an object can play. Classes can implement more than one Role Interface.

Example: Selecting a shipping Strategy

As an example, consider the shipping Strategy selection problem from the previous post. That example seemed to suffer from the Feature Envy smell because the attribute had to expose the handled shipping method as a property in order to enable the selection mechanism to pick the right Strategy.

Another alternative is to define a Role Interface for matching objects to shipping methods:

    public interface IHandleShippingMethod
    {
        bool CanHandle(ShippingMethod shippingMethod);
    }

A shipping cost calculator can implement the IHandleShippingMethod interface to participate in the selection process:

    public class ExpressShippingCostCalculator : 
        IBasketCalculator, IHandleShippingMethod
    {
        public int CalculatePrice(ShoppingBasket basket)
        {
            /* Perform some complicated price calculation based on the
             * basket argument here. */
            return 1337;
        }

        public bool CanHandle(ShippingMethod shippingMethod)
        {
            return shippingMethod == ShippingMethod.Express;
        }
    }

An ExpressShippingCostCalculator object can play one of two roles: It can calculate basket prices and it can handle basket calculations related to shipping methods. It doesn't have to expose as a property the shipping method it handles, which enables some more sophisticated scenarios like handling more than one shipping method, or handling a certain shipping method only if some other set of conditions is also met.

You can implement the selection algorithm like this:

    public class ShippingCostCalculatorFactory : IShippingCostCalculatorFactory
    {
        private readonly IEnumerable<IBasketCalculator> candidates;

        public ShippingCostCalculatorFactory(
            IEnumerable<IBasketCalculator> candidates)
        {
            this.candidates = candidates;
        }

        public IBasketCalculator GetCalculator(ShippingMethod shippingMethod)
        {
            return (from c in this.candidates
                    let handles = c as IHandleShippingMethod
                    where handles != null
                    && handles.CanHandle(shippingMethod)
                    select c).First();
        }
    }

Notice that because the implementation of CanHandle can be more sophisticated and conditional on the context, more than one of the candidates may be able to handle a given shipping method. This means that the order of the candidates matters. Instead of selecting a Single item from the candidates, the implementation now selects the First. This provides a fall-through mechanism where a preferred, but specialized candidate is asked before less preferred, general-purpose candidates.

This particular definition of the IHandleShippingMethod interface suffers from the same tight coupling to the ShippingMethod enum as the previous example. One fix may be to define the shipping method as a string, but you could still successfully argue that even implementing an interface such as IHandleShippingMethod in a Domain Model object mixes architectural concerns. Detached metadata might still be a better option.

Summary

As the name implies, a Role Interface can be used as a Role Hint. However, you must be wary of pulling in disconnected architectural concerns. Thus, while a class can implement several Role Interfaces, it should only implement interfaces defined in appropriate layers. (The word 'layer' here is used in a loose sense, but the same considerations apply for Ports and Adapters: don't mix Port types and Adapter types.)

Comments

Could you expand upon how you might implement the fall-through mechanism you mentioned in your IShippingCostCalculatorFactory implementation, where more than one candidate can handle the shippingMethod?

How would you sort your IEnumerable<IBasketCalculator> candidates in GetCalculator() so that the candidate returned by First() is the one specifically meant to handle the ShippingMethod when one exists, and the default implementation when a specific one doesn't exist?

I considered using FirstOrDefault(), then returning the default implementation if the result of the query was nothing, but my default IHandleShippingMethod implementation always returns True from CanHandle() - I don't know what other value it could return.

You could have super-specialized IBasketCalculator implementations that (e.g.) are only active at certain times of day, and you could have the ones I've shown here, and then you could have a fallback that implements IHandleShippingMethod.CanHandle by simply returning true no matter what the input is. If you put this fallback implementation last in the injected candidates, it's only going to be picked up by the First method if no other candidate (before it) returns true from CanHandle.

Thus, there's no reason to sort the candidates within GetCalculator - in fact, it would be an error to do so. As I wrote above, "the order of the candidates matters."
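A minimal sketch of that arrangement could look like the following. The `DefaultShippingCostCalculator` fallback and the `CompositionRoot` helper are hypothetical names, the other types are reduced stand-ins, and `Select` repeats the query from GetCalculator so that the sketch stands on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum ShippingMethod { Standard = 0, Express, PriceSaver }
public class ShoppingBasket { }

public interface IBasketCalculator
{
    int CalculatePrice(ShoppingBasket basket);
}

public interface IHandleShippingMethod
{
    bool CanHandle(ShippingMethod shippingMethod);
}

public class ExpressShippingCostCalculator :
    IBasketCalculator, IHandleShippingMethod
{
    public int CalculatePrice(ShoppingBasket basket) { return 1337; }

    public bool CanHandle(ShippingMethod shippingMethod)
    {
        return shippingMethod == ShippingMethod.Express;
    }
}

// Hypothetical fallback: accepts any shipping method.
public class DefaultShippingCostCalculator :
    IBasketCalculator, IHandleShippingMethod
{
    public int CalculatePrice(ShoppingBasket basket) { return 42; }
    public bool CanHandle(ShippingMethod shippingMethod) { return true; }
}

public static class CompositionRoot
{
    // The order is decided here, once: specialized candidates first,
    // the fallback last.
    public static IBasketCalculator[] CreateCandidates()
    {
        return new IBasketCalculator[]
        {
            new ExpressShippingCostCalculator(),
            new DefaultShippingCostCalculator()
        };
    }

    // Same query as in ShippingCostCalculatorFactory.GetCalculator.
    public static IBasketCalculator Select(
        IEnumerable<IBasketCalculator> candidates,
        ShippingMethod shippingMethod)
    {
        return (from c in candidates
                let handles = c as IHandleShippingMethod
                where handles != null
                && handles.CanHandle(shippingMethod)
                select c).First();
    }
}
```

With this ordering, a request for Express reaches the specialized calculator first, while any other shipping method falls through to the fallback.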

Metadata Role Hint

This article describes how object roles can be indicated by metadata.

In my overview article on Role Hints I described how making object roles explicit can help make code more object-oriented. One way code can convey information about the role played by an object is by leveraging metadata. In .NET that would often take the form of attributes, but you can also maintain the metadata in a separate data structure, such as a dictionary.

Metadata can provide useful Role Hints when there are many potential objects to choose from.

Example: Selecting a shipping Strategy

Consider a web shop. When you take your shopping basket to checkout you are presented with a choice of shipping methods, e.g. Standard, Express, and Price Saver. Since this is a web application, the user's choice must be communicated to the application code as some sort of primitive type. In this example, assume an enum:

    public enum ShippingMethod
    {
        Standard = 0,
        Express,
        PriceSaver
    }

Calculating the shipping cost for a basket may be a complex operation that involves the total weight and size of the basket, as well as the distance it has to travel. If the web shop has geographically distributed warehouses, it may be cheaper to ship from a warehouse closer to the customer. However, if the closest warehouse doesn't have all items in stock, there may be a better way to optimize profit. Again, that calculation likely depends on the shipping method chosen by the customer. Thus, a common solution is to select a shipping cost calculation Strategy based on the user's selection. A simplified example may look like this:

    public class BasketCostCalculator
    {
        private readonly IShippingCostCalculatorFactory shippingFactory;

        public BasketCostCalculator(
            IShippingCostCalculatorFactory shippingCostCalculatorFactory)
        {
            this.shippingFactory = shippingCostCalculatorFactory;
        }

        public int CalculatePrice(
            ShoppingBasket basket,
            ShippingMethod shippingMethod)
        {
            var shippingCalculator =
                this.shippingFactory.GetCalculator(shippingMethod);
            return shippingCalculator.CalculatePrice(basket) + basket.Total;
        }
    }

A naïve attempt at an implementation of the IShippingCostCalculatorFactory may involve a switch statement:

    public IBasketCalculator GetCalculator(ShippingMethod shippingMethod)
    {
        switch (shippingMethod)
        {
            case ShippingMethod.Express:
                return new ExpressShippingCostCalculator();
            case ShippingMethod.PriceSaver:
                return new PriceSaverShippingCostCalculator();
            case ShippingMethod.Standard:
            default:
                return new StandardShippingCostCalculator();
        }
    }

Now, before you pull Refactoring at me and tell me to replace the enum with a polymorphic type, I must remind you that at the boundaries, applications aren't object-oriented. At some place, close to the application boundary, the application must translate an incoming primitive to a polymorphic type. That's the responsibility of something like the ShippingCostCalculatorFactory.

There are several problems with the above implementation of IShippingCostCalculatorFactory. In the simplified example code, all three implementations of IBasketCalculator have default constructors, but that's not likely to be the case. Recall that calculating shipping cost involves complicated business rules. Not only are those classes likely to need all sorts of configuration data to determine price per weight range, etc. but they might even need to perform lookups against external systems - such as getting a quote from an external carrier. In other words, the ExpressShippingCostCalculator, PriceSaverShippingCostCalculator, and StandardShippingCostCalculator are unlikely to have default constructors.

There are various ways to implement such an Abstract Factory, but none of them may fit perfectly. Another option is to associate metadata with each implementation. Using attributes for such purpose is the classic .NET solution:

    public class HandlesShippingMethodAttribute : Attribute
    {
        private readonly ShippingMethod shippingMethod;

        public HandlesShippingMethodAttribute(ShippingMethod shippingMethod)
        {
            this.shippingMethod = shippingMethod;
        }

        public ShippingMethod ShippingMethod
        {
            get { return this.shippingMethod; }
        }
    }

You can now adorn each IBasketCalculator implementation with this attribute:

    [HandlesShippingMethod(ShippingMethod.Express)]
    public class ExpressShippingCostCalculator : IBasketCalculator
    {
        public int CalculatePrice(ShoppingBasket basket)
        {
            /* Perform some complicated price calculation based on the
             * basket argument here. */
            return 1337;
        }
    }

Obviously, the other IBasketCalculator implementations get a similar attribute, only with a different ShippingMethod value. This effectively provides a hint about the role of each IBasketCalculator implementation. Not only is the ExpressShippingCostCalculator a basket calculator; it specifically handles the Express shipping method.

You can now implement IShippingCostCalculatorFactory using these Role Hints:

    public class ShippingCostCalculatorFactory : IShippingCostCalculatorFactory
    {
        private readonly IEnumerable<IBasketCalculator> candidates;

        public ShippingCostCalculatorFactory(
            IEnumerable<IBasketCalculator> candidates)
        {
            this.candidates = candidates;
        }

        public IBasketCalculator GetCalculator(ShippingMethod shippingMethod)
        {
            return (from c in this.candidates
                    let handledMethod = c
                        .GetType()
                        .GetCustomAttributes<HandlesShippingMethodAttribute>()
                        .SingleOrDefault()
                    where handledMethod != null
                    && handledMethod.ShippingMethod == shippingMethod
                    select c).Single();
        }
    }

This implementation is created with a sequence of IBasketCalculator candidates and then selects the matching candidate upon each GetCalculator method call. (Notice that I decided that candidates was a better Role Hint than e.g. calculators.) To find a match, the method looks through the candidates and examines each candidate's [HandlesShippingMethod] attribute.

You may think that this is horribly inefficient because it virtually guarantees that the majority of the injected candidates are never going to be used in this method call, but that's not a concern.

Example: detached metadata

The use of attributes has been a long-standing design principle in .NET, but seems to me to offer a poor combination of tight coupling and Primitive Obsession. To be fair, it looks like even in the BCL, the more modern APIs are less based on attributes, and more based on other alternatives. In other posts in this series on Role Hints I'll describe other ways to match objects, but even when working with metadata there are other alternatives.

One alternative is to decouple the metadata from the type itself. There's no particular reason the metadata should be compiled into each type (and sometimes, if you don't own the type in question, you may not be able to do this). Instead, you can define the metadata in a simple map. Remember, 'metadata' simply means 'data about data'.

    public class ShippingCostCalculatorFactory : IShippingCostCalculatorFactory
    {
        private readonly IDictionary<ShippingMethod, IBasketCalculator> map;

        public ShippingCostCalculatorFactory(
            IDictionary<ShippingMethod, IBasketCalculator> map)
        {
            this.map = map;
        }

        public IBasketCalculator GetCalculator(ShippingMethod shippingMethod)
        {
            return this.map[shippingMethod];
        }
    }

That's a much simpler implementation than before, and only requires that you supply the appropriately populated dictionary in the application's Composition Root.
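As a rough illustration of what supplying the dictionary from the Composition Root could involve, consider the sketch below. The `CompositionRoot` class and its `CreateFactory` method are hypothetical, and the calculators are stand-ins with default constructors and placeholder prices; real ones would most likely take dependencies of their own.

```csharp
using System;
using System.Collections.Generic;

public enum ShippingMethod { Standard = 0, Express, PriceSaver }
public class ShoppingBasket { }

public interface IBasketCalculator
{
    int CalculatePrice(ShoppingBasket basket);
}

public interface IShippingCostCalculatorFactory
{
    IBasketCalculator GetCalculator(ShippingMethod shippingMethod);
}

// Stand-in calculators; the returned prices are placeholders.
public class StandardShippingCostCalculator : IBasketCalculator
{
    public int CalculatePrice(ShoppingBasket basket) { return 42; }
}

public class ExpressShippingCostCalculator : IBasketCalculator
{
    public int CalculatePrice(ShoppingBasket basket) { return 1337; }
}

public class PriceSaverShippingCostCalculator : IBasketCalculator
{
    public int CalculatePrice(ShoppingBasket basket) { return 7; }
}

public class ShippingCostCalculatorFactory : IShippingCostCalculatorFactory
{
    private readonly IDictionary<ShippingMethod, IBasketCalculator> map;

    public ShippingCostCalculatorFactory(
        IDictionary<ShippingMethod, IBasketCalculator> map)
    {
        this.map = map;
    }

    public IBasketCalculator GetCalculator(ShippingMethod shippingMethod)
    {
        return this.map[shippingMethod];
    }
}

// Hypothetical Composition Root: the only place that knows which
// calculator belongs to which shipping method.
public static class CompositionRoot
{
    public static IShippingCostCalculatorFactory CreateFactory()
    {
        var map = new Dictionary<ShippingMethod, IBasketCalculator>
        {
            { ShippingMethod.Standard, new StandardShippingCostCalculator() },
            { ShippingMethod.Express, new ExpressShippingCostCalculator() },
            { ShippingMethod.PriceSaver, new PriceSaverShippingCostCalculator() }
        };
        return new ShippingCostCalculatorFactory(map);
    }
}
```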

Summary

Metadata, such as attributes, can be used to indicate the role played by an object. This is particularly useful when you have to select among several candidates that all have the same type. However, while the Design Guidelines for Developing Class Libraries still seems to favor the use of attributes for metadata, this isn't the most modern approach. One of the problems is that it is likely to tightly couple the resulting code. In the above example, the ShippingMethod enum is a pure boundary concern. It may be defined in the UI layer (or module) of the application, while you might prefer the shipping cost calculators to be implemented in the Domain Model (since they contain complicated business logic). However, using the ShippingMethod enum in an attribute and placing that attribute on each implementation couples the Domain Model to the user interface.

One remedy for the problem of tight coupling is to express the selected shipping method as a more general type, such as a string or integer. Another is to use detached metadata, as in the second example.

Comments

Bottom line: I've landed on just using the container directly inside of these factories. I am completely on board with the idea of a single call to Resolve() on the container, but this is one case where I have decided it's "easier" to consciously violate that principle. I feel like it's a bit more explicit--developers at least know to look in the registration code to figure out which implementation will be returned. Using a map just creates one more layer that essentially accomplishes the same thing.

A switch statement is even better and more explicit (as an aside, I don't know why the switch statement gets such a bad rap). But as you noted, a switch statement won't work if the calculator itself has dependencies that need resolution. In the past, I have actually injected the needed dependencies into the factory, and supplied them to the implementations via new().

Anyway, I'm enjoying this series and getting some nice insights from it.

So an attribute on the implementation class can serve two important purposes here -- first, it does indeed make the intended role of the class explicit, and second, it can give your IoC container registration hints, particularly helpful when using assembly scanning / convention-based registrations.

The detached metadata "injecting mapping dictionary from composition root" sample is great!

In my practice I did this kind of mapping with inheritance, and the result is a lot of factories all over the code that know about the DI container (because of lazy initialization with many dependencies inside each factory).

With some modifications, like generic parameters and lazy initialization, injecting a dictionary through the constructor of one such universal factory class really could be a great solution for most cases.

NSubstitute Auto-mocking with AutoFixture

This post announces the availability of the NSubstitute-based Auto-mocking extension for AutoFixture.

Almost two and a half years ago I added an Auto-mocking extension to AutoFixture, using Moq. Since then, a couple of other Auto-mocking extensions have been added, and this Sunday I accepted a pull request for Auto-mocking with NSubstitute, bringing the total number of Auto-mocking extensions for AutoFixture up to four:

- AutoFixture.AutoMoq

- AutoFixture.AutoRhinoMocks

- AutoFixture.AutoFakeItEasy

- AutoFixture.AutoNSubstitute

Kudos to Daniel Hilgarth for creating this extension, and for offering a pull request of high quality!

Argument Name Role Hint

This article describes how object roles can be indicated by argument or variable names.

In my overview article on Role Hints I described how making object roles explicit can help make code more object-oriented. One way code can convey information about the role played by an object is by proper naming of variables and method arguments. In many ways, this is the converse view of a Type Name Role Hint.

To reiterate, the Design Guidelines for Developing Class Libraries provides this rule:

Consider using names based on a parameter's meaning rather than names based on the parameter's type.

As described in the post about Type Name Role Hints, this rule makes sense when the argument type is too generic to provide enough information about role played by an object.

Example: unit test variables

Previously I've described how explicitly naming unit test variables after their roles clearly communicates to the Test Reader the purpose of each variable.

    [Fact]
    public void GetUserNameFromProperSimpleWebTokenReturnsCorrectResult()
    {
        // Fixture setup
        var sut = new SimpleWebTokenUserNameProjection();

        var request = new HttpRequestMessage();
        request.Headers.Authorization =
            new AuthenticationHeaderValue(
                "Bearer",
                new SimpleWebToken(new Claim("userName", "foo")).ToString());
        // Exercise system
        var actual = sut.GetUserName(request);
        // Verify outcome
        Assert.Equal("foo", actual);
        // Teardown
    }

Currently I prefer these well-known variable names in unit tests:

- sut

- expected

- actual

Further variables can be named on a case-by-case basis, like the request variable in the above example.

Example: Selecting next Wizard Page

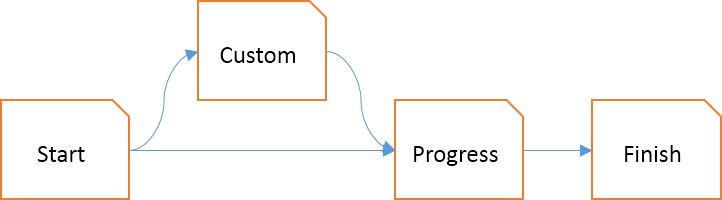

Consider a Wizard in a rich client, implemented using the MVVM pattern. A Wizard can be modeled as a 'Graph of Responsibility'. A simple example may look like this:

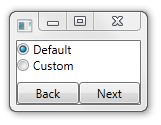

This is a rather primitive Wizard where the start page asks you whether you want to proceed in a 'default' or 'custom' way:

If you select Default and press Next, the Wizard will immediately proceed to the Progress step. If you select Custom, the Wizard will first show you the Custom step, where you can tweak your experience. Subsequently, when you press Next, the Progress step is shown.

Imagine that each Wizard page must implement the IWizardPage interface:

    public interface IWizardPage : INotifyPropertyChanged
    {
        IWizardPage Next { get; }

        IWizardPage Previous { get; }
    }

The Start page's View Model must wait for the user's selection and then serve the correct Next page. Using the DIP, the StartWizardPageViewModel doesn't need to know about the concrete 'custom' and 'progress' steps:

    private readonly IWizardPage customPage;
    private readonly IWizardPage progressPage;
    private bool isCustomChecked;

    public StartWizardPageViewModel(
        IWizardPage progressPage,
        IWizardPage customPage)
    {
        this.progressPage = progressPage;
        this.customPage = customPage;
    }

    public IWizardPage Next
    {
        get
        {
            if (this.isCustomChecked)
                return this.customPage;

            return this.progressPage;
        }
    }

Notice that the StartWizardPageViewModel depends on two different IWizardPage objects. In such a case, the interface name is insufficient to communicate the role of each dependency. Instead, the argument names progressPage and customPage are used to convey the role of each object. The role of the customPage is more specific than just being a Wizard page - it's the 'custom' page.

Example: Message Router

While you may not be building Wizard-based user interfaces with MVVM, I chose the previous example because the problem domain (that of modeling a Wizard UI) is something most of us can relate to. Another set of examples is much more general-purpose in nature, but may feel more abstract.

Due to the multicore problem, asynchronous messaging architectures are becoming increasingly common - just consider the growing popularity of CQRS. In a Pipes and Filters architecture, Message Routers are central. Many variations of Message Routers presented in Enterprise Integration Patterns provide examples in C# where the alternative outbound channels are identified with Role Hints such as outQueue1, outQueue2, etc. See e.g. pages 83, 233, 246, etc. Due to copyright reasons, I'm not going to repeat them here, but here's a generic Message Router that does much the same:

    public class ConditionalRouter<T>
    {
        private readonly IMessageSpecification<T> specification;
        private readonly IChannel<T> firstChannel;
        private readonly IChannel<T> secondChannel;

        public ConditionalRouter(
            IMessageSpecification<T> specification,
            IChannel<T> firstChannel,
            IChannel<T> secondChannel)
        {
            this.specification = specification;
            this.firstChannel = firstChannel;
            this.secondChannel = secondChannel;
        }

        public void Handle(T message)
        {
            if (this.specification.IsSatisfiedBy(message))
                this.firstChannel.Send(message);
            else
                this.secondChannel.Send(message);
        }
    }

Once again, notice how the ConditionalRouter selects between the two roles of firstChannel and secondChannel based on the outcome of the Specification. The constructor argument names carry (slightly) more information about the role of each channel than the interface name.
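To see the two roles in action, here is a small sketch that wires up the router. The interface shapes are inferred from how the router uses them, and `LongTextSpecification`, `ListChannel<T>` and `RouterExample` are hypothetical types introduced only for this illustration. The router itself is repeated so that the sketch compiles on its own.

```csharp
using System;
using System.Collections.Generic;

public interface IMessageSpecification<T> { bool IsSatisfiedBy(T message); }
public interface IChannel<T> { void Send(T message); }

// Same router as in the article.
public class ConditionalRouter<T>
{
    private readonly IMessageSpecification<T> specification;
    private readonly IChannel<T> firstChannel;
    private readonly IChannel<T> secondChannel;

    public ConditionalRouter(
        IMessageSpecification<T> specification,
        IChannel<T> firstChannel,
        IChannel<T> secondChannel)
    {
        this.specification = specification;
        this.firstChannel = firstChannel;
        this.secondChannel = secondChannel;
    }

    public void Handle(T message)
    {
        if (this.specification.IsSatisfiedBy(message))
            this.firstChannel.Send(message);
        else
            this.secondChannel.Send(message);
    }
}

// Hypothetical specification: satisfied by texts longer than 10 characters.
public class LongTextSpecification : IMessageSpecification<string>
{
    public bool IsSatisfiedBy(string message) { return message.Length > 10; }
}

// Hypothetical in-memory channel that records what it receives.
public class ListChannel<T> : IChannel<T>
{
    private readonly List<T> messages = new List<T>();
    public IList<T> Messages { get { return this.messages; } }
    public void Send(T message) { this.messages.Add(message); }
}

public static class RouterExample
{
    public static void Run(
        ListChannel<string> longTexts, ListChannel<string> shortTexts)
    {
        // Named arguments make the role of each channel visible
        // at the call site as well.
        var router = new ConditionalRouter<string>(
            new LongTextSpecification(),
            firstChannel: longTexts,
            secondChannel: shortTexts);

        router.Handle("A rather long message");
        router.Handle("Short");
    }
}
```

Both channels have the same type, so only the argument names distinguish the channel for satisfied messages from the channel for all others.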

Summary

Parameter or variable names can be used to convey information about the role played by an object. This is especially helpful when the type of the object is very general (such as string, DateTime, int, etc.), but can also be used to select among alternative objects of the same type even when the type is specific enough to adhere to the Single Responsibility and Interface Segregation principles.

Type Name Role Hints

This article describes how object roles can be indicated by type names.

In my overview article on Role Hints I described how making object roles explicit can help make code more object-oriented. When first hearing about the concept of object roles, a typical reaction is: How is that different from the class name? Doesn't the class name communicate the purpose of the class?

Sometimes it does, so this is a fair question. However, there are certainly other situations where this isn't the case at all.

Consider many primitive types: do the names int (or Int32), bool (or Boolean), Guid, DateTime, Version, string, etc. communicate anything about the roles played by instances?

In most cases, such type names provide poor hints about the roles played by the instances. Most developers already implicitly know this, and the Design Guidelines for Developing Class Libraries also provides this rule:

Consider using names based on a parameter's meaning rather than names based on the parameter's type.

Most of us can probably agree that code like this would be hard to use correctly:

public static bool TryCreate(Uri u1, Uri u2, out Uri u3)

Which values should you use for u1? Which value for u2?

Fortunately, the actual signature follows the Design Guidelines:

public static bool TryCreate(Uri baseUri, Uri relativeUri, out Uri result)

This is much better because the argument names communicate the roles the various Uri parameters play relative to each other. With the object roles provided by descriptive parameter names, the method signature is often all the documentation required to understand the proper intended use of the method.
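A short usage sketch shows how the parameter names guide the caller. The `UriExample` class and the example.com addresses are made up for illustration; `Uri.TryCreate` is the overload shown in the signature.

```csharp
using System;

public static class UriExample
{
    public static Uri Combine()
    {
        // Local variables named after the roles of the parameters.
        var baseUri = new Uri("http://example.com/catalog/");
        var relativeUri = new Uri("products/1234", UriKind.Relative);

        Uri result;
        if (!Uri.TryCreate(baseUri, relativeUri, out result))
            throw new InvalidOperationException("Could not combine the URIs.");

        // result is now http://example.com/catalog/products/1234
        return result;
    }
}
```

Swapping the two arguments would still compile, since both are of type Uri, so the names are the only guard against mixing up the roles.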

The Design Guidelines' rules sound almost universal. Are there cases when the name of a type is more informative than the argument or variable name?

Example: Uri.IsBaseOf

To stay with the Uri class for a little while longer, consider the IsBaseOf method:

public bool IsBaseOf(Uri uri)

This method accepts any Uri instance as an input parameter. The uri argument doesn't play any other role than being a Uri instance, so there's no reason that an API designer should go out of his or her way to come up with some artificial 'role' name for the parameter. In this example, the name of the type is sufficient information about the role played by the instance - or you could say that in this context the class itself and the role it plays conflate into the same name.

Example: MVC instances

If you've ever worked with ASP.NET MVC or ASP.NET Web API you may have noticed that rarely do you refer to Model, View or Controller instances with variables. Often, you just return a new model directly from the relevant Action Method:

    public ViewResult Get()
    {
        var now = DateTime.Now;
        var currentMonth = new Month(now.Year, now.Month);
        return this.View(this.reader.Query(currentMonth));
    }

In this example, notice how the model is implicitly created with a call to the reader's Query method. (However, you get a hint about the intermediary variables' roles from their names.) If we ever assign a model instance to a local variable before returning it with a call to the View method, we often tend to simply name that variable model.

Furthermore, in ASP.NET MVC, do you ever create instances of Controllers or Views (except in unit tests)? Instances of Controllers and Views are created by the framework. Basically, the role each Controller and View plays is embodied in their class names - HomeController, BookingController, BasketController, BookingViewModel, etc.

Example: Command Handler

Consider a 'standard' CQRS implementation with a single Command Handler for each Command message type in the system. The Command Handler interface might be defined like this:

    public interface ICommandHandler<T>
    {
        void Execute(T command);
    }

At this point, the type argument T could be literally any type, so the argument name command conveys the role of the object better than the type. However, once you look at a concrete implementation, the method signature materializes into something like this:

public void Execute(RequestReservationCommand command)

In the concrete case, the type name (RequestReservationCommand) carries more information about the role than the argument name (command).
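For completeness, a concrete handler could be sketched as follows. The shape of `RequestReservationCommand` (a date and a quantity) and the `RequestReservationCommandHandler` class, which merely records what it receives, are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

public interface ICommandHandler<T>
{
    void Execute(T command);
}

// Hypothetical command message; its properties are assumptions.
public class RequestReservationCommand
{
    public RequestReservationCommand(DateTime date, int quantity)
    {
        this.Date = date;
        this.Quantity = quantity;
    }

    public DateTime Date { get; private set; }
    public int Quantity { get; private set; }
}

// Hypothetical handler that only records the commands it receives.
public class RequestReservationCommandHandler :
    ICommandHandler<RequestReservationCommand>
{
    private readonly IList<RequestReservationCommand> received;

    public RequestReservationCommandHandler(
        IList<RequestReservationCommand> received)
    {
        this.received = received;
    }

    // The type name says what kind of command this is; the argument
    // name only repeats that it is a command.
    public void Execute(RequestReservationCommand command)
    {
        this.received.Add(command);
    }
}
```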

Example: Dependency Injection

With basic Dependency Injection, a common Role Hint is the type itself.

    public BasketController(
        IBasketService basketService,
        CurrencyProvider currencyProvider)
    {
        if (basketService == null)
            throw new ArgumentNullException("basketService");
        if (currencyProvider == null)
            throw new ArgumentNullException("currencyProvider");

        this.basketService = basketService;
        this.currencyProvider = currencyProvider;
    }

From the point of view of the BasketController, the type names of the IBasketService interface and the CurrencyProvider abstract base class carry all required information about the role played by these dependencies. You can tell this because the argument names simply echo the type names. In the complete system, there could conceivably be more than one implementation of IBasketService, but in the context of the BasketController, some IBasketService instance is all that is required.

Summary

The more generic a type is, the less information about role the type name itself carries. Primitives and Value Objects such as integers, strings, Guids, Uris, etc. can be used in so many ways that you should strongly consider proper naming of arguments and variables to convey information about the role played by an object. This is what the Framework Design Guidelines suggest. However, as types become increasingly specific, their names carry more information about their roles. For very specific classes, the class name itself may carry all appropriate information about the object's intended role. As a rule of thumb, expect such classes to also adhere to the Single Responsibility Principle.

Role Hints

This article provides an overview of different ways to hint at the role an object is playing.

One of the interesting points in Lean Architecture is that many so-called object-oriented languages aren't really object-oriented, but rather class-oriented. This is as true for C# as for Java. The code artifacts are classes (or interfaces, etc.); not objects.

When asked to distinguish, most of us understand that objects are instances of classes, but since the languages are centered around classes, we sometimes forget this distinction and treat objects and classes as one and the same. When this happens, we run into some of the problems that Udi Dahan describes in his excellent talk Making Roles Explicit. The solution is proposed in the same talk: make roles explicit.

So, what's a role? you might ask. A role is the purpose of an object instance in a given context. (That sounds a bit like the (otherwise rather confusingly described) concept of DCI.) An object can play more than one role during its lifetime, or a class can be instantiated in one of the roles it can play. Some objects can play only a single role.

There are several ways to hint at the role played by an object:

- Type Name. Use the name of the type (such as the class name) to hint at the role played by instances of the class. This seems to be mostly relevant for objects that can play only a single role.

- Argument Name. Use the name of an argument (or variable) to hint at the role played by an instance.

- Metadata. Use data about code (such as attributes or marker interfaces) to hint at the role played by instances of the class.

- Role Interfaces. A class can implement several Role Interfaces; clients can treat instances as the roles they require.

- Partial Type Name. Use part of the type name to hint at a role played by objects. This can be used to enable Convention over Configuration.
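As an illustration of one of these hints, the following is a minimal sketch of Role Interfaces. All the names (`IBasketReader`, `IBasketWriter`, `InMemoryBasket`, `BasketSummary`) are hypothetical and only serve to show the idea; none of them come from the articles referenced here.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical Role Interfaces: each describes one role a basket can play.
public interface IBasketReader
{
    decimal Total { get; }
}

public interface IBasketWriter
{
    void Add(decimal price);
}

// One class that can play both roles.
public class InMemoryBasket : IBasketReader, IBasketWriter
{
    private readonly List<decimal> prices = new List<decimal>();

    public decimal Total
    {
        get { return this.prices.Sum(); }
    }

    public void Add(decimal price)
    {
        this.prices.Add(price);
    }
}

// This client only requires the reader role, so that is all it asks for.
public class BasketSummary
{
    private readonly IBasketReader basket;

    public BasketSummary(IBasketReader basket)
    {
        this.basket = basket;
    }

    public string Render()
    {
        return "Total: " +
            this.basket.Total.ToString(CultureInfo.InvariantCulture);
    }
}
```

The same `InMemoryBasket` instance can be handed to `BasketSummary` as an `IBasketReader` and to some other client as an `IBasketWriter`; each client sees only the role it needs.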

Making the role of an object explicit can tip the balance in favor of true object-orientation instead of class-orientation. It will likely make your code easier to read, understand and maintain.

Zookeepers must become Rangers

For want of a nail the shoe was lost... This post explains why Zookeeper developers must become Ranger developers to escape the vicious cycle of impossible deadlines and low-quality code.

In a previous article I wrote about Ranger and Zookeeper developers. The current article is going to make no sense to you if you haven't read the previous one, but in brief, Rangers explicitly deal with versioning and forwards and backwards compatibility (henceforth called temporal compatibility) in order to produce workable code.

While reading the previous article, you may have thought that you'd rather like to be a Ranger than a Zookeeper, simply because it sounds cooler. Well, I didn't pick those names by accident :) You don't want to be a Zookeeper developer.

Zookeepers may be under the impression that, since they (or their organization) control the entire installation base of their code, they don't need to deal with the versioning aspect of software design. This is an illusion. It may seem like a little thing, but, through a chain reaction, the lack of versioning leads to impossible deadlines, slipping code quality, death marches and many unpleasant aspects of our otherwise wonderful vocation.

In order to escape the vicious cycle of low-quality code, death marches, and firefighting, Zookeepers need to explicitly deal with versioning of the software they produce, essentially turning themselves into Rangers.

In the following, I will make two assumptions about the type of software I discuss here:

- It's impossible to predict all future feature requirements.

- Applications don't exist in a vacuum. They depend on other applications, and other applications depend on them.

For the vast majority of Zoo Software, I believe these tenets to be true.

In order to understand why versioning is so important (even for Zoo Software) it's important to first understand why Zookeepers consistently disregard it.

We don't need no steenking design guidelines #

It's sometimes a bit surprising to me that (.NET) programmers are resistant to good design. There's this tome of knowledge originally published as the Framework Design Guidelines and later published online on MSDN as the Design Guidelines for Developing Class Libraries. Even if you don't care to read through it all, tools such as Visual Studio Code Analysis and FxCop (which is free) encapsulate many of those guidelines and help identify when your code diverges.

In my experience, Zookeeper resistance against the Framework Design Guidelines is perfectly exemplified by the 'rules' related to selecting between classes and interfaces:

- Do favor defining classes over interfaces.

- Do use abstract (MustInherit in Visual Basic) classes instead of interfaces to decouple the contract from implementations.

(For the record, I think this is horrible advice, but that's a discussion for another day.)

The problem with a guideline like this is that most Zookeepers react to such advice by thinking: "This isn't relevant for the kind of code I write. I control the entire install base of my code, so it's not a problem for me to introduce a breaking change. That entire knowledge base of design guidelines is targeted at another type of developers. I need not care about it."

Zookeepers fail to address versioning and temporal compatibility because they (incorrectly) assume that they can always schedule deployments of services and clients together in such a way that breaking changes never actually break anything. That may work as long as systems are monolithic and exist in a vacuum, but as soon as you start integrating systems, this disregard for versioning leads straight to hell.

Example: an internal music catalog service #

Now that you understand why Zookeepers tend to ignore versioning, it's time to understand where that attitude leads. This is best done with an example.

Imagine a team tasked with building a music catalog service for internal use. In the first release of the service a track looks like this:

    <track>
      <name>Recovery</name>
      <artist>Curve</artist>
      <album>Come Clean</album>
      <length>288</length>
    </track>

This is obviously a naïve attempt, and a bit of planning could probably have prevented the particular nasty surprise that follows. However, this is just an illustrative example, and I'm sure you've found yourself in a situation where a new requirement took you entirely by surprise - no matter how much you tried to predict the future.

After the team has released the first version of the music catalog service, it turns out that some tracks may be the result of collaborations, and thus have multiple artists. The track may also appear in multiple albums (such as compilations or greatest hits collections), or on no album at all. Thus, version 2 of a track will have to look like this:

    <track>
      <name>Under Pressure</name>
      <artists>
        <artist>David Bowie</artist>
        <artist>Queen</artist>
      </artists>
      <albums>
        <album>Hot Space</album>
        <album>Greatest Hits</album>
        <album>The Singles: 1969-1993</album>
      </albums>
      <length>242</length>
    </track>

This is obviously a breaking change, but since the team works in an organization where the entire installation base is under control, they work with the Enterprise Architecture team to schedule a release of this breaking change with all their clients.

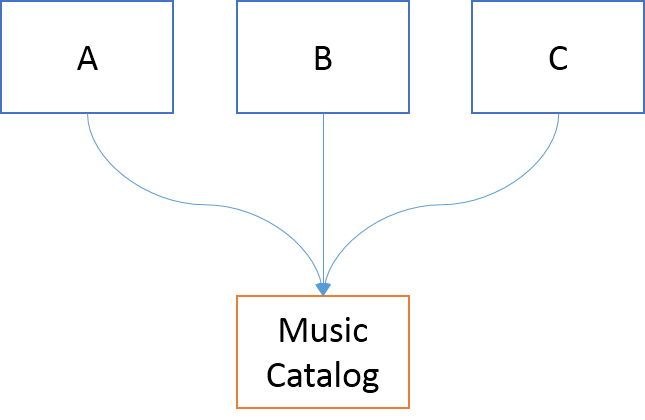

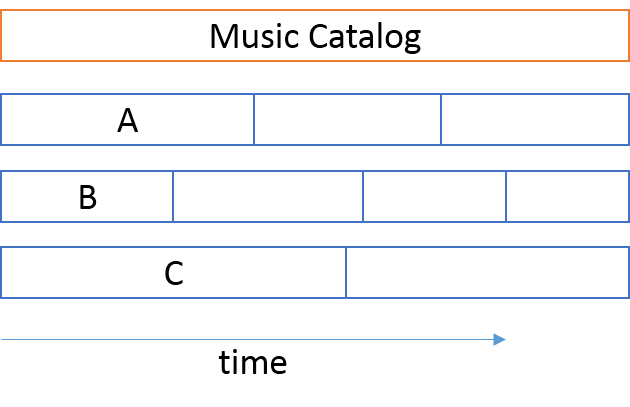

However, the service was made to support other systems, so this change must be coordinated with all the known clients. It turns out that three systems depend on the music catalog service.

Each of these systems is being worked on by a separate team, each with individual deadlines. These teams are not planning to deploy new versions of their applications all on the same day. Their immediate schedules are already set, and they aren't aligned.

The Architecture team must negotiate future iterations with teams A, B, and C to line them up so that the music catalog team can release their new version on the same day.

This alignment may be months into the future - perhaps a significant fraction of a year. The music catalog team can't sit still while they wait for this date to arrive, so they work on other new features, most likely introducing other breaking changes along the way.

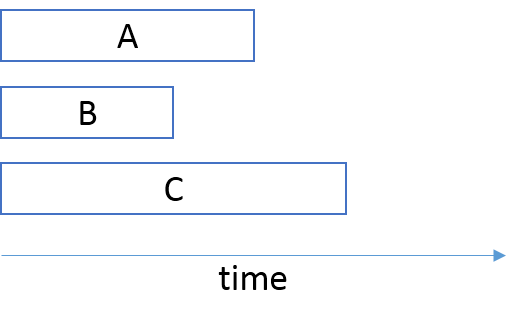

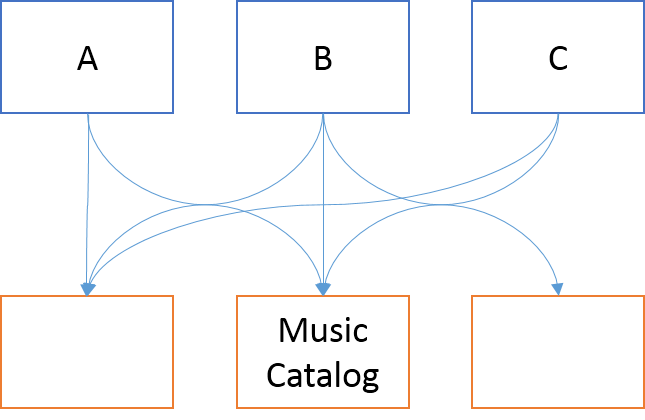

Meanwhile, team A goes through three iterations. In the first two iterations, they know that they are going to deploy into a production environment with version 1 of the music catalog, so they are going to need a testing environment that looks like that. For the third iteration, they know that they are going to deploy into a production environment with version 2 of the music catalog, so they are going to have to change their testing environment to reflect that fact.

Team B goes through four iterations that don't align with those of Team A. When Team A updates their testing environment to music catalog version 2, Team B is still working on an iteration where they must test against version 1. Thus, they must have their own testing environment. They can't share the testing environment with Team A.

The same is true for Team C: it needs its own private testing environment. Notice that this is a testing environment that not only involves configuration and maintenance of the team's own application, but also of its dependency, the music catalog service. In order to provide realistic data for performance testing, each testing environment must also be maintained with representative sample data, and may have to run on production-like hardware, in production-like network topologies. Add software licences to the mix, and you may start to realize that such a testing environment is expensive.

So far, the analysis has been unrealistically simplified. It turns out that the A, B, and C applications have other dependencies besides the music catalog service.

Each of these dependencies is also moving, producing new features. Some of those new features also involve breaking changes. Once again, the Enterprise Architecture team must negotiate a coordinated deployment date to make sure nothing breaks. However, the organization has more applications than A, B, and C. Application D, for instance, doesn't depend on the music catalog service, but it shares a dependency with application A. Thus, Team D must take part in the coordination effort, making sure that they deploy their new version on the same day as everyone else. Meanwhile, they'll need yet another one of those expensive testing environments.

I'm not making this up. I've had clients (financial institutions) that had hundreds of testing environments and big, coordinated deployments. Their applications didn't have two or three dependencies, but dozens of dependencies each - often on mainframe-based systems (very expensive).

The rot of Big Bang Releases #

All this leads to Big Bang Releases. Once or twice each year, all Zoo Software is simultaneously deployed according to a meticulously laid plan. This date becomes a non-negotiable deadline. No team can miss the deadline, because dozens of other teams directly or indirectly depend on each other.

What happens when a Big Bang deadline grows nearer? What if you aren't ready to release? What if you just discovered a catastrophic bug?

This is what happens:

- Teams work nights and weekends to meet deadlines. Members burn out, or get divorced, or decide to quit. More work is left for remaining team members. A vicious circle indeed.

- Software is deployed with known bugs. Often, it's simply not possible to address all bugs in time, because they are discovered too late.

- The team enters survival mode, building up technical debt. Unit tests are ignored. Dirty hacks are made. Code rots. In the end, the team deploys a version of the software where they don't really know what works and what doesn't work. This makes it harder to work on the next version, because the lack of test coverage means that they don't even know if they'll be introducing breaking changes. In the absence of knowledge it's better to assume the worst. Another vicious cycle is created.

Remember: each team must deploy at the Big Bang Release, no matter how bad the quality is. It's not even possible to elect to skip a release altogether, because the other teams have built their new versions with the assumption that your application's breaking changes will be deployed. In a Big Bang Release, there are only two options: Deploy everything, or deploy nothing. Deciding to deploy nothing may threaten the entire company's existence, so that decision is rarely made.

Do you think I'm exaggerating? I have seen this happen more than once. In fact, I also strongly suspect that this sort of situation recently unfolded itself within a big international ISV - at least, the symptoms are all here, for all to see :)

All this because Zookeepers don't deal with temporal compatibility.

Call to action #

Zookeepers must stop being Zookeepers and become Rangers. There's no excuse to not deal explicitly with versioning. You can't just introduce a breaking change in your code. The cost is often much larger than you think. The repercussions can be immense. Sometimes there's no way around breaking changes, but deal with them in tried-and-true ways. Introduce your new API side-by-side with the old API and deprecate the old API. Give clients a chance to move to the new API at their own pace. Monitor usage of your API to figure out when it's safe to completely remove the deprecated API. There are many well-described ways to deal with versioning and temporal compatibility. This is not an article about how to evolve an API, but rather a call to action: explicitly address versioning, or face the consequences!
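As one illustration of the side-by-side approach, here is a minimal sketch that uses the standard `[Obsolete]` attribute to deprecate an old member while a new one is introduced next to it. The `MusicCatalog` and `Track` classes and their members are hypothetical, loosely modelled on the music catalog example; a real service would version its wire format rather than an in-process class.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-process model of a catalog track.
public class Track
{
    public Track(string name, IEnumerable<string> artists)
    {
        this.Name = name;
        this.Artists = new List<string>(artists);
    }

    public string Name { get; private set; }

    public IList<string> Artists { get; private set; }
}

public class MusicCatalog
{
    private readonly List<Track> tracks = new List<Track>();

    // Old API: it keeps working, but callers get a compiler warning,
    // so they can migrate at their own pace.
    [Obsolete("Use AddTrack(string, IEnumerable<string>) instead.")]
    public void AddTrack(string name, string artist)
    {
        this.AddTrack(name, new[] { artist });
    }

    // New API, introduced side-by-side with the old one.
    public void AddTrack(string name, IEnumerable<string> artists)
    {
        this.tracks.Add(new Track(name, artists));
    }

    public IEnumerable<Track> Tracks
    {
        get { return this.tracks; }
    }
}
```

Existing callers of the single-artist overload continue to compile and run unchanged. Once usage monitoring shows that nobody calls it any longer, the deprecated member can be removed.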

Looser coupling can help. What I've described here is simply a form of tight temporal coupling. Still, even with loosely coupled, asynchronous, messaging-based architectures, messages must still be explicitly versioned to avoid problems.
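What explicit versioning of a message could look like is sketched below, using the two track formats from the example. The `version` attribute, and the rule that a missing attribute means version 1, are assumptions made for this sketch; they are not part of the track documents shown earlier. `TrackReader` is likewise a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

public static class TrackReader
{
    // Returns the artists of a track document, dispatching on an
    // explicit (hypothetical) version attribute on the root element.
    public static IList<string> ReadArtists(string xml)
    {
        var track = XElement.Parse(xml);

        // Assumption: documents without a version attribute are version 1.
        var versionAttribute = track.Attribute("version");
        var version = versionAttribute == null ? "1" : versionAttribute.Value;

        switch (version)
        {
            case "1":
                // Version 1: a single <artist> directly under <track>.
                return track.Elements("artist")
                    .Select(a => a.Value)
                    .ToList();
            case "2":
                // Version 2: <artist> elements nested in <artists>.
                var artists = track.Element("artists");
                if (artists == null)
                    return new List<string>();
                return artists.Elements("artist")
                    .Select(a => a.Value)
                    .ToList();
            default:
                throw new NotSupportedException(
                    "Unknown track version: " + version);
        }
    }
}
```

A client written this way can be deployed before the service starts sending version 2 documents, which removes the need to release both on the same day.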

Once an organization has moved entirely to a loosely coupled, explicitly versioned architecture where each team can use Continuous Delivery, they may find that they don't need that Enterprise Architecture team at all :)

Comments

Thanks for sharing,

Jason

In such a case, one had to change an acceptance test if a service could not deal with legacy clients. A developer who does this, or a manager who commands this, acts unprofessionally.

A team that calls itself agile and does not use the tools and techniques necessary for agile development, is just a bunch of cowboy coders, but neither "Zookeepers" nor "Rangers".

The point of the article (IMO) is that developers need to learn a new tool that you haven't listed - namely good internal version handling (and to start thinking of internal collaborators in the same way as external).

A tricky thing for an organisation is to decide when to make the move from (easy-to-write) temporally coupled code to discrete services (with increased versioning and infrastructure overheads). Most places with this problem have started off small, grow quickly by bolting bits onto the existing architecture, and by the time someone switched on enough to start changing the organisation takes charge, it is too late to make the switch easily. TBH most places I've worked where this is the root cause of the problems, the Enterprise Architect team can't see the wood for the trees.

However, the other Markus is spot on. The message of this article isn't that you should use TDD, Continuous Integration, etc. Lots of people have already said that before me. The message is that even if you are an 'internal' developer, you must think explicitly about versioning and backwards compatibility.

Rangers and Zookeepers

This article discusses software that runs in the wild, versus software running in potentially controllable environments.

There are many perspectives on software development. One particular perspective that has always interested me is the distinction between software running 'in the wild' versus software running in potentially controllable environments (such as corporate networks, including DMZs). Whether it's one or the other has a substantial impact on how you write, release and maintain software.

Historical perspective #

Software 'in the wild' is simply software where you don't know, or can't control, the install base. In the good old days (a few years ago) that typically meant software produced by ISVs such as Microsoft, Oracle, SAS, SAP, Autodesk, Adobe etc. The software produced by such organizations was/is often purchased by license and deployed by enterprise operations organizations. Even for ISVs targeting end-users directly (such as personal tax software, single player games, etc.), the software was/is typically installed by the individual user, and the ISV had/has zero control of the deployment environment or schedule.

The opposite of ISV Software has until recently been Enterprise Software. The problem with this term is that it has become almost derogatory, but I don't think that's entirely fair, because the forces working on this kind of software are very different from those working on ISV software. In this category I count specialized software made for specialized, internal purposes. It can be developed by in-house developers or a hired team, but the main characteristic is that the software is deployed in a potentially controllable environment (I originally wrote 'controlled environment,' but one reviewer interpreted this to indicate only software explicitly managed by process and tools such as Chef or Puppet). Even if there are several deployment environments such as testing, staging and production, and even if we are talking about Client/Server architectures with desktop clients deployed to enterprise desktops, an operations team should be able to keep track of all installations and schedule updates (if any). Often the original developers can work with operations to troubleshoot problems directly on the installed machine (or at least get logs).

Note that I'm not counting software such as SAP, Microsoft Dynamics or Oracle as Enterprise Software, despite the fact that such software is used by enterprises. Enterprise software is software developed by the enterprise itself, for its own purposes.

Enterprise Software can be a small system that sits in a corner of a corporate network, used by two employees every last weekday of the month. It can also be a massively scalable system built for a special occasion, such as the official site for a big sports tournament like the FIFA World Cup or the Summer Olympics.

Current perspective #

The historical distinction of ISV versus Enterprise Development makes less and less sense today. First of all, with the shift of emphasis towards SaaS, traditional ISVs are moving into an area that looks a lot like Enterprise Development. With SaaS, vendors suddenly find themselves in a situation where they control the entire installation base (at least on the service side). Some of them are now figuring out that this enables them to iterate faster than before. Apparently even such an Enterprisey-sounding service as the Team Foundation Service is now deploying new features several times a year. Expect that cadence to increase.

On the other hand, the rising popularity of Open Source Software (OSS) suddenly puts a lot of OSS developers in the old position of ISV developers. OSS tends to run in the wild, and the developers have no control of the installation base or upgrade schedules.

Oh, and do I even have to say 'Apps'?

Thus, we need a better terminology. Developing, supporting and managing software in 'the wild' sounds a lot like the duties of a Ranger: you can put overall plans into motion to nudge the environment in a certain direction, and sometimes you encounter a particular specimen with which you can interact, but essentially you'll have to understand complex environmental dynamics and plan for the long term.

If traditional ISV developers, as well as OSS programmers, are Rangers, then Enterprise and SaaS developers must be Zookeepers. They deal with a controlled environment, can keep an accurate tally, and execute detailed project plans at pre-planned schedules.

OK, I admit that it sounds cooler to be a Ranger than a Zookeeper, but the metaphor makes sense to me :)

As a corollary, we can talk about Wildlife Software versus Zoo Software.

Forces #

The forces working on Wildlife Software versus Zoo Software are very different:

| | Advantages | Disadvantages |
|---|---|---|
| Wildlife Software | Since you can't control the installation base, you have to make the software robust, well-tested, secure, easy (enough) to install, and documented. It should be well-instrumented and possible to troubleshoot without being the original developer. Once you release a version into the wild, it must be able to stand on its own, or it will die. This is a rather Darwinian environment, but the advantage is that the software that survives tends to have some attributes we often associate with high 'quality'. | Traditionally, producing software with all these 'quality' attributes has been an expensive and slow endeavor. It also leads to conservatism, because every change must be carefully considered. Once released into the wild, a feature or behavior of a piece of software can't be changed (in that version). To wit: Microsoft has traditionally shipped new versions of Windows, Office, Visual Studio, the BCL etc. years apart. The BCL is peppered with sealed or internal classes, much to the chagrin of programmers everywhere. |
| Zoo Software | The Product Owners of Zoo Software will expect their programmers to be able to iterate much faster, since the installation base is much smaller and well-known. There are fewer environment permutations to consider - e.g. you may know up front that the software should only have to be able to run on Windows Server 2008 R2 with a SQL Server 2008 R2 database. The entire deployment environment is also well-known, so there are many assumptions you can trust to hold. This indicates that you should be able to produce software in an 'agile' manner. Because the Zoo is a much less dangerous place, the software can be less robust (at least along some axes), which again indicates that it can be produced by a smaller team than corresponding Wildlife Software. This again helps keeping down cost. | There are certain quality shortcuts that can be safely made with Zoo Software - e.g. never testing the software on Windows XP if you know it's never going to run on that OS. However, once a team under deadline pressure starts to make warranted shortcuts, it may begin making unwarranted shortcuts as well. Thus, we often experience Zoo Software that is poorly tested, is extremely difficult to deploy, is poorly documented and hard to operate. This, I believe, is why Enterprise Development today has such a negative ring to it. |

Now that former ISVs are moving into Zoo Software via SaaS, it's going to be interesting to see what happens in this space.

Conclusion #

Don't jump to conclusions about the advantages of either approach. This article is meant to be descriptive, first and foremost. This means that I'm describing the characteristics of Wildlife and Zoo Software as I most commonly encounter those types of software. I'm fully aware of initiatives such as DevOps, so I'm not saying that software has to be like I describe it - I'm just describing what I'm currently observing.

Comments

It's an interesting dynamic: while ISVs are moving into creating what can be thought of as Zoo Software due to the SaaS environment, the client in a SaaS environment is most often browser-based. That means they remain in the Ranger role on the client side, since the myriad of different browsers and browser versions makes the new client world as wild as, if not wilder than, a traditional desktop application environment.

Encapsulation of properties

This post explains why Information Hiding isn't entirely the same thing as Encapsulation.

Despite being an old concept, Encapsulation is one of the most misunderstood principles of object-oriented programming. Particularly for C# and Visual Basic.NET developers, this concept is confusing because those languages have properties. Other languages, including F#, have properties too, but far be it from me to suggest that F# programmers are confused :)

(As an aside, even in languages without formal properties, such as Java, you often see property-like constructs. In Java, for example, a pair of a getter and a setter method is sometimes informally referred to as a property. In the end, that's also how .NET properties are implemented. Thus, the present article can also be applied to Java and other 'property-less' languages.)

In my experience, the two most important aspects of encapsulation are Protection of Invariants and Information Hiding. Earlier, I have had much to say about Protection of Invariants, including the invariants of properties. In this post, I will instead focus on Information Hiding.

With all that confusion (which I will get back to), you would think that Information Hiding is really hard to grasp. It's not - it's really simple, but I think that the name erects an effective psychological barrier to understanding. If instead we were to call it Implementation Hiding, I think most people would immediately grasp what it's all about.

However, since Information Hiding has this misleading name, it becomes really difficult to understand what it means. Does it mean that all information in an object should be hidden from clients? How can we reconcile such a viewpoint with the fundamental concept that object-orientation is about data and behavior? Some people take the misunderstanding so far that they begin to evangelize against properties as a design principle. Granted, too heavy a reliance on properties leads to violations of the Law of Demeter as well as Feature Envy, but without properties, how can a client ever know the state of a system?

Direct field access isn't the solution, as this discloses data to an even larger degree than properties. Still, in the absence of better guidance, the question of Encapsulation often degenerates to the choice between fields and properties. Perhaps the most widely known and accepted .NET design guideline is that data should be exposed via properties. This again leads to the redundant 'Automatic Property' language feature.

Tell me again: how is this

public string Name { get; set; }

better than this?

public string Name;

The Design Guidelines for Developing Class Libraries isn't wrong. It's just important to understand why properties are preferred over fields, and it has only partially to do with Encapsulation. The real purpose of this guideline is to enable versioning of types. In other words: it's about backwards compatibility.
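As a small-scale illustration of that versioning argument, consider the following sketch of two releases of the same class. The `Track` class, its `Length` property and the `Version1` and `Version2` namespaces are hypothetical; the namespaces exist only so that both releases can be shown in one listing.

```csharp
using System;

namespace Version1
{
    public class Track
    {
        // First release: an automatic property holding seconds.
        public int Length { get; set; }
    }
}

namespace Version2
{
    public class Track
    {
        // Later release: the internal representation has changed...
        private TimeSpan duration;

        // ...but the property keeps its name, type and accessors, so
        // clients compiled against the first release keep working.
        public int Length
        {
            get { return (int)this.duration.TotalSeconds; }
            set { this.duration = TimeSpan.FromSeconds(value); }
        }
    }
}
```

Had `Length` been a public field in the first release, turning it into a property later would have been a breaking change for already compiled clients, because field access and property access compile to different instructions.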

An example demonstrating how properties enable code to evolve while maintaining backwards compatibility is in order. This example also demonstrates Implementation Hiding.

Example: a tennis game #

The Tennis kata is one of my favorite TDD katas. Previously, I posted my own, very specific take on it, but I've also, when teaching, asked groups to do it as an exercise. Often participants arrive at a solution not too far removed from this example:

    public class Game
    {
        private int player1Score;
        private int player2Score;

        public void PointTo(Player player)
        {
            if (player == Player.One)
                if (this.player1Score >= 30)
                    this.player1Score += 10;
                else
                    this.player1Score += 15;
            else
                if (this.player2Score >= 30)
                    this.player2Score += 10;
                else
                    this.player2Score += 15;
        }

        public string Score
        {
            get
            {
                if (this.player1Score == this.player2Score &&
                    this.player1Score >= 40)
                    return "Deuce";
                if (this.player1Score > 40 &&
                    this.player1Score == this.player2Score + 10)
                    return "AdvantagePlayerOne";
                if (this.player2Score > 40 &&
                    this.player2Score == this.player1Score + 10)
                    return "AdvantagePlayerTwo";
                if (this.player1Score > 40 &&
                    this.player1Score >= this.player2Score + 20)
                    return "GamePlayerOne";
                if (this.player2Score > 40)
                    return "GamePlayerTwo";

                var score1Word = ToWord(this.player1Score);
                var score2Word = ToWord(this.player2Score);
                if (score1Word == score2Word)
                    return score1Word + "All";
                return score1Word + score2Word;
            }
        }

        private string ToWord(int score)
        {
            switch (score)
            {
                case 0:
                    return "Love";
                case 15:
                    return "Fifteen";
                case 30:
                    return "Thirty";
                case 40:
                    return "Forty";
                default:
                    throw new ArgumentException(
                        "Unexpected score value.",
                        "score");
            }
        }
    }

Granted: there's a lot more going on here than just a single property, but I wanted to provide an example of enough complexity to demonstrate why Information Hiding is an important design principle. First of all, this Game class tips its hat to that other principle of Encapsulation by protecting its invariants. Notice that while the Score property is public, it's read-only. It wouldn't make much sense if the Game class allowed an external caller to assign a value to the property.

When it comes to Information Hiding the Game class hides the implementation details by exposing a single Score property, but internally storing the state as two integers. It doesn't hide the state of the game, which is readily available via the Score property.

The benefit of hiding the data and exposing the state as a property is that it enables you to vary the implementation independently from the public API. As a simple example, you may realize that you don't really need to keep the score in terms of 0, 15, 30, 40, etc. Actually, the scoring rules of a tennis game are very simple: in order to win, a player must win at least four points with at least two more points than the opponent. Once you realize this, you may decide to change the implementation to this:

    public class Game
    {
        private int player1Score;
        private int player2Score;

        public void PointTo(Player player)
        {
            if (player == Player.One)
                this.player1Score += 1;
            else
                this.player2Score += 1;
        }

        public string Score
        {
            get
            {
                if (this.player1Score == this.player2Score &&
                    this.player1Score >= 3)
                    return "Deuce";
                if (this.player1Score > 3 &&
                    this.player1Score == this.player2Score + 1)
                    return "AdvantagePlayerOne";
                if (this.player2Score > 3 &&
                    this.player2Score == this.player1Score + 1)
                    return "AdvantagePlayerTwo";
                if (this.player1Score > 3 &&
                    this.player1Score >= this.player2Score + 2)
                    return "GamePlayerOne";
                if (this.player2Score > 3)
                    return "GamePlayerTwo";

                var score1Word = ToWord(this.player1Score);
                var score2Word = ToWord(this.player2Score);
                if (score1Word == score2Word)
                    return score1Word + "All";
                return score1Word + score2Word;
            }
        }

        private string ToWord(int score)
        {
            switch (score)
            {
                case 0:
                    return "Love";
                case 1:
                    return "Fifteen";
                case 2:
                    return "Thirty";
                case 3:
                    return "Forty";
                default:
                    throw new ArgumentException(
                        "Unexpected score value.",
                        "score");
            }
        }
    }

This is a true Refactoring because it modifies (actually simplifies) the internal implementation without changing the external API one bit. Good thing those integer fields were never publicly exposed.

The tennis game scoring system is actually a finite state machine and from Design Patterns we know that this can be effectively implemented using the State pattern. Thus, a further refactoring could be to implement the Game class with a set of private or internal state classes. However, I will leave this as an exercise to the reader :)

The progress towards the final score often seems to be an important aspect in sports reporting, so another possible future development of the Game class might be to enable it to not only report on its current state (as the Score property does), but also report on how it arrived at that state. This might prompt you to store the sequence of points as they were scored, and calculate past and current state based on the history of points. This would cause you to change the internal storage from two integers to a sequence of points scored.
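A minimal sketch of what such a history-based implementation might look like follows. It keeps `PointTo` and `Score` exactly as they appear in the public API above; the `History` property is a hypothetical addition, and the `Player` enum is assumed to have the two members `One` and `Two`, since its definition isn't shown in this article.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Assumed shape of the Player type used by the Game class.
public enum Player { One, Two }

public class Game
{
    // Internal storage is now the sequence of points as they were scored.
    private readonly List<Player> points = new List<Player>();

    public void PointTo(Player player)
    {
        this.points.Add(player);
    }

    // Same public property as before: reports the current state.
    public string Score
    {
        get { return ScoreAfter(this.points); }
    }

    // Hypothetical addition: the score after each point, in order.
    public IEnumerable<string> History
    {
        get
        {
            return Enumerable.Range(1, this.points.Count)
                .Select(n => ScoreAfter(this.points.Take(n)))
                .ToList();
        }
    }

    private static string ScoreAfter(IEnumerable<Player> pointsSoFar)
    {
        var played = pointsSoFar.ToList();
        var p1 = played.Count(p => p == Player.One);
        var p2 = played.Count - p1;

        if (p1 == p2 && p1 >= 3) return "Deuce";
        if (p1 > 3 && p1 == p2 + 1) return "AdvantagePlayerOne";
        if (p2 > 3 && p2 == p1 + 1) return "AdvantagePlayerTwo";
        if (p1 > 3 && p1 >= p2 + 2) return "GamePlayerOne";
        if (p2 > 3) return "GamePlayerTwo";

        var word1 = ToWord(p1);
        var word2 = ToWord(p2);
        return word1 == word2 ? word1 + "All" : word1 + word2;
    }

    private static string ToWord(int score)
    {
        switch (score)
        {
            case 0: return "Love";
            case 1: return "Fifteen";
            case 2: return "Thirty";
            case 3: return "Forty";
            default:
                throw new ArgumentException(
                    "Unexpected score value.", "score");
        }
    }
}
```

Clients that only call `PointTo` and read `Score` can't tell this version from the two previous ones, while new clients can opt in to `History`.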

In both the above refactoring cases, you would be able to make the desired changes to the Game class because the implementation details are hidden.

Conclusion #

Information Hiding isn't Data Hiding. Implementation Hiding would be a much better word. Properties expose data about the object while hiding the implementation details. This enables you to vary the implementation and the public API independently. Thus, the important benefit from Information Hiding is that it enables you to evolve your API in a backwards compatible fashion. It doesn't mean that you shouldn't expose any data at all, but since properties are just as much members of a class' public API as its methods, you must exercise the same care when designing properties as when designing methods.

Update (2012.12.02): A reader correctly pointed out to me that there was a bug in the Game class. This bug has now been corrected.

Comments

http://www.itmweb.com/essay550.htm

In his conclusion he makes the following uncommon distinction: "Abstraction, information hiding, and encapsulation are very different, but highly-related, concepts. One could argue that abstraction is a technique that helps us identify which specific information should be visible, and which information should be hidden. Encapsulation is then the technique for packaging the information in such a way as to hide what should be hidden, and make visible what is intended to be visible."

Within a theoretical discussion, I agree with his conclusion that "a stronger argument can be made for keeping the concepts, and thus the terms, distinct". A key thing to keep in mind here is that, according to this definition, encapsulating something doesn't necessarily mean it is hidden.

When you refer to "encapsulation" you seem to refer to e.g. Rumbaugh's definition: "Encapsulation (also information hiding) consists of separating the external aspects of an object which are accessible to other objects, from the internal implementation details of the object, which are hidden from other objects." Ironically, Rumbaugh doesn't seem to make a distinction between information hiding and encapsulation, which highlights the problem even more. His definition also corresponds to the first listed definition in the Wikipedia article on encapsulation you link to, but not the second.

1. A language mechanism for restricting access to some of the object's components.

2. A language construct that facilitates the bundling of data with the methods (or other functions) operating on that data.

When hiding information we almost always (always?) encapsulate something, which is probably why many see them as the same thing.

In a practical setting, where we are just quickly trying to convey ideas, things get even more problematic. When I refer to "encapsulation" I usually refer to information hiding, because that is generally my intention when encapsulating something. Interpreting it the other way around, where encapsulation is seen solely as an OOP principle in which state is placed within the context of a class with restricted access, is more problematic when no distinction is made with information hiding. The many other ways in which information can be hidden are neglected.

Keeping in mind this warning about the ambiguous definitions of encapsulation and information hiding, I wonder how you feel about my blog posts where I discuss information hiding beyond the scope of the class. To me this is a useful thing to do, for all the same reasons that information hiding in general is a good thing to do.

In "Improved encapsulation using lambdas" I discuss how a piece of reusable code can be encapsulated within the scope of one function by using lambdas.

http://whathecode.wordpress.com/2011/06/05/improved-encapsulation-using-lambdas/

In "Beyond private accessibility" I discuss how variables used only within one function could potentially be hidden from other functions in a class.

http://whathecode.wordpress.com/2011/06/13/beyond-private-accessibility/

I'm glad I subscribed to this blog, you seem to talk about a lot of topics I'm truly interested in. :)

The main purpose of this post is to drive a big stake through the notion that properties = Encapsulation.

The lambda trick you describe is an additional way to scope a method - another is to realize that interfaces can also be used for scoping.

While I agree 100% with the intent of that statement, I just wanted to warn you that according to some definitions, a property encapsulates a backing field, a getter, and a setter. Whether or not that getter and setter hide anything or are protected, is assumed by some to be an entirely different concept, namely information hiding.

However, using the 'mainstream' OOP definition of encapsulation, you are absolutely right that a property with a public getter and setter (without any implementation logic inside) doesn't encapsulate anything, as no access restrictions are imposed.

And as per my cause, neither does moving something to the scope of the class with access restrictions provide the best possible information hiding in OOP. You are only hiding complexity from fellow programmers who will consume the class, but not from those who have to maintain it (or extend it, in the case of protected members).

You are probably right that his example can be further encapsulated, but if you are taking the effort of encapsulating something I would at least try to make it reusable instead of making a specific 'PlayerScore' class.

A score is basically a range of values, a construct that is missing from C# and Java but that Ruby, for example, has. From experience I can tell you that implementing it yourself is truly useful, as you start seeing opportunities to use it everywhere: https://github.com/Whathecode/Framework-Class-Library-Extension/blob/master/Whathecode.System/Arithmetic/Range/Interval.cs

Probably I would create a generic or abstract 'Score' class which is composed of a range and possibly some additional logic (Reset(), events, ...)

As to moving the logic of the game (the score comparison) to the 'PlayerScore' class, I don't think I entirely agree. This is the concern of the 'Game' class and not the 'Score'. When separating these concerns one could use the 'Score' class in different types of games as well.

However, I feel that it's just not a very good fit in C# compared to functional languages like F# or Clojure, where functions are truly first class and defining a function inside another function is fully supported with the same syntax as regular functions. What I would really like is for C# to simply let you define functions inside functions with the usual syntax.

Regarding performance, I don't know how C# does it, but I imagine it's similar to F#, where the inner function performance hit is negligible: http://stackoverflow.com/questions/7920234/what-are-the-performance-side-effects-of-defining-functions-inside-a-recursive-f

Maybe it's better to encapsulate the private func as a public func in a nested private (static?) class and then have the inner function as a private function of that class.
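To make the options in this thread a bit more concrete, here is a small sketch. The ReportFormatter class and its methods are made up for illustration and are not taken from the linked posts. The first method scopes a helper to a single method with a lambda; the second does the same with a local function, which C# has supported since version 7.0 and which uses nearly the same syntax as a regular method.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical example class; the names are invented for this sketch.
public static class ReportFormatter
{
    public static string FormatWithLambda(IEnumerable<int> scores)
    {
        // The helper is a lambda, visible only inside this method.
        Func<int, string> pad = n => n.ToString().PadLeft(3, '0');
        return string.Join(",", scores.Select(pad));
    }

    public static string FormatWithLocalFunction(IEnumerable<int> scores)
    {
        // A local function (C# 7.0 and later), also visible only here.
        string Pad(int n)
        {
            return n.ToString().PadLeft(3, '0');
        }

        return string.Join(",", scores.Select(n => Pad(n)));
    }
}
```

In both cases no other member of the class can call the helper, which is the kind of hiding beyond private accessibility discussed above.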

Comments

I've used the MVP pattern in Unity3D game development, where I had, for example, a MonsterView and a MonsterPresenter autowired by assembly scanning. As a result, each View takes an IPresenter as input, and the IoC container discovers and injects the correct Presenter implementation into the View. I also wrote an additional test that asserts that every view has a corresponding presenter, so that I discover convention violations when the tests run rather than at run-time. It just reduces feedback time a little bit.

This idea came after watching your "Conventions: Make your code consistent" presentation. Thanks.
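A convention test along those lines could be sketched as follows. This is only an illustration under stated assumptions: the marker interface IPresenter, the sample types, and the ViewPresenterConvention helper are all hypothetical names, and the convention assumed is that a class named XView must have a concrete class named XPresenter implementing IPresenter.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical types standing in for the real views and presenters.
public interface IPresenter { }
public class MonsterView { }
public class MonsterPresenter : IPresenter { }
public class OrphanView { }

// Hypothetical helper that checks the naming convention.
public static class ViewPresenterConvention
{
    public static IList<Type> FindViewsWithoutPresenter(IEnumerable<Type> types)
    {
        var all = types.ToList();

        // Names of all concrete classes that implement IPresenter.
        var presenterNames = new HashSet<string>(
            all.Where(t => t.IsClass && !t.IsAbstract)
               .Where(t => typeof(IPresenter).IsAssignableFrom(t))
               .Select(t => t.Name));

        // A view named "XView" is expected to have a presenter named "XPresenter".
        return all
            .Where(t => t.IsClass)
            .Where(t => t.Name.EndsWith("View", StringComparison.Ordinal))
            .Where(t => !presenterNames.Contains(
                t.Name.Substring(0, t.Name.Length - "View".Length) + "Presenter"))
            .ToList();
    }
}
```

A unit test could pass in typeof(MonsterView).Assembly.GetTypes() and assert that the returned list is empty; with the sample types above, OrphanView would be reported as violating the convention.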