ploeh blog danish software design

Hyperlinking With the ASP.NET Web API

When creating resources with the ASP.NET Web API (beta) it's important to be able to create correct hyperlinks (you know, if it doesn't have hyperlinks, it's not REST). These hyperlinks may link to other resources in the same API, so it's important to keep the links consistent. A client following such a link should hit the desired resource.

This post describes a refactoring-safe approach to creating hyperlinks using the Web API RouteCollection and Expressions.

The Problem #

Obviously hyperlinks can be hard-coded, but since incoming requests are matched based on the Web API's RouteCollection, there's a risk that hard-coded links become disconnected from the API's incoming routes. In other words, hard-coding links is probably not a good idea.

For reference, the default route in the Web API looks like this:

routes.MapHttpRoute(
    name: "DefaultApi",
    routeTemplate: "{controller}/{id}",
    defaults: new
    {
        controller = "Catalog",
        id = RouteParameter.Optional
    }
);

A sample action fitting that route might look like this:

public Artist Get(string id)

where the Get method is defined by the ArtistController class.

Desired Outcome #

In order to provide a refactoring-safe way to create links to e.g. the artist resource, the strongly typed Resource Linker approach outlined by José F. Romaniello can be adopted. The IResourceLinker interface looks like this:

public interface IResourceLinker
{
    Uri GetUri<T>(Expression<Action<T>> method);
}

This makes it possible to create links like this:

var artist = new Artist
{
    Name = artistName,
    Links = new[]
    {
        new Link
        {
            Href = this.resourceLinker.GetUri<ArtistController>(r =>
                r.Get(artistsId)).ToString(),
            Rel = "self"
        }
    },
    // More crap goes here...
};

In this example, the resourceLinker field is an injected instance of IResourceLinker.

Since the input to the GetUri method is an Expression, it's being checked at compile time. It's refactoring-safe because a refactoring tool will be able to e.g. change the name of the method call in the Expression if the name of the method changes.

Example Implementation #

It's possible to implement IResourceLinker over a Web API RouteCollection. Here's an example implementation:

public class RouteLinker : IResourceLinker
{
    private Uri baseUri;
    private readonly HttpControllerContext ctx;

    public RouteLinker(Uri baseUri, HttpControllerContext ctx)
    {
        this.baseUri = baseUri;
        this.ctx = ctx;
    }

    public Uri GetUri<T>(Expression<Action<T>> method)
    {
        if (method == null)
            throw new ArgumentNullException("method");

        var methodCallExp = method.Body as MethodCallExpression;
        if (methodCallExp == null)
        {
            throw new ArgumentException("The expression's body must be a MethodCallExpression. The code block supplied should invoke a method.\nExample: x => x.Foo().", "method");
        }

        var routeValues = methodCallExp.Method.GetParameters()
            .ToDictionary(p => p.Name, p => GetValue(methodCallExp, p));

        var controllerName = methodCallExp.Method.ReflectedType.Name
            .ToLowerInvariant().Replace("controller", "");

        routeValues.Add("controller", controllerName);

        var relativeUri = this.ctx.Url.Route("DefaultApi", routeValues);
        return new Uri(this.baseUri, relativeUri);
    }

    private static object GetValue(MethodCallExpression methodCallExp, ParameterInfo p)
    {
        var arg = methodCallExp.Arguments[p.Position];
        var lambda = Expression.Lambda(arg);
        return lambda.Compile().DynamicInvoke().ToString();
    }
}

This isn't much different from José F. Romaniello's example, apart from the fact that it creates a dictionary of route values and then uses the UrlHelper.Route method to create a relative URI.

Please note that this is just an example implementation. For instance, the call to the Route method supplies the hard-coded string "DefaultApi" to indicate which route (from Global.asax) to use. I'll leave it as an exercise for the interested reader to provide a generalization of this implementation.
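To see the expression-walking part in isolation, here is a minimal sketch that uses only the Base Class Library, so it runs without the Web API assemblies. The names RouteValueExtractor and Extract, and the stub ArtistController, are hypothetical and exist only for this illustration. Unlike the implementation above, the sketch trims a trailing "Controller" suffix from the type name, keeps the raw argument values rather than calling ToString on them, and stops before the call to UrlHelper.Route.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

// Hypothetical stand-in for a Web API controller; only the method shape matters.
public class ArtistController
{
    public string Get(string id) { return "artist " + id; }
}

// Hypothetical helper: turns a method-call expression into route values.
public static class RouteValueExtractor
{
    public static IDictionary<string, object> Extract<T>(Expression<Action<T>> method)
    {
        if (method == null)
            throw new ArgumentNullException("method");

        var call = method.Body as MethodCallExpression;
        if (call == null)
            throw new ArgumentException("The expression's body must be a method call.", "method");

        // Pair each parameter name with the evaluated argument expression.
        // The controller method itself is never invoked.
        var values = call.Method.GetParameters().ToDictionary(
            p => p.Name,
            p => Expression.Lambda(call.Arguments[p.Position]).Compile().DynamicInvoke());

        // Assumption for this sketch: ArtistController maps to the route value "artist".
        const string suffix = "Controller";
        var typeName = typeof(T).Name;
        var controllerName = typeName.EndsWith(suffix, StringComparison.Ordinal)
            ? typeName.Substring(0, typeName.Length - suffix.Length)
            : typeName;
        values.Add("controller", controllerName.ToLowerInvariant());

        return values;
    }
}

public static class Program
{
    public static void Main()
    {
        var artistId = "42";
        var values = RouteValueExtractor.Extract<ArtistController>(r => r.Get(artistId));
        foreach (var pair in values)
            Console.WriteLine(pair.Key + " = " + pair.Value);
    }
}
```

Because the lambda refers to Get by symbol rather than by string, renaming the method with a refactoring tool updates the expression as well.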

IQueryable is Tight Coupling

From time to time I encounter people who attempt to express an API in terms of IQueryable<T>. That's almost always a bad idea. In this post, I'll explain why.

In short, the IQueryable<T> interface is one of the best examples of a Header Interface that .NET has to offer. It's almost impossible to fully implement it.

Please note that this post is about the problematic aspects of designing an API around the IQueryable<T> interface. It's not an attack on the interface itself, which has its place in the BCL. It's also not an attack on all the wonderful LINQ methods available on IEnumerable<T>.

You can say that IQueryable<T> is one big Liskov Substitution Principle (LSP) violation just waiting to happen. In the next two sections, I will apply Postel's law to explain why that is.

Consuming IQueryable<T> #

The first part of Postel's law applied to API design states that an API should be liberal in what it accepts. In other words, we are talking about input, so an API that consumes IQueryable<T> would take this generalized shape:

IFoo SomeMethod(IQueryable<Bar> q);

Is that a liberal requirement? It most certainly is not. Such an interface demands of any caller that they must be able to supply an implementation of IQueryable<Bar>. According to the LSP we must be able to supply any implementation without changing the correctness of the program. That goes for both the implementer of IQueryable<Bar> as well as the implementation of SomeMethod.

At this point it's important to keep in mind the purpose of IQueryable<T>: it's intended for implementation by query providers. In other words, this isn't just some sequence of Bar instances which can be filtered and projected; no, this is a query expression which is intended to be translated into a query somewhere else - most often some dialect of SQL.

That's quite a demand to put on the caller.

It's certainly a powerful interface (or so it would seem), but is it really necessary? Does SomeMethod really need to be able to perform arbitrarily complex queries against a data source?

In one recent discussion, it turns out that all the developer really wanted to do was to be able to select based on a handful of simple criteria. In another case, the developer only wanted to do simple paging.

Such requirements could be modeled much more simply without making huge demands on the caller. In both cases, we could provide specialized Query Objects instead, or perhaps even simpler, just a set of specialized queries:

IFoo FindById(int fooId);
IFoo FindByCorrelationId(int correlationId);

Or, in the case of paging:

IEnumerable<IFoo> GetFoos(int page);

This is certainly much more liberal in that it requires the caller to supply only the required information in order to implement the methods. Designing APIs in terms of Role Interfaces instead of Header Interfaces makes the APIs much more flexible. This will enable you to respond to change.
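As a rough sketch of what such a Role Interface could look like in practice, the following defines an interface with just a lookup and a paging query, plus an in-memory implementation. The names Foo, IFooReader and InMemoryFooReader, as well as the page size of two, are assumptions made for this illustration only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Foo
{
    public int Id { get; set; }
}

// Role Interface: exposes only the queries the client actually needs.
public interface IFooReader
{
    Foo FindById(int fooId);
    IEnumerable<Foo> GetFoos(int page);
}

// In-memory implementation; a database-backed one would have the same shape.
public class InMemoryFooReader : IFooReader
{
    private const int PageSize = 2; // assumed page size for this sketch
    private readonly List<Foo> foos;

    public InMemoryFooReader(IEnumerable<Foo> foos)
    {
        this.foos = foos.ToList();
    }

    public Foo FindById(int fooId)
    {
        // Returns null when no Foo has the requested ID.
        return this.foos.FirstOrDefault(f => f.Id == fooId);
    }

    public IEnumerable<Foo> GetFoos(int page)
    {
        if (page < 1)
            throw new ArgumentOutOfRangeException("page");

        // Pages are 1-based and ordered by ID to keep paging stable.
        return this.foos
            .OrderBy(f => f.Id)
            .Skip((page - 1) * PageSize)
            .Take(PageSize)
            .ToList();
    }
}

public static class Program
{
    public static void Main()
    {
        var reader = new InMemoryFooReader(
            Enumerable.Range(1, 5).Select(i => new Foo { Id = i }));
        foreach (var foo in reader.GetFoos(2))
            Console.WriteLine(foo.Id);
    }
}
```

Every implementer of IFooReader can honor the whole contract, because the contract consists of exactly two well-defined operations.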

Exposing IQueryable<T> #

The other part of Postel's law states that an API should be conservative in what it sends. In other words, a method must guarantee that the data it returns conforms rigorously to the contract between caller and implementer.

A method returning IQueryable<T> would take this generalized shape:

IQueryable<Bar> GetBars();

When designing APIs, a huge part of the contract is defined by the interface (or base class). Thus, the return type of a method specifies a conservative guarantee about the returned data. In the case of returning IQueryable<Bar> the method thus guarantees that it will return a complete implementation of IQueryable<Bar>.

Is that conservative?

Once again invoking the LSP, a consumer must be able to do anything allowed by IQueryable<Bar> without changing the correctness of the program.

That's a big honking promise to make.

Who can keep that promise?

Current Implementations #

Implementing IQueryable<T> is a huge undertaking. If you don't believe me, just take a look at the official Building an IQueryable provider series of blog posts. Even so, the interface is so flexible and expressive that with a single exception, it's always possible to write a query that a given provider can't translate.

Have you ever worked with LINQ to Entities or another ORM and received a NotSupportedException? Lots of people have. In fact, with a single exception, it's my firm belief that all existing implementations violate the LSP (in fact, I challenge my readers to refer me to a real, publicly available implementation of IQueryable<T> that can accept any expression I throw at it, and I'll ship a free copy of my book to the first reader to do so).

Furthermore, the subset of features that each implementation supports varies from query provider to query provider. An expression that can be translated by the Entity framework may not work with Microsoft's OData query provider.

The only implementation that fully implements IQueryable<T> is the in-memory implementation (and referring to this one does not earn you a free book). Ironically, this implementation can be considered a Null Object implementation and goes against the whole purpose of the IQueryable<T> interface exactly because it doesn't translate the expression to another language.
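The following sketch illustrates why the in-memory implementation can accept anything: it compiles the expression and runs it as ordinary .NET code. The predicate IsPalindrome and the class name InMemoryQueryDemo are hypothetical; the point is only that the predicate is an arbitrary method with no obvious counterpart in SQL or OData, so a translating query provider would most likely reject the same query.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class InMemoryQueryDemo
{
    // An arbitrary .NET method; nothing about it maps to another query language.
    public static bool IsPalindrome(string s)
    {
        var reversed = new string(s.Reverse().ToArray());
        return string.Equals(s, reversed, StringComparison.OrdinalIgnoreCase);
    }

    // Accepts any IQueryable<string>, which is exactly the problem:
    // the signature promises more than most providers can deliver.
    public static List<string> FindPalindromes(IQueryable<string> words)
    {
        return words.Where(w => IsPalindrome(w)).ToList();
    }

    public static void Main()
    {
        // AsQueryable wraps the array in the in-memory (LINQ to Objects) provider.
        var words = new[] { "Level", "query", "Anna" }.AsQueryable();
        foreach (var word in FindPalindromes(words))
            Console.WriteLine(word);
    }
}
```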

Why This Matters #

You may think this is all a theoretical exercise, but it actually does matter. When writing Clean Code, it's important to design an API in such a way that it's clear what it does.

An interface like this makes false guarantees:

public interface IRepository
{
    IQueryable<T> Query<T>();
}

According to the LSP and Postel's law, it would seem to guarantee that you can write any query expression (no matter how complex) against the returned instance, and it would always work.

In practice, this is never going to happen.

Programmers who define such interfaces invariably have a specific ORM in mind, and they implicitly tend to stay within the bounds they know are safe for that specific ORM. This is a leaky abstraction.

If you have a specific ORM in mind, then be explicit about it. Don't hide it behind an interface. It creates the illusion that you can replace one implementation with another. In practice, that's impossible. Imagine attempting to provide an implementation over an Event Store.

The cake is a lie.

Comments

If you have the following query you get different results with the EF LINQ provider and the LINQ to Objects provider

var r = from x in context.Xs select new { x.Y.Z }

depending on the type of join between the sets or on whether Y is null, which makes in-memory testing difficult

P.S. Kudos by the way. I love every single piece of it.

Also, having a generic repository as an intermediate step between an ORM and a specific repository lets you unit test specific repositories and/or use them with multiple persistence mechanisms (which is sometimes desirable). Despite the leaks, this "pattern" does have some uses in my book when used VERY carefully (although it is misused in 99 cases out of 100 when you see it).

Everybody who wants to implement the repositories using EF would use EntityRepository and everybody who wants to use other ORMs can create his own derived abstraction from IRepository.

But the consumer code in my library just uses the IRepository interface (e.g. a CRUD ViewModel base class).

Is it correct?

See http://bartdesmet.net/blogs/bart/archive/2008/08/15/the-most-funny-interface-of-the-year-iqueryable-lt-t-gt.aspx and also take a look at the section 9min 30 secs into this talk http://channel9.msdn.com/Events/PDC/PDC10/FT10

Who on Earth defines an API that *takes* an IQueryable? Nobody I know, and I've never had the need to. So the first half of the post is kinda irrelevant ;)

-- Implementing IQueryable{T} is a huge undertaking.

That's why NOBODY has to. The ORM you use will (THEY will take the huge undertaking and they have, go look for EF and NHibernate and even RavenDB who do provide such an API), as well as Linq to Objects for your testing needs.

So, the IRepository.Query{T} is doing *exactly* what its definition promises: "Mediates between the domain and data mapping layers using a collection-like interface for accessing domain objects." (http://martinfowler.com/eaaCatalog/repository.html)

In the ORM-implemented case, it goes against the data mapping layer, in the Linq to Objects case, it does directly over the collection of objects.

It's the PERFECT abstraction to make code that goes against a DB/repository unit-testable. (not that it frees you from having full integration end-to-end tests that go against the *real* repository to make sure you're not using any unsupported constructs against the in-memory version).

IQueryable{T} got rid of an entire slew of useless interfaces to abstract the real repository. We should wholeheartedly embrace it and move forward, instead of longing for the days when we had to do all that manually ;)

That's the thing, if this interface is used in the read side of a CQRS system, there's no event store there. Views need flexible queryability. No?

Saying this:

public IQueryable{Customer} GetActiveCustomers() {
    return CustomersDb.Where(x => x.IsActive).ToList().AsQueryable();
}

tells consumers that the return value of the method is a representation of how the results will be materialized when the query is executed. Saying this, OTOH:

public IEnumerable{Customer} GetActiveCustomers() {
    return CustomersDb.Where(x => x.IsActive).ToList();
}

explicitly tells consumers that you are returning the *results* of a query, and it's none of the consuming code's business to know how it got there.

Materialization of those query results is what you seemed to be focused on, and that's not IQueryable's task. That's the task of the specific LINQ provider. IQueryable's job is only to *describe* queries, not actually execute them.

A repository interface should be explicit about the operations it provides over the set of data, and should not open the door to arbitrary querying that is not part of the application's overall design / architecture. YAGNI

I know that this may fly in the face of those who are used to arbitrary late-bound SQL style query flexibility and code-gen'd ORMs, but this type of setup is not one that's conducive to performance or scaling in the long term. ORMs may be good to get something up and running without a lot of effort (or thought into how the data is used), but they rapidly show their cracks / leaks over time. This is why you see sites like StackOverflow as they reach scale adopt MicroORMs that are closer to the metal like Dapper instead of using EF, L2S or NHibernate. (Other great Micro ORMs are Massive, OrmLite, and PetaPoco to name just a few.)

Is it work to be explicit in your contracts around data access? Absolutely... but this is a better long-term design decision when you factor in testability and performance goals. How do you test the perf characteristics of an API that doesn't have well-defined operations? Well... you can't really.

Also -- consider stores that are *not* SQL based as Mark alludes to. We're using Riak, where querying capabilities are limited in scope and there are performance trade-offs to certain types of operations on secondary indices. We accept this tradeoff b/c the operational characteristics of Riak are unparalleled and it has great latency characteristics when retrieving data. We need to be explicit about how we allow data to be retrieved in our data access layer because some things are a no-go in our underlying store (for instance, listing keys is extremely expensive at the moment in Riak).

Bind yourself to IQueryable and you've handcuffed yourself. IQueryable is simply not a fit in a lot of cases, and in others it's total overkill... data access is not one size fits all. Embrace the polyglot!

However, I don't yet see how to design an easy to use and read API with query objects. Maybe you could provide a repository implementation that works without IQueryable but is still readable and easy to use?

However, exposing IQueryable in an API is so powerful that it's worth this minor annoyance.

I like the idea of the OData or Web API queries, but would rather they just expose the query parameters and let us build adapters from the query to the datastore as relevant.

The whole idea of web service interfaces is to expose a technology-agnostic interoperable API to the outside world.

Exposing an IQueryable/OData endpoint effectively couples your services to using OData indefinitely, as you won't be able to feasibly determine what 'query space' existing clients are bound to, i.e. what existing queries/tables/views/properties you need to freeze/support indefinitely. This is an issue when exposing any implementation at the surface area of your API, as it limits your ability to add your own custom logic on it, e.g. Authorization, Caching, Monitoring, Rate-Limiting, etc. And because OData is really slow, you'll hit performance/scaling problems with it early. The lack of control over the endpoint means you're effectively heading for a rewrite: https://coldie.net/?tag=servicestack

Let's see how feasible it would be to move off an OData provider implementation by looking at an existing query from Netflix's OData API:

http://odata.netflix.com/Catalog/Titles?$filter=Type%20eq%20'Movie'%20and%20(Rating%20eq%20'G'%20or%20Rating%20eq%20'PG-13')

This service is effectively now coupled to a Table/View called 'Titles' with a column called 'Type'.

And how it would be naturally written if you weren't using OData:

http://api.netflix.com/movies?ratings=G,PG-13

Now if for whatever reason you need to replace the implementation of the existing service (e.g. a better technology platform has emerged, or it runs too slow and you need to move this dataset over to a NoSQL/Full-TextIndexing-backed sln), how much effort would it take to replace the OData impl (which is effectively bound to an RDBMS schema and OData binary impl) compared to the more intuitive impl-agnostic query above? It's not impossible, but as it's prohibitively difficult to implement an OData API for a new technology, rewriting and breaking existing clients would tend to be the preferred option.

Letting internal implementations dictate the external facing url structure is a sure way to break existing clients when things need to change. This is why you should expose your services behind Cool URIs, i.e. logical permanent urls (that are unimpeded by implementation) that do not change, as you generally don't want to limit the technology choices of your services.

It might be a good option if you want to deliver ad hoc 'exploratory services' on it, but it's not something I'd ever want external clients bound to in a production system. And if I'm only going to limit its use to my local intranet, what advantages does it have over just giving out a read-only connection string, which will allow others to use their favourite SQL explorer, reporting tool, or any other tool that speaks SQL?

The testable interface abstraction allows for one-liners in the ORM-bound implementation, as well as the testable fake. Doesn't get any simpler than that.

That hardly seems like complicated stuff to me.

Mario, thank you for writing. It occasionally happens that I change my mind, as I learn new skills and gain more experience, but in this case, I haven't changed my mind. I indirectly use IQueryable<T> when I occasionally have to query a relational database with the Entity Framework or LINQ to SQL (which happens extremely rarely these days), but I never design my own interfaces around IQueryable<T>; my reasons for introducing an interface is normally to reduce coupling, but introducing any interface that exposes or depends on IQueryable<T> doesn't reduce coupling.

In Agile Principles, Patterns, and Practices in C#, Robert C. Martin explains in chapter 11 that "clients [...] own the abstract interfaces" - in other words: the client, which invokes methods on the interface, should define the methods that the interface should expose, based on what it needs - not based on what an arbitrary implementation might be able to implement. This is the design philosophy I always follow. A client shouldn't have to need to be able to perform arbitrary queries against a data access layer. If it does, it's such a Leaky Abstraction anyway that the interface isn't going to help; in such cases, just let the client talk directly to the ORM or the ADO.NET objects. That's much easier to understand.

The same argument also goes against designing interfaces around Expression<Func<T, bool>>. It may seem flexible, but the fact is that such an expression can be arbitrarily complex, so in practice, it's impossible to guarantee that you can translate every expression to all SQL dialects; and what about OData? Or queries against MongoDB?

Still, I rarely use ORMs at all. Instead, I increasingly rely on simple abstractions like Drain when designing my systems.

Robust DI With the ASP.NET Web API

Note 2014-04-03 19:46 UTC: This post describes how to address various Dependency Injection issues with a Community Technical Preview (CTP) of ASP.NET Web API 1. Unless you're still using that 2012 CTP, it's no longer relevant. Instead, refer to my article about Dependency Injection with the final version of Web API 1 (which is also relevant for Web API 2).

Like the WCF Web API, the new ASP.NET Web API supports Dependency Injection (DI), but the approach is different and the resulting code you'll have to write is likely to be more complex. This post describes how to enable robust DI with the new Web API. Since this is based on the beta release, I hope that it will become easier in the final release.

At first glance, enabling DI on an ASP.NET Web API looks seductively simple. As always, though, the devil is in the details. Nikos Baxevanis has already provided a more thorough description, but it's even more tricky than that.

Protocol #

To enable DI, all you have to do is to call the SetResolver method, right? It even has an overload that enables you to supply two code blocks instead of implementing an interface (although you can certainly also implement IDependencyResolver). Could it be any easier than that?

Yes, it most certainly could.

Imagine that you'd like to hook up your DI Container of choice. As a first attempt, you try something like this:

GlobalConfiguration.Configuration.ServiceResolver.SetResolver(
    t => this.container.Resolve(t),
    t => this.container.ResolveAll(t).Cast<object>());

This compiles. Does it work? Yes, but in a rather scary manner. Although it satisfies the interface, it doesn't satisfy the protocol ("an interface describes whether two components will fit together, while a protocol describes whether they will work together." (GOOS, p. 58)).

The protocol, in this case, is that if you (or rather the container) can't resolve the type, you should return null. What's even worse is that if your code throws an exception (any exception, apparently), DependencyResolver will suppress it. In case you didn't know, this is strongly frowned upon in the .NET Framework Design Guidelines.

Even so, the official introduction article instead chooses to play along with the protocol and explicitly handle any exceptions. Something along the lines of this ugly code:

GlobalConfiguration.Configuration.ServiceResolver.SetResolver(
    t =>
    {
        try
        {
            return this.container.Resolve(t);
        }
        catch (ComponentNotFoundException)
        {
            return null;
        }
    },
    t =>
    {
        try
        {
            return this.container.ResolveAll(t).Cast<object>();
        }
        catch (ComponentNotFoundException)
        {
            return new List<object>();
        }
    }
);

Notice how try/catch is used for control flow - another major no no in the .NET Framework Design Guidelines.

At least with a good DI Container, we can do something like this instead:

GlobalConfiguration.Configuration.ServiceResolver.SetResolver(
    t => this.container.Kernel.HasComponent(t) ?
        this.container.Resolve(t) :
        null,
    t => this.container.ResolveAll(t).Cast<object>());

Still, first impressions don't exactly inspire trust in the implementation...
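To make the protocol concrete without tying it to any particular DI Container, here is a small sketch of a resolver that returns null for unknown single services and an empty sequence for unknown collections, without relying on exceptions for control flow. TinyContainer and Greeter are hypothetical types written for this illustration; they are not part of the Web API or of any real container, and the sketch doesn't call SetResolver at all.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical minimal container, only here to illustrate the protocol.
public class TinyContainer
{
    private readonly Dictionary<Type, List<Func<object>>> registrations =
        new Dictionary<Type, List<Func<object>>>();

    public void Register<T>(Func<T> factory) where T : class
    {
        List<Func<object>> factories;
        if (!this.registrations.TryGetValue(typeof(T), out factories))
        {
            factories = new List<Func<object>>();
            this.registrations.Add(typeof(T), factories);
        }
        factories.Add(() => factory());
    }

    // Protocol: return null, rather than throw, when the type is unknown.
    public object GetService(Type serviceType)
    {
        List<Func<object>> factories;
        return this.registrations.TryGetValue(serviceType, out factories)
            ? factories[0]()
            : null;
    }

    // Protocol: return an empty sequence when the type is unknown.
    public IEnumerable<object> GetServices(Type serviceType)
    {
        List<Func<object>> factories;
        return this.registrations.TryGetValue(serviceType, out factories)
            ? factories.Select(f => f()).ToList()
            : new List<object>();
    }
}

public class Greeter
{
    public string Greet() { return "hello"; }
}

public static class Program
{
    public static void Main()
    {
        var container = new TinyContainer();
        container.Register(() => new Greeter());

        // These two delegates have the shape of the two code blocks discussed above.
        Func<Type, object> getService = container.GetService;
        Func<Type, IEnumerable<object>> getServices = container.GetServices;

        Console.WriteLine(getService(typeof(Greeter)) != null);   // True
        Console.WriteLine(getService(typeof(Uri)) == null);       // True
        Console.WriteLine(getServices(typeof(Uri)).Any());        // False
    }
}
```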

API Design Issues #

Next, I would like to direct your attention to the DependencyResolver API. At its core, it looks like this:

public interface IDependencyResolver
{
    object GetService(Type serviceType);
    IEnumerable<object> GetServices(Type serviceType);
}

It can create objects, but what about decommissioning? What if, deep in a dependency graph, a Controller contains an IDisposable object? This is not a particularly exotic scenario - it might be an instance of an Entity Framework ObjectContext. While an ApiController itself implements IDisposable, it may not know that it contains an injected object graph with one or more IDisposable leaf nodes.

It's a fundamental rule of DI that you must Release what you Resolve. That's not possible with the DependencyResolver API. The result may be memory leaks.

Fortunately, it turns out that there's a fix for this (at least for Controllers). Unfortunately, this workaround leverages another design problem with DependencyResolver.

Mixed Responsibilities #

It turns out that when you wire a custom resolver up with the SetResolver method, the ASP.NET Web API will query your custom resolver (such as a DI Container) for not only your application classes, but also for its own infrastructure components. That surprised me a bit because of the mixed responsibility, but at least this is a useful hook.

One of the first types the framework will ask for is an instance of IHttpControllerFactory, which looks like this:

public interface IHttpControllerFactory
{
    IHttpController CreateController(HttpControllerContext controllerContext, string controllerName);
    void ReleaseController(IHttpController controller);
}

Fortunately, this interface has a Release hook, so at least it's possible to release Controller instances, which is most important because there will be a lot of them (one per HTTP request).

Discoverability Issues #

The IHttpControllerFactory looks a lot like the well-known ASP.NET MVC IControllerFactory interface, but there are subtle differences. In ASP.NET MVC, there's a DefaultControllerFactory with appropriate virtual methods one can override (it follows the Template Method pattern).

There's also a default factory in the Web API (DefaultHttpControllerFactory), but unfortunately no Template Methods to override. While I could write an algorithm that maps from the controllerName parameter to a type which can be passed to a DI Container, I'd prefer to be able to reuse the implementation which the default factory contains.

In ASP.NET MVC, this is possible by overriding the GetControllerInstance method, but it turns out that the Web API (beta) does this slightly differently. It favors composition over inheritance (which is actually a good thing, so kudos for that), so after mapping controllerName to a Type instance, it invokes an instance of the IHttpControllerActivator interface (did I hear anyone say "FactoryFactory?"). Very loosely coupled (good), but not very discoverable (not so good). It would have been more discoverable if DefaultControllerFactory had used Constructor Injection to get its dependency, rather than relying on the Service Locator which DependencyResolver really is.

However, this is only an issue if you need to hook into the Controller creation process, e.g. in order to capture the HttpControllerContext for further use. In normal scenarios, despite what Nikos Baxevanis describes in his blog post, you don't have to override or implement IHttpControllerFactory.CreateController. The DependencyResolver infrastructure will automatically invoke your GetService implementation (or the corresponding code block) whenever a Controller instance is required.

Releasing Controllers #

The easiest way to make sure that all Controller instances are being properly released is to derive a class from DefaultHttpControllerFactory and override the ReleaseController method:

public class ReleasingControllerFactory : DefaultHttpControllerFactory
{
    private readonly Action<object> release;

    public ReleasingControllerFactory(Action<object> releaseCallback, HttpConfiguration configuration)
        : base(configuration)
    {
        this.release = releaseCallback;
    }

    public override void ReleaseController(IHttpController controller)
    {
        this.release(controller);
        base.ReleaseController(controller);
    }
}

Notice that it's not necessary to override the CreateController method, since the default implementation is good enough - it'll ask the DependencyResolver for an instance of IHttpControllerActivator, which will again ask the DependencyResolver for an instance of the Controller type, in the end invoking your custom GetService implementation.

To keep the above example generic, I just injected an Action<object> into ReleasingControllerFactory - I really don't wish to turn this into a discussion about the merits and demerits of various DI Containers. In any case, I'll leave it as an exercise to you to wire up your favorite DI Container so that the releaseCallback is actually a call to the container's Release method.
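As one possible shape for that wiring, the sketch below pairs Resolve with Release in a deliberately simplified, hypothetical container and exposes its Release method as an Action<object>. TrackingContainer and FakeController are made up for this illustration, and the sketch doesn't reference ReleasingControllerFactory itself, since that class depends on the Web API assemblies. A real container would typically also release the dependencies further down the object graph.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical container that remembers what it resolved so it can release it.
public class TrackingContainer
{
    private readonly HashSet<object> tracked = new HashSet<object>();

    public int TrackedCount
    {
        get { return this.tracked.Count; }
    }

    public T Resolve<T>(Func<T> factory) where T : class
    {
        var instance = factory();
        this.tracked.Add(instance);
        return instance;
    }

    // Release what you Resolve: stop tracking and dispose if applicable.
    public void Release(object instance)
    {
        if (!this.tracked.Remove(instance))
            return; // not resolved by this container, so not ours to dispose

        var disposable = instance as IDisposable;
        if (disposable != null)
            disposable.Dispose();
    }
}

// Stand-in for a Controller that holds a disposable resource.
public class FakeController : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose()
    {
        this.IsDisposed = true;
    }
}

public static class Program
{
    public static void Main()
    {
        var container = new TrackingContainer();

        // This delegate has the shape of the releaseCallback constructor argument.
        Action<object> releaseCallback = container.Release;

        var controller = container.Resolve(() => new FakeController());
        releaseCallback(controller);

        Console.WriteLine(controller.IsDisposed);   // True
        Console.WriteLine(container.TrackedCount);  // 0
    }
}
```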

Lifetime Cycles of Infrastructure Components #

Before I conclude, I'd like to point out another POLA violation that hit me during my investigation.

The ASP.NET Web API utilizes DependencyResolver to resolve its own infrastructure types (such as IHttpControllerFactory, IHttpControllerActivator, etc.). Any custom DependencyResolver you supply will also be queried for these types. However:

When resolving infrastructure components, the Web API doesn't respect any custom lifecycle you may have defined for these components.

At a certain point while I investigated this, I wanted to configure a custom IHttpControllerActivator to have a Web Request Context (my book, section 8.3.4) - in other words, I wanted to create a new instance of IHttpControllerActivator for each incoming HTTP request.

This is not possible. The framework queries a custom DependencyResolver for an infrastructure type, but even when it receives an instance (i.e. not null), it doesn't trust the DependencyResolver to efficiently manage the lifetime of that instance. Instead, it caches this instance forever, and never asks for it again. This is, in my opinion, a mistaken responsibility, and I hope it will be corrected in the final release.

Concluding Thoughts #

Wiring up the ASP.NET Web API with robust DI is possible, but much harder than it ought to be. Suggestions for improvements are:

- A Release hook in DependencyResolver.

- The framework itself should trust the DependencyResolver to efficiently manage the lifetime of all objects it creates.

As I've described, there are other places where minor adjustments would be helpful, but these two suggestions are the most important ones.

Update (2012.03.21): I've posted this feedback to the product group on uservoice and Connect - if you agree, please visit those sites and vote up the issues.

Comments

Won't happen. The release issue was highlighted during the RCs and Beta releases and the feedback from Brad Wilson was they were not going to add a release mechanism :(

The same applies to the *Activators that were added (IViewPageActivator, etc.), they really need a release hook too

I know that for some people it's not an option whether or not to use what MS produces. If you are not one of them, I suggest you give FubuMVC a try, an MVC framework built from the beginning with DI in mind.

It has its own shortcomings, but the advantages outweigh the problems.

The issue you had about not releasing disposable objects is simply not an issue in FubuMVC.

Kind regards,

Jaime.

First of all, right now I need a framework for building REST services, and not a web framework. FubuMVC might have gained REST features (such as conneg) since last I looked, but the ASP.NET Web API does have quite an attractive feature set for building REST APIs.

Secondly, last time I discussed the issue of releasing components, Jeremy Miller was very much against it. StructureMap doesn't have a Release hook, so I wonder whether FubuMVC does...

Personally, I'm not against trying other technologies, and I've previously looked a bit at OpenRasta and Nancy. However, many of my clients prefer Microsoft technologies.

I must also admit to be somewhat taken aback by the direction Microsoft has taken here. The WCF Web API was really good, so I also felt it was my duty to provide feedback to Microsoft as well as the community about this.

Yes, SM does not have a built-in release hook, I agree on that, but FubuMVC uses something called "NestedContainer".

This nested container shares the same settings as your top-level container (often StructureMap.ObjectFactory.Container), but with one added bonus: on disposal, it also disposes any disposable dependency it resolved during the request lifetime.

And all of this is controlled by behaviors that wrap an action call at runtime (but are configured at start-up), sort of a "Russian Doll Model", as the Fubu folks like to call it.

So all these behaviors wrap each other, allowing you to effectively dispose resources.

To avoid giving wrong explanations, I'd better share a link which already does this in an effective way:

http://codebetter.com/jeremymiller/2011/01/09/fubumvcs-internal-runtime-the-russian-doll-model-and-how-it-compares-to-asp-net-mvc-and-openrasta/

And by no means take my words as a harsh opinion against MS MVC; it's just a matter of preference. If MS MVC or the ASP.NET Web API solves people's needs, I will not try to convince them otherwise.

Kind regards,

Jaime.

I'm currently using it to register my repository following this guidance: http://www.devtrends.co.uk/blog/introducing-the-unity.webapi-nuget-package

Thanks,

Vindberg.

https://github.com/ServiceStack

The ServiceStack philosophy on IoC - https://github.com/ServiceStack/ServiceStack/wiki/The-IoC-container

I'm sure you'll take issue with something in the stack, but keep in mind Demis iterates very quickly and the stack is a very pragmatic / results driven one. Unlike Microsoft, he's very willing to accept patches, etc where things may fall short of user reqs, so if you have feedback, he's always listening.

Just beyond my understanding how you can design a support for IoC container with no decommissioning.

Hopefully MS will fix this shortcoming before RTM.

But I don't see an ASP.NET MVC release number assigned to it. There is no .Release() method in the ASP.NET MVC 4 release candidate...safe to say they aren't resolving this until the next release?

I've found this article very useful in understanding the use of Windsor with Web API, and I am now wondering whether anything has changed in the RC of MVC 4 that would make any part of this method redundant? Would be great to get an update from you regarding the current status of the framework with regards to implementing an IOC with Web API.

Many Thanks

Chris

In the future, I may add an update to this article, unless someone else beats me to it.

Migrating from WCF Web API to ASP.NET Web API

Now that the WCF Web API has ‘become' the ASP.NET Web API, I've had to migrate a semi-complex code base from the old to the new framework. These are my notes from that process.

Migrating Project References #

As far as I can tell, the ASP.NET Web API isn't just a port of the WCF Web API. At a cursory glance, it looks like a complete reimplementation. If it's a port of the old code, it's at least a rather radical one. The assemblies have completely different names, and so on.

Both old and new project, however, are based on NuGet packages, so it wasn't particularly hard to change.

To remove the old project references, I ran this NuGet command:

Uninstall-Package webapi.all -RemoveDependencies

followed by

Install-Package aspnetwebapi

to install the project references for the ASP.NET Web API.

Rename Resources to Controllers #

In the WCF Web API, there was no naming convention for the various resource classes. In the quickstarts, they were sometimes called Apis (like ContactsApi), and I called mine Resources (like CatalogResource). Whatever your naming convention was, the easiest thing is to find them all and rename them to end with Controller (e.g. CatalogController).

AFAICT you can change the naming convention, but I didn't care enough to do so.

Derive Controllers from ApiController #

Unless you care to manually implement IHttpController, each Controller should derive from ApiController:

public class CatalogController : ApiController

Remove Attributes #

The WCF Web API uses the [WebGet] and [WebInvoke] attributes. The ASP.NET Web API, on the other hand, uses routes, so I removed all the attributes, including their UriTemplates:

//[WebGet(UriTemplate = "/")] public Catalog GetRoot()

Add Routes #

As a replacement for attributes and UriTemplates, I added HTTP routes:

routes.MapHttpRoute( name: "DefaultApi", routeTemplate: "{controller}/{id}", defaults: new { controller = "Catalog", id = RouteParameter.Optional } );

The MapHttpRoute method is an extension method defined in the System.Web.Http namespace, so I had to add a using directive for it.

Composition #

Wiring up Controllers with Constructor Injection turned out to be rather painful. For a starting point, I used Nikos Baxevanis' guide, but it turns out there are further subtleties which should be addressed (more about this later, but to prevent a stream of comments about the DependencyResolver API: yes, I know about that, but it's inadequate for a number of reasons).

Media Types #

In the ASP.NET Web API application/json is now the default media type format if the client doesn't supply any Accept header. For the WCF Web API I had to resort to a hack to change the default, so this was a pleasant surprise.

It's still pretty easy to add more supported media types:

GlobalConfiguration.Configuration.Formatters.XmlFormatter .SupportedMediaTypes.Add( new MediaTypeHeaderValue("application/vnd.247e.artist+xml")); GlobalConfiguration.Configuration.Formatters.JsonFormatter .SupportedMediaTypes.Add( new MediaTypeHeaderValue("application/vnd.247e.artist+json"));

(Talk about a Law of Demeter violation, BTW...)

However, due to an over-reliance on global state, it's not so easy to figure out how one would go about mapping certain media types to only a single Controller. This was much easier in the WCF Web API because it was possible to assign a separate configuration instance to each Controller/Api/Resource/Service/Whatever... This, I've still to figure out how to do...

Comments

webapi prev6 seems much better than the current beta

http://code.msdn.microsoft.com/Contact-Manager-Web-API-0e8e373d/view/Discussions

see Daniel reply to my post

Implementing an Abstract Factory

Abstract Factory is a tremendously useful pattern when used with Dependency Injection (DI). While I've repeatedly described how it can be used to solve various problems in DI, apparently I've never described how to implement one. As a comment to an older blog post of mine, Thomas Jaskula asks how I'd implement the IOrderShipperFactory.

To stay consistent with the old order shipper scenario, this blog post outlines three alternative ways to implement the IOrderShipperFactory interface.

To make it a bit more challenging, the implementation should create instances of the OrderShipper2 class, which itself has a dependency:

public class OrderShipper2 : IOrderShipper { private readonly IChannel channel; public OrderShipper2(IChannel channel) { if (channel == null) throw new ArgumentNullException("channel"); this.channel = channel; } public void Ship(Order order) { // Ship the order and // raise a domain event over this.channel } }

In order to be able to create an instance of OrderShipper2, any factory implementation must be able to supply an IChannel instance.

Manually Coded Factory #

The first option is to manually wire up the OrderShipper2 instance within the factory:

public class ManualOrderShipperFactory : IOrderShipperFactory { private readonly IChannel channel; public ManualOrderShipperFactory(IChannel channel) { if (channel == null) throw new ArgumentNullException("channel"); this.channel = channel; } public IOrderShipper Create() { return new OrderShipper2(this.channel); } }

This has the advantage that it's easy to understand. It can be unit tested and implemented in the same library that also contains OrderShipper2 itself. This means that any client of that library is supplied with a ready-to-use implementation.

The disadvantage of this approach is that if/when the constructor of OrderShipper2 changes, the ManualOrderShipperFactory class must also be corrected. Pragmatically, this may not be a big deal, but one could successfully argue that this violates the Open/Closed Principle.
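As a rough illustration of the claim that the manually coded factory is easy to unit test, the following sketch exercises it with a hand-rolled spy. Several parts are assumptions made to keep the example self-contained: the Publish member on IChannel, the empty Order class, the message string, and the SpyChannel and ManualFactoryDemo helpers do not come from the original code.

```csharp
using System;
using System.Collections.Generic;

public class Order { }

// Assumption: the real IChannel is not shown, so Publish is invented here.
public interface IChannel { void Publish(string message); }
public interface IOrderShipper { void Ship(Order order); }
public interface IOrderShipperFactory { IOrderShipper Create(); }

public class OrderShipper2 : IOrderShipper
{
    private readonly IChannel channel;

    public OrderShipper2(IChannel channel)
    {
        if (channel == null)
            throw new ArgumentNullException("channel");
        this.channel = channel;
    }

    public void Ship(Order order)
    {
        // Stand-in for raising a domain event over the channel.
        this.channel.Publish("OrderShipped");
    }
}

public class ManualOrderShipperFactory : IOrderShipperFactory
{
    private readonly IChannel channel;

    public ManualOrderShipperFactory(IChannel channel)
    {
        if (channel == null)
            throw new ArgumentNullException("channel");
        this.channel = channel;
    }

    public IOrderShipper Create()
    {
        return new OrderShipper2(this.channel);
    }
}

// Hand-rolled test spy that records everything published to it.
public class SpyChannel : IChannel
{
    public readonly List<string> Messages = new List<string>();

    public void Publish(string message)
    {
        this.Messages.Add(message);
    }
}

public static class ManualFactoryDemo
{
    // Creates a shipper through the factory and ships a single order.
    public static SpyChannel Run()
    {
        var channel = new SpyChannel();
        IOrderShipperFactory factory = new ManualOrderShipperFactory(channel);
        factory.Create().Ship(new Order());
        return channel;
    }
}
```

No container and no mocking library is needed, which is arguably the main attraction of this option.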

Container-based Factory #

Another option is to make the implementation a thin Adapter over a DI Container - in this example Castle Windsor:

public class ContainerFactory : IOrderShipperFactory { private IWindsorContainer container; public ContainerFactory(IWindsorContainer container) { if (container == null) throw new ArgumentNullException("container"); this.container = container; } public IOrderShipper Create() { return this.container.Resolve<IOrderShipper>(); } }

But wait! Isn't this an application of the Service Locator anti-pattern? Not if this class is part of the Composition Root.

If this implementation was placed in the same library as OrderShipper2 itself, it would mean that the library would have a hard dependency on the container. In such a case, it would certainly be a Service Locator.

However, when a Composition Root already references a container, it makes sense to place the ContainerFactory class there. This changes its role to the pure infrastructure component it really ought to be. This seems more SOLID, but the disadvantage is that there's no longer a ready-to-use implementation packaged together with the LazyOrderShipper2 class. All new clients must supply their own implementation.
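The placement argument can be sketched without any container at all. In the variation below, a plain delegate stands in for the call to container.Resolve, and the factory lives next to the composition code. This is not the Windsor-based implementation shown above; DelegateOrderShipperFactory, LoggingOrderShipper and CompositionRoot are hypothetical names, and the other types are simplified.

```csharp
using System;

public class Order { }
public interface IOrderShipper { void Ship(Order order); }
public interface IOrderShipperFactory { IOrderShipper Create(); }

// Lives in the library: knows nothing about containers or composition.
public class LoggingOrderShipper : IOrderShipper
{
    public void Ship(Order order)
    {
        Console.WriteLine("Order shipped.");
    }
}

// Lives in the Composition Root: a thin Adapter over "whatever resolves".
public sealed class DelegateOrderShipperFactory : IOrderShipperFactory
{
    private readonly Func<IOrderShipper> resolve;

    public DelegateOrderShipperFactory(Func<IOrderShipper> resolve)
    {
        if (resolve == null)
            throw new ArgumentNullException("resolve");
        this.resolve = resolve;
    }

    public IOrderShipper Create()
    {
        return this.resolve();
    }
}

public static class CompositionRoot
{
    public static IOrderShipperFactory ComposeFactory()
    {
        // The lambda plays the role of container.Resolve<IOrderShipper>().
        return new DelegateOrderShipperFactory(() => new LoggingOrderShipper());
    }
}
```

Because only the Composition Root sees the delegate (or the container), the library that defines IOrderShipperFactory keeps no reference to any DI infrastructure.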

Dynamic Proxy #

The third option is to basically reduce the principle behind the container-based factory to its core. Why bother writing even a thin Adapter if one can be automatically generated?

With Castle Windsor, the Typed Factory Facility makes this possible:

container.AddFacility<TypedFactoryFacility>(); container.Register(Component .For<IOrderShipperFactory>() .AsFactory()); var factory = container.Resolve<IOrderShipperFactory>();

There is no longer any code which implements IOrderShipperFactory. Instead, a class conceptually similar to the ContainerFactory class above is dynamically generated and emitted at runtime.

While the code never materializes, conceptually, such a dynamically emitted implementation is still part of the Composition Root.

This approach has the advantage that it's very DRY, but the disadvantages are similar to the container-based implementation above: there's no longer a ready-to-use implementation. There's also the additional disadvantage that out of the three alternatives outlined here, the proxy-based implementation is the most difficult to understand.
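To demystify the idea of a factory that is never written by hand, here is a small sketch that uses System.Reflection.DispatchProxy from the .NET base class library to emit an IOrderShipperFactory implementation at runtime. It is not how Castle Windsor's Typed Factory Facility works internally; it only illustrates the principle. ResolvingProxy, ProxyFactoryDemo and NullOrderShipper are hypothetical names, and the delegate stands in for the container.

```csharp
#nullable disable
using System;
using System.Reflection;

public class Order { }
public interface IOrderShipper { void Ship(Order order); }
public interface IOrderShipperFactory { IOrderShipper Create(); }

public class NullOrderShipper : IOrderShipper
{
    public void Ship(Order order) { }
}

// The runtime-generated class derives from this type; every call made
// through the proxied interface ends up in Invoke.
public class ResolvingProxy : DispatchProxy
{
    // Stand-in for the container: maps a requested type to an instance.
    public Func<Type, object> Resolve { get; set; }

    protected override object Invoke(MethodInfo targetMethod, object[] args)
    {
        // Resolve whatever type the factory method promises to return.
        return this.Resolve(targetMethod.ReturnType);
    }
}

public static class ProxyFactoryDemo
{
    public static IOrderShipperFactory CreateFactory(Func<Type, object> resolve)
    {
        // No class in this file implements IOrderShipperFactory;
        // DispatchProxy emits one at runtime.
        IOrderShipperFactory factory =
            DispatchProxy.Create<IOrderShipperFactory, ResolvingProxy>();
        ((ResolvingProxy)(object)factory).Resolve = resolve;
        return factory;
    }
}
```

A caller would write something like `ProxyFactoryDemo.CreateFactory(t => new NullOrderShipper()).Create()`; with a real container, the delegate would forward to its resolve method instead.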

Comments

But I have a question. What if the object created by the factory implements IDisposable? Where should we call Dispose()?

Sorry for my English...

Often, when I open this blog from the google search results page, javascript function "highlightWord" hangs my Firefox. Probably too big cycle on DOM.

You can read more about this in chapter 6 in my book.

Regarding the bug report: thanks - I've noticed it too, but didn't know what to do about it...

You have cleared up my doubts with this sentence: "But wait! Isn’t this an application of the Service Locator anti-pattern? Not if this class is part of the Composition Root." I just wasn't sure whether it was done well or not.

I also use the Dynamic Proxy factory because it's a great feature.

Thomas

I thought about this "life time" problem. And I've got an idea.

What if we let a concrete factory implement the IDisposable interface?

In the factory.Create method we push every resolved service into a HashSet.

In the factory.Dispose method we call the container.Release method for each object in the HashSet.

Then we register our factory with a short lifetime, like Transient.

So the container will release the services created by the factory as soon as possible.

What do you think about it?
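The idea could be sketched roughly like this. Two delegates stand in for container.Resolve and container.Release, so the example does not depend on any particular container; TrackingOrderShipperFactory and DisposableOrderShipper are hypothetical names, and the sketch is not thread-safe.

```csharp
using System;
using System.Collections.Generic;

public class Order { }
public interface IOrderShipper { void Ship(Order order); }
public interface IOrderShipperFactory { IOrderShipper Create(); }

public sealed class TrackingOrderShipperFactory : IOrderShipperFactory, IDisposable
{
    private readonly Func<IOrderShipper> resolve;   // stands in for container.Resolve
    private readonly Action<IOrderShipper> release; // stands in for container.Release
    private readonly HashSet<IOrderShipper> created = new HashSet<IOrderShipper>();

    public TrackingOrderShipperFactory(
        Func<IOrderShipper> resolve, Action<IOrderShipper> release)
    {
        if (resolve == null)
            throw new ArgumentNullException("resolve");
        if (release == null)
            throw new ArgumentNullException("release");
        this.resolve = resolve;
        this.release = release;
    }

    public IOrderShipper Create()
    {
        // Remember every instance handed out so it can be released later.
        var shipper = this.resolve();
        this.created.Add(shipper);
        return shipper;
    }

    public void Dispose()
    {
        // Release everything this factory created, then forget it.
        foreach (var shipper in this.created)
            this.release(shipper);
        this.created.Clear();
    }
}

// Sample disposable service, used only to observe the release.
public sealed class DisposableOrderShipper : IOrderShipper, IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Ship(Order order) { }

    public void Dispose()
    {
        this.IsDisposed = true;
    }
}
```

With this shape, the lifetime of the created services is tied to the lifetime of the factory itself.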

About bug in javascript ...

Currently the DOM visitor method "highlightWord" is called once for each word. That is very slow. And empty words passed into the visitor cause the creation of many empty SPAN elements, which is slower still.

I took the liberty of rewriting your functions... Just replace them with the following code.

    var googleSearchHighlight = function () {
        if (!document.createElement) return;
        ref = document.referrer;
        if (ref.indexOf('?') == -1 || ref.indexOf('/') != -1) {
            if (document.location.href.indexOf('PermaLink') != -1) {
                if (ref.indexOf('SearchView.aspx') == -1) return;
            }
            else {
                //Added by Scott Hanselman
                ref = document.location.href;
                if (ref.indexOf('?') == -1) return;
            }
        }
        //get all words
        var allWords = [];
        qs = ref.substr(ref.indexOf('?') + 1);
        qsa = qs.split('&');
        for (i = 0; i < qsa.length; i++) {
            qsip = qsa[i].split('=');
            if (qsip.length == 1) continue;
            if (qsip[0] == 'q' || qsip[0] == 'p') { // q= for Google, p= for Yahoo
                words = decodeURIComponent(qsip[1].replace(/\+/g, ' ')).split(/\s+/);
                for (w = 0; w < words.length; w++) {
                    var word = words[w];
                    if (word.length)
                        allWords.push(word);
                }
            }
        }
        //pass words into DOM visitor
        if(allWords.length)
            highlightWord(document.getElementsByTagName("body")[0], allWords);
    }

    var highlightWord = function (node, allWords) {
        // Iterate into this nodes childNodes
        if (node.hasChildNodes) {
            var hi_cn;
            for (hi_cn = 0; hi_cn < node.childNodes.length; hi_cn++) {
                highlightWord(node.childNodes[hi_cn], allWords);
            }
        }
        // And do this node itself
        if (node.nodeType == 3) { // text node
            //do words iteration
            for (var w = 0; w < allWords.length; w++) {
                var word = allWords[w];
                if (!word.length)
                    continue;
                tempNodeVal = node.nodeValue.toLowerCase();
                tempWordVal = word.toLowerCase();
                if (tempNodeVal.indexOf(tempWordVal) != -1) {
                    pn = node.parentNode;
                    if (pn && pn.className != "searchword") {
                        // word has not already been highlighted!
                        nv = node.nodeValue;
                        ni = tempNodeVal.indexOf(tempWordVal);
                        // Create a load of replacement nodes
                        before = document.createTextNode(nv.substr(0, ni));
                        docWordVal = nv.substr(ni, word.length);
                        after = document.createTextNode(nv.substr(ni + word.length));
                        hiwordtext = document.createTextNode(docWordVal);
                        hiword = document.createElement("span");
                        hiword.className = "searchword";
                        hiword.appendChild(hiwordtext);
                        pn.insertBefore(before, node);
                        pn.insertBefore(hiword, node);
                        pn.insertBefore(after, node);
                        pn.removeChild(node);
                    }
                }
            }
        }
    }

I uploaded the .js file here: http://rghost.ru/37057487

Granted, if the constructor to OrderShipper2 changes, you MUST modify the abstract factory. However, isn't modifying the constructor of OrderShipper2 itself a violation of the OCP? If you are adding new dependencies, you are probably making a significant change.

At that point, you would just create a new implementation of IOrderShipper.

(Thank you very much for your assistance with the javascript - I didn't even know which particular script was causing all that trouble. Unfortunately, the script itself is compiled into the dasBlog engine which hosts this blog, so I can't change it. However, I think I managed to switch it off... Yet another reason to find myself a new blog platform...)

Btw, another thing I've been thinking about is the abstract factory's responsibility. If a factory consumer can create a service with the factory.Create method, maybe we should also let it destroy the object with a factory.Destroy method? A piece of the lifetime management moves from one place to another. The factory consumer takes responsibility for the lifetime (defines a new scope). For example, we have ControllerFactory in ASP.NET MVC. It has the Release method for this.

In other words, why not add a Release method to the abstract factory?

However, what if we have a desktop application or a long-running batch job? How do we define an implicit scope then? One option might be to employ a timeout, but I'll have to think more about this... Not a bad idea, though :)

But for a single long-lived thread we have to invent something.

It seems that there is no silver bullet in the management of a lifetime. At least without using Resolve-Release pattern in several places instead of one ...

Can you write another book on this subject? :)

A Task<T>, on the other hand, provides us with the ability to attach a continuation, so that seems to me to be an appropriate candidate...

Or you could register the container with itself, enabling it to auto-wire itself.

None of these options are particularly nice, which is why I instead tend to prefer one of the other options described above.

Julien, thank you for your question. Using a Dynamic Proxy is an implementation detail. The consumer (in this case LazyOrderShipper2) depends only on IOrderShipperFactory.

Does using a Dynamic Proxy make it harder to swap containers? Yes, it does, because I'm only aware of one .NET DI Container that has this capability (although it's been a couple of years since I last surveyed the .NET DI Container landscape). Therefore, if you start out with Castle Windsor, and then later on decide to exchange it for another DI Container, you would have to supply a manually coded implementation of IOrderShipperFactory. You could choose to implement either a Manually Coded Factory, or a Container-based Factory, as described in this article. It's rather trivial to do, so I would hardly call it a blocking issue.

The more you rely on specific features of a particular DI Container, the more work you'll have to perform to migrate. This is why I always recommend that you design your classes following normal, good design practices first, without any regard to how you'd use them with any particular DI Container. Only when you've designed your API should you figure out how to compose it with a DI Container (if at all).

Personally, I don't find container verification particularly valuable, but then again, I mostly use Pure DI anyway.

Is Layering Worth the Mapping?

For years, layered application architecture has been a de-facto standard for loosely coupled architectures, but the question is: does the layering really provide benefit?

In theory, layering is a way to decouple concerns so that UI concerns or data access technologies don't pollute the domain model. However, this style of architecture seems to come at a pretty steep price: there's a lot of mapping going on between the layers. Is it really worth the price, or is it OK to define data structures that cut across the various layers?

The short answer is that if you cut across the layers, it's no longer a layered application. However, the price of layering may still be too high.

In this post I'll examine the problem and the proposed solution and demonstrate why none of them are particularly good. In the end, I'll provide a pointer going forward.

Proper Layering #

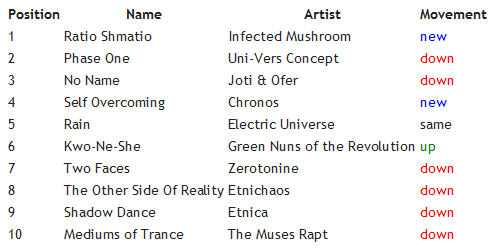

To understand the problem with layering, I'll describe a fairly simple example. Assume that you are building a music service and you've been asked to render a top 10 list for the day. It would have to look something like this in a web page:

As part of rendering the list, you must color the Movement values accordingly using CSS.

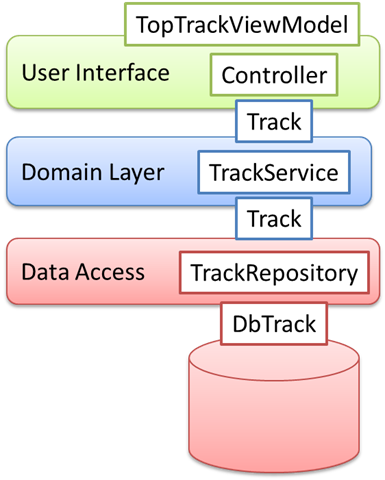

A properly layered architecture would look something like this:

Each layer defines some services and some data-carrying classes (Entities, if you want to stick with the Domain-Driven Design terminology). The Track class is defined by the Domain layer, while the TopTrackViewModel class is defined in the User Interface layer, and so on. If you are wondering about why Track is used to communicate both up and down, this is because the Domain layer should be the most decoupled layer, so the other layers exist to serve it. In fact, this is just a vertical representation of the Ports and Adapters architecture, with the Domain Model sitting in the center.

This architecture is very decoupled, but comes at the cost of much mapping and seemingly redundant repetition. To demonstrate why that is, I'm going to show you some of the code. This is an ASP.NET MVC application, so the Controller is an obvious place to start:

public ViewResult Index() { var date = DateTime.Now.Date; var topTracks = this.trackService.GetTopFor(date); return this.View(topTracks); }

This doesn't look so bad. It asks an ITrackService for the top tracks for the day and returns a list of TopTrackViewModel instances. This is the implementation of the track service:

public IEnumerable<TopTrackViewModel> GetTopFor( DateTime date) { var today = date.Date; var yesterDay = today - TimeSpan.FromDays(1); var todaysTracks = this.repository.GetTopFor(today).ToList(); var yesterdaysTracks = this.repository.GetTopFor(yesterDay).ToList(); var length = todaysTracks.Count; var positions = Enumerable.Range(1, length); return from tt in todaysTracks.Zip( positions, (t, p) => new { Position = p, Track = t }) let yp = (from yt in yesterdaysTracks.Zip( positions, (t, p) => new { Position = p, Track = t }) where yt.Track.Id == tt.Track.Id select yt.Position) .DefaultIfEmpty(-1) .Single() let cssClass = GetCssClass(tt.Position, yp) select new TopTrackViewModel { Position = tt.Position, Name = tt.Track.Name, Artist = tt.Track.Artist, CssClass = cssClass }; } private static string GetCssClass( int todaysPosition, int yesterdaysPosition) { if (yesterdaysPosition < 0) return "new"; if (todaysPosition < yesterdaysPosition) return "up"; if (todaysPosition == yesterdaysPosition) return "same"; return "down"; }

While that looks fairly complex, there's really not a lot of mapping going on. Most of the work is spent getting the top 10 tracks for today and yesterday. For each position on today's top 10, the query finds the position of the same track on yesterday's top 10 and creates a TopTrackViewModel instance accordingly.

Here's the only mapping code involved:

select new TopTrackViewModel { Position = tt.Position, Name = tt.Track.Name, Artist = tt.Track.Artist, CssClass = cssClass };

This maps from a Track (a Domain class) to a TopTrackViewModel (a UI class).
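To see what the query actually computes, here is a stripped-down, runnable sketch of the movement calculation. It is a simplification rather than the service code itself: tracks are reduced to their ids, the lists are assumed to be ordered best position first with unique ids, and Select with an index replaces the Zip calls. MovementDemo and Classify are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class MovementDemo
{
    // Same rules as the GetCssClass method shown earlier.
    public static string GetCssClass(int todaysPosition, int yesterdaysPosition)
    {
        if (yesterdaysPosition < 0)
            return "new";
        if (todaysPosition < yesterdaysPosition)
            return "up";
        if (todaysPosition == yesterdaysPosition)
            return "same";
        return "down";
    }

    // Both lists hold track ids ordered by chart position, best first.
    public static IList<string> Classify(
        IList<int> todaysIds, IList<int> yesterdaysIds)
    {
        return todaysIds
            .Select((id, index) =>
            {
                var todaysPosition = index + 1;
                var found = yesterdaysIds.IndexOf(id); // -1 when absent
                var yesterdaysPosition = found < 0 ? -1 : found + 1;
                return GetCssClass(todaysPosition, yesterdaysPosition);
            })
            .ToList();
    }
}
```

For example, with today's ids { 3, 1, 2, 4 } and yesterday's ids { 1, 2, 3 }, Classify returns "up", "down", "down", "new": track 3 climbed from third to first place, tracks 1 and 2 each dropped a place, and track 4 wasn't on yesterday's list.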

This is the relevant implementation of the repository:

public IEnumerable<Track> GetTopFor(DateTime date) { var dbTracks = this.GetTopTracks(date); foreach (var dbTrack in dbTracks) { yield return new Track( dbTrack.Id, dbTrack.Name, dbTrack.Artist); } }

You may be wondering about the translation from DbTrack to Track. In this case you can assume that the DbTrack class is a class representation of a database table, modeled along the lines of your favorite ORM. The Track class, on the other hand, is a proper object-oriented class which protects its invariants:

public class Track { private readonly int id; private string name; private string artist; public Track(int id, string name, string artist) { if (name == null) throw new ArgumentNullException("name"); if (artist == null) throw new ArgumentNullException("artist"); this.id = id; this.name = name; this.artist = artist; } public int Id { get { return this.id; } } public string Name { get { return this.name; } set { if (value == null) throw new ArgumentNullException("value"); this.name = value; } } public string Artist { get { return this.artist; } set { if (value == null) throw new ArgumentNullException("value"); this.artist = value; } } }

No ORM I've encountered so far has been able to properly address such invariants - particularly the non-default constructor seems to be a showstopper. This is the reason a separate DbTrack class is required, even for ORMs with so-called POCO support.

In summary, that's a lot of mapping. What would be involved if a new field is required in the top 10 table? Imagine that you are being asked to provide the release label as an extra column.

- A Label column must be added to the database schema and the DbTrack class.

- A Label property must be added to the Track class.

- The mapping from DbTrack to Track must be updated.

- A Label property must be added to the TopTrackViewModel class.

- The mapping from Track to TopTrackViewModel must be updated.

- The UI must be updated.

That's a lot of work in order to add a single data element, and this is even a read-only scenario! Is it really worth it?
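The steps above can be sketched in code to show how a single Label value ripples through the layers. The class shapes are assumptions: DbTrack's properties are guessed from the repository code, Track is reduced to read-only properties for brevity, and LabelMappings is a hypothetical helper that gathers the two mappings in one place. The database schema and the view are left out.

```csharp
using System;

// Data access layer: assumed shape of the ORM-facing class.
public class DbTrack
{
    public int Id { get; set; }
    public string Name { get; set; }
    public string Artist { get; set; }
    public string Label { get; set; } // new
}

// Domain layer: simplified to read-only properties for this sketch.
public class Track
{
    public Track(int id, string name, string artist, string label)
    {
        if (name == null)
            throw new ArgumentNullException("name");
        if (artist == null)
            throw new ArgumentNullException("artist");
        if (label == null)
            throw new ArgumentNullException("label");
        this.Id = id;
        this.Name = name;
        this.Artist = artist;
        this.Label = label;
    }

    public int Id { get; private set; }
    public string Name { get; private set; }
    public string Artist { get; private set; }
    public string Label { get; private set; } // new
}

// User interface layer.
public class TopTrackViewModel
{
    public int Position { get; set; }
    public string Name { get; set; }
    public string Artist { get; set; }
    public string Label { get; set; } // new
    public string CssClass { get; set; }
}

public static class LabelMappings
{
    // DbTrack -> Track: must now pass the label along.
    public static Track ToDomain(DbTrack dbTrack)
    {
        return new Track(
            dbTrack.Id, dbTrack.Name, dbTrack.Artist, dbTrack.Label);
    }

    // Track -> TopTrackViewModel: must now copy the label as well.
    public static TopTrackViewModel ToViewModel(
        Track track, int position, string cssClass)
    {
        return new TopTrackViewModel
        {
            Position = position,
            Name = track.Name,
            Artist = track.Artist,
            Label = track.Label,
            CssClass = cssClass
        };
    }
}
```

Five separate places change in this sketch alone, and forgetting any one of them silently drops the value somewhere between the database and the page.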

Cross-Cutting Entities #

Is strict separation between layers really so important? What would happen if Entities were allowed to travel across all layers? Would that really be so bad?

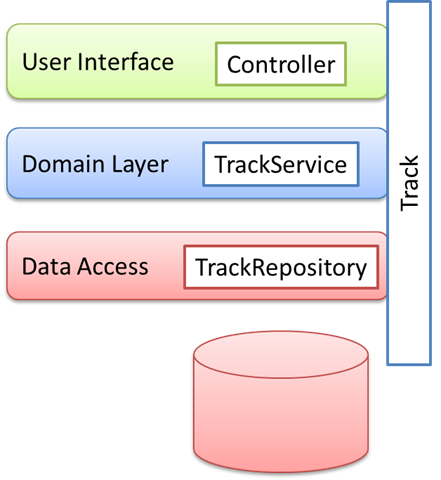

Such an architecture is often drawn like this:

Now, a single Track class is allowed to travel from layer to layer in order to avoid mapping. The controller code hasn't really changed, although the model returned to the View is no longer a sequence of TopTrackViewModel, but simply a sequence of Track instances:

public ViewResult Index() { var date = DateTime.Now.Date; var topTracks = this.trackService.GetTopFor(date); return this.View(topTracks); }

The GetTopFor method also looks familiar:

public IEnumerable<Track> GetTopFor(DateTime date) { var today = date.Date; var yesterDay = today - TimeSpan.FromDays(1); var todaysTracks = this.repository.GetTopFor(today).ToList(); var yesterdaysTracks = this.repository.GetTopFor(yesterDay).ToList(); var length = todaysTracks.Count; var positions = Enumerable.Range(1, length); return from tt in todaysTracks.Zip( positions, (t, p) => new { Position = p, Track = t }) let yp = (from yt in yesterdaysTracks.Zip( positions, (t, p) => new { Position = p, Track = t }) where yt.Track.Id == tt.Track.Id select yt.Position) .DefaultIfEmpty(-1) .Single() let cssClass = GetCssClass(tt.Position, yp) select Enrich( tt.Track, tt.Position, cssClass); } private static string GetCssClass( int todaysPosition, int yesterdaysPosition) { if (yesterdaysPosition < 0) return "new"; if (todaysPosition < yesterdaysPosition) return "up"; if (todaysPosition == yesterdaysPosition) return "same"; return "down"; } private static Track Enrich( Track track, int position, string cssClass) { track.Position = position; track.CssClass = cssClass; return track; }

Whether or not much has been gained is likely to be a subjective assessment. While mapping is no longer taking place, it's still necessary to assign a CSS Class and Position to the track before handing it off to the View. This is the responsibility of the new Enrich method:

private static Track Enrich( Track track, int position, string cssClass) { track.Position = position; track.CssClass = cssClass; return track; }

If not much is gained at the UI layer, perhaps the data access layer has become simpler? This is, indeed, the case:

public IEnumerable<Track> GetTopFor(DateTime date) { return this.GetTopTracks(date); }

If, hypothetically, you were asked to add a label to the top 10 table it would be much simpler:

- A Label column must be added to the database schema and the Track class.

- The UI must be updated.

This looks good. Are there any disadvantages? Yes, certainly. Consider the Track class:

public class Track { public int Id { get; set; } public string Name { get; set; } public string Artist { get; set; } public int Position { get; set; } public string CssClass { get; set; } }

It also looks simpler than before, but this is actually not particularly beneficial, as it doesn't protect its invariants. In order to play nice with the ORM of your choice, it must have a default constructor. It also has automatic properties. However, most insidiously, it also somehow gained the Position and CssClass properties.

What does the Position property imply outside of the context of a top 10 list? A position in relation to what?

Even worse, why do we have a property called CssClass? CSS is a very web-specific technology so why is this property available to the Data Access and Domain layers? How does this fit if you are ever asked to build a native desktop app based on the Domain Model? Or a REST API? Or a batch job?

When Entities are allowed to travel along layers, the layers basically collapse. UI concerns and data access concerns will inevitably be mixed up. You may think you have layers, but you don't.

Is that such a bad thing, though?

Perhaps not, but I think it's worth pointing out:

The choice is whether or not you want to build a layered application. If you want layering, the separation must be strict. If it isn't, it's not a layered application.

There may be great benefits to be gained from allowing Entities to travel from database to user interface. Much mapping cost goes away, but you must realize that then you're no longer building a layered application - now you're building a web application (or whichever other type of app you're initially building).

Further Thoughts #

It's a common question: how hard is it to add a new field to the user interface?

The underlying assumption is that the field must somehow originate from a corresponding database column. If this is the case, mapping seems to be in the way.

However, if this is the major concern about the application you're currently building, it's a strong indication that you are building a CRUD application. If that's the case, you probably don't need a Domain Model at all. Go ahead and let your ORM POCO classes travel up and down the stack, but don't bother creating layers: you'll be building a monolithic application no matter how hard you try not to.

In the end it looks as though none of the options outlined in this article are particularly good. Strict layering leads to too much mapping, and no mapping leads to monolithic applications. Personally, I've certainly written quite a lot of strictly layered applications, and while the separation of concerns was good, I was never happy with the mapping overhead.

At the heart of the problem is the CRUDy nature of many applications. In such applications, complex object graphs are traveling up and down the stack. The trick is to avoid complex object graphs.

Move less data around and things are likely to become simpler. This is one of the many interesting promises of CQRS, and particularly its asymmetric approach to data.

To be honest, I wish I had fully realized this when I started writing my book, but when I finally realized what I'd implicitly felt for long, it was too late to change direction. Rest assured that nothing in the book is fundamentally flawed. The patterns etc. still hold, but the examples could have been cleaner if the sample applications had taken a CQRS-like direction instead of strict layering.
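For readers unfamiliar with the asymmetry mentioned above, a very rough sketch may help. The read side returns flat, UI-shaped rows and never touches a domain entity, while the write side accepts small commands. All names here (TopTrackRow, ITopTracksQuery, RegisterPlay, ICommandHandler, InMemoryTopTracksQuery) are hypothetical, and the in-memory dictionary merely stands in for a denormalized table or view.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Read side: a flat row shaped for the top 10 page.
public class TopTrackRow
{
    public int Position { get; set; }
    public string Name { get; set; }
    public string Artist { get; set; }
    public string Movement { get; set; }
}

public interface ITopTracksQuery
{
    IEnumerable<TopTrackRow> Execute(DateTime date);
}

// Write side: a command carries only what the operation needs.
public class RegisterPlay
{
    public int TrackId { get; set; }
    public DateTime PlayedAt { get; set; }
}

public interface ICommandHandler<TCommand>
{
    void Handle(TCommand command);
}

// In-memory stand-in for a query against a denormalized read store.
public class InMemoryTopTracksQuery : ITopTracksQuery
{
    private readonly IDictionary<DateTime, List<TopTrackRow>> rowsByDate;

    public InMemoryTopTracksQuery(
        IDictionary<DateTime, List<TopTrackRow>> rowsByDate)
    {
        if (rowsByDate == null)
            throw new ArgumentNullException("rowsByDate");
        this.rowsByDate = rowsByDate;
    }

    public IEnumerable<TopTrackRow> Execute(DateTime date)
    {
        List<TopTrackRow> rows;
        if (!this.rowsByDate.TryGetValue(date.Date, out rows))
            return Enumerable.Empty<TopTrackRow>();

        // The rows are already display-ready; no entity mapping happens here.
        return rows.OrderBy(r => r.Position).Take(10).ToList();
    }
}
```

In such a design, adding a column to the page touches the read store, the row class and the view, while the domain model on the write side stays untouched.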

Comments

- Domain layer with rich objects and protected variations

- Commands and Command handlers (write only) acting on the domain entities

- Mapping domain layer using NHibernate (I thought at first to start by creating a different data model because of the constraints of the ORM on the domain, but I managed to get rid of most of them - like having a default but private constructor, and mapping private fields instead of public properties ...)

- Read model which consists of view models.

- Read model mapping layer which uses the ORM to map view models to the database directly (thus I got rid of mapping between view models and domain objects - but introduced a mapping between view models and database).

- Query objects to query the read model.

At the end of the application I came to almost the same conclusion of not being happy with the mappings.

- I have to map domain model to database.

- I have to map between view models (read model) and database.

- I have to map between view models and commands.

- I have to map between commands and domain model.

- Having many fine-grained commands is good in terms of defining strict behaviors and ubiquitous language, but it comes at the cost of maintainability: now when I introduce a new field, I will most probably have to modify a database field, the domain model, the view model, two data mappings for the domain model and view model, one or more commands and command handlers, and the UI!

So taking the practice of CQRS for me enriched the domain a little, simplified the operations and separated it from querying, but complicated the implementation and maintenance of the application.

So I am still searching for a better approach that gains the rich domain model behavior, simplifies maintenance and eliminates mappings.

I too have felt the pain of a lot of useless mapping many times. But even for CRUD applications where the business rules do not change much, I still almost always take the pain and do the mapping because I have a deep-seated fear of monolithic apps and code rot. If I give my entities lots of different concerns (UI, persistence, domain logic etc.), isn't this kind of code smell the culture medium for more code smell and code rot? When evolving my application and I am faced with a quirk in my code, I often only have two choices: Add a new quirk or remove the original quirk. It kind of seems that in the long run a rotten monolithic app that is hard to maintain and extend becomes almost inevitable if I give up SoC the way you described.

Did I understand you correctly that you'd say it's OK to soften SoC a bit and let the entity travel across all layers for *some* apps? If yes, what kind of apps? Do you share my fear of inevitable code rot? How do you deal with this?

I use either convention based NH mappings which make me almost forget it's there, or MemoryImage to reconstruct my object model (the boilerplate is reduced by wrapping my objects with proxies that intercept method calls and record them as a json Event Source). In both cases I can use a helper library which wraps my objects with proxies that help protect invariants.

My web UI is built using a framework along the lines of the Naked Objects pattern*, which can be customised using ViewModels and custom views, and which enforces good REST design. This framework turns objects into pages and methods into ajax forms.

I built all this stuff after getting frustrated with the problems you mention above, inspired in part by all the time I spent at Uni trying to make games (in which an in memory domain model is rendered to the screen by a disconnected rendering engine, and input collected via a separate mechanism and applied to that model).

The long and the short of it is that I can add a field to the screen in seconds, and add a new feature in minutes. There are some trade-offs, but it's great to feel like I am just writing an object model and leaving the rest to my frameworks.

*not Naked Objects MVC, a DIY library that's not ready for the public yet.

http://en.wikipedia.org/wiki/Naked_objects

https://github.com/mcintyre321/NhCodeFirst my mappings lib (but Fluent NH is probably fine)

http://martinfowler.com/bliki/MemoryImage.html

https://github.com/mcintyre321/Harden my invariant helper library

The only thing I'm saying is that it's better to build a monolithic application when you know and understand that this is what you are doing, than it is to be building a monolithic application while under the illusion that you are building a decoupled application.

If there's a point in there, it's that static languages (without designers) generally don't lend themselves well to RAD, and if RAD is what you need, you have many popular options that aren't C#.

Also, I have two problems with some of the sample code you wrote. Specifically, the Enrich method. First, a method signature such as:

T Method(T obj, ...);

Implies to consumers that the method is pure. Because this isn't the case, consumers will incorrectly use this method without the intention of modifying the original object. Second, if it is true that your ViewModels are POCOs consisting of your mapped properties AND a list of computed properties, perhaps you should instead use inheritance. This implementation is certainly SOLID:

(sorry for the formatting)

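class Track
{
    /* Mapped Properties */

    public Track()
    {
    }

    // Copy constructor: maps each property from the source Track.
    protected Track(Track rhs)
    {
        Prop1 = rhs.Prop1;
        Prop2 = rhs.Prop2;
        Prop3 = rhs.Prop3;
    }
}

class TrackWebViewModel : Track
{
    // Position's type (int) is an assumption.
    public int Position { get; private set; }

    public string CssClass { get; private set; }

    public TrackWebViewModel(Track track, int position, string cssClass) : base(track)
    {
        Position = position;
        CssClass = cssClass;
    }
}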

let cssClass = GetCssClass(tt.Position, yp) select Enrich(tt.Track, tt.Position, cssClass)

Simply becomes

select new TrackWebViewModel(tt.Track, tt.Position, cssClass)
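The purity concern raised in this comment can be illustrated with a minimal sketch. The Track members and both method names below are assumptions made for illustration; they are not the Enrich method from the original post.

```csharp
using System;

// Hypothetical Track with the two computed properties discussed in the thread.
public class Track
{
    public string Name { get; set; }
    public int Position { get; set; }
    public string CssClass { get; set; }
}

public static class TrackEnricher
{
    // Impure: mutates the argument and hands back the very same instance,
    // even though the T Method(T obj, ...) shape suggests otherwise.
    public static Track EnrichInPlace(Track track, int position, string cssClass)
    {
        track.Position = position;
        track.CssClass = cssClass;
        return track;
    }

    // Pure: leaves the argument untouched and returns a new instance.
    // The price is one more mapping (Name is copied by hand).
    public static Track EnrichCopy(Track track, int position, string cssClass)
    {
        return new Track
        {
            Name = track.Name,
            Position = position,
            CssClass = cssClass
        };
    }
}
```

A caller that keeps using the original Track after calling EnrichInPlace will observe the changed Position and CssClass, which is the surprise the comment warns about.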

While your suggested, inheritance-based solution seems cleaner, please note that it also introduces a mapping (in the copy constructor). Once you start doing this, there's almost always a composition-based solution which is at least as good as the inheritance-based solution.

As far as the copy-ctor goes, yes, that is a mapping, but when you add a field, you just have to modify a file you're already modifying. This is a bit nicer than having to update mapping code halfway across your application. But you're right, composing a Track into a specific view model would be a nicer approach, with fewer modifications required to add more view models and no additional mapping.
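A composition-based view model of the kind mentioned here might look roughly like the following sketch. The Track members, and the choice of int for the position, are assumptions for illustration only.

```csharp
using System;

// Hypothetical mapped entity; the real Track has whatever properties the data layer maps.
public class Track
{
    public string Name { get; set; }
    public string Artist { get; set; }
}

// Wraps a Track instead of inheriting from it, so no property is copied.
public class TrackWebViewModel
{
    private readonly Track track;
    private readonly int position;
    private readonly string cssClass;

    public TrackWebViewModel(Track track, int position, string cssClass)
    {
        if (track == null)
        {
            throw new ArgumentNullException("track");
        }

        this.track = track;
        this.position = position;
        this.cssClass = cssClass;
    }

    // The view reaches mapped fields through this property (e.g. model.Track.Name).
    public Track Track
    {
        get { return this.track; }
    }

    public int Position
    {
        get { return this.position; }
    }

    public string CssClass
    {
        get { return this.cssClass; }
    }
}
```

With this shape, adding a field to Track requires no change to the view model, at the cost of exposing the whole Track to the view.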

Though, I still am a bit concerned about the architecture as a whole. I understand in this post you were thinking out loud about a possible way to reduce complexity in certain types of applications, yet I'm wondering if you had considered using a platform designed for applications like this instead of using C#. Rails is quite handy when you need CRUD.

Thanks for the blog posts and DI book, which I'm currently reading over the weekend. I guess the scenario you're describing here is more or less commonplace, and as the team I'm working on is constantly bringing this subject up on the whiteboard, I thought I'd share a few thoughts on the mapping part. Letting the Track entity you're mentioning cross all layers is probably not a good idea.

I agree that one should program to interfaces. Thus, any entity (be it an entity created via LINQ to SQL, EF, any other ORM/DAL or business object) should be modeled and exposed via an interface (or hierarchy thereof) from repositories or the like. With that in mind, here's what I think of mapping between entities. Popular ORM/DALs provide support for partial entities, external mapping files, POCOs, model-first etc.

Take this simple example:

// simplified pattern (deliberately not guarding args, and more)

interface IPageEntity { string Name { get; set; } }

class PageEntity : IPageEntity { public string Name { get; set; } }

class Page : IPage
{
    private IPageEntity entity;
    private IStrategy strategy;

    public Page(IPageEntity entity, IStrategy strategy)
    {
        this.entity = entity;
        this.strategy = strategy;
    }

    public string Name
    {
        // Reads go straight to the injected (possibly shared) entity.
        get { return this.entity.Name; }
        // Writes go through the strategy, which may swap in a private copy first.
        set { this.strategy.CopyOnWrite(ref this.entity).Name = value; }
    }
}

One of the benefits of using an ORM/DAL is that queries are strongly typed and results are projected to strongly typed entities (concrete or anonymous). They are prime candidates for embedding inside of business objects (BO) (as DTOs), as long as they implement the interface required for injection in the constructor. This is much more flexible (and clean) than taking a series of arguments, each corresponding to a property on the original entity. Instead of having the BO keep private members mapped to the constructor args, and subsequently mapping public properties and methods to said members, you simply map to the entity injected in the BO.

The benefit is much greater performance (less copying of args), a consistent design pattern and a layer of indirection in your BO that allows you to capture any potential changes to your entity (such as using the copy-on-write pattern that creates an exact copy of the entity, releasing it from a potential shared cache you've created by decorating the repository, from which the entity originally was retrieved).

Again, in my example one of the goals was to make use of shared (cached) entities for high performance, while making sure that there are no side effects from changing a business object (seeing this from a client code perspective).
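The copy-on-write idea described above could be sketched as follows. IStrategy's exact signature, the Clone method and the CopyOnFirstWrite class are assumptions introduced for this sketch; the comment only shows the call site.

```csharp
using System;

public interface IPageEntity
{
    string Name { get; set; }

    // Assumed helper: returns an independent copy of the entity.
    IPageEntity Clone();
}

public class PageEntity : IPageEntity
{
    public string Name { get; set; }

    public IPageEntity Clone()
    {
        return new PageEntity { Name = this.Name };
    }
}

public interface IStrategy
{
    IPageEntity CopyOnWrite(ref IPageEntity entity);
}

// Replaces the (possibly shared) entity with a private copy on the first write.
// It keeps state, so each Page needs its own instance.
public class CopyOnFirstWrite : IStrategy
{
    private bool copied;

    public IPageEntity CopyOnWrite(ref IPageEntity entity)
    {
        if (!this.copied)
        {
            entity = entity.Clone();
            this.copied = true;
        }

        return entity;
    }
}

public class Page
{
    private IPageEntity entity;
    private readonly IStrategy strategy;

    public Page(IPageEntity entity, IStrategy strategy)
    {
        this.entity = entity;
        this.strategy = strategy;
    }

    public string Name
    {
        get { return this.entity.Name; }
        set { this.strategy.CopyOnWrite(ref this.entity).Name = value; }
    }
}
```

Reads hit the shared entity until the first write; after that the Page works on its own copy, so other holders of the cached entity never see the change.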

Code following the above pattern is just as testable as the code in your example (one could argue it's even easier to write tests, and maintenance is vastly reduced). Whenever I see BO constructors taking huge amounts of args I cry blood.

In my experience, this allows a strictly layered application to thrive without the mapping overhead.

Your thoughts?

What do you propose in cases when you have a client server application with request/response over WCF and the domain model living on the server? Is there a better way than for example mapping from NH entities to DataContracts and from DataContracts to ViewModels?

Thanks for the post!

"... asks an ITrackService for the top tracks for the day and returns a list of TopTrackViewModel instances..."

The diagram really makes it look like the TrackService returns Track objects to the Controller and the Controller maps them into TopTrackViewModel objects. If that is the case... how would you communicate the Position and CssClass properties that the Domain Layer has computed between the two layers?

Also, I noticed that ITrackService.GetTopFor(DateTime date) never uses the input parameter... should it really be more like ITrackService.GetTopForToday() instead?

Awesome blog! Is there an email address where I can contact you in private?

If the service layer exists in order to decouple the service from the client, you must translate from the internal model to a service API. However, if this is the case, keep in mind that at the service boundary, you are no longer dealing with objects at all, so translation or mapping is conceptually very important. If you have .NET on both sides it's easy to lose sight of this, but imagine that either service or client is implemented in COBOL and you may begin to understand the importance of translation.

So as always, it's a question of evaluating the advantages and disadvantages of each alternative in the concrete situation. There's no one correct answer.

Personally, I'm beginning to consider ORMs the right solution to the wrong problem. A relational database is rarely the correct data store for a LoB application or your typical web site.

Pardon me if I've gotten this wrong, because I'm approaching your post as an actionscript developer who is entirely self-taught. I don't know C#, but I like to read posts on good design from whatever language.

How I might resolve this problem is to create a TrackDisplayModel Class that wraps the Track Class and provides the "enriched" methods of the position and cssClass. In AS, we have something called a Proxy Class that allows us to "repeat" methods on an inner object without writing a lot of code, so you could pass through the properties of the Track by making TrackDisplayModel extend Proxy. By doing this, I'd give up code completion and type safety, so I might not find that long term small cost worth the occasional larger cost of adding a field in more conventional ways.

Does C# have a Class that is like Proxy that could be used in this way?
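As a rough point of comparison, .NET's System.Dynamic.DynamicObject allows something similar. The sketch below is only an assumption about how such a wrapper might be written (the Track and TrackDisplayModel shapes are hypothetical), and like the ActionScript Proxy approach it gives up compile-time checking.

```csharp
using System;
using System.Dynamic;

// Hypothetical wrapped class.
public class Track
{
    public string Name { get; set; }
}

public class TrackDisplayModel : DynamicObject
{
    private readonly Track track;
    private readonly int position;
    private readonly string cssClass;

    public TrackDisplayModel(Track track, int position, string cssClass)
    {
        this.track = track;
        this.position = position;
        this.cssClass = cssClass;
    }

    // Declared members are resolved first when the object is used as dynamic.
    public int Position
    {
        get { return this.position; }
    }

    public string CssClass
    {
        get { return this.cssClass; }
    }

    // Called only for members not declared on this class;
    // forwards the lookup to the wrapped Track via reflection.
    public override bool TryGetMember(GetMemberBinder binder, out object result)
    {
        var property = typeof(Track).GetProperty(binder.Name);
        if (property == null)
        {
            result = null;
            return false;
        }

        result = property.GetValue(this.track, null);
        return true;
    }
}
```

Used as `dynamic model = new TrackDisplayModel(track, 1, "top");`, both `model.Name` and `model.Position` resolve at run time, but a misspelled member name only fails when the code executes.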

No matter how you'd achieve such things, I'm not too happy about doing this, as most of the variability axes we get from a multi-paradigmatic language such as C# are additive - it means that while it's fairly easy to add new capabilities to objects, it's actually rather hard to remove capabilities.

When we map from e.g. a domain model to a UI model, we should consider that we must be able to do both: add as well as subtract behavior. There may be members in our domain model we don't want the UI model to use.

Also, when the application enables the user to write as well as read data, we must be able to map both ways, so this is another reason why we must be able to add as well as remove members when traversing layers.

I'm not saying that a dynamic approach isn't viable - in fact, I find it attractive in various scenarios. I'm just saying that simply exposing a lower-level API to upper layers by adding more members to it seems to me to go against the spirit of the Single Responsibility Principle. It would just be tight coupling on the semantic level.

You might also want to refer to my answer to Amy immediately above. The data structures the UI requires may be entirely different from the Domain Model, which again could be different from the data access model. The UI will often be an aggregate of data from many different data sources.

In fact, I'd consider it a code smell if you could get by with the same data structure in all layers. If one can do that, one is most likely building a CRUD application, in which case a monolithic RAD application might actually be a better architectural choice.

You've mentioned in your blogs (here and elsewhere) and on Stack Overflow similar sentiments to your last comment:

<blockquote>

I'd consider it a code smell if you could get by with the same data structure in all layers. If one can do that, one is most likely building a CRUD application, in which case a monolithic RAD application might actually be a better architectural choice.

</blockquote>