Using only a Domain Model to persist restaurant table configurations by Mark Seemann

A data architecture example in C# and ASP.NET.

This is part of a small article series on data architectures. In this, the third instalment, you'll see an alternative way of modelling data in a server-based application. One that doesn't rely on statically typed classes to model data. As the introductory article explains, the example code shows how to create a new restaurant table configuration, or how to display an existing resource. The sample code base is an ASP.NET 8.0 REST API.

Keep in mind that while the sample code does store data in a relational database, the term table in this article mainly refers to physical tables, rather than database tables.

The idea is to use 'raw' serialization APIs to handle communication with external systems. For the presentation layer, the example even moves representation concerns to middleware, so that it's nicely abstracted away from the application layer.

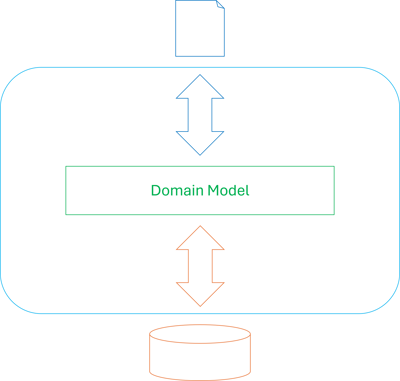

An architecture diagram like this attempts to capture the design:

Here, the arrows indicate mappings, not dependencies.

Like in the DTO-based Ports and Adapters architecture, the goal is to be able to design Domain Models unconstrained by serialization concerns, but also to be able to format external data unconstrained by Reflection-based serializers. Thus, while this architecture is centred on a Domain Model, there are no Data Transfer Objects (DTOs) to represent JSON, XML, or database rows.

HTTP interaction

To establish the context of the application, here's how HTTP interactions may play out. The following is a copy of the identically named section in the article Using Ports and Adapters to persist restaurant table configurations, repeated here for your convenience.

A client can create a new table with a POST HTTP request:

POST /tables HTTP/1.1
content-type: application/json

{ "communalTable": { "capacity": 16 } }

Which might elicit a response like this:

HTTP/1.1 201 Created
Location: https://example.com/Tables/844581613e164813aa17243ff8b847af

Clients can later use the address indicated by the Location header to retrieve a representation of the resource:

GET /Tables/844581613e164813aa17243ff8b847af HTTP/1.1
accept: application/json

Which would result in this response:

HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8

{"communalTable":{"capacity":16}}

By default, ASP.NET handles and returns JSON. Later in this article you'll see how well it deals with other data formats.

Boundary

ASP.NET supports some variation of the model-view-controller (MVC) pattern, and Controllers handle HTTP requests. At the outset, the action method that handles the POST request looks like this:

[HttpPost]
public async Task<IActionResult> Post(Table table)
{
    var id = Guid.NewGuid();
    await repository.Create(id, table).ConfigureAwait(false);

    return new CreatedAtActionResult(
        nameof(Get),
        null,
        new { id = id.ToString("N") },
        null);
}

While this looks identical to the Post method for the Shared Data Model architecture, it's not, because it's not the same Table class. Not by a long shot. The Table class in use here is the one originally introduced in the article Serializing restaurant tables in C#, with a few inconsequential differences.
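The Table class itself isn't reproduced in this article. To give a rough idea of its shape, here's a hypothetical, simplified stand-in; it is not the actual class. It uses int where the original uses NaturalNumber, and the rule that a minimal reservation can't exceed the capacity is an assumption. The properties that matter here are that state is private, the only way to create a Table is through validating factory methods, and the only way to get data out is through a Visitor.

```csharp
#nullable enable

// Hypothetical, simplified stand-in for the Table Domain Model.
// Uses int instead of NaturalNumber; the invariants are assumptions.
public interface ITableVisitor<T>
{
    T VisitCommunal(int capacity);
    T VisitSingle(int capacity, int minimalReservation);
}

public sealed class Table
{
    private readonly int capacity;
    private readonly int? minimalReservation; // null means communal table

    private Table(int capacity, int? minimalReservation) =>
        (this.capacity, this.minimalReservation) = (capacity, minimalReservation);

    // Validating factory methods return null instead of an invalid object.
    public static Table? TryCreateCommunal(int capacity) =>
        capacity > 0 ? new Table(capacity, null) : null;

    public static Table? TryCreateSingle(int capacity, int minimalReservation) =>
        0 < minimalReservation && minimalReservation <= capacity
            ? new Table(capacity, minimalReservation)
            : null;

    // The only way to read a Table's data; callers must handle both kinds.
    public T Accept<T>(ITableVisitor<T> visitor) =>
        minimalReservation is int mr
            ? visitor.VisitSingle(capacity, mr)
            : visitor.VisitCommunal(capacity);
}

// Example consumer: one method per kind of table.
public sealed class TableDescription : ITableVisitor<string>
{
    public string VisitCommunal(int capacity) =>
        $"communal table for {capacity}";

    public string VisitSingle(int capacity, int minimalReservation) =>
        $"single table for {capacity} (minimum {minimalReservation})";
}
```

Since nothing about this class lends itself to Reflection-based serialization, every wire format needs explicit code that goes through Accept.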

How does a Controller action method receive an input parameter directly in the form of a Domain Model, keeping in mind that this particular Domain Model is far from serialization-friendly? The short answer is middleware, which we'll get to in a moment. Before we look at that, however, let's also look at the Get method that supports HTTP GET requests:

[HttpGet("{id}")]
public async Task<IActionResult> Get(string id)
{
    if (!Guid.TryParseExact(id, "N", out var guid))
        return new BadRequestResult();

    Table? table = await repository.Read(guid).ConfigureAwait(false);
    if (table is null)
        return new NotFoundResult();

    return new OkObjectResult(table);
}

This, too, looks exactly like the Shared Data Model architecture, again with the crucial difference that the Table class is completely different. The Get method just takes the table object and wraps it in an OkObjectResult and returns it.

The Table class is, in reality, extraordinarily opaque, and not at all friendly to serialization, so how does the service turn it into JSON?

JSON middleware

Most web frameworks come with extensibility points where you can add middleware. A common need is to be able to add custom serializers. In ASP.NET they're called formatters, and can be added at application startup:

builder.Services.AddControllers(opts =>
{
    opts.InputFormatters.Insert(0, new TableJsonInputFormatter());
    opts.OutputFormatters.Insert(0, new TableJsonOutputFormatter());
});

As the names imply, TableJsonInputFormatter deserializes JSON input, while TableJsonOutputFormatter serializes strongly typed objects to JSON.

We'll look at each in turn, starting with TableJsonInputFormatter, which is responsible for deserializing JSON documents into Table objects, as used by, for example, the Post method.

JSON input formatter

You create an input formatter by implementing the IInputFormatter interface, although in this example code base, inheriting from TextInputFormatter is enough:

internal sealed class TableJsonInputFormatter : TextInputFormatter

You can use the constructor to define which media types and encodings the formatter will support:

public TableJsonInputFormatter()
{
    SupportedMediaTypes.Add(MediaTypeHeaderValue.Parse("application/json"));
    SupportedEncodings.Add(Encoding.UTF8);
    SupportedEncodings.Add(Encoding.Unicode);
}

You'll also need to tell the formatter which .NET type it supports:

protected override bool CanReadType(Type type)
{
    return type == typeof(Table);
}

As far as I can tell, the ASP.NET framework will first determine which action method (that is, which Controller, and which method on that Controller) should handle a given HTTP request. For a POST request, as shown above, it'll determine that the appropriate action method is the Post method.

Since the Post method takes a Table object as input, the framework then goes through the registered formatters and asks them whether they can read from an HTTP request into that type. In this case, the TableJsonInputFormatter answers true only if the type is Table.

When CanReadType answers true, the framework then invokes a method to turn the HTTP request into an object:

public override async Task<InputFormatterResult> ReadRequestBodyAsync(
    InputFormatterContext context,
    Encoding encoding)
{
    using var rdr =
        new StreamReader(context.HttpContext.Request.Body, encoding);
    var json = await rdr.ReadToEndAsync().ConfigureAwait(false);
    var table = TableJson.Deserialize(json);
    if (table is { })
        return await InputFormatterResult.SuccessAsync(table).ConfigureAwait(false);
    else
        return await InputFormatterResult.FailureAsync().ConfigureAwait(false);
}

The ReadRequestBodyAsync method reads the HTTP request body into a string value called json, and then passes the value to TableJson.Deserialize. You can see the implementation of the Deserialize method in the article Serializing restaurant tables in C#. In short, it uses the default .NET JSON parser to probe a document object model. If it can turn the JSON document into a Table value, it does that. Otherwise, it returns null.
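As a rough illustration of what probing a document object model can look like, here's a sketch of a Deserialize function based on System.Text.Json's JsonDocument. It is not the code from the other article. The singleTable property layout is an assumption, and Table is a hypothetical, simplified stand-in (int instead of NaturalNumber).

```csharp
#nullable enable
using System.Text.Json;

// Hypothetical, simplified stand-in for the Table Domain Model.
public interface ITableVisitor<T>
{
    T VisitCommunal(int capacity);
    T VisitSingle(int capacity, int minimalReservation);
}

public sealed class Table
{
    private readonly int capacity;
    private readonly int? minimalReservation; // null means communal table

    private Table(int capacity, int? minimalReservation) =>
        (this.capacity, this.minimalReservation) = (capacity, minimalReservation);

    public static Table? TryCreateCommunal(int capacity) =>
        capacity > 0 ? new Table(capacity, null) : null;

    // Assumed invariant: 0 < minimalReservation <= capacity.
    public static Table? TryCreateSingle(int capacity, int minimalReservation) =>
        0 < minimalReservation && minimalReservation <= capacity
            ? new Table(capacity, minimalReservation)
            : null;

    public T Accept<T>(ITableVisitor<T> visitor) =>
        minimalReservation is int mr
            ? visitor.VisitSingle(capacity, mr)
            : visitor.VisitCommunal(capacity);
}

public static class TableJson
{
    // Probes the JSON DOM. Returns null for anything that isn't a valid table,
    // including malformed JSON and values rejected by the Table factories.
    public static Table? Deserialize(string json)
    {
        try
        {
            using var doc = JsonDocument.Parse(json);
            var root = doc.RootElement;
            if (root.ValueKind != JsonValueKind.Object)
                return null;

            if (root.TryGetProperty("communalTable", out var communal) &&
                TryGetInt(communal, "capacity", out var c))
                return Table.TryCreateCommunal(c);

            if (root.TryGetProperty("singleTable", out var single) &&
                TryGetInt(single, "capacity", out var sc) &&
                TryGetInt(single, "minimalReservation", out var mr))
                return Table.TryCreateSingle(sc, mr);

            return null;
        }
        catch (JsonException)
        {
            return null; // Malformed JSON is just another invalid input.
        }
    }

    // Reads an integer property, rejecting strings, floats, and non-objects.
    private static bool TryGetInt(JsonElement obj, string name, out int value)
    {
        value = 0;
        return obj.ValueKind == JsonValueKind.Object &&
            obj.TryGetProperty(name, out var prop) &&
            prop.ValueKind == JsonValueKind.Number &&
            prop.TryGetInt32(out value);
    }
}
```

Note that validation is split between the parser, which only checks the shape of the document, and the Table factory methods, which enforce the business rules.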

The above ReadRequestBodyAsync method then checks if the return value from TableJson.Deserialize is null. If it's not, it wraps the result in a value that indicates success. If it's null, it uses FailureAsync to indicate a deserialization failure.

With this input formatter in place as middleware, any action method that takes a Table parameter will automatically receive a deserialized JSON object, if possible.

JSON output formatter

The TableJsonOutputFormatter class works much in the same way, but instead derives from the TextOutputFormatter base class:

internal sealed class TableJsonOutputFormatter : TextOutputFormatter

The constructor looks just like the TableJsonInputFormatter, and instead of a CanReadType method, it has a CanWriteType method that also looks identical.

The WriteResponseBodyAsync method serializes a Table object to JSON:

public override Task WriteResponseBodyAsync(
    OutputFormatterWriteContext context,
    Encoding selectedEncoding)
{
    if (context.Object is Table table)
        return context.HttpContext.Response.WriteAsync(
            table.Serialize(),
            selectedEncoding);

    throw new InvalidOperationException("Expected a Table object.");
}

If context.Object is, in fact, a Table object, the method calls table.Serialize(), which you can also see in the article Serializing restaurant tables in C#. In short, it pattern-matches on the two possible kinds of tables and builds an appropriate abstract syntax tree or document object model that it then serializes to JSON.
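Here's a sketch of that serialization direction, building the document object model with System.Text.Json.Nodes (available since .NET 6). It is not the code from the other article: Table and the Visitor interface are hypothetical, simplified stand-ins, and the singleTable layout is an assumption.

```csharp
#nullable enable
using System.Text.Json.Nodes;

// Hypothetical, simplified stand-in for the Table Domain Model.
public interface ITableVisitor<T>
{
    T VisitCommunal(int capacity);
    T VisitSingle(int capacity, int minimalReservation);
}

public sealed class Table
{
    private readonly int capacity;
    private readonly int? minimalReservation; // null means communal table

    private Table(int capacity, int? minimalReservation) =>
        (this.capacity, this.minimalReservation) = (capacity, minimalReservation);

    public static Table? TryCreateCommunal(int capacity) =>
        capacity > 0 ? new Table(capacity, null) : null;

    public static Table? TryCreateSingle(int capacity, int minimalReservation) =>
        0 < minimalReservation && minimalReservation <= capacity
            ? new Table(capacity, minimalReservation)
            : null;

    public T Accept<T>(ITableVisitor<T> visitor) =>
        minimalReservation is int mr
            ? visitor.VisitSingle(capacity, mr)
            : visitor.VisitCommunal(capacity);
}

public static class TableJsonSerialization
{
    // Builds a JSON DOM for the kind of table in question, then renders it compactly.
    public static string Serialize(this Table table) =>
        table.Accept(new JsonVisitor()).ToJsonString();

    private sealed class JsonVisitor : ITableVisitor<JsonObject>
    {
        public JsonObject VisitCommunal(int capacity) => new JsonObject
        {
            ["communalTable"] = new JsonObject { ["capacity"] = capacity }
        };

        public JsonObject VisitSingle(int capacity, int minimalReservation) => new JsonObject
        {
            ["singleTable"] = new JsonObject
            {
                ["capacity"] = capacity,
                ["minimalReservation"] = minimalReservation
            }
        };
    }
}
```

With default options, a communal table for 16 renders as {"communalTable":{"capacity":16}}, which matches the response body in the HTTP example at the start of the article.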

Data access

While the application stores data in SQL Server, it uses no object-relational mapper (ORM). Instead, it simply uses ADO.NET, as also outlined in the article Do ORMs reduce the need for mapping?

At first glance, the Create method looks simple:

public async Task Create(Guid id, Table table)
{
    using var conn = new SqlConnection(connectionString);
    using var cmd = table.Accept(new SqlInsertCommandVisitor(id));
    cmd.Connection = conn;
    await conn.OpenAsync().ConfigureAwait(false);
    await cmd.ExecuteNonQueryAsync().ConfigureAwait(false);
}

The main work, however, is done by the nested SqlInsertCommandVisitor class:

private sealed class SqlInsertCommandVisitor(Guid id) : ITableVisitor<SqlCommand>
{
    public SqlCommand VisitCommunal(NaturalNumber capacity)
    {
        const string createCommunalSql = @"
            INSERT INTO [dbo].[Tables] ([PublicId], [Capacity])
            VALUES (@PublicId, @Capacity)";
        var cmd = new SqlCommand(createCommunalSql);
        cmd.Parameters.AddWithValue("@PublicId", id);
        cmd.Parameters.AddWithValue("@Capacity", (int)capacity);
        return cmd;
    }

    public SqlCommand VisitSingle(NaturalNumber capacity, NaturalNumber minimalReservation)
    {
        const string createSingleSql = @"
            INSERT INTO [dbo].[Tables] ([PublicId], [Capacity], [MinimalReservation])
            VALUES (@PublicId, @Capacity, @MinimalReservation)";
        var cmd = new SqlCommand(createSingleSql);
        cmd.Parameters.AddWithValue("@PublicId", id);
        cmd.Parameters.AddWithValue("@Capacity", (int)capacity);
        cmd.Parameters.AddWithValue("@MinimalReservation", (int)minimalReservation);
        return cmd;
    }
}

It 'pattern-matches' on the two possible kinds of table and returns an appropriate SqlCommand that the Create method then executes. Notice that no 'Entity' class is needed. The code works straight on SqlCommand.
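To see the same dispatch without a database at hand, here's a hypothetical variation that describes the INSERT statement as plain data instead of a SqlCommand. InsertCommand and InsertCommandVisitor are invented names for illustration, and Table is a simplified stand-in (int instead of NaturalNumber).

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-in for the Table Domain Model.
public interface ITableVisitor<T>
{
    T VisitCommunal(int capacity);
    T VisitSingle(int capacity, int minimalReservation);
}

public sealed class Table
{
    private readonly int capacity;
    private readonly int? minimalReservation; // null means communal table

    private Table(int capacity, int? minimalReservation) =>
        (this.capacity, this.minimalReservation) = (capacity, minimalReservation);

    public static Table? TryCreateCommunal(int capacity) =>
        capacity > 0 ? new Table(capacity, null) : null;

    public static Table? TryCreateSingle(int capacity, int minimalReservation) =>
        0 < minimalReservation && minimalReservation <= capacity
            ? new Table(capacity, minimalReservation)
            : null;

    public T Accept<T>(ITableVisitor<T> visitor) =>
        minimalReservation is int mr
            ? visitor.VisitSingle(capacity, mr)
            : visitor.VisitCommunal(capacity);
}

// Hypothetical plain-data description of an INSERT statement.
public sealed record InsertCommand(string Sql, IReadOnlyDictionary<string, object> Parameters);

public sealed class InsertCommandVisitor : ITableVisitor<InsertCommand>
{
    private readonly Guid id;

    public InsertCommandVisitor(Guid id) => this.id = id;

    // Communal tables have no minimal reservation, so that column is left out (NULL).
    public InsertCommand VisitCommunal(int capacity) => new InsertCommand(
        "INSERT INTO [dbo].[Tables] ([PublicId], [Capacity]) " +
        "VALUES (@PublicId, @Capacity)",
        new Dictionary<string, object>
        {
            ["@PublicId"] = id,
            ["@Capacity"] = capacity
        });

    public InsertCommand VisitSingle(int capacity, int minimalReservation) => new InsertCommand(
        "INSERT INTO [dbo].[Tables] ([PublicId], [Capacity], [MinimalReservation]) " +
        "VALUES (@PublicId, @Capacity, @MinimalReservation)",
        new Dictionary<string, object>
        {
            ["@PublicId"] = id,
            ["@Capacity"] = capacity,
            ["@MinimalReservation"] = minimalReservation
        });
}
```

Returning data rather than a live command makes the mapping easy to unit test; a thin wrapper would then copy Sql and Parameters into an actual SqlCommand.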

The same is true for the repository's Read method:

public async Task<Table?> Read(Guid id)
{
    const string readByIdSql = @"
        SELECT [Capacity], [MinimalReservation]
        FROM [dbo].[Tables]
        WHERE [PublicId] = @id";

    using var conn = new SqlConnection(connectionString);
    using var cmd = new SqlCommand(readByIdSql, conn);
    cmd.Parameters.AddWithValue("@id", id);

    await conn.OpenAsync().ConfigureAwait(false);
    using var rdr = await cmd.ExecuteReaderAsync().ConfigureAwait(false);
    if (!await rdr.ReadAsync().ConfigureAwait(false))
        return null;

    var capacity = (int)rdr["Capacity"];
    // A NULL MinimalReservation (DBNull) becomes null here, marking a communal table.
    var minimalReservation = rdr["MinimalReservation"] as int?;
    if (minimalReservation is null)
        return Table.TryCreateCommunal(capacity);
    else
        return Table.TryCreateSingle(capacity, minimalReservation.Value);
}

It works directly on SqlDataReader. Again, no extra 'Entity' class is required. If the data in the database makes sense, the Read method returns a well-encapsulated Table object.
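The branching at the end of Read can be isolated as a pure function from raw column values to a Table. The sketch below is hypothetical (TableRow.ToTable is an invented name, and Table is a simplified stand-in). It relies on a data reader's indexer returning DBNull.Value for SQL NULL and, unlike the cast in Read, it returns null rather than throwing if Capacity is unexpectedly NULL.

```csharp
#nullable enable
using System;

// Hypothetical, simplified stand-in for the Table Domain Model.
public interface ITableVisitor<T>
{
    T VisitCommunal(int capacity);
    T VisitSingle(int capacity, int minimalReservation);
}

public sealed class Table
{
    private readonly int capacity;
    private readonly int? minimalReservation; // null means communal table

    private Table(int capacity, int? minimalReservation) =>
        (this.capacity, this.minimalReservation) = (capacity, minimalReservation);

    public static Table? TryCreateCommunal(int capacity) =>
        capacity > 0 ? new Table(capacity, null) : null;

    // Assumed invariant: 0 < minimalReservation <= capacity.
    public static Table? TryCreateSingle(int capacity, int minimalReservation) =>
        0 < minimalReservation && minimalReservation <= capacity
            ? new Table(capacity, minimalReservation)
            : null;

    public T Accept<T>(ITableVisitor<T> visitor) =>
        minimalReservation is int mr
            ? visitor.VisitSingle(capacity, mr)
            : visitor.VisitCommunal(capacity);
}

public static class TableRow
{
    // Maps column values (SQL NULL arrives as DBNull.Value) to a Table,
    // or to null if the row doesn't describe a valid table.
    public static Table? ToTable(object capacityColumn, object minimalReservationColumn)
    {
        if (capacityColumn is not int capacity)
            return null;

        // As in Read: anything other than an int minimal reservation means communal.
        return minimalReservationColumn is int minimalReservation
            ? Table.TryCreateSingle(capacity, minimalReservation)
            : Table.TryCreateCommunal(capacity);
    }
}
```

Keeping the SqlDataReader calls in Read and the interpretation in a function like this lets you test the mapping without a database.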

XML formats

That covers the basics, but how well does this kind of architecture stand up to changing requirements?

One axis of variation is when a service needs to support multiple representations. In this example, I'll imagine that the service also needs to support not just one, but two, XML formats.

Granted, you may not run into that particular requirement that often, but it's typical of a kind of change that you're likely to run into. In REST APIs, for example, you should use content negotiation for versioning, and that's the same kind of problem.

To be fair, application code also changes for a variety of other reasons, including new features, changes to business logic, etc. I can't possibly cover all, though, and many of these are much better described than changes in wire formats.

As described in the introduction article, ideally the XML should support a format implied by these examples:

<communal-table>
  <capacity>12</capacity>
</communal-table>

<single-table>
  <capacity>4</capacity>
  <minimal-reservation>3</minimal-reservation>
</single-table>

Notice that while these two examples have different root elements, they're still considered to both represent a table. Although, at the boundaries, static types are illusory, we may still, loosely speaking, consider both of those XML documents as belonging to the same 'type'.

With both of the previous architectures described in this article series, I've had to give up on this schema. The present data architecture, finally, is able to handle this requirement.

HTTP interactions with element-biased XML

The service should support the new XML format when presented with the "application/xml" media type, either as a content-type header or an accept header. An initial POST request may look like this:

POST /tables HTTP/1.1
content-type: application/xml

<communal-table><capacity>12</capacity></communal-table>

Which produces a reply like this:

HTTP/1.1 201 Created
Location: https://example.com/Tables/a77ac3fd221e4a5caaca3a0fc2b83ffc

And just like before, a client can later use the address in the Location header to request the resource. By using the accept header, it can indicate that it wishes to receive the reply formatted as XML:

GET /Tables/a77ac3fd221e4a5caaca3a0fc2b83ffc HTTP/1.1
accept: application/xml

Which produces this response with XML content in the body:

HTTP/1.1 200 OK
Content-Type: application/xml; charset=utf-8

<communal-table><capacity>12</capacity></communal-table>

How do you add support for this new format?

Element-biased XML formatters

Not surprisingly, you can add support for the new format by adding new formatters.

opts.InputFormatters.Add(new ElementBiasedTableXmlInputFormatter());
opts.OutputFormatters.Add(new ElementBiasedTableXmlOutputFormatter());

Importantly, and in stark contrast to the DTO-based Ports and Adapters example, you don't have to change the existing code to add XML support. If you're concerned about design heuristics such as the Single Responsibility Principle, you may consider this a win. Apart from the two lines of code adding the formatters, all other code to support this new feature is in new classes.

Both of the new formatters support the "application/xml" media type.

Deserializing element-biased XML

The constructor and CanReadType implementation of ElementBiasedTableXmlInputFormatter are nearly identical to code you've already seen here, so I'll skip the repetition. The ReadRequestBodyAsync implementation is also conceptually similar, but of course differs in the details.

public override async Task<InputFormatterResult> ReadRequestBodyAsync(
    InputFormatterContext context,
    Encoding encoding)
{
    var xml = await XElement
        .LoadAsync(context.HttpContext.Request.Body, LoadOptions.None, CancellationToken.None)
        .ConfigureAwait(false);
    var table = TableXml.TryParseElementBiased(xml);
    if (table is { })
        return await InputFormatterResult.SuccessAsync(table).ConfigureAwait(false);
    else
        return await InputFormatterResult.FailureAsync().ConfigureAwait(false);
}

As is also the case with the JSON input formatter, the ReadRequestBodyAsync method really only implements an Adapter over a more specialized parser function:

internal static Table? TryParseElementBiased(XElement xml)
{
    if (xml.Name == "communal-table")
    {
        var capacity = xml.Element("capacity")?.Value;
        if (capacity is { })
        {
            if (int.TryParse(capacity, out var c))
                return Table.TryCreateCommunal(c);
        }
    }

    if (xml.Name == "single-table")
    {
        var capacity = xml.Element("capacity")?.Value;
        var minimalReservation = xml.Element("minimal-reservation")?.Value;
        if (capacity is { } && minimalReservation is { })
        {
            if (int.TryParse(capacity, out var c) &&
                int.TryParse(minimalReservation, out var mr))
                return Table.TryCreateSingle(c, mr);
        }
    }

    return null;
}

In keeping with the common theme of the Domain Model Only data architecture, it deserializes by examining an Abstract Syntax Tree (AST) or document object model (DOM), specifically making use of the XElement API. This class is really part of the LINQ to XML API, but you'll probably agree that the above code example makes little use of LINQ.

Serializing element-biased XML

Unsurprisingly, turning a Table object into element-biased XML involves steps similar to converting it to JSON. The ElementBiasedTableXmlOutputFormatter class' WriteResponseBodyAsync method contains this implementation:

public override Task WriteResponseBodyAsync(
    OutputFormatterWriteContext context,
    Encoding selectedEncoding)
{
    if (context.Object is Table table)
        return context.HttpContext.Response.WriteAsync(
            table.GenerateElementBiasedXml(),
            selectedEncoding);

    throw new InvalidOperationException("Expected a Table object.");
}

Again, the heavy lifting is done by a specialized function:

internal static string GenerateElementBiasedXml(this Table table)
{
    return table.Accept(new ElementBiasedTableVisitor());
}

private sealed class ElementBiasedTableVisitor : ITableVisitor<string>
{
    public string VisitCommunal(NaturalNumber capacity)
    {
        var xml = new XElement(
            "communal-table",
            new XElement("capacity", (int)capacity));
        return xml.ToString(SaveOptions.DisableFormatting);
    }

    public string VisitSingle(
        NaturalNumber capacity,
        NaturalNumber minimalReservation)
    {
        var xml = new XElement(
            "single-table",
            new XElement("capacity", (int)capacity),
            new XElement("minimal-reservation", (int)minimalReservation));
        return xml.ToString(SaveOptions.DisableFormatting);
    }
}

True to form, GenerateElementBiasedXml assembles an appropriate AST for the kind of table in question, and finally converts it to a string value.

Attribute-biased XML

I was curious how far I could take this kind of variation, so for the sake of exploration, I invented yet another XML format to support. Instead of making exclusive use of XML elements, this format uses XML attributes for primitive values.

<communal-table capacity="12" />

<single-table capacity="4" minimal-reservation="3" />

In order to distinguish this XML format from the other, I invented the vendor media type "application/vnd.ploeh.table+xml". The new formatters only handle this media type.
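Conceptually, each pair of formatters amounts to an entry in a lookup from media type to serializer. The sketch below models that idea outside of ASP.NET with a plain dictionary. It is not how ASP.NET implements content negotiation (which also deals with q-values, wildcards, and fallbacks), TableRepresentations and the visitor names are hypothetical, and Table is a simplified stand-in.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical, simplified stand-in for the Table Domain Model.
public interface ITableVisitor<T>
{
    T VisitCommunal(int capacity);
    T VisitSingle(int capacity, int minimalReservation);
}

public sealed class Table
{
    private readonly int capacity;
    private readonly int? minimalReservation; // null means communal table

    private Table(int capacity, int? minimalReservation) =>
        (this.capacity, this.minimalReservation) = (capacity, minimalReservation);

    public static Table? TryCreateCommunal(int capacity) =>
        capacity > 0 ? new Table(capacity, null) : null;

    public T Accept<T>(ITableVisitor<T> visitor) =>
        minimalReservation is int mr
            ? visitor.VisitSingle(capacity, mr)
            : visitor.VisitCommunal(capacity);
}

public sealed class ElementBiasedXml : ITableVisitor<XElement>
{
    public XElement VisitCommunal(int capacity) =>
        new XElement("communal-table", new XElement("capacity", capacity));

    public XElement VisitSingle(int capacity, int minimalReservation) =>
        new XElement("single-table",
            new XElement("capacity", capacity),
            new XElement("minimal-reservation", minimalReservation));
}

public sealed class AttributeBiasedXml : ITableVisitor<XElement>
{
    public XElement VisitCommunal(int capacity) =>
        new XElement("communal-table", new XAttribute("capacity", capacity));

    public XElement VisitSingle(int capacity, int minimalReservation) =>
        new XElement("single-table",
            new XAttribute("capacity", capacity),
            new XAttribute("minimal-reservation", minimalReservation));
}

public static class TableRepresentations
{
    // Media type -> serializer. Supporting another format means adding a visitor
    // and an entry here; Table and its callers stay unchanged.
    private static readonly Dictionary<string, Func<Table, string>> writers =
        new Dictionary<string, Func<Table, string>>(StringComparer.OrdinalIgnoreCase)
        {
            ["application/xml"] = t =>
                t.Accept(new ElementBiasedXml()).ToString(SaveOptions.DisableFormatting),
            ["application/vnd.ploeh.table+xml"] = t =>
                t.Accept(new AttributeBiasedXml()).ToString(SaveOptions.DisableFormatting),
        };

    // Returns null when no serializer is registered for the media type.
    public static string? TryWrite(Table table, string mediaType) =>
        writers.TryGetValue(mediaType, out var write) ? write(table) : null;
}
```

For a communal table of 12, the two entries produce the element-biased and attribute-biased documents shown in this article.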

There's not much new to report. The new formatters work like the previous ones. A new function, still based on XElement, parses the new format:

internal static Table? TryParseAttributeBiased(XElement xml)
{
    if (xml.Name == "communal-table")
    {
        var capacity = xml.Attribute("capacity")?.Value;
        if (capacity is { })
        {
            if (int.TryParse(capacity, out var c))
                return Table.TryCreateCommunal(c);
        }
    }

    if (xml.Name == "single-table")
    {
        var capacity = xml.Attribute("capacity")?.Value;
        var minimalReservation = xml.Attribute("minimal-reservation")?.Value;
        if (capacity is { } && minimalReservation is { })
        {
            if (int.TryParse(capacity, out var c) &&
                int.TryParse(minimalReservation, out var mr))
                return Table.TryCreateSingle(c, mr);
        }
    }

    return null;
}

Likewise, converting a Table object to this format looks like code you've already seen:

internal static string GenerateAttributeBiasedXml(this Table table)
{
    return table.Accept(new AttributedBiasedTableVisitor());
}

private sealed class AttributedBiasedTableVisitor : ITableVisitor<string>
{
    public string VisitCommunal(NaturalNumber capacity)
    {
        var xml = new XElement(
            "communal-table",
            new XAttribute("capacity", (int)capacity));
        return xml.ToString(SaveOptions.DisableFormatting);
    }

    public string VisitSingle(
        NaturalNumber capacity,
        NaturalNumber minimalReservation)
    {
        var xml = new XElement(
            "single-table",
            new XAttribute("capacity", (int)capacity),
            new XAttribute("minimal-reservation", (int)minimalReservation));
        return xml.ToString(SaveOptions.DisableFormatting);
    }
}

Consistent with adding the first XML support, I didn't have to touch any of the existing Controller or data access code.

Evaluation

If you're concerned with separation of concerns, the Domain Model Only architecture gracefully handles variation in external formats without impacting application logic, Domain Model, or data access. You deal with each new format in a consistent and independent manner. The architecture offers the ultimate data representation flexibility, since everything you can write as a stream of bytes you can implement.

Since, at the boundary, static types are illusory, this architecture is congruent with reality. For a REST service, at least, reality is what goes on the wire. While static types can also be used to model what wire formats look like, there's always a risk that you use your IDE's refactoring tools to change a DTO in such a way that the code still compiles, but you've now changed the wire format. This could easily break existing clients.
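Here's a minimal illustration of that risk, assuming System.Text.Json with default options (no naming policy; ASP.NET's own defaults apply camelCase, but the principle is the same). The JSON property name is derived from the C# property name, so a rename that compiles fine also changes the wire format. Both DTO types are hypothetical.

```csharp
using System.Text.Json;

// Hypothetical DTOs that differ only in a property name, as after an IDE rename.
public sealed record CommunalTableDto(int Capacity);
public sealed record RenamedCommunalTableDto(int Seats);

public static class RenameDemo
{
    // With default options, property names go on the wire verbatim.
    public static string Before() => JsonSerializer.Serialize(new CommunalTableDto(16));

    public static string After() => JsonSerializer.Serialize(new RenamedCommunalTableDto(16));
}
```

Before() yields {"Capacity":16} and After() yields {"Seats":16}; nothing in the type system flags that a client expecting the former will now break.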

When wire compatibility is important, I test-drive enough self-hosted tests that directly use and verify the wire format to give me a good sense of stability. Without DTO classes, it becomes increasingly important to cover externally visible behaviour with a trustworthy test suite, but really, if compatibility is important, you should be doing that anyway.

It almost goes without saying that a requirement for this architecture is that your chosen web framework supports it. As you've seen here, ASP.NET does, but that's not a given in general. Most web frameworks worth their salt will come with mechanisms that enable you to add new wire formats, but the question is how opinionated such extensibility points are. Do they expect you to work with DTOs, or are they more flexible than that?

You may consider the pure Domain Model Only data architecture too specialized for everyday use. I may do that, too. As I wrote in the introduction article, I don't intend these walk-throughs to be prescriptive. Rather, they explore what's possible, so that you and I have a bigger set of alternatives to choose from.

Hybrid architectures

In the code base that accompanies Code That Fits in Your Head, I use a hybrid data architecture that I've used for years. ADO.NET for data access, as shown here, but DTOs for external JSON serialization. As demonstrated in the article Using Ports and Adapters to persist restaurant table configurations, using DTOs for the presentation layer may cause trouble if you need to support multiple wire formats. On the other hand, if you don't expect that this is a concern, you may decide to run that risk. I often do that.

When presenting these three architectures to a larger audience, one audience member told me that his team used another hybrid architecture: DTOs for the presentation layer, and separate DTOs for data access, but no Domain Model. I can see how this makes sense in a mostly CRUD-heavy application where nonetheless you need to be able to vary user interfaces independently from the database schema.

Finally, I should point out that the Domain Model Only data architecture is, in reality, also a kind of Ports and Adapters architecture. It just uses more low-level Adapter implementations than you idiomatically see.

Conclusion

The Domain Model Only data architecture emphasises modelling business logic as a strongly-typed, well-encapsulated Domain Model, while eschewing using statically-typed DTOs for communication with external processes. What I most like about this alternative is that it leaves little confusion as to where functionality goes.

When you have, say, TableDto, Table, and TableEntity classes, you need a sophisticated and mature team to trust all developers to add functionality in the right place. If there's only a single Table Domain Model, it may be more evident to developers that only business logic belongs there, and other concerns ought to be addressed in different ways.

Even so, you may consider all the low-level parsing code not to your liking, and instead decide to use DTOs. I may too, depending on context.

Comments

In this version of the data architecture, let's suppose that the controller that now accepts a Domain Object directly is part of a larger REST API. How would you handle discoverability of the API, as the usual OpenAPI (Swagger et al.) tools probably take offence at this type of request object?

Jes, thank you for writing. If by discoverability you mean 'documentation', I would handle that the same way I usually handle documentation requirements for REST APIs: by writing one or more documents that explain how the API works. If there are other possible uses of OpenAPI than that, and the GUI to perform ad-hoc experiments, I'm going to need to be taken to task, because then I'm not aware of them.

I've recently discussed my general misgivings about OpenAPI, and they apply here as well. I'm aware that other people feel differently about this, and that's okay too.

You may be right, but I haven't tried, so I don't know if this is the case.