Pendulum swing: no Haskell type annotation by default by Mark Seemann

Are Haskell IDE plugins now good enough that you don't need explicit type annotations?

More than three years ago, I published a small article series to document that I'd changed my mind on various small practices. Belatedly, here comes a fourth article, which, frankly, is a cousin rather than a sibling. Still, it fits the overall theme well enough to become another instalment in the series.

Here, I consider using fewer Haskell type annotations, following a practice that I've always followed in F#.

To be honest, though, it's not that I've already applied the following practice for a long time, and only now write about it. It's rather that I feel the need to write this article to kick an old habit and start a new.

Inertia #

As I write in the dedication in Code That Fits in Your Head,

"To my parents:

"My mother, Ulla Seemann, to whom I owe my attention to detail.

"My father, Leif Seemann, from whom I inherited my contrarian streak."

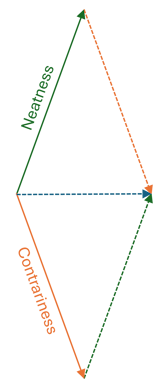

One should always be careful simplifying one's personality to a simple, easy-to-understand model, but a major point here is that I have two traits that pull in almost the opposite direction.

Despite much work, I only make slow progress. My desire to make things neat and proper almost cancel out my tendency to go against the norms. I tend to automatically toe whatever line that exists until the cognitive dissonance becomes so great that I can no longer ignore it.

I then write an article for the blog to clarify my thoughts.

You may read what comes next and ask, what took you so long?!

I can only refer to the above. I may look calm on the surface, but underneath I'm paddling like the dickens. Despite much work, though, only limited progress is visible.

Nudge #

Haskell is a statically typed language with the most powerful type system I know my way around. The types carry so much information that one can often infer a function's contract from the type alone. This is also fortunate, since many Haskell libraries tend to have, shall we say, minimal documentation. Even so, I've often found myself able to figure out how to use an unfamiliar Haskell API by examining the various types that a library exports.

In fact, the type system is so powerful that it drives a specialized search engine. If you need a function with the type (String -> IO Int) -> [String] -> IO [Int] you can search for it. Hoogle will list all functions that match that type, including functions that are more abstract than your specialized need. You don't even have to imagine what the name might be.

Since the type system is so powerful, it's a major means of communication. Thus, it makes sense that GHC regularly issues a warning if a function lacks a type annotation.

While the compiler enables you to control which warnings are turned on, the missing-signatures warning is included in the popular all flag that most people, I take it, use. I do, at least.

If you forget to declare the type of a function, the compiler will complain:

src\SecurityManager.hs:15:1: warning: [GHC-38417] [-Wmissing-signatures] Top-level binding with no type signature: createUser :: (Monad m, Text.Printf.PrintfArg b, Text.Printf.PrintfArg (t a), Foldable t, Eq (t a)) => (String -> m ()) -> m (t a) -> (t a -> b) -> m () | 15 | createUser writeLine readLine encrypt = do | ^^^^^^^^^^

This is a strong nudge that you're supposed to give each function a type declaration, so I've been doing that for years. Neat and proper.

Of course, if you treat warnings as errors, as I recommend, the nudge becomes a law.

Learning from F# #

While I try to adopt the style and idioms of any language I work in, it's always annoyed me that I had to add a type annotation to a Haskell function. After all, the compiler can usually infer the type. Frankly, adding a type signature feels like redundant ceremony. It's like having to declare a function in a header file before being able to implement it in another file.

This particularly bothers me because I've long since abandoned type annotations in F#. As far as I can tell, most of the F# community has, too.

When you implement an F# function, you just write the implementation and let the compiler infer the type. (Code example from Zone of Ceremony.)

let inline consume quantity = let go (acc, xs) x = if quantity <= acc then (acc, Seq.append xs (Seq.singleton x)) else (acc + x, xs) Seq.fold go (LanguagePrimitives.GenericZero, Seq.empty) >> snd

Since F# often has to interact with .NET code written in C#, you regularly have to add some type annotations to help the compiler along:

let average (timeSpans : NonEmpty<TimeSpan>) = [ timeSpans.Head ] @ List.ofSeq timeSpans.Tail |> List.averageBy (_.Ticks >> double) |> int64 |> TimeSpan.FromTicks

Even so, I follow the rule of minimal annotations: Only add the type information required to compile, and let the compiler infer the rest. For example, the above average function has the inferred type NonEmpty<TimeSpan> -> TimeSpan. While I had to specify the input type in order to be able to use the Ticks property, I didn't have to specify the return type. So I didn't.

My impression from reading other people's F# code is that this is a common, albeit not universal, approach to type annotation.

This minimizes ceremony, since you only need to declare and maintain the types that the compiler can't infer. There's no reason to repeat the work that the compiler can already do, and in practice, if you do, it just gets in the way.

Motivation for explicit type definitions #

When I extol the merits of static types, proponents of dynamically typed languages often argue that the types are in the way. Granted, this is a discussion that I still struggle with, but based on my understanding of the argument, it seems entirely reasonable. After all, if you have to spend time declaring the type of each and every parameter, as well as a function's return type, it does seem to be in the way. This is only exacerbated if you later change your mind.

Programming is, to a large extend, an explorative activity. You start with one notion of how your code should be structured, but as you progress, you learn. You'll often have to go back and change existing code. This, as far as I can tell, is much easier in, say, Python or Clojure than in C# or Java.

If, however, one extrapolates from the experience with Java or C# to all statically typed languages, that would be a logical fallacy. My point with Zone of Ceremony was exactly that there's a group of languages 'to the right' of high-ceremony languages with low levels of ceremony. Even though they're statically typed.

I have to admit, however, that in that article I cheated a little in order to drive home a point. While you can write Haskell code in a low-ceremony style, the tooling (in the form of the all warning set, at least) encourages a high-ceremony style. Add those type definitions, even thought they're redundant.

It's not that I don't understand some of the underlying motivation behind that rule. Daniel Wagner enumerated several reasons in a 2013 Stack Overflow answer. Some of the reasons still apply, but on the other hand, the world has also moved on in the intervening decade.

To be honest, the Haskell IDE situation has always been precarious. One day, it works really well; the next day, I struggle with it. Over the years, though, things have improved.

There was a time when an explicit type definition was a indisputable help, because you couldn't rely on tools to light up and tell you what the inferred type was.

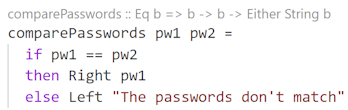

Today, on the other hand, the Haskell extension for Visual Studio Code automatically displays the inferred type above a function implementation:

To be clear, the top line that shows the type definition is not part of the source code. It's just shown by Visual Studio Code as a code lens (I think it's called), and it automatically changes if I edit the code in such a way that the type changes.

If you can rely on such automatic type information, it seems that an explicit type declaration is less useful. It's at least one less reason to add type annotations to the source code.

Ceremony example #

In order to explain what I mean by the types being in the way, I'll give an example. Consider the code example from the article Legacy Security Manager in Haskell. In it, I described how every time I made a change to the createUser action, I had to effectively remove and re-add the type declaration.

It doesn't have to be like that. If instead I'd started without type annotations, I could have moved forward without being slowed down by having to edit type definitions. Take the first edit, breaking the dependency on the console, as an example. Without type annotations, the createUser action would look exactly as before, just without the type declaration. Its type would still be IO ().

After the first edit, the first lines of the action now look like this:

createUser writeLine readLine = do () <- writeLine "Enter a username" -- ...

Even without a type definition, the action still has a type. The compiler infers it to be (Monad m, Eq a, IsChar a) => (String -> m ()) -> m [a] -> m (), which is certainly a bit of a mouthful, but exactly what I had explicitly added in the other article.

The code doesn't compile until I also change the main method to pass the new parameters:

main = createUser putStrLn getLine

You'd have to make a similar edit in, say, Python, although there'd be no compiler to remind you. My point isn't that this is better than a dynamically typed language, but rather that it's on par. The types aren't in the way.

We see the similar lack of required ceremony when the createUser action finally pulls in the comparePasswords and validatePassword functions:

createUser writeLine readLine encrypt = do () <- writeLine "Enter a username" username <- readLine writeLine "Enter your full name" fullName <- readLine writeLine "Enter your password" password <- readLine writeLine "Re-enter your password" confirmPassword <- readLine writeLine $ either id (printf "Saving Details for User (%s, %s, %s)" username fullName . encrypt) (validatePassword =<< comparePasswords password confirmPassword)

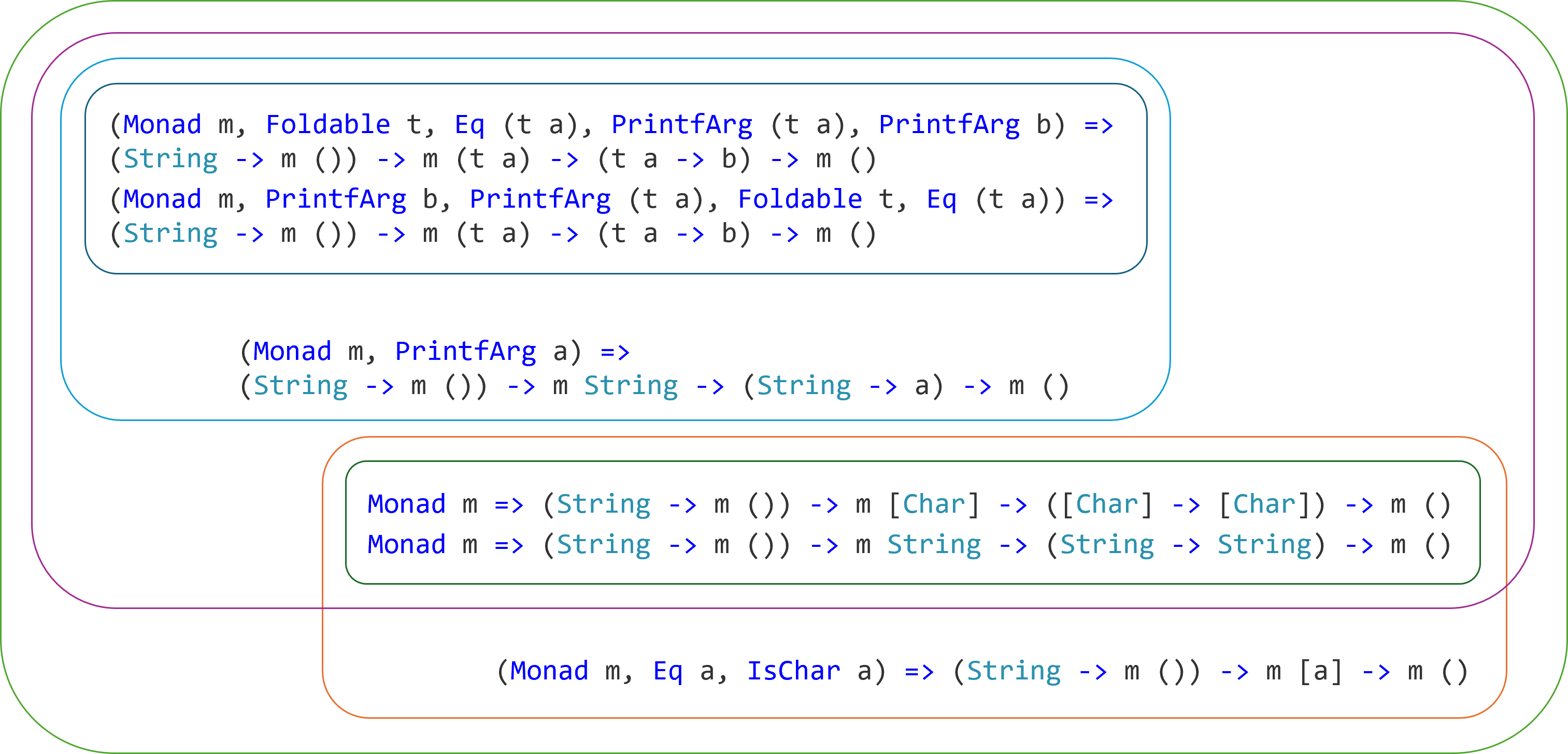

Again, there's no type annotation, and while the type actually does change to

(Monad m, PrintfArg b, PrintfArg (t a), Foldable t, Eq (t a)) => (String -> m ()) -> m (t a) -> (t a -> b) -> m ()

it impacts none of the existing code. Again, the types aren't in the way, and no ceremony is required.

Compare that inferred type signature with the explicit final type annotation in the previous article. The inferred type is much more abstract and permissive than the explicit declaration, although I also grant that Daniel Wagner had a point that you can make explicit type definitions more reader-friendly.

Flies in the ointment #

Do the inferred types communicate intent? That's debatable. For example, it's not immediately clear that the above t a allows String.

Another thing that annoys me is that I had to add that unit binding on the first line:

createUser writeLine readLine encrypt = do () <- writeLine "Enter a username" -- ...

The reason for that is that if I don't do that (that is, if I just write writeLine "Xyz" all the way), the compiler infers the type of writeLine to be String -> m b2, rather than just String -> m (). In effect, I want b2 ~ (), but because the compiler thinks that b2 may be anything, it issues an unused-do-bind warning.

The idiomatic way to resolve that situation is to add a type definition, but that's the situation I'm trying to avoid. Thus, my desire to do without annotations pushes me to write unnatural implementation code. This reminds me of the notion of test-induced damage. This is at best a disagreeable compromise.

It also annoys me that implementation details leak out to the inferred type, witnessed by the PrintfArg type constraint. What happens if I change the implementation to use list concatenation?

createUser writeLine readLine encrypt = do () <- writeLine "Enter a username" username <- readLine writeLine "Enter your full name" fullName <- readLine writeLine "Enter your password" password <- readLine writeLine "Re-enter your password" confirmPassword <- readLine let createMsg pwd = "Saving Details for User (" ++ username ++", " ++ fullName ++ ", " ++ pwd ++")" writeLine $ either id (createMsg . encrypt) (validatePassword =<< comparePasswords password confirmPassword)

If I do that, the type also changes:

Monad m => (String -> m ()) -> m [Char] -> ([Char] -> [Char]) -> m ()

While we get rid of the PrintfArg type constraint, the type becomes otherwise more concrete, now operating on String values (keeping in mind that String is a type synonym for [Char]).

The code still compiles, and all tests still pass, because the abstraction I've had in mind all along is essentially this last type.

The writeLine action should take a String and have some side effect, but return no data. The type String -> m () nicely models that, striking a fine balance between being sufficiently concrete to capture intent, but still abstract enough to be testable.

The readLine action should provide input String values, and again m String nicely models that concern.

Finally, encrypt is indeed a naked String endomorphism: String -> String.

With my decades of experience with object-oriented design, it still strikes me as odd that implementation details can make a type more abstract, but once you think it over, it may be okay.

More liberal abstractions #

The inferred types are consistently more liberal than the abstraction I have in mind, which is

Monad m => (String -> m ()) -> m String -> (String -> String) -> m ()

In all cases, the inferred types include that type as a subset.

I hope that I've created the above diagram so that it makes sense, but the point I'm trying to get across is that the two type definitions in the lower middle are equivalent, and are the most specific types. That's the intended abstraction. Thinking of types as sets, all the other inferred types are supersets of that type, in various ways. Even though implementation details leak out in the shape of PrintfArg and IsChar, these are effectually larger sets.

This takes some getting used to: The implementation details are more liberal than the abstraction. This seems to be at odds with the Dependency Inversion Principle (DIP), which suggests that abstractions shouldn't depend on implementation details. I'm not yet sure what to make of this, but I suspect that this is more of problem of overlapping linguistic semantics than software design. What I mean is that I have a feeling that 'implementation detail' have more than one meaning. At least, in the perspective of the DIP, an implementation detail limits your options. For example, depending on a particular database technology is more constraining than depending on some abstract notion of what the persistence mechanism might be. Contrast this with an implementation detail such as the PrintfArg type constraint. It doesn't narrow your options; on the contrary, it makes the implementation more liberal.

Still, while an implementation should be liberal in what it accepts, it's probably not a good idea to publish such a capability to the wider world. After all, if you do, someone will eventually rely on it.

For internal use only #

Going through all these considerations, I think I'll revise my position as the following.

I'll forgo type annotations as long as I explore a problem space. For internal application use, this may effectively mean forever, in the sense that how you compose an application from smaller building blocks is likely to be in permanent flux. Here I have in mind your average web asset or other public-facing service that's in constant development. You keep adding new features, or changing domain logic as the overall business evolves.

As I've also recently discussed, Haskell is a great scripting language, and I think that here, too, I'll dial down the type definitions.

If I ever do another Advent of Code in Haskell, I think I'll also eschew explicit type annotations.

On the other hand, I can see that once an API stabilizes, you may want to lock it down. This may also apply to internal abstractions if you're working in a team and you explicitly want to communicate what a contract is.

If the code is a reusable library, I think that explicit type definitions are still required. Both for the reasons outlined by Daniel Wagner, and also to avoid being the victim of Hyrum's law.

That's why I phrase this pendulum swing as a new default. I'll begin programming without type definitions, but add them as needed. The point is rather that there may be parts of a code base where they're never needed, and then it's okay to keep going without them.

You can use a language pragma to opt out of the missing-signatures compiler warning on a module-by-module basis:

{-# OPTIONS_GHC -Wno-missing-signatures #-}

This will enable me to rely on type inference in parts of the code base, while keeping the build clean of compiler warnings.

Conclusion #

I've always appreciated the F# compiler's ability to infer types and just let type changes automatically ripple through the code base. For that reason, the Haskell norm of explicitly adding a (redundant) type annotation has always vexed me.

It often takes me a long time to reach seemingly obvious conclusions, such as: Don't always add type definitions to Haskell functions. Let the type inference engine do its job.

The reason it takes me so long to take such a small step is that I want to follow 'best practice'; I want to write idiomatic code. When the standard compiler-warning set complains about missing type definitions, it takes me significant deliberation to discard such advice. I could imagine other programmers being in the same situation, which is one reason I wrote this article.

The point isn't that type definitions are a universally bad idea. They aren't. Rather, the point is only that it's also okay to do without them in parts of a code base. Perhaps only temporarily, but in some cases maybe permanently.

The missing-signatures warning shouldn't, I now believe, be considered an absolute law, but rather a contextual rule.