ploeh blog danish software design

Pure times in Haskell

A Polling Consumer implementation written in Haskell.

As you can read in the introductory article, I've come to realise that the Polling Consumer that I originally wrote in F# isn't particularly functional. Being the friendly and productive language that it is, F# doesn't protect you from mixing pure and impure code, but Haskell does. For that reason, you can develop a prototype in Haskell, and later port it to F#, if you want to learn how to solve the problem in a strictly functional way.

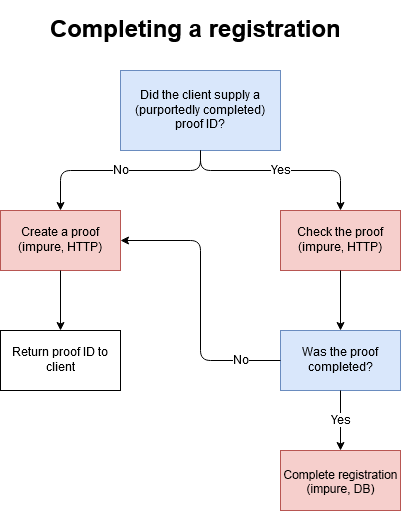

To recapitulate, the task is to implement a Polling Consumer that runs for a predefined duration, after which it exits (so that it can be restarted by a scheduler).

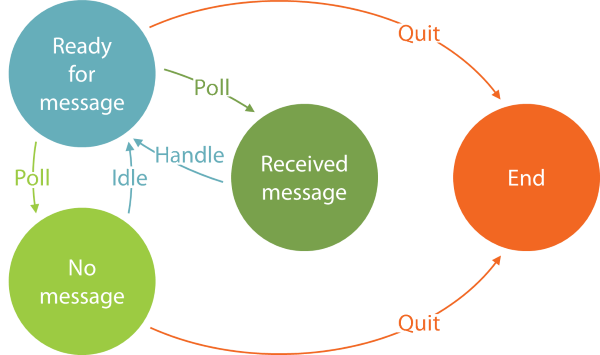

The program is a finite state machine that moves between four states. From the ready state, it'll need to decide whether to poll for a new message or exit. Polling and handling take time (and at compile-time we don't know how long), and the program ought to stop at a predefined time. If it gets too close to that time, it should exit, but otherwise, it should attempt to handle a message (and keep track of how long this takes). You can read a more elaborate description of the problem in the original article.

State data types #

The premise in that initial article was that F#'s type system is so powerful that it can aid you in designing a good solution. Haskell's type system is even more powerful, so it can give you even better help.

The Polling Consumer program must measure and keep track of how long it takes to poll, handle a message, or idle. All of these are durations. In Haskell, we can represent them as NominalDiffTime values. I'm a bit concerned, though, that if I represent all of these durations as NominalDiffTime values, I may accidentally use a poll duration where I really need a handle duration, and so on. Perhaps I'm being overly cautious, but I like to get help from the type system. In the words of Igal Tabachnik, types prevent typos:

    newtype PollDuration = PollDuration NominalDiffTime deriving (Eq, Show)
    newtype IdleDuration = IdleDuration NominalDiffTime deriving (Eq, Show)
    newtype HandleDuration = HandleDuration NominalDiffTime deriving (Eq, Show)
    data CycleDuration = CycleDuration
      { pollDuration :: PollDuration, handleDuration :: HandleDuration }
      deriving (Eq, Show)

This simply declares that PollDuration, IdleDuration, and HandleDuration are all NominalDiffTime values, but you can't mistakenly use a PollDuration where a HandleDuration is required, and so on.

In addition to those three types of duration, I also define a CycleDuration. This is the data that I actually need to keep track of: how long does it take to handle a single message? I'm assuming that polling for a message is an I/O-bound operation, so it may take significant time. Likewise, handling a message may take time. When deciding whether to exit or handle a new message, both durations count. Instead of defining CycleDuration as a newtype alias for NominalDiffTime, I decided to define it as a record type comprised of a PollDuration and a HandleDuration. It's not that I'm really interested in keeping track of these two values individually, but it protects me from making stupid mistakes. I can only create a CycleDuration value if I have both a PollDuration and a HandleDuration value.

In short, I'm trying to combat primitive obsession.
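To make that protection concrete, here's a minimal sketch. The `total` helper and the sample values are my own illustrations (they aren't part of the original code), and IdleDuration is left out for brevity. The commented-out definition shows the kind of mix-up the compiler would reject.

```haskell
import Data.Time.Clock (NominalDiffTime)

newtype PollDuration = PollDuration NominalDiffTime deriving (Eq, Show)
newtype HandleDuration = HandleDuration NominalDiffTime deriving (Eq, Show)

data CycleDuration = CycleDuration
  { pollDuration :: PollDuration, handleDuration :: HandleDuration }
  deriving (Eq, Show)

-- Hypothetical helper: unwrap both parts and add them.
total :: CycleDuration -> NominalDiffTime
total (CycleDuration (PollDuration pd) (HandleDuration hd)) = pd + hd

-- Compiles: each argument has the wrapper type the constructor expects.
example :: CycleDuration
example = CycleDuration (PollDuration 0.5) (HandleDuration 2)

-- Does not compile if uncommented: the arguments are swapped, so the
-- compiler finds a HandleDuration where a PollDuration is required.
-- wrong :: CycleDuration
-- wrong = CycleDuration (HandleDuration 2) (PollDuration 0.5)
```

With plain NominalDiffTime values, the swapped version would have compiled without complaint.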

With these duration types in place, you can define the states of the finite state machine:

    data PollingState msg =
        Ready [CycleDuration]
      | ReceivedMessage [CycleDuration] PollDuration msg
      | NoMessage [CycleDuration] PollDuration
      | Stopped [CycleDuration]
      deriving (Show)

Like the original F# code, state data can be represented as a sum type, with a case for each state. In all four cases, a CycleDuration list keeps track of the observed message-handling statistics. This is the way the program should attempt to calculate whether it's safe to handle another message, or exit. Two of the cases (ReceivedMessage and NoMessage) also contain a PollDuration, which informs the program about the duration of the poll operation that caused it to reach that state. Additionally, the ReceivedMessage case contains a message of the generic type msg. This makes the entire PollingState type generic. A message can be of any type: a string, a number, or a complex data structure. The Polling Consumer program doesn't care, because it doesn't handle messages; it only schedules the polling.

This is reminiscent of the previous F# attempt, with the most notable difference that it doesn't attempt to capture durations as Timed<'a> values. It does capture durations, but not when the operations started and stopped. So how will it know what time it is?

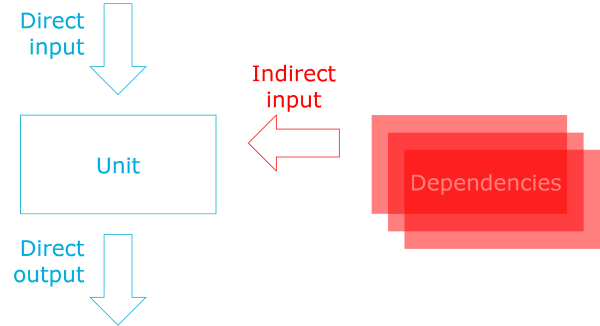

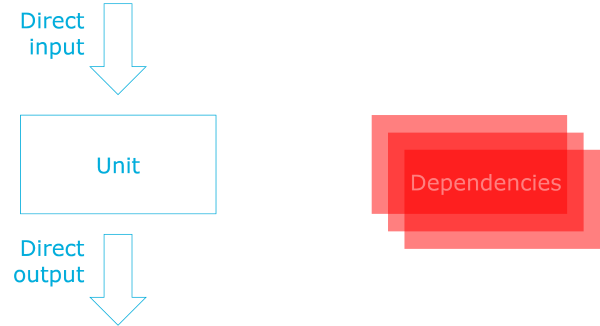

Interactions as pure values #

This is the heart of the matter. The Polling Consumer must constantly look at the clock. It's under a deadline, and it must also measure durations of poll, handle, and idle operations. All of this is non-deterministic, so not pure. The program has to interact with impure operations during its entire lifetime. In fact, its ultimate decision to exit will be based on impure data. How can you model this in a pure fashion?

You can model long-running (impure) interactions by defining a small instruction set for an Abstract Syntax Tree (AST). That sounds intimidating, but once you get the hang of it, it becomes routine. In later articles, I'll expand on this, but for now I'll refer you to an excellent article by Scott Wlaschin, who explains the approach in F#.

    data PollingInstruction msg next =
        CurrentTime (UTCTime -> next)
      | Poll ((Maybe msg, PollDuration) -> next)
      | Handle msg (HandleDuration -> next)
      | Idle IdleDuration (IdleDuration -> next)
      deriving (Functor)

This PollingInstruction sum type defines four cases of interaction. Each case

- is named after the interaction

- defines the type of data used as input arguments for the interaction

- and also defines a continuation; that is, a function that will be executed with the return value of the interaction

The Handle case contains all three elements: the interaction is named Handle, the input to the interaction is of the generic type msg, and the continuation is a function that takes a HandleDuration value as input, and returns a value of the generic type next. In other words, the interaction takes a msg value as input, and returns a HandleDuration value as output. That duration is the time it took to handle the input message. (The intent is that the operation that 'implements' this interaction also actually handles the message, whatever that means.)

Likewise, the Idle interaction takes an IdleDuration as input, and also returns an IdleDuration. The intent here is that the 'implementation' of the interaction suspends itself for the duration of the input value, and returns the time it actually spent in suspension (which is likely to be slightly longer than the requested duration).

Both CurrentTime and Poll, on the other hand, are degenerate, because they take no input. You don't need to supply any input argument to read the current time. You could model that interaction as taking () ('unit') as an input argument (CurrentTime () (UTCTime -> next)), but the () is redundant and can be omitted. The same is the case for the Poll case, which returns a Maybe msg and how long the poll took.

(The PollingInstruction sum type defines four cases, which is also the number of cases defined by PollingState. This is a coincidence; don't read anything into it.)

The PollingInstruction type is generic in a way that you can make it a Functor. Haskell can do this for you automatically, using the DeriveFunctor language extension; that's what deriving (Functor) does. If you'd like to see how to explicitly make such a data structure a functor, please refer to the F# example; F# can't automatically derive functors, so you'll have to do it manually.
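If you're curious what the derived instance amounts to, here's a sketch of a hand-written equivalent. In every case, mapping simply composes the function with the continuation. The `continueHandle` helper is hypothetical; I've only added it so that there's something to evaluate.

```haskell
import Data.Time.Clock (NominalDiffTime, UTCTime)

newtype PollDuration = PollDuration NominalDiffTime deriving (Eq, Show)
newtype IdleDuration = IdleDuration NominalDiffTime deriving (Eq, Show)
newtype HandleDuration = HandleDuration NominalDiffTime deriving (Eq, Show)

-- Same type as in the article, but without 'deriving (Functor)'.
data PollingInstruction msg next =
    CurrentTime (UTCTime -> next)
  | Poll ((Maybe msg, PollDuration) -> next)
  | Handle msg (HandleDuration -> next)
  | Idle IdleDuration (IdleDuration -> next)

-- Mapping f over an instruction composes f with its continuation.
instance Functor (PollingInstruction msg) where
  fmap f (CurrentTime next) = CurrentTime (f . next)
  fmap f (Poll next)        = Poll (f . next)
  fmap f (Handle msg next)  = Handle msg (f . next)
  fmap f (Idle d next)      = Idle d (f . next)

-- Hypothetical helper: feed a duration to the continuation of a Handle
-- instruction; any other instruction yields Nothing.
continueHandle :: HandleDuration -> PollingInstruction msg next -> Maybe next
continueHandle hd (Handle _ next) = Just (next hd)
continueHandle _ _ = Nothing
```

For instance, `continueHandle (HandleDuration 3) (fmap show (Handle "m" id))` evaluates to `Just (show (HandleDuration 3))`, because `show` has been composed onto the continuation.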

Since PollingInstruction is a Functor, we can make a Monad out of it. You use a free monad, which allows you to build a monad from any functor:

type PollingProgram msg = Free (PollingInstruction msg)

In Haskell, it's literally a one-liner, but in F# you'll have to write the code yourself. Thus, if you're interested in learning how this magic happens, I'm going to dissect this step in the next article.
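As a small taste, here's a simplified, hand-rolled free monad. Treat it as a sketch of the idea rather than the library's actual definition. The `Ask` instruction set, `sumOfTwo`, and `runWith` are toy names that I've made up for the occasion.

```haskell
-- A simplified free monad: either a finished computation (Pure), or one
-- instruction whose continuation holds the rest of the program (Free).
data Free f a = Pure a | Free (f (Free f a))

instance Functor f => Functor (Free f) where
  fmap g (Pure a)  = Pure (g a)
  fmap g (Free fa) = Free (fmap (fmap g) fa)

instance Functor f => Applicative (Free f) where
  pure = Pure
  Pure g  <*> x = fmap g x
  Free fg <*> x = Free (fmap (<*> x) fg)

instance Functor f => Monad (Free f) where
  Pure a  >>= k = k a
  Free fa >>= k = Free (fmap (>>= k) fa)

-- Lift a single instruction into a one-step program.
liftF :: Functor f => f a -> Free f a
liftF = Free . fmap Pure

-- Toy instruction set (an assumption for illustration): ask for an Int.
newtype Ask next = Ask (Int -> next)

instance Functor Ask where
  fmap g (Ask next) = Ask (g . next)

ask :: Free Ask Int
ask = liftF (Ask id)

-- A program written with do notation; it's still just a data structure.
sumOfTwo :: Free Ask Int
sumOfTwo = do
  x <- ask
  y <- ask
  return (x + y)

-- A pure interpreter that answers every Ask with the same number.
runWith :: Int -> Free Ask a -> a
runWith _ (Pure a) = a
runWith n (Free (Ask next)) = runWith n (next n)
```

Here, `runWith 21 sumOfTwo` evaluates to 42. Nothing impure has happened; the interpreter decided what 'asking' means.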

The motivation for defining a Monad is that we get automatic syntactic sugar for our PollingProgram ASTs, via Haskell's do notation. In F#, we're going to write a computation expression builder to achieve the same effect.

The final building blocks for the specialised PollingProgram API are convenience functions, one for each case:

    currentTime :: PollingProgram msg UTCTime
    currentTime = liftF (CurrentTime id)

    poll :: PollingProgram msg (Maybe msg, PollDuration)
    poll = liftF (Poll id)

    handle :: msg -> PollingProgram msg HandleDuration
    handle msg = liftF (Handle msg id)

    idle :: IdleDuration -> PollingProgram msg IdleDuration
    idle d = liftF (Idle d id)

More one-liners, as you can tell. These all use liftF to turn PollingInstruction cases into PollingProgram values. The degenerate cases CurrentTime and Poll simply become values, whereas the complete cases become (pure) functions.

Support functions #

You may have noticed that until now, I haven't written much 'code' in the sense that most people think of it. It's mostly been type declarations and a few one-liners. A strong and sophisticated type system like Haskell's enables you to shift some of the programming burden from 'real programming' to type definitions, but you'll still have to write some code.

Before we get to the state transitions proper, we'll look at some support functions. These will, I hope, serve as a good introduction to how to use the PollingProgram API.

One decision the Polling Consumer program has to make is to decide whether it should suspend itself for a short time. That's easy to express using the API:

    shouldIdle :: IdleDuration -> UTCTime -> PollingProgram msg Bool
    shouldIdle (IdleDuration d) stopBefore = do
      now <- currentTime
      return $ d `addUTCTime` now < stopBefore

The shouldIdle function returns a small program that, when evaluated, will decide whether or not to suspend itself. It first reads the current time using the above currentTime value. While currentTime has the type PollingProgram msg UTCTime, due to Haskell's do notation, the now value simply has the type UTCTime. This enables you to use the built-in addUTCTime function (here written using infix notation) to add now to d (a NominalDiffTime value, due to pattern matching into IdleDuration).

Adding the idle duration d to the current time now gives you the time the program would resume, were it to suspend itself. The shouldIdle function compares that time to the stopBefore argument (another UTCTime value). If the time the program would resume is before the time it ought to stop, the return value is True; otherwise, it's False.

Since the entire function is defined within a do block, the return type isn't just Bool, but rather PollingProgram msg Bool. It's a little PollingProgram AST, but it looks like imperative code.

You sometimes hear the bon mot that Haskell is the world's greatest imperative language. The combination of free monads and do notation certainly makes it easy to define small grammars (dare I say DSLs?) that look like imperative code, while still being strictly functional.

The crux is that shouldIdle is pure. It looks impure, but it's not. It's an Abstract Syntax Tree, and it only becomes non-deterministic if interpreted by an impure interpreter (more on that later).

The purpose of shouldIdle is to decide whether to idle or exit. If the program decides to idle, it should return to the ready state, as per the above state diagram. In this state, it needs to decide whether or not to poll for a message. If there's a message, it should be handled, and all of that takes time. In the ready state, then, the program must figure out how much time it thinks that handling a message will take.

One way to do that is to consider the observed durations so far. This helper function calculates the expected duration based on the average and standard deviation of the previous durations:

    calculateExpectedDuration :: NominalDiffTime
                              -> [CycleDuration]
                              -> NominalDiffTime
    calculateExpectedDuration estimatedDuration [] = estimatedDuration
    calculateExpectedDuration _ statistics =
      toEnum $ fromEnum $ avg + stdDev * 3
      where
        fromCycleDuration :: CycleDuration -> Float
        fromCycleDuration (CycleDuration (PollDuration pd) (HandleDuration hd)) =
          toEnum $ fromEnum $ pd + hd
        durations = fmap fromCycleDuration statistics
        l = toEnum $ length durations
        avg = sum durations / l
        stdDev = sqrt (sum (fmap (\x -> (x - avg) ** 2) durations) / l)

I'm not going to dwell much on this function, as it's a normal, pure, mathematical function. The only feature I'll emphasise is that in order to call it, you must pass an estimatedDuration that will be used when statistics is empty. This is because you can't calculate the average of an empty list. This estimated duration is simply your wild guess at how long you think it'll take to handle a message.
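If you'd like to check the arithmetic by hand, here's a simplified sketch of the same formula (average plus three standard deviations) on plain Double values measured in seconds. The `expected` function and the sample numbers are assumptions for illustration; it isn't the function above, which works on CycleDuration values.

```haskell
-- Expected duration = average + 3 * (population) standard deviation,
-- falling back to the estimate when there are no observations.
expected :: Double -> [Double] -> Double
expected estimate [] = estimate
expected _ durations = avg + 3 * stdDev
  where
    l = fromIntegral (length durations)
    avg = sum durations / l
    stdDev = sqrt (sum [(x - avg) ** 2 | x <- durations] / l)

-- No observations yet: the result is simply the wild guess.
noData :: Double
noData = expected 2 []

-- Three observed cycles of 1, 2, and 3 seconds:
-- avg = 2 and stdDev = sqrt (2 / 3), so the result is roughly 4.45 seconds.
threeCycles :: Double
threeCycles = expected 2 [1, 2, 3]
```

Adding three standard deviations makes the estimate deliberately pessimistic, which is what you want when the cost of guessing too low is running past the deadline.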

With this helper function, you can now write a small PollingProgram that decides whether or not to poll:

    shouldPoll :: NominalDiffTime
               -> UTCTime
               -> [CycleDuration]
               -> PollingProgram msg Bool
    shouldPoll estimatedDuration stopBefore statistics = do
      let expectedHandleDuration =
            calculateExpectedDuration estimatedDuration statistics
      now <- currentTime
      return $ expectedHandleDuration `addUTCTime` now < stopBefore

Notice that the shouldPoll function looks similar to shouldIdle. As an extra initial step, it first calculates expectedHandleDuration using the above calculateExpectedDuration function. With that, it follows the same two steps as shouldIdle.

This function is also pure, because it returns an AST. While it looks impure, it's not, because it doesn't actually do anything.

Transitions #

Those are all the building blocks required to write the state transitions. In order to break down the problem in manageable chunks, you can write a transition function for each state. Such a function would return the next state, given a particular input state.

While it'd be intuitive to begin with the ready state, let's instead start with the simplest transition. In the end state, nothing should happen, so the transition is a one-liner:

    transitionFromStopped :: Monad m => [CycleDuration] -> m (PollingState msg)
    transitionFromStopped statistics = return $ Stopped statistics

Once stopped, the program stays in the Stopped state. This function simply takes a list of CycleDuration values and elevates them to a monad type. Notice that the return value isn't specifically a PollingProgram, but any monad. Since PollingProgram is a monad, that'll work too, though.

Slightly more complicated than transitionFromStopped is the transition from the received state. There's no branching in that case; simply handle the message, measure how long it took, add the observed duration to the statistics, and transition back to ready:

    transitionFromReceived :: [CycleDuration]
                           -> PollDuration
                           -> msg
                           -> PollingProgram msg (PollingState msg)
    transitionFromReceived statistics pd msg = do
      hd <- handle msg
      return $ Ready (CycleDuration pd hd : statistics)

Again, this looks impure, but the return type is PollingProgram msg (PollingState msg), indicating that the return value is an AST. As is not uncommon in Haskell, the type declaration is larger than the implementation.

Things get slightly more interesting in the no message state. Here you get to use the above shouldIdle support function:

    transitionFromNoMessage :: IdleDuration
                            -> UTCTime
                            -> [CycleDuration]
                            -> PollingProgram msg (PollingState msg)
    transitionFromNoMessage d stopBefore statistics = do
      b <- shouldIdle d stopBefore
      if b
        then idle d >> return (Ready statistics)
        else return $ Stopped statistics

The first step in transitionFromNoMessage is calling shouldIdle. Thanks to Haskell's do notation, the b value is a simple Bool value that you can use to branch. If b is True, then first call idle and then return to the Ready state; otherwise, exit to the Stopped state.

Notice how PollingProgram values are composable. For instance, shouldIdle defines a small PollingProgram that can be (re)used in a bigger program, such as in transitionFromNoMessage.

Finally, from the ready state, the program can transition to three other states, so this is the most complex transition:

    transitionFromReady :: NominalDiffTime
                        -> UTCTime
                        -> [CycleDuration]
                        -> PollingProgram msg (PollingState msg)
    transitionFromReady estimatedDuration stopBefore statistics = do
      b <- shouldPoll estimatedDuration stopBefore statistics
      if b
        then do
          pollResult <- poll
          case pollResult of
            (Just msg, pd) -> return $ ReceivedMessage statistics pd msg
            (Nothing , pd) -> return $ NoMessage statistics pd
        else return $ Stopped statistics

Like transitionFromNoMessage, the transitionFromReady function first calls a supporting function (this time shouldPoll) in order to make a decision. If b is False, the next state is Stopped; otherwise, the program moves on to the next step.

The program polls for a message using the poll helper function defined above. While poll is a PollingProgram msg (Maybe msg, PollDuration) value, thanks to do notation, pollResult is a (Maybe msg, PollDuration) value. Matching on that value requires you to handle two separate cases: If a message was received (Just msg), then return a ReceivedMessage state with the message. Otherwise (Nothing), return a NoMessage state.

With those four functions you can now define a function that can transition from any input state:

    transition :: NominalDiffTime
               -> IdleDuration
               -> UTCTime
               -> PollingState msg
               -> PollingProgram msg (PollingState msg)
    transition estimatedDuration idleDuration stopBefore state =
      case state of
        Ready stats -> transitionFromReady estimatedDuration stopBefore stats
        ReceivedMessage stats pd msg -> transitionFromReceived stats pd msg
        NoMessage stats _ -> transitionFromNoMessage idleDuration stopBefore stats
        Stopped stats -> transitionFromStopped stats

The transition function simply pattern-matches on the input state and delegates to each of the four above transition functions.

A short philosophical interlude #

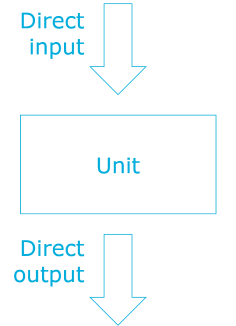

All code so far has been pure, although it may not look that way. At this stage, it may be reasonable to pause and consider: what's the point, even?

After all, when interpreted, a PollingProgram can (and, in reality, almost certainly will) have impure behaviour. If we create an entire executable upon this abstraction, then we've essentially developed a big program with impure behaviour...

Indeed we have, but the alternative would have been to write it all in the context of IO. If you'd done that, then you'd allow any non-deterministic, side-effecty behaviour anywhere in your program. At least with a PollingProgram, any reader will quickly learn that only a maximum of four impure operations can happen. In other words, you've managed to control and restrict the impurity to exactly those interactions you want to model.

Not only that, but the type of impurity is immediately visible as part of a value's type. In a later article, you'll see how different impure interaction APIs can be composed.

Interpretation #

At this point, you have a program in the form of an AST. How do you execute it?

You write an interpreter:

    interpret :: PollingProgram Message a -> IO a
    interpret program =
      case runFree program of
        Pure r -> return r
        Free (CurrentTime next) -> getCurrentTime >>= interpret . next
        Free (Poll next) -> pollImp >>= interpret . next
        Free (Handle msg next) -> handleImp msg >>= interpret . next
        Free (Idle d next) -> idleImp d >>= interpret . next

When you turn a functor into a monad using the Free constructor (see above), your functor is wrapped in a general-purpose sum type with two cases: Pure and Free. Your functor is always contained in the Free case, whereas Pure is the escape hatch. This is where you return the value of the entire computation.

An interpreter must match both Pure and Free. Pure is easy, because you simply return the result value.

In the Free case, you'll need to match each of the four cases of PollingInstruction. In all four cases, you invoke an impure implementation function, pass its return value to next, and finally recursively invoke interpret with the value returned by next.

Three of the implementations are details that aren't of importance here, but if you want to review them, the entire source code for this article is available as a gist. The fourth implementation is the built-in getCurrentTime function. They are all impure; all return IO values. This also implies that the return type of the entire interpret function is IO a.
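The gist has the actual implementations. Purely to illustrate the contract of the Idle interaction (suspend for the requested duration, and report the time actually spent), here's a hedged sketch of what such an implementation might look like. The name `idleSketch` is hypothetical, and this isn't the gist's idleImp.

```haskell
import Control.Concurrent (threadDelay)
import Data.Time.Clock (NominalDiffTime, diffUTCTime, getCurrentTime)

newtype IdleDuration = IdleDuration NominalDiffTime deriving (Eq, Show)

-- Hypothetical implementation: sleep for (at least) the requested duration,
-- then report how long the suspension actually lasted.
idleSketch :: IdleDuration -> IO IdleDuration
idleSketch (IdleDuration d) = do
  started <- getCurrentTime
  threadDelay (round (d * 1000000)) -- threadDelay takes microseconds
  stopped <- getCurrentTime
  return $ IdleDuration (diffUTCTime stopped started)
```

The value it returns comes from the system clock, so it'll typically be a little longer than the requested duration.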

This particular interpreter is impure, but nothing prevents you from writing a pure interpreter, for example for use in unit testing.
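Here's a sketch of what such a pure interpreter could look like. To keep the example self-contained, it uses a simplified, hand-rolled Free type instead of the library's, and it repeats the instruction set and shouldIdle from above. The names `interpretPure` and `testNow`, as well as the canned answers, are assumptions that I've made for illustration.

```haskell
import Data.Time (NominalDiffTime, UTCTime (..), addUTCTime, fromGregorian)

newtype PollDuration = PollDuration NominalDiffTime deriving (Eq, Show)
newtype IdleDuration = IdleDuration NominalDiffTime deriving (Eq, Show)
newtype HandleDuration = HandleDuration NominalDiffTime deriving (Eq, Show)

data PollingInstruction msg next =
    CurrentTime (UTCTime -> next)
  | Poll ((Maybe msg, PollDuration) -> next)
  | Handle msg (HandleDuration -> next)
  | Idle IdleDuration (IdleDuration -> next)

instance Functor (PollingInstruction msg) where
  fmap f (CurrentTime next) = CurrentTime (f . next)
  fmap f (Poll next)        = Poll (f . next)
  fmap f (Handle msg next)  = Handle msg (f . next)
  fmap f (Idle d next)      = Idle d (f . next)

-- Simplified stand-in for the Free type from the 'free' package.
data Free f a = Pure a | Free (f (Free f a))

instance Functor f => Functor (Free f) where
  fmap g (Pure a)  = Pure (g a)
  fmap g (Free fa) = Free (fmap (fmap g) fa)

instance Functor f => Applicative (Free f) where
  pure = Pure
  Pure g  <*> x = fmap g x
  Free fg <*> x = Free (fmap (<*> x) fg)

instance Functor f => Monad (Free f) where
  Pure a  >>= k = k a
  Free fa >>= k = Free (fmap (>>= k) fa)

type PollingProgram msg = Free (PollingInstruction msg)

-- Equivalent to liftF (CurrentTime id) for this simplified Free type.
currentTime :: PollingProgram msg UTCTime
currentTime = Free (CurrentTime Pure)

shouldIdle :: IdleDuration -> UTCTime -> PollingProgram msg Bool
shouldIdle (IdleDuration d) stopBefore = do
  now <- currentTime
  return $ d `addUTCTime` now < stopBefore

-- Pure interpreter: the clock is frozen at 'now', polling never finds a
-- message, handling takes no time, and idling takes exactly as long as
-- requested.
interpretPure :: UTCTime -> PollingProgram msg a -> a
interpretPure _ (Pure r) = r
interpretPure now (Free (CurrentTime next)) = interpretPure now (next now)
interpretPure now (Free (Poll next)) =
  interpretPure now (next (Nothing, PollDuration 0))
interpretPure now (Free (Handle _ next)) =
  interpretPure now (next (HandleDuration 0))
interpretPure now (Free (Idle d next)) = interpretPure now (next d)

-- Fixed test clock: midnight on an arbitrary date.
testNow :: UTCTime
testNow = UTCTime (fromGregorian 2017 6 27) 0
```

With the clock frozen, shouldIdle becomes entirely deterministic: `interpretPure testNow (shouldIdle (IdleDuration 5) (addUTCTime 60 testNow))` is True, whereas replacing 60 with 3 makes it False.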

Execution #

You're almost done. You have a function that returns a new state for any given input state, as well as an interpreter. You need a function that can repeat this in a loop until it reaches the Stopped state:

    run :: NominalDiffTime
        -> IdleDuration
        -> UTCTime
        -> PollingState Message
        -> IO (PollingState Message)
    run estimatedDuration idleDuration stopBefore state = do
      ns <- interpret $ transition estimatedDuration idleDuration stopBefore state
      case ns of
        Stopped _ -> return ns
        _ -> run estimatedDuration idleDuration stopBefore ns

This recursive function calls transition with the input state. You may recall that transition returns a PollingProgram msg (PollingState msg) value. Passing this value to interpret returns an IO (PollingState Message) value, and because of the do notation, the new state (ns) is a PollingState Message value.

You can now pattern match on ns. If it's a Stopped value, you return the value. Otherwise, you recursively call run once more.

The run function keeps doing this until it reaches the Stopped state.

Finally, then, you can write the entry point for the program:

    main :: IO ()
    main = do
      timeAtEntry <- getCurrentTime
      let estimatedDuration = 2
      let idleDuration = IdleDuration 5
      let stopBefore = addUTCTime 60 timeAtEntry
      s <- run estimatedDuration idleDuration stopBefore $ Ready []
      timeAtExit <- getCurrentTime
      putStrLn $ "Elapsed time: " ++ show (diffUTCTime timeAtExit timeAtEntry)
      putStrLn $ printf "%d message(s) handled." $ report s

It defines the initial input parameters:

- My wild guess about the handle duration is 2 seconds

- I'd like the idle duration to be 5 seconds

- The program should run for 60 seconds

The initial state is Ready []. These are all the arguments you need to call run.

Once run returns, you can print the number of messages handled using a (trivial) report function that I haven't shown (but which is available in the gist).

If you run the program, it'll produce output similar to this:

    Polling
    Handling
    Polling
    Handling
    Polling
    Handling
    Polling
    Sleeping
    Polling
    Handling
    Polling
    Sleeping
    Polling
    Handling
    Polling
    Handling
    Polling
    Sleeping
    Polling
    Sleeping
    Polling
    Sleeping
    Polling
    Sleeping
    Polling
    Sleeping
    Polling
    Handling
    Polling
    Handling
    Polling
    Handling
    Polling
    Handling
    Polling
    Sleeping
    Polling
    Elapsed time: 56.6835022s
    10 message(s) handled.

It does, indeed, exit before 60 seconds have elapsed.

Summary #

You can model long-running interactions with an Abstract Syntax Tree. Without do notation, writing programs as 'raw' ASTs would be cumbersome, but turning the AST into a (free) monad makes it all quite palatable.

Haskell's sophisticated type system makes this a fairly low-hanging fruit, once you understand how to do it. You can also port this type of design to F#, although, as you shall see next, more boilerplate is required.

Next: Pure times in F#.

Pure times

How to interact with the system clock using strict functional programming.

A couple of years ago, I published an article called Good times with F#. Unfortunately, that article never lived up to my expectations. Not that I don't have a good time with F# (I do), but the article introduced an attempt to model execution durations of operations in a functional manner. The article introduced a Timed<'a> generic type that I had high hopes for.

Later, I published a Pluralsight course called Type-Driven Development with F#, in which I used Timed<'a> to implement a Polling Consumer. It's a good course that teaches you how to let F#'s type system give you rapid feedback. You can read a few articles that highlight the important parts of the course.

There's a problem with the implementation, though. It's not functional.

It's nice F# code, but F# is this friendly, forgiving, multi-paradigmatic language that enables you to get real work done. If you want to do this using partial application as a replacement for Dependency Injection, it'll let you. It is, however, not functional.

Consider, as an example, this function:

    // (Timed<TimeSpan list> -> bool) -> (unit -> Timed<'a>) -> Timed<TimeSpan list>
    // -> State
    let transitionFromNoMessage shouldIdle idle nm =
        if shouldIdle nm
        then idle () |> Untimed.withResult nm.Result |> ReadyState
        else StoppedState nm.Result

The idle function has the type unit -> Timed<'a>. This can't possibly be a pure function, since a deterministic function can't produce a value from nothing when it doesn't know the type of the value. (In F#, this is technically not true, since we could return null for all reference types, and 'zero' for all value types, but even so, it should be clear that we can't produce any useful return value in a deterministic manner.)

The same argument applies, in weaker form, to the shouldIdle function. While it is possible to write more than one pure function with the type Timed<TimeSpan list> -> bool, the intent is that it should look at the time statistics and the current time, and decide whether or not it's 'safe' to poll again. Getting the current time from the system clock is a non-deterministic operation.

Ever since I discovered that Dependency Injection is impossible in functional programming, I knew that I had to return to the Polling Consumer example and show how to implement it in a truly functional style. In order to be sure that I don't accidentally call an impure function from a 'pure' function, I'll first rewrite the Polling Consumer in Haskell, and afterwards translate the Haskell code to F#. When reading, you can skip the Haskell article and go directly to the F# article, or vice versa, if you like.

Next: Pure times in Haskell.

Fractal trees with PureScript

A fractal tree drawn with PureScript

Last week, I attended Mathias Brandewinder's F# (and Clojure) dojo in Copenhagen, and had great fun drawing a fractal tree in F# together with other attendees. Afterwards, I started thinking that it'd be fairly easy to port the F# code to Haskell, but then I reconsidered. The combination of Haskell, Windows, and drawing sounded intimidating. This seemed a good opportunity to take PureScript for a spin, because that would enable me to draw the tree on an HTML canvas.

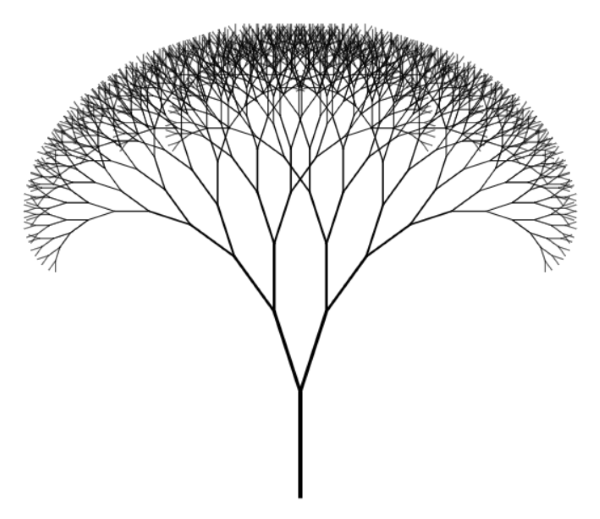

In case you're wondering, a fractal tree is simply a tree that branches infinitely in (I suppose) a deterministic fashion. Here's an example of the output of the code of this article:

This is my first attempt at PureScript, and I think I spent between five and ten hours in total. Most of them I used to figure out how to install PureScript, how to set up a development environment, and so on. All in all I found the process pleasing.

While it's a separate, independent language, PureScript is clearly a descendant of Haskell, and the syntax is similar.

Separating data from effects #

In functional programming, you routinely separate data from effects. Instead of trying to both draw and calculate branches of a tree in a single operation, you figure out how to first define a fractal tree as data, and then subsequently you can draw it.

A generic binary tree is a staple of functional programming. Here's one way to do it:

data Tree a = Leaf a | Node a (Tree a) (Tree a)

Such a tree is either a leaf node with a generically typed value, or an (intermediate) node with a value and two branches, which are themselves (sub)trees.

In a sense, this definition is an approximation, because a 'real' fractal tree has no leaves. In Haskell you can easily define infinite trees, because Haskell is lazily evaluated. PureScript, on the other hand, is eagerly evaluated, so infinite recursion would require jumping through some hoops, and I don't think it's important in this exercise.
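For comparison, here's a small Haskell sketch of such an infinite tree. It isn't part of the PureScript code in this article; the numbering scheme and the `prune` and `values` helpers are only there for illustration.

```haskell
data Tree a = Leaf a | Node a (Tree a) (Tree a)

-- An infinite tree: every node has two children, forever. Because of lazy
-- evaluation, nothing is computed until a consumer demands it.
infiniteTree :: Tree Integer
infiniteTree = go 1
  where
    go n = Node n (go (2 * n)) (go (2 * n + 1))

-- Cut the tree off at a given depth, turning the frontier into leaves.
prune :: Int -> Tree a -> Tree a
prune _ (Leaf x) = Leaf x
prune depth (Node x l r)
  | depth <= 0 = Leaf x
  | otherwise  = Node x (prune (depth - 1) l) (prune (depth - 1) r)

-- Collect all values of a (finite) tree in pre-order.
values :: Tree a -> [a]
values (Leaf x) = [x]
values (Node x l r) = x : values l ++ values r
```

Evaluating `values (prune 2 infiniteTree)` terminates and produces `[1,2,4,5,3,6,7]`, even though infiniteTree itself never ends.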

While the above tree type can contain values of any type, in this exercise, it should contain line segments. One way to do this is to define a Line record type:

    data Line = Line {
      x :: Number,
      y :: Number,
      angle :: Number,
      length :: Number,
      width :: Number }

This is a type with five labelled values, all of them numbers. x and y are coordinates for the origin of the line, angle defines the angle (measured in radians) from the origin, and length the length of the line. In a similarly obvious vein, width denotes the width of the line, although this data element has no impact on the calculation of the tree. It's purely a display concern.

Given the first four numbers in a Line value, you can calculate the endpoint of a line:

    endpoint :: forall r.
      { x :: Number
      , y :: Number
      , angle :: Number
      , length :: Number
      | r }
      -> Tuple Number Number
    endpoint line =
      -- Flip the y value because Canvas coordinate system points down from upper
      -- left corner
      Tuple
        (line.x + line.length * cos line.angle)
        (-(-line.y + line.length * sin line.angle))

This may look more intimidating than it really is. The first seven lines are simply the (optional) type declaration; ignore that for a minute. The function itself is a one-liner, although I've formatted it on several lines in order to stay within an 80-character line width. It simply performs a bit of trigonometry in order to find the endpoint of a line with an origin, angle, and length. As the code comment states, it negates the y value because the HTML canvas coordinate system points down instead of up (larger y values are further towards the bottom of the screen than smaller values).

The function calculates a new set of coordinates for the endpoint, and returns them as a tuple. In PureScript, tuples are explicit and created with the Tuple data constructor.
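To check the trigonometry with concrete numbers, here's a Haskell transcription of the same calculation. It's a sketch for illustration only: a plain record with made-up field names stands in for the PureScript record, and an ordinary Haskell tuple replaces Tuple.

```haskell
-- A plain record stands in for the PureScript record argument.
data Line = Line
  { lineX :: Double, lineY :: Double, lineAngle :: Double, lineLength :: Double }

-- Same arithmetic as the PureScript endpoint function, including the
-- negation that flips the y axis for the canvas coordinate system.
endpoint :: Line -> (Double, Double)
endpoint line =
  ( lineX line + lineLength line * cos (lineAngle line)
  , negate (negate (lineY line) + lineLength line * sin (lineAngle line)) )

-- A line of length 10 starting at (100, 200) and pointing 'up' (pi / 2).
trunk :: Line
trunk = Line { lineX = 100, lineY = 200, lineAngle = pi / 2, lineLength = 10 }
```

Here, `endpoint trunk` is approximately (100, 190). The y value has decreased, which on a canvas means that the line points towards the top of the screen.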

In general, as far as I can tell, you have less need of tuples in PureScript, because instead, you have row type polymorphism. This is still a new concept to me, but as far as I can tell, it's a sort of static duck typing. You can see it in the type declaration of the endpoint function. The function takes a line argument, the type of which is any record type that contains x, y, angle, and length labels of the Number type. For instance, as you'll soon see, you can pass a Line value to the endpoint function.

Creating branches #

In a fractal tree, you calculate two branches for any given branch. Typically, in order to draw a pretty picture, you make the sub-branches smaller than the parent branch. You also incline each branch at an angle from the parent branch. While you can hard-code the values for these operations, you can also pass them as arguments. In order to prevent an explosion of primitive function arguments, I collected all such parameters in a single data type:

    data FractalParameters = FractalParameters {
      leftAngle :: Number,
      rightAngle :: Number,
      shrinkFactor :: Number }

This is a record type similar to the Line type you've already seen.

When you have a FractalParameters value and a (parent) line, you can calculate its branches:

    createBranches :: FractalParameters -> Line -> Tuple Line Line
    createBranches (FractalParameters p) (Line line) =
      Tuple left right
      where
        Tuple x y = endpoint line
        left = Line {
          x: x,
          y: y,
          angle: pi * (line.angle / pi + p.leftAngle),
          length: (line.length * p.shrinkFactor),
          width: (line.width * p.shrinkFactor) }
        right = Line {
          x: x,
          y: y,
          angle: pi * (line.angle / pi - p.rightAngle),
          length: (line.length * p.shrinkFactor),
          width: (line.width * p.shrinkFactor) }

The createBranches function returns a tuple of Line values, one for the left branch, and one for the right branch. First, it calls endpoint with line. Notice that line is a Line value, and because Line defines x, y, angle, and length labels, it can be used as an input argument. This type-checks because of row type polymorphism.

Given the endpoint of the parent line, createBranches then creates two new Line values (left and right) with that endpoint as their origins. Both of these values are modified with the FractalParameters argument, so that they branch off to the left and the right, and also shrink in an aesthetically pleasing manner.
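To make the arithmetic concrete, here's a rough Python sketch of the branching step. The Line dataclass and the function signatures are assumptions made for illustration; only the formulas mirror the PureScript code. Note that, given those formulas, the left and right angles are expressed as fractions of pi, so 0.1 means a turn of 0.1 * pi radians.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Line:
    """Hypothetical Python stand-in for the article's Line record."""
    x: float
    y: float
    angle: float
    length: float
    width: float


def endpoint(line):
    # Canvas y values grow downwards, hence the subtraction.
    return (line.x + line.length * math.cos(line.angle),
            line.y - line.length * math.sin(line.angle))


def create_branches(left_angle, right_angle, shrink_factor, line):
    """Return the (left, right) branches growing from the end of line."""
    x, y = endpoint(line)

    def branch(turn):
        # pi * (angle / pi + turn) is the same as angle + turn * pi.
        return Line(x, y,
                    math.pi * (line.angle / math.pi + turn),
                    line.length * shrink_factor,
                    line.width * shrink_factor)

    return branch(left_angle), branch(-right_angle)


trunk = Line(300.0, 600.0, math.pi / 2.0, 100.0, 4.0)
left, right = create_branches(0.1, 0.1, 0.8, trunk)
```

Both branches start where the trunk ends, are 80% as long and wide as the trunk, and lean 0.1 * pi radians to either side of it.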

Creating a tree #

Now that you can calculate the branches of a line, you can create a tree using recursion:

    createTree :: Int -> FractalParameters -> Line -> Tree Line
    createTree depth p line =
      if depth <= 0
      then Leaf line
      else
        let Tuple leftLine rightLine = createBranches p line
            left  = createTree (depth - 1) p leftLine
            right = createTree (depth - 1) p rightLine
        in Node line left right

The createTree function takes a depth argument, which specifies the depth (or is it the height?) of the tree. The reason I called it depth is because createTree is recursive, and depth controls the depth of the recursion. If depth is zero or less, the function returns a Leaf node containing the input line.

Otherwise, it calls createBranches with the input line, and recursively calls createTree for each of these branches, but with a decremented depth. It then returns a Node containing the input line and the two sub-trees left and right.

This implementation isn't tail-recursive, but the above image was generated with a recursion depth of only 10, so running out of stack space wasn't my biggest concern.
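To get a feel for how much work a depth of 10 implies, here's a small Python sketch of the same recursion shape. It's deliberately generic: the tuple-based tree encoding and the branch parameter are assumptions for illustration, not part of the PureScript code.

```python
def create_tree(depth, branch, value):
    """Build a binary tree by recursively branching from value.

    branch is any function that turns a value into a (left, right) pair.
    Trees are encoded as ("leaf", value) or ("node", value, left, right).
    """
    if depth <= 0:
        return ("leaf", value)
    left_value, right_value = branch(value)
    return ("node", value,
            create_tree(depth - 1, branch, left_value),
            create_tree(depth - 1, branch, right_value))


def count_nodes(tree):
    """Count every node and leaf, which equals the number of lines drawn."""
    if tree[0] == "leaf":
        return 1
    return 1 + count_nodes(tree[2]) + count_nodes(tree[3])


def shrink(length):
    # Stand-in for createBranches: both sub-branches are 80% as long.
    return (length * 0.8, length * 0.8)


tree = create_tree(10, shrink, 100.0)
line_count = count_nodes(tree)
```

A depth of 10 produces 2,047 values in total, but the call stack is never more than eleven frames deep, which is why the lack of tail recursion is harmless here.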

Drawing the tree #

With the createTree function you can create a fractal tree, but it's no fun if you can't draw it. You can use the Graphics.Canvas module in order to draw on an HTML canvas. First, here's how to draw a single line:

    drawLine :: Context2D -> Line -> Eff (canvas :: CANVAS) Unit
    drawLine ctx (Line line) = do
      let Tuple x' y' = endpoint line
      void $ strokePath ctx $ do
        void $ moveTo ctx line.x line.y
        void $ setLineWidth line.width ctx
        void $ lineTo ctx x' y'
        closePath ctx

While hardly unmanageable, I was surprised that I wasn't able to find a pre-defined function that would let me draw a line. Perhaps I was looking in the wrong place.

With this helper function, you can now draw a tree using pattern matching:

    drawTree :: Context2D -> Tree Line -> Eff (canvas :: CANVAS) Unit
    drawTree ctx (Leaf line) = drawLine ctx line
    drawTree ctx (Node line left right) = do
      drawLine ctx line
      drawTree ctx left
      drawTree ctx right

If the input tree is a Leaf value, the first line matches and the function simply draws the line, using the drawLine function.

When the input tree is a Node value, the function first draws the line associated with that node, and then recursively calls itself with the left and right sub-trees.

Execution #

The drawTree function enables you to draw using a Context2D value, which you can create from an HTML canvas:

    mcanvas <- getCanvasElementById "canvas"
    let canvas = unsafePartial (fromJust mcanvas)
    ctx <- getContext2D canvas

From where does the "canvas" element come? Ultimately, PureScript compiles to JavaScript, and you can put the compiled script in an HTML file together with a canvas element:

<body> <canvas id="canvas" width="800" height="800"></canvas> <script src="index.js" type="text/javascript"></script> </body>

Once you have a Context2D value, you can draw a tree. Here's the whole entry point, including the above canvas-finding code:

    main :: Eff (canvas :: CANVAS) Unit
    main = do
      mcanvas <- getCanvasElementById "canvas"
      let canvas = unsafePartial (fromJust mcanvas)
      ctx <- getContext2D canvas

      let trunk = Line
            { x: 300.0, y: 600.0, angle: (pi / 2.0), length: 100.0, width: 4.0 }
      let p = FractalParameters
            { leftAngle: 0.1, rightAngle: 0.1, shrinkFactor: 0.8 }
      let tree = createTree 10 p trunk
      drawTree ctx tree

After it finds the Context2D value, it hard-codes a trunk Line and a set of FractalParameters. From these, it creates a tree of depth 10 and draws the tree shown at the beginning of this article.

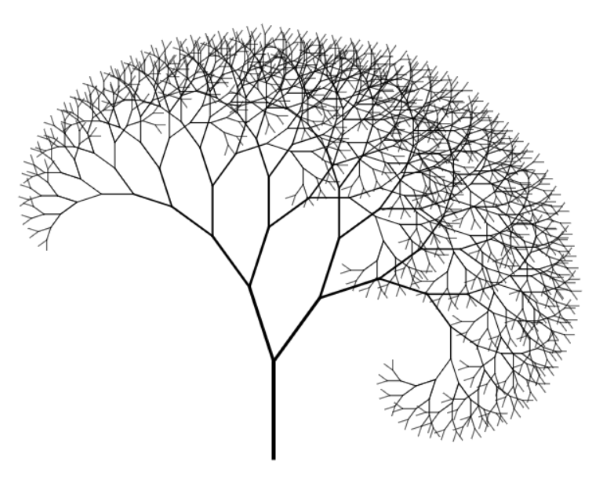

You can fiddle with the parameters to your liking. For example, you can make the right angle wider than the left angle:

let p = FractalParameters { leftAngle: 0.1, rightAngle: 0.2, shrinkFactor: 0.8 }

This produces an asymmetric tree:

In order to compile the code, I used this command:

$ pulp browserify -t html/index.js

This compiles the PureScript code to a single index.js file, which is output to the html directory. This directory also contains the HTML file with the canvas.

You can find the entire PureScript file in this Gist.

Summary #

It was fun to try PureScript. I've been staying away from JavaScript-based development for many years now, but if I ever have to do some client-side development, I may consider it. So far, I've found that PureScript seems viable for drawing. How good it is if you need to interact with 'normal' web-pages or SPAs, I don't know (yet).

If you have some experience with Haskell, it looks like it's easy to get started with PureScript.

Using Polly with F# async workflows

How to use Polly as a Circuit Breaker in F# async workflows.

Release It! describes a stability design pattern called Circuit Breaker, which is used to fail fast if a downstream service is experiencing problems.

I recently had to add a Circuit Breaker to an F# async workflow, and although Circuit Breaker isn't that difficult to implement (my book contains an example in C#), I found it most prudent to use an existing implementation. Polly seemed a good choice.

In my F# code base, I was already working with an 'abstraction' of the type HttpRequestMessage -> Async<HttpResponseMessage>: given an HttpClient called client, the implementation is as simple as client.SendAsync >> Async.AwaitTask. Since SendAsync can throw HttpRequestException or WebException, I wanted to define a Circuit Breaker policy for these two exception types.

While Polly supports policies for Task-based APIs, it doesn't automatically work with F# async workflows. The problem is that whenever you convert an async workflow into a Task (using Async.StartAsTask), or a Task into an async workflow (using Async.AwaitTask), any exceptions thrown will end up buried within an AggregateException. In order to dig them out again, I first had to write this function:

    // Exception -> bool
    let rec private isNestedHttpException (e : Exception) =
        match e with
        | :? AggregateException as ae ->
            ae.InnerException :: Seq.toList ae.InnerExceptions
            |> Seq.exists isNestedHttpException
        | :? HttpRequestException -> true
        | :? WebException -> true
        | _ -> false

This function recursively searches through all inner exceptions of an AggregateException and returns true if it finds one of the exception types I'm interested in handling; otherwise, it returns false.
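The shape of that recursive search doesn't depend on .NET. As a language-neutral illustration, here's a Python sketch in which every exception class is a hypothetical stand-in (AggregateError for AggregateException, and so on); none of them come from a real library.

```python
class AggregateError(Exception):
    """Hypothetical stand-in for .NET's AggregateException."""

    def __init__(self, *inner):
        super().__init__("One or more errors occurred.")
        self.inner = list(inner)


class HttpRequestError(Exception):
    """Hypothetical stand-in for HttpRequestException."""


class WebError(Exception):
    """Hypothetical stand-in for WebException."""


def is_nested_http_error(e):
    """Return True if e is, or wraps at any depth, an HTTP-related error."""
    if isinstance(e, AggregateError):
        # Recurse into every wrapped exception, however deeply nested.
        return any(is_nested_http_error(inner) for inner in e.inner)
    return isinstance(e, (HttpRequestError, WebError))


wrapped = AggregateError(AggregateError(ValueError("noise"), WebError("timeout")))
unrelated = AggregateError(ValueError("noise"))
```

Here, is_nested_http_error finds the WebError two levels down in wrapped, while unrelated yields False because nothing inside it is HTTP-related.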

This predicate enabled me to write the Polly policy I needed:

    open Polly

    // int -> TimeSpan -> CircuitBreaker.CircuitBreakerPolicy
    let createPolicy exceptionsAllowedBeforeBreaking durationOfBreak =
        Policy
            .Handle<AggregateException>(fun ae -> isNestedHttpException ae)
            .CircuitBreakerAsync(exceptionsAllowedBeforeBreaking, durationOfBreak)

Since Polly exposes an object-oriented API, I wanted a curried alternative, so I also wrote this curried helper function:

    // Policy -> ('a -> Async<'b>) -> 'a -> Async<'b>
    let private execute (policy : Policy) f req =
        policy.ExecuteAsync(fun () -> f req |> Async.StartAsTask) |> Async.AwaitTask

The execute function executes any function of the type 'a -> Async<'b> with a Polly policy. As you can see, there's some back-and-forth between Tasks and async workflows, so this is probably not the most efficient Circuit Breaker ever configured, but I wagered that since the underlying operation was going to involve an HTTP request and response, the overhead would be insignificant. No one has complained yet.

When Polly opens the Circuit Breaker, it throws an exception of the type BrokenCircuitException. Again because of all the marshalling, this also gets wrapped within an AggregateException, so I had to write another function to unwrap it:

    // Exception -> bool
    let rec private isNestedCircuitBreakerException (e : Exception) =
        match e with
        | :? AggregateException as ae ->
            ae.InnerException :: Seq.toList ae.InnerExceptions
            |> Seq.exists isNestedCircuitBreakerException
        | :? CircuitBreaker.BrokenCircuitException -> true
        | _ -> false

The isNestedCircuitBreakerException is similar to isNestedHttpException, so it'd be tempting to refactor. I decided, however, to rely on the rule of three and leave both functions as they were.

In my F# code I prefer to handle application errors using Either values instead of relying on exceptions, so I wanted to translate any BrokenCircuitException to a particular application error. With the above isNestedCircuitBreakerException predicate, this was now possible with a try/with expression:

    // Policy -> ('a -> Async<'b>) -> 'a -> Async<Result<'b, BoundaryFailure>>
    let sendUsingPolicy policy send req =
        async {
            try
                let! resp = req |> execute policy send
                return Result.succeed resp
            with e when isNestedCircuitBreakerException e ->
                return Result.fail Controllers.StabilityFailure }

This function takes a policy, a send function that actually sends the request, and the request to send. If all goes well, the response is lifted into a Success case and returned. If the Circuit Breaker is open, a StabilityFailure value is returned instead.

Since the with expression uses an exception filter, all other exceptions will still be thrown from this function.
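If you haven't worked with a Circuit Breaker before, the following Python sketch may help illustrate the behaviour that sendUsingPolicy relies on. It is not Polly's API, nor how Polly is implemented; the class, its parameters, and the tuple-based result values are all assumptions made for the sake of a small, synchronous example.

```python
import time


class BrokenCircuitError(Exception):
    """Raised instead of calling the wrapped function while the circuit is open."""


class CircuitBreaker:
    """A deliberately tiny, hypothetical circuit breaker (not Polly)."""

    def __init__(self, failures_allowed, break_seconds, handled,
                 clock=time.monotonic):
        self.failures_allowed = failures_allowed
        self.break_seconds = break_seconds
        self.handled = handled      # tuple of exception types that count
        self.clock = clock          # injectable, so tests can control time
        self.failures = 0
        self.opened_at = None

    def execute(self, f, req):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.break_seconds:
                raise BrokenCircuitError()   # fail fast; don't call f
            self.opened_at = None            # break is over; try one call
        try:
            result = f(req)
        except self.handled:
            self.failures += 1
            if self.failures >= self.failures_allowed:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def send_using_policy(breaker, send, req):
    """Translate an open circuit into a value; let other errors propagate."""
    try:
        return ("success", breaker.execute(send, req))
    except BrokenCircuitError:
        return ("failure", "StabilityFailure")
```

As in the F# function, only the open-circuit condition is turned into a failure value. Any other exception, including the handled ones that trip the breaker, still propagates to the caller.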

It might still be worthwhile to look into options for a more F#-friendly Circuit Breaker. One option would be to work with the Polly maintainers to add such an API to Polly itself. Another option would be to write a separate F# implementation.

Comments

There are two minor points I want to address:

- I think instead of the recursive search for a nested exception you can use AggregateException.Flatten() |> Seq.exists ...
- And I know that you know that a major difference between Async and Task is that a Task is typically started whereas an Async is not. So it might be irritating that calling execute already starts the execution. If you wrapped the body of execute inside another async block it would be lazy as usual, I think.

Simple holidays

A story about arriving at the simplest solution that could possibly work.

The Zen of Python states: Simple is better than complex. If I've met a programmer who disagrees with that, I'm not aware of it. It's hardly a controversial assertion, but what does 'simplicity' mean? Can you even identify a simple solution?

I often see software developers defaulting to complex solutions, because a simpler solution isn't immediately obvious. In retrospect, a simple solution often is obvious, but only once you've found it. Until then, it's elusive.

I'd like to share a story in which I arrived at a simple solution after several false starts. I hope it can be an inspiration.

Dutch holidays #

Recently, I had to write some code that takes into account Dutch holidays. (In order to address any confusion that could arise from this: No, I'm not Dutch, I'm Danish, but currently, I'm doing some work on a system targeting a market in the Netherlands.) Specifically, given a date, I had to find the latest possible Dutch bank day on or before that date.

For normal week days (Monday to Friday), it's easy, because such a date is already a bank date. In other words, in that case, you can simply return the input date. Also, in normal weeks, given a Saturday or Sunday, you should return the preceding Friday. The problem is, however, that some Fridays are holidays, and therefore not bank days.

Like many other countries, the Netherlands have complicated rules for determining official holidays. Here are some tricky parts:

- Some holidays always fall on the same date. One example is Bevrijdingsdag (Liberation Day), which always falls on May 5. This holiday celebrates a historic event (the end of World War II in Europe), so if you wanted to calculate bank holidays in the past, you'd have to figure out in which year this became a public holiday. Surely, at least, it must have been 1945 or later.

- Some holidays fall on specific week days. The most prominent example is Easter, where Goede Vrijdag (Good Friday) always (as the name implies) falls on a Friday. Which Friday exactly can be calculated using a complicated algorithm.

- One holiday (Koningsdag (King's Day)) celebrates the king's birthday. The date is determined by the currently reigning monarch's birthday, and it's called Queen's Day when the reigning monarch is a queen. Obviously, the exact date changes depending on who's king or queen, and you can't predict when it's going to change. And what will happen if the current monarch abdicates or dies before his or her birthday, but after the new monarch's birthday? Does that mean that there will be no such holiday that year? Or what about the converse? Could there be two such holidays if a monarch abdicates after his or her birthday, and the new monarch's birthday falls later the same year?
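To give an impression of what 'complicated' means for Easter, here's a Python sketch of one widely published method, often called the anonymous Gregorian algorithm (or the Meeus/Jones/Butcher algorithm). It's included only as an illustration; nothing in this article depends on it, and the function names are made up for the example.

```python
from datetime import date, timedelta


def easter_sunday(year):
    """Gregorian Easter Sunday via the anonymous Gregorian algorithm."""
    a = year % 19
    b, c = divmod(year, 100)
    d, e = divmod(b, 4)
    f = (b + 8) // 25
    g = (b - f + 1) // 3
    h = (19 * a + b - d - g + 15) % 30
    i, k = divmod(c, 4)
    el = (32 + 2 * e + 2 * i - h - k) % 7
    m = (a + 11 * h + 22 * el) // 451
    month, day = divmod(h + el - 7 * m + 114, 31)
    return date(year, month, day + 1)


def good_friday(year):
    """Good Friday is two days before Easter Sunday."""
    return easter_sunday(year) - timedelta(days=2)
```

For 2017, this yields April 16 for Easter Sunday and April 14 for Good Friday. The arithmetic is opaque, which is part of why such rules are error-prone to implement by hand.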

Figuring out if a date is a bank day, then, is what you might call an 'interesting' problem. How would you solve it? Before you read on, take a moment to consider how you'd attempt to solve the problem. If you will, you can consider the test cases immediately below to get a better sense of the problem.

Test cases #

Here's a small set of test cases that I wrote in order to describe the problem:

| Test case | Input date | Expected output |
|---|---|---|
| Monday | 2017-03-06 | 2017-03-06 |
| Tuesday | 2017-03-07 | 2017-03-07 |
| Wednesday | 2017-03-08 | 2017-03-08 |
| Thursday | 2017-03-09 | 2017-03-09 |
| Friday | 2017-03-10 | 2017-03-10 |
| Saturday | 2017-03-11 | 2017-03-10 |
| Sunday | 2017-03-12 | 2017-03-10 |
| Good Friday | 2017-04-14 | 2017-04-13 |
| Saturday after Good Friday | 2017-04-15 | 2017-04-13 |
| Sunday after Good Friday | 2017-04-16 | 2017-04-13 |
| Easter Monday | 2017-04-17 | 2017-04-13 |
| Ascension Day - Thursday | 2017-05-25 | 2017-05-24 |
| Whit Monday | 2110-05-26 | 2110-05-23 |
| Liberation Day | 9713-05-05 | 9713-05-04 |

Option: query a third-party service #

How would you solve the problem? The first solution that occurred to me was to use a third-party service. My guess is that most developers would consider this option. After all, it's essentially third-party data. The official holidays are determined by a third party, in this case the Dutch state. Surely, some Dutch official organisation would publish the list of official holidays somewhere. Perhaps, if you're lucky, there's even an on-line service you can query in order to download the list of holidays in some machine-readable format.

There are, however, problems with this alternative: if you query such a service each time you need to find an appropriate bank date, how are you going to handle network errors? What if the third-party service is (temporarily) unavailable?

Since I'm trying to figure out bank dates, you may already have guessed that I'm handling money, so it's not desirable to simply throw an exception and say that a caller would have to try again later. This could lead to loss of revenue.

Querying a third-party service every time you need to figure out a Dutch bank holiday is out of the question for that reason. It's also likely to be inefficient.

Option: cache third-party data #

Public holidays rarely change, so your next attempt could be a variation of the previous. Use third-party data, but instead of querying a third-party service every time you need the information, cache it.

The problem with caching is that you're not guaranteed that the data you seek is in the cache. At application start, caches are usually empty. You'd have to rely on making one good query to the third-party data source in order to put the data in the cache. Only if that succeeds can you use the cache. This, again, leaves you vulnerable to the normal failure modes of distributed computing. If you can't reach the third-party data source, you have nothing to put in the cache.

This can be a problem at application start, or when the cache data expires.

Using a cache reduces the risk that the data is unavailable, but it doesn't eliminate it. It also adds complexity in the form of a cache that has to be configured and managed. Granted, you can use a reusable cache library or service to minimise that cost, so it may not be a big deal. Still, when making a decision about application architecture, I think it helps to explicitly identify advantages and disadvantages.

Using a cache felt better to me, but I still wasn't happy. Too many things could go wrong.

Option: persistent cache #

An incremental improvement on the previous option would be to write the cache data to persistent storage. This takes care of the issue with the cache being empty at application start-up. You can even deal with cache expiry by continuing to use stale data if you can't reach the 'official' source of the data.

It leaves me a bit concerned, though, because if you allow the system to continue working with stale data, perhaps the application could enter a state where the data never updates. This could happen if the official data source moves, or changes format. In such a case, your application would keep trying to refresh the cache, and it would permanently fail. It would permanently run with stale data. Would you ever discover that problem?

My concern is that the application could silently fail. You could counter that by logging a warning somewhere, but that would introduce a permanent burden on the team responsible for operating the application. This isn't impossible, but it does constitute an extra complexity. This alternative still didn't feel good to me.

Option: cron #

Because I wasn't happy with any of the above alternatives, I started looking for different approaches to the problem. For a short while, I considered using a .NET implementation of cron, with a crontab file. As far as I can tell, though, there's no easy way to define Easter using cron, so I quickly abandoned that line of inquiry.

Option: Nager.Date #

I wasn't entirely done with the idea of calculating holidays on the fly. While calculating Easter is complicated, it can be done; there is a well-defined algorithm for it. Whenever I run into a general problem like this, I assume that someone has already done the required work, and this is also the case here. I quickly found an open source library called Nager.Date; I'm sure that there are other alternatives, but Nager.Date looks like it's more than good enough.

Such a library would be able to calculate all holidays for a given year, based on the algorithms embedded in it. That looked really promising.

And yet... again, I was concerned. Official holidays are, as we've already established, politically decided. Using an algorithmic approach is fundamentally wrong, because that's not really how the holidays are determined. Holidays are defined by decree; it just so happens that some of the decrees take the form of an algorithm (such as Easter).

What would happen if the Dutch state decides to add a new holiday? Or to take one away? Or when a new monarch is crowned? In order to handle such changes, we'd now have to hope that Nager.Date would be updated. We could try to make that more likely to happen by sending a pull request, but we'd still be vulnerable to a third party. What if the maintainer of Nager.Date is on vacation?

Even if you can get a change into a library like Nager.Date, how is the algorithmic approach going to deal with historic dates? If the monarch changes, you can update the library, but does it correctly handle dates in the past, where the King's Day was different?

Using an algorithm to determine a holiday seemed promising, but ultimately, I decided that I didn't like this option either.

Option: configuration file #

My main concern about using an algorithm is that it'd make it difficult to handle arbitrary changes and exceptional cases. If we'd use a configuration file, on the other hand, we could always edit the configuration file in order to add or remove holidays for a given year.

In essence, I was envisioning a configuration file that simply contained a list of holidays for each year.

That sounds fairly simple and maintainable, but from where should the data come?

You could probably download a list of official holidays for the next few years, like 2017, 2018, 2019, and so on, but the list would be finite, and probably not cover more than a few years into the future.

What if, for example, I'd only be able to find an official list that goes to 2020? What will happen, then, when our application enters 2021? To the rest of the code base, it'd look like there were no holidays in 2021.

By that time, we can expect that new official lists have been published, so a programmer could obtain such a list and update the configuration file when it's time. This, unfortunately, is easy to forget. Four years in the future, perhaps none of the original programmers are left. It's more than likely that no one will remember to do this.

Option: algorithm-generated configuration file #

The problem that the configuration data could 'run out' can be addressed by initialising the configuration file with data generated algorithmically. You could, for example, ask Nager.Date to generate all the holidays for the next many years. In fact, the year 9999 is the maximum year handled by .NET's System.DateTime, so you could ask it to generate all the holidays until 9999.

That sounds like a lot, but it's only about half a megabyte of data...

This solves the problem of 'running out' of holiday data, but still enables you to edit the holiday data when it changes in the future. For example, if the King's Day changes in 2031, you can change all the King's Day values from 2031 onward, while retaining the correct values for the previous years.

This seems promising...

Option: hard-coded holidays #

I almost decided to use the previous, configuration-based solution, and I was getting ready to invent a configuration file format, and a reader for it, and so on. Then I recalled Mike Hadlow's article about the configuration complexity clock.

I'm fairly certain that the only people who would be editing a hypothetical holiday configuration file would be programmers. In that case, why put the configuration in a proprietary format? Why deal with the hassle of reading and parsing such a file? Why not put the data in code?

That's what I decided to do.

It's not a perfect solution. It's still necessary to go and change that code file when the holiday rules change. For example, when the King's Day changes, you'd have to edit the file.

Still, it's the simplest solution I could come up with. It has no moving parts, and uses a 'best effort' approach in order to guarantee that holidays will always be present. If you can come up with a better alternative, please leave a comment.

Data generation #

Nager.Date seemed useful for generating the initial set of holidays, so I wrote a small F# script that generated the necessary C# code snippets:

    #r @"packages/Nager.Date.1.3.0/lib/net45/Nager.Date.dll"

    open System.IO
    open Nager.Date

    let formatHoliday (h : Model.PublicHoliday) =
        let y, m, d = h.Date.Year, h.Date.Month, h.Date.Day
        sprintf "new DateTime(%i, %2i, %2i), // %s/%s" y m d h.Name h.LocalName

    let holidays =
        [2017..9999]
        |> Seq.collect (fun y -> DateSystem.GetPublicHoliday (CountryCode.NL, y))
        |> Seq.map formatHoliday

    File.WriteAllLines (__SOURCE_DIRECTORY__ + "/dutch-holidays.txt", holidays)

This script simply asks Nager.Date to calculate all Dutch holidays for the years 2017 to 9999, format them as C# code snippets, and write the lines to a text file. The size of that file is 4 MB, because the auto-generated code comments also take up some space.

First implementation attempt #

The next thing I did was to copy the text from dutch-holidays.txt to a C# code file, which I had already prepared with a class and a few methods that would query my generated data. The result looked like this:

    public static class DateTimeExtensions
    {
        public static DateTimeOffset AdjustToLatestPrecedingDutchBankDay(
            this DateTimeOffset value)
        {
            var candidate = value;
            while (!(IsDutchBankDay(candidate.DateTime)))
                candidate = candidate.AddDays(-1);
            return candidate;
        }

        private static bool IsDutchBankDay(DateTime date)
        {
            if (date.DayOfWeek == DayOfWeek.Saturday)
                return false;
            if (date.DayOfWeek == DayOfWeek.Sunday)
                return false;
            if (dutchHolidays.Contains(date.Date))
                return false;
            return true;
        }

        #region Dutch holidays
        private static DateTime[] dutchHolidays =
        {
            new DateTime(2017, 1, 1), // New Year's Day/Nieuwjaarsdag
            new DateTime(2017, 4, 14), // Good Friday/Goede Vrijdag
            new DateTime(2017, 4, 17), // Easter Monday/ Pasen
            new DateTime(2017, 4, 27), // King's Day/Koningsdag
            new DateTime(2017, 5, 5), // Liberation Day/Bevrijdingsdag
            new DateTime(2017, 5, 25), // Ascension Day/Hemelvaartsdag
            new DateTime(2017, 6, 5), // Whit Monday/Pinksteren
            new DateTime(2017, 12, 25), // Christmas Day/Eerste kerstdag
            new DateTime(2017, 12, 26), // St. Stephen's Day/Tweede kerstdag
            new DateTime(2018, 1, 1), // New Year's Day/Nieuwjaarsdag
            new DateTime(2018, 3, 30), // Good Friday/Goede Vrijdag
            // Lots and lots of dates...
            new DateTime(9999, 5, 6), // Ascension Day/Hemelvaartsdag
            new DateTime(9999, 5, 17), // Whit Monday/Pinksteren
            new DateTime(9999, 12, 25), // Christmas Day/Eerste kerstdag
            new DateTime(9999, 12, 26), // St. Stephen's Day/Tweede kerstdag
        };
        #endregion
    }

My old computer isn't happy about having to compile 71,918 lines of C# in a single file, but it's doable, and as far as I can tell, Visual Studio caches the result of compilation, so as long as I don't change the file, there's little adverse effect.

Unit tests #

In order to verify that the implementation works, I wrote this parametrised test:

    public class DateTimeExtensionsTests
    {
        [Theory]
        [InlineData("2017-03-06", "2017-03-06")] // Monday
        [InlineData("2017-03-07", "2017-03-07")] // Tuesday
        [InlineData("2017-03-08", "2017-03-08")] // Wednesday
        [InlineData("2017-03-09", "2017-03-09")] // Thursday
        [InlineData("2017-03-10", "2017-03-10")] // Friday
        [InlineData("2017-03-11", "2017-03-10")] // Saturday
        [InlineData("2017-03-12", "2017-03-10")] // Sunday
        [InlineData("2017-04-14", "2017-04-13")] // Good Friday
        [InlineData("2017-04-15", "2017-04-13")] // Saturday after Good Friday
        [InlineData("2017-04-16", "2017-04-13")] // Sunday after Good Friday
        [InlineData("2017-04-17", "2017-04-13")] // Easter Monday
        [InlineData("2017-05-25", "2017-05-24")] // Ascension Day - Thursday
        [InlineData("2110-05-26", "2110-05-23")] // Whit Monday
        [InlineData("9713-05-05", "9713-05-04")] // Liberation Day
        public void AdjustToLatestPrecedingDutchBankDayReturnsCorrectResult(
            string sutS,
            string expectedS)
        {
            var sut = DateTimeOffset.Parse(sutS);
            var actual = sut.AdjustToLatestPrecedingDutchBankDay();
            Assert.Equal(DateTimeOffset.Parse(expectedS), actual);
        }
    }

All test cases pass. This works in [Theory], but unfortunately, it turns out, it doesn't work in practice.

When used in an ASP.NET Web API application, AdjustToLatestPrecedingDutchBankDay throws a StackOverflowException. It took me a while to figure out why, but it turns out that the stack size is smaller in IIS than when you run a 'normal' .NET process, such as an automated test.

System.DateTime is a value type, and as far as I can tell, it uses some stack space during initialisation. When the DateTimeExtensions class is first used, the static dutchHolidays array is initialised, and that uses enough stack space to exhaust the stack when running in IIS.

Final implementation #

The stack space problem seems to be related to DateTime initialisation. If I store a similar number of 64-bit integers in an array, it seems that there's no problem.

First, I had to modify the formatHoliday function:

    let formatHoliday (h : Model.PublicHoliday) =
        let t, y, m, d = h.Date.Ticks, h.Date.Year, h.Date.Month, h.Date.Day
        sprintf "%19iL, // %i-%02i-%02i, %s/%s" t y m d h.Name h.LocalName

This enabled me to generate a new file with C# code fragments, but now containing ticks instead of DateTime values. Copying those C# fragments into my file gave me this:

    public static class DateTimeExtensions
    {
        public static DateTimeOffset AdjustToLatestPrecedingDutchBankDay(
            this DateTimeOffset value)
        {
            var candidate = value;
            while (!(IsDutchBankDay(candidate.DateTime)))
                candidate = candidate.AddDays(-1);
            return candidate;
        }

        private static bool IsDutchBankDay(DateTime date)
        {
            if (date.DayOfWeek == DayOfWeek.Saturday)
                return false;
            if (date.DayOfWeek == DayOfWeek.Sunday)
                return false;
            if (dutchHolidays.Contains(date.Date.Ticks))
                return false;
            return true;
        }

        #region Dutch holidays
        private static long[] dutchHolidays =
        {
            636188256000000000L, // 2017-01-01, New Year's Day/Nieuwjaarsdag
            636277248000000000L, // 2017-04-14, Good Friday/Goede Vrijdag
            636279840000000000L, // 2017-04-17, Easter Monday/ Pasen
            636288480000000000L, // 2017-04-27, King's Day/Koningsdag
            636295392000000000L, // 2017-05-05, Liberation Day/Bevrijdingsdag
            636312672000000000L, // 2017-05-25, Ascension Day/Hemelvaartsdag
            636322176000000000L, // 2017-06-05, Whit Monday/Pinksteren
            636497568000000000L, // 2017-12-25, Christmas Day/Eerste kerstdag
            636498432000000000L, // 2017-12-26, St. Stephen's Day/Tweede kerstdag
            636503616000000000L, // 2018-01-01, New Year's Day/Nieuwjaarsdag
            636579648000000000L, // 2018-03-30, Good Friday/Goede Vrijdag
            // Lots and lots of dates...
            3155171616000000000L, // 9999-05-06, Ascension Day/Hemelvaartsdag
            3155181120000000000L, // 9999-05-17, Whit Monday/Pinksteren
            3155372928000000000L, // 9999-12-25, Christmas Day/Eerste kerstdag
            3155373792000000000L, // 9999-12-26, St. Stephen's Day/Tweede kerstdag
        };
        #endregion
    }

That implementation still passes all tests, and works in practice as well.
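For readers who'd like to experiment with the logic outside .NET, here's a rough Python sketch of the same approach, limited to the 2017 holidays listed above. The function names are Python-style renderings invented for this example, and the ticks helper assumes the .NET definition of ticks: 100-nanosecond intervals since midnight on 0001-01-01.

```python
from datetime import date, timedelta

TICKS_PER_DAY = 864_000_000_000  # 100-nanosecond ticks in 24 hours


def ticks(d):
    """Ticks at midnight of d, counted from 0001-01-01 (ordinal 1)."""
    return (d.toordinal() - 1) * TICKS_PER_DAY


# Only the 2017 dates from the listing above; the real array runs to 9999.
DUTCH_HOLIDAYS_2017 = frozenset(
    ticks(date(2017, month, day))
    for month, day in [(1, 1), (4, 14), (4, 17), (4, 27), (5, 5),
                       (5, 25), (6, 5), (12, 25), (12, 26)])


def is_dutch_bank_day(d):
    if d.weekday() >= 5:  # 5 is Saturday, 6 is Sunday
        return False
    return ticks(d) not in DUTCH_HOLIDAYS_2017


def adjust_to_latest_preceding_dutch_bank_day(d):
    """Walk backwards one day at a time until a bank day is found."""
    candidate = d
    while not is_dutch_bank_day(candidate):
        candidate -= timedelta(days=1)
    return candidate
```

Starting from Easter Monday 2017, the loop steps back over the holiday, the weekend, and Good Friday before it stops at Thursday, April 13, just as in the test cases.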

Conclusion #

It took me some time to find a satisfactory solution. I had more than one false start, until I ultimately arrived at the solution I've described here. I consider it simple because it's self-contained, deterministic, easy to understand, and fairly easy to maintain. I even left a comment in the code (not shown here) that described how to recreate the configuration data using the F# script shown here.

The first solution that comes into your mind may not be the simplest solution, but if you take some time to consider alternatives, you may save yourself and your colleagues some future grief.

Comments

What do you think about creating an "admin page" that would allow users to configure the bank holidays themselves (which would then be persisted in the application database)? This moves the burden of correctness to the administrators of the application, who I'm sure are highly motivated to get this right - as well as maintain it. It also removes the need for a deployment in the face of changing holidays.

For the sake of convenience, you could still "seed" the database with the values generated by your F# script

jadarnel27, thank you for writing. Your suggestion could be an option as well, but is hardly the simplest solution. In order to implement that, I'd need to add an administration web site for my application, program the user interface, connect the administration site and my original application (a REST API) to a persistent data source, write code for input validation, etcetera.

Apart from all that work, the bank holidays would have to be stored in an out-of-process data store, such as a database or NoSQL data store, because the REST API that needs this feature is running in a server farm. This adds latency to each lookup, as well as a potential error source. What should happen if the connection to the data store is broken? Additionally, such a data store should be backed up, so we'd also need to establish an operational procedure to ensure that that happens.

It was never a requirement that the owners of the application should be able to administer this themselves. It's certainly an option, but it's so much more complex than the solution outlined above that I think one should start by making a return-on-investment analysis.

Another option: Calculate the holidays! I think you might find some useful code in my HolidaysAPI. It is even in F#, and the Web project is based upon a course or blog post by you.

Alf, thank you for writing. Apart from the alternative library, how is that different from the option I covered under the heading Option: Nager.Date?

Great post, thanks. One minor point is that it is probably not so effective to do Contains over long[]. I'd consider using something that can check for a value's existence faster.

EQR, thank you for writing. The performance profile of the implementation wasn't my main concern with this article, so it's likely that it can be improved.

I did do some lackadaisical performance testing, but didn't detect any measurable difference between the implementation shown here, and one using a HashSet. On the other hand, there are other options I didn't try at all. One of these could be to perform a binary search, since the array is already ordered.
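As a minimal sketch of the binary search option, assuming the holidays are stored as a sorted array of 64-bit integers: the values below and the name isHoliday are hypothetical, chosen only for illustration, and this isn't the code I measured.

```fsharp
// Hypothetical data: a few sorted int64 values standing in for the real array.
let holidays = [| 20170101L; 20170413L; 20170414L; 20170417L; 20171225L |]

// System.Array.BinarySearch returns a non-negative index when the value is
// found, and a negative number when it isn't. It requires a sorted array.
let isHoliday (candidate : int64) =
    System.Array.BinarySearch (holidays, candidate) >= 0
```

The lookup only gives correct answers as long as the array stays sorted, so whatever generates the data would have to guarantee that.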

Hi Mark, thanks for this post. One small note on your selection of bank holidays. Liberation day is an official bank holiday, but only for civil servants; for us 'normal' people this only happens every 5 years. Nager.Date reflects this incorrectly.

Thomas, thank you for writing. That just goes to show, I think, that holiday calculation is as complicated as any other 'business logic'. It should be treated as such, and not as an algorithmic calculation.

A reusable ApiController Adapter

Refactor ApiController Adapters to a single, reusable base class.

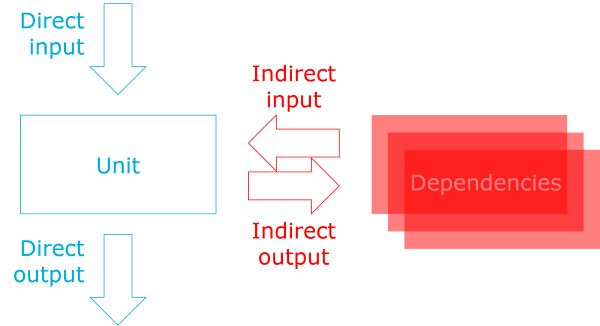

Regular readers of this blog will know that I write many RESTful APIs in F#, using ASP.NET Web API. Since I like to write functional F#, but ASP.NET Web API is an object-oriented framework, I prefer to escape the object-oriented framework as soon as possible. (In general, it makes good architectural sense to write most of your code as framework-independent as possible.)

(Regular readers will also have seen the above paragraph before.)

To bridge the gap between the object-oriented framework and my functional code, I implement Controller classes like this:

    type CreditCardController (imp) =
        inherit ApiController ()

        member this.Post (portalId : string, req : PaymentDtr) : IHttpActionResult =
            match imp portalId req with
            | Success (resp : PaymentDtr) -> this.Ok resp :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

The above example is a Controller that handles incoming payment data. It immediately delegates all work to an injected imp function, and pattern matches on the return value in order to return correct responses.

This CreditCardController extends ApiController in order to work nicely with ASP.NET Web API, while the injected imp function is written using functional programming. If you want to be charitable, you could say that the Controller is an Adapter between ASP.NET Web API and the functional F# API that actually implements the service. If you want to be cynical, you could also call it an anti-corruption layer.

This works well, but tends to become repetitive:

    open System
    open System.Web.Http

    type BoundaryFailure =
        | RouteFailure
        | ValidationFailure of string
        | IntegrationFailure of string

    type HomeController (imp) =
        inherit ApiController ()

        [<AllowAnonymous>]
        member this.Get () : IHttpActionResult =
            match imp () with
            | Success (resp : HomeDtr) -> this.Ok resp :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

    type CreditCardController (imp) =
        inherit ApiController ()

        member this.Post (portalId : string, req : PaymentDtr) : IHttpActionResult =
            match imp portalId req with
            | Success (resp : PaymentDtr) -> this.Ok resp :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

    type CreditCardRecurrentStartController (imp) =
        inherit ApiController ()

        member this.Post (portalId : string, req : PaymentDtr) : IHttpActionResult =
            match imp portalId req with
            | Success (resp : PaymentDtr) -> this.Ok resp :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

    type CreditCardRecurrentController (imp) =
        inherit ApiController ()

        member this.Post (portalId : string, transactionKey : string, req : PaymentDtr) : IHttpActionResult =
            match imp portalId transactionKey req with
            | Success (resp : PaymentDtr) -> this.Ok resp :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

    type PushController (imp) =
        inherit ApiController ()

        member this.Post (portalId : string, req : PushRequestDtr) : IHttpActionResult =
            match imp portalId req with
            | Success () -> this.Ok () :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

At this point in our code base, we had five Controllers, and they all had similar implementations. They all pass their input parameters to their injected imp functions, and they all pattern match in exactly the same way. There are, however, small variations. Notice that one of the Controllers exposes an HTTP GET operation, whereas the other four expose a POST operation. Conceivably, there could also be Controllers that allow both GET and POST, and so on, but this isn't the case here.

The parameter list for each of the action methods also varies. Some take two arguments, one takes three, and the GET method takes none.

Another variation is that HomeController.Get is annotated with an [<AllowAnonymous>] attribute, while the other action methods aren't.

Despite these variations, it's possible to make the code less repetitive.

You'd think you could easily refactor the code by turning the identical pattern matches into a reusable function. Unfortunately, it's not so simple, because the methods used to return HTTP responses are all protected (to use a C# term for something that F# doesn't even have). You can call this.Ok () or this.NotFound () from a derived class, but not from a 'free' let-bound function.

After much trial and error, I finally arrived at this reusable base class:

    type private Imp<'inp, 'out> = 'inp -> Result<'out, BoundaryFailure>

    [<AbstractClass>]
    type FunctionController () =
        inherit ApiController ()

        // Imp<'a,'b> -> 'a -> IHttpActionResult
        member this.Execute imp req : IHttpActionResult =
            match imp req with
            | Success resp -> this.Ok resp :> _
            | Failure RouteFailure -> this.NotFound () :> _
            | Failure (ValidationFailure msg) -> this.BadRequest msg :> _
            | Failure (IntegrationFailure msg) ->
                this.InternalServerError (InvalidOperationException msg) :> _

It's been more than a decade, I think, since I last used inheritance to enable reuse, but in this case I could find no other way because of the design of ApiController. It gets the job done, though:

    type HomeController (imp : Imp<_, HomeDtr>) =
        inherit FunctionController ()

        [<AllowAnonymous>]
        member this.Get () = this.Execute imp ()

    type CreditCardController (imp : Imp<_, PaymentDtr>) =
        inherit FunctionController ()

        member this.Post (portalId : string, req : PaymentDtr) =
            this.Execute imp (portalId, req)

    type CreditCardRecurrentStartController (imp : Imp<_, PaymentDtr>) =
        inherit FunctionController ()

        member this.Post (portalId : string, req : PaymentDtr) =
            this.Execute imp (portalId, req)

    type CreditCardRecurrentController (imp : Imp<_, PaymentDtr>) =
        inherit FunctionController ()

        member this.Post (portalId : string, transactionKey : string, req : PaymentDtr) =
            this.Execute imp (portalId, transactionKey, req)

    type PushController (imp : Imp<_, unit>) =
        inherit FunctionController ()

        member this.Post (portalId : string, req : PushRequestDtr) =
            this.Execute imp (portalId, req)

Notice that all five Controllers now derive from FunctionController instead of ApiController. The HomeController.Get method is still annotated with the [<AllowAnonymous>] attribute, and it's still a GET operation, whereas all the other methods still implement POST operations. You'll also notice that the various Post methods have retained their varying number of parameters.

In order to make this work, I had to make one trivial change: previously, all the imp functions were curried, but that doesn't fit into a single reusable base implementation. If you consider the type of FunctionController.Execute, you can see that the imp function is expected to take a single input value of the type 'a (and return a value of the type Result<'b, BoundaryFailure>). Since any imp function can only take a single input value, I had to uncurry them all. You can see that now all the Post methods pass their input parameters as a single tuple to their injected imp function.
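To illustrate the uncurrying step outside of ASP.NET Web API, here's a minimal sketch. The Result type is a stand-in for the one used in the code base, the curriedImp and uncurriedImp functions are hypothetical, and execute returns a status string instead of an IHttpActionResult so that the example is self-contained:

```fsharp
// Stand-in for the Result type used in the Controllers above.
type Result<'a, 'b> = Success of 'a | Failure of 'b

type BoundaryFailure =
    | RouteFailure
    | ValidationFailure of string
    | IntegrationFailure of string

// Hypothetical curried function:
// string -> int -> Result<string, BoundaryFailure>
let curriedImp (portalId : string) (amount : int) =
    if amount < 0
    then Failure (ValidationFailure "Negative amount")
    else Success (sprintf "%s:%i" portalId amount)

// Uncurried version of the same function:
// string * int -> Result<string, BoundaryFailure>
let uncurriedImp (portalId, amount) = curriedImp portalId amount

// Simplified stand-in for FunctionController.Execute:
// ('a -> Result<'b, BoundaryFailure>) -> 'a -> string
let execute imp req =
    match imp req with
    | Success _ -> "200 OK"
    | Failure RouteFailure -> "404 Not Found"
    | Failure (ValidationFailure _) -> "400 Bad Request"
    | Failure (IntegrationFailure _) -> "500 Internal Server Error"
```

Because execute only applies imp to a single value, a call like execute uncurriedImp ("portal", 42) type-checks, whereas curriedImp would return a partially applied function that the pattern match can't handle.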

You may be wondering about the Imp<'inp, 'out> type alias. It's not strictly necessary, but helps keep the code clear. As I've attempted to indicate with the code comment above the Execute method, it's generic. When used in a derived Controller, the compiler can infer the type of 'a because it already knows the types of the input parameters. For example, in CreditCardController.Post, the input parameters are already annotated as string and PaymentDtr, so the compiler can easily infer the type of (portalId, req).

On the other hand, the compiler can't infer the type of 'b, because that value doesn't relate to IHttpActionResult. To help the compiler, I introduced the Imp type, because it enabled me to concisely annotate the type of the output value, while using a wild-card type for the input type.
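The following sketch shows the same annotation trick in isolation. It assumes a stand-in Result type, and the Greeter class and its Greet method are hypothetical names that don't exist in the code base. The wild-card leaves the input type to be inferred from the way imp is called, while the output type is stated explicitly:

```fsharp
// Stand-ins for the types used in the Controllers above.
type Result<'a, 'b> = Success of 'a | Failure of 'b
type BoundaryFailure = RouteFailure | ValidationFailure of string

type Imp<'inp, 'out> = 'inp -> Result<'out, BoundaryFailure>

// Only the output type is annotated. The compiler infers the input type
// as string * int from the call to imp inside Greet.
type Greeter (imp : Imp<_, string>) =
    member this.Greet (name : string, times : int) =
        match imp (name, times) with
        | Success s -> s
        | Failure _ -> "error"
```

A Greeter can then be created from any function of the type string * int -> Result<string, BoundaryFailure>, for example Greeter (fun (name, times) -> Success (String.replicate times name)).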

I wouldn't mind getting rid of Controllers altogether, but this is as close as I've been able to get with ASP.NET Web API.

Comments

I find a few issues with your blog post. Technically, I don't think anything you write in it is wrong, but there are a few of the statements I find problematic in the sense that people will probably read the blog post and conclude that "this is the only solution because of X" when in fact X is trivial to circumvent.

The main issue I have with your post is the following:

You'd think you could easily refactor the code by turning the identical pattern matches into a reusable function. Unfortunately, it's not so simple, because the methods used to return HTTP responses are all protected (to use a C# term for something that F# doesn't even have). You can call this.Ok () or this.NotFound () from a derived class, but not from a 'free' let-bound function.

As stated earlier, there is nothing in your statement that is technically wrong, but I'd argue that you've reached the wrong conclusion due to lack of data, or in this case, lack of familiarity with Web API and its source code. If you go look at the source for ApiController, you will notice that while it's true that the methods are protected, they are also trivial one-liners (helper methods) calling public APIs. It is completely possible to create a helper à la:

    // NOTE: Untested
    let handle (ctrl: ApiController) = function
        | Success result -> OkNegotiatedContentResult (result, ctrl) :> IHttpActionResult
        | Failure RouteFailure -> NotFoundResult (ctrl) :> _
        | Failure (ValidationFailure msg) -> BadRequestErrorMessageResult (msg, ctrl) :> _
        | Failure (IntegrationFailure msg) ->
            ExceptionResult ((InvalidOperationException msg), ctrl) :> _

Another thing you can do is implement IHttpActionResult on your discriminated union, so you can just return it directly from your controller, in which case you'd end up with code like this:

    member this.Post (portalId : string, req : PaymentDtr) = imp portalId req

It's definitely a bit more work, but in no way is it undoable. Thirdly, Web API (and especially the new MVC Core which has taken over for it) is incredibly pluggable through its DI. You don't have to use the base ApiController class if you don't want to. You can override the resolution logic, the handling of return types, routing, you name it. It should for instance be entirely doable to write a handler for "controllers" that look like this:

    [<Controller>]
    module MyController =
        [<AllowAnonymous>]
        // assume impl is imported and in scope here - this controller has no DI
        let post (portalId : string) (req : PaymentDtr) = impl portalId req

This however would probably be a bit of work requiring quite a bit of reflection voodoo. But somebody could definitely do it and put it in a library, and it would be nuget-installable for all.

Anyways, I hope this shows you that there are more options to solve the problem. And who knows, you may have considered all of them and concluded them unviable. I've personally dug through the source code of both MVC and Web API a few times which is why I know a bunch of this stuff, and I just figured you might want to know some of it too :).

Aleksander, thank you for writing. I clearly have some scars from working with ASP.NET Web API since version 0.6 (or some value in that neighbourhood). In general, if a method is defined on ApiController, I've learned the hard way that such methods tend to be tightly coupled to the Controller. Often, such methods even look into the untyped context dictionary in the request message object, so I've gotten used to using them whenever they're present.