ploeh blog danish software design

Test-specific Eq

Adding Eq instances for better assertions.

Most well-written unit tests follow some variation of the Arrange Act Assert pattern. In the Assert phase, you may write a sequence of assertions that verify different aspects of what 'success' means. Even so, it boils down to this: You check that the expected outcome is equal to the actual outcome. Some testing frameworks like to turn the order around, but the idea remains the same. After all, equality is symmetric.

The ideal assertion is one that simply checks that actual is equal to expected. Some languages allow custom infix operators, in which case it's natural to define this fundamental assertion as an operator, such as @?=.

Since this is Haskell, however, the @?= operator comes with type constraints. Specifically, what we compare must be an Eq instance. In other words, the type in question must support the == operator. What do you do when a type is no Eq instance?
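To see where the constraint comes from, here's a minimal, base-only sketch of such an operator. The name `=?=` and the `Either String ()` result are made up for illustration; HUnit's real `@?=` produces an assertion action instead, and this is not its implementation.

```haskell
-- Hypothetical stand-in for an equality assertion; not HUnit's implementation.
infix 1 =?=

(=?=) :: (Eq a, Show a) => a -> a -> Either String ()
actual =?= expected
  | actual == expected = Right ()
  | otherwise =
      Left ("expected: " ++ show expected ++ ", but got: " ++ show actual)

passing :: Either String ()
passing = Just (20 :: Int) =?= Just 20

failing :: Either String ()
failing = Just (13 :: Int) =?= Just 20
```

The comparison needs `==`, and a useful failure message needs `show`. A type without those instances can't take part.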

No Eq #

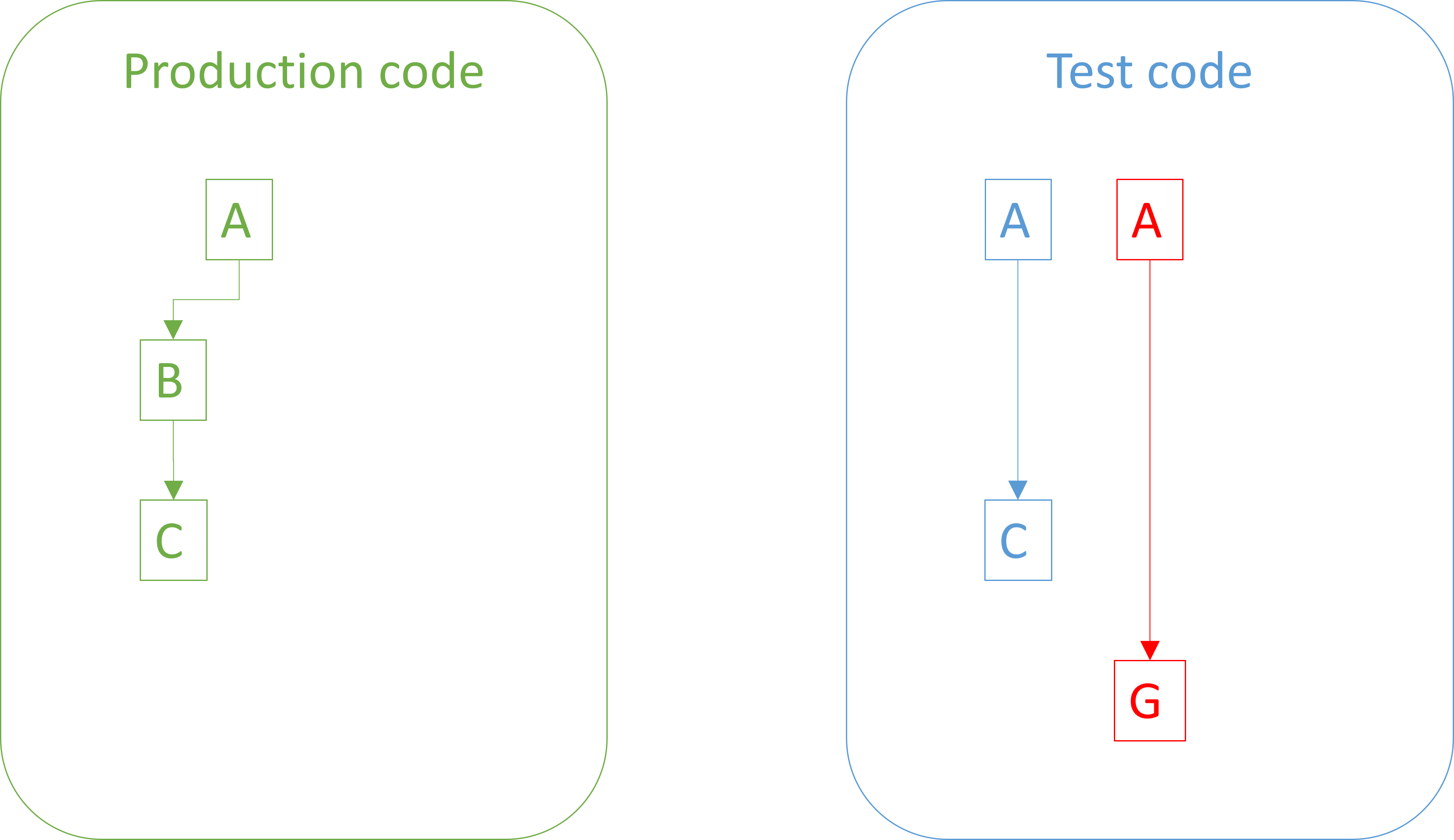

In a recent article you saw how a complicated test induced a tautological assertion. The main reason that the test was complicated was that the values involved were not Eq instances.

This got me thinking: Might test-specific equality help?

The easiest way to find out is to try. In this article, you'll see how that experiment turns out. First, however, you need a quick introduction to the problem space. The task at hand was to implement a cellular automaton, ostensibly modelling Galápagos finches meeting. When two finches encounter each other, they play out a game of Prisoner's Dilemma according to a strategy implemented in a domain-specific language.

Specifically, a finch is modelled like this:

data Finch = Finch
  { finchID :: Int,
    finchHP :: HP,
    finchRoundsLeft :: Rounds,
    -- The colour is used for visualisation, but has no semantic significance.
    finchColour :: Colour,
    -- The current strategy.
    finchStrategy :: Strategy,
    -- The expression that is evaluated to produce the strategy.
    finchStrategyExp :: Exp
  }

The Finch data type is not an Eq instance. The reason is that Strategy is effectively a free monad over this functor:

data EvalOp a
  = ErrorOp Error
  | MeetOp (FinchID -> a)
  | GroomOp (Bool -> a)
  | IgnoreOp (Bool -> a)

Since EvalOp is a sum of functions, it can't be an Eq instance, and this then applies transitively to Finch, as well as the CellState container that keeps track of each cell in the cellular grid:

data CellState = CellState
  { cellFinch :: Maybe Finch,
    cellRNG :: StdGen
  }
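As an illustration of why a sum of functions resists equality, consider the following sketch. `Op` is a hypothetical, simplified stand-in for `EvalOp`, not the real type. For a continuation over `Bool` you could compare results for both possible inputs, but I know of no comparably practical trick for a continuation over an `Int`-like `FinchID`.

```haskell
-- Hypothetical, simplified stand-in for EvalOp.
data Op a
  = Stop String
  | Ask (Bool -> a)
  -- Appending 'deriving Eq' here would be rejected by the compiler, because
  -- there is no Eq instance for the function type Bool -> a.

-- Extensional comparison is feasible only because Bool has just two values.
eqOp :: Eq a => Op a -> Op a -> Bool
eqOp (Stop x) (Stop y) = x == y
eqOp (Ask f) (Ask g) = all (\b -> f b == g b) [False, True]
eqOp _ _ = False
```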

An important part of working with this particular code base is that the API is given, and must not be changed.

Given these constraints and data types, is there a way to improve test assertions?

Smelly tests #

The lack of Eq instances makes it difficult to write simple assertions. The worst test I wrote is probably this, making use of a predefined example Finch value named flipflop:

testCase "Cell 1 reproduces" $
  let cell1 = Galapagos.CellState (Just flipflop) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 6) -- seeded to reprod
      actual = Galapagos.reproduce Galapagos.defaultParams (cell1, cell2)
  in do
    -- Sanity check on first finch. Unfortunately, CellState is no Eq
    -- instance, so we can't just compare the entire record. Instead,
    -- using HP as a sample:
    (Galapagos.finchHP <$> Galapagos.cellFinch (fst actual)) @?= Just 20
    -- New finch should have HP from params:
    (Galapagos.finchHP <$> Galapagos.cellFinch (snd actual)) @?= Just 14
    -- New finch should have lifespan from params:
    (Galapagos.finchRoundsLeft <$> Galapagos.cellFinch (snd actual))
      @?= Just 23
    -- New finch should have same colour as parent:
    ( Galapagos.finchColour <$> Galapagos.cellFinch (snd actual))
      @?= Galapagos.finchColour <$> Galapagos.cellFinch cell1
    -- More assertions, described by their error messages:
    ( (Galapagos.finchID <$> Galapagos.cellFinch (fst actual)) /=
      (Galapagos.finchID <$> Galapagos.cellFinch (snd actual))) @?
      "Finches have same ID, but they should be different."
    ((/=) `on` Galapagos.cellRNG) cell2 (snd actual) @?
      "New cell 2 should have an updated RNG."

As you can tell from the apologies, all these assertions leave something to be desired. The first assertion uses finchHP as a proxy for the entire finch in cell1, which is not supposed to change. Instead of an assertion for each of the first finch's attributes, the test 'hopes' that if finchHP didn't change, then neither did the other values.

The test then proceeds to verify various fields of the new finch in cell2, checking them one by one, since the lack of Eq makes it impossible to simply check that the actual value is equal to the expected value.

In comparison, the test you saw in the previous article is almost pretty. It uses another example Finch value named cheater.

testCase "Cell 1 does not reproduce" $
  let cell1 = Galapagos.CellState (Just cheater) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 1) -- seeded: no repr.
      actual = Galapagos.reproduce Galapagos.defaultParams (cell1, cell2)
  in do
    -- Sanity check that cell 1 remains, sampling on strategy:
    ( Galapagos.finchStrategyExp <$> Galapagos.cellFinch (fst actual))
      @?= Galapagos.finchStrategyExp <$> Galapagos.cellFinch cell1
    ( Galapagos.finchHP <$> Galapagos.cellFinch (snd actual)) @?= Nothing

The apparent simplicity is mostly because at that time, I'd almost given up on more thorough testing. In this test, I chose finchStrategyExp as a proxy for each value, and 'hoped' that if these properties behaved as expected, other attributes would, too.

Given that I was following test-driven development and thus engaging in grey-box testing, I had reason to believe that the implementation was correct if the test passed.

Still, those tests exhibit more than one code smell. Could test-specific equality be the answer?

Test utilities for finches #

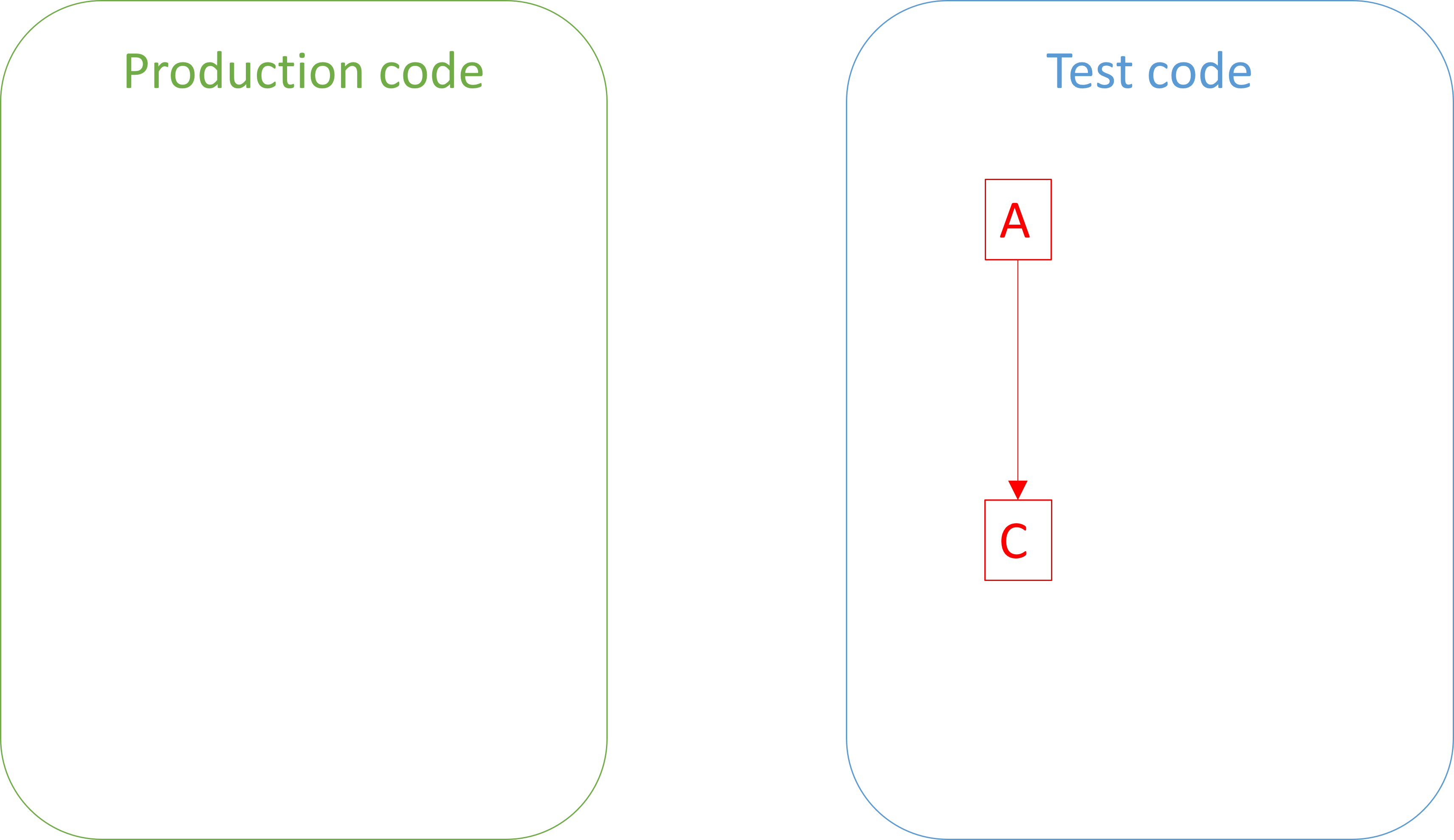

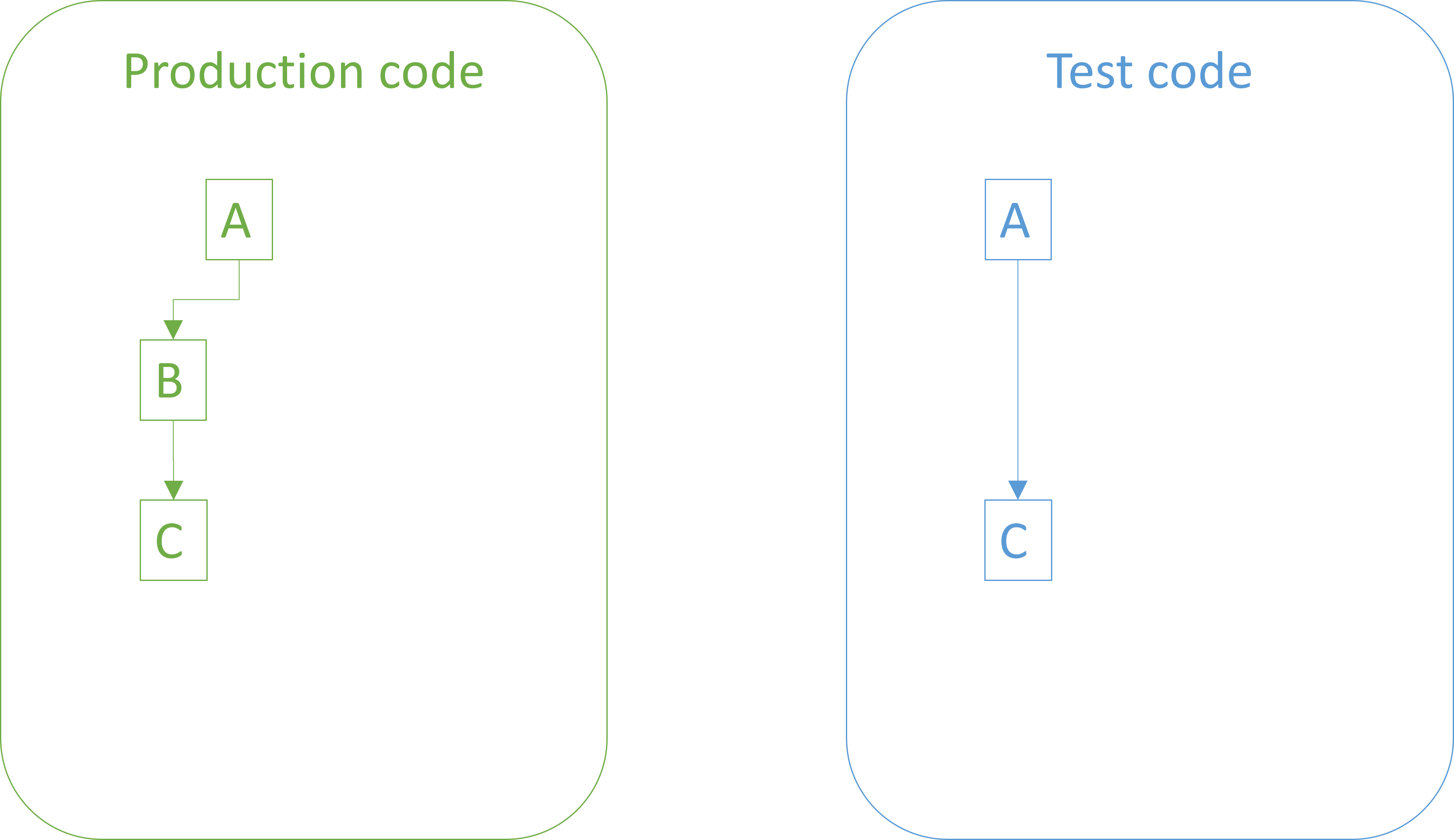

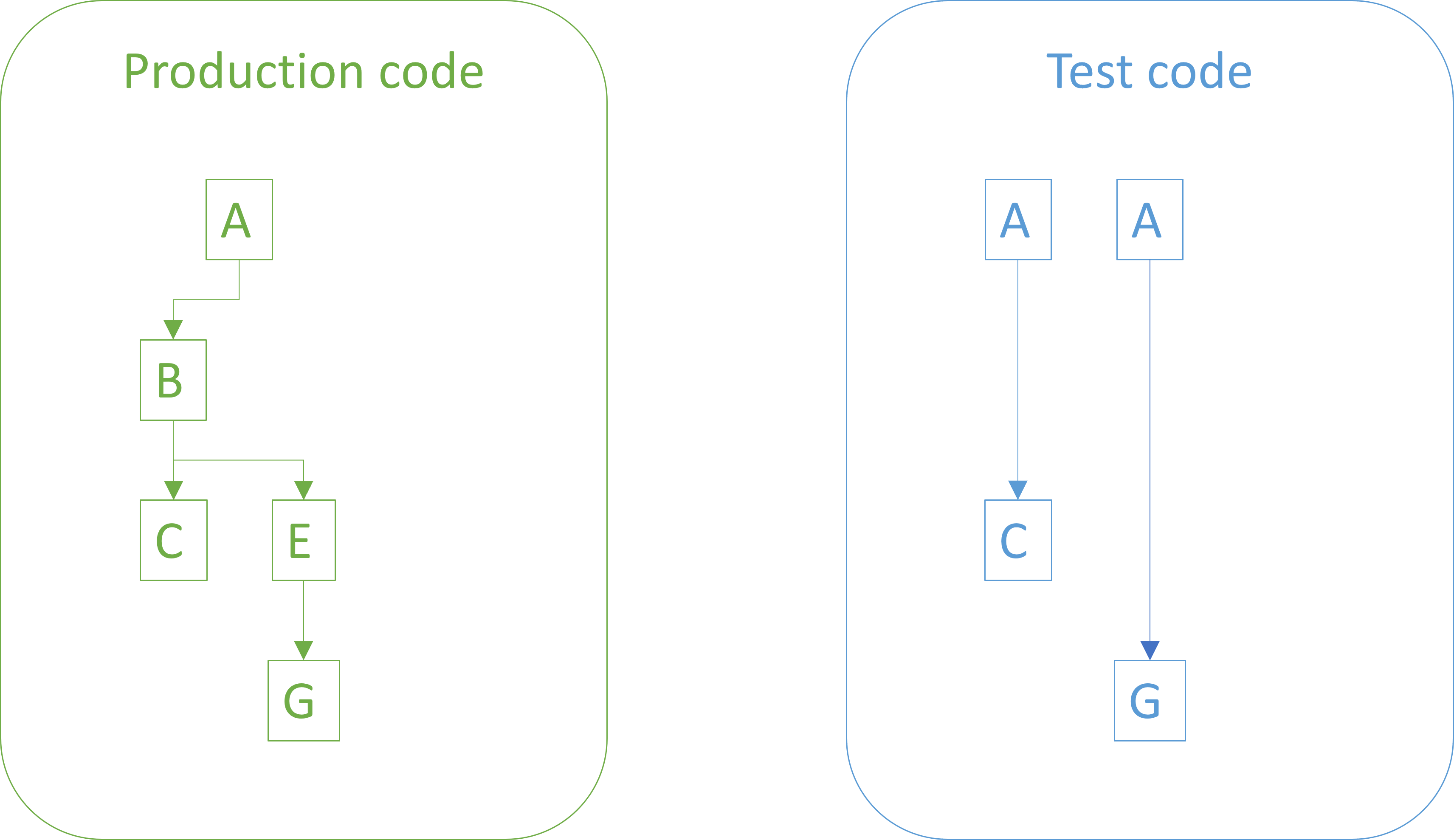

The fundamental problem is that the finchStrategy field prevents Finch from being an Eq instance. Finding a way to compare Strategy values seems impractical. A more realistic course of action might be to compare all other fields. One option is to introduce a test-specific type with proper Eq and Show instances.

data FinchEq = FinchEq
  { feqID :: Int
  , feqHP :: Galapagos.HP
  , feqRoundsLeft :: Galapagos.Rounds
  , feqColour :: Galapagos.Colour
  , feqStrategyExp :: Exp }
  deriving (Eq, Show)

This data type only exists in the test code base. It has all the fields of Finch, except finchStrategy.

While I could use it as-is, it quickly turns out that a helper function to turn a CellState value into a FinchEq value would also be useful.

finchEq :: Galapagos.Finch -> FinchEq
finchEq f = FinchEq
  { feqID = Galapagos.finchID f
  , feqHP = Galapagos.finchHP f
  , feqRoundsLeft = Galapagos.finchRoundsLeft f
  , feqColour = Galapagos.finchColour f
  , feqStrategyExp = Galapagos.finchStrategyExp f }

cellFinchEq :: Galapagos.CellState -> Maybe FinchEq
cellFinchEq = fmap finchEq . Galapagos.cellFinch
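One consequence is worth spelling out: two values that differ only in the excluded field compare as equal. The following self-contained sketch shows this with a hypothetical, much smaller `Bird` record standing in for `Finch`. All names in it are invented for the example.

```haskell
-- Hypothetical miniature of Finch: the function field rules out deriving Eq.
data Bird = Bird
  { birdID :: Int
  , birdHP :: Int
  , birdStrategy :: Int -> Bool }

-- Test-specific projection that drops the function field.
data BirdEq = BirdEq
  { beqID :: Int
  , beqHP :: Int }
  deriving (Eq, Show)

birdEq :: Bird -> BirdEq
birdEq b = BirdEq { beqID = birdID b, beqHP = birdHP b }

-- The strategies differ, but the projections are equal.
sameApartFromStrategy :: Bool
sameApartFromStrategy = birdEq (Bird 1 20 even) == birdEq (Bird 1 20 odd)

-- A difference in a projected field is detected.
differentHP :: Bool
differentHP = birdEq (Bird 1 20 even) /= birdEq (Bird 1 13 even)
```

Both `sameApartFromStrategy` and `differentHP` evaluate to `True`. That's the trade-off of this kind of test-specific equality: it's only as strong as the fields it keeps.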

Finally, the System Under Test (the reproduce function) takes a tuple as input, and returns a tuple of the same type as output. To avoid some code duplication, it's practical to introduce a data type that can map over both components.

newtype Pair a = Pair (a, a) deriving (Eq, Show, Functor)

This newtype wrapper makes it possible to map both the first and the second component of a pair (a two-tuple) using a single projection, since Pair is a Functor instance.
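As a small, self-contained illustration of the difference, compare mapping over a Pair with mapping over a plain tuple. The example values are arbitrary.

```haskell
{-# LANGUAGE DeriveFunctor #-}

newtype Pair a = Pair (a, a) deriving (Eq, Show, Functor)

-- Mapping over a Pair transforms both components.
viaPair :: Pair Int
viaPair = length <$> Pair ("finch", "cell")

-- Mapping over a plain tuple only transforms the second component.
viaTuple :: (String, Int)
viaTuple = length <$> ("finch", "cell")
```

Here, `viaPair` is `Pair (5, 4)`, whereas `viaTuple` is `("finch", 4)`.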

That's all the machinery required to rewrite the two tests shown above.

Improving the first test #

The first test may be rewritten as this:

testCase "Cell 1 reproduces" $
  let cell1 = Galapagos.CellState (Just flipflop) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 6) -- seeded to reprod
      actual = Galapagos.reproduce Galapagos.defaultParams (cell1, cell2)

      expected = Just $ finchEq $ flipflop
        { Galapagos.finchID = -1142203427417426925 -- From Character. Test
        , Galapagos.finchHP = 14
        , Galapagos.finchRoundsLeft = 23 }
  in do
    (cellFinchEq <$> Pair actual) @?= Pair (cellFinchEq cell1, expected)

    ((/=) `on` Galapagos.cellRNG) cell2 (snd actual) @?
      "New cell 2 should have an updated RNG."

That's still a bit of code. If you're used to C# or Java code, you may not bat an eyelid over a fifteen-line code block (that even has a few blank lines), but fifteen lines of Haskell code is still significant.

There are compound reasons for this. One is that the Galapagos module is a qualified import, which makes the code more verbose than it otherwise could have been. It doesn't help that I follow a strict rule of staying within an 80-character line width.

That said, this version of the test has stronger assertions than before. Notice that the first assertion compares two Pairs of FinchEq values. This means that all five comparable fields of each finch are compared against the expected value. Since the assertion compares two Pairs, that's ten comparisons in all. The previous test only made five comparisons on the finches.

The second assertion remains as before. It's there to ensure that the System Under Test (SUT) remembers to update its pseudo-random number generator.

Perhaps you wonder about the expected values. For the finchID, hopefully the comment gives a hint. I originally set this value to 0, ran the test, observed the actual value, and used what I had observed. I could do that because I was refactoring an existing test that exercised an existing SUT, following the rules of empirical Characterization Testing.

The finchID values are in practice randomly generated numbers. These are notoriously awkward in test contexts, so I could also have excluded that field from FinchEq. Even so, I kept the field, because it's important to be able to verify that the new finch has a different finchID than the parent that begat it.

Derived values #

Where do the magic constants 14 and 23 come from? Although we could use comments to explain their source, another option is to use Derived Values to explicitly document their origin:

testCase "Cell 1 reproduces" $
  let cell1 = Galapagos.CellState (Just flipflop) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 6) -- seeded to reprod
      actual = Galapagos.reproduce Galapagos.defaultParams (cell1, cell2)

      expected = Just $ finchEq $ flipflop
        { Galapagos.finchID = -1142203427417426925 -- From Character. Test
        , Galapagos.finchHP = Galapagos.startHP Galapagos.defaultParams
        , Galapagos.finchRoundsLeft =
            Galapagos.lifespan Galapagos.defaultParams }
  in do
    (cellFinchEq <$> Pair actual) @?= Pair (cellFinchEq cell1, expected)

    ((/=) `on` Galapagos.cellRNG) cell2 (snd actual) @?
      "New cell 2 should have an updated RNG."

We now learn that the finchHP value originates from the startHP value of the defaultParams, and similarly for finchRoundsLeft.

To be honest, I'm not sure that this is an improvement. It makes the test more abstract, and if we wish that tests may serve as executable documentation, concrete example values may be easier to understand. Besides, this gets uncomfortably close to duplicating the actual implementation code contained in the SUT.

This variation only serves as an exploration of alternatives. I would strongly consider rolling this change back, and instead add some comments to the magic numbers.

Improving the second test #

The second test improves even more.

testCase "Cell 1 does not reproduce" $
  let cell1 = Galapagos.CellState (Just cheater) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 1) -- seeded: no repr.
      actual = Galapagos.reproduce Galapagos.defaultParams (cell1, cell2)
  in (cellFinchEq <$> Pair actual) @?= Pair (cellFinchEq cell1, Nothing)

Not only is it shorter, the assertion is much stronger. It achieves the ideal of verifying that the actual value is equal to the expected value, comparing five data fields on each of the two finches.

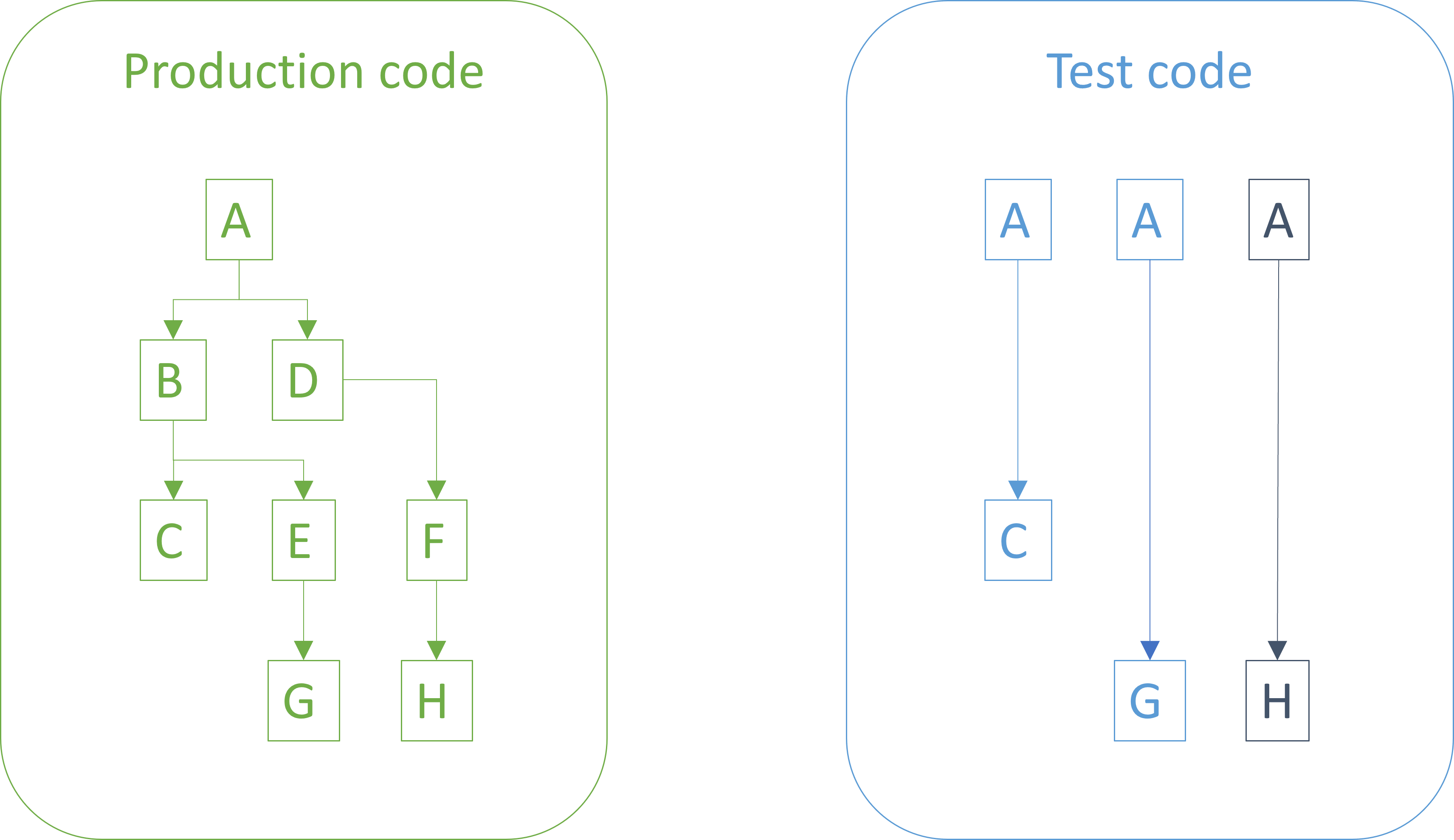

Comparing cells #

The reproduce function uses the pseudo-random number generators embedded in the CellState data type to decide whether a finch reproduces in a given round. Thus, the number generators change in deterministic, but by human cognition unpredictable, ways. It makes sense to exclude the generators from the assertions, apart from the above assertion that verifies the change itself.

Other functions in the Galapagos module also work on CellState values, but are entirely deterministic; that is, they don't make use of the pseudo-random number generators. One such function is groom, which models what happens when two finches meet and play out their game of Prisoner's Dilemma by deciding to groom the other for parasites, or not. The function has this type:

groom :: Params -> (CellState, CellState) -> (CellState, CellState)

By specification, this function has no random behaviour, which means that we expect the number generators to stay the same. Even so, due to the lack of an Eq instance, comparing cells is difficult.

testCase "Groom when right cell is empty" $
  let cell1 = Galapagos.CellState (Just flipflop) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 1)
      actual = Galapagos.groom Galapagos.defaultParams (cell1, cell2)
  in do
    ( Galapagos.finchHP <$> Galapagos.cellFinch (fst actual))
      @?= Galapagos.finchHP <$> Galapagos.cellFinch cell1
    ( Galapagos.finchHP <$> Galapagos.cellFinch (snd actual)) @?= Nothing

Instead of comparing cells, this test only considers the contents of each cell, and it only compares a single field, finchHP, as a proxy for comparing the more complete data structure.

With FinchEq we have a better way of comparing two finches, but we don't have to stop there. We may introduce another test-utility type that can compare cells.

data CellStateEq = CellStateEq
  { cseqFinch :: Maybe FinchEq
  , cseqRNG :: StdGen }
  deriving (Eq, Show)

A helper function also turns out to be useful.

cellStateEq :: Galapagos.CellState -> CellStateEq
cellStateEq cs = CellStateEq
  { cseqFinch = cellFinchEq cs
  , cseqRNG = Galapagos.cellRNG cs }

We can now rewrite the test to compare both cells in their entirety (minus the finchStrategy).

testCase "Groom when right cell is empty" $
  let cell1 = Galapagos.CellState (Just flipflop) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 1)
      actual = Galapagos.groom Galapagos.defaultParams (cell1, cell2)
  in (cellStateEq <$> Pair actual) @?= cellStateEq <$> Pair (cell1, cell2)

Again, the test is both simpler and stronger.

A fly in the ointment #

Introducing FinchEq and CellStateEq allowed me to improve most of the tests, but a few annoying issues remain. The most illustrative example is this test of the core groom behaviour, which lets two example Finch values named samaritan and cheater interact.

testCase "Groom two finches" $
  let cell1 = Galapagos.CellState (Just samaritan) (mkStdGen 0)
      cell2 = Galapagos.CellState (Just cheater) (mkStdGen 1)
      actual = Galapagos.groom Galapagos.defaultParams (cell1, cell2)
      expected = Just <$> Pair
        ( finchEq $ samaritan { Galapagos.finchHP = 16 }
        , finchEq $ cheater { Galapagos.finchHP = 13 } )
  in (cellFinchEq <$> Pair actual) @?= expected

This test ought to compare cells with CellStateEq, but only compares finches. The practical reason is that defining the expected value as a pair of cells entails embedding the expected finches in their respective cells. This is possible, but awkward, due to the nested nature of the data types.
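To give an impression of the awkwardness, here's a sketch with hypothetical miniature types. `BirdEq` and `CellEq` only mimic the shape of FinchEq and CellStateEq, and `ceqSeed` is a plain Int standing in for the number generator. Changing a single field of the value nested inside a cell takes a record update inside a mapping inside another record update.

```haskell
-- Hypothetical miniatures of the Eq-friendly test types.
data BirdEq = BirdEq { beqID :: Int, beqHP :: Int } deriving (Eq, Show)

data CellEq = CellEq { ceqBird :: Maybe BirdEq, ceqSeed :: Int }
  deriving (Eq, Show)

-- Changing one field of the bird inside a cell takes two nested updates.
withHP :: Int -> CellEq -> CellEq
withHP hp cell = cell { ceqBird = (\b -> b { beqHP = hp }) <$> ceqBird cell }

before :: CellEq
before = CellEq (Just (BirdEq 1 20)) 0

after :: CellEq
after = withHP 16 before
```

This works, and `after` equals `CellEq (Just (BirdEq 1 16)) 0`, but the nesting doesn't read well, and it only gets worse with more levels.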

It's possible to do something about that, too, but that's the topic for another article.

Conclusion #

If a test is difficult to write, it may be a symptom that the System Under Test (SUT) has an API which is difficult to use. When doing test-driven development you may want to reconsider the API. Is there a way to model the desired data and behaviour in such a way that the tests become simpler? If so, the API may improve in general.

Sometimes, however, you can't change the SUT API. Perhaps it's already given. Perhaps improving it would be a breaking change. Or perhaps you simply can't think of a better way.

An alternative to changing the SUT API is to introduce test utilities, such as types with test-specific equality. This is hardly better than improving the SUT API, but may be useful in those situations where the best option is unavailable.

Tautological assertions are not always caused by aliasing

You can also make mistakes that compile in Haskell.

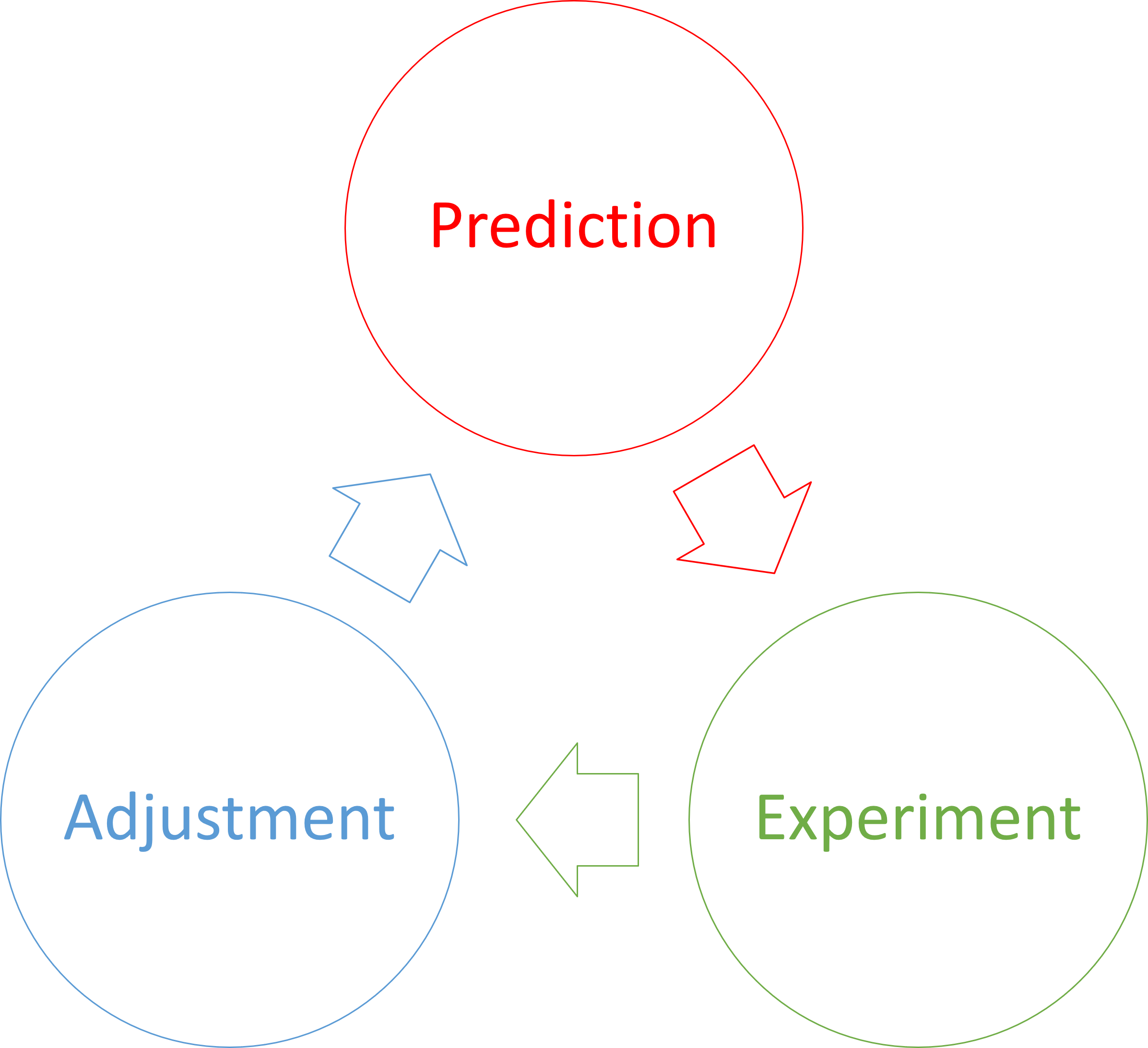

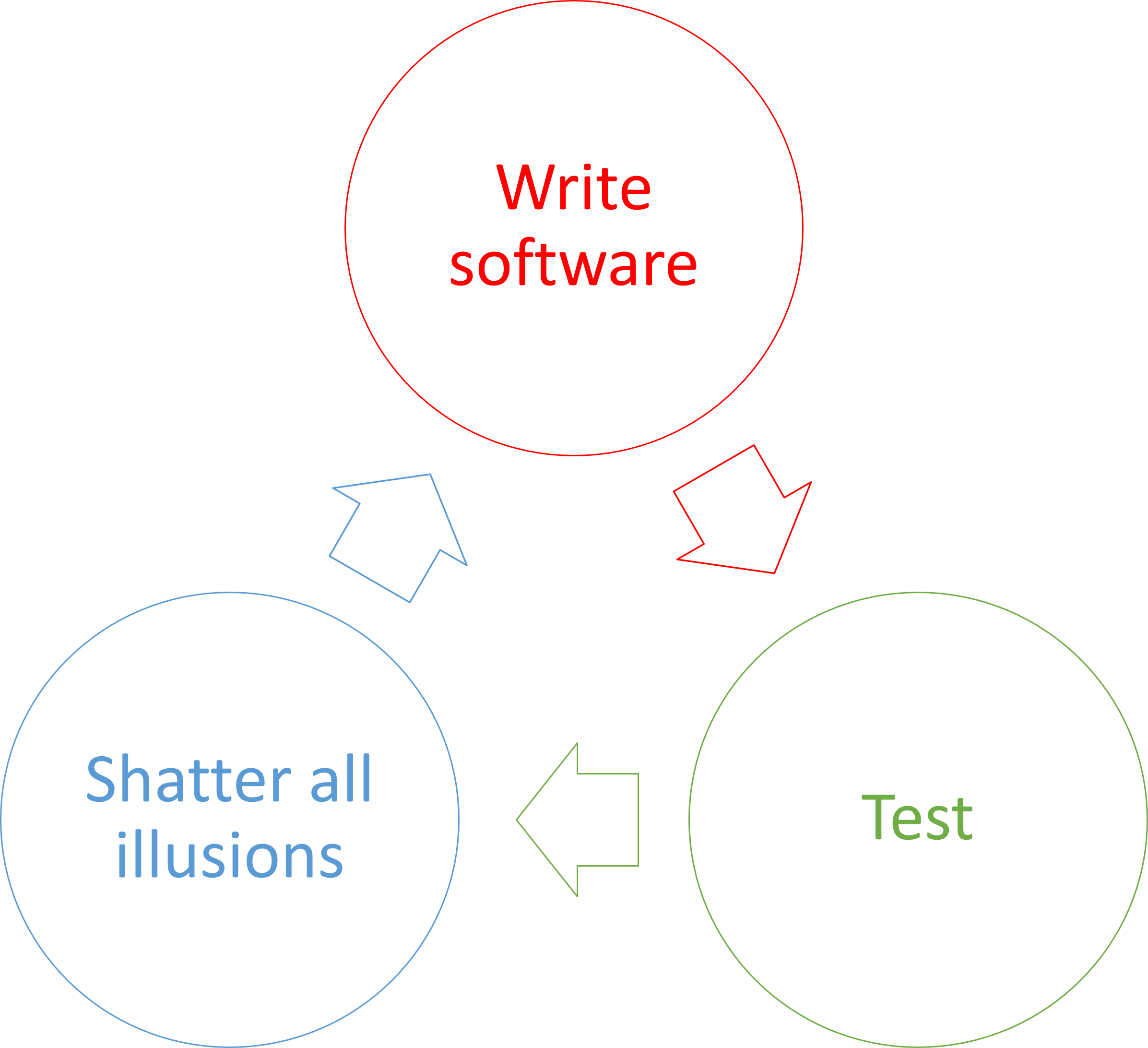

Seeing a (unit) test fail before making it pass is an important part of empirical software engineering. This is nothing new. We've known about the red-green-refactor cycle for at least twenty years, so we know that ensuring that a test can fail is of the essence.

A fundamental problem of automated testing is that we're writing code to test code. How do we know that our test code works? After all, it's easy enough to make mistakes, even with simple code.

The importance of discipline #

When I test-drive a programming task, it regularly happens that I write a test that I expect to fail, only for it to pass. Even so, it doesn't happen that often. During a week of intensive coding, it may happen once or twice.

After all, a unit test is supposed to be a simple, short block of code, ideally with a cyclomatic complexity of 1. If you're an experienced programmer, working in a language that you know, you wouldn't expect to make simple mistakes too often.

It's easy to get lulled into a false sense of security. When new tests fail (as they should) more than 95% of the time, you may be tempted to cut corners: Just write the test, implement the desired code, and move on.

My experience is, however, that I inadvertently write a passing test now and then. I've been doing test-driven development (TDD) for more than twenty years, and this still happens. It is therefore important to keep up discipline and run that damned test, even if you don't feel like it. It's exactly when your attention is flagging that you need this safety mechanism the most.

Until you've experienced, a couple of times, writing a test that unexpectedly passes, it can be hard to grasp why the red phase is essential. Therefore I've always felt that it was important to publish examples.

Tautologies #

A new test that passes on the first run is almost always a tautology. Theoretically, it may be that you think that the System Under Test does not yet have a certain capability, but after writing the test, it turns out that, after all, it does. This almost never happens to me. I can't rule out that this may have been the case once or twice in the last few decades, but it's as scarce as hen's teeth.

Usually, the problem is that the test is a tautology. While you believe that the test correctly self-checks something relevant, in reality, it's written in such a way that it can't possibly fail.

The first time I had the opportunity to document such a tautological assertion the underlying problem turned out to be aliasing. The next examples I ran into also had their roots in aliasing.

I began to wonder: Is the problem of tautological assertions mostly related to aliasing? If so, does it mean that the phenomenon of writing a passing test by mistake is mostly isolated to procedural and object-oriented programming? Could it be that this doesn't happen in functional programming?

If it compiles, it works #

Many so-called functional programming languages are in reality mixed-paradigm languages. The one that I know best is F#, which Don Syme calls functional-first. Even so, it's explicitly designed to interact with .NET libraries written in other languages (almost always C#), so it can still suffer from aliasing problems. The same situation applies to Clojure and Scala, which both run on the JVM.

A few languages are, however, unapologetically functional. Haskell is probably the most famous. If you rule out actions that run in IO, shared mutable state is not a concern.

Haskell's type system is so advanced and powerful that there are programmers who still seem to approach the language with an implied attitude that if it compiles, it works. Clearly, as we shall see, that is not the case.

Example #

Last week, I was writing tests against an API that was already given. As the 53rd test, I wrote this:

testCase "Cell 1 does not reproduce" $
  let cell1 = Galapagos.CellState (Just cheater) (mkStdGen 0)
      cell2 = Galapagos.CellState Nothing (mkStdGen 1) -- seeded: no repr.
      actual = Galapagos.reproduce Galapagos.defaultParams (cell1, cell2)
  in do
    -- Sanity check that cell 1 remains, sampling on strategy:
    ( Galapagos.finchStrategyExp <$> Galapagos.cellFinch (fst actual))
      @?= Galapagos.finchStrategyExp <$> Galapagos.cellFinch cell1
    ( Galapagos.finchHP <$> Galapagos.cellFinch cell2) @?= Nothing

To my surprise, it immediately passed. What's wrong with it? Before reading on, see if you can spot the problem.

I know that you don't know the problem domain or the particular API. Even so, the problem is in the test itself, not in the implementation code. After all, according to the red-green-refactor cycle, I hadn't yet added the code that would make this test pass.

The error is that I meant to verify that the actual value's second component remained Nothing, but either due to a brain fart, or because I copied an earlier test, the last assertion checks that cell2 is Nothing.

Since cell2 is originally initialized with Nothing, and Haskell values are immutable, there's no way it could be anything else. This is a tautological assertion. The correct assertion is

(Galapagos.finchHP <$> Galapagos.cellFinch (snd actual)) @?= Nothing

Notice that it examines snd actual rather than cell2.
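The difference can be reduced to a few lines. In this sketch, `reproduceStub` is a hypothetical and deliberately wrong stand-in for the System Under Test, and a cell is simplified to a `Maybe Int`. A check on the input can't detect the defect, while a check on the output can.

```haskell
-- Hypothetical, deliberately wrong SUT: it always places a new value in the
-- second cell, which a test of the no-reproduction case should detect.
reproduceStub :: (Maybe Int, Maybe Int) -> (Maybe Int, Maybe Int)
reproduceStub (cell1, _) = (cell1, Just 14)

input :: (Maybe Int, Maybe Int)
input = (Just 20, Nothing)

actual :: (Maybe Int, Maybe Int)
actual = reproduceStub input

-- Examines the input, so it holds no matter what the SUT does.
tautological :: Bool
tautological = snd input == Nothing

-- Examines the output, so it can fail, and with this stub it does.
meaningful :: Bool
meaningful = snd actual == Nothing
```

With this stub, `tautological` is `True` and `meaningful` is `False`.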

Causes #

How could I be so stupid? First, to err is human, and this is exactly why it's important to start with a failing test. The error was mine, but even so, it sometimes pays to analyse if there might be an underlying driving force behind the error. In this case, I can identify at least two, although they are related.

First, I don't recall exactly how I wrote this test, but looking at previous commits, I find it likely that I copied and pasted an earlier test case. Evidently, I failed to correctly alter the assertion to specify the new test case.

Why was I even copying and pasting the test? Because the test is too complicated. As a rule of thumb, pay attention to copy-and-paste, also of test cases. Test code should be well-factored, without too much duplication, for the same reasons that apply to other code. If a test is too difficult to write, it indicates that the API is too difficult to use. This is feedback about the API design, and you should consider if simplification is possible.

And the test is, indeed, too complicated. What I wanted to verify is that Galapagos.cellFinch (snd actual) is Nothing. If so, why didn't I just write Galapagos.cellFinch (snd actual) @?= Nothing? Because that doesn't compile.

The issue is that the data type in question (Finch) doesn't have an Eq instance, which @?= requires. Thus, I had to project the value I wanted to examine to a value that does have an Eq instance, such as finchHP, which is an Int.

Why didn't I assert on isNothing instead? Eventually, I did, but the problem with asserting on raw Boolean values is that when the assertion fails, you get no good feedback. The test runner will only tell you that the expected value was True, but the actual value False.

The assertBool action offers a solution, but then you have to write your own error message, and I wasn't yet ready to do that. Eventually, I did, though.
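As a base-only sketch of the idea, the following hypothetical `assertThat` function mimics a Boolean assertion that carries its own message. It's not HUnit's assertBool, which produces an assertion action rather than an `Either`. The point is that `isNothing` requires no Eq instance, so the check works even when the cell contains a function.

```haskell
import Data.Maybe (isNothing)

-- Hypothetical, base-only analogue of a Boolean assertion with a message.
assertThat :: String -> Bool -> Either String ()
assertThat _ True = Right ()
assertThat message False = Left message

-- isNothing needs no Eq instance, so this works for function-valued cells.
cellIsEmpty :: Maybe (Int -> Bool) -> Either String ()
cellIsEmpty cell =
  assertThat "Expected the cell to be empty, but it contained a value."
    (isNothing cell)
```

The price is that you have to write the message yourself, since the assertion has no values it could show on your behalf.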

The bottom line is that the risk of making mistakes increases, the more complicated things become. This also applies to functional programming, but in reality, simple is rarely easy.

Conclusion #

Tautological assertions are not only caused by aliasing, but also plain human error. In this article, you've seen an example of such an error in Haskell. This is a language with a type system that can identify many errors at compile time. Even so, some errors are run-time errors, and when it comes to TDD, in the red phase, the absence of failure indicates an error.

I find it important to share such errors when they occur, because they are at the same time regular and uncommon. While rare, they still happen with some periodicity. Since, after all, they don't happen that often, it may take time if you only have your own mistakes to learn from. So learn from mine, too.

Pattern guards for a protocol

A Haskell example.

Recently, I was doing a Haskell project implementing a cellular automaton according to predefined rules. Specifically, the story was one of Galápagos finches meeting and deciding whether or not to groom each other for parasites, effectively playing out a round of prisoner's dilemma.

Each finch is equipped with a particular strategy for repeated play. This strategy is implemented in a custom domain-specific language that enables each finch to remember past actions of other finches, and make decisions based on this memory.

Meeting protocol #

An evaluator runs over the code that implements each strategy, returning a free monad that embeds the possible actions a finch may take when meeting another finch.

The possible actions are defined by this sum type:

data EvalOp a
  = ErrorOp Error
  | MeetOp (FinchID -> a)
  | GroomOp (Bool -> a)
  | IgnoreOp (Bool -> a)

When two finches a and b meet, they must interact according to a protocol.

1. Evaluate the strategy of finch a up to the next effect, which must be a MeetOp.
2. Invoke the continuation of the MeetOp with the finchID of finch b.
3. Evaluate the strategy up to the next effect, which must be GroomOp or IgnoreOp. This decides the behaviour of finch a during this meeting.
4. Similarly, determine the behaviour of finch b, using the finchID of finch a.
5. For each finch, invoke the continuation of the GroomOp or IgnoreOp with the behaviour of the other finch (True for grooming, False for ignoring), yielding two new Strategys (sic).
6. The resulting Strategys (sic) are then stored in the Finch objects for the next meeting of the finches.

If at any step a strategy does not produce one of the expected effects (or no effect at all), then the finch has behaved illegally. For example, if in step 1 the first effect is a GroomOp, then this is illegal.

This sounds rather complicated, and I was concerned that even though I could pattern-match against the EvalOp cases, I'd end up with duplicated and deeply indented code.

Normal pattern matching #

Worrying about duplication, I tried to see if I could isolate each finch's 'handshake' as a separate function. I was still concerned that using normal pattern matching would cause too much indentation, but subsequent experimentation shows that in this case, it's not really that bad.

Ultimately, I went with pattern guards, but I think that it may be more helpful to lead with an example of what the code would look like using plain vanilla pattern matching.

tryRun :: EvalM a -> FinchID -> Maybe (Bool -> EvalM a, Bool)
tryRun (Free (MeetOp nextAfterMeet)) other =
  case nextAfterMeet other of
    (Free (GroomOp nextAfterGroom)) -> Just (nextAfterGroom, True)
    (Free (IgnoreOp nextAfterIgnore)) -> Just (nextAfterIgnore, False)
    _ -> Nothing
tryRun _ _ = Nothing

Now that I write this article, I realize that I should have named the function handshake, but hindsight is twenty-twenty. In the moment, I went with tryRun, using the try prefix to indicate that this operation may fail, as also indicated by the Maybe return type. That naming convention is probably more idiomatic in F#, but I digress.

As announced, that's not half as bad as I had originally feared. There's the beginning of arrow code, but I suppose you could also say that of any use of if/then/else. Imagine, however, that the protocol involved a few more steps, and you'd have something quite ugly at hand.

Another slight imperfection is the repetition of returning Nothing. Again, this code would not keep me up at night, but it just so happened that I originally thought that it would be worse, so I immediately cast about for alternatives.

Using pattern guards #

Originally, I thought that perhaps view patterns would be suitable, but while looking around, I came across pattern guards and thought: What's that?

This language feature has been part of the language standard since Haskell 2010, but it's new to me. It's a good fit for the problem at hand, and that's how I actually wrote the tryRun function:

tryRun :: EvalM a -> FinchID -> Maybe (Bool -> EvalM a, Bool)
tryRun strategy other
  | (Free (MeetOp nextAfterMeet)) <- strategy
  , (Free (GroomOp nextAfterGroom)) <- nextAfterMeet other
  = Just (nextAfterGroom, True)
tryRun strategy other
  | (Free (MeetOp nextAfterMeet)) <- strategy
  , (Free (IgnoreOp nextAfterIgnore)) <- nextAfterMeet other
  = Just (nextAfterIgnore, False)
tryRun _ _ = Nothing

Now that I have the opportunity to compare the two alternatives, it's not clear that one is better than the other. You may even prefer the first, normal version.

The version using pattern guards has more lines of code, and code duplication in the repetition of the | (Free (MeetOp nextAfterMeet)) <- strategy pattern. On the other hand, we get rid of the duplicated Nothing return value. What is perhaps more interesting is that had the handshake protocol involved more steps, the pattern-guards version would remain flat, whereas the other version would require indentation.
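To see how tryRun might serve the meeting protocol described earlier, here's a self-contained sketch. Apart from EvalOp and tryRun, everything in it is an assumption made for illustration: the `Error` and `FinchID` synonyms, the local `Free` definition (the real code presumably uses a library), the `meet` driver, and the sample strategies. It is not the code from the actual project.

```haskell
type FinchID = Int

type Error = String -- assumption: the real Error type isn't shown

data EvalOp a
  = ErrorOp Error
  | MeetOp (FinchID -> a)
  | GroomOp (Bool -> a)
  | IgnoreOp (Bool -> a)

-- Minimal free monad, defined locally to keep the sketch base-only.
data Free f a = Pure a | Free (f (Free f a))

type EvalM = Free EvalOp

-- The pattern-guard version of tryRun.
tryRun :: EvalM a -> FinchID -> Maybe (Bool -> EvalM a, Bool)
tryRun strategy other
  | (Free (MeetOp nextAfterMeet)) <- strategy
  , (Free (GroomOp nextAfterGroom)) <- nextAfterMeet other
  = Just (nextAfterGroom, True)
tryRun strategy other
  | (Free (MeetOp nextAfterMeet)) <- strategy
  , (Free (IgnoreOp nextAfterIgnore)) <- nextAfterMeet other
  = Just (nextAfterIgnore, False)
tryRun _ _ = Nothing

-- Hypothetical driver for one meeting. Each finch is paired with its
-- behaviour (True for grooming) and its next strategy. Nothing means that
-- at least one finch behaved illegally.
meet :: (FinchID, EvalM a) -> (FinchID, EvalM a)
     -> Maybe ((Bool, EvalM a), (Bool, EvalM a))
meet (idA, stratA) (idB, stratB) = do
  (nextA, groomsA) <- tryRun stratA idB
  (nextB, groomsB) <- tryRun stratB idA
  pure ((groomsA, nextA groomsB), (groomsB, nextB groomsA))

-- Hypothetical sample strategies, written directly against the constructors.
alwaysIgnore :: EvalM ()
alwaysIgnore = Free (MeetOp (\_ -> Free (IgnoreOp (\_ -> alwaysIgnore))))

-- Grooms or ignores depending on what it last saw the other finch do.
copycat :: Bool -> EvalM ()
copycat lastSeen = Free (MeetOp (\_ -> Free (decide copycat)))
  where
    decide = if lastSeen then GroomOp else IgnoreOp

-- Illegal: the first effect is a GroomOp rather than a MeetOp.
groomsTooEarly :: EvalM ()
groomsTooEarly = Free (GroomOp (\_ -> groomsTooEarly))

-- Discards the (incomparable) strategies, keeping only the behaviours.
behaviours :: Maybe ((Bool, EvalM a), (Bool, EvalM a)) -> Maybe (Bool, Bool)
behaviours = fmap (\((a, _), (b, _)) -> (a, b))
```

In GHCi, `behaviours (meet (1, copycat True) (2, alwaysIgnore))` is `Just (True, False)`, while a meeting that involves `groomsTooEarly` produces `Nothing`. Since strategies contain functions, only the Boolean behaviours can be compared, which is the same limitation that motivates test-specific equality.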

To be honest, now that I write this article, the example has lost some of its initial lustre. Still, I learned about a Haskell language feature that I didn't know about, and thought I'd share the example.

Conclusion #

Most mature programming languages have so many features that a programmer may use a language for years, and still be unaware of useful alternatives. Haskell is the oldest language I use, and although I've programmed in it for a decade, I still learn new things.

In this article, you saw a simple example of using pattern guards. Perhaps you will find this language feature useful in your own code. Perhaps you already use it.

Treat test code like production code

You have to read and maintain test code, too.

I don't think I've previously published an article with the following simple message, which is clearly an omission on my part. Better late than never, though.

Treat test code like production code.

You should apply the same coding standards to test code as you do to production code. You should make sure the code is readable, well-factored, goes through review, etc., just like your production code.

Test mess #

It's not uncommon to encounter test code that has received a stepmotherly treatment. Such test code may still pay lip service to an organization's overall coding standards by having correct indents, placement of brackets, and other superficial signs of care. You don't have to dig deep, however, before you discover that the quality of the test code leaves much to be desired.

The most common problem is a disregard for the DRY principle. Duplication abounds. It's almost as though people feel unburdened by the shackles of good software engineering practices, and as a result relish the freedom to copy and paste.

That freedom is, however, purely illusory. We'll return to that shortly.

Perhaps the second-most common category of poor coding practices applied to test code is the high frequency of Zombie Code. Commented-out code is common.

Other, less frequent examples of bad practices include use of arbitrary waits instead of proper thread synchronization, unwrapping of monadic values, including calling Task.Result instead of properly awaiting a value, and so on.

I'm sure that you can think of other examples.

Why good code is important #

I think that I can understand why people treat test code as a second-class citizen. It seems intuitive, although the intuition is wrong. Nevertheless, I think it goes like this: Since the test code doesn't go into production, it's seen as less important. And as we shall see below, there are, indeed, a few areas where you can safely cut corners when it comes to test code.

As a general rule, however, it's a bad idea to slack on quality in test code.

The reason lies in why we even have coding standards and design principles in the first place. Here's a hint: It's not to placate the computer.

"Any fool can write code that a computer can understand. Good programmers write code that humans can understand."

The reason we do our best to write code of good quality is that if we don't, it's going to make our work more difficult in the future. Either our own, or someone else's. But frequently, our own.

Forty (or fifty?) years of literature on good software development practices grapple with this fundamental problem. This is why my most recent book is called Code That Fits in Your Head. We apply software engineering heuristics and care about architecture because we know that if we fail to structure the code well, our mission is in jeopardy: We will not deliver on time, on budget, or with working features.

Once we understand this, we see how this applies to test code, too. If you have good test coverage, you will likely have a substantial amount of test code. You need to maintain this part of the code base too. The best way to do so is to treat it like your production code. Apply the same standards and design principles to test code as you do to your production code. This especially means keeping test code DRY.

Test-specific practices #

Since test code has a specialized purpose, you'll run into problems unique to that space. How should you structure a unit test? How should you organize them? How should you name them? How do you make them deterministic?

Fortunately, thoughtful people have collected and systematized their experience. The absolute most comprehensive such collection is xUnit Test Patterns, which has been around since 2007. Nothing in that book invalidates normal coding practices. Rather, it suggests specializations of good practices that apply to test code.

You may run into the notion that tests should be DAMP rather than DRY. If you expand the acronym, however, it stands for Descriptive And Meaningful Phrases, and you may realize that it's a desired quality of code independent of whether or not you repeat yourself. (Even the linked article fails, in my opinion, to erect a convincing dichotomy. Its notion of DRY is clearly not the one normally implied.) I think of the DAMP notion as related to Domain-Driven Design, which is another thematic take on making code fit in your head.

For a few years, however, I, too, believed that copy-and-paste was okay in test code, but I have long since learned that duplication slows you down in test code for exactly the same reason that it hurts in 'real' code. One simple change leads to Shotgun Surgery; many tests break, and you have to fix each one individually.

Dispensations #

All the same, there are exceptions to the general rule. In certain, well-understood ways, you can treat your test code with less care than production code.

Specifically, assuming that test code remains undeployed, you can skip certain security practices. You may, for example, hard-code test-only passwords directly in the tests. The code base that accompanies Code That Fits in Your Head contains an example of that.

You may also skip input validation steps, since you control the input for each test.

In my experience, security is the dominating exemption from the rule, but there may be other language- or platform-specific details where deviating from normal practices is warranted for test code.

One example may be in .NET, where a static code analysis rule may insist that you call ConfigureAwait. This rule is intended for library code that may run in arbitrary environments. When code runs in a unit-testing environment, on the other hand, the context is already known, and this rule can be dispensed with.

Another example is that in Haskell GHC may complain about orphan instances. In test code, it may occasionally be useful to give an existing type a new instance, most commonly an Arbitrary instance. While you can also get around this problem with well-named newtypes, you may also decide that orphan instances are no problem in a test code base, since you don't have to export the test modules as reusable libraries.
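As a minimal sketch of such a dispensation, the following test-only module switches off the orphan warning and gives function types a Show instance. The Show instance is only a stand-in, chosen because it needs nothing beyond base; an Arbitrary instance would follow the same pattern, but requires QuickCheck. The Fn newtype is a hypothetical name that illustrates the newtype alternative.

```haskell
{-# OPTIONS_GHC -Wno-orphans #-}
module Main where

-- Orphan instance: neither the Show class nor the function type is defined
-- in this module. Acceptable here on the assumption that the module is
-- test-only and never exported as a library.
instance Show (a -> b) where
  show _ = "<function>"

-- The alternative: a well-named newtype (hypothetical) that owns its instance,
-- so no orphan is needed.
newtype Fn a b = Fn (a -> b)

instance Show (Fn a b) where
  show _ = "<function>"

main :: IO ()
main = do
  print (not :: Bool -> Bool)   -- uses the orphan instance
  print (Fn (+ (1 :: Int)))     -- uses the newtype's own instance
```

The pragma only affects the module that contains it, so production modules can still be compiled with the orphan warning enabled.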

Conclusion #

You should treat test code like production code. The coding standards that apply to production code should also apply to test code. If you follow the DRY principle for production code, you should also follow the DRY principle in the test code base.

The reason is that most coding standards and design principles exist to make code easier to maintain. Since test code is also code, this still applies.

There are a few exceptions, most notably in the area of security, assuming that the test code is never deployed to production.

Result is the most boring sum type

If you don't see the point, you may be looking in the wrong place.

I regularly encounter programmers who are curious about statically typed functional programming, but are struggling to understand the point of sum types (also known as Discriminated Unions, Union Types, or similar). Particularly, I get the impression that recently various thought leaders have begun talking about Result types.

There are deep mathematical reasons to start with Results, but on the other hand, I can understand if many learners are left nonplussed. After all, Result types are roughly equivalent to exceptions, and since most languages already come with exceptions, it can be difficult to see the point.

The short response is that there's a natural explanation. A Result type is, so to speak, the fundamental sum type. From it, you can construct all other sum types. Thus, you can say that Results are the most abstract of all sum types. In that light, it's understandable if you struggle to see how they are useful.

Coproduct #

My goal with this article is to point out why and how Result types are the most abstract, and perhaps least interesting, sum types. The point is not to make Result types compelling, or sell sum types in general. Rather, my point is that if you're struggling to understand what all the fuss is about algebraic data types, perhaps Result types are the wrong place to start.

In the following, I will tend to call Result by another name: Either, which is also the name used in Haskell, where it encodes a universal construction called a coproduct. Where Result indicates a choice between success and failure, Either models a choice between 'left' and 'right'.

Although the English language allows the pun 'right = correct', and thereby the mnemonic that the right value is the opposite of failure, 'left' and 'right' are otherwise meant to be as neutral as possible. Ideally, Either carries no semantic value. This is what we would expect from a 'most abstract' representation.

Canonical representation #

As Thinking with Types does an admirable job of explaining, Either is part of a canonical representation of types, in which we can rewrite any type as a sum of products. In this context, a product is a pair, as also outlined by Bartosz Milewski.

The point is that we may rewrite any type to a combination of pairs and Either values, with the Either values on the outside. Such rewrites work both ways. You can rewrite any type to a combination of pairs and Eithers, or you can turn a combination of pairs and Eithers into more descriptive types. Since two-way translation is possible, we observe that the representations are isomorphic.

Isomorphism with Maybe #

Perhaps the simplest example is the natural transformation between Either () a and Maybe a, or, in F# syntax, Result<'a,unit> and 'a option. The Natural transformations article shows an F# example.

For illustration, we may translate this isomorphism to C# in order to help readers who are more comfortable with object-oriented C-like syntax. We may reuse the IgnoreLeft implementation, also from Natural transformations, to implement a translation from IEither<Unit, R> to IMaybe<R>.

public static IMaybe<R> ToMaybe<R>(this IEither<Unit, R> source)
{
    return source.IgnoreLeft();
}

In order to go the other way, we may define ToMaybe's counterpart like this:

public static IEither<Unit, T> ToEither<T>(this IMaybe<T> source)
{
    return source.Accept(new ToEitherVisitor<T>());
}

private class ToEitherVisitor<T> : IMaybeVisitor<T, IEither<Unit, T>>
{
    public IEither<Unit, T> VisitNothing => new Left<Unit, T>(Unit.Instance);

    public IEither<Unit, T> VisitJust(T just)
    {
        return new Right<Unit, T>(just);
    }
}

The following two parametrized tests demonstrate the isomorphisms.

[Theory, ClassData(typeof(UnitIntEithers))]
public void IsomorphismViaMaybe(IEither<Unit, int> expected)
{
    var actual = expected.ToMaybe().ToEither();
    Assert.Equal(expected, actual);
}

[Theory, ClassData(typeof(StringMaybes))]
public void IsomorphismViaEither(IMaybe<string> expected)
{
    var actual = expected.ToEither().ToMaybe();
    Assert.Equal(expected, actual);
}

The first test starts with an Either value, converts it to Maybe, and then converts the Maybe value back to an Either value. The second test starts with a Maybe value, converts it to Either, and converts it back to Maybe. In both cases, the actual value is equal to the expected value, which was also the original input.
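For comparison, here is a minimal Haskell sketch of the same round trip between Either () a and Maybe a. The function names toMaybe and toEither are chosen here to mirror the C# methods; they are not taken from any library.

```haskell
module Main where

-- Either () a -> Maybe a: Left carries no information, so it maps to Nothing.
toMaybe :: Either () a -> Maybe a
toMaybe = either (const Nothing) Just

-- Maybe a -> Either () a: Nothing becomes Left (), Just x becomes Right x.
toEither :: Maybe a -> Either () a
toEither = maybe (Left ()) Right

main :: IO ()
main = do
  let eithers = [Left (), Right (42 :: Int)]
      maybes  = [Nothing, Just "foo"]
  -- Each round trip should return the value it started with.
  print (map (toEither . toMaybe) eithers == eithers)
  print (map (toMaybe . toEither) maybes == maybes)
```

Both comparisons evaluate to True, which is the same property that the two parametrized tests check.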

Being able to map between Either and Maybe (or Result and Option, if you prefer) is hardly illuminating, since Maybe, too, is a general-purpose data structure. If this all seems a bit abstract, I can see why. Some more specific examples may help.

Calendar periods in F# #

Occasionally I run into the need to treat different calendar periods, such as days, months, and years, in a consistent way. To my recollection, the first time I described a model for that was in 2013. In short, in F# you can define a discriminated union for that purpose:

type Period = Year of int | Month of int * int | Day of int * int * int

A year is just a single integer, whereas a day is a triple, obviously with the components arranged in a sane order, with Day (2025, 11, 14) representing November 14, 2025.

Since this is a three-way discriminated union, whereas Either (or, in F#: Result) only has two mutually exclusive options, we need to nest one inside of another to represent this type in canonical form: Result<int, Result<(int * int), (int * (int * int))>>. Note that the 'outer' Result is a choice between a single integer (the year) and another Result value. The inner Result value then presents a choice between a month and a day.

Converting back and forth is straightforward:

let periodToCanonical = function
    | Year y -> Ok y
    | Month (y, m) -> Error (Ok (y, m))
    | Day (y, m, d) -> Error (Error (y, (m, d)))

let canonicalToPeriod = function
    | Ok y -> Year y
    | Error (Ok (y, m)) -> Month (y, m)
    | Error (Error (y, (m, d))) -> Day (y, m, d)

In F# these translations may strike you as odd, since the choice between Ok and Error hardly comes across as neutral. Even so, there's no loss of information when translating back and forth between the two representations.

> Month (2025, 12) |> periodToCanonical;;
val it: Result<int,Result<(int * int),(int * (int * int))>> = Error (Ok (2025, 12))

> Error (Ok (2025, 12)) |> canonicalToPeriod;;
val it: Period = Month (2025, 12)

The implied semantic difference between Ok and Error is one reason I favour Either over Result, when I have the choice. When translating to C#, I do have a choice, since there's no built-in coproduct data type.
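To illustrate the more neutral labels, here is a sketch of the same calendar-period isomorphism in Haskell, where the coproduct is called Either. The Period type below is an assumed translation of the F# union, with separate constructor fields instead of tuples, and CanonicalPeriod is a hypothetical alias introduced only for readability. The first alternative (the year) goes to Left, where the F# version uses Ok.

```haskell
module Main where

-- Assumed Haskell counterpart of the F# Period union.
data Period
  = Year Int
  | Month Int Int
  | Day Int Int Int
  deriving (Eq, Show)

-- Canonical sum-of-products form: Eithers on the outside, pairs inside.
type CanonicalPeriod = Either Int (Either (Int, Int) (Int, (Int, Int)))

periodToCanonical :: Period -> CanonicalPeriod
periodToCanonical (Year y)    = Left y
periodToCanonical (Month y m) = Right (Left (y, m))
periodToCanonical (Day y m d) = Right (Right (y, (m, d)))

canonicalToPeriod :: CanonicalPeriod -> Period
canonicalToPeriod (Left y)                    = Year y
canonicalToPeriod (Right (Left (y, m)))       = Month y m
canonicalToPeriod (Right (Right (y, (m, d)))) = Day y m d

main :: IO ()
main = do
  let periods = [Year 2025, Month 2025 12, Day 2025 11 14]
  -- Show the canonical forms, then check that the round trip loses nothing.
  print (map periodToCanonical periods)
  print (map (canonicalToPeriod . periodToCanonical) periods == periods)
```

With Left and Right, nothing in the encoding suggests that a month or a day is an error, which is the neutrality that Result lacks.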

Calendar periods in C# #

If you have been perusing the code base that accompanies Code That Fits in Your Head, you may have noticed an IPeriod interface. Since this is internal by default, I haven't discussed it much, but it's equivalent to the above F# discriminated union. It's used to enumerate restaurant reservations for a day, a month, or even a whole year.

Since this is a Visitor-encoded sum type, most of the information about data representation can be discerned from the Visitor interface:

internal interface IPeriodVisitor<T>
{
    T VisitYear(int year);
    T VisitMonth(int year, int month);
    T VisitDay(int year, int month, int day);
}

Given an appropriate Either definition, we can translate any IPeriod value into a nested Either, just like the above F# example.

private class ToCanonicalPeriodVisitor :
    IPeriodVisitor<IEither<int, IEither<(int, int), (int, (int, int))>>>
{
    public IEither<int, IEither<(int, int), (int, (int, int))>> VisitDay(
        int year,
        int month,
        int day)
    {
        return new Right<int, IEither<(int, int), (int, (int, int))>>(
            new Right<(int, int), (int, (int, int))>((year, (month, day))));
    }

    public IEither<int, IEither<(int, int), (int, (int, int))>> VisitMonth(
        int year,
        int month)
    {
        return new Right<int, IEither<(int, int), (int, (int, int))>>(
            new Left<(int, int), (int, (int, int))>((year, month)));
    }

    public IEither<int, IEither<(int, int), (int, (int, int))>> VisitYear(int year)
    {
        return new Left<int, IEither<(int, int), (int, (int, int))>>(year);
    }
}

Look out! I've encoded year, month, and day from left to right as I did before. This means that the leftmost alternative indicates a year, and the rightmost alternative in the nested Either value indicates a day. This is structurally equivalent to the above F# encoding. If, however, you are used to thinking about left values indicating error, and right values indicating success, then the two mappings are not similar. In the F# encoding, an 'outer' Ok value indicates a year, whereas here, a left value indicates a year. This may be confusing if you are expecting a right value, corresponding to Ok.

The structure is the same, but this may be something to be aware of going forward.

You can translate the other way without loss of information.

private class ToPeriodVisitor :
    IEitherVisitor<int, IEither<(int, int), (int, (int, int))>, IPeriod>
{
    public IPeriod VisitLeft(int left)
    {
        return new Year(left);
    }

    public IPeriod VisitRight(IEither<(int, int), (int, (int, int))> right)
    {
        return right.Accept(new ToMonthOrDayVisitor());
    }

    private class ToMonthOrDayVisitor :
        IEitherVisitor<(int, int), (int, (int, int)), IPeriod>
    {
        public IPeriod VisitLeft((int, int) left)
        {
            var (y, m) = left;
            return new Month(y, m);
        }

        public IPeriod VisitRight((int, (int, int)) right)
        {
            var (y, (m, d)) = right;
            return new Day(y, m, d);
        }
    }
}

It's all fairly elementary, although perhaps a bit cumbersome. The point of it all, should you have forgotten, is only to demonstrate that we can encode any sum type as a combination of Either values (and pairs).

A test like this demonstrates that these two translations comprise a true isomorphism:

[Theory, ClassData(typeof(PeriodData))]
public void IsomorphismViaEither(IPeriod expected)
{
    var actual = expected
        .Accept(new ToCanonicalPeriodVisitor())
        .Accept(new ToPeriodVisitor());
    Assert.Equal(expected, actual);
}

This test converts arbitrary IPeriod values to nested Either values, and then translates them back to the same IPeriod value.

Payment types in F# #

Perhaps you are not convinced by a single example, so let's look at one more. In 2016 I was writing some code to integrate with a payment gateway. To model the various options that the gateway made available, I defined a discriminated union, repeated here:

type PaymentService = { Name : string; Action : string }

type PaymentType =
| Individual of PaymentService
| Parent of PaymentService
| Child of originalTransactionKey : string * paymentService : PaymentService

This looks a little more complicated, since it also makes use of the custom PaymentService type. Even so, converting to the canonical sum of products representation, and back again, is straightforward:

let paymentToCanonical = function
    | Individual { Name = n; Action = a } -> Ok (n, a)
    | Parent { Name = n; Action = a } -> Error (Ok (n, a))
    | Child (k, { Name = n; Action = a }) -> Error (Error (k, (n, a)))

let canonicalToPayment = function
    | Ok (n, a) -> Individual { Name = n; Action = a }
    | Error (Ok (n, a)) -> Parent { Name = n; Action = a }
    | Error (Error (k, (n, a))) -> Child (k, { Name = n; Action = a })

Again, there's no loss of information going back and forth between these two representations, even if the use of the Error case seems confusing.

> Child ("1234ABCD", { Name = "MasterCard"; Action = "Pay" }) |> paymentToCanonical;;
val it:
  Result<(string * string),
         Result<(string * string),(string * (string * string))>> =
  Error (Error ("1234ABCD", ("MasterCard", "Pay")))

> Error (Error ("1234ABCD", ("MasterCard", "Pay"))) |> canonicalToPayment;;
val it: PaymentType = Child ("1234ABCD", { Name = "MasterCard"
                                           Action = "Pay" })

Again, you might wonder why anyone would ever do that, and you'd be right. As a general rule, there's no reason to do this, and if you think that the canonical representation is more abstract, and harder to understand, I'm not going to argue. The point is, rather, that products (pairs) and coproducts (Either values) are universal building blocks of algebraic data types.

Payment types in C# #

As in the calendar-period example, it's possible to demonstrate the concept in alternative programming languages. For the benefit of programmers with a background in C-like languages, I once more present the example in C#. The starting point is where Visitor as a sum type ends. The code is also available on GitHub.

Translation to canonical form may be done with a Visitor like this:

private class ToCanonicalPaymentTypeVisitor :
    IPaymentTypeVisitor<
        IEither<(string, string),
            IEither<(string, string), (string, (string, string))>>>
{
    public IEither<(string, string),
        IEither<(string, string), (string, (string, string))>> VisitChild(
            ChildPaymentService child)
    {
        return
            new Right<(string, string),
                IEither<(string, string), (string, (string, string))>>(
                new Right<(string, string), (string, (string, string))>(
                    (
                        child.OriginalTransactionKey,
                        (
                            child.PaymentService.Name,
                            child.PaymentService.Action
                        )
                    )));
    }

    public IEither<(string, string),
        IEither<(string, string), (string, (string, string))>> VisitIndividual(
            PaymentService individual)
    {
        return
            new Left<(string, string),
                IEither<(string, string), (string, (string, string))>>(
                (individual.Name, individual.Action));
    }

    public IEither<(string, string),
        IEither<(string, string), (string, (string, string))>> VisitParent(
            PaymentService parent)
    {
        return
            new Right<(string, string),
                IEither<(string, string), (string, (string, string))>>(
                new Left<(string, string), (string, (string, string))>(
                    (parent.Name, parent.Action)));
    }
}

If you find the method signatures horrible and near-unreadable, I don't blame you. This is an excellent example of how C# (together with similar languages) inhabits the zone of ceremony; compare this ToCanonicalPaymentTypeVisitor to the above F# paymentToCanonical function, which performs exactly the same work while being as strongly statically typed.

Another Visitor translates the other way.

private class FromCanonicalPaymentTypeVisitor :
    IEitherVisitor<
        (string, string),
        IEither<(string, string), (string, (string, string))>,
        IPaymentType>
{
    public IPaymentType VisitLeft((string, string) left)
    {
        var (name, action) = left;
        return new Individual(new PaymentService(name, action));
    }

    public IPaymentType VisitRight(
        IEither<(string, string), (string, (string, string))> right)
    {
        return right.Accept(new CanonicalParentChildPaymentTypeVisitor());
    }

    private class CanonicalParentChildPaymentTypeVisitor :
        IEitherVisitor<(string, string), (string, (string, string)), IPaymentType>
    {
        public IPaymentType VisitLeft((string, string) left)
        {
            var (name, action) = left;
            return new Parent(new PaymentService(name, action));
        }

        public IPaymentType VisitRight((string, (string, string)) right)
        {
            var (k, (n, a)) = right;
            return new Child(new ChildPaymentService(k, new PaymentService(n, a)));
        }
    }
}

Here I even had to split one generic type signature over multiple lines, in order to prevent horizontal scrolling. At least you have to grant the C# language that it's flexible enough to allow that.

Now that it's possible to translate both ways, a simple test demonstrates the isomorphism.

[Theory, ClassData(typeof(PaymentTypes))]
public void IsomorphismViaEither(IPaymentType expected)
{
    var actual = expected
        .Accept(new ToCanonicalPaymentTypeVisitor())
        .Accept(new FromCanonicalPaymentTypeVisitor());
    Assert.Equal(expected, actual);
}

This test translates an arbitrary IPaymentType into canonical form, then translates that representation back to an IPaymentType value, and checks that the two values are identical. There's no loss of information either way.

This and the previous example may seem like a detour around the central point that I'm trying to make: That Result (or Either) is the most boring sum type. I could have made the point, including examples, in a few lines of Haskell, but that language is so efficient at that kind of work that I fear that the syntax would look like pseudocode to programmers used to C-like languages. Additionally, Haskell programmers don't need my help writing small mappings like the ones shown here.

Conclusion #

Result (or Either) is a fundamental building block in the context of algebraic data types. As such, this type may be considered the most boring sum type, since it effectively represents any other sum type, up to isomorphism.

Object-oriented programmers trying to learn functional programming sometimes struggle to see the light. One barrier to understanding the power of algebraic data types may be related to starting a learning path with a Result type. Since Results are equivalent to exceptions, people understandably have a hard time seeing how Result values are better. Since Result types are so abstract, it can be difficult to see the wider perspective. This doubly applies if a teacher leads with Result, with its success/failure semantics, rather than with Either and its more neutral left/right labels.

Since Either is the fundamental sum type, it follows that any other sum type, being more specialized, carries more meaning, and therefore may be more interesting, and more illuminating as examples. In this article, I've used a few simple, but specialized sum types with the intention to help the reader see the difference.

Empirical software prototyping

How do you add tests to a proof-of-concept? Should you?

This is the second article in a small series on empirical test-after development. I'll try to answer an occasionally-asked question: Should one use test-driven development (TDD) for prototyping?

There are variations on this question, but it tends to come up when discussing TDD. Some software thought leaders proclaim that you should always take advantage of TDD when writing code. The short response is that there are exceptions to that rule. Those thought leaders know that, too, but they choose to communicate the way that they do in order to make a point. I don't blame them, and I use a similar style of communication from time to time. If you hedge every sentence you utter with qualifications, the message often disappears in unclear language. Thus, when someone admonishes that you should always follow TDD, what they really mean (I suppose; I'm not a telepath) is that you should predominantly use TDD. More than 80% of the time.

This is a common teaching tool. A competent teacher takes into account the skill level of a student. A new learner has to start with the basics before advanced topics. Early in the learning process, there's no room for sophistication. Even when a teacher understands that there are exceptions, he or she starts with a general rule, like 'you should always do TDD'.

While this may seem like a digression, this detour answers most of the questions related to software prototyping. People find it difficult to apply TDD when developing a prototype. I do, too. So don't.

Prototyping or proof-of-concept? #

I've already committed the sin of using prototype and proof-of-concept interchangeably. This only reflects how I use these terms in conversation. While I'm aware that the internet offers articles to help me distinguish, I find the differences too subtle to be of use when communicating with other people. Even if I learn the fine details that separate one from the other, I can't be sure that the person I'm talking to shares that understanding.

Since prototype is easier to say, I tend to use that term more than proof-of-concept. In any case, a prototype in this context is an exploration of an idea. You surmise that it's possible to do something in a certain way, but you're not sure. Before committing to the uncertain idea, you develop an isolated code base to vet the concept.

This could be an idea for an algorithm, use of a new technology such as a framework or reusable library, a different cloud platform, or even a new programming language.

Such exploration is already challenging in itself. How is the API of the library structured? Should you call IsFooble or HasFooble here? How do you write a for loop in APL? How does one even compile a Java program? How do you programmatically provision new resources in Microsoft Fabric? How do you convert a JPG file to PDF on the server?

There are plenty of questions like these where you don't even know the shape of things. The point of the exercise is often to figure those things out. When you don't know how APIs are organized, or which technologies are available to you, writing tests first is difficult. No wonder people sometimes ask me about this.

Code to throw away #

The very nature of a prototype is that it's an experiment designed to explore an idea. The safest way to engage with a prototype is to create an isolated code base for that particular purpose. A prototype is not an MVP or an early version of the product. It is a deliberately unstructured exploration of what's possible. The entire purpose of a prototype is to learn. Often the exploration process is time-boxed.

If the prototype turns out to be successful, you may proceed to implement the idea in your production code base. Even if you didn't use TDD for the prototype, you should now have learned enough that you can apply TDD for the production implementation.

The most common obstacle to this chain of events, I understand, is that 'bosses' demand that a successful prototype be put into production. Try to resist such demands. It often helps planning for this from the outset. If you can, do the prototype in a way that effectively prevents such predictable demands. If you're exploring the viability of a new algorithm, write it in an alternative programming language. For example, I've written prototypes in Haskell, which very effectively prevents demands that the code be put into production.

If your organization is a little behind the cutting edge, you can also write the prototype in a newer version of your language or run-time. Use functional programming if you normally use object-oriented design. Or you may pick an auxiliary technology incompatible with how you normally do things: Use the 'wrong' JSON serializer library, an in-memory database, write a command-line program if you need a GUI, or vice versa.

You're the technical expert. Make technical decisions. Surely, you can come up with something that sounds convincing enough to a non-technical stakeholder to prevent putting the prototype into production.

To be sure we're on firm moral ground here: I'm not advocating that you should be dishonest, or work against the interests of your organization. Rather, I suggest that you act politically. Understand what motivates other people. Non-technical stakeholders usually don't have the insight to understand why a successful prototype shouldn't be promoted to production code. Unfortunately, they often view programmers as typists, and with that view, it seems wasteful to repeat the work of typing in the prototype code. Typing, however, is not a bottleneck, but it can be hard to convince other people of this.

If all else fails, and you're forced to promote the prototype to production code, you now have a piece of legacy code on hand, in which case the techniques outlined in the previous article should prove useful.

Prototyping in existing code bases #

A special case occurs when you need to do a prototype in an existing code base. You already have a big, complicated system, and you would like to explore whether a particular idea is applicable in that context. Perhaps data access is currently too slow, and you have an idea of how to speed things up, for example by speculative prefetching. When the problem is one of performance, you'll need to measure in a realistic environment. This may prevent you from creating an isolated prototype code base.

In such cases, use Git to your advantage. Make a new prototype branch and work there. You may deliberately choose to make it a long-lived feature branch. Once the prototype is done, the code may now be so out of sync with master that 'merge conflicts' sounds like a plausible excuse to a non-technical stakeholder. As above, be political.

In any case, don't merge the prototype branch, even if you could. Instead, use the knowledge gained during the prototype work to re-implement the new idea, this time using empirical software engineering techniques like TDD.

Conclusion #

Prototyping is usually antithetical to TDD. On the other hand, TDD is an effective empirical method for software development. Without it, you have to seek other answers to the question: How do we know that this works?

Due to the explorative nature of prototyping, testing of the prototype tends to be explorative as well. Start up the prototype, poke at it, see if it behaves as expected. While you do gain a limited amount of empirical knowledge from such a process, it's unsystematic and non-repeatable, so little corroboration of hypothesis takes place. Therefore, once the prototype work is done, it's important to proceed on firmer footing if the prototype was successful.

The safest way is to put the prototype to the side, but use the knowledge to test-drive the production version of the idea. This may require a bit of political manoeuvring. If that fails, and you're forced to promote the prototype to production use, you may use the techniques for adding empirical Characterization Tests.

100% coverage is not that trivial

Dispelling a myth I helped propagate.

Most people who have been around automated testing for a few years understand that code coverage is a useless target measure. Unfortunately, through a game of Chinese whispers, this message often degenerates to the simpler, but incorrect, notion that code coverage is useless.

As I've already covered in that article, code coverage may be useful for other reasons. That's not my agenda for this article. Rather, something about this discussion has been bothering me for a long time.

Have you ever had an uneasy feeling about a topic, without being able to put your finger on exactly what the problem is? This happens to me regularly. I'm going along with the accepted narrative until the cognitive dissonance becomes so conspicuous that I can no longer ignore it.

In this article, I'll grapple with the notion that 'reaching 100% code coverage is easy.'

Origins #

This tends to come up when discussing code coverage. People will say that 100% code coverage isn't a useful measure, because it's easy to reach 100%. I have used that argument myself. Fortunately I also cited my influences in 2015; in this case Martin Fowler's Assertion Free Testing.

"[...] of course you can do this and have 100% code coverage - which is one reason why you have to be careful on interpreting code coverage data."

This may not be the only source of such a claim, but it may have been a contributing factor. There's little wrong with Fowler's article, which doesn't make any groundless claims, but I can imagine how semantic diffusion works on an idea like that.

Fowler also wrote that it's "a story from a friend of a friend." When the source of a story is twice-removed like that, alarm bells should go off. This is the stuff that urban legends are made of, and I wonder if this isn't rather an example of 'programmer folk wisdom'. I've heard variations of that story many times over the years, from various people.

It's not that easy #

Even though I've helped promulgate the idea that reaching 100% code coverage is easy if you cheat, I now realise that that's an overstatement. Even if you write no assertions, and surround the test code with a try/catch block, you can't trivially reach 100% coverage. There are going to be branches that you can't reach.

This often happens in real code bases that query databases, call web services, and so on. If a branch depends on indirect input, you can't force execution down that path just by suppressing exceptions.

An example is warranted.

Example #

Consider this ReadReservation method in the SqlReservationsRepository class from the code base that accompanies my book Code That Fits in Your Head:

    public async Task<Reservation?> ReadReservation(int restaurantId, Guid id)
    {
        const string readByIdSql = @"
            SELECT [PublicId], [At], [Name], [Email], [Quantity]
            FROM [dbo].[Reservations]
            WHERE [PublicId] = @id";

        using var conn = new SqlConnection(ConnectionString);
        using var cmd = new SqlCommand(readByIdSql, conn);
        cmd.Parameters.AddWithValue("@id", id);

        await conn.OpenAsync().ConfigureAwait(false);
        using var rdr =
            await cmd.ExecuteReaderAsync().ConfigureAwait(false);
        if (!rdr.Read())
            return null;

        return ReadReservationRow(rdr);
    }

Even though it only has a cyclomatic complexity of 2, most of it is unreachable to a test that tries to avoid hard work.

You can try to cheat in the suggested way by adding a test like this:

    [Fact]
    public async Task ReadReservation()
    {
        try
        {
            var sut = new SqlReservationsRepository("dunno");
            var actual = await sut.ReadReservation(0, Guid.NewGuid());
        }
        catch
        {
        }
    }

Granted, this test passes, and if you had 0% code coverage before, it does improve the metric slightly. Interestingly, the Coverlet collector for .NET reports that only the first line, which creates the conn variable, is covered. I wonder, though, if this is due to some kind of compiler optimization associated with asynchronous execution that the coverage tool fails to capture.

More understandably, execution reaches conn.OpenAsync() and crashes, since the test hasn't provided a connection to a real database. This is what happens if you run the test without the surrounding try/catch block.

Coverlet reports 18% coverage, and that's as high as you can get with 'the easy hack'. 100% is some distance away.
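The effect isn't specific to SQL Server. As a minimal sketch, assume a hypothetical IReservationStore interface that stands in for the database. None of the types below exist in the book's code base; I made them up for illustration. The Visited list is only a crude stand-in for what a coverage tool would report.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a database: the source of indirect input.
public interface IReservationStore
{
    string? Find(Guid id);
}

// Always fails, like a connection string that points nowhere.
public sealed class UnreachableStore : IReservationStore
{
    public string? Find(Guid id) =>
        throw new InvalidOperationException("Can't connect.");
}

public sealed class Repository
{
    private readonly IReservationStore store;

    public Repository(IReservationStore store)
    {
        this.store = store;
    }

    // Records which steps ran; a poor man's coverage report.
    public List<string> Visited { get; } = new List<string>();

    public string Describe(Guid id)
    {
        Visited.Add("query");
        var name = store.Find(id); // The indirect input arrives here.
        if (name is null)
        {
            Visited.Add("not found");
            return "No reservation";
        }

        Visited.Add("found");
        return "Reservation for " + name;
    }
}

public static class CheatingTest
{
    // No assertions, and all exceptions swallowed.
    public static IReadOnlyList<string> Run()
    {
        var sut = new Repository(new UnreachableStore());
        try
        {
            sut.Describe(Guid.NewGuid());
        }
        catch
        {
        }
        return sut.Visited;
    }
}
```

Calling CheatingTest.Run() returns a list that contains only "query". Neither branch after the call to Find is reached, no matter how many exceptions the test swallows.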

Toward better coverage #

You may protest that we can do better than this. After all, with utter disregard for using proper arguments, I passed "dunno" as a connection string. Clearly that doesn't work.

Couldn't we easily get to 100% by providing a proper connection string? Perhaps, but what's a proper connection string?

It doesn't help if you pass a well-formed connection string instead of "dunno". In fact, it will only slow down the test, because then conn.OpenAsync() will attempt to open the connection. If the database is unreachable, that statement will eventually time out and fail with an exception.

Couldn't you, though, give it a connection string to a real database?

Yes, you could. If you do that, though, you should make sure that the database has a schema compatible with readByIdSql. Otherwise, the query will fail. What happens if the implied schema changes? Now you need to make sure that the database is updated, too. This sounds error-prone. Perhaps you should automate that.

Furthermore, you may easily cover the branch that returns null. After all, when you query for Guid.NewGuid(), that value is not going to be in the table. On the other hand, how will you cover the other branch; the one that returns a row?

You can only do that if you know the ID of a value already in that table. You may write a second test that queries for that known value. Now you have 100% coverage.

What you have done at this point, however, is no longer an easy cheat to get to 100%. You have, essentially, added integration tests of the data access subsystem.

How about adding some assertions to make the tests useful?

Integration tests for 100% #

In most systems, you will at least need some integration tests to reach 100% code coverage. While the code shown in Code That Fits in Your Head doesn't have 100% code coverage (that was never my goal), it looks quite good. (It's hard to get a single number, because Coverlet apparently can't measure coverage by running multiple test projects, so I can only get partial results. Coverage is probably better than 80%, I estimate.)

To test ReadReservation I wrote integration tests that automate setup and tear-down of a local test-specific database. The book, and the Git repository that accompanies it, has all the details.

Getting to 100%, or even 80%, requires dedicated work. In a realistic code base, the claim that reaching 100% is trivial is hardly true.

Conclusion #

Programmer folk wisdom 'knows' that code coverage is useless. One argument is that any fool can reach 100% by writing assertion-free tests surrounded by try/catch blocks.

This is hardly true in most significant code bases. Whenever you deal with indirect input, try/catch is insufficient to control whereto execution branches.

This suggests that high code-coverage numbers are good, and low numbers bad. What constitutes high and low is context-dependent. What seems to remain true, however, is that code coverage is a useless target. This has little to do with how trivial it is to reach 100%, but rather everything to do with how humans respond to incentives.

Empirical Characterization Testing

Gathering empirical evidence while adding tests to legacy code.

This article is part of a short series on empirical test-after techniques. Sometimes, test-driven development (TDD) is impractical. This often happens when faced with legacy code. Although there's a dearth of hard data, I guess that most code in the world falls into this category. Other software thought leaders seem to suggest the same notion.

For the purposes of this discussion, the definition of legacy code is code without automated tests.

"Code without tests is bad code. It doesn't matter how well written it is; it doesn't matter how pretty or object-oriented or well-encapsulated it is. With tests, we can change the behavior of our code quickly and verifiably. Without them, we really don't know if our code is getting better or worse."

As Michael Feathers suggests, the accumulation of knowledge is at the root of this definition. As I outlined in Epistemology of software, tests are the source of empirical evidence. In principle it's possible to apply a rigorous testing regimen with manual testing, but in most cases this is (also) impractical for reasons that are different from the barriers to automated testing. In the rest of this article, I'll exclusively discuss automated testing.

We may reasonably extend the definition of legacy code to a code base without adequate testing support.

When do we have enough tests? #

What, exactly, is adequate testing support? The answer is the same as in science, overall. When do you have enough scientific evidence that a particular theory is widely accepted? There's no universal answer to that, and no, a p-value less than 0.05 isn't the answer, either.

In short, adequate empirical evidence is when a hypothesis is sufficiently corroborated to be accepted. Keep in mind that science can never prove a theory correct, but performing experiments against falsifiable predictions can disprove it. This applies to software, too.

"Testing shows the presence, not the absence of bugs."

The terminology of hypothesis, corroboration, etc. may be opaque to many software developers. Here's what it means in terms of software engineering: You have unspoken and implicit hypotheses about the code you're writing. Usually, once you're done with a task, your hypothesis is that the code makes the software work as intended. Anyone who's written more than a hello-world program knows, however, that believing the code to be correct is not enough. How many times have you written code that you assumed correct, only to find that it was not?

That's the lack of knowledge that testing attempts to address. Even manual testing. A test is an experiment that produces empirical evidence. As Dijkstra quipped, passing tests don't prove the software correct, but the more passing tests we have, the more confidence we gain. At some point, the passing tests provide enough confidence that you and other stakeholders consider it sensible to release or deploy the software. We say that the failure to find failing tests corroborates our hypothesis that the software works as intended.

It's not the absolute lack of automated tests that characterizes legacy code. Rather, it's the lack of adequate test coverage: you don't have enough tests. Thus, when working with legacy code, you want to add tests after the fact.

Characterization Test recipes #

A test written after the fact against a legacy code base is called a Characterization Test, because it characterizes (i.e. describes) the behaviour of the system under test (SUT) at the time it was written. It's not a given that this behaviour is correct or desirable.

Michael Feathers gives this recipe for writing a Characterization Test:

- "Use a piece of code in a test harness.

- "Write an assertion that you know will fail.

- "Let the failure tell you what the behavior is.

- "Change the test so that it expects the behavior that the code produces.

- "Repeat."

Notice the second step: "Write an assertion that you know will fail." Why is that important? Why not write the 'correct' assertion from the outset?

The reason is the same as I outlined in Epistemology of software: It happens with surprising regularity that you inadvertently write a tautological assertion. You could also make other mistakes, but writing a failing test is a falsifiable experiment. In this case, the implied hypothesis is that the test will fail. If it does not fail, you've falsified the implied prediction. To paraphrase Dijkstra, you've proven the test wrong.

If, on the other hand, the test fails, you've failed to falsify the hypothesis. You have not proven the test correct, but you've failed in proving it wrong. Epistemologically, that's the best result you may hope for.
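To make the recipe concrete, here's a minimal sketch. LegacyPricing is a hypothetical piece of legacy code invented for this illustration, and Check.Equal is a bare-bones stand-in for a test framework's equality assertion, so that the example needs no external packages.

```csharp
using System;

// Hypothetical legacy code; nobody remembers the exact rules.
public static class LegacyPricing
{
    public static int Quote(int quantity)
    {
        var total = quantity * 12;
        if (quantity >= 10)
            total -= total / 10;
        return total;
    }
}

// Minimal stand-in for a test framework's equality assertion.
public static class Check
{
    public static void Equal(int expected, int actual)
    {
        if (expected != actual)
            throw new InvalidOperationException(
                $"Expected: {expected}. Actual: {actual}.");
    }
}

public static class CharacterizationSteps
{
    // Step 2: write an assertion that you know will fail.
    public static string WriteFailingAssertion()
    {
        try
        {
            Check.Equal(-1, LegacyPricing.Quote(10));
            // If execution gets here, the prediction was falsified.
            return "No failure. The assertion itself is suspect.";
        }
        catch (InvalidOperationException e)
        {
            // Step 3: let the failure tell you what the behaviour is.
            return e.Message;
        }
    }

    // Step 4: change the test so that it expects what the code produces.
    public static void CharacterizeQuoteOfTen()
    {
        Check.Equal(108, LegacyPricing.Quote(10));
    }
}
```

WriteFailingAssertion returns the failure message "Expected: -1. Actual: 108.", which supplies the value that CharacterizeQuoteOfTen then pins down. In a real test suite the test runner reports the failure, so you wouldn't catch the exception yourself.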

I'm a little uneasy about the above recipe, because it involves a step where you change the test code to pass the test. How can you know that you haven't, without meaning to, replaced a proper assertion with a tautological assertion?

For that reason, I sometimes follow a variation of the recipe:

- Write a test that exercises the SUT, including the correct assertion you have in mind.

- Run the test to see it pass.

- Sabotage the SUT so that it fails the assertion. If there are several assertions, do this for each, one after the other.

- Run the test to see it fail.

- Revert the sabotage.

- Run the test again to see it pass.

- Repeat.

The last test run is not strictly necessary if you've been rigorous about how you revert the sabotage, but psychologically, it gives me a better sense that all is good if I can end each cycle with a green test suite.
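The variation can be sketched like this. Greeter is a hypothetical SUT made up for the occasion. In practice you'd temporarily edit the SUT in place and then revert the change; here, the sabotaged version is a separate method, and the tests take the SUT as a function, only to keep the sketch self-contained.

```csharp
using System;

public static class Greeter
{
    // Hypothetical SUT.
    public static string Greet(string name) =>
        "Hello, " + name.Trim() + ".";

    // The same SUT with a temporary, deliberate defect:
    // it no longer trims the name.
    public static string SabotagedGreet(string name) =>
        "Hello, " + name + ".";
}

public static class GreeterTests
{
    // The test with the assertion you have in mind.
    // Returns true when the assertion holds.
    public static bool TrimsName(Func<string, string> sut)
    {
        var actual = sut(" Ada ");
        return actual == "Hello, Ada.";
    }

    // For contrast: a tautological assertion that compares
    // the SUT's output to itself.
    public static bool Tautological(Func<string, string> sut)
    {
        var actual = sut(" Ada ");
        return actual == sut(" Ada ");
    }
}
```

TrimsName passes against Greet and fails against SabotagedGreet, which is what you want to see. Tautological, on the other hand, still passes against SabotagedGreet. That's how the sabotage step exposes an assertion that can't fail.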

Example #

I don't get to interact that much with legacy code, but even so, I find myself writing Characterization Tests with surprising regularity. One example was when I was characterizing the song recommendations example. If you have the Git repository that accompanies that article series, you can see that the initial setup is adding one Characterization Test after the other. Even so, as I follow a policy of not adding commits with failing tests, you can't see the details of the process leading to each commit.

Perhaps a better example can be found in the Git repository that accompanies Code That Fits in Your Head. If you own the book, you also have access to the repository. In commit d66bc89443dc10a418837c0ae5b85e06272bd12b I wrote this message:

"Remove PostOffice dependency from Controller

"Instead, the PostOffice behaviour is now the responsibility of the EmailingReservationsRepository Decorator, which is configured in Startup.

"I meticulously edited the unit tests and introduced new unit tests as necessary. All new unit tests I added by following the checklist for Characterisation Tests, including seeing all the assertions fail by temporarily editing the SUT."

Notice the last paragraph, which is quite typical for how I tend to document my process when it's otherwise invisible in the Git history. Here's a breakdown of the process.

I first created the EmailingReservationsRepository without tests. This class is a Decorator, so quite a bit of it is boilerplate code. For instance, one method looks like this:

    public Task<Reservation?> ReadReservation(int restaurantId, Guid id)
    {
        return Inner.ReadReservation(restaurantId, id);
    }

That's usually the case with such Decorators, but then one of the methods turned out like this:

    public async Task Update(int restaurantId, Reservation reservation)
    {
        if (reservation is null)
            throw new ArgumentNullException(nameof(reservation));

        var existing =
            await Inner.ReadReservation(restaurantId, reservation.Id)
                .ConfigureAwait(false);
        if (existing is { } && existing.Email != reservation.Email)
            await PostOffice
                .EmailReservationUpdating(restaurantId, existing)
                .ConfigureAwait(false);

        await Inner.Update(restaurantId, reservation)
            .ConfigureAwait(false);

        await PostOffice.EmailReservationUpdated(restaurantId, reservation)
            .ConfigureAwait(false);
    }

I then realized that I should probably cover this class with some tests after all, which I then proceeded to do in the above commit.

Consider one of the state-based Characterization Tests I added to cover the Update method.