ploeh blog danish software design

Bifunctors

Bifunctors are like functors, only they vary in two dimensions instead of one. An article for object-oriented programmers.

This article is a continuation of the article series about functors and about applicative functors. In this article, you'll learn about a generalisation called a bifunctor. The prefix bi typically indicates that there are two of something, and that's also the case here.

As you've already seen in the functor articles, a functor is a mappable container of generic values, like Foo<T>, where the type of the contained value(s) can be any generic type T. A bifunctor is just a container with two independent generic types, like Bar<T, U>. If you can map each of the types independently of the other, you may have a bifunctor.

The two most common bifunctors are tuples and Either.

Maps #

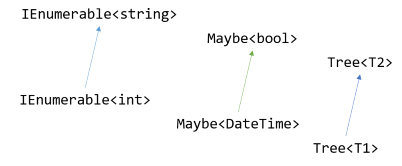

A normal functor is based on a structure-preserving map of the contents within a container. You can, for example, translate an IEnumerable<int> to an IEnumerable<string>, or a Maybe<DateTime> to a Maybe<bool>. The axis of variability is the generic type argument T. You can translate T1 to T2 inside a container, but the type of the container remains the same: you can translate Tree<T1> to Tree<T2>, but it remains a Tree<>.

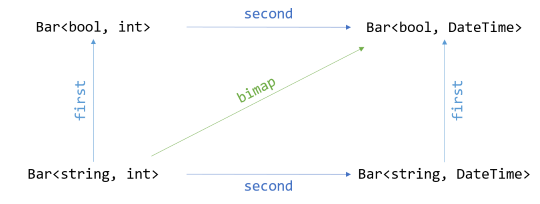

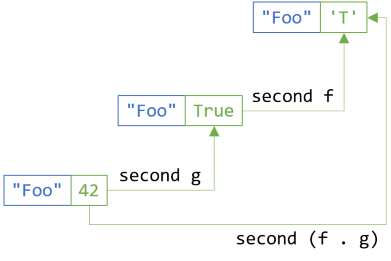

A bifunctor involves a pair of maps, one for each generic type. You can map a Bar<string, int> to a Bar<bool, int>, or to a Bar<string, DateTime>, or even to a Bar<bool, DateTime>. Notice that the last example, mapping from Bar<string, int> to Bar<bool, DateTime>, could be viewed as translating both axes simultaneously.

In Haskell, the two maps are called first and second, while the 'simultaneous' map is called bimap.

The first translation translates the first, or left-most, value in the container. You can use it to map Bar<string, int> to a Bar<bool, int>. In C#, we could decide to call the method SelectFirst, or SelectLeft, in order to align with the C# naming convention of calling the functor morphism Select.

Likewise, the second map translates the second, or right-most, value in the container. This is where you map Bar<string, int> to Bar<string, DateTime>. In C#, we could call the method SelectSecond, or SelectRight.

The bimap function maps both values in the container in one go. This corresponds to a translation from Bar<string, int> to Bar<bool, DateTime>. In C#, we could call the method SelectBoth. There are no established naming conventions for bifunctors in C# that I know of, so these names are just some that I made up.
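To make the three maps concrete, here's a minimal Haskell sketch that uses the pair instance from Data.Bifunctor in base. The pair (String, Int) stands in for Bar<string, int>; the sample value bar and the functions isLong and describe are made up for illustration.

```haskell
import Data.Bifunctor (bimap, first, second)

-- Hypothetical sample value; the pair plays the role of Bar<string, int>.
bar :: (String, Int)
bar = ("foo", 42)

-- Hypothetical translations, one per axis.
isLong :: String -> Bool
isLong s = length s > 5

describe :: Int -> String
describe n = "n=" ++ show n

main :: IO ()
main = do
  print (first isLong bar)           -- maps only the left value: (False,42)
  print (second describe bar)        -- maps only the right value: ("foo","n=42")
  print (bimap isLong describe bar)  -- maps both in one go: (False,"n=42")
```

In each case the result is still a pair; only the types of the contained values change.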

You'll see examples of how to implement and use such functions in the next articles, which cover the tuple and Either bifunctors.

Other bifunctors exist, but those two are the most common.

Identity laws #

As is the case with functors, laws govern bifunctors. Some of the functor laws carry over, but are simply repeated over both axes, while other laws are generalisations of the functor laws. For example, the first functor law states that if you translate a container with the identity function, the result is the original input. This generalises to bifunctors as well:

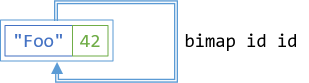

bimap id id ≡ id

This just states that if you translate both axes using the endomorphic Identity, it's equivalent to applying the Identity.

Using C# syntax, you could express the law like this:

bf.SelectBoth(id, id) == bf;

Here, bf is some bifunctor, and id is the identity function. The point is that if you translate over both axes, but actually don't perform a real translation, nothing happens.

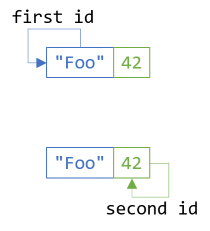

Likewise, if you consider a bifunctor a functor over two dimensions, the first functor law should hold for both:

    first id ≡ id
    second id ≡ id

Both of those equalities only restate the first functor law for each dimension. If you map an axis with the identity function, nothing happens.

In C#, you can express both laws like this:

    bf.SelectFirst(id) == bf;
    bf.SelectSecond(id) == bf;

When calling SelectFirst, you translate only the first axis while you keep the second axis constant. When calling SelectSecond it's the other way around: you translate only the second axis while keeping the first axis constant. In both cases, though, if you use the identity function for the translation, you effectively keep the mapped dimension constant as well. Therefore, one would expect the result to be the same as the input.
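As a sanity check, you can spot-check the identity laws in Haskell against the two common bifunctors. This is only a sketch: the helper identityLawsHold and the sample values are hypothetical, and a few passing examples illustrate the laws without proving them.

```haskell
import Data.Bifunctor (Bifunctor, bimap, first, second)

-- Hypothetical helper: checks all three identity laws for a single value.
identityLawsHold :: (Bifunctor p, Eq (p a b)) => p a b -> Bool
identityLawsHold bf =
  bimap id id bf == bf
    && first id bf == bf
    && second id bf == bf

main :: IO ()
main = do
  print (identityLawsHold ("foo", 42 :: Int))                     -- True
  print (identityLawsHold (Left "error" :: Either String Int))    -- True
  print (identityLawsHold (Right 42 :: Either String Int))        -- True
```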

Consistency law #

As you'll see in the articles on tuple and Either bifunctors, you can derive bimap or SelectBoth from first/SelectFirst and second/SelectSecond, or the other way around. If, however, you decide to implement all three functions, they must act in a consistent manner. The name Consistency law, however, is entirely my own invention. If it has a more well-known name, I'm not aware of it.

In pseudo-Haskell syntax, you can express the law like this:

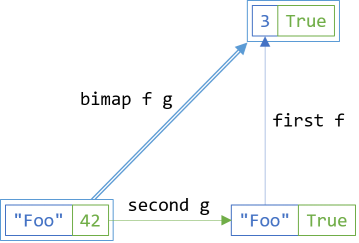

bimap f g ≡ first f . second g

This states that mapping (using the functions f and g) simultaneously should produce the same result as mapping using an intermediary step.

In C#, you could express it like this:

bf.SelectBoth(f, g) == bf.SelectSecond(g).SelectFirst(f);

You can project the input bifunctor bf using both f and g in a single step, or you can first translate the second dimension with g and then subsequently map that intermediary result along the first axis with f.

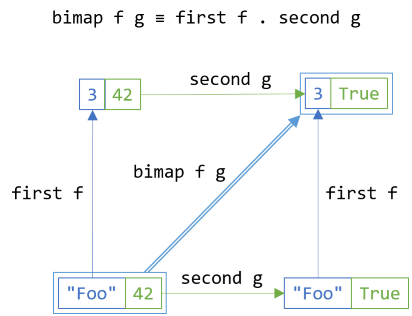

The above diagram ought to commute:

It shouldn't matter whether the intermediary step is applying f along the first axis or g along the second axis. In C#, we can write it like this:

bf.SelectFirst(f).SelectSecond(g) == bf.SelectSecond(g).SelectFirst(f);

On the left-hand side, you first translate the bifunctor bf along the first axis, using f, and then translate that intermediary result along the second axis, using g. On the right-hand side, you first project bf along the second axis, using g, and then map that intermediary result over the first dimension, using f.

Regardless of order of translation, the result should be the same.
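The same kind of spot check works for the Consistency law. In this hedged Haskell sketch, consistencyHolds is a hypothetical helper that compares bimap with both orders of the two-step translation; length and even are arbitrary stand-ins for f and g.

```haskell
import Data.Bifunctor (Bifunctor, bimap, first, second)

-- Hypothetical helper: bimap must agree with both two-step translations.
consistencyHolds
  :: (Bifunctor p, Eq (p c d)) => (a -> c) -> (b -> d) -> p a b -> Bool
consistencyHolds f g bf =
  bimap f g bf == (first f . second g) bf
    && bimap f g bf == (second g . first f) bf

main :: IO ()
main = do
  -- f = length translates the first axis, g = even the second.
  print (consistencyHolds length even ("foo", 42 :: Int))                    -- True
  print (consistencyHolds length even (Left "error" :: Either String Int))   -- True
  print (consistencyHolds length even (Right 42 :: Either String Int))       -- True
```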

Composition laws #

Similar to how the first functor law generalises to bifunctors, the second functor law generalises as well. For (mono)functors, the second functor law states that if you have two functions over the same dimension, it shouldn't matter whether you perform a projection in one, composed step, or in two steps with an intermediary result.

For bifunctors, you can generalise that law and state that you can project over both dimensions in one or two steps:

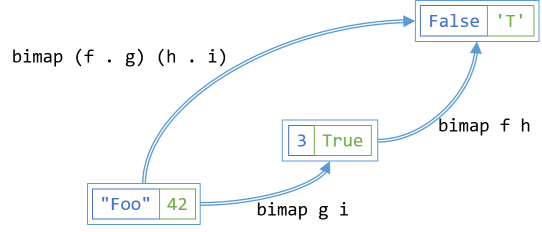

bimap (f . g) (h . i) ≡ bimap f h . bimap g i

If you have two functions, f and g, that compose, and two other functions, h and i, that also compose, you can translate in either one or two steps; the result should be the same.

In C#, you can express the law like this:

bf.SelectBoth(x => f(g(x)), y => h(i(y))) == bf.SelectBoth(g, i).SelectBoth(f, h);

On the left-hand side, the first dimension is translated in one step. For each x contained in bf, the translation first invokes g(x), and then immediately calls f with the output of g(x). The second dimension also gets translated in one step. For each y contained in bf, the translation first invokes i(y), and then immediately calls h with the output of i(y).

On the right-hand side, you first translate bf along both axes using g and i. This produces an intermediary result that you can use as input for a second translation with f and h.

The translation on the left-hand side should produce the same output as the right-hand side.

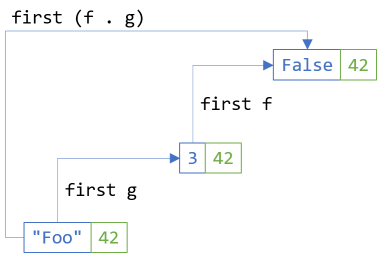

Finally, if you keep one of the dimensions fixed, you essentially have a normal functor, and the second functor law should still hold. For example, if you hold the second dimension fixed, translating over the first dimension is equivalent to a normal functor projection, so the second functor law should hold:

first (f . g) ≡ first f . first g

If you replace first with fmap, you have the second functor law.

In C#, you can write it like this:

bf.SelectFirst(x => f(g(x))) == bf.SelectFirst(g).SelectFirst(f);

Likewise, you can keep the first dimension constant and apply the second functor law to projections along the second axis:

second (f . g) ≡ second f . second g

Again, if you replace second with fmap, you have the second functor law.

In C#, you express it like this:

bf.SelectSecond(x => f(g(x))) == bf.SelectSecond(g).SelectSecond(f);

The last two of these composition laws are specialisations of the general composition law, but where you fix either one or the other dimension.
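The composition laws can be spot-checked in the same way. The following Haskell sketch assumes a hypothetical helper, compositionHolds, that takes f and g for the first axis and h and i for the second; the concrete functions are arbitrary choices that happen to compose.

```haskell
import Data.Bifunctor (Bifunctor, bimap, first, second)

-- Hypothetical helper: checks the general law and its two specialisations.
compositionHolds
  :: (Bifunctor p, Eq (p c z), Eq (p c x), Eq (p a z))
  => (b -> c) -> (a -> b) -> (y -> z) -> (x -> y) -> p a x -> Bool
compositionHolds f g h i bf =
  bimap (f . g) (h . i) bf == (bimap f h . bimap g i) bf
    && first (f . g) bf == (first f . first g) bf
    && second (h . i) bf == (second h . second i) bf

main :: IO ()
main = do
  -- First axis: even . length; second axis: show . (+ 1).
  print (compositionHolds even length show (+ 1) ("foo", 42 :: Int))                   -- True
  print (compositionHolds even length show (+ 1) (Left "error" :: Either String Int))  -- True
  print (compositionHolds even length show (+ 1) (Right 42 :: Either String Int))      -- True
```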

Summary #

A bifunctor is a container that can be translated over two dimensions, instead of a (mono)functor, which is a container that can be translated over a single dimension. In reality, there isn't a multitude of different bifunctors. While others exist, tuples and Either are the two most common bifunctors. They share an abstraction, but are still fundamentally different. A tuple always contains values of both dimensions at the same time, whereas Either only contains one of the values.

Do trifunctors, quadfunctors, and so on, exist? Nothing prevents that, but they aren't particularly useful; in practice, you never run into them.

Next: Tuple bifunctor.

The Lazy applicative functor

Lazy computations form an applicative functor.

This article is an instalment in an article series about applicative functors. A previous article has described how lazy computations form a functor. In this article, you'll see that lazy computations also form an applicative functor.

Apply #

As you have previously seen, C# isn't the best fit for the concept of applicative functors. Nevertheless, you can write an Apply extension method following the applicative 'code template':

    public static Lazy<TResult> Apply<TResult, T>(
        this Lazy<Func<T, TResult>> selector,
        Lazy<T> source)
    {
        return new Lazy<TResult>(() => selector.Value(source.Value));
    }

The Apply method takes both a lazy selector and a lazy value called source. It applies the function to the value and returns the result, still as a lazy value. If you have a lazy function f and a lazy value x, you can use the method like this:

    Lazy<Func<int, string>> f = // ...
    Lazy<int> x = // ...
    Lazy<string> y = f.Apply(x);

The utility of Apply, however, mostly tends to emerge when you need to chain multiple containers together; in this case, multiple lazy values. You can do that by adding as many overloads to Apply as you need:

    public static Lazy<Func<T2, TResult>> Apply<T1, T2, TResult>(
        this Lazy<Func<T1, T2, TResult>> selector,
        Lazy<T1> source)
    {
        return new Lazy<Func<T2, TResult>>(() => y => selector.Value(source.Value, y));
    }

This overload partially applies the input function. When selector is a function that takes two arguments, you can apply a single of those two arguments, and the result is a new function that closes over the value, but still waits for its second input argument. You can use it like this:

    Lazy<Func<char, int, string>> f = // ...
    Lazy<char> c = // ...
    Lazy<int> i = // ...
    Lazy<string> s = f.Apply(c).Apply(i);

Notice that you can chain the various overloads of Apply. In the above example, you have a lazy function that takes a char and an int as input, and returns a string. It could, for instance, be a function that invokes the equivalent string constructor overload.

Calling f.Apply(c) uses the overload that takes a Lazy<Func<T1, T2, TResult>> as input. The return value is a Lazy<Func<int, string>>, which the first Apply overload then picks up, to return a Lazy<string>.

Usually, you may have one, two, or several lazy values, whereas your function itself isn't contained in a Lazy container. While you can use a helper method such as Lazy.FromValue to 'elevate' a 'normal' function to a lazy function value, it's often more convenient if you have another Apply overload like this:

    public static Lazy<Func<T2, TResult>> Apply<T1, T2, TResult>(
        this Func<T1, T2, TResult> selector,
        Lazy<T1> source)
    {
        return new Lazy<Func<T2, TResult>>(() => y => selector(source.Value, y));
    }

The only difference to the equivalent overload is that in this overload, selector isn't a Lazy value, while source still is. This simplifies usage:

    Func<char, int, string> f = // ...
    Lazy<char> c = // ...
    Lazy<int> i = // ...
    Lazy<string> s = f.Apply(c).Apply(i);

Notice that in this variation of the example, f is no longer a Lazy<Func<...>>, but just a normal Func.

F# #

F#'s type inference is more powerful than C#'s, so you don't have to resort to various overloads to make things work. You could, for example, create a minimal Lazy module:

    module Lazy =
        // ('a -> 'b) -> Lazy<'a> -> Lazy<'b>
        let map f (x : Lazy<'a>) = lazy f x.Value
        // Lazy<'a> -> Lazy<('a -> 'b)> -> Lazy<'b>
        let apply (x : Lazy<_>) (f : Lazy<_>) = lazy f.Value x.Value

In this code listing, I've repeated the map function shown in a previous article. It's not required for the implementation of apply, but you'll see it in use shortly, so I thought it was convenient to include it in the listing.

If you belong to the camp of F# programmers who think that F# should emulate Haskell, you can also introduce an operator:

let (<*>) f x = Lazy.apply x f

Notice that this <*> operator simply flips the arguments of Lazy.apply. If you introduce such an operator, be aware that the admonition from the overview article still applies. In Haskell, the <*> operator applies to any Applicative, which makes it truly general. In F#, once you define an operator like this, it applies specifically to a particular container type, which, in this case, is Lazy<'a>.

You can replicate the first of the above C# examples like this:

    let f : Lazy<int -> string> = // ...
    let x : Lazy<int> = // ...
    let y : Lazy<string> = Lazy.apply x f

Alternatively, if you want to use the <*> operator, you can compute y like this:

let y : Lazy<string> = f <*> x

Chaining multiple lazy computations together also works:

    let f : Lazy<char -> int -> string> = // ...
    let c : Lazy<char> = // ...
    let i : Lazy<int> = // ...
    let s = Lazy.apply c f |> Lazy.apply i

Again, you can compute s with the operator, if that's more to your liking:

let s : Lazy<string> = f <*> c <*> i

Finally, if your function isn't contained in a Lazy value, you can start out with Lazy.map:

    let f : char -> int -> string = // ...
    let c : Lazy<char> = // ...
    let i : Lazy<int> = // ...
    let s : Lazy<string> = Lazy.map f c |> Lazy.apply i

This works without requiring additional overloads. Since F# natively supports partial function application, the first step in the pipeline, Lazy.map f c has the type Lazy<int -> string> because f is a function of the type char -> int -> string, but in the first step, Lazy.map f c only supplies c, which contains a char value.

Once more, if you prefer the infix operator, you can also compute s as:

let s : Lazy<string> = lazy f <*> c <*> i

While I find operator-based syntax attractive in Haskell code, I'm more hesitant about such syntax in F#.

Haskell #

As outlined in the previous article, Haskell is already lazily evaluated, so it makes little sense to introduce an explicit Lazy data container. While Haskell's built-in Identity isn't quite equivalent to .NET's Lazy<T> object, some similarities remain; most notably, the Identity functor is also applicative:

    Prelude Data.Functor.Identity> :t f
    f :: a -> Int -> [a]
    Prelude Data.Functor.Identity> :t c
    c :: Identity Char
    Prelude Data.Functor.Identity> :t i
    i :: Num a => Identity a
    Prelude Data.Functor.Identity> :t f <$> c <*> i
    f <$> c <*> i :: Identity String

This little GHCi session simply illustrates that if you have a 'normal' function f and two Identity values c and i, you can compose them using the infix map operator <$>, followed by the infix apply operator <*>. This is equivalent to the F# expression Lazy.map f c |> Lazy.apply i.

Still, this makes little sense, since all Haskell expressions are already lazily evaluated.
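The GHCi session above only shows types, not definitions. The following sketch fills in definitions that are consistent with those types, but they are assumptions: f is taken to be replicate with its arguments flipped, the values inside c and i are arbitrary, and i is fixed to Int rather than left polymorphic.

```haskell
import Data.Functor.Identity (Identity (..))

-- Assumed definition; any function of type a -> Int -> [a] would do.
f :: a -> Int -> [a]
f x n = replicate n x

-- Assumed sample values.
c :: Identity Char
c = Identity 'x'

i :: Identity Int
i = Identity 3

-- Map f over c, then apply the resulting wrapped function to i.
s :: Identity String
s = f <$> c <*> i

main :: IO ()
main = putStrLn (runIdentity s)  -- prints xxx
```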

Summary #

The Lazy functor is also an applicative functor. This can be used to combine multiple lazily computed values into a single lazily computed value.

Next: Applicative monoids.

Danish CPR numbers in F#

An example of domain-modelling in F#, including a fine example of using the option type as an applicative functor.

This article is an instalment in an article series about applicative functors, although the applicative functor example doesn't appear until towards the end. This article also serves the purpose of showing an example of Domain-Driven Design in F#.

Danish personal identification numbers #

As outlined in the previous article, in Denmark, everyone has a personal identification number, in Danish called CPR-nummer (CPR number).

CPR numbers have a simple format: DDMMYY-SSSS, where the first six digits indicate a person's birth date, and the last four digits are a sequence number. Some information, however, is also embedded in the sequence number. An example could be 010203-1234, which indicates a woman born February 1, 1903.

One way to model this in F# is with a single-case discriminated union:

    type CprNumber = private CprNumber of (int * int * int * int) with
        override x.ToString () =
            let (CprNumber (day, month, year, sequenceNumber)) = x
            sprintf "%02d%02d%02d-%04d" day month year sequenceNumber

This is a common idiom in F#. In object-oriented design with e.g. C# or Java, you'd typically create a class and put guard clauses in its constructor. This would prevent a user from initialising an object with invalid data (such as 401500-1234). While you can create classes in F# as well, a single-case union with a private case constructor can achieve the same degree of encapsulation.

In this case, I decided to use a quadruple (4-tuple) as the internal representation, but this isn't visible to users. This gives me the option to refactor the internal representation, if I need to, without breaking existing clients.

Creating CPR number values #

Since the CprNumber case constructor is private, you can't just create new values like this:

let cpr = CprNumber (1, 1, 1, 1118)

If you're outside the Cpr module that defines the type, this doesn't compile. This is by design, but obviously you need a way to create values. For convenience, input values for day, month, and so on, are represented as ints, which can be zero, negative, or way too large for CPR numbers. There's no way to statically guarantee that you can create a value, so you'll have to settle for a tryCreate function; i.e. a function that returns Some CprNumber if the input is valid, or None if it isn't. In Haskell, this pattern is called a smart constructor.

There's a couple of rules to check. All integer values must fall in certain ranges. Days must be between 1 and 31, months must be between 1 and 12, and so on. One way to enable succinct checks like that is with an active pattern:

    let private (|Between|_|) min max candidate =
        if min <= candidate && candidate <= max
        then Some candidate
        else None

Straightforward: return Some candidate if candidate is between min and max; otherwise, None. This enables you to pattern-match input integer values to particular ranges.

Perhaps you've already noticed that years are represented with only two digits. CPR is an old system (from 1968), and back then, bits were expensive. No reason to waste bits on recording the millennium or century in which people were born. It turns out, after all, that there's a way to at least partially figure out the century in which people were born. The first digit of the sequence number contains that information:

    // int -> int -> int
    let private calculateFourDigitYear year sequenceNumber =
        let centuryDigit = sequenceNumber / 1000 // Integer division

        // Table from https://da.wikipedia.org/wiki/CPR-nummer
        match centuryDigit, year with
        | Between 0 3 _,              _ -> 1900
        | 4            , Between 0 36 _ -> 2000
        | 4            ,              _ -> 1900
        | Between 5 8 _, Between 0 57 _ -> 2000
        | Between 5 8 _,              _ -> 1800
        | _            , Between 0 36 _ -> 2000
        | _                             -> 1900
        + year

As the code comment informs the reader, there's a table that defines the century, based on the two-digit year and the first digit of the sequence number. Note that birth dates in the nineteenth century are possible. No Danes born before 1900 are alive any longer, but at the time the CPR system was introduced, one person in the system was born in 1863!

The calculateFourDigitYear function starts by pulling the first digit out of the sequence number. This is a four-digit number, so dividing by 1,000 produces the digit. I left a comment about integer division, because I often miss that detail when I read code.

The big pattern-match expression uses the Between active pattern, but it ignores the return value from the pattern. This explains the wild cards (_), I hope.

Although a pattern-match expression is often formatted over several lines of code, it's a single expression that produces a single value. Often, you see code where a let-binding binds a named value to a pattern-match expression. Another occasional idiom is to pipe a pattern-match expression to a function. In the calculateFourDigitYear function I use a language construct I've never seen anywhere else: the eight-line pattern-match expression returns an int, which I simply add to year using the + operator.

Both calculateFourDigitYear and the Between active pattern are private functions. They're only there as support functions for the public API. You can now implement a tryCreate function:

    // int -> int -> int -> int -> CprNumber option
    let tryCreate day month year sequenceNumber =
        match month, year, sequenceNumber with
        | Between 1 12 m, Between 0 99 y, Between 0 9999 s ->
            let fourDigitYear = calculateFourDigitYear y s
            if 1 <= day && day <= DateTime.DaysInMonth (fourDigitYear, m)
            then Some (CprNumber (day, m, y, s))
            else None
        | _ -> None

The tryCreate function begins by pattern-matching a triple (3-tuple) using the Between active pattern. The month must always be between 1 and 12 (both included), the year must be between 0 and 99, and the sequenceNumber must always be between 0 and 9999 (in fact, I'm not completely sure if 0000 is valid).

Finding the appropriate range for the day is more intricate. Is 31 always valid? Clearly not, because there's no November 31, for example. Is 30 always valid? No, because there's never a February 30. Is 29 valid? This depends on whether or not the year is a leap year.

This reveals why you need calculateFourDigitYear. While you can use DateTime.DaysInMonth to figure out how many days a given month has, you need the year. Specifically, February 1900 had 28 days, while February 2000 had 29.

Ergo, if day, month, year, and sequenceNumber all fall within their appropriate ranges, tryCreate returns a Some CprNumber value; otherwise, it returns None.
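Since the text mentions that Haskell calls this pattern a smart constructor, here's a hedged sketch of how the same validation could look in Haskell. It isn't part of the F# code base: between is a plain predicate standing in for the Between active pattern, daysInMonth is a hand-rolled stand-in for DateTime.DaysInMonth, and a real module would export CprNumber without its data constructor.

```haskell
-- Opaque in a real module: export the type, but not the constructor.
data CprNumber = CprNumber Int Int Int Int
  deriving (Eq, Show)

-- Stand-in for the Between active pattern (inclusive range check).
between :: Int -> Int -> Int -> Bool
between lo hi x = lo <= x && x <= hi

-- Century table, mirroring the F# match expression above.
fourDigitYear :: Int -> Int -> Int
fourDigitYear year sequenceNumber = century + year
  where
    centuryDigit = sequenceNumber `div` 1000
    century
      | between 0 3 centuryDigit = 1900
      | centuryDigit == 4 = if between 0 36 year then 2000 else 1900
      | between 5 8 centuryDigit = if between 0 57 year then 2000 else 1800
      | between 0 36 year = 2000
      | otherwise = 1900

-- Hand-rolled stand-in for DateTime.DaysInMonth (Gregorian leap years).
daysInMonth :: Int -> Int -> Int
daysInMonth y m
  | m == 2 = if isLeap then 29 else 28
  | m `elem` [4, 6, 9, 11] = 30
  | otherwise = 31
  where
    isLeap = (y `mod` 4 == 0 && y `mod` 100 /= 0) || y `mod` 400 == 0

-- Smart constructor: Just a value for valid input, Nothing otherwise.
tryCreate :: Int -> Int -> Int -> Int -> Maybe CprNumber
tryCreate day month year sequenceNumber
  | validRanges && between 1 (daysInMonth fullYear month) day =
      Just (CprNumber day month year sequenceNumber)
  | otherwise = Nothing
  where
    validRanges =
      between 1 12 month && between 0 99 year && between 0 9999 sequenceNumber
    fullYear = fourDigitYear year sequenceNumber

main :: IO ()
main = do
  print (tryCreate 11 11 11 1118)  -- Just (CprNumber 11 11 11 1118)
  print (tryCreate 29 2 0 1118)    -- Nothing: February 1900 had 28 days
  print (tryCreate 29 2 0 4118)    -- Just (CprNumber 29 2 0 4118): February 2000 had 29
```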

Notice how this is different from an object-oriented constructor with guard clauses. If you try to create an object with invalid input, it'll throw an exception. If you try to create a CprNumber value, you'll receive a CprNumber option, and you, as the client developer, must handle both the Some and the None case. The compiler will enforce this.

    > let gjern = Cpr.tryCreate 11 11 11 1118;;
    val gjern : Cpr.CprNumber option = Some 111111-1118

    > gjern |> Option.map Cpr.born;;
    val it : DateTime option = Some 11.11.1911 00:00:00

As most F# developers know, F# gives you enough syntactic sugar to make this a joy rather than a chore... and the warm and fuzzy feeling of safety is priceless.

CPR data #

The above FSI session uses Cpr.born, which you haven't seen yet. With the tools available so far, it's trivial to implement; all the work is already done:

    // CprNumber -> DateTime
    let born (CprNumber (day, month, year, sequenceNumber)) =
        DateTime (calculateFourDigitYear year sequenceNumber, month, day)

While the CprNumber case constructor is private, it's still available from inside of the module. The born function pattern-matches day, month, year, and sequenceNumber out of its input argument, and trivially delegates the hard work to calculateFourDigitYear.

Another piece of data you can deduce from a CPR number is the gender of the person:

    // CprNumber -> bool
    let isFemale (CprNumber (_, _, _, sequenceNumber)) = sequenceNumber % 2 = 0
    let isMale   (CprNumber (_, _, _, sequenceNumber)) = sequenceNumber % 2 <> 0

The rule is that if the sequence number is even, then the person is female; otherwise, the person is male (and if you change sex, you get a new CPR number).

    > gjern |> Option.map Cpr.isFemale;;
    val it : bool option = Some true

Since 1118 is even, this is a woman.

Parsing CPR strings #

CPR numbers are often passed around as text, so you'll need to be able to parse a string representation. As described in the previous article, you should follow Postel's law. Input could include extra white space, and the middle dash could be missing.

The .NET Base Class Library contains enough utility methods working on string values that this isn't going to be particularly difficult. It can, however, be awkward to interoperate with object-oriented APIs, so you'll often benefit from adding a few utility functions that give you curried functions instead of objects with methods. Here's one that adapts Int32.TryParse:

    module private Int =
        // string -> int option
        let tryParse candidate =
            match candidate |> Int32.TryParse with
            | true, i -> Some i
            | _       -> None

Nothing much goes on here. While F# has pleasant syntax for handling out parameters, it can be inconvenient to have to pattern-match every time you'd like to try to parse an integer.

Here's another helper function:

    module private String =
        // int -> int -> string -> string option
        let trySubstring startIndex length (s : string) =
            if s.Length < startIndex + length
            then None
            else Some (s.Substring (startIndex, length))

This one comes with two benefits: The first benefit is that it's curried, which enables partial application and piping. You'll see an example of this further down. The second benefit is that it handles at least one error condition in a type-safe manner. When trying to extract a sub-string from a string, the Substring method can throw an exception if the index or length arguments are out of range. This function checks whether it can extract the requested sub-string, and returns None if it can't.

I wouldn't be surprised if there are edge cases (for example involving negative integers) that trySubstring doesn't handle gracefully, but as you may have noticed, this is a function in a private module. I only need it to handle a particular use case, and it does that.

You can now add the tryParse function:

    // string -> CprNumber option
    let tryParse (candidate : string ) =
        let (<*>) fo xo = fo |> Option.bind (fun f -> xo |> Option.map f)

        let canonicalized = candidate.Trim().Replace("-", "")

        let dayCandidate            = canonicalized |> String.trySubstring 0 2
        let monthCandidate          = canonicalized |> String.trySubstring 2 2
        let yearCandidate           = canonicalized |> String.trySubstring 4 2
        let sequenceNumberCandidate = canonicalized |> String.trySubstring 6 4

        Some tryCreate
        <*> Option.bind Int.tryParse dayCandidate
        <*> Option.bind Int.tryParse monthCandidate
        <*> Option.bind Int.tryParse yearCandidate
        <*> Option.bind Int.tryParse sequenceNumberCandidate
        |> Option.bind id

The function starts by defining a private <*> operator. Readers of the applicative functor article series will recognise this as the 'apply' operator. The reason I added it as a private operator is that I don't need it anywhere else in the code base, and in F#, I'm always worried that if I add <*> at a more visible level, it could collide with other definitions of <*> - for example one for lists. This one particularly makes option an applicative functor.

The first step in parsing candidate is to remove surrounding white space and the interior dash.

The next step is to use String.trySubstring to pull out candidates for day, month, and so on. Each of these four is a string option value.

All four of these must be Some values before we can even start to attempt to turn them into a CprNumber value. If even a single value is None, tryParse should return None as well.

You may want to re-read the article on the List applicative functor for a detailed explanation of how the <*> operator works. In tryParse, you have four option values, so you apply them all using four <*> operators. Since four values are being applied, you'll need a function that takes four curried input arguments of the appropriate types. In this case, all four are int option values, so for the first expression in the <*> chain, you'll need an option of a function that takes four int arguments.

Lo and behold! tryCreate takes four int arguments, so the only action you need to take is to make it an option by putting it in a Some case.

The only remaining hurdle is that tryCreate returns CprNumber option, and since you're already 'in' the option applicative functor, you now have a CprNumber option option. Fortunately, bind id is always the 'flattening' combo, so that's easily dealt with.
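For comparison, here's how the same composition could look with Haskell's Maybe, where the applicative operators are built in and join plays the role of Option.bind id. This is a sketch under stated assumptions: tryCreate is a simplified, hypothetical stand-in that only checks ranges and returns a tuple, and trySubstring is a re-implementation of the helper above that also rejects negative arguments.

```haskell
import Control.Monad (join)
import Data.Char (isSpace)
import Data.List (dropWhileEnd)
import Text.Read (readMaybe)

-- Hypothetical stand-in for tryCreate: range checks only, tuple result.
tryCreate :: Int -> Int -> Int -> Int -> Maybe (Int, Int, Int, Int)
tryCreate d m y s
  | inRange 1 31 d && inRange 1 12 m && inRange 0 99 y && inRange 0 9999 s =
      Just (d, m, y, s)
  | otherwise = Nothing
  where
    inRange lo hi x = lo <= x && x <= hi

-- Safe substring: Nothing when the requested span falls outside the string.
trySubstring :: Int -> Int -> String -> Maybe String
trySubstring start len s
  | start < 0 || len < 0 || length s < start + len = Nothing
  | otherwise = Just (take len (drop start s))

-- Four Maybe Int values are applied to tryCreate; join flattens the
-- resulting Maybe (Maybe ...) into a single Maybe.
tryParse :: String -> Maybe (Int, Int, Int, Int)
tryParse candidate =
  join (tryCreate <$> field 0 2 <*> field 2 2 <*> field 4 2 <*> field 6 4)
  where
    trim = dropWhileEnd isSpace . dropWhile isSpace
    canonicalized = filter (/= '-') (trim candidate)
    field start len = trySubstring start len canonicalized >>= readMaybe

main :: IO ()
main = do
  print (tryParse " 0109636221")  -- Just (1,9,63,6221)
  print (tryParse "010963-6221")  -- Just (1,9,63,6221)
  print (tryParse "0109")         -- Nothing: too short
```

If any one of the four fields is Nothing, the whole applicative expression is Nothing, which is the same short-circuiting behaviour as in the F# version.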

    > let andreas = Cpr.tryParse " 0109636221";;
    val andreas : Cpr.CprNumber option = Some 010963-6221

Since you now have both a way to parse a string, and turn a CprNumber into a string, you can write the usual round-trip property:

    [<Fact>]
    let ``CprNumber correctly round-trips`` () = Property.check <| property {
        let! expected = Gen.cprNumber
        let  actual   = expected |> string |> Cpr.tryParse
        Some expected =! actual }

This test uses Hedgehog, Unquote, and xUnit.net. The previous article demonstrates a way to test that Cpr.tryParse can handle mangled input.

Summary #

This article mostly exhibited various F# design techniques you can use to achieve an even better degree of encapsulation than you can easily get with object-oriented languages. Towards the end, you saw how using option as an applicative functor enables you to compose more complex optional values from smaller values. If just a single value is None, the entire expression becomes None, but if all values are Some values, the computation succeeds.

This article is an entry in the F# Advent Calendar in English 2018.

Next: The Lazy applicative functor.

Set is not a functor

Sets aren't functors. An example for object-oriented programmers.

This article is an instalment in an article series about functors. The way functors are frequently presented to programmers is as a generic container (Foo<T>) equipped with a translation method, normally called map, but in C# idiomatically called Select.

It'd be tempting to believe that any generic type with a Select method is a functor, but it takes more than that. The Select method must also obey the functor laws. This article shows an example of a translation that violates the second functor law.

Mapping sets #

The .NET Base Class Library comes with a class called HashSet<T>. This generic class implements IEnumerable<T>, so, via extension methods, it has a Select method.

Unfortunately, that Select method isn't a structure-preserving translation of sets. The problem is that it treats sets as enumerable sequences, which implies an order to elements that isn't part of a set's structure.

In order to understand what the problem is, consider this xUnit.net test:

    [Fact]
    public void SetAsEnumerableSelectLeadsToWrongConclusions()
    {
        var set1 = new HashSet<int> { 1, 2, 3 };
        var set2 = new HashSet<int> { 3, 2, 1 };

        Assert.True(set1.SetEquals(set2));

        Func<int, int> id = x => x;
        var seq1 = set1.AsEnumerable().Select(id);
        var seq2 = set2.AsEnumerable().Select(id);

        Assert.False(seq1.SequenceEqual(seq2));
    }

This test creates two sets, and by using a Guard Assertion demonstrates that they're equal to each other. It then proceeds to Select over both sets, using the identity function id. The return values aren't HashSet<T> objects, but rather IEnumerable<T> sequences. Due to an implementation detail in HashSet<T>, these two sequences are different, because they were populated in reverse order of each other.

The problem is that the Select extension method has the signature IEnumerable<TResult> Select<T, TResult>(IEnumerable<T>, Func<T, TResult>). It doesn't operate on HashSet<T>, but instead treats it as an ordered sequence of elements. A set isn't intrinsically ordered, so that's not the translation you need.

To be clear, IEnumerable<T> is a functor (as long as the sequence is referentially transparent). It's just not the functor you're looking for. What you need is a method with the signature HashSet<TResult> Select<T, TResult>(HashSet<T>, Func<T, TResult>). Fortunately, such a method is trivial to implement:

    public static HashSet<TResult> Select<T, TResult>(
        this HashSet<T> source,
        Func<T, TResult> selector)
    {
        return new HashSet<TResult>(source.AsEnumerable().Select(selector));
    }

This extension method offers a proper Select method that translates one HashSet<T> into another. It does so by enumerating the elements of the set, then using the Select method for IEnumerable<T>, and finally wrapping the resulting sequence in a new HashSet<TResult> object.

This is a better candidate for a translation of sets. Is it a functor, then?
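As a first sanity check, here is a minimal sketch that exercises the translation against the first functor law (mapping with the identity function changes nothing). `SelectSet` is a stand-in name for the `Select` extension method above, written as a local function so that the snippet can run as a top-level program. A passing check for one input doesn't prove the law in general; it only fails to falsify it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Same body as the Select extension method above; the name SelectSet is
// only used here to keep the snippet self-contained.
static HashSet<TResult> SelectSet<T, TResult>(
    HashSet<T> source, Func<T, TResult> selector)
{
    return new HashSet<TResult>(source.AsEnumerable().Select(selector));
}

var set = new HashSet<int> { 1, 2, 3 };

// First functor law: mapping with the identity function changes nothing.
var mapped = SelectSet(set, i => i);
Console.WriteLine(mapped.SetEquals(set)); // True

// The result is a set, so duplicates produced by the selector collapse.
var parities = SelectSet(set, i => i % 2);
Console.WriteLine(parities.Count); // 2
```

The second call hints at where trouble may come from: a selector that maps distinct inputs to equal outputs shrinks the set.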

Second functor law #

Even though HashSet<T> and the new Select method have the correct types, it's still only a functor if it obeys the functor laws. It's easy, however, to come up with a counter-example that demonstrates that Select violates the second functor law.

Consider a pair of conversion methods between string and DateTimeOffset:

    public static DateTimeOffset ParseDateTime(string s)
    {
        DateTimeOffset dt;
        if (DateTimeOffset.TryParse(s, out dt))
            return dt;

        return DateTimeOffset.MinValue;
    }

    public static string FormatDateTime(DateTimeOffset dt)
    {
        return dt.ToString("yyyy'-'MM'-'dd'T'HH':'mm':'sszzz");
    }

The first method, ParseDateTime, converts a string into a DateTimeOffset value. It tries to parse the input, and returns the corresponding DateTimeOffset value if this is possible; for all other input values, it simply returns DateTimeOffset.MinValue. You may dislike how the method deals with input values it can't parse, but that's not important. In order to prove that sets aren't functors, I just need one counter-example, and the one I'll pick will not involve unparseable strings.

The FormatDateTime method converts any DateTimeOffset value to an ISO 8601 string.

The DateTimeOffset type is the interesting piece of the puzzle. In case you're not intimately familiar with it, a DateTimeOffset value contains a date, a time, and a time-zone offset. You can, for example, create one like this:

    > new DateTimeOffset(2018, 4, 17, 15, 9, 55, TimeSpan.FromHours(2))
    [17.04.2018 15:09:55 +02:00]

This represents April 17, 2018, at 15:09:55 at UTC+2. You can convert that value to UTC:

    > new DateTimeOffset(2018, 4, 17, 15, 9, 55, TimeSpan.FromHours(2)).ToUniversalTime()
    [17.04.2018 13:09:55 +00:00]

This value clearly contains different constituent elements, but it does represent the same instant in time. This is how DateTimeOffset implements Equals. These two objects are considered equal, as the following test will demonstrate.

You could argue that it makes some sense to implement equality for DateTimeOffset in that way, but this unusual behaviour provides just the counter-example that demonstrates that sets aren't functors:

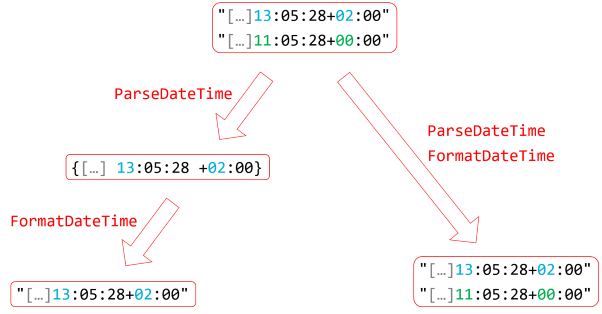

    [Fact]
    public void SetViolatesSecondFunctorLaw()
    {
        var x = "2018-04-17T13:05:28+02:00";
        var y = "2018-04-17T11:05:28+00:00";
        Assert.Equal(ParseDateTime(x), ParseDateTime(y));

        var set = new HashSet<string> { x, y };

        var l = set.Select(ParseDateTime).Select(FormatDateTime);
        var r = set.Select(s => FormatDateTime(ParseDateTime(s)));

        Assert.False(l.SetEquals(r));
    }

This passing test provides the required counter-example. It first creates two ISO 8601 representations of the same instant. As the Guard Assertion demonstrates, the corresponding DateTimeOffset values are considered equal.

In the act phase, the test creates a set of these two strings. It then performs two round-trips over DateTimeOffset and back to string. The second functor law states that it shouldn't matter whether you do it in one or two steps, but it does. Assert.False passes because l is not equal to r. Q.E.D.

An illustration #

The problem is that sets only contain one element of each value, and due to the way that DateTimeOffset interprets equality, two values that both represent the same instant are collapsed into a single element when taking an intermediary step. You can illustrate it like this:

In this illustration, I've hidden the date behind an ellipsis in order to improve clarity. The date segments are irrelevant in this example, since they're all identical.

You start with a set of two string values. These are obviously different, so the set contains two elements. When you map the set using the ParseDateTime function, you get two DateTimeOffset values that .NET considers equal. For that reason, HashSet<DateTimeOffset> considers the second value redundant, and ignores it. Therefore, the intermediary set contains only a single element. When you map that set with FormatDateTime, there's only a single element to translate, so the final set contains only a single string value.

On the other hand, when you map the input set without an intermediary set, each string value is first converted into a DateTimeOffset value, and then immediately thereafter converted back to a string value. Since the two resulting strings are different, the resulting set contains two values.

Which of these paths is correct, then? Both of them. That's the problem. When considering the semantics of sets, both translations produce correct results. Since the results diverge, however, the translation isn't a functor.

Summary #

A functor must not only preserve the structure of the data container, but also of functions. The functor laws express that a translation of functions preserves the structure of how the functions compose, in addition to preserving the structure of the containers. Mapping a set doesn't preserve those structures, and that's the reason that sets aren't functors.

P.S. #

2019-01-09. There's been some controversy around this post, and more than one person has pointed out to me that the underlying reason the functor laws are violated in the above example is that DateTimeOffset behaves oddly. Specifically, its Equals implementation breaks the substitution property of equality. See e.g. Giacomo Citi's response for details.

The term functor originates from category theory, and I don't claim to be an expert on that topic. It's unclear to me if the underlying concept of equality is explicitly defined in category theory, but it wouldn't surprise me if it is. If that definition involves the substitution property, then the counter-example presented here says nothing about Set as a functor, but rather that DateTimeOffset has unlawful equality behaviour.
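To make the substitution property concrete, here's a small sketch that reuses the two values from earlier in the article. The property says that if a equals b, then f(a) must equal f(b) for any function f. With DateTimeOffset, reading the Offset property is enough to tell two 'equal' values apart.

```csharp
using System;

var local = new DateTimeOffset(2018, 4, 17, 15, 9, 55, TimeSpan.FromHours(2));
var utc = local.ToUniversalTime();

// Equals compares the instant in time, so the two values are 'equal'...
Console.WriteLine(local.Equals(utc));          // True

// ...yet a function of the values can tell them apart, which is what
// breaking the substitution property means: a == b, but f(a) != f(b).
Console.WriteLine(local.Offset == utc.Offset); // False

// EqualsExact also takes the offset into account.
Console.WriteLine(local.EqualsExact(utc));     // False
```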

Next: Applicative functors.

Comments

The Problem here is not the HashSet, but the DateTimeOffset Class. It defines Equality loosely, so Time-Comparison is possible between different Time-Zones. The Functor Property can be restored by applying a stricter Equality Comparer like this one:

    var l = set.Select(ParseDateTime, StrictDateTimeOffsetComparer._).Select(FormatDateTime);

    /// <summary> Compares both <see cref="DateTimeOffset.Offset"/> and <see cref="DateTimeOffset.UtcTicks"/> </summary>
    public class StrictDateTimeOffsetComparer : IEqualityComparer<DateTimeOffset>
    {
        public bool Equals(DateTimeOffset x, DateTimeOffset y)
            => x.Offset.Equals(y.Offset) && x.UtcTicks == y.UtcTicks;

        public int GetHashCode(DateTimeOffset obj) => HashCode.Combine(obj.Offset, obj.UtcTicks);

        /// <summary> Singleton Instance </summary>
        public static readonly StrictDateTimeOffsetComparer _ = new();

        StrictDateTimeOffsetComparer(){}
    }

As for preserving Referential Transparency, the Distinction between Equality and Identity is important. By Default, Classes implement 'Equals' and '==' using ReferenceEquals which guarantees Substitution. Structs don't have a Reference unless they are boxed, instead they are copied and usually have Value Semantics. To be truly 'referentially transparent' though, Equality must be based on ALL Fields. When someone overrides Equals with a weaker Equivalence Relation than ReferenceEquals, it is their Responsibility to preserve Substitution. No Library Author can cover all possible application cases for HashSet.

Matt, thank you for writing. DateTimeOffset already defines the EqualsExact method. Wouldn't it be easier to use that?
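A rough sketch of what an EqualsExact-based variant could look like, applied to the counter-example from the article. The names `ExactComparer`, `SelectSet`, `Parse` and `Format` are made up for this illustration; they aren't part of the Base Class Library or of the code above. The sketch only shows that this particular counter-example no longer falsifies the second functor law; it doesn't prove that the law holds in general.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

static DateTimeOffset Parse(string s) =>
    DateTimeOffset.Parse(s, CultureInfo.InvariantCulture);

static string Format(DateTimeOffset dt) =>
    dt.ToString("yyyy'-'MM'-'dd'T'HH':'mm':'sszzz", CultureInfo.InvariantCulture);

// Hypothetical Select variant that lets the caller choose the comparer
// used by the resulting set.
static HashSet<TResult> SelectSet<T, TResult>(
    HashSet<T> source,
    Func<T, TResult> selector,
    IEqualityComparer<TResult> comparer)
{
    return new HashSet<TResult>(source.AsEnumerable().Select(selector), comparer);
}

var first = "2018-04-17T13:05:28+02:00";
var second = "2018-04-17T11:05:28+00:00";
var set = new HashSet<string> { first, second };

// Two steps, with the intermediate set using the strict comparer.
var intermediate = SelectSet<string, DateTimeOffset>(set, Parse, new ExactComparer());
var l = SelectSet<DateTimeOffset, string>(
    intermediate, Format, EqualityComparer<string>.Default);

// One step, composing the two functions.
var r = SelectSet<string, string>(
    set, s => Format(Parse(s)), EqualityComparer<string>.Default);

Console.WriteLine(intermediate.Count); // 2
Console.WriteLine(l.SetEquals(r));     // True

// Hypothetical comparer that delegates to DateTimeOffset.EqualsExact.
sealed class ExactComparer : IEqualityComparer<DateTimeOffset>
{
    public bool Equals(DateTimeOffset x, DateTimeOffset y) => x.EqualsExact(y);

    // Must agree with Equals: values that are exactly equal share both
    // UtcTicks and Offset.
    public int GetHashCode(DateTimeOffset obj) =>
        HashCode.Combine(obj.UtcTicks, obj.Offset);
}
```

As Matt's comparer also shows, a matching GetHashCode is required either way, so the saving from EqualsExact is limited to the body of Equals.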

In order to prove that sets aren't functors, I just need one counter-example, and the one I'll pick will not involve unparseable strings.

In context, you are correct; you can pick whatever example you want to create a counter-example. However, your counter-example does not prove that HashSet<> is not a functor.

We often say that a type is or isn't a functor. That is not quite right. It is more correct to say that a type T<> and a function with inputs T<A> and A and output T<B> are or are not a functor. Then I think it is reasonable to say some type is a functor if (and only if) there exists a function such that the type and that function are a functor. In that case, to conclude that HashSet<> is not a functor requires one to prove that, for all functions f, the pair HashSet<> and f are not a functor. Because of the universal quantification, this cannot be achieved via a single counter-example.

Your counter-example shows that the type HashSet<> and your function that you called Select is not a functor. Your Select function uses this constructor overload of HashSet<>, which eventually uses EqualityComparer<T>.Default in places like here and here. The instance of EqualityComparer<DateTimeOffset>.Default implements its Equals method via DateTimeOffset.Equals. The comments by Giacomo Citi and others explained "why" your Select function (and the type HashSet<>) doesn't satisfy the second functor law: you picked a constructor overload of HashSet<> that uses an implementation of IEqualityComparer<DateTimeOffset> that depends on DateTimeOffset.Equals, which doesn't satisfy the substitution property.

The term functor originates from category theory, and I don't claim to be an expert on that topic. It's unclear to me if the underlying concept of equality is explicitly defined in category theory, but it wouldn't surprise me if it is. If that definition involves the substitution property, then the counter-example presented here says nothing about Set as a functor, but rather that DateTimeOffset has unlawful equality behaviour.

I think this is a red herring. Microsoft can implement HashSet<> however it wants and you can implement your Select function however you want. Then the pair are either a functor or not. Independent of this is that Microsoft gets to define the contract for IEqualityComparer<>.Equals. The documentation states that a valid implementation must be reflexive, symmetric, and transitive but does not require that the substitution property is satisfied.

I think the correct question is this: Is HashSet<> a functor?...which is equivalent to: Does there exist a function such that HashSet<> and that function are a functor? I think the answer is "yes".

Implement Select by calling the constructor overload of HashSet<> that takes an instance of IEnumerable<> as well as an instance of IEqualityComparer<>. For the second argument, provide an instance that implements Equals by always returning false (and it suffices to implement GetHashCode by returning a constant). I claim that HashSet<> and that function are a functor. In fact, I further claim that this functor is isomorphic to the functor IEnumerable<> (with the function Enumerable.Select). That doesn't make it a very interesting functor, but it is a functor nonetheless. (Also, notice that this implementation of Equals is not reflexive, but I still used it to create a functor.)

Tyson, thank you for writing. Yes, I agree with most of what you write. As I added in the P.S., I'm aware that this post draws the wrong conclusions. I also understand why.

It's true that one way to think of a functor is a type and an associated function. When I (and others) sometimes fall into the trap of directly equating types with functors, it's because this is the most common implementation of functors (and monads) in statically typed programming. This is probably most explicit in Haskell, where a particular functor is explicitly associated with a type. This has the implication that in order to distinguish between multiple functors, Haskell comes with all sorts of wrapper types. You mostly see this in the context of semigroups and monoids, where the Sum and Product wrappers exist only to distinguish between two monoids over the same type (numbers).

You could imagine something similar happening with Either and tuple bifunctors. By convention (and because of a bit of pseudo-principled hand-waving), we pick one of their dimensions for the 'functor instance'. For example, we pick the right dimension of Either for the functor instance. In Haskell, F#, and C#, this enables certain syntax, such as Haskell do notation, F# computation expressions, or C# query syntax.

If we wanted to instead make mapping over, say, left, the 'functor instance', we can't do that without throwing away the language support for the right side. The solution is to introduce a new type that comes with the desired function associated. This enables the compiler to distinguish between two (or more) functors over what would otherwise be the same type.

That aside, can we resolve the issue in the way that you suggest? Possibly. I haven't thought deep and long about it, but what you write sounds plausible.

For anyone who wants to use such a set functor for real code, I'd suggest wrapping it in another type, since relying on client developers to use the 'correct' constructor overload seems too brittle.

Well, yes, but since you have to implement a proper matching HashCode Function anyway, I thought it would be more obvious to write a matching Equals Function too.

Ah, so you're proposing to subclass HashSet<DateTimeOffset> and pass the proper EqualityComparer to the base class. Alright, that would be a proper Functor then.

The Test Data Generator applicative functor

Test Data Generator modelled as an applicative functor, with examples in C# and F#.

This article is an instalment in an article series about applicative functors. It shows yet another example of an applicative functor. I tend to think of applicative functors as an abstraction of 'combinations' of values and functions, but this is most evident for lists and other collections. Even so, I think that the intuition also holds for Maybe as an applicative functor as well as validation as an applicative functor. This is also the case for the Test Data Generator functor.

Applicative generator in C# #

In a previous article, you saw how to implement a Test Data Generator as a functor in C#. The core class is this Generator<T> class:

    public class Generator<T>
    {
        private readonly Func<Random, T> generate;

        public Generator(Func<Random, T> generate)
        {
            if (generate == null)
                throw new ArgumentNullException(nameof(generate));

            this.generate = generate;
        }

        public Generator<T1> Select<T1>(Func<T, T1> f)
        {
            if (f == null)
                throw new ArgumentNullException(nameof(f));

            Func<Random, T1> newGenerator = r => f(this.generate(r));
            return new Generator<T1>(newGenerator);
        }

        public T Generate(Random random)
        {
            if (random == null)
                throw new ArgumentNullException(nameof(random));

            return this.generate(random);
        }
    }

This is merely repetition from the earlier article.

Generator<T> is already a functor, but you can make it an applicative functor by adding an extension method like this:

    public static Generator<TResult> Apply<T, TResult>(
        this Generator<Func<T, TResult>> selectors,
        Generator<T> generator)
    {
        if (selectors == null)
            throw new ArgumentNullException(nameof(selectors));
        if (generator == null)
            throw new ArgumentNullException(nameof(generator));

        Func<Random, TResult> newGenerator = r =>
        {
            var f = selectors.Generate(r);
            var x = generator.Generate(r);
            return f(x);
        };
        return new Generator<TResult>(newGenerator);
    }

The Apply function combines a generator of functions with a generator of values. Given these two generators, it defines a closure over both, and packages that function in a new generator object.
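To see the shape of Apply in isolation, here's a minimal, self-contained sketch. It repeats a trimmed-down Generator<T> (no null guards, no Select) so that it can run on its own; the `selectors`, `numbers` and `labels` names, as well as the `GeneratorExtensions` class name, are made up for the illustration.

```csharp
using System;

// A generator that always yields the same function...
var selectors = new Generator<Func<int, string>>(_ => i => $"#{i}");
// ...and a generator of random numbers between 0 and 9.
var numbers = new Generator<int>(r => r.Next(10));

// Apply draws a function and a value from the same Random instance and
// applies the former to the latter.
Generator<string> labels = selectors.Apply(numbers);
var label = labels.Generate(new Random());
Console.WriteLine(label); // a '#' followed by one digit; the digit varies

// Trimmed-down copy of Generator<T>, for illustration only.
public class Generator<T>
{
    private readonly Func<Random, T> generate;

    public Generator(Func<Random, T> generate)
    {
        this.generate = generate;
    }

    public T Generate(Random random) => generate(random);
}

public static class GeneratorExtensions
{
    public static Generator<TResult> Apply<T, TResult>(
        this Generator<Func<T, TResult>> selectors,
        Generator<T> generator)
    {
        return new Generator<TResult>(r =>
        {
            var f = selectors.Generate(r);
            var x = generator.Generate(r);
            return f(x);
        });
    }
}
```

A generator that always returns the same function isn't very interesting in itself; the point is only that the function side is a generator too, so it can vary as well.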

CPR example #

When is it interesting to combine a randomly selected function with a randomly generated value? Here's an example.

In Denmark, everyone has a personal identification number, in Danish called CPR-nummer (CPR number). It's somewhat comparable to the U.S. Social Security number, but surely more Orwellian.

CPR numbers have a simple format: DDMMYY-SSSS, where the first six digits indicate a person's birth date, and the last four digits are a sequence number. An example could be 010203-1234, which indicates a woman born February 1, 1903. Assume that you're writing a system that has to accept CPR numbers as input. You've represented CPR number as a class called CprNumber, and you've already written a parser, but now it turns out that sometimes users enter slightly malformed data.

Sometimes users copy and paste CPR numbers from other sources, and occasionally, they inadvertently include a leading or trailing space. Other users forget the dash between the birth date and the sequence number. In other words, sometimes you receive input like "050301-4231 " or "1211993321".
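To make the kind of leniency concrete, here's a sketch of a hypothetical normalisation step. It isn't the parser from this article (which isn't shown); `NormalizeCpr` is an invented name, and it only handles the two kinds of noise described above: surrounding whitespace and a dash that is present or missing after the sixth digit.

```csharp
#nullable enable
using System;
using System.Linq;

// Hypothetical helper: strips the tolerated noise and returns the ten
// digits, or null if the input is malformed in some other way.
static string? NormalizeCpr(string candidate)
{
    var s = candidate.Trim();

    // Accept the canonical DDMMYY-SSSS form by dropping the dash.
    if (s.Length == 11 && s[6] == '-')
        s = s.Remove(6, 1);

    return s.Length == 10 && s.All(c => c >= '0' && c <= '9') ? s : null;
}

Console.WriteLine(NormalizeCpr("050301-4231 ")); // 0503014231
Console.WriteLine(NormalizeCpr("1211993321"));   // 1211993321
Console.WriteLine(NormalizeCpr("foo") is null);  // True
```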

Using your fancy Test Data Generator, you'd like to write a test that verifies that your parser follows Postel's law: Be conservative in what you send, be liberal in what you accept.

Assume that you already have a generator that can produce valid CprNumber objects. One test strategy, then, could be to generate a valid CprNumber object, convert it to a string, slightly taint or mangle that string, and then attempt to parse the tainted string.

There's more than one way to taint a valid CPR number string. You could, for example, define three functions like these:

    Func<string, string> addLeadingSpace = s => " " + s;
    Func<string, string> addTrailingSpace = s => s + " ";
    Func<string, string> removeDash = s => s.Replace("-", "");

The first function adds a leading space to a string, the second adds a trailing space, and the third removes all dashes it finds. Can you make a generator out of those functions?

Yes, you can, with this common general-purpose method:

    public static Generator<T> Elements<T>(params T[] alternatives)
    {
        if (alternatives == null)
            throw new ArgumentNullException(nameof(alternatives));

        return new Generator<T>(r =>
        {
            var index = r.Next(alternatives.Length);
            return alternatives[index];
        });
    }

This generator randomly selects a value from an array of values, with equal probability. It's a common method, because you'll find equivalent functions in QuickCheck, FsCheck, and Hedgehog (where it's called item).

You can write the entire test like this:

    [Fact]
    public void ParseCorrectlyHandlesTaintedInput()
    {
        Func<string, string> addLeadingSpace = s => " " + s;
        Func<string, string> addTrailingSpace = s => s + " ";
        Func<string, string> removeDash = s => s.Replace("-", "");
        Generator<Func<string, string>> tainters = Gen.Elements(
            addLeadingSpace,
            addTrailingSpace,
            removeDash,
            s => addLeadingSpace(removeDash(s)),
            s => addTrailingSpace(addLeadingSpace(s)));

        var g = tainters.Apply(Gen.CprNumber.Select(n => n.ToString()));

        var rnd = new Random();
        var candidate = g.Generate(rnd);
        CprNumber dummy;
        var actual = CprNumber.TryParse(candidate, out dummy);

        Assert.True(actual);
    }

You first define the three 'tainting' functions, and then create the tainters generator. Notice that this generator not only contains the three functions, but also combinations of these functions. I didn't include all possible combinations of functions, but in an earlier article, you saw how to do just that.

Gen.CprNumber is a Generator<CprNumber>, while you actually need a Generator<string>. Since Generator<T> is a functor, you can easily map Gen.CprNumber with its Select method.

You now have a Generator<Func<string, string>> (tainters) and a Generator<string>, so you can combine them using Apply. The result g is another Generator<string> that generates 'tainted', but still valid, CPR string representations.

In order to keep the example as simple as possible, the Act and Assert phases of the test only check whether CprNumber.TryParse returns true. A better test would also verify that the resulting CprNumber value was correct, but in order to do that, you need to keep the originally generated CprNumber object around so that you can compare this expected value to the actual value. This is possible, but complicates the code, so I left it out of the example.

F# Hedgehog #

You can translate the above unit test to F#, using Hedgehog as a property-based testing library. Another option would be FsCheck. Without further ado, I present to you the test:

    [<Fact>]
    let ``Correctly parses tainted text`` () = Property.check <| property {
        let removeDash (s : string) = s.Replace ("-", "")
        let! candidate =
            Gen.item [
                sprintf " %s"
                sprintf "%s "
                removeDash
                removeDash >> sprintf " %s"
                sprintf " %s "]
            <*> Gen.map string Gen.cprNumber

        let actual = Cpr.tryParse candidate

        test <@ actual |> Option.isSome @> }

As I already mentioned in passing, Hedgehog's equivalent to the above Elements method is called Gen.item. Here, you see the same five functions as above passed in a list. Thanks to partial application and type inference, an expression like sprintf " %s" is already a function of the type string -> string, as is removeDash. For the last of the five functions, I found it easier to write (and read) sprintf " %s " instead of sprintf " %s" >> sprintf "%s ".

Equivalent to the C# example, Gen.cprNumber is a Gen<CprNumber>, so mapping it with the built-in string function translates it to a Gen<string>.

Hedgehog already includes an <*> operator; Gen is an applicative functor.

Summary #

Applicative functors are fairly common. I find it intuitive to think of them as an abstraction of how to make combinations of things. Modelling a Test Data Generator as an applicative functor enables you to create random combinations of functions and values.

While working on the Hedgehog example, I discovered another great use of option as an applicative functor. You can see this in the next article.

Next: Danish CPR numbers in F#.

Functional architecture: a definition

How do you know whether your software architecture follows good functional programming practices? Here's a way to tell.

Over the years, I've written articles on functional architecture, including Functional architecture is Ports and Adapters, given conference talks, and even produced a Pluralsight course on the topic. How should we define functional architecture, though?

People sometimes ask me about their F# code: How do I know that my F# code is functional?

Please permit me a little detour before I answer that question.

What's the definition of object-oriented design? #

Object-oriented design (OOD) has been around for decades; at least since the nineteen-sixties. Sometimes people get into discussions about whether or not a particular design is good object-oriented design. I know, since I've found myself in such discussions more than once.

These discussions usually die out without resolution, because it seems that no-one can provide a sufficiently rigorous definition of OOD that enables people to determine an outcome. One thing's certain, though, so I'd like to posit this corollary to Godwin's law:

As a discussion about OOD grows longer, the probability of a comparison involving Alan Kay approaches 1.

Not that I, in any way, wish to suggest any logical relationship between Alan Kay and Hitler, but in a discussion about OOD, sooner or later someone states:

"That's not what Alan Kay had in mind!"

That may be true, even.

My problem with that assertion is that I've never been able to figure out exactly what Alan Kay had in mind. It's something that involves message-passing and Smalltalk, and conceivably, the best modern example of this style of programming might be Erlang (often, ironically, touted as a functional programming language).

This doesn't seem to be a good basis for determining whether or not something is object-oriented.

In any case, despite what Alan Kay had in mind, that wasn't the object-oriented programming we got. While Eiffel is in many ways a strange programming language, the philosophy of OOD presented in Object-Oriented Software Construction feels, to me, like something from which Java could develop.

I'm not aware of the detailed history of Java, but the spirit of the language seems more compatible with Bertrand Meyer's vision than with Alan Kay's.

Subsequently, C# would hardly look the way it does had it not been for Java.

The OOD we got wasn't the OOD originally envisioned. To make matters worse, the OOD we did get seems to be driven by unclear principles. Yes, there's the idea about encapsulation, but while Meyer had some very specific ideas about design-by-contract, that was the distinguishing trait of his vision that didn't make the transition to Java or C#.

It's not clear what OOD is, but I think we can do better when it comes to functional programming (FP).

Referential transparency #

It's possible to pinpoint what FP is to a degree not possible with OOD. Some people may be uncomfortable with the following definition; I don't claim that this is a generally accepted definition. It does have, however, the advantage that it's precise and supports falsification.

The foundation of FP is referential transparency. It's the idea that, for an expression, the left- and right-hand sides of the equal sign are truly equal:

two = 1 + 1

In Haskell, this is enforced by the compiler. The = operator truly implies equality. To be clear, this isn't the case in C#:

var two = 1 + 1;

In C#, Java, and other imperative languages, the = implies assignment, not equality. Here, two can change, despite the absurdity of the claim.

When code is referentially transparent, then you can substitute the expression on the right-hand side with the symbol on the left-hand side. This seems obvious when we consider addition of two numbers, but becomes less clear when we consider function invocation:

i = findBestNumber [42, 1337, 2112, 90125]

In Haskell, functions are referentially transparent. You don't know exactly what findBestNumber does, but you do know that you can substitute i with findBestNumber [42, 1337, 2112, 90125], or vice versa.

In order for a function to be referentially transparent (also known as a pure function), it must have two properties:

- It must always return the same output for the same input. We call this quality determinism.

- It must have no side effects.

The reason I prefer this definition is that it supports falsification. You can assert that a function or value is pure; all it takes is a single counter-example to prove that it's not. A counter-example can be either an input value that doesn't always produce the same return value, or a function call that produces a side effect.
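The falsification test can be sketched in C#, even though the C# compiler doesn't enforce any of it. The two functions below, `Add` and `NextId`, are invented for the illustration.

```csharp
using System;

// Pure: same output for the same input, and no side effects.
static int Add(int x, int y) => x + y;

// Impure: each call mutates state (a side effect), and the return value
// depends on how many times the function has been called before.
var counter = 0;
int NextId() => ++counter;

Console.WriteLine(Add(1, 1) == Add(1, 1)); // True
Console.WriteLine(NextId() == NextId());   // False: a single counter-example
```

Note the asymmetry: the first line doesn't prove that Add is pure, since it only fails to falsify the claim for one input, whereas the second line is enough to establish that NextId is impure.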

I'm not aware of any other definition that offers similar decision power.

IO #

All software produces side effects: Changing a pixel on a monitor is a side effect. Writing a byte to disk is a side effect. Transmitting a bit over a network is a side effect. It seems that it'd be impossible to interact with pure functions, and indeed, it is, without some sort of affordance for impurity.

Haskell resolves this problem with the IO monad, but the purpose of this article isn't to serve as an introduction to Haskell, monads, or IO. The point is only that in FP, you need some sort of 'wormhole' that will enable you to interact with the real world. There's no way around that, but logically, the rules still apply. Pure functions must stay deterministic and free of side effects.

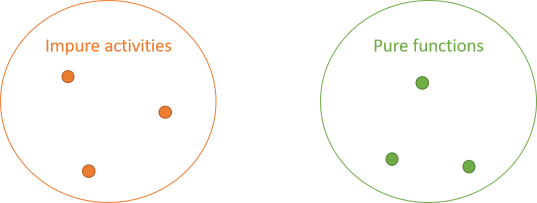

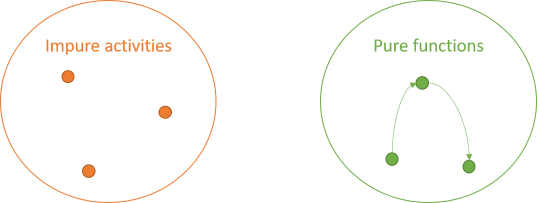

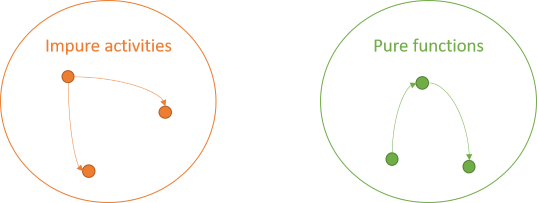

It follows that you have two groups of operations: impure activities and pure functions.

While there are rules for pure functions, those rules still allow for interaction. One pure function can call another pure function. Such an interaction doesn't change the properties of any of those functions. Both caller and callee remain side-effect-free and deterministic.

The impure activities can also interact. No rules apply to them:

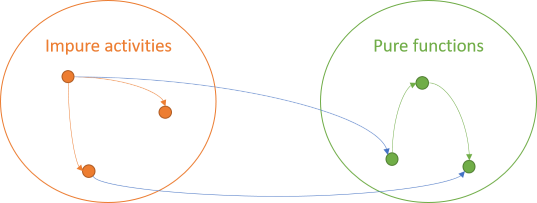

Finally, since no rules apply to impure activities, they can invoke pure functions:

Impure activities are unbound by rules, so they can do anything they need to do, including painting pixels, writing to files, or calling pure functions. A pure function is deterministic and has no side effects. Those properties don't change just because the result is subsequently displayed on a screen.

The fourth combination of arrows is, however, illegal.

A pure function can't invoke an impure activity. If it did, it would either transitively produce a side effect or non-deterministic behaviour.

This is the rule of functional architecture; the rest of this article calls it the functional interaction law. You can also explain it with a table:

| | Callee: Impure | Callee: Pure |
|---|---|---|
| Caller: Impure | Valid | Valid |
| Caller: Pure | Invalid | Valid |

Clearly, you can trivially obey the functional interaction law by writing exclusively impure code. In a sense, this is what you do by default in imperative programming languages. If you're familiar with Haskell, imagine writing an entire program in IO. That would be possible, but pointless.

Thus, we need to add the qualifier that a significant part of the code base should consist of pure code. How much? The more, the better. Subjectively, I'd say significantly more than half the code base should be pure. I'm concerned, though, that stating a hard limit is as useful here as it is for code coverage.

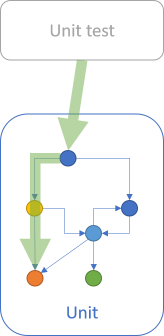

Tooling #

How do you verify that you obey the functional interaction law? Unfortunately, in most languages the answer is that this requires painstaking analysis. This can be surprisingly tricky to get right. Consider this realistic F# example:

    let createEmailNotification templates msg (user : UserEmailData) =
        let { SubjectLine = subjectTemplate; Content = contentTemplate } =
            templates
            |> Map.tryFind user.Localization
            |> Option.defaultValue (Map.find Localizations.english templates)
        let r =
            Templating.append
                (Templating.replacementOfEnvelope msg)
                (Templating.replacementOfFlatRecord user)
        let subject = Templating.run subjectTemplate r
        let content = Templating.run contentTemplate r
        {
            RecipientUserId = user.UserId
            EmailAddress = user.EmailAddress
            NotificationSubjectLine = subject
            NotificationText = content
            CreatedDate = DateTime.UtcNow
        }

Is this a pure function?

You may protest that this isn't a fair question, because you don't know what, say, Templating.replacementOfFlatRecord does, but that turns out to be irrelevant. The presence of DateTime.UtcNow makes the entire function impure, because getting the current date and time is non-deterministic. This trait is transitive, which means that any code that calls createEmailNotification is also going to be impure.

That means that the purity of an expression like the following easily becomes obscure.

let emailMessages = specificUsers |> Seq.map (createEmailNotification templates msg)

Is this a pure expression? In this case, we've just established that createEmailNotification is impure, so that wasn't hard to answer. The problem, however, is that the burden is on you, the code reader, to remember which functions are pure, and which ones aren't. In a large code base, this soon becomes a formidable endeavour.
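One common way to deal with a hidden impurity like DateTime.UtcNow is to turn the timestamp into an argument, so that the clock is read by an impure caller, which the functional interaction law permits. The following C# sketch uses invented names and a tuple instead of the record type from the F# example; it isn't a translation of createEmailNotification, only an illustration of the idea.

```csharp
using System;

// Pure: the timestamp is an input, so equal arguments give equal results.
static (string Recipient, string Subject, DateTime CreatedDate) CreateNotification(
    string recipient, string subject, DateTime now)
{
    return (recipient, subject, now);
}

// Impure: reads the clock, then delegates to the pure function.
(string Recipient, string Subject, DateTime CreatedDate) CreateNotificationNow(
    string recipient, string subject)
{
    return CreateNotification(recipient, subject, DateTime.UtcNow);
}

var fixedTime = new DateTime(2018, 11, 19, 8, 0, 0, DateTimeKind.Utc);
var a = CreateNotification("user@example.com", "Hello", fixedTime);
var b = CreateNotification("user@example.com", "Hello", fixedTime);
Console.WriteLine(a == b); // True

var c = CreateNotificationNow("user@example.com", "Hello");
Console.WriteLine(c.CreatedDate.Kind); // Utc
```

Nothing in C# prevents someone from later calling DateTime.UtcNow inside CreateNotification, so the burden of remembering which function is pure remains with the reader.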

It'd be nice if there was a tool that could automatically check the functional interaction law.

This is where many people in the functional programming community become uncomfortable about this definition of functional architecture. The only tools that I'm aware of that enforce the functional interaction law are a few programming languages, most notably Haskell (others exist, too).

Haskell enforces the functional interaction law via its IO type. You can't use an IO value from within a pure function (a function that doesn't return IO). If you try, your code doesn't compile.

I've personally used Haskell repeatedly to understand the limits of functional architecture, for example to establish that Dependency Injection isn't functional because it makes everything impure.

The overall lack of tooling, however, may make people uncomfortable, because it means that most so-called functional languages (e.g. F#, Erlang, Elixir, and Clojure) offer no support for validating or enforcing functional architecture.

My own experience with writing entire applications in F# is that I frequently, inadvertently violate the functional interaction law somewhere deep in the bowels of my code.

Conclusion #

What's functional architecture? I propose that it's code that obeys the functional interaction law, and that is made up of a significant portion of pure functions.

This is a narrow definition. It excludes a lot of code bases that could easily be considered 'functional enough'. By the definition, I don't intend to denigrate fine programming languages like F#, Clojure, Erlang, etcetera. I personally find it a joy to write in F#, which is my default language choice for .NET programming.

My motivation for offering this definition, albeit restrictive, is to avoid the OOD situation where it seems entirely subjective whether or not something is object-oriented. With the functional interaction law, we may conclude that most (non-Haskell) programs are probably not 'really' functional, but at least we establish a falsifiable ideal to strive for.

This would enable us to look at, say, an F# code base and start discussing how close to the ideal it is.

Ultimately, functional architecture isn't a goal in itself. It's a means to achieve an objective, such as a sustainable code base. I find that FP helps me keep a code base sustainable, but often, 'functional enough' is sufficient to accomplish that.

Comments

Good idea of basing the definition on falsifiability!

The createEmailNotification example makes me wonder though. If it is not a functional design, then what design is it actually? I mean, it has to be some design, and it does not look like an object-oriented or procedural one.

Max, thank you for writing. I'm not sure whether the assertion that it has to be some design or other is axiomatically obvious, but I suppose that we humans have an innate need to categorise things.

Don Syme calls F# a functional-first language, and that epithet could easily apply to that style of programming as well. Other options could be near-functional, or perhaps, if we're in a slightly more academic mood, quasi-functional.

In daily use, we'd probably still call code like that functional, and I don't think it'll cause much confusion.

If I remember the history of programming correctly, the first attempts at functional programming didn't focus on referential transparency, but simply on the concept of functions as first-class language features, including lambda expressions and higher-order functions. The little I know of Lisp corroborates that view on the history of functional programming.

Only later (I think) did languages appear that make compile-time distinction between pure and impure code.

My purpose with this article wasn't to exclude a large number of languages or code bases from being functional, but just to offer a falsifiable test that we can use for evaluation purposes, if we're ever in doubt. This is something that I feel is sorely missing from the object-oriented debate, and while I can't think of a way to remedy the OOD situation, I hope that the present definition of functional architecture presents firmer ground upon which to have a debate.

Thank you for your definition that forms good grounds for reasoning about functional property of code. I remember some people say that even C# is functional as it allows for delegates. Several thoughts:

1. Remember [Pure] attribute and CodeContracts? It aided the purpose of defensive programming to hold pre/postconditions and invariants. I have this impression that both functional purity and defensive programming help us find ad-hoc quasi-mathematical proofs of code correctness after we load a codebase to our heads. It's way easier then to presume how it works and make changes. Of course it's not mathematical in the strict sense, but still - we tend to trust the code more (e.g. threading). I'm pretty sure unit tests belong to this family too.

2. Isn't the purity concept anywhere close to gateways (code that crosses the boundaries of program determinism control zone, e.g. IO, time, volatile memory)? It's especially evident while refactoring legacy code to make it unit testable. We often extract the infrastructure-dependent parts (impure activities, e.g. DateTime.Now) so that the rest can be deterministic (pure) and hence testable.

3. Can the whole program/system be as pure as this:

INPUT -> PURE_CODE -> OUTPUT

I'm afraid not, as it'd mean the pure code needed to know all the required input data upfront. That's impossible most of the time. So, when it comes to measures, I'd argue that the number of pure code lines is enough to tell how pure the codebase is. I'd accompany this with e.g. percentile distribution of pure chunk length (functions/blocks of contingent im/purity etc.). E.g. I'd personally favour 1) over 2) in the following:

1. INPUT -> 3 x LONG_PURE_CHUNK + 2 x LONG_IMPURE_CHUNK -> OUTPUT
2. INPUT -> 10 x SHORT_PURE_CHUNK + 7 x SHORT_IMPURE_CHUNK -> OUTPUT

4. I'd love to see such tools too :) I believe the purity concept does not pertain only to FP nor OO and is related to the early foundational days of computer programming with mathematical proofs they had.

Marcin, thank you for writing. To be clear, you can perform side effects or non-deterministic behaviour in C# delegates, so delegates aren't guaranteed to be referentially transparent. In fact, delegates, and particularly closures, are isomorphic to objects. In C#, they even compile to classes.
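The point about closures and objects can be sketched in F# as well as in C#. In the following illustration, makeCounter and Counter are hypothetical names made up for this example. The closure captures mutable state, so calling it twice with the same argument produces two different results, and the class next to it exposes the same behaviour as an object.

```fsharp
// A function value that closes over mutable state (a ref cell).
let makeCounter () : unit -> int =
    let count = ref 0
    fun () ->
        count.Value <- count.Value + 1
        count.Value

// The same behaviour expressed as an object with a single method.
type Counter () =
    let mutable count = 0
    member this.Next () : int =
        count <- count + 1
        count

let next = makeCounter ()
let first = next ()   // 1
let second = next ()  // 2: same expression, different result
```

Since next () doesn't always evaluate to the same value, it isn't referentially transparent, even though it looks like an ordinary function call.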

I'm aware that some people think that they're doing functional programming when they use lambda expressions in C#, but I disagree.

I'll see if I can address your other comments below.

Re: 1. #

Some functions are certainly ad-hoc, while others are grounded in mathematics. I agree that pure functions 'fit better in your brain', mostly because it's clear that the only stimulus that can affect the outcome of the function is the input. Granted, if we imagine a concrete, ad-hoc function that takes 23 function arguments, we might still consider it bad code. Small functions, though, tend to be easy to reason about.

I've previously written about the relationship between types and tests, and I believe that there's some sort of linearity in that relationship. The better the type system, the fewer tests you need. The weaker the type system, the more tests you need. It's no wonder that the unit testing culture related to languages like Ruby seems stronger than in the Haskell community.

Re: 2. #

In my experience, most people make legacy code more testable by introducing some variation of Dependency Injection. This may enable you to control some otherwise non-deterministic behaviour from tests, but it doesn't make the design more functional. Those types of dependencies (e.g. on DateTime.Now) are inherently impure, and therefore they make everything that invokes them impure as well. The above functional interaction law excludes such a design from being considered functional.

Functional code, on the other hand, is intrinsically testable. Watch out for a future series of articles that show how to move an object-oriented architecture towards something both more functional, more sustainable, and more testable.

Re: 3. #

A command-line utility could be as pure as you suggest, but most other programs will need to at least

- load some more data from impure sources, such as files, databases, or the current time,

- run some pure functions,

- output the results to some impure destinations, such as files, databases, UI, email, and so on.
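The three steps above could be sketched like this in F#. It's only an illustration under assumptions: formatReport and run are hypothetical names, and reading lines from one file and writing a report to another is an invented scenario, not something taken from a real code base.

```fsharp
open System
open System.Globalization
open System.IO

// Pure: everything the function needs arrives as arguments.
let formatReport (now : DateTime) (lines : string list) : string =
    let date = now.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture)
    let header = sprintf "Report created %s" date
    String.concat "\n" (header :: lines)

// Impure edge: gather data, call the pure function, publish the result.
let run (inputPath : string) (outputPath : string) : unit =
    let now = DateTime.UtcNow                                // impure
    let lines = File.ReadAllLines(inputPath) |> List.ofArray // impure
    let report = formatReport now lines                      // pure
    File.WriteAllText (outputPath, report)                   // impure
```

Only run touches the clock and the file system. Because formatReport receives the current time as an ordinary value, it can be called with fixed arguments and will always return the same string.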

Re: 4. #

I'm not sure I understand what you mean by that comment, but the mathematical proofs about computability pre-date computer programming. Gödel's work on recursive functions is from 1933, Church's work on lambda calculus is from 1936, and Turing's paper On Computable Numbers, with an Application to the Entscheidungsproblem is from later in 1936. Turing and Church later showed that all three definitions of computability are equivalent.

I don't know much about Gödel's work, but lambda calculus is defined entirely on the foundation of functions, while the Turing machines described in Turing's paper have nothing to do with functions in the mathematical sense.

Mathematical functions are, however, referentially transparent. They're also total, meaning that they always return a result for any input in the function's domain. Due to the halting problem, a Turing-complete language can't guarantee that all functions are total.

Mathematical functions are not always defined for all input, as seen with division (since it's only defined in ℝ ∖ {0}, real numbers without 0). We see for instance the term partial applied instead of total. You could say that the domain of division in ℝ is ℝ ∖ {0}.

Total functions for some space S, where the domain of the function is the same as S, are nice to have, but we are not always that fortunate.
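To make the distinction between partial and total concrete in code, here's a small F# sketch using integer division. The names divide and tryDivide are made up for this illustration. The first function is partial because it throws an exception for some inputs, while the second is made total by widening the return type to an option.

```fsharp
open System

// Partial: throws an exception when y is 0, and also for
// Int32.MinValue / -1, because the result doesn't fit in an int.
let divide (x : int) (y : int) : int = x / y

// Total: every pair of ints maps to a value, because the return type
// has room for 'no result'.
let tryDivide (x : int) (y : int) : int option =
    if y = 0 || (x = Int32.MinValue && y = -1) then None
    else Some (x / y)

let defined = tryDivide 6 3    // Some 2
let undefined = tryDivide 1 0  // None
```

The type of divide claims that it returns an int for every pair of ints, which isn't true. The type of tryDivide tells the caller up front that a result may be missing.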

Oskar, yes, you're right. I admit that what I wrote about totality above was a bit too sweeping. If we really start to dissect what I wrote, it's wrong on more levels. Clearly, mathematical functions (or, at least, algorithms) that never return exist; an algorithm to calculate all the decimals of π would be an example.

If I understand the results that both Church and Gödel arrived at, such algorithms can be encoded in such a way that we can also consider them mathematical functions. If so, then not all mathematical functions are total.

As you may be able to tell, it wasn't entirely clear to me what I was supposed to respond to, and it seems (now that I reread what I wrote) that I managed to write a few sentences that don't make clear sense. My main point, however, seems to have been that we can't write a general-purpose tool that'll be able to determine whether or not any arbitrary function is total or not. Does that part seem reasonable?

Thanks for this article. I feel there is a huge benefit of having a precise definition like that.

I want to point out that there is one more dimension in deciding how far a piece of code is from ‘the ideal functional architecture’. And that is the developer's intention. I know, that sounds stupid. And technically that code is not pure. But we are talking about how close to the ideal it is.

This is a problem I discovered some time ago. Especially in dynamic languages even the code that looks pure might not be strictly speaking pure because of some dynamic behaviour of the language and other quirks. There are some examples in JavaScript in this article: Do pure functions exist in JavaScript?.

Why is this a different dimension? For F# code there could exist a static analysis tool that would discover usage of impure functions down in the code base. That is simply impossible in e.g. JavaScript. So I suggest that the intention the developer had and which is manifested in the code (assuming no harmful behaviour) should also play its role.

What do you and others think? Should such a soft view be considered?

Robin, thank you for writing. If I understand you correctly, you're asking whether we should consider code functional if the programmer intended it to be functional?

To be clear, I don't think that a static analysis tool like the one you suggest could be made for a language like F#. Not only do you need to analyse all of your own code, but if your code calls other libraries, you need to know whether or not these other libraries are pure as well. That's not a trivial undertaking.

My point is that even in F#, a programmer could intend to write pure code, yet still inadvertently fail to do so. Should we still consider it functional?

I admit that I haven't thought deeply about this, but I'd be inclined to say no. I'm sure that you can think of many examples, from other walks of life, where good intentions led to bad outcomes.

The goal of functional architecture is ultimately larger than just purity for the sake of purity. Why is functional programming valuable? I believe that it's valuable because a pure function holds few surprises. When you call a pure function, it doesn't have unintended side-effects. The lack of state mutation eradicates aliasing bugs.

If a programmer intends to write functional code, but nevertheless leaves behind many surprises in the code, the potential of functional programming remains unfulfilled.

Perhaps I misunderstood what you meant, so please elaborate if I missed your point.

You mention that most languages provide no way to determine whether or not a function is pure. I agree that this is an oversight in functional programming, and while F# is my favorite language, there is one that is, you could say, quite surprisingly well defined in this regard. Surprisingly, because it is not a functional language per se. It supports nearly all the important concepts, although not something like an HMD type system or immutable data structures.

I am speaking about Nim. It has the keyword func for pure functions and, as its opposite, the keyword proc for procedures. In that context it is already obvious what the cause of all this confusion is:

Calling a thing a function while it is actually something impure has led to the acceptance that functions can be impure.

And this is the cause of all this trouble: Both in programming, and as well about this specific topic. Talking about implicit versus explicit as well.

Matthias, thank you for writing. I didn't know about Nim.