ploeh blog danish software design

From Test Data Builders to the identity functor

The Test Data Builder unit testing design pattern is closely related to the identity functor.

The Test Data Builder design pattern is a valuable technique for managing data for unit testing. It enables you to express test cases in such a way that the important parts of the test case stand out in your code, while the unimportant parts disappear. It perfectly fits Robert C. Martin's definition of an abstraction:

"Abstraction is the elimination of the irrelevant and the amplification of the essential"

Not only are Test Data Builders great abstractions, but they're also eminently composable. You can use fine-grained Test Data Builders as building blocks for more complex Test Data Builders. This turns out to be more than a coincidence. In this series of articles, you'll learn how Test Data Builders are closely related to the identity functor. If you don't know what a functor is, then keep reading; you'll learn about functors as well.

- Test Data Builders in C#

- Generalised Test Data Builder

- The Builder functor

- Builder as Identity

- Test data without Builders

- (The Test Data Generator functor)

By reading these articles, you'll learn:

- How to make your code easier to use in unit tests.

- What a functor is.

- How Test Data Builders generalise.

- Why Test Data Builders are composable.

For readers wondering if this is 'yet another monad tutorial', it's not; it's a functor tutorial.

Next: Test Data Builders in C#.

F# free monad recipe

How to create free monads in F#.

This is not a design pattern, but it's something related. Let's call it a recipe. A design pattern should, in my opinion, be fairly language-agnostic (although hardly universally applicable). This article, on the contrary, specifically addresses a problem in F#:

How do you create a free monad in F#?

By following the present recipe.

The recipe here is a step-by-step process, but be sure to first read the sections on motivation and when to use it. A free monad isn't a goal in itself.

This article doesn't attempt to explain the details of free monads, but instead serves as a reference. For an introduction to free monads, I think my article Pure times is a good place to start. See also the Motivating examples section, below.

Motivation #

A frequently asked question about F# is: what's the F# equivalent to an interface? There's no single answer to this question, because, as always, It Depends™. Why do you need an interface in the first place? What is its intended use?

Sometimes, in OOP, an interface can be used for a Strategy. This enables you to dynamically replace or select between different (sub)algorithms at run-time. If the algorithm is pure, then an idiomatic F# equivalent would be a function.
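As a minimal sketch of that idea, here's a pure Strategy expressed as a plain function argument. The names (total, noDiscount, tenPercentOff) are hypothetical and exist only for this illustration:

```fsharp
// Hypothetical example: a pure 'strategy' is just a function value.

// (decimal -> decimal) -> decimal list -> decimal
let total (applyDiscount : decimal -> decimal) (prices : decimal list) =
    prices |> List.sumBy applyDiscount

// Two interchangeable (sub)algorithms with the same type.
let noDiscount (price : decimal) = price
let tenPercentOff (price : decimal) = price * 0.9m

// Select the strategy at run-time by passing a different function.
let regularTotal = total noDiscount [ 10m; 20m ]
let discountedTotal = total tenPercentOff [ 10m; 20m ]
```

No interface is required; any function of the type decimal -> decimal will do.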

At other times, though, the person asking the question has Dependency Injection in mind. In OOP, dependencies are often modelled as interfaces with several members. Such dependencies are systematically impure, and thereby not part of functional design. If at all possible, prefer impure/pure/impure sandwiches over interactions. Sometimes, however, you'll need something that works like an interface or abstract base class. Free monads can address such situations.

In general, a free monad allows you to build a monad from any functor, but why would you want to do that? The most common reason I've encountered is exactly in order to model impure interactions in a pure manner; in other words: Dependency Injection.

Refactor interface to functor #

This recipe comes in three parts:

- A recipe for refactoring interfaces to a functor.

- The core recipe for creating a monad from any functor.

- A recipe for adding an interpreter.

Imagine that you have an interface that you'd like to refactor. In C# it might look like this:

    public interface IFace
    {
        Out1 Member1(In1 input);
        Out2 Member2(In2 input);
    }

In F#, it'd look like this:

    type IFace =
        abstract member Member1 : input:In1 -> Out1
        abstract member Member2 : input:In2 -> Out2

I've deliberately kept the interface vague and abstract in order to showcase the recipe instead of a particular example. For realistic examples, refer to the examples section, further down.

To refactor such an interface to a functor, do the following:

- Create a discriminated union. Name it after the interface name, but append the word instruction as a suffix.

- Make the union type generic.

- For each member in the interface, add a case.

  - Name the case after the name of the member.

  - Declare the type of data contained in the case as a pair (a two-element tuple).

  - Declare the type of the first element in that tuple as the type of the input argument(s) to the interface member. If the member has more than one input argument, declare it as a (nested) tuple.

  - Declare the type of the second element in the tuple as a function. The input type of that function should be the output type of the original interface member, and the output type of the function should be the generic type argument for the union type.

- Add a map function for the union type. I'd recommend making this function private and avoid naming it map in order to prevent naming conflicts. I usually name this function mapI, where the I stands for instruction.

  - The map function should take a function of the type 'a -> 'b as its first (curried) argument, and a value of the union type as its second argument. It should return a value of the union type, but with the generic type argument changed from 'a to 'b.

  - For each case in the union type, map it to a value of the same case. Copy the (non-generic) first element of the pair over without modification, but compose the function in the second element with the input function to the map function.

Applied to the IFace example, the recipe produces this union type:

    type FaceInstruction<'a> =
    | Member1 of (In1 * (Out1 -> 'a))
    | Member2 of (In2 * (Out2 -> 'a))

The map function becomes:

    // ('a -> 'b) -> FaceInstruction<'a> -> FaceInstruction<'b>
    let private mapI f = function
        | Member1 (x, next) -> Member1 (x, next >> f)
        | Member2 (x, next) -> Member2 (x, next >> f)

Such a combination of union type and map function satisfies the functor laws, so that's how you refactor an interface to a functor.
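If you'd like to convince yourself that the laws hold, you can spot-check them. The following is only a sketch: the concrete types chosen for In1, Out1, In2, and Out2 are placeholders of my own, the run helper is hypothetical, and mapI is left public so that the snippet stands alone. Checking a single sample value isn't a proof, of course.

```fsharp
// Placeholder types, chosen only so that the sketch compiles.
type In1 = int
type Out1 = string
type In2 = string
type Out2 = bool

type FaceInstruction<'a> =
| Member1 of (In1 * (Out1 -> 'a))
| Member2 of (In2 * (Out2 -> 'a))

// ('a -> 'b) -> FaceInstruction<'a> -> FaceInstruction<'b>
let mapI f = function
    | Member1 (x, next) -> Member1 (x, next >> f)
    | Member2 (x, next) -> Member2 (x, next >> f)

// Instructions contain functions, so they can't be compared directly.
// This hypothetical helper feeds canned output to the continuation.
// Out1 -> Out2 -> FaceInstruction<'a> -> 'a
let run out1 out2 = function
    | Member1 (_, next) -> next out1
    | Member2 (_, next) -> next out2

let sample : FaceInstruction<int> = Member1 (42, String.length)

// First functor law: mapping id changes nothing observable.
let identityHolds =
    (run "foo" true (mapI id sample)) = (run "foo" true sample)

// Second functor law: mapping a composition equals composing two maps.
let f x = x + 1
let g x = x * 2
let lhs = run "foo" true (mapI (f >> g) sample)
let rhs = run "foo" true (sample |> mapI f |> mapI g)
let compositionHolds = (lhs = rhs)
```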

Free monad recipe #

Given any functor, you can create a monad. The monad will be a new type that contains the functor; you will not be turning the functor itself into a monad. (Some functors can be turned into monads themselves, but if that's the case, you don't need to create a free monad.)

The recipe for turning any functor into a monad is as follows:

- Create a generic discriminated union. You can name it after the underlying functor, but append a suffix such as Program. In the following, this is called the 'program' union type.

- Add two cases to the union: Free and Pure.

  - The Free case should contain a single value of the contained functor, generically typed to the 'program' union type itself. This is a recursive type definition.

  - The Pure case should contain a single value of the union's generic type.

- Add a bind function for the new union type. The function should take two arguments:

  - The first argument to the bind function should be a function that takes the generic type argument as input, and returns a value of the 'program' union type as output. In the rest of this recipe, this function is called f.

  - The second argument to the bind function should be a 'program' union type value.

- The return type of the bind function should be a 'program' union type value, with the same generic type as the return type of the first argument (f).

- Declare the bind function as recursive by adding the rec keyword.

- Implement the bind function by pattern-matching on the Free and Pure cases:

  - In the Free case, pipe the contained functor value to the functor's map function, using bind f as the mapper function; then pipe the result of that to Free.

  - In the Pure case, return f x, where x is the value contained in the Pure case.

- Add a computation expression builder, using bind for Bind and Pure for Return.

For the running example, the 'program' union type becomes:

    type FaceProgram<'a> =
    | Free of FaceInstruction<FaceProgram<'a>>
    | Pure of 'a

It's worth noting that the Pure case always looks like that. While it doesn't take much effort to write it, you could copy and paste it from another free monad, and no changes would be required.

According to the recipe, the bind function should be implemented like this:

    // ('a -> FaceProgram<'b>) -> FaceProgram<'a> -> FaceProgram<'b>
    let rec bind f = function
        | Free x -> x |> mapI (bind f) |> Free
        | Pure x -> f x

Apart from one small detail, the bind function always looks like that, so you can often copy and paste it from here and use it in your code, if you will. The only variation is that the underlying functor's map function isn't guaranteed to be called mapI - but if it is, you can use the above bind function as is. No modifications will be necessary.

In F#, a monad is rarely a goal in itself, but once you have a monad, you can add a computation expression builder:

    type FaceBuilder () =
        member this.Bind (x, f) = bind f x
        member this.Return x = Pure x
        member this.ReturnFrom x = x
        member this.Zero () = Pure ()

While you could add more members (such as Combine, For, TryFinally, and so on), I find that usually, those four methods are all I need.

Create an instance of the builder object, and you can start writing computation expressions:

let face = FaceBuilder ()

Finally, as an optional step, if you've refactored an interface to an instruction set, you can add convenience functions that lift each instruction case to the free monad type:

- For each case, add a function of the same name, but camelCased instead of PascalCased.

- Each function should have input arguments that correspond to the first element of the case's contained tuple (i.e. the input argument for the original interface). I usually prefer the arguments in curried form, but that's not a requirement.

- Each function should return the corresponding instruction union case inside of the Free case. The case constructor must be invoked with the pair of data it requires. Populate the first element with values from the input arguments to the convenience function. The second element should be the Pure case constructor, passed as a function.

Here are the lift functions for the running example, FaceInstruction<'a>:

    // In1 -> FaceProgram<Out1>
    let member1 in1 = Free (Member1 (in1, Pure))

    // In2 -> FaceProgram<Out2>
    let member2 in2 = Free (Member2 (in2, Pure))

Such functions are conveniences that make it easier to express what the underlying functor expresses, but in the context of the free monad.

Interpreters #

A free monad is a recursive type, and values are trees. The leaves are the Pure values. Often (if not always), the point of a free monad is to evaluate the tree in order to pull the leaf values out of it. In order to do that, you must add an interpreter. This is a function that recursively pattern-matches over the free monad value until it encounters a Pure case.

At least in the case where you've refactored an interface to a functor, writing an interpreter also follows a recipe. This is equivalent to writing a concrete class that implements an interface.

- For each case in the instruction-set functor, write an implementation function that takes the case's 'input' tuple element type as input, and returns a value of the type used in the case's second tuple element. Recall that the second element in the pair is a function; the output type of the implementation function should be the input type for that function.

- Add a function to implement the interpreter; I often call it interpret. Make it recursive by adding the rec keyword.

- Pattern-match on Pure and each case contained in Free.

  - In the Pure case, simply return the value contained in the case.

  - In the Free case, pattern-match the underlying pair out of each of the instruction-set functor's cases. The first element of that tuple is the 'input value'. Pipe that value to the corresponding implementation function, pipe the return value of that to the function contained in the second element of the tuple, and pipe the result of that recursively to the interpreter function.

Assume that two implementation functions, imp1 and imp2, exist. According to the recipe, imp1 has the type In1 -> Out1, and imp2 has the type In2 -> Out2. Given these functions, the running example becomes:

    // FaceProgram<'a> -> 'a
    let rec interpret = function
        | Pure x -> x
        | Free (Member1 (x, next)) -> x |> imp1 |> next |> interpret
        | Free (Member2 (x, next)) -> x |> imp2 |> next |> interpret

The Pure case always looks like that. Each of the Free cases uses a different implementation function, but apart from that, they are, as you can tell, the spitting image of each other.

Interpreters like this are often impure because the implementation functions are impure. Nothing prevents you from defining pure interpreters, although they often have limited use. They do have their place in unit testing, though.

    // Out1 -> Out2 -> FaceProgram<'a> -> 'a
    let rec interpretStub out1 out2 = function
        | Pure x -> x
        | Free (Member1 (_, next)) -> out1 |> next |> interpretStub out1 out2
        | Free (Member2 (_, next)) -> out2 |> next |> interpretStub out1 out2

This interpreter effectively ignores the input value contained within each Free case, and instead uses the pure values out1 and out2. This is essentially a Stub - an 'implementation' that always returns pre-defined values.

The point is that you can have more than a single interpreter, pure or impure, just like you can have more than one implementation of an interface.
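To see the pieces of the recipe work together, here's a self-contained sketch that runs one program through two interpreters. The concrete types behind In1, Out1, In2, and Out2, the program value, and the bodies of imp1 and imp2 are all assumptions made for the sake of illustration; mapI is left public so that the snippet compiles on its own.

```fsharp
// Placeholder types; assumptions made only for this sketch.
type In1 = int
type Out1 = string
type In2 = string
type Out2 = bool

type FaceInstruction<'a> =
| Member1 of (In1 * (Out1 -> 'a))
| Member2 of (In2 * (Out2 -> 'a))

let mapI f = function
    | Member1 (x, next) -> Member1 (x, next >> f)
    | Member2 (x, next) -> Member2 (x, next >> f)

type FaceProgram<'a> =
| Free of FaceInstruction<FaceProgram<'a>>
| Pure of 'a

let rec bind f = function
    | Free x -> x |> mapI (bind f) |> Free
    | Pure x -> f x

type FaceBuilder () =
    member this.Bind (x, f) = bind f x
    member this.Return x = Pure x
    member this.ReturnFrom x = x
    member this.Zero () = Pure ()

let face = FaceBuilder ()

let member1 in1 = Free (Member1 (in1, Pure))
let member2 in2 = Free (Member2 (in2, Pure))

// A hypothetical program. It's a pure value; nothing happens until
// an interpreter evaluates it.
// FaceProgram<string>
let program =
    face {
        let! s = member1 42
        let! b = member2 s
        return (if b then s else "no")
    }

// Hypothetical implementation functions (pure here, for simplicity).
let imp1 (i : In1) : Out1 = string i
let imp2 (s : In2) : Out2 = s.Length > 1

// FaceProgram<'a> -> 'a
let rec interpret = function
    | Pure x -> x
    | Free (Member1 (x, next)) -> x |> imp1 |> next |> interpret
    | Free (Member2 (x, next)) -> x |> imp2 |> next |> interpret

// Out1 -> Out2 -> FaceProgram<'a> -> 'a
let rec interpretStub out1 out2 = function
    | Pure x -> x
    | Free (Member1 (_, next)) -> out1 |> next |> interpretStub out1 out2
    | Free (Member2 (_, next)) -> out2 |> next |> interpretStub out1 out2

let actual = interpret program
let stubbed = interpretStub "foo" false program
```

The same program value produces different results depending on the interpreter, just like client code that depends on an interface behaves differently depending on the injected implementation.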

When to use it #

Free monads are often used instead of Dependency Injection. Note, however, that while the free monad values themselves are pure, they imply impure behaviour. In my opinion, the main benefit of pure code is that, as a code reader and maintainer, I don't have to worry about side-effects if I know that the code is pure. With a free monad, I do have to worry about side-effects, because, although the ASTs are pure, an impure interpreter will cause side-effects to happen. At least, however, the side-effects are known; they're restricted to a small subset of operations. Haskell enforces this distinction, but F# doesn't. The question, then, is how valuable you find this sort of design.

I think it still has some value, because a free monad explicitly communicates an intent of doing something impure. This intent becomes encoded in the types in your code base, there for all to see. Just as I prefer that functions return 'a option values if they may fail to produce a value, I like that I can tell from a function's return type that a delimited set of impure operations may result.

Clearly, creating free monads in F# requires some boilerplate code. I hope that this article has demonstrated that writing that boilerplate code isn't difficult - just follow the recipe. You almost don't have to think. Since a monad is a universal abstraction, once you've written the code, it's unlikely that you'll need to deal with it much in the future. After all, mathematical abstractions don't change.

Perhaps a more significant concern is how familiar free monads are to developers of a particular code base. Depending on your position, you could argue that free monads come with high cognitive overhead, or that they specifically lower the cognitive overhead.

Insights are obscure until you grasp them; after that, they become clear.

This applies to free monads as well. You have to put effort into understanding them, but once you do, you realise that they are more than a pattern. They are universal abstractions, governed by laws. Once you grok free monads, their cognitive load wanes.

Consider, then, the developers who will be interacting with the free monad. If they already know free monads, or have enough of a grasp of monads that this might be their next step, then using free monads could be beneficial. On the other hand, if most developers are new to F# or functional programming, free monads should probably be avoided for the time being.

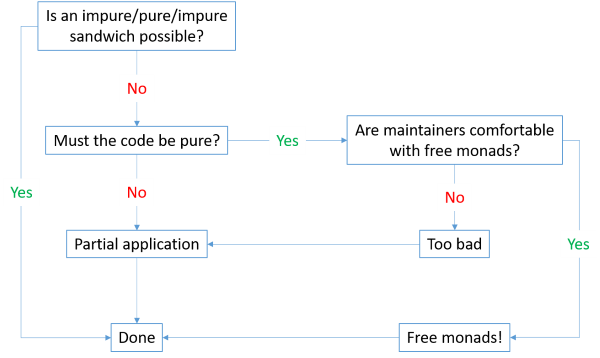

This flowchart summarises the above reflections:

Your first consideration should be whether your context enables an impure/pure/impure sandwich. If so, there's no reason to make things more complicated than they have to be. To use Fred Brooks' terminology, this should go a long way to avoid accidental complexity.

If you can't avoid long-running, impure interactions, then consider whether purity, or strictly functional design, is important to you. F# is a multi-paradigmatic language, and it's perfectly possible to write code that's impure, yet still well-structured. You can use partial application as an idiomatic alternative to Dependency Injection.
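As a rough sketch of what partial application as a Dependency Injection alternative can look like, consider the following. The domain, the function names, and the canned dependency are all hypothetical; in a real code base, readReservedSeats would typically be impure (a database query, say), which is exactly why this style isn't strictly functional.

```fsharp
// Hypothetical domain, for illustration only.
type Reservation = { Name : string; Quantity : int }

// The 'dependency' (readReservedSeats) is an ordinary function argument.
// int -> (unit -> int) -> Reservation -> Reservation option
let tryAccept capacity (readReservedSeats : unit -> int) (reservation : Reservation) =
    let reservedSeats = readReservedSeats ()
    if reservedSeats + reservation.Quantity <= capacity
    then Some reservation
    else None

// 'Composition root': partially apply the dependencies, leaving a
// function that only takes the run-time input.
// Reservation -> Reservation option
let tryAcceptInSmallRestaurant = tryAccept 10 (fun () -> 7)

let accepted = tryAcceptInSmallRestaurant { Name = "Ann"; Quantity = 2 }
let rejected = tryAcceptInSmallRestaurant { Name = "Bob"; Quantity = 4 }
```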

If you prefer to keep your code functional and explicit, you may consider using free monads. In this case, I still think you should consider the maintainers of the code base in question. If everyone involved is comfortable with free monads, or willing to learn, then I believe it's a viable option. Otherwise, I'd recommend falling back to partial application, even though Dependency Injection makes everything impure.

Motivating examples #

The strongest motivation, I believe, for introducing free monads into a code base is to model long-running, impure interactions in a functional style.

Like most other software design considerations, the overall purpose of application architecture is to deal with (essential) complexity. Thus, any example must be complex enough to warrant the design. There's little point in a Dependency Injection hello world example in C#. Likewise, a hello world example using free monads hardly seems justified. For that reason, examples are provided in separate articles.

A good place to start, I believe, is with the small Pure times article series. These articles show how to address a particular, authentic problem using strictly functional programming. The focus of these articles is on problem-solving, so they sometimes omit detailed explanations in order to keep the narrative moving.

If you need detailed explanations about all elements of free monads in F#, the present article series offers just that, particularly the Hello, pure command-line interaction article.

Variations #

The above recipes describe the regular scenario. Variations are possible. Obviously, you can choose different naming strategies and so on, but I'm not going to cover this in greater detail.

There are, however, various degenerate cases that deserve a few words. An interaction may return no data, or take no input. In F#, you can always model the lack of data as unit (()), so it's definitely possible to define an instruction case like Foo of (unit * (Out1 -> 'a)), or Bar of (In2 * (unit -> 'a)), but since unit doesn't contain any data, you can remove it without changing the abstraction.

The Hello, pure command-line interaction article contains a single type that exemplifies both degenerate cases. It defines this instruction set:

    type CommandLineInstruction<'a> =
    | ReadLine of (string -> 'a)
    | WriteLine of string * 'a

The ReadLine case takes no input, so instead of containing a pair of input and continuation, this case contains only the continuation function. Likewise, the WriteLine case is also degenerate, but here, there's no output. This case does contain a pair, but the second element isn't a function, but a value.

This has some superficial consequences for the implementation of functor and monad functions. For example, the mapI function becomes:

    // ('a -> 'b) -> CommandLineInstruction<'a> -> CommandLineInstruction<'b>
    let private mapI f = function
        | ReadLine next -> ReadLine (next >> f)
        | WriteLine (x, next) -> WriteLine (x, next |> f)

Notice that in the ReadLine case, there's no tuple on which to pattern-match. Instead, you can directly access next.

In the WriteLine case, the return value changes from function composition (next >> f) to a regular function call (next |> f, which is equivalent to f next).

The lift functions also change:

    // CommandLineProgram<string>
    let readLine = Free (ReadLine Pure)

    // string -> CommandLineProgram<unit>
    let writeLine s = Free (WriteLine (s, Pure ()))

Since there's no input, readLine degenerates to a value, instead of a function. On the other hand, while writeLine remains a function, you'll have to pass a value (Pure ()) as the second element of the pair, instead of the regular function (Pure).

Apart from such minor changes, the omission of unit values for input or output has little significance.

Another variation from the above recipe that you may see relates to interpreters. In the above recipe, I described how, for each instruction, you should create an implementation function. Sometimes, however, that function is only a few lines of code. When that happens, I occasionally inline the function directly in the interpreter. Once more, the CommandLineProgram API provides an example:

    // CommandLineProgram<'a> -> 'a
    let rec interpret = function
        | Pure x -> x
        | Free (ReadLine next) -> Console.ReadLine () |> next |> interpret
        | Free (WriteLine (s, next)) ->
            Console.WriteLine s
            next |> interpret

Here, no custom implementation functions are required, because Console.ReadLine and Console.WriteLine already exist and serve the desired purpose.
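The degenerate cases work with pure interpreters as well. The following sketch assumes that CommandLineProgram and its builder are defined by following the free monad recipe; the greet program and the interpretPure function are hypothetical, added here only to show how a test could feed canned input and collect the output without touching the console.

```fsharp
type CommandLineInstruction<'a> =
| ReadLine of (string -> 'a)
| WriteLine of string * 'a

let mapI f = function
    | ReadLine next -> ReadLine (next >> f)
    | WriteLine (x, next) -> WriteLine (x, next |> f)

// Assumed to follow the free monad recipe.
type CommandLineProgram<'a> =
| Free of CommandLineInstruction<CommandLineProgram<'a>>
| Pure of 'a

let rec bind f = function
    | Free x -> x |> mapI (bind f) |> Free
    | Pure x -> f x

type CommandLineBuilder () =
    member this.Bind (x, f) = bind f x
    member this.Return x = Pure x
    member this.ReturnFrom x = x
    member this.Zero () = Pure ()

let commandLine = CommandLineBuilder ()

let readLine = Free (ReadLine Pure)
let writeLine s = Free (WriteLine (s, Pure ()))

// Hypothetical program: ask for a name, greet, return the name's length.
// CommandLineProgram<int>
let greet =
    commandLine {
        do! writeLine "Name?"
        let! name = readLine
        do! writeLine ("Hello, " + name)
        return String.length name
    }

// Hypothetical pure interpreter. It reads from a list of canned input
// lines (an empty string once the list runs out) and accumulates
// everything written.
// string list -> string list -> CommandLineProgram<'a> -> 'a * string list
let rec interpretPure inputs output = function
    | Pure x -> (x, List.rev output)
    | Free (WriteLine (s, next)) -> next |> interpretPure inputs (s :: output)
    | Free (ReadLine next) ->
        match inputs with
        | line :: rest -> next line |> interpretPure rest output
        | [] -> next "" |> interpretPure [] output

let result = interpretPure [ "Ann" ] [] greet
```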

Summary #

This article describes a repeatable, and automatable, process for refactoring an interface to a free monad. I've done this enough times now that I believe that this process is always possible, but I have no formal proof for this.

I also strongly suspect that the reverse process is possible. For any instruction set elevated to a free monad, I think you should be able to define an object-oriented interface. If this is true, then object-oriented interfaces and AST-based free monads are isomorphic.

Combining free monads in F#

An example of how to compose free monads in F#.

This article is an instalment in a series of articles about modelling long-running interactions with pure, functional code. In the previous article, you saw how to combine a pure command-line API with an HTTP-client API in Haskell. In this article, you'll see how to translate the Haskell proof of concept to F#.

HTTP API client module #

You've already seen how to model command-line interactions as pure code in a previous article. You can define interactions with the online restaurant reservation HTTP API in the same way. First, define some types required for input and output to the API:

    type Slot = { Date : DateTimeOffset; SeatsLeft : int }

    type Reservation = {
        Date : DateTimeOffset
        Name : string
        Email : string
        Quantity : int }

The Slot type contains information about how many available seats are left on a particular date. The Reservation type contains the information required in order to make a reservation. It's the same Reservation F# record type you saw in a previous article, but now it's moved here.

The online restaurant reservation HTTP API may afford more functionality than you need, but there's no reason to model more instructions than required:

    type ReservationsApiInstruction<'a> =
    | GetSlots of (DateTimeOffset * (Slot list -> 'a))
    | PostReservation of Reservation * 'a

This instruction set models two interactions. The GetSlots case models an instruction to request, from the HTTP API, the slots for a particular date. The PostReservation case models an instruction to make a POST HTTP request with a Reservation, thereby making a reservation.

While Haskell can automatically make this type a Functor, in F# you have to write the code yourself:

    // ('a -> 'b) -> ReservationsApiInstruction<'a>
    //     -> ReservationsApiInstruction<'b>
    let private mapI f = function
        | GetSlots (x, next) -> GetSlots (x, next >> f)
        | PostReservation (x, next) -> PostReservation (x, next |> f)

This turns ReservationsApiInstruction<'a> into a functor, which is, however, not the ultimate goal. The final objective is to enable syntactic sugar, so that you can write pure ReservationsApiInstruction<'a> Abstract Syntax Trees (ASTs) in standard F# syntax. In order to fulfil that ambition, you need a computation expression builder, and to create one of those, you need a monad.

You can turn ReservationsApiInstruction<'a> into a monad using the free monad recipe that you've already seen. Creating a free monad, however, involves adding another type that will become both monad and functor, so I deliberately make mapI private in order to prevent confusion. This is also the reason I didn't name the function map: you'll need that name for a different type. The I in mapI stands for instruction.

The mapI function pattern-matches on the (implicit) ReservationsApiInstruction argument. In the GetSlots case, it returns a new GetSlots value, but composes the next continuation with f. In the PostReservation case, it returns a new PostReservation value, but pipes next to f. The reason for the difference is that PostReservation is degenerate: next isn't a function, but a value.

Now that ReservationsApiInstruction<'a> is a functor, you can create a free monad from it. The first step is to introduce a new type for the monad:

    type ReservationsApiProgram<'a> =
    | Free of ReservationsApiInstruction<ReservationsApiProgram<'a>>
    | Pure of 'a

This is a recursive type that enables you to assemble ASTs that ultimately can return a value. The Pure case enables you to return a value, while the Free case lets you describe what should happen next.

Using mapI, you can make a monad out of ReservationsApiProgram<'a> by adding a bind function:

    // ('a -> ReservationsApiProgram<'b>) -> ReservationsApiProgram<'a>
    //     -> ReservationsApiProgram<'b>
    let rec bind f = function
        | Free instruction -> instruction |> mapI (bind f) |> Free
        | Pure x -> f x

If you refer back to the bind implementation for CommandLineProgram<'a>, you'll see that it's the exact same code. In Haskell, creating a free monad from a functor is automatic. In F#, it's boilerplate.

Likewise, you can make ReservationsApiProgram<'a> a functor:

    // ('a -> 'b) -> ReservationsApiProgram<'a> -> ReservationsApiProgram<'b>
    let map f = bind (f >> Pure)

Again, this is the same code as in the CommandLine module. You can copy and paste it. It is, however, not the same function, because the types are different.

Finally, to round off the reservations HTTP client API, you can supply functions that lift instructions to programs:

    // DateTimeOffset -> ReservationsApiProgram<Slot list>
    let getSlots date = Free (GetSlots (date, Pure))

    // Reservation -> ReservationsApiProgram<unit>
    let postReservation r = Free (PostReservation (r, Pure ()))

That's everything you need to create a small computation expression builder:

    type ReservationsApiBuilder () =
        member this.Bind (x, f) = ReservationsApi.bind f x
        member this.Return x = Pure x
        member this.ReturnFrom x = x
        member this.Zero () = Pure ()

Create an instance of the ReservationsApiBuilder class in order to use reservationsApi computation expressions:

let reservationsApi = ReservationsApiBuilder ()

This, in total, defines a pure API for interacting with the online restaurant reservation system, including all the syntactic sugar you'll need to stay sane. As usual, some boilerplate code is required, but I'm not too worried about its maintenance overhead, as it's unlikely to change much, once you've added it. If you've followed the recipe, the API obeys the category, functor, and monad laws, so it's not something you've invented; it's an instance of a universal abstraction.
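To give an impression of what that syntactic sugar buys you, here's a sketch of a small program written against the API, evaluated with a stub interpreter. The API definitions are repeated (without the module qualification and the private modifier) so that the snippet stands alone. The tryReserve program, the interpretStub function, and the sample data are hypothetical and not part of the module described above.

```fsharp
open System

type Slot = { Date : DateTimeOffset; SeatsLeft : int }

type Reservation = {
    Date : DateTimeOffset
    Name : string
    Email : string
    Quantity : int }

type ReservationsApiInstruction<'a> =
| GetSlots of (DateTimeOffset * (Slot list -> 'a))
| PostReservation of Reservation * 'a

let mapI f = function
    | GetSlots (x, next) -> GetSlots (x, next >> f)
    | PostReservation (x, next) -> PostReservation (x, next |> f)

type ReservationsApiProgram<'a> =
| Free of ReservationsApiInstruction<ReservationsApiProgram<'a>>
| Pure of 'a

let rec bind f = function
    | Free instruction -> instruction |> mapI (bind f) |> Free
    | Pure x -> f x

type ReservationsApiBuilder () =
    member this.Bind (x, f) = bind f x
    member this.Return x = Pure x
    member this.ReturnFrom x = x
    member this.Zero () = Pure ()

let reservationsApi = ReservationsApiBuilder ()

let getSlots date = Free (GetSlots (date, Pure))
let postReservation r = Free (PostReservation (r, Pure ()))

// Hypothetical program: post the reservation only if enough seats remain.
// Reservation -> ReservationsApiProgram<bool>
let tryReserve (r : Reservation) =
    reservationsApi {
        let! slots = getSlots r.Date
        let seatsLeft = slots |> List.sumBy (fun (s : Slot) -> s.SeatsLeft)
        if r.Quantity <= seatsLeft then
            do! postReservation r
            return true
        else
            return false
    }

// Hypothetical stub interpreter: answers GetSlots with canned slots and
// records each posted reservation instead of making HTTP requests.
// Slot list -> Reservation list -> ReservationsApiProgram<'a>
//     -> 'a * Reservation list
let rec interpretStub slots posted = function
    | Pure x -> (x, List.rev posted)
    | Free (GetSlots (_, next)) -> slots |> next |> interpretStub slots posted
    | Free (PostReservation (r, next)) -> next |> interpretStub slots (r :: posted)

let date = DateTimeOffset (2017, 7, 27, 19, 0, 0, TimeSpan.Zero)
let reservation : Reservation =
    { Date = date; Name = "Ann"; Email = "ann@example.com"; Quantity = 4 }

let accepted =
    tryReserve reservation |> interpretStub [ { Slot.Date = date; SeatsLeft = 10 } ] []
let rejected =
    tryReserve reservation |> interpretStub [ { Slot.Date = date; SeatsLeft = 2 } ] []
```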

Monad stack #

The addition of the above ReservationsApi module is only a step towards the overall goal, which is to write a command-line wizard you can use to make reservations against the online API. In order to do so, you must combine the two monads CommandLineProgram<'a> and ReservationsApiProgram<'a>. In Haskell, you get that combination for free via the built-in generic FreeT type, which enables you to stack monads. In F#, you have to explicitly declare the type:

type CommandLineReservationsApiT<'a> = | Run of CommandLineProgram<ReservationsApiProgram<'a>>

This is a single-case discriminated union that stacks ReservationsApiProgram and CommandLineProgram. In this incarnation, it defines a single case called Run. The reason for this is that it enables you to follow the free monad recipe without having to do much thinking. Later, you'll see that it's possible to simplify the type.

The naming is inspired by Haskell. This type is a piece of the puzzle corresponding to Haskell's FreeT type. The T in FreeT stands for transformer, because FreeT is actually something called a monad transformer. That's not terribly important in an F# context, but that's the reason I also tagged on the T in CommandLineReservationsApiT<'a>.

FreeT is actually only a 'wrapper' around another monad. In order to extract the contained monad, you can use a function called runFreeT. That's the reason I called the F# case Run.

You can easily make your stack of monads a functor:

    // ('a -> 'b) -> CommandLineProgram<ReservationsApiProgram<'a>>
    //     -> CommandLineProgram<ReservationsApiProgram<'b>>
    let private mapStack f x = commandLine {
        let! x' = x
        return ReservationsApi.map f x' }

The mapStack function uses the commandLine computation expression to access the ReservationsApiProgram contained within the CommandLineProgram. Thanks to the let! binding, x' is a ReservationsApiProgram<'a> value. You can use ReservationsApi.map to map x' with f.

It's now trivial to make CommandLineReservationsApiT<'a> a functor as well:

    // ('a -> 'b) -> CommandLineReservationsApiT<'a>
    //     -> CommandLineReservationsApiT<'b>
    let private mapT f (Run p) = mapStack f p |> Run

The mapT function simply pattern-matches the monad stack out of the Run case, calls mapStack, and pipes the return value into another Run case.

By now, it should be fairly clear that we're following the same recipe as before. You have a functor; make a monad out of it. First, define a type for the monad:

    type CommandLineReservationsApiProgram<'a> =
    | Free of CommandLineReservationsApiT<CommandLineReservationsApiProgram<'a>>
    | Pure of 'a

Then add a bind function:

    // ('a -> CommandLineReservationsApiProgram<'b>)
    //     -> CommandLineReservationsApiProgram<'a>
    //     -> CommandLineReservationsApiProgram<'b>
    let rec bind f = function
        | Free instruction -> instruction |> mapT (bind f) |> Free
        | Pure x -> f x

This is almost the same code as the above bind function for ReservationsApi. The only difference is that the underlying map function is named mapT instead of mapI. The types involved, however, are different.

You can also add a map function:

    // ('a -> 'b) -> (CommandLineReservationsApiProgram<'a>
    //     -> CommandLineReservationsApiProgram<'b>)
    let map f = bind (f >> Pure)

This is another copy-and-paste job. Such repeatable. Wow.

When you create a monad stack, you need a way to lift values from each of the constituent monads up to the combined monad. In Haskell, this is done with the lift and liftF functions, but in F#, you must explicitly add such functions:

    // CommandLineProgram<ReservationsApiProgram<'a>>
    //     -> CommandLineReservationsApiProgram<'a>
    let private wrap x = x |> Run |> mapT Pure |> Free

    // CommandLineProgram<'a> -> CommandLineReservationsApiProgram<'a>
    let liftCL x = wrap <| CommandLine.map ReservationsApiProgram.Pure x

    // ReservationsApiProgram<'a> -> CommandLineReservationsApiProgram<'a>
    let liftRA x = wrap <| CommandLineProgram.Pure x

The private wrap function takes the underlying 'naked' monad stack (CommandLineProgram<ReservationsApiProgram<'a>>) and turns it into a CommandLineReservationsApiProgram<'a> value. It first wraps x in Run, which turns x into a CommandLineReservationsApiT<'a> value. By piping that value into mapT Pure, you get a CommandLineReservationsApiT<CommandLineReservationsApiProgram<'a>> value that you can finally pipe into Free in order to produce a CommandLineReservationsApiProgram<'a> value. Phew!

The liftCL function lifts a CommandLineProgram (CL) to CommandLineReservationsApiProgram by first using CommandLine.map to lift x to a CommandLineProgram<ReservationsApiProgram<'a>> value. It then pipes that value to wrap.

Likewise, the liftRA function lifts a ReservationsApiProgram (RA) to CommandLineReservationsApiProgram. It simply elevates x to a CommandLineProgram value by using CommandLineProgram.Pure. Subsequently, it pipes that value to wrap.

In both of these functions, I used the slightly unusual backwards pipe operator <|. The reason for that is that it emphasises the similarity between liftCL and liftRA. This is easier to see if you remove the type comments:

let liftCL x = wrap <| CommandLine.map ReservationsApiProgram.Pure x
let liftRA x = wrap <| CommandLineProgram.Pure x

This is how I normally write my F# code. I only add the type comments for the benefit of you, dear reader. Normally, when you have an IDE, you can always inspect the types using the built-in tools.

Using the backwards pipe operator makes it immediately clear that both functions depend on the wrap function. This would have been muddied by use of the normal forward pipe operator:

let liftCL x = CommandLine.map ReservationsApiProgram.Pure x |> wrap
let liftRA x = CommandLineProgram.Pure x |> wrap

The behaviour is the same, but now wrap doesn't align, making it harder to discover the kinship between the two functions. My use of the backward pipe operator is motivated by readability concerns.

Following the free monad recipe, now create a computation expression builder:

type CommandLineReservationsApiBuilder () =
    member this.Bind (x, f) = CommandLineReservationsApi.bind f x
    member this.Return x = Pure x
    member this.ReturnFrom x = x
    member this.Zero () = Pure ()

Finally, create an instance of the class:

let commandLineReservationsApi = CommandLineReservationsApiBuilder ()

Putting the commandLineReservationsApi value in a module will enable you to use it for computation expressions whenever you open that module. I normally put it in a module with the [<AutoOpen>] attribute so that it automatically becomes available as soon as I open the containing namespace.

Simplification #

While there can be good reasons to introduce single-case discriminated unions in your F# code, they're isomorphic with their contained type. (This means that there's a lossless conversion between the union type and the contained type, in both directions.) Following the free monad recipe, I introduced CommandLineReservationsApiT as a discriminated union, but since it's a single-case union, you can refactor it to its contained type.

If you delete the CommandLineReservationsApiT type, you'll first have to change the definition of the program type to this:

type CommandLineReservationsApiProgram<'a> =
| Free of CommandLineProgram<ReservationsApiProgram<CommandLineReservationsApiProgram<'a>>>
| Pure of 'a

You simply replace CommandLineReservationsApiT<_> with CommandLineProgram<ReservationsApiProgram<_>>, effectively promoting the type contained in the Run case to be the container in the Free case.

Once CommandLineReservationsApiT is gone, you'll also need to delete the mapT function, and amend bind:

// ('a -> CommandLineReservationsApiProgram<'b>)
// -> CommandLineReservationsApiProgram<'a>
// -> CommandLineReservationsApiProgram<'b>
let rec bind f = function
    | Free instruction -> instruction |> mapStack (bind f) |> Free
    | Pure x -> f x

Likewise, you must also adjust the wrap function:

let private wrap x = x |> mapStack Pure |> Free

The rest of the above code stays the same.

Wizard #

In Haskell, you get combinations of monads for free via the FreeT type, whereas in F#, you have to work for it. Once you have the combination in monadic form as well, you can write programs with that combination. Here's the wizard that collects your data and attempts to make a restaurant reservation on your behalf:

// CommandLineReservationsApiProgram<unit>
let tryReserve = commandLineReservationsApi {
    let! count = liftCL readQuantity
    let! date  = liftCL readDate
    let! availableSeats =
        ReservationsApi.getSlots date
        |> ReservationsApi.map (List.sumBy (fun slot -> slot.SeatsLeft))
        |> liftRA
    if availableSeats < count
    then
        do! sprintf "Only %i remaining seats." availableSeats
            |> CommandLine.writeLine
            |> liftCL
    else
        let! name  = liftCL readName
        let! email = liftCL readEmail
        do! { Date = date; Name = name; Email = email; Quantity = count }
            |> ReservationsApi.postReservation
            |> liftRA
    }

Notice that tryReserve is a value, and not a function. It's a pure value that contains an AST - a small program that describes the impure interactions that you'd like to take place. It's defined entirely within a commandLineReservationsApi computation expression.

It starts by using the readQuantity and readDate program values you saw in the previous F# article. Both of these values are CommandLineProgram values, so you have to use liftCL to lift them to CommandLineReservationsApiProgram values - only then can you let! bind them to an int and a DateTimeOffset, respectively. This is just like the use of lift in the previous article's Haskell example.

Once the program has collected the desired date from the user, it calls ReservationsApi.getSlots and calculates the sum of the SeatsLeft values of all the returned slots. The ReservationsApi.getSlots function returns a ReservationsApiProgram<Slot list>; ReservationsApi.map turns it into a ReservationsApiProgram<int> value that you must liftRA in order to be able to let! bind it to an int value. Let me stress once again: the program doesn't actually do any of that; it constructs an AST with instructions to that effect.

If it turns out that there are too few seats left, the program writes that on the command line and exits. Otherwise, it continues to collect the user's name and email address. That's all the data required to create a Reservation record and pipe it to ReservationsApi.postReservation.

Interpreters #

The tryReserve wizard is a pure value. It contains an AST that can be interpreted in such a way that impure operations happen. You've already seen the CommandLineProgram interpreter in a previous article, so I'm not going to repeat it here. I'll only note that I renamed it to interpretCommandLine because I want to use the name interpret for the combined interpreter.

The interpreter for ReservationsApiProgram values is similar to the CommandLineProgram interpreter:

// ReservationsApiProgram<'a> -> 'a
let rec interpretReservationsApi = function
    | ReservationsApiProgram.Pure x -> x
    | ReservationsApiProgram.Free (GetSlots (d, next)) ->
        ReservationHttpClient.getSlots d
        |> Async.RunSynchronously
        |> next
        |> interpretReservationsApi
    | ReservationsApiProgram.Free (PostReservation (r, next)) ->
        ReservationHttpClient.postReservation r |> Async.RunSynchronously
        next |> interpretReservationsApi

The interpretReservationsApi function pattern-matches on its (implicit) ReservationsApiProgram argument, and performs the appropriate actions according to each instruction. In all Free cases, it delegates to implementations defined in a ReservationHttpClient module. The code in that module isn't shown here, but you can see it in the GitHub repository that accompanies this article.

You can combine the two 'leaf' interpreters in an interpreter of CommandLineReservationsApiProgram values:

// CommandLineReservationsApiProgram<'a> -> 'a
let rec interpret = function
    | CommandLineReservationsApiProgram.Pure x -> x
    | CommandLineReservationsApiProgram.Free p ->
        p |> interpretCommandLine |> interpretReservationsApi |> interpret

As usual, in the Pure case, it simply returns the contained value. In the Free case, p is a CommandLineProgram<ReservationsApiProgram<CommandLineReservationsApiProgram<'a>>>. Since it's a CommandLineProgram value, you can interpret it with interpretCommandLine, which returns a ReservationsApiProgram<CommandLineReservationsApiProgram<'a>>. Since that's a ReservationsApiProgram, you can pipe it to interpretReservationsApi, which then returns a CommandLineReservationsApiProgram<'a>. An interpreter exists for that type as well, namely the interpret function itself, so recursively invoke it again. In other words, interpret will keep recursing until it hits a Pure case.

Execution #

Everything is now in place so that you can execute your program. This is the program's entry point:

[<EntryPoint>]
let main _ =
    interpret Wizard.tryReserve
    0 // return an integer exit code

When you run it, you'll be able to have an interaction like this:

Please enter number of diners:
4
Please enter your desired date:
2017-11-25
Please enter your name:
Mark Seemann
Please enter your email address:
mark@example.net
OK

If you want to run this code sample yourself, you're going to need an appropriate HTTP API with which you can interact. I hosted the API on my local machine, and afterwards verified that the record was, indeed, written in the reservations database.

Summary #

As expected, you can combine free monads in F#, although it requires more boilerplate code than in Haskell.

Next: F# free monad recipe.

Combining free monads in Haskell

An example on how to compose free monads in Haskell.

In the previous article in this series on pure interactions, you saw how to write a command-line wizard in F#, using a free monad to build an Abstract Syntax Tree (AST). The example collects information about a potential restaurant reservation you'd like to make. That example, however, didn't do more than that.

For a more complete experience, you'd like your command-line interface (CLI) to not only collect data about a reservation, but actually make the reservation, using the available HTTP API. This means that you'll also need to model interaction with the HTTP API as an AST, but a different AST. Then, you'll have to figure out how to compose these two APIs into a combined API.

In order to figure out how to do this in F#, I first had to do it in Haskell. In this article, you'll see how to do it in Haskell, and in the next article, you'll see how to translate this Haskell prototype to F#. This should ensure that you get a functional F# code base as well.

Command line API #

Let's make an easy start of it. In a previous article, you saw how to model command-line interactions as ASTs, complete with syntactic sugar provided by a computation expression. That took a fair amount of boilerplate code in F#, but in Haskell, it's declarative:

import Control.Monad.Trans.Free (Free, liftF)

data CommandLineInstruction next =
    ReadLine (String -> next)
  | WriteLine String next
  deriving (Functor)

type CommandLineProgram = Free CommandLineInstruction

readLine :: CommandLineProgram String
readLine = liftF (ReadLine id)

writeLine :: String -> CommandLineProgram ()
writeLine s = liftF (WriteLine s ())

This is all the code required to define your AST and make it a monad in Haskell. Contrast that with all the code you have to write in F#!

The CommandLineInstruction type defines the instruction set, and makes use of a language extension called DeriveFunctor, which enables Haskell to automatically create a Functor instance from the type.

The type alias type CommandLineProgram = Free CommandLineInstruction creates a monad from CommandLineInstruction, since Free is a Monad when the underlying type is a Functor.
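To see why a Functor instance is all that Free needs, it can help to write the type out by hand. The following is only a sketch: the Free type, its instances and liftF are hand-rolled stand-ins for what the library provides, and echo and render are hypothetical names invented for this illustration.

```haskell
{-# LANGUAGE DeriveFunctor #-}
module Main where

-- Hand-rolled stand-in for the library's Free type (illustration only).
data Free f a = Pure a | Free (f (Free f a))

instance Functor f => Functor (Free f) where
  fmap g (Pure a)  = Pure (g a)
  fmap g (Free fa) = Free (fmap (fmap g) fa)

instance Functor f => Applicative (Free f) where
  pure = Pure
  Pure g  <*> x = fmap g x
  Free fg <*> x = Free (fmap (<*> x) fg)

instance Functor f => Monad (Free f) where
  -- Bind pushes the continuation k down to the leaves of the tree;
  -- fmap on the instruction functor is the only thing it needs.
  Pure a  >>= k = k a
  Free fa >>= k = Free (fmap (>>= k) fa)

-- Wrap one instruction as a one-step program.
liftF :: Functor f => f a -> Free f a
liftF = Free . fmap Pure

data CommandLineInstruction next
  = ReadLine (String -> next)
  | WriteLine String next
  deriving (Functor)

type CommandLineProgram = Free CommandLineInstruction

readLine :: CommandLineProgram String
readLine = liftF (ReadLine id)

writeLine :: String -> CommandLineProgram ()
writeLine s = liftF (WriteLine s ())

-- Hypothetical example program: prompt, read, echo.
echo :: CommandLineProgram ()
echo = do
  writeLine "Say something:"
  l <- readLine
  writeLine l

-- Hypothetical helper: list the instructions in a program, answering
-- every ReadLine with a fixed placeholder string.
render :: CommandLineProgram a -> [String]
render (Pure _)                  = []
render (Free (ReadLine next))    = "ReadLine" : render (next "<input>")
render (Free (WriteLine s next)) = ("WriteLine " ++ show s) : render next

main :: IO ()
main = mapM_ putStrLn (render echo)
```

Notice that the Monad instance never inspects the instructions themselves. It only calls fmap, which is why deriving Functor for the instruction set is sufficient.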

The readLine value and writeLine function are conveniences that lift the instructions from CommandLineInstruction into CommandLineProgram values. These were also one-liners in F#.

HTTP client API #

You can write a small wizard to collect restaurant reservation data with the CommandLineProgram API, but the new requirement is to make HTTP calls so that the CLI program actually makes the reservation against the back-end system. You could extend CommandLineProgram with more instructions, but that would be to mix concerns. It'd be more appropriate to define a new instruction set for making the required HTTP requests.

This API will send and receive more complex values than simple String values, so you can start by defining their types:

data Slot = Slot { slotDate :: ZonedTime, seatsLeft :: Int } deriving (Show)

data Reservation =
  Reservation { reservationDate :: ZonedTime
              , reservationName :: String
              , reservationEmail :: String
              , reservationQuantity :: Int }
  deriving (Show)

The Slot type contains information about how many available seats are left on a particular date. The Reservation type contains the information required in order to make a reservation. It's similar to the Reservation F# record type you saw in the previous article.

The online restaurant reservation HTTP API may afford more functionality than you need, but there's no reason to model more instructions than required:

data ReservationsApiInstruction next =
    GetSlots ZonedTime ([Slot] -> next)
  | PostReservation Reservation next
  deriving (Functor)

This instruction set models two interactions. The GetSlots case models an instruction to request, from the HTTP API, the slots for a particular date. The PostReservation case models an instruction to make a POST HTTP request with a Reservation, thereby making a reservation.

Like the above CommandLineInstruction, this type is (automatically) a Functor, which means that we can create a Monad from it:

type ReservationsApiProgram = Free ReservationsApiInstruction

Once again, the monad is nothing but a type alias.

Finally, you're going to need the usual lifts:

getSlots :: ZonedTime -> ReservationsApiProgram [Slot]
getSlots d = liftF (GetSlots d id)

postReservation :: Reservation -> ReservationsApiProgram ()
postReservation r = liftF (PostReservation r ())

This is all you need to write a wizard that interleaves CommandLineProgram and ReservationsApiProgram instructions in order to create a more complex AST.

Wizard #

The wizard should do the following:

- Collect the number of diners, and the date for the reservation.

- Query the HTTP API about availability for the requested date. If insufficient seats are available, it should exit.

- If sufficient capacity remains, collect name and email.

- Make the reservation against the HTTP API.

readParse :: Read a => String -> String -> CommandLineProgram a
readParse prompt errorMessage = do
  writeLine prompt
  l <- readLine
  case readMaybe l of
    Just dt -> return dt
    Nothing -> do
      writeLine errorMessage
      readParse prompt errorMessage

It first uses writeLine to write prompt to the command line - or rather, it creates an instruction to do so. The instruction is a pure value. No side-effects are involved until an interpreter evaluates the AST.

The next line uses readLine to read the user's input. While readLine is a CommandLineProgram String value, due to Haskell's do notation, l is a String value. You can now attempt to parse that String value with readMaybe, which returns a Maybe a value that you can handle with pattern matching. If readMaybe returns a Just value, then return the contained value; otherwise, write errorMessage and recursively call readParse again.
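If you haven't used readMaybe before, here's a minimal sketch of how it behaves on its own. The function comes from Text.Read in base; parseDiners is a hypothetical helper, named only for this illustration.

```haskell
module Main where

import Text.Read (readMaybe)

-- Hypothetical helper: the type annotation picks the Read instance,
-- which is the same mechanism that keeps readParse generic.
parseDiners :: String -> Maybe Int
parseDiners = readMaybe

main :: IO ()
main = do
  print (parseDiners "4")     -- a well-formed Int parses to a Just value
  print (parseDiners "four")  -- anything else produces Nothing
```

The target type is chosen by the caller, so the same readMaybe call can parse an Int in one place and a date in another, as long as the type is an instance of Read.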

Like in the previous F# example, the only way to continue is to write something that readMaybe can parse. There's no other way to exit; there probably should be an option to quit, but it's not important for this demo purpose.

You may also have noticed that, contrary to the previous F# example, I here succumbed to the temptation to break the rule of three. It's easier to define a reusable function in Haskell, because you can leave it generic, with the proviso that the generic value must be an instance of the Read typeclass.

The readParse function returns a CommandLineProgram a value. It doesn't combine CommandLineProgram with ReservationsApiProgram. That's going to happen in another function, but before we look at that, you're also going to need another little helper:

readAnything :: String -> CommandLineProgram String
readAnything prompt = do
  writeLine prompt
  readLine

The readAnything function simply writes a prompt, reads the user's input, and unconditionally returns it. You could also have written it as a one-liner like readAnything prompt = writeLine prompt >> readLine, but I find the above code more readable, even though it's slightly more verbose.

That's all you need to write the wizard:

tryReserve :: FreeT ReservationsApiProgram CommandLineProgram ()
tryReserve = do
  q <- lift $ readParse "Please enter number of diners:" "Not an Integer."
  d <- lift $ readParse "Please enter your desired date:" "Not a date."
  availableSeats <- liftF $ (sum . fmap seatsLeft) <$> getSlots d
  if availableSeats < q
    then lift $ writeLine $ "Only " ++ show availableSeats ++ " remaining seats."
    else do
      n <- lift $ readAnything "Please enter your name:"
      e <- lift $ readAnything "Please enter your email address:"
      liftF $ postReservation Reservation
        { reservationDate = d
        , reservationName = n
        , reservationEmail = e
        , reservationQuantity = q }

The tryReserve program first prompts the user for a number of diners and a date. Once it has the date d, it calls getSlots and calculates the sum of the remaining seats. availableSeats is an Int value like q, so you can compare those two values with each other. If the number of available seats is less than the desired quantity, the program writes that and exits.

This interaction demonstrates how to interleave CommandLineProgram and ReservationsApiProgram instructions. It would be a bad user experience if the program asked the user to input all information, and only then discovered that there's insufficient capacity.

If, on the other hand, there's enough remaining capacity, the program continues collecting information from the user, by prompting for the user's name and email address. Once all data is collected, it creates a new Reservation value and invokes postReservation.

Consider the type of tryReserve. It's a combination of CommandLineProgram and ReservationsApiProgram, contained within a type called FreeT. This type is also a Monad, which is the reason the do notation still works. This also begins to explain the various lift and liftF calls sprinkled over the code.

Whenever you use a <- arrow to 'pull the value out of the monad' within a do block, the right-hand side of the arrow must be a value in the same monad as the overall function (or value); only the type argument may differ. In this case, the return type is FreeT ReservationsApiProgram CommandLineProgram (), whereas readParse returns a CommandLineProgram a value, so it has to be lifted first. As an example, lift turns CommandLineProgram Int into FreeT ReservationsApiProgram CommandLineProgram Int.

The way the type of tryReserve is declared, when you have a CommandLineProgram a value, you use lift, but when you have a ReservationsApiProgram a, you use liftF. This depends on the order of the monads contained within FreeT. If you swap CommandLineProgram and ReservationsApiProgram, you'll also need to use lift instead of liftF, and vice versa.

Interpreters #

tryReserve is a pure value. It's an Abstract Syntax Tree that combines two separate instruction sets to describe a complex interaction between user, command line, and an HTTP client. The program doesn't do anything until interpreted.

You can write an impure interpreter for each of the APIs, and a third one that uses the other two to interpret tryReserve.

Interpreting CommandLineProgram values is similar to the previous F# example:

interpretCommandLine :: CommandLineProgram a -> IO a
interpretCommandLine program =
  case runFree program of
    Pure r -> return r
    Free (ReadLine next) -> do
      line <- getLine
      interpretCommandLine $ next line
    Free (WriteLine line next) -> do
      putStrLn line
      interpretCommandLine next

This interpreter is a recursive function that pattern-matches all the cases in any CommandLineProgram a. When it encounters a Pure case, it simply returns the contained value.

When it encounters a ReadLine value, it calls getLine, which returns an IO String value read from the command line, but thanks to the do block, line is a String value. The interpreter then calls next with line, and passes the return value of that recursively to itself.

A similar treatment is given to the WriteLine case. putStrLn line writes line to the command line, after which next is used as an input argument to interpretCommandLine.

Thanks to Haskell's type system, you can easily tell that interpretCommandLine is impure, because for every CommandLineProgram a it returns IO a. That was the intent all along.
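Because the program is only data, nothing forces you to interpret it in IO. As a contrast to the impure interpreter, here's a sketch of a pure one that feeds canned input to the AST and collects the output. It's self-contained, so it uses a hand-rolled Free type rather than the library's, and runPure is a hypothetical function that isn't part of the article's code base.

```haskell
{-# LANGUAGE DeriveFunctor #-}
module Main where

import Text.Read (readMaybe)

-- Hand-rolled stand-in for the library's Free type (illustration only).
data Free f a = Pure a | Free (f (Free f a))

instance Functor f => Functor (Free f) where
  fmap g (Pure a)  = Pure (g a)
  fmap g (Free fa) = Free (fmap (fmap g) fa)

instance Functor f => Applicative (Free f) where
  pure = Pure
  Pure g  <*> x = fmap g x
  Free fg <*> x = Free (fmap (<*> x) fg)

instance Functor f => Monad (Free f) where
  Pure a  >>= k = k a
  Free fa >>= k = Free (fmap (>>= k) fa)

data CommandLineInstruction next
  = ReadLine (String -> next)
  | WriteLine String next
  deriving (Functor)

type CommandLineProgram = Free CommandLineInstruction

readLine :: CommandLineProgram String
readLine = Free (ReadLine Pure)

writeLine :: String -> CommandLineProgram ()
writeLine s = Free (WriteLine s (Pure ()))

-- Same shape as the readParse function shown earlier.
readParse :: Read a => String -> String -> CommandLineProgram a
readParse prompt errorMessage = do
  writeLine prompt
  l <- readLine
  case readMaybe l of
    Just x  -> return x
    Nothing -> do
      writeLine errorMessage
      readParse prompt errorMessage

-- Hypothetical pure interpreter: takes canned input lines and returns the
-- result (Nothing if the input runs out) together with everything written.
runPure :: [String] -> CommandLineProgram a -> (Maybe a, [String])
runPure _ (Pure x) = (Just x, [])
runPure [] (Free (ReadLine _)) = (Nothing, [])
runPure (i : is) (Free (ReadLine next)) = runPure is (next i)
runPure is (Free (WriteLine s next)) =
  let (r, out) = runPure is next in (r, s : out)

-- Hypothetical example program: ask for a number of diners.
readDiners :: CommandLineProgram Int
readDiners = readParse "Diners:" "Not an Integer."

main :: IO ()
main = print (runPure ["four", "4"] readDiners)
```

With the input "four" followed by "4", the program writes the prompt, the error message, and the prompt again before it returns 4. An interpreter like this could be useful in unit tests, since it exercises the wizard's logic without touching the console.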

Likewise, you can write an interpreter for ReservationsApiProgram values:

interpretReservationsApi :: ReservationsApiProgram a -> IO a
interpretReservationsApi program =
  case runFree program of
    Pure x -> return x
    Free (GetSlots zt next) -> do
      slots <- HttpClient.getSlots zt
      interpretReservationsApi $ next slots
    Free (PostReservation r next) -> do
      HttpClient.postReservation r
      interpretReservationsApi next

The structure of interpretReservationsApi is similar to interpretCommandLine. It delegates its implementation to an HttpClient module that contains the impure interactions with the HTTP API. This module isn't shown in this article, but you can see it in the GitHub repository that accompanies this article.

From these two interpreters, you can create a combined interpreter:

interpret :: FreeT ReservationsApiProgram CommandLineProgram a -> IO a
interpret program = do
  r <- interpretCommandLine $ runFreeT program
  case r of
    Pure x -> return x
    Free p -> do
      y <- interpretReservationsApi p
      interpret y

This function has the required type: it evaluates any FreeT ReservationsApiProgram CommandLineProgram a and returns an IO a. runFreeT returns the CommandLineProgram part of the combined program. Passing this value to interpretCommandLine, you get the underlying type - the a in CommandLineProgram a, if you will. In this case, however, the a is quite a complex type that I'm not going to write out here. Suffice it to say that, at the container level, it's a FreeF value, which can be either a Pure or a Free case that you can use for pattern matching.

In the Pure case, you're done, so you can simply return the underlying value.

In the Free case, the p contained inside is a ReservationsApiProgram value, which you can interpret with interpretReservationsApi. That returns an IO a value, and due to the do block, y is the a. In this case, however, a is FreeT ReservationsApiProgram CommandLineProgram a, but that means that the function can now recursively call itself with y in order to interpret the next instruction.

Execution #

Armed with both an AST and an interpreter, executing the program is trivial:

main :: IO ()
main = interpret tryReserve

When you run the program, you could produce an interaction like this:

Please enter number of diners:
4
Please enter your desired date:
2017-11-25 18-30-00Z
Not a date.
Please enter your desired date:
2017-11-25 18:30:00Z
Please enter your name:
Mark Seemann
Please enter your email address:
mark@example.org
Status {statusCode = 200, statusMessage = "OK"}

You'll notice that I initially made a mistake on the date format, which caused readParse to prompt me again.

If you want to run this code sample yourself, you're going to need an appropriate HTTP API with which you can interact. I hosted the API on my local machine, and afterwards verified that the record was, indeed, written in the reservations database.

Summary #

This proof of concept proves that it's possible to combine separate free monads. Now that we know that it works, and the overall outline of it, it should be possible to translate this to F#. You should, however, expect more boilerplate code.

Next: Combining free monads in F#.

Comments

Here's an additional simplification. Rather than writing FreeT ReservationsApiProgram CommandLineProgram which requires you to lift, you can instead form the sum (coproduct) of both functors:

import Data.Functor.Sum

type Program = Free (Sum CommandLineInstruction ReservationsApiInstruction)

liftCommandLine :: CommandLineInstruction a -> Program a
liftCommandLine = liftF . InL

liftReservation :: ReservationsApiInstruction a -> Program a
liftReservation = liftF . InR

Now you can lift the helpers directly to Program, like so:

readLine :: Program String
readLine = liftCommandLine (ReadLine id)

writeLine :: String -> Program ()
writeLine s = liftCommandLine (WriteLine s ())

getSlots :: ZonedTime -> Program [Slot]
getSlots d = liftReservation (GetSlots d id)

postReservation :: Reservation -> Program ()
postReservation r = liftReservation (PostReservation r ())

Then (after you change the types of the read* helpers), you can drop all lifts from tryReserve:

tryReserve :: Program ()
tryReserve = do
  q <- readParse "Please enter number of diners:" "Not an Integer."
  d <- readParse "Please enter your desired date:" "Not a date."
  availableSeats <- (sum . fmap seatsLeft) <$> getSlots d
  if availableSeats < q
    then writeLine $ "Only " ++ show availableSeats ++ " remaining seats."
    else do
      n <- readAnything "Please enter your name:"
      e <- readAnything "Please enter your email address:"
      postReservation Reservation
        { reservationDate = d
        , reservationName = n
        , reservationEmail = e
        , reservationQuantity = q }

And finally, your interpreter needs to dispatch over InL/InR (this uses functions from Control.Monad.Free; you can actually drop the Trans import at this point):

interpretCommandLine :: CommandLineInstruction (IO a) -> IO a
interpretCommandLine (ReadLine next) = getLine >>= next
interpretCommandLine (WriteLine line next) = putStrLn line >> next

interpretReservationsApi :: ReservationsApiInstruction (IO a) -> IO a
interpretReservationsApi (GetSlots zt next) = HttpClient.getSlots zt >>= next
interpretReservationsApi (PostReservation r next) = HttpClient.postReservation r >> next

interpret :: Program a -> IO a
interpret program =
  iterM go program
  where
    go (InL cmd) = interpretCommandLine cmd
    go (InR res) = interpretReservationsApi res

I find this to be quite clean!

George, thank you for writing. That alternative does, indeed, look simpler and cleaner than mine. Thank you for sharing.

FWIW, one reason I write articles on this blog is to learn and become better. I publish what I know and have learned so far, and sometimes, people tell me that there's a better way. That's great, because it makes me a better programmer, and hopefully, it may make other readers better as well.

In case you'll be puzzling over my next blog post, however, I'm going to share a little secret (which is not a secret if you look at the blog's commit history): I wrote this article series more than a month ago, which means that all the remaining articles are already written. While I agree that using the sum of functors instead of FreeT simplifies the Haskell code, I don't think it makes that much of a difference when translating to F#. I may be wrong, but I haven't tried yet. My point, though, is that the next article in the series is going to ignore this better alternative, because, when it was written, I didn't know about it. I invite any interested reader to post, as a comment to that future article, their better alternatives :)

Hi Mark,

I think you'll enjoy Data Types a la Carte. It's the definitive introduction to the style that George Pollard demonstrates above. Swierstra covers how to build datatypes with initial algebras over coproducts, compose them abstracting over the concrete functor, and tear them down generically. It's well written, too 😉

Benjamin

A pure command-line wizard

An example of a small Abstract Syntax Tree written with F# syntactic sugar.

In the previous article, you got an introduction to a functional command-line API in F#. The example in that article, however, was too simple to highlight its composability. In this article, you'll see a fuller example.

Command-line wizard for on-line restaurant reservations #

In previous articles, you can see variations on an HTTP-based back-end for an on-line restaurant reservation system. In this article, on the other hand, you're going to see a first attempt at a command-line client for the API.

Normally, an on-line restaurant reservation system would have GUIs hosted in web pages or mobile apps, but with an open HTTP API, a self-respecting geek would prefer a command-line interface (CLI)... right?!

Please enter number of diners:
four
Not an integer.
Please enter number of diners:
4
Please enter your desired date:
My next birthday
Not a date.
Please enter your desired date:
2017-11-25
Please enter your name:
Mark Seemann
Please enter your email address:
mark@example.com
{Date = 25.11.2017 00:00:00 +01:00;
 Name = "Mark Seemann";
 Email = "mark@example.com";
 Quantity = 4;}

In this incarnation, the CLI only collects information in order to dump a rendition of an F# record on the command-line. In a future article, you'll see how to combine this with an HTTP client in order to make a reservation with the back-end system.

Notice that the CLI is a wizard. It leads you through a series of questions. You have to give an appropriate answer to each question before you can move on to the next question. For instance, you must type an integer for the number of guests; if you don't, the wizard will repeatedly ask you for an integer until you do.

You can develop such an interface with the commandLine computation expression from the previous article.

Reading quantities #

There are four steps in the wizard. The first is to read the desired quantity from the command line:

// CommandLineProgram<int>
let rec readQuantity = commandLine {
    do! CommandLine.writeLine "Please enter number of diners:"
    let! l = CommandLine.readLine
    match Int32.TryParse l with
    | true, dinerCount -> return dinerCount
    | _ ->
        do! CommandLine.writeLine "Not an integer."
        return! readQuantity }

This small piece of interaction is defined entirely within a commandLine expression. This enables you to use do! expressions and let! bindings to compose smaller CommandLineProgram values, such as CommandLine.writeLine and CommandLine.readLine (both shown in the previous article).

After prompting the user to enter a number, the program reads the user's input from the command line. While CommandLine.readLine is a CommandLineProgram<string> value, the let! binding turns l into a string value. If you can parse l as an integer, you return the integer; otherwise, you recursively return readQuantity.

The readQuantity program will continue to prompt the user for an integer. It gives you no option to cancel the wizard. This is a deliberate simplification I did in order to keep the example as simple as possible, but a real program should offer an option to abort the wizard.

The readQuantity value is a CommandLineProgram<int>. This is a pure value containing an Abstract Syntax Tree (AST) that describes the interactions to perform. It doesn't do anything until interpreted. Contrary to designing with Dependency Injection and interfaces, however, you can immediately tell, from the type, that explicitly delimited impure interactions may take place within that part of your code base.

Reading dates #

When you've entered a proper number of diners, you proceed to enter a date. The program for that looks similar to readQuantity:

// CommandLineProgram<DateTimeOffset>
let rec readDate = commandLine {
    do! CommandLine.writeLine "Please enter your desired date:"
    let! l = CommandLine.readLine
    match DateTimeOffset.TryParse l with
    | true, dt -> return dt
    | _ ->
        do! CommandLine.writeLine "Not a date."
        return! readDate }

The readDate value is so similar to readQuantity that you might be tempted to refactor both into a single, reusable function. In this case, however, I chose to stick to the rule of three.

Reading strings #

Reading the customer's name and email address from the command line is easy, as no parsing is required:

// CommandLineProgram<string>
let readName = commandLine {
    do! CommandLine.writeLine "Please enter your name:"
    return! CommandLine.readLine }

// CommandLineProgram<string>
let readEmail = commandLine {
    do! CommandLine.writeLine "Please enter your email address:"
    return! CommandLine.readLine }

Both of these values unconditionally accept whatever you write when prompted. From a security standpoint, all input is evil, so in a production code base, you should still perform some validation. This, on the other hand, is demo code, so with that caveat, it accepts all strings you might type.

These values are similar to each other, but once again I invoke the rule of three and keep them as separate values.

Composing the wizard #

Together with the general-purpose command line API, the above values are all you need to compose the wizard. In this incarnation, the wizard should collect the information you type, and create a single record with those values. This is the type of record it must create:

type Reservation = {
    Date : DateTimeOffset
    Name : string
    Email : string
    Quantity : int }

You can easily compose the wizard like this:

// CommandLineProgram<Reservation>
let readReservationRequest = commandLine {
    let! count = readQuantity
    let! date = readDate
    let! name = readName
    let! email = readEmail
    return { Date = date; Name = name; Email = email; Quantity = count } }

There's really nothing to it. As with all the previous code examples in this article, you compose the readReservationRequest value entirely inside a commandLine expression. You use let! bindings to collect the four data elements you need, and once you have all four, you can return a Reservation value.

Running the program #

You may have noticed that none of the code shown so far defines functions; they are all values. They are small program fragments, expressed as ASTs, composed into slightly larger programs that are still ASTs. So far, all the code is pure.

In order to run the program, you need an interpreter. You can reuse the interpreter from the previous article when composing your main function:

[<EntryPoint>]
let main _ =
    Wizard.readReservationRequest
    |> CommandLine.bind (CommandLine.writeLine << (sprintf "%A"))
    |> interpret
    0 // return an integer exit code

Notice that most of the behaviour is defined by the above Wizard.readReservationRequest value. That program, however, returns a Reservation value that you should also print to the command line, using the CommandLine module. You can achieve that behaviour by composing Wizard.readReservationRequest with CommandLine.writeLine using CommandLine.bind. Another way to write the same composition would be by using a commandLine computation expression, but in this case, I find the small pipeline of functions easier to read.
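To compare the two styles, here's a minimal sketch that writes the same kind of composition both ways. To keep it short, it uses the readName value as a stand-in for Wizard.readReservationRequest. The names viaBind and viaExpression are hypothetical, and the types, the CommandLine module, and the commandLine builder are restated as assumptions about the supporting API so that the sketch stands alone.

```fsharp
type CommandLineInstruction<'a> =
    | ReadLine of (string -> 'a)
    | WriteLine of string * 'a

type CommandLineProgram<'a> =
    | Free of CommandLineInstruction<CommandLineProgram<'a>>
    | Pure of 'a

// Assumed supporting API, restated so the sketch is self-contained
module CommandLine =
    let private mapI f = function
        | ReadLine next -> ReadLine (next >> f)
        | WriteLine (x, next) -> WriteLine (x, next |> f)

    let rec bind f = function
        | Free instruction -> instruction |> mapI (bind f) |> Free
        | Pure x -> f x

    let readLine = Free (ReadLine Pure)
    let writeLine s = Free (WriteLine (s, Pure ()))

type CommandLineBuilder () =
    member this.Bind (x, f) = CommandLine.bind f x
    member this.Return x = Pure x
    member this.ReturnFrom x = x

let commandLine = CommandLineBuilder ()

// Stand-in for Wizard.readReservationRequest
let readName = commandLine {
    do! CommandLine.writeLine "Please enter your name:"
    return! CommandLine.readLine }

// Pipeline style: CommandLineProgram<unit>
let viaBind =
    readName |> CommandLine.bind (CommandLine.writeLine << (sprintf "%A"))

// Computation expression style: CommandLineProgram<unit>
let viaExpression = commandLine {
    let! name = readName
    return! CommandLine.writeLine (sprintf "%A" name) }
```

Both values describe the same sequence of instructions; which one reads better is a matter of taste and of how many steps you need to chain.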

When you bind two CommandLineProgram values to each other, the result is a third CommandLineProgram. You can pipe that to interpret in order to run the program. The result is an interaction like the one shown in the beginning of this article.

Summary #

In this article, you've seen how you can create larger ASTs from smaller ASTs, using the syntactic sugar that F# computation expressions afford. The point, so far, is that you can make side-effects and non-deterministic behaviour explicit, while retaining the 'normal' F# development experience.

In Haskell, impure code can execute within an IO context, but inside IO, any sort of side-effect or non-deterministic behaviour could take place. For that reason, even in Haskell, it often makes sense to define an explicitly delimited set of impure operations. In the previous article, you can see a small Haskell code snippet that defines a command-line instruction AST type using Free. When you, as a code reader, encounter a value of the type CommandLineProgram String, you know more about the potential impurities than if you encounter a value of the type IO String. The same argument applies, with qualifications, in F#.

When you encounter a value of the type CommandLineProgram<Reservation>, you know what sort of impurities to expect: the program will only write to the command line, or read from the command line. What if, however, you'd like to combine those particular interactions with other types of interactions?

Read on.

Hello, pure command-line interaction

A gentle introduction to modelling impure interactions with pure code.

Dependency Injection is a well-described concept in object-oriented programming, but as I've explained earlier, it's not functional, because it makes everything impure. In general, you should reject the notion of dependencies by instead designing your application on the concept of an impure/pure/impure sandwich. This is possible more often than you'd think, but there's still a large group of applications where this will not work. If your application needs to interact with the impure world for an extended time, you need a way to model such interactions in a pure way.

This article introduces a way to do that.

Command line API #

Imagine that you have to write a command-line program that can ask a series of questions and print appropriate responses. In the general case, this is a (potentially) long-running series of interactions between the user and the program. To keep it simple, though, in this article we'll start by looking at a degenerate example:

Please enter your name.
Mark
Hello, Mark!

The program is simply going to request that you enter your name. Once you've done that, it prints the greeting. In object-oriented programming, using Dependency Injection, you might introduce an interface. Keeping it simple, you can restrict such an interface to two methods:

public interface ICommandLine
{
    string ReadLine();
    void WriteLine(string text);
}

Please note that this is clearly a toy example. In later articles, you'll see how to expand the example to cover some more complex interactions, but you could also read a more realistic example already. Initially, the example is degenerate, because there's only a single interaction. In this case, an impure/pure/impure sandwich is still possible, but such a design wouldn't scale to more complex interactions.

The problem with defining and injecting an interface is that it isn't functional. What's the functional equivalent, then?

Instruction set #

Instead of defining an interface, you can define a discriminated union that describes a limited instruction set for command-line interactions:

type CommandLineInstruction<'a> =
    | ReadLine of (string -> 'a)
    | WriteLine of string * 'a

You may notice that it looks a bit like the above C# interface. Instead of defining two methods, it defines two cases, but the names are similar.

The ReadLine case is an instruction that an interpreter can evaluate. The data contained in the case is a continuation function. After evaluating the instruction, an interpreter must invoke this function with a string. It's up to the interpreter to figure out which string to use, but it could, for example, come from reading an input string from the command line. The continuation function is the next step in whatever program you're writing.

The WriteLine case is another instruction for interpreters. The data contained in this case is a tuple. The first element of the tuple is input for the interpreter, which can choose to e.g. print the value on the command line, or ignore it, and so on. The second element of the tuple is a value used to continue whatever program this case is a part of.

This enables you to write a small, specialised Abstract Syntax Tree (AST), but there's currently no way to return from it. One way to do that is to add a third 'stop' case. If you're interested in that option, Scott Wlaschin covers this as one iteration in his excellent explanation of the AST design.

Instead of adding a third 'stop' case to CommandLineInstruction<'a>, another option is to add a new wrapper type around it:

type CommandLineProgram<'a> =
    | Free of CommandLineInstruction<CommandLineProgram<'a>>
    | Pure of 'a

The Free case contains a CommandLineInstruction that always continues to a new CommandLineProgram value. The only way you can escape the AST is via the Pure case, which simply contains the 'return' value.

Abstract Syntax Trees #

With these two types you can write specialised programs that contain instructions for an interpreter. Notice that the types are pure by intent, although in F# we can't really tell. You can, however, repeat this exercise in Haskell, where the instruction set looks like this:

data CommandLineInstruction next =
    ReadLine (String -> next)
  | WriteLine String next
  deriving (Functor)

type CommandLineProgram = Free CommandLineInstruction

Both of these types are pure, because IO is nowhere in sight. In Haskell, functions are pure by default. This also applies to the String -> next function contained in the ReadLine case.

Back in F# land, you can write an AST that implements the command-line interaction shown in the beginning of the article:

// CommandLineProgram<unit>
let program =
    Free (WriteLine (
            "Please enter your name.",
            Free (ReadLine (
                    fun s -> Free (WriteLine (
                                    sprintf "Hello, %s!" s,
                                    Pure ()))))))

This AST defines a little program. The first step is a WriteLine instruction with the input value "Please enter your name.". The WriteLine case constructor takes a tuple as input argument. The first tuple element is that prompt, and the second element is the continuation, which has to be a new CommandLineProgram<'a> value; here, that's a Free case wrapping another CommandLineInstruction<CommandLineProgram<'a>> value.

In this example, the continuation value is a ReadLine case, which takes a continuation function as input. This function should return a new program value, which it does by returning a WriteLine.

This second WriteLine value creates a string from the outer value s. The second tuple element for the WriteLine case must, again, be a new program value, but now the program is done, so you can use the 'stop' value Pure ().

You probably think that I should quit the mushrooms. No one in their right mind will want to write code like this. Neither would I. Fortunately, you can make the coding experience much better, but you'll see how to do that later.

Interpretation #

The above program value is a small CommandLineProgram<unit>. It's a pure value. In itself, it doesn't do anything.

Clearly, we'd like it to do something. In order to make that happen, you can write an interpreter:

// CommandLineProgram<'a> -> 'a
let rec interpret = function
    | Pure x -> x
    | Free (ReadLine next) -> Console.ReadLine () |> next |> interpret
    | Free (WriteLine (s, next)) ->
        Console.WriteLine s
        next |> interpret

This interpreter is a recursive function that pattern-matches all the cases in any CommandLineProgram<'a>. When it encounters a Pure case, it simply returns the contained value.

When it encounters a ReadLine value, it calls Console.ReadLine (), which returns a string value read from the command line. It then pipes that input value to its next continuation function, which produces a new CommandLineProgram<'a> value. Finally, it pipes that continuation value recursively to itself.

A similar treatment is given to the WriteLine case. Console.WriteLine s writes s to the command line, after which next is recursively piped to interpret.

When you run interpret program, you get an interaction like this:

Please enter your name.
ploeh
Hello, ploeh!

The program is pure; the interpret function is impure.
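Since program is only data, nothing ties it to Console. As an illustration, here's a sketch of a second interpreter that reads from a list of canned input lines and collects the output instead of printing it. The interpretPure function is hypothetical and not part of the article's code base; how to handle an exhausted input list is an assumption made for the sake of the example.

```fsharp
type CommandLineInstruction<'a> =
    | ReadLine of (string -> 'a)
    | WriteLine of string * 'a

type CommandLineProgram<'a> =
    | Free of CommandLineInstruction<CommandLineProgram<'a>>
    | Pure of 'a

// The greeting program from this article
let program =
    Free (WriteLine (
            "Please enter your name.",
            Free (ReadLine (
                    fun s -> Free (WriteLine (
                                    sprintf "Hello, %s!" s,
                                    Pure ()))))))

// Hypothetical pure interpreter:
// string list -> CommandLineProgram<'a> -> 'a * string list
let interpretPure inputs prog =
    let rec go inputs outputs = function
        | Pure x -> x, List.rev outputs
        | Free (WriteLine (s, next)) -> go inputs (s :: outputs) next
        | Free (ReadLine next) ->
            match inputs with
            | line :: rest -> go rest outputs (next line)
            // Assumption: an exhausted input list reads as empty lines
            | [] -> go [] outputs (next "")
    go inputs [] prog

let result, transcript = interpretPure ["Mark"] program
// transcript = ["Please enter your name."; "Hello, Mark!"]
```

An interpreter like this one is deterministic, so you could use it to unit test a program value without touching the console.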

Syntactic sugar #

Clearly, you don't want to write programs as ASTs like the above. Fortunately, you don't have to. You can add syntactic sugar in the form of computation expressions. The way to do that is to turn your AST types into a monad. In Haskell, you'd already be done, because Free is a monad. In F#, some code is required.

Source functor #

The first step is to define a map function for the underlying instruction set union type. Conceptually, when you can define a map function for a type, you've created a functor (if it obeys the functor laws, that is). Functors are common, so it often pays to be aware of them.

// ('a -> 'b) -> CommandLineInstruction<'a> -> CommandLineInstruction<'b>
let private mapI f = function
    | ReadLine next -> ReadLine (next >> f)
    | WriteLine (x, next) -> WriteLine (x, next |> f)

The mapI function takes a CommandLineInstruction<'a> value and maps it to a new value by mapping the 'underlying value'. I decided to make the function private because later, I'm also going to define a map function for CommandLineProgram<'a>, and I don't want to confuse users of the API with two different map functions. This is also the reason that the name of the function isn't simply map, but rather mapI, where the I stands for instruction.

mapI pattern-matches on the (implicit) input argument. If it's a ReadLine case, it returns a new ReadLine value, but it uses the mapper function f to translate the next function. Recall that next is a function of the type string -> 'a. When you compose it with f (which is a function of the type 'a -> 'b), you get (string -> 'a) >> ('a -> 'b), or string -> 'b. You've transformed the 'a to a 'b for the ReadLine case. If you can do the same for the WriteLine case, you'll have a functor.

Fortunately, the WriteLine case is similar, although a small tweak is required. This case contains a tuple of data. The first element (x) isn't a generic type (it's a string), so there's nothing to map. You can use it as-is in the new WriteLine value that you'll return. The WriteLine case is degenerate because next isn't a function, but rather a value. It has a type of 'a, and f is a function of the type 'a -> 'b, so piping next to f returns a 'b.

That's it: now you have a functor.

(In order to keep the category theorists happy, I should point out that such functors are really a sub-type of functors called endofunctors. Additionally, functors must obey some simple and intuitive laws in order to be functors, but that's all I'll say about that here.)
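If you want to see the two functor laws (identity and composition) at work, you can spot-check them on a couple of sample values. The following is only a sketch: the observe helper, the sample values, and the functions f and g are hypothetical, and mapI is made public here so that the snippet stands alone. Since functions can't be compared for equality, observe feeds a fixed input string to the ReadLine continuation and compares the results instead. A check on a few samples is, of course, no proof.

```fsharp
type CommandLineInstruction<'a> =
    | ReadLine of (string -> 'a)
    | WriteLine of string * 'a

// Same definition as in the article, but not private
let mapI f = function
    | ReadLine next -> ReadLine (next >> f)
    | WriteLine (x, next) -> WriteLine (x, next |> f)

// Hypothetical helper: make an instruction comparable by running a
// ReadLine continuation against a fixed input string
let observe input = function
    | ReadLine next -> None, next input
    | WriteLine (s, next) -> Some s, next

let samples = [ ReadLine String.length; WriteLine ("foo", 42) ]
let f x = x + 1
let g x = x * 2

// First law: mapping id changes nothing
let identityHolds =
    samples
    |> List.forall (fun i -> observe "abc" (mapI id i) = observe "abc" i)

// Second law: mapping f >> g is the same as mapping f, then g
let compositionHolds =
    samples
    |> List.forall (fun i ->
        observe "abc" (mapI (f >> g) i) = observe "abc" (mapI g (mapI f i)))
```

Both identityHolds and compositionHolds evaluate to true for these samples.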

Free monad #