ploeh blog danish software design

Inlined HUnit test lists

An alternative way to organise test lists with HUnit.

In the previous article you saw how to write parametrised tests with HUnit. While the tests themselves were elegant and readable (in my opinion), the composition of test lists left something to be desired. This article offers a different way to organise test lists.

Duplication #

The main problem is one of duplication. Consider the main function for the test library, as defined in the previous article:

main = defaultMain $ hUnitTestToTests $ TestList [
  "adjustToBusinessHours returns correct result" ~: adjustToBusinessHoursReturnsCorrectResult,
  "adjustToDutchBankDay returns correct result" ~: adjustToDutchBankDayReturnsCorrectResult,
  "Composed adjust returns correct result" ~: composedAdjustReturnsCorrectResult ]

It annoys me that I have a function with a (somewhat) descriptive name, like adjustToBusinessHoursReturnsCorrectResult, but then I also have to give the test a label - in this case "adjustToBusinessHours returns correct result". Not only is this duplication, but it also adds an extra maintenance overhead, because if I decide to rename the test, should I also rename the label?

Why do you even need the label? When you run the test, that label is printed during the test run, so that you can see what happens:

$ stack test --color never --ta "--plain"
ZonedTimeAdjustment-0.1.0.0: test (suite: ZonedTimeAdjustment-test, args: --plain)
:adjustToDutchBankDay returns correct result:
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]
:adjustToBusinessHours returns correct result:
  : [OK]
  : [OK]
  : [OK]
:Composed adjust returns correct result:
  : [OK]
  : [OK]
  : [OK]
  : [OK]
  : [OK]

         Test Cases  Total
 Passed  20          20
 Failed  0           0
 Total   20          20

I considered it redundant to give each test case in the parametrised tests their own labels, but I could have done that too, if I'd wanted to.

What happens if you remove the labels?

main = defaultMain $ hUnitTestToTests $ TestList $ adjustToBusinessHoursReturnsCorrectResult ++ adjustToDutchBankDayReturnsCorrectResult ++ composedAdjustReturnsCorrectResult

That compiles, but produces output like this:

$ stack test --color never --ta "--plain"
ZonedTimeAdjustment-0.1.0.0: test (suite: ZonedTimeAdjustment-test, args: --plain)
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]
: [OK]

         Test Cases  Total
 Passed  20          20
 Failed  0           0
 Total   20          20

If you don't care about the labels, then that's a fine solution. On the other hand, if you do care about the labels, then a different approach is warranted.

Inlined test lists #

Looking at an expression like "Composed adjust returns correct result" ~: composedAdjustReturnsCorrectResult, I find "Composed adjust returns correct result" more readable than composedAdjustReturnsCorrectResult, so if I want to reduce duplication, I want to go after a solution that names a test with a label, instead of a solution that names a test with a function name.

What is composedAdjustReturnsCorrectResult? It's just the name of a pure function (because its type is [Test]). Since it's referentially transparent, it means that in the test list in main, I can replace the function with its body! I can do this with all three functions, although, in order to keep things simplified, I'm only going to show you two of them:

main :: IO ()
main = defaultMain $ hUnitTestToTests $ TestList [
  "adjustToBusinessHours returns correct result" ~: do
    (dt, expected) <-
      [
        (zt (2017, 10, 2) ( 6, 59,  4) 0, zt (2017, 10, 2) (9,  0,  0) 0),
        (zt (2017, 10, 2) ( 9, 42, 41) 0, zt (2017, 10, 2) (9, 42, 41) 0),
        (zt (2017, 10, 2) (19,  1, 32) 0, zt (2017, 10, 3) (9,  0,  0) 0)
      ]
    let actual = adjustToBusinessHours dt
    return $ ZT expected ~=? ZT actual
  ,
  "Composed adjust returns correct result" ~: do
    (dt, expected) <-
      [
        (zt (2017, 1, 31) ( 7, 45, 55)   2 , zt (2017, 2, 28) ( 7,  0,  0) 0),
        (zt (2017, 2,  6) (10,  3,  2)   1 , zt (2017, 3,  6) ( 9,  3,  2) 0),
        (zt (2017, 2,  9) ( 4, 20,  0)   0 , zt (2017, 3,  9) ( 9,  0,  0) 0),
        (zt (2017, 2, 12) (16,  2, 11)   0 , zt (2017, 3, 10) (16,  2, 11) 0),
        (zt (2017, 3, 14) (13, 48, 29) (-1), zt (2017, 4, 13) (14, 48, 29) 0)
      ]
    let adjustments =
          reverse [adjustToNextMonth, adjustToBusinessHours, adjustToDutchBankDay, adjustToUtc]
    let adjust = appEndo $ mconcat $ Endo <$> adjustments
    let actual = adjust dt
    return $ ZT expected ~=? ZT actual
  ]

In order to keep the code listing to a reasonable length, I didn't include the third test "adjustToDutchBankDay returns correct result", but it works in exactly the same way.

This is a list with two values. You can see that the values are separated by a comma (,), just like list elements normally are. What's unusual, however, is that each element in the list is defined with a multi-line do block.

In C# and F#, I'm used to being able to just write new test functions, and they're automatically picked up by convention and executed by the test runner. I wouldn't be at all surprised if there was a mechanism using Template Haskell that enables something similar, but I find that there's something appealing about treating tests as first-class values all the way.

By inlining the tests, I can retain my F# and C# workflow. Just add a new test within the list, and it's automatically picked up by the main function. Not only that, but it's no longer possible to write a test that compiles, but is never executed by the test runner because it has the wrong type. This occasionally happens to me in F#, but with the technique outlined here, if I accidentally give the test the wrong type, it's not going to compile.

Conclusion #

Since HUnit tests are first-class values, you can define them inlined in test lists. For larger code bases, I'd assume that you'd want to spread your unit tests across multiple modules. In that case, I suppose that you could have each test module export a [Test] value. In the test library's main function, you'd need to manually concatenate all the exported test lists together, so a small maintenance burden remains. When you add a new test module, you'd have to add its exported tests to main.

I wouldn't be surprised, however, if a clever reader could point out to me how to avoid that as well.

Parametrised unit tests in Haskell

Here's a way to write parametrised unit tests in Haskell.

Sometimes you'd like to execute the same (unit) test for a number of test cases. The only thing that varies is the input values, and the expected outcome. The actual test code is the same for all test cases. Among object-oriented programmers, this is known as a parametrised test.

When I recently searched the web for how to do parametrised tests in Haskell, I could only find articles that talked about property-based testing, mostly with QuickCheck. I normally prefer property-based testing, but sometimes, I'd rather like to run a test with some deterministic test cases that are visible and readable in the code itself.

Here's one way I found that I could do that in Haskell.

Testing date and time adjustments in C# #

In an earlier article, I discussed how to model date and time adjustments as a monoid. The example code was written in C#, and I used a few tests to demonstrate that the composition of adjustments works as intended:

[Theory]
[InlineData("2017-01-31T07:45:55+2", "2017-02-28T07:00:00Z")]
[InlineData("2017-02-06T10:03:02+1", "2017-03-06T09:03:02Z")]
[InlineData("2017-02-09T04:20:00Z" , "2017-03-09T09:00:00Z")]
[InlineData("2017-02-12T16:02:11Z" , "2017-03-10T16:02:11Z")]
[InlineData("2017-03-14T13:48:29-1", "2017-04-13T14:48:29Z")]
public void AccumulatedAdjustReturnsCorrectResult(
    string dtS,
    string expectedS)
{
    var dt = DateTimeOffset.Parse(dtS);
    var sut = DateTimeOffsetAdjustment.Accumulate(
        new NextMonthAdjustment(),
        new BusinessHoursAdjustment(),
        new DutchBankDayAdjustment(),
        new UtcAdjustment());

    var actual = sut.Adjust(dt);

    Assert.Equal(DateTimeOffset.Parse(expectedS), actual);
}

The above parametrised test uses xUnit.net (particularly its Theory feature) to execute the same test code for five test cases. Here's another example:

[Theory]
[InlineData("2017-10-02T06:59:04Z", "2017-10-02T09:00:00Z")]
[InlineData("2017-10-02T09:42:41Z", "2017-10-02T09:42:41Z")]
[InlineData("2017-10-02T19:01:32Z", "2017-10-03T09:00:00Z")]
public void AdjustReturnsCorrectResult(string dts, string expectedS)
{
    var dt = DateTimeOffset.Parse(dts);
    var sut = new BusinessHoursAdjustment();

    var actual = sut.Adjust(dt);

    Assert.Equal(DateTimeOffset.Parse(expectedS), actual);
}

This one covers the three code paths through BusinessHoursAdjustment.Adjust.

These tests are similar, so they share some good and bad qualities.

On the positive side, I like how readable such tests are. The test code is only a few lines of code, and the test cases (input, and expected output) are in close proximity to the code. Unless you're on a phone with a small screen, you can easily see all of it at once.

For a problem like this, I felt that I preferred examples rather than using property-based testing. If the date and time is this, then the adjusted result should be that, and so on. When we read code, we tend to prefer examples. Good documentation often contains examples, and for those of us who consider tests documentation, including examples in tests should advance that cause.

On the negative side, tests like these still contain noise. Most of it relates to the problem that xUnit.net tests aren't first-class values. These tests actually ought to take a DateTimeOffset value as input, and compare it to another, expected DateTimeOffset value. Unfortunately, DateTimeOffset values aren't constants, so you can't use them in attributes, like the [InlineData] attribute.

There are other workarounds than the one I ultimately chose, but none that are as simple (that I'm aware of). Strings are constants, so you can put formatted date and time strings in the [InlineData] attributes, but the cost of doing this is two calls to DateTimeOffset.Parse. Perhaps this isn't a big price, you think, but it does make me wish for something prettier.

Comparing date and time #

In order to port the above tests to Haskell, I used Stack to create a new project with HUnit as the unit testing library.

The Haskell equivalent to DateTimeOffset is called ZonedTime. One problem with ZonedTime values is that you can't readily compare them; the type isn't an Eq instance. There are good reasons for that, but for the purposes of unit testing, I wanted to be able to compare them, so I defined this helper data type:

newtype ZonedTimeEq = ZT ZonedTime deriving (Show)

instance Eq ZonedTimeEq where
  ZT (ZonedTime lt1 tz1) == ZT (ZonedTime lt2 tz2) = lt1 == lt2 && tz1 == tz2

This enables me to compare two ZonedTimeEq values, which are only considered equal if they represent the same date, the same time, and the same time zone.
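To make the consequence of that definition concrete, here's a small sketch. It assumes that the time package is available (it normally ships with GHC), and it repeats ZonedTimeEq together with the zt helper from the next section so that it compiles on its own. The values nineUtc and tenPlusOne are made up for the example. Both denote the same instant, but ZonedTimeEq still treats them as different, because their local times and offsets differ:

```haskell
import Data.Fixed (Pico)
import Data.Time

newtype ZonedTimeEq = ZT ZonedTime deriving (Show)

instance Eq ZonedTimeEq where
  ZT (ZonedTime lt1 tz1) == ZT (ZonedTime lt2 tz2) = lt1 == lt2 && tz1 == tz2

-- Same helper as the article's zt, with an explicit type added for clarity.
zt :: (Integer, Int, Int) -> (Int, Int, Pico) -> Int -> ZonedTime
zt (y, mth, d) (h, m, s) tz =
  ZonedTime (LocalTime (fromGregorian y mth d) (TimeOfDay h m s)) (hoursToTimeZone tz)

-- Hypothetical example values: 09:00 at UTC and 10:00 at UTC+1.
nineUtc, tenPlusOne :: ZonedTime
nineUtc    = zt (2017, 10, 2) ( 9, 0, 0) 0
tenPlusOne = zt (2017, 10, 2) (10, 0, 0) 1

main :: IO ()
main = do
  print $ ZT nineUtc == ZT nineUtc                             -- True
  print $ ZT nineUtc == ZT tenPlusOne                          -- False
  print $ zonedTimeToUTC nineUtc == zonedTimeToUTC tenPlusOne  -- True
```

If you'd rather compare instants than representations, converting both sides with zonedTimeToUTC is one option; the tests in this article deliberately compare the representation.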

Test Utility #

I also added a little function for creating ZonedTime values:

zt (y, mth, d) (h, m, s) tz = ZonedTime (LocalTime (fromGregorian y mth d) (TimeOfDay h m s)) (hoursToTimeZone tz)

The motivation is simply that, as you can tell, creating a ZonedTime value requires a verbose expression. Clearly, the ZonedTime API is flexible, but in order to define some test cases, I found it advantageous to trade flexibility for readability. The zt function enables me to compactly define some ZonedTime values for my test cases.

Testing business hours in Haskell #

In HUnit, a test is either a Test, a list of Test values, or an impure assertion. For a parametrised test, a [Test] sounded promising. At the beginning, I struggled with finding a readable way to express the tests. I wanted to be able to start with a list of test cases (inputs and expected outputs), and then fmap them to an executable test. At first, the readability goal seemed elusive, until I realised that I can also use do notation with lists (since they're monads):

adjustToBusinessHoursReturnsCorrectResult :: [Test]
adjustToBusinessHoursReturnsCorrectResult = do
  (dt, expected) <-
    [
      (zt (2017, 10, 2) ( 6, 59,  4) 0, zt (2017, 10, 2) (9,  0,  0) 0),
      (zt (2017, 10, 2) ( 9, 42, 41) 0, zt (2017, 10, 2) (9, 42, 41) 0),
      (zt (2017, 10, 2) (19,  1, 32) 0, zt (2017, 10, 3) (9,  0,  0) 0)
    ]
  let actual = adjustToBusinessHours dt
  return $ ZT expected ~=? ZT actual

This is the same test as the above C# test named AdjustReturnsCorrectResult, and it's about the same size as well. Since the test is written using do notation, you can take a list of test cases and operate on each test case at a time. Although the test creates a list of tuples, the <- arrow pulls each (ZonedTime, ZonedTime) tuple out of the list and binds it to (dt, expected).
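If do notation over a list is unfamiliar, the following minimal sketch shows the same mechanism with nothing but base. The names double and results are invented for the illustration, and plain Bool values stand in for HUnit's Test values:

```haskell
-- Hypothetical stand-in for the System Under Test.
double :: Int -> Int
double x = x * 2

-- Each (input, expected) tuple is bound in turn, just like (dt, expected)
-- in the test above. The result is one value per test case.
results :: [Bool]
results = do
  (input, expected) <- [(1, 2), (2, 4), (10, 20)]
  let actual = double input
  return $ expected == actual

main :: IO ()
main = print results -- [True,True,True]
```

Because the do block runs in the list monad, return wraps each comparison in a singleton list, and the monad concatenates those lists into results.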

This test literally consists of only three expressions, so according to my normal heuristic for test formatting, I don't even need white space to indicate the three phases of the AAA pattern. The first expression sets up the test case (dt, expected).

The next expression exercises the System Under Test - in this case the adjustToBusinessHours function. That's simply a function call.

The third expression verifies the result. It uses HUnit's ~=? operator to compare the expected and the actual values. Since ZonedTime isn't an Eq instance, both values are converted to ZonedTimeEq values. The ~=? operator returns a Test value, and since the entire test takes place inside a do block, you must return it. Since this particular do block takes place inside the list monad, the type of adjustToBusinessHoursReturnsCorrectResult is [Test]. I added the type annotation for the benefit of you, dear reader, but technically, it's not required.

Testing the composed function #

Translating the AccumulatedAdjustReturnsCorrectResult C# test to Haskell follows the same recipe:

composedAdjustReturnsCorrectResult :: [Test]
composedAdjustReturnsCorrectResult = do
  (dt, expected) <-
    [
      (zt (2017, 1, 31) ( 7, 45, 55)   2 , zt (2017, 2, 28) ( 7,  0,  0) 0),
      (zt (2017, 2,  6) (10,  3,  2)   1 , zt (2017, 3,  6) ( 9,  3,  2) 0),
      (zt (2017, 2,  9) ( 4, 20,  0)   0 , zt (2017, 3,  9) ( 9,  0,  0) 0),
      (zt (2017, 2, 12) (16,  2, 11)   0 , zt (2017, 3, 10) (16,  2, 11) 0),
      (zt (2017, 3, 14) (13, 48, 29) (-1), zt (2017, 4, 13) (14, 48, 29) 0)
    ]

  let adjustments =
        reverse [adjustToNextMonth, adjustToBusinessHours, adjustToDutchBankDay, adjustToUtc]
  let adjust = appEndo $ mconcat $ Endo <$> adjustments

  let actual = adjust dt

  return $ ZT expected ~=? ZT actual

The only notable difference is that this unit test consists of five expressions, so according to my formatting heuristic, I inserted some blank lines in order to make it easier to distinguish the three AAA phases from each other.

Running tests #

I also wrote a third test called adjustToDutchBankDayReturnsCorrectResult, but that one is so similar to the two you've already seen that I see no point showing it here. In order to run all three tests, I define the tests' main function as such:

main = defaultMain $ hUnitTestToTests $ TestList [
  "adjustToBusinessHours returns correct result" ~: adjustToBusinessHoursReturnsCorrectResult,
  "adjustToDutchBankDay returns correct result" ~: adjustToDutchBankDayReturnsCorrectResult,
  "Composed adjust returns correct result" ~: composedAdjustReturnsCorrectResult ]

This uses defaultMain from test-framework, and hUnitTestToTests from test-framework-hunit.

I'm not happy about the duplication of text and test names, and the maintenance burden implied by having to explicitly add every test function to the test list. It's too easy to forget to add a test after you've written it. In the next article, I'll demonstrate an alternative way to compose the tests so that duplication is reduced.

Conclusion #

Since HUnit tests are first-class values, you can manipulate and compose them just like any other value. That includes passing them around in lists and binding them with do notation. Once you figure out how to write parametrised tests with HUnit, it's easy, readable, and elegant.

The overall configuration of the test runner, however, leaves a bit to be desired, so that's the topic for the next article.

Next: Inlined HUnit test lists.

Null Object as identity

When can you implement a Null Object? When the return types of your methods are monoids.

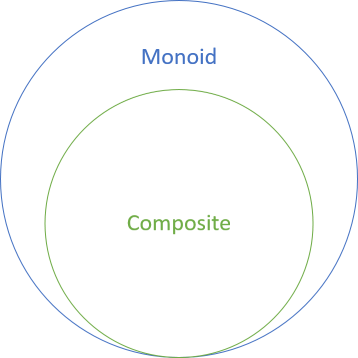

This article is part of a series of articles about design patterns and their category theory counterparts. In a previous article you learned how there's a strong relationship between the Composite design pattern and monoids. In this article you'll see that the Null Object pattern is essentially a special case of the Composite pattern.

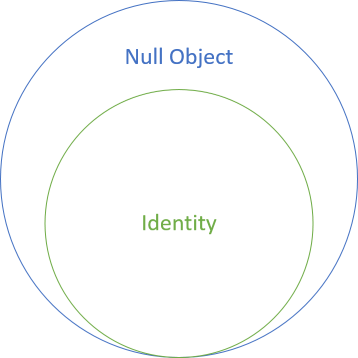

I also think that there's a relationship between monoidal identity and the Null Object pattern similar to the relationship between Composite and monoids in general:

Once more, I don't claim that an isomorphism exists. You may be able to produce Null Object examples that aren't monoidal, but on the other hand, I believe that all identities are Null Objects.

Null Object #

While the Null Object design pattern isn't one of the patterns covered in Design Patterns, I consider it a structural pattern because Composite is a structural pattern, and Null Object is a special case of Composite.

Bobby Woolf's original text contains an example in Smalltalk, and while I'm no Smalltalk expert, I think a fair translation to C# would be an interface like this:

public interface IController
{
    bool IsControlWanted();
    bool IsControlActive();
    void Startup();
}

The idea behind the Null Object pattern is to add an implementation that 'does nothing', which in the example would be this:

public class NullController : IController
{
    public bool IsControlActive()
    {
        return false;
    }

    public bool IsControlWanted()
    {
        return false;
    }

    public void Startup()
    {
    }
}

Woolf calls his implementation NoController, but I find it more intent-revealing to call it NullController. Both of the Boolean methods return false, and the Startup method literally does nothing.

Doing nothing #

What exactly does it mean to 'do nothing'? In the case of the Startup method, it's clear, because it's a bona fide no-op. This is possible for all methods without return values (i.e. methods that return void), but for all other methods, the compiler will insist on a return value.

For IsControlActive and IsControlWanted, Woolf solves this by returning false.

Is false always the correct 'do nothing' value for Booleans? And what should you return if a method returns an integer, a string, or a custom type? The original text is vague on that question:

"Exactly what "do nothing" means is subjective and depends on the sort of behavior the Client is expecting."

Sometimes, you can't get any closer than that, but I think that often it's possible to be more specific.

Doing nothing as identity #

From unit isomorphisms you know that methods without return values are isomorphic to methods that return unit. You also know that unit is a monoid. What do unit and bool have in common? They both form monoids; bool, in fact, forms four different monoids, of which all and any are the best-known.

In my experience, you can implement the Null Object pattern by returning various 'do nothing' values, depending on their types:

- For bool, return a constant value. Usually, false is the appropriate 'do nothing' value, but it does depend on the semantics of the operation.
- For string, return "".
- For collections, return an empty collection.
- For numbers, return a constant value, such as 0.
- For void, do nothing, which is equivalent to returning unit.

What these values have in common is that each is the identity of a monoid. For some types, such as bool and int, more than one monoid exists, and the identity depends on which one you pick:

- The identity for the any monoid is false.
- The identity for string is "".
- The identity for collections is the empty collection.
- The identity for the addition monoid is 0.
- The identity for unit is unit.

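Recall that the identity of a monoid is the value that leaves any other value unchanged under the binary operation:

foo.Op(Foo.Identity) == foo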

In other words: the identity does nothing!
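The identities listed above can be checked directly in Haskell. This is only a sketch that uses the standard wrappers from Data.Monoid; the helper identityDoesNothing is a name made up for the example:

```haskell
import Data.Monoid (All (..), Any (..), Product (..), Sum (..))

-- True when combining with mempty on either side leaves the value unchanged.
identityDoesNothing :: (Eq a, Monoid a) => a -> Bool
identityDoesNothing x = x <> mempty == x && mempty <> x == x

main :: IO ()
main = do
  print (getAny mempty)                      -- False: identity of the any monoid
  print (getAll mempty)                      -- True: identity of the all monoid
  print (getSum (mempty :: Sum Int))         -- 0: identity of addition
  print (getProduct (mempty :: Product Int)) -- 1: identity of multiplication
  print (mempty :: String)                   -- ""
  print (identityDoesNothing (Any True))     -- True
  print (identityDoesNothing "foo")          -- True
  print (identityDoesNothing [1, 2, 3 :: Int]) -- True
  print (identityDoesNothing ())             -- True
```

Notice that bool and int each show up twice, with different identities, which is why the semantics of the operation decide which 'do nothing' value is appropriate.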

As a preliminary result, then, when all return values of your interface form monoids, you can create a Null Object.

Relationship with Composite #

In the previous article, you saw how the Composite design pattern was equivalent to the Haskell function mconcat:

mconcat :: Monoid a => [a] -> a

This function, however, seems more limited than it has to be. It says that if you have a linked list of monoidal values, you can reduce them to a single monoidal value. Is this only true for linked lists?

After all, in a language like C#, you'd typically express a Composite as a container of 'some collection' of objects, typically modelled by the IReadOnlyCollection<T> interface. There are several implementations of that interface, including lists, arrays, collections, and so on.

It seems as though we ought to be able to generalise mconcat, and, indeed, we can. The Data.Foldable module defines a function called fold:

fold :: (Monoid m, Foldable t) => t m -> m

Let me decipher that for you. It says that any monoid m contained in a 'foldable' container t can be reduced to a single value (still of the type m). You can read t m as 'a foldable container that contains monoids'. In C#, you could attempt to express it as IFoldable<TMonoid>.
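As a rough illustration, here's fold applied to three different Foldable containers from base: a list, Maybe, and Identity. The top-level names are invented for the example:

```haskell
import Data.Foldable (fold)
import Data.Functor.Identity (Identity (..))
import Data.Monoid (Sum (..))

-- A list of monoidal values; this is the case mconcat already covers.
listTotal :: Int
listTotal = getSum (fold [Sum 1, Sum 2, Sum 3])

-- Maybe holds zero or one value; the empty case falls back to mempty.
justText, nothingText :: String
justText = fold (Just "foo")
nothingText = fold Nothing

-- Identity always holds exactly one value.
identityText :: String
identityText = fold (Identity "bar")

main :: IO ()
main = do
  print listTotal      -- 6
  print justText       -- "foo"
  print nothingText    -- ""
  print identityText   -- "bar"
```

The same function reduces all three containers, which is the sense in which fold generalises mconcat.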

In other words, the Composite design pattern is equivalent to fold. Here's how it relates to the Null Object pattern:

As a first step, consider methods like those defined by the above IController interface. In order to implement NullController, all of those methods ignore their (non-existent) input and return an identity value. In other words, we're looking for a Haskell function of the type Monoid m => a -> m; that is: a function that takes input of the type a and returns a monoidal value m.

You can do that using fold:

nullify :: Monoid m => a -> m
nullify = fold (Identity $ const mempty)

Recall that the input to fold must be a Foldable container. The good old Identity type is, among other capabilities, Foldable. That takes care of the container. The value that we put into the container is a single function that ignores its input (const) and always returns the identity value (mempty). The result is a function that always returns the identity value.

This demonstrates that Null Object is a special case of Composite, because nullify is a special application of fold.

There's no reason to write nullify in such a complicated way, though. You can simplify it like this:

nullify :: Monoid m => a -> m
nullify = const mempty

Once you recall, however, that functions are monoids if their return values are monoids, you can simplify it even further:

nullify :: Monoid m => a -> m
nullify = mempty

The identity element for monoidal functions is exactly a function that ignores its input and returns mempty.
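To check that the three definitions really agree, you can put them side by side in one module. The distinct names nullifyFold, nullifyConst, and nullifyMempty are invented here only so that all three can coexist; the bodies are the ones shown above:

```haskell
import Data.Foldable (fold)
import Data.Functor.Identity (Identity (..))
import Data.Monoid (Any (..))

nullifyFold :: Monoid m => a -> m
nullifyFold = fold (Identity $ const mempty)

nullifyConst :: Monoid m => a -> m
nullifyConst = const mempty

nullifyMempty :: Monoid m => a -> m
nullifyMempty = mempty

main :: IO ()
main = do
  -- Whatever the input, each variant returns the identity of the result type.
  print (getAny (nullifyFold "foo"))         -- False
  print (nullifyConst (42 :: Int) :: String) -- ""
  print (nullifyMempty () :: [Int])          -- []
```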

Controller identity #

Consider the IController interface. According to object isomorphisms, you can represent this interface as a tuple of three functions:

type Controller = (ControllerState -> Any, ControllerState -> Any, ControllerState -> ())

This is cheating a little, because the third tuple element (the one that corresponds to Startup) is pure, although I strongly suspect that the intent is that it should mutate the state of the Controller. Two alternatives are to change the function to either ControllerState -> ControllerState or ControllerState -> IO (), but I'm going to keep things simple. It doesn't change the conclusion.

Notice that I've used Any as the return type for the first two tuple elements. As I've previously covered, Booleans form monoids like any and all. Here, we need to use Any.

This tuple is a monoid because all three functions are monoids, and a tuple of monoids is itself a monoid. This means that you can easily create a Null Object using mempty:

λ> nullController = mempty :: Controller

The nullController is a triad of functions. You can access them by pattern-matching them, like this:

λ> (isControlWanted, isControlActive, startup) = nullController

Now you can try to call e.g. isControlWanted with various values in order to verify that it always returns false. In this example, I cheated and simply made ControllerState an alias for String:

λ> isControlWanted "foo"

Any {getAny = False}

λ> isControlWanted "bar"

Any {getAny = False}

You'll get similar behaviour from isControlActive, and startup always returns () (unit).
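The GHCi session can also be written as a small program. The sketch below additionally combines nullController with another controller in order to show that the Null Object leaves it unchanged. The sampleController value, and the two helper functions that unpack the tuple, are hypothetical and exist only for this illustration:

```haskell
import Data.Monoid (Any (..))

-- As in the GHCi session, ControllerState is simply an alias for String.
type ControllerState = String

type Controller =
  (ControllerState -> Any, ControllerState -> Any, ControllerState -> ())

nullController :: Controller
nullController = mempty

-- Hypothetical controller: wants control when the state is empty, and is
-- active when the state is exactly "active".
sampleController :: Controller
sampleController = (Any . null, Any . (== "active"), const ())

-- Combining with the Null Object should change nothing.
combined :: Controller
combined = sampleController <> nullController

isControlWanted, isControlActive :: ControllerState -> Bool
isControlWanted s = let (f, _, _) = combined in getAny (f s)
isControlActive s = let (_, g, _) = combined in getAny (g s)

main :: IO ()
main = do
  print (isControlWanted "")       -- True
  print (isControlWanted "foo")    -- False
  print (isControlActive "active") -- True
  print (isControlActive "idle")   -- False
```

The answers are exactly those that sampleController would give on its own, which is what you'd expect from an identity.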

Relaxation #

As Woolf wrote in the original text, what 'do nothing' means is subjective. I think it's perfectly reasonable to say that monoidal identity fits the description of doing nothing, but you could probably encounter APIs where 'doing nothing' means something else.

As an example, consider avatars for online forums, such as Twitter. If you don't supply a picture when you create your profile, you get a default picture. One way to implement such a feature could be by having a 'null' avatar, which is used in place of a proper avatar. Such a default avatar object could (perhaps) be considered a Null Object, but wouldn't necessarily be monoidal. There may not even be a binary operation for avatars.

Thus, it's likely that you could encounter or create Null Objects that aren't monoids. That's the reason that I don't claim that a Null Object always is monoidal identity, although I do think that the reverse relationship holds: if it's identity, then it's a Null Object.

Summary #

Monoids are associative binary operations with identity. The identity is the value that 'does nothing' in that binary operation, like, for example, 0 doesn't 'do' anything under addition. Monoids, however, can be more complex than operations on primitive values. Functions, for example, can be monoids as well, as can tuples of functions.

The implication of that is that objects can be monoids as well. When you have a monoidal object, then its identity is the 'natural' Null Object.

The question was: when can you implement the Null Object pattern? The answer is that you can do that when all involved methods return monoids.

Next: Builder as a monoid.

Endomorphic Composite as a monoid

A variation of the Composite design pattern uses endomorphic composition. That's still a monoid.

This article is part of a series of articles about design patterns and their category theory counterparts. In a previous article, you learned that the Composite design pattern is simply a monoid.

There is, however, a variation of the Composite design pattern where the return value from one step can be used as the input for the next step.

Endomorphic API #

Imagine that you have to implement some scheduling functionality. For example, you may need to schedule something to happen a month from now, but it should happen on a bank day, during business hours, and you want to know what the resulting date and time will be, expressed in UTC. I've previously covered the various objects for performing such steps. The common behaviour is this interface:

public interface IDateTimeOffsetAdjustment
{
    DateTimeOffset Adjust(DateTimeOffset value);
}

The Adjust method is an endomorphism; that is: the input type is the same as the return type, in this case DateTimeOffset. A previous article already established that that's a monoid.

Composite endomorphism #

If you have various implementations of IDateTimeOffsetAdjustment, you can make a Composite from them, like this:

public class CompositeDateTimeOffsetAdjustment : IDateTimeOffsetAdjustment
{
    private readonly IReadOnlyCollection<IDateTimeOffsetAdjustment> adjustments;

    public CompositeDateTimeOffsetAdjustment(
        IReadOnlyCollection<IDateTimeOffsetAdjustment> adjustments)
    {
        if (adjustments == null)
            throw new ArgumentNullException(nameof(adjustments));

        this.adjustments = adjustments;
    }

    public DateTimeOffset Adjust(DateTimeOffset value)
    {
        var acc = value;
        foreach (var adjustment in this.adjustments)
            acc = adjustment.Adjust(acc);
        return acc;
    }
}

The Adjust method simply starts with the input value and loops over all the composed adjustments. For each adjustment it adjusts acc to produce a new acc value. This goes on until all adjustments have had a chance to adjust the value.

Notice that if adjustments is empty, the Adjust method simply returns the input value. In that degenerate case, the behaviour is similar to the identity function, which is the identity for the endomorphism monoid.
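For readers who think more easily in Haskell, here's a rough translation of that loop, using plain Int functions instead of date and time adjustments. The composite function is a hypothetical name; it isn't part of the article's code base:

```haskell
import Data.Monoid (Endo (..))

-- Mirrors the foreach loop in Adjust: thread the accumulator through each
-- adjustment in list order.
composite :: [a -> a] -> a -> a
composite adjustments value = foldl (\acc adjust -> adjust acc) value adjustments

main :: IO ()
main = do
  print (composite [] (42 :: Int))            -- 42: no adjustments, input unchanged
  print (appEndo (mempty :: Endo Int) 42)     -- 42: the Endo identity does the same
  print (composite [(+ 1), (* 2)] (5 :: Int)) -- 12: (5 + 1) * 2
```

With an empty list, the fold never applies anything, so the result is the input value, just as with the identity of the endomorphism monoid.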

You can now compose the desired behaviour, as this parametrised xUnit.net test demonstrates:

[Theory]
[InlineData("2017-01-31T07:45:55+2", "2017-02-28T07:00:00Z")]
[InlineData("2017-02-06T10:03:02+1", "2017-03-06T09:03:02Z")]
[InlineData("2017-02-09T04:20:00Z" , "2017-03-09T09:00:00Z")]
[InlineData("2017-02-12T16:02:11Z" , "2017-03-10T16:02:11Z")]
[InlineData("2017-03-14T13:48:29-1", "2017-04-13T14:48:29Z")]
public void AdjustReturnsCorrectResult(
    string dtS,
    string expectedS)
{
    var dt = DateTimeOffset.Parse(dtS);
    var sut = new CompositeDateTimeOffsetAdjustment(
        new NextMonthAdjustment(),
        new BusinessHoursAdjustment(),
        new DutchBankDayAdjustment(),
        new UtcAdjustment());

    var actual = sut.Adjust(dt);

    Assert.Equal(DateTimeOffset.Parse(expectedS), actual);
}

You can see the implementation for all four composed classes in the previous article. NextMonthAdjustment adjusts a date by a month into its future, BusinessHoursAdjustment adjusts a time to business hours, DutchBankDayAdjustment takes bank holidays and weekends into account in order to return a bank day, and UtcAdjustment converts a date and time to UTC.

Monoidal accumulation #

As you've learned in that previous article that I've already referred to, an endomorphism is a monoid. In this particular example, the binary operation in question is called Append. From another article, you know that monoids accumulate:

public static IDateTimeOffsetAdjustment Accumulate(
    IReadOnlyCollection<IDateTimeOffsetAdjustment> adjustments)
{
    IDateTimeOffsetAdjustment acc =
        new IdentityDateTimeOffsetAdjustment();
    foreach (var adjustment in adjustments)
        acc = Append(acc, adjustment);
    return acc;
}

This implementation follows the template previously described:

- Initialize a variable acc with the identity element. In this case, the identity is a class called IdentityDateTimeOffsetAdjustment.
- For each adjustment in adjustments, Append the adjustment to acc.
- Return acc.

This is essentially Haskell's mconcat. We'll get back to that in a moment, but first, here's another parametrised unit test that exercises the same test cases as the previous test, only against a composition created by Accumulate:

[Theory]
[InlineData("2017-01-31T07:45:55+2", "2017-02-28T07:00:00Z")]
[InlineData("2017-02-06T10:03:02+1", "2017-03-06T09:03:02Z")]
[InlineData("2017-02-09T04:20:00Z" , "2017-03-09T09:00:00Z")]
[InlineData("2017-02-12T16:02:11Z" , "2017-03-10T16:02:11Z")]
[InlineData("2017-03-14T13:48:29-1", "2017-04-13T14:48:29Z")]
public void AccumulatedAdjustReturnsCorrectResult(
    string dtS,
    string expectedS)
{
    var dt = DateTimeOffset.Parse(dtS);
    var sut = DateTimeOffsetAdjustment.Accumulate(
        new NextMonthAdjustment(),
        new BusinessHoursAdjustment(),
        new DutchBankDayAdjustment(),
        new UtcAdjustment());

    var actual = sut.Adjust(dt);

    Assert.Equal(DateTimeOffset.Parse(expectedS), actual);
}

While the implementation is different, this monoidal composition has the same behaviour as the above CompositeDateTimeOffsetAdjustment class. This, again, emphasises that Composites are simply monoids.

Endo #

For comparison, this section demonstrates how to implement the above behaviour in Haskell. The code here passes the same test cases as those above. You can skip to the next section if you want to get to the conclusion.

Instead of classes that implement interfaces, in Haskell you can define functions with the type ZonedTime -> ZonedTime. You can compose such functions using the Endo newtype 'wrapper' that turns endomorphisms into monoids:

λ> adjustments = reverse [adjustToNextMonth, adjustToBusinessHours, adjustToDutchBankDay, adjustToUtc]
λ> :type adjustments
adjustments :: [ZonedTime -> ZonedTime]
λ> adjust = appEndo $ mconcat $ Endo <$> adjustments
λ> :type adjust
adjust :: ZonedTime -> ZonedTime

In this example, I'm using GHCi (the Haskell REPL) to show the composition in two steps. The first step creates adjustments, which is a list of functions. In case you're wondering about the use of the reverse function, it turns out that mconcat composes from right to left, which I found counter-intuitive in this case. adjustToNextMonth should execute first, followed by adjustToBusinessHours, and so on. Defining the functions in the more intuitive left-to-right direction and then reversing it makes the code easier to understand, I hope.

(For the Haskell connoisseurs, you can also achieve the same result by composing Endo with the Dual monoid, instead of reversing the list of adjustments.)

The second step composes adjust from adjustments. It first maps adjustments to Endo values. While ZonedTime -> ZonedTime isn't a Monoid instance, Endo ZonedTime is. This means that you can reduce a list of Endo ZonedTime with mconcat. The result is a single Endo ZonedTime value, which you can then unwrap to a function using appEndo.

adjust is a function that you can call:

λ> dt

2017-01-31 07:45:55 +0200

λ> adjust dt

2017-02-28 07:00:00 +0000

In this example, I'd already prepared a ZonedTime value called dt. Calling adjust returns a new ZonedTime adjusted by all four composed functions.
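The parenthetical remark about Dual can be illustrated with a minimal sketch. It uses plain Int functions as hypothetical stand-ins for the four ZonedTime adjustments, so that the example is self-contained; the names steps, viaReverse, and viaDual are not part of the original code base.

```haskell
import Data.Monoid (Dual (..), Endo (..))

-- Hypothetical stand-ins for the ZonedTime adjustments.
steps :: [Int -> Int]
steps = [(+ 1), (* 2), subtract 3]

-- Reversing the list, as in the GHCi session above.
viaReverse :: Int -> Int
viaReverse = appEndo $ mconcat $ Endo <$> reverse steps

-- Wrapping each Endo in Dual flips the order of composition,
-- so the list can stay in its left-to-right order.
viaDual :: Int -> Int
viaDual = appEndo $ getDual $ foldMap (Dual . Endo) steps

main :: IO ()
main = do
  print (viaReverse 10) -- 19, that is ((10 + 1) * 2) - 3
  print (viaDual 10)    -- 19 as well
```

Both functions apply the first list element first. Which of the two reads better is largely a matter of taste.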

Conclusion #

In general, you implement the Composite design pattern by calling all composed functions with the original input value, collecting the return value of each call. In the final step, you then reduce the collected return values to a single value that you return. This requires the return type to form a monoid, because otherwise, you can't reduce it.
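As a small sketch of that general description: the function composite below calls every composed function with the same input and reduces the results with the monoid of the return type. The names composite, greet, and shout are hypothetical, chosen only for illustration.

```haskell
-- Call every composed function with the original input and reduce the
-- collected return values with the monoid of the return type.
composite :: Monoid b => [a -> b] -> a -> b
composite fs x = foldMap ($ x) fs

-- Two hypothetical 'leaf' implementations that return strings.
greet :: String -> String
greet name = "Hello, " ++ name ++ ". "

shout :: String -> String
shout name = name ++ "!"

main :: IO ()
main = do
  putStrLn (composite [greet, shout] "Joan")          -- Hello, Joan. Joan!
  print (composite ([] :: [String -> String]) "Joan") -- ""
```

The empty composition returns the identity of the return type, which is why a mere semigroup wouldn't suffice.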

In this article, however, you learned about an alternative implementation of the Composite design pattern. If the method that you compose has the same output type as its input, you can pass the output from one object as the input to the next object. In that case, you can escape the requirement that the return value is a monoid. That's the case with DateTimeOffset and ZonedTime: neither is a monoid, because you can't add two dates and times together.

At first glance, then, it seems like a falsification of the original claim that Composites are monoids. As you've learned in this article, however, endomorphisms are monoids, so the claim still stands.

Next: Null Object as identity.

Coalescing Composite as a monoid

A variation of the Composite design pattern uses coalescing behaviour to return non-composable values. That's still a monoid.

This article is part of a series of articles about design patterns and their category theory counterparts. In a previous article, you learned that the Composite design pattern is simply a monoid.

Monoidal return types #

When all methods of an interface return monoids, you can create a Composite. This is fairly intuitive once you understand what a monoid is. Consider this example interface:

public interface ICustomerRepository
{
    void Create(Customer customer);
    Customer Read(Guid id);
    void Update(Customer customer);
    void Delete(Guid id);
}

While this interface is, in fact, not readily composable, most of the methods are. It's easy to compose the three void methods. Here's a composition of the Create method:

public void Create(Customer customer)
{
    foreach (var repository in this.repositories)
        repository.Create(customer);
}

In this case it's easy to compose multiple repositories, because void (or, rather, unit) forms a monoid. If you have methods that return numbers, you can add the numbers together (a monoid). If you have methods that return strings, you can concatenate the strings (a monoid). If you have methods that return Boolean values, you can 'or' or 'and' them together (more monoids).
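For comparison, here's a brief Haskell sketch of the monoids just listed. It only uses types from the base library; the concrete values are arbitrary examples.

```haskell
import Data.Monoid (All (..), Any (..), Sum (..))

main :: IO ()
main = do
  print (mconcat [(), (), ()])                  -- ()
  print (getSum (foldMap Sum [1, 2, 3 :: Int])) -- 6
  print (mconcat ["foo", "bar"])                -- "foobar"
  print (getAny (foldMap Any [False, True]))    -- True
  print (getAll (foldMap All [False, True]))    -- False
```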

What about the above Read method, though?

Picking the first Repository #

Why would you even want to compose two repositories? One scenario is where you have an old data store, and you want to move to a new data store. For a while, you wish to write to both data stores, but one of them stays the 'primary' data store, so this is the one from which you read.

Imagine that the old repository saves customer information as JSON files on disk. The new data store, on the other hand, saves customer data as JSON documents in Azure Blob Storage. You've already written two implementations of ICustomerRepository: FileCustomerRepository and AzureCustomerRepository. How do you compose them?

The three methods that return void are easy, as the above Create implementation demonstrates. The Read method, however, is more tricky.

One option is to only query the first repository, and return its return value:

public Customer Read(Guid id)
{
    return this.repositories.First().Read(id);
}

This works, but doesn't generalise. It works if you know that you have a non-empty collection of repositories, but if you want to adhere to the Liskov Substitution Principle, you should be able to handle the case where there's no repositories.

A Composite should be able to compose an arbitrary number of other objects. This includes a collection of no objects. The CompositeCustomerRepository class has this constructor:

private readonly IReadOnlyCollection<ICustomerRepository> repositories;
public CompositeCustomerRepository(
    IReadOnlyCollection<ICustomerRepository> repositories)
{
    if (repositories == null)
        throw new ArgumentNullException(nameof(repositories));
    this.repositories = repositories;
}

It uses standard Constructor Injection to inject an IReadOnlyCollection<ICustomerRepository>. Such a collection is finite, but can be empty.

Another problem with blindly returning the value from the first repository is that the return value may be empty.

In C#, people often use null to indicate a missing value, and while I find such practice unwise, I'll pursue this option for a bit.

A more robust Composite would return the first non-null value it gets:

public Customer Read(Guid id)
{
    foreach (var repository in this.repositories)
    {
        var customer = repository.Read(id);
        if (customer != null)
            return customer;
    }
    return null;
}

This implementation loops through all the injected repositories and calls Read on each until it gets a result that is not null. This will often be the first value, but doesn't have to be. If all repositories return null, then the Composite also returns null. To emphasise my position, I would never design C# code like this, but at least it's consistent.

If you've ever worked with relational databases, you may have had an opportunity to use the COALESCE function, which works in exactly the same way. This is the reason I call such an implementation a coalescing Composite.

The First monoid #

The T-SQL documentation for COALESCE describes the operation like this:

"Evaluates the arguments in order and returns the current value of the first expression that initially does not evaluate to NULL."

The Oracle documentation expresses it as:

"COALESCE returns the first non-null expr in the expression list."

This may not be apparent, but that's a monoid.

Haskell's base library comes with a monoidal type called First, which is a

"Maybe monoid returning the leftmost non-Nothing value."

Sounds familiar?

Here's how you can use it in GHCi:

λ> First (Just (Customer id1 "Joan")) <> First (Just (Customer id2 "Nigel"))

First {getFirst = Just (Customer {customerId = 1243, customerName = "Joan"})}

λ> First (Just (Customer id1 "Joan")) <> First Nothing

First {getFirst = Just (Customer {customerId = 1243, customerName = "Joan"})}

λ> First Nothing <> First (Just (Customer id2 "Nigel"))

First {getFirst = Just (Customer {customerId = 5cd5, customerName = "Nigel"})}

λ> First Nothing <> First Nothing

First {getFirst = Nothing}

(To be clear, the above examples use First from Data.Monoid, not First from Data.Semigroup.)

The operator <> is an infix alias for mappend - Haskell's polymorphic binary operation.

As long as the left-most value is present, that's the return value, regardless of whether the right value is Just or Nothing. Only when the left value is Nothing is the right value returned. Notice that this value may also be Nothing, causing the entire expression to be Nothing.

That's exactly the same behaviour as the above implementation of the Read method.

First in C# #

It's easy to port Haskell's First type to C#:

public class First<T>
{
    private readonly T item;
    private readonly bool hasItem;
    public First()
    {
        this.hasItem = false;
    }
    public First(T item)
    {
        if (item == null)
            throw new ArgumentNullException(nameof(item));
        this.item = item;
        this.hasItem = true;
    }
    public First<T> FindFirst(First<T> other)
    {
        if (this.hasItem)
            return this;
        else
            return other;
    }
}

Instead of nesting Maybe inside of First, as Haskell does, I simplified a bit and gave First<T> two constructor overloads: one that takes a value, and one that doesn't. The FindFirst method is the binary operation that corresponds to Haskell's <> or mappend.

This is only one of several alternative implementations of the first monoid.

In order to make First<T> a monoid, it must also have an identity, which is just an empty value:

public static First<T> Identity<T>()
{
    return new First<T>();
}

This enables you to accumulate an arbitrary number of First<T> values to a single value:

public static First<T> Accumulate<T>(IReadOnlyList<First<T>> firsts)
{
    var acc = Identity<T>();
    foreach (var first in firsts)
        acc = acc.FindFirst(first);
    return acc;
}

You start with the identity, which is also the return value if firsts is empty. If that's not the case, you loop through all firsts and update acc by calling FindFirst.
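The same accumulation can be sketched in Haskell with First from Data.Monoid. The function name accumulate is chosen here to mirror the C# method; in practice you'd probably just call mconcat.

```haskell
import Data.Monoid (First (..))

-- Start with the identity (mempty) and fold the binary operation over
-- the list, mirroring the C# Accumulate method.
accumulate :: [First a] -> First a
accumulate = foldl (<>) mempty

main :: IO ()
main = do
  print (getFirst (accumulate [First Nothing, First (Just "Joan"), First (Just "Nigel")]))
  -- Just "Joan"
  print (getFirst (accumulate ([] :: [First String])))
  -- Nothing
```

As in the C# version, an empty list yields the identity.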

A composable Repository #

You can formalise such a design by changing the ICustomerRepository interface:

public interface ICustomerRepository
{
    void Create(Customer customer);
    First<Customer> Read(Guid id);
    void Update(Customer customer);
    void Delete(Guid id);
}

In this modified version, Read explicitly returns First<Customer>. The rest of the methods remain as before.

The reusable API of First makes it easy to implement a Composite version of Read:

public First<Customer> Read(Guid id)
{
    var candidates = new List<First<Customer>>();
    foreach (var repository in this.repositories)
        candidates.Add(repository.Read(id));
    return First.Accumulate(candidates);
}

You could argue that this seems to be wasteful, because it calls Read on all repositories. If the first Repository returns a value, all remaining queries are wasted. You can address that issue with lazy evaluation.
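To give an impression of what lazy evaluation buys you, here's a sketch in Haskell, where evaluation is lazy by default. It models a repository as a pure lookup function, which is a simplifying assumption (a real repository would perform I/O), and all names are hypothetical. The expensive repository throws if it's ever queried, so the first result demonstrates that it never is.

```haskell
import Data.Monoid (First (..))

-- A repository modelled as a pure lookup function (an assumption;
-- real repositories would perform I/O).
type Repository = Int -> Maybe String

primary :: Repository
primary 1 = Just "Joan"
primary _ = Nothing

secondary :: Repository
secondary 2 = Just "Nigel"
secondary _ = Nothing

-- A repository that fails loudly if it's ever queried.
expensive :: Repository
expensive _ = error "expensive repository was queried"

-- Coalescing Read: the left-most non-empty result wins.
readComposite :: [Repository] -> Int -> Maybe String
readComposite repos key = getFirst (foldMap (\repo -> First (repo key)) repos)

main :: IO ()
main = do
  print (readComposite [primary, expensive] 1) -- Just "Joan"
  print (readComposite [primary, secondary] 2) -- Just "Nigel"
  print (readComposite [] 1)                   -- Nothing
```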

You can see (a recording of) a live demo of the example in this article in my Clean Coders video Composite as Universal Abstraction.

Summary #

While the typical Composite is implemented by directly aggregating the return values from the composed objects, variations exist. One variation picks the first non-empty value from a collection of candidates, reminiscent of the SQL COALESCE function. This is, however, still a monoid, so the overall conjecture that Composites are monoids still holds.

Another Composite variation exists, but that one turns out to be a monoid as well. Read on!

Comments

When all methods of an interface return monoids, you can create a Composite. This is fairly intuitive once you understand what a monoid is.

I've been struggling a bit with the terminology and I could use a bit of help.

I understand a Monoid<a> to be of type a -> a -> a. I further understand that it can be said that a "forms a monoid over" the specific a -> a -> a implementation. We can then say that an int forms two different monoids over addition and multiplication.

Is my understanding above accurate and if so wouldn't it be more accurate to say that you can create a composite from an interface only when all of its methods return values that form monoids? Saying that they have to return monoids (instead of values that form them) goes against my understanding that a monoid is a binary operation.

Thanks a lot for an amazing article series, and for this blog overall. Keep up the good work!

Nikola, thank you for writing. You're correct: it would be more correct to say that you can create a Composite from an interface when all of its methods return types that form monoids. Throughout this article series, I've been struggling to keep my language as correct and specific as possible, but I sometimes slip up.

This has come up before, so perhaps you'll find this answer helpful.

By the way, there's one exception to the rule that in order to be able to create a Composite, all methods must return types that form monoids. This is when the return type is the same as the input type. The resulting Composite is still a monoid, so the overall conclusion holds.

Maybe monoids

You can combine Maybe objects in several ways. An article for object-oriented programmers.

This article is part of a series about monoids. In short, a monoid is an associative binary operation with a neutral element (also known as identity).

You can combine Maybe objects in various ways, thereby turning them into monoids. There's at least two unconstrained monoids over Maybe values, as well as some constrained monoids. By constrained I mean that the monoid only exists for Maybe objects that contain certain values. You'll see such an example first.

Combining Maybes over semigroups #

If you have two Maybe objects, and they both (potentially) contain values that form a semigroup, you can combine the Maybe values as well. Here's a few examples.

public static Maybe<int> CombineMinimum(Maybe<int> x, Maybe<int> y)
{
    if (x.HasItem && y.HasItem)
        return new Maybe<int>(Math.Min(x.Item, y.Item));
    if (x.HasItem)
        return x;
    return y;
}

In this first example, the semigroup operation in question is the minimum operation. Since C# doesn't enable you to write generic code over mathematical operations, the above method just gives you an example implemented for Maybe<int> values. If you also want to support e.g. Maybe<decimal> or Maybe<long>, you'll have to add overloads for those types.

If both x and y have values, you get the minimum of those, still wrapped in a Maybe container:

var x = new Maybe<int>(42);
var y = new Maybe<int>(1337);
var m = Maybe.CombineMinimum(x, y);

Here, m is a new Maybe<int>(42).

It's possible to combine any two Maybe objects as long as you have a way to combine the contained values in the case where both Maybe objects contain values. In other words, you need a binary operation, so the contained values must form a semigroup, like, for example, the minimum operation. Another example is maximum:

public static Maybe<decimal> CombineMaximum(Maybe<decimal> x, Maybe<decimal> y)
{
    if (x.HasItem && y.HasItem)
        return new Maybe<decimal>(Math.Max(x.Item, y.Item));
    if (x.HasItem)
        return x;
    return y;
}

In order to vary the examples, I chose to implement this operation for decimal instead of int, but you can see that the implementation code follows the same template. When both x and y contain values, you invoke the binary operation. If, on the other hand, y is empty, then right identity still holds:

var x = new Maybe<decimal>(42);
var y = new Maybe<decimal>();
var m = Maybe.CombineMaximum(x, y);

Since y in the above example is empty, the resulting object m is a new Maybe<decimal>(42).

You don't have to constrain yourself to semigroups exclusively. You can use a monoid as well, such as the sum monoid:

public static Maybe<long> CombineSum(Maybe<long> x, Maybe<long> y)
{
    if (x.HasItem && y.HasItem)
        return new Maybe<long>(x.Item + y.Item);
    if (x.HasItem)
        return x;
    return y;
}

Again, notice how most of this code is boilerplate code that follows the same template as above. In C#, unfortunately, you have to write out all the combinations of operations and contained types, but in Haskell, with its stronger type system, it all comes in the base library:

Prelude Data.Semigroup> Option (Just (Min 42)) <> Option (Just (Min 1337))

Option {getOption = Just (Min {getMin = 42})}

Prelude Data.Semigroup> Option (Just (Max 42)) <> mempty

Option {getOption = Just (Max {getMax = 42})}

Prelude Data.Semigroup> mempty <> Option (Just (Sum 1337))

Option {getOption = Just (Sum {getSum = 1337})}

That particular monoid over Maybe, however, does require as a minimum that the contained values form a semigroup. There are other monoids over Maybe that don't have any such constraints.
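In recent versions of the base library (4.11 or later, which is an assumption about your GHC installation), Maybe itself forms a monoid whenever the contained type forms a semigroup. The following sketch reproduces the three results above without the Option wrapper:

```haskell
import Data.Monoid (Sum (..))
import Data.Semigroup (Max (..), Min (..))

main :: IO ()
main = do
  print (Just (Min 42) <> Just (Min (1337 :: Int))) -- Just (Min {getMin = 42})
  print (Just (Max (42 :: Int)) <> Nothing)         -- Just (Max {getMax = 42})
  print (mempty <> Just (Sum (1337 :: Int)))        -- Just (Sum {getSum = 1337})
```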

First #

As you can read in the introductory article about semigroups, there's two semigroup operations called first and last. Similarly, there's two operations by the same name defined over monoids. They behave a little differently, although they're related.

The first monoid operation returns the left-most non-empty value among candidates. You can view nothing as being a type-safe equivalent to null, in which case this monoid is equivalent to a null coalescing operator.

public static Maybe<T> First<T>(Maybe<T> x, Maybe<T> y)
{
    if (x.HasItem)
        return x;
    return y;
}

As long as x contains a value, First returns it. The contained values don't have to form monoids or semigroups, as this example demonstrates:

var x = new Maybe<Guid>(new Guid("03C2ECDBEF1D46039DE94A9994BA3C1E"));
var y = new Maybe<Guid>(new Guid("A1B7BC82928F4DA892D72567548A8826"));
var m = Maybe.First(x, y);

While I'm not aware of any reasonable way to combine GUIDs, you can still pick the left-most non-empty value. In the above example, m contains 03C2ECDBEF1D46039DE94A9994BA3C1E. If, on the other hand, the first value is empty, you get a different result:

var x = new Maybe<Guid>();
var y = new Maybe<Guid>(new Guid("2A2D19DE89D84EFD9E5BEE7C4ADAFD90"));
var m = Maybe.First(x, y);

In this case, m contains 2A2D19DE89D84EFD9E5BEE7C4ADAFD90, even though it comes from y.

Notice that there's no guarantee that First returns a non-empty value. If both x and y are empty, then the result is also empty. The First operation is an associative binary operation, and the identity is the empty value (often called nothing or none). It's a monoid.
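The monoid laws can be spot-checked with a few lines of Haskell. This is only a sketch over a handful of sample values, not a proof, and the names firstOf and samples are hypothetical.

```haskell
-- The first operation: the left-most non-empty value wins.
firstOf :: Maybe a -> Maybe a -> Maybe a
firstOf (Just x) _ = Just x
firstOf Nothing y = y

samples :: [Maybe Int]
samples = [Nothing, Just 1, Just 2]

-- Associativity, checked for every triple of sample values.
associative :: Bool
associative =
  and [ firstOf (firstOf x y) z == firstOf x (firstOf y z)
      | x <- samples, y <- samples, z <- samples ]

-- Nothing is both left and right identity.
hasIdentity :: Bool
hasIdentity =
  and [ firstOf Nothing x == x && firstOf x Nothing == x | x <- samples ]

main :: IO ()
main = print (associative, hasIdentity) -- (True,True)
```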

Last #

Since you can define a binary operation called First, it's obvious that you can also define one called Last:

public static Maybe<T> Last<T>(Maybe<T> x, Maybe<T> y)
{
    if (y.HasItem)
        return y;
    return x;
}

This operation returns the right-most non-empty value:

var x = new Maybe<Guid>(new Guid("1D9326CDA0B3484AB495DFD280F990A3"));
var y = new Maybe<Guid>(new Guid("FFFC6CE263C7490EA0290017FE02D9D4"));
var m = Maybe.Last(x, y);

In this example, m contains FFFC6CE263C7490EA0290017FE02D9D4, but while Last favours y, it'll still return x if y is empty. Notice that, like First, there's no guarantee that you'll receive a populated Maybe. If both x and y are empty, the result will be empty as well.

Like First, Last is an associative binary operation with nothing as the identity.

Generalisation #

The first examples you saw in this article (CombineMinimum, CombineMaximum, and so on), came with the constraint that the contained values form a semigroup. The First and Last operations, on the other hand, seem unconstrained. They work even on GUIDs, which notoriously can't be combined.

If you recall, though, first and last are both associative binary operations. They are, in fact, unconstrained semigroups. Recall the Last semigroup:

public static T Last<T>(T x, T y)
{
    return y;
}

This binary operation operates on any unconstrained type T, including Guid. It unconditionally returns y.

You could implement the Last monoid over Maybe using the same template as above, utilising the underlying semigroup:

public static Maybe<T> Last<T>(Maybe<T> x, Maybe<T> y)
{
    if (x.HasItem && y.HasItem)
        return new Maybe<T>(Last(x.Item, y.Item));
    if (x.HasItem)
        return x;
    return y;
}

This implementation has exactly the same behaviour as the implementation of Last shown earlier. You can implement First in the same way.

That's exactly how Haskell works:

Prelude Data.Semigroup Data.UUID.Types> x = sequence $ Last $ fromString "03C2ECDB-EF1D-4603-9DE9-4A9994BA3C1E"

Prelude Data.Semigroup Data.UUID.Types> x

Just (Last {getLast = 03c2ecdb-ef1d-4603-9de9-4a9994ba3c1e})

Prelude Data.Semigroup Data.UUID.Types> y = sequence $ Last $ fromString "A1B7BC82-928F-4DA8-92D7-2567548A8826"

Prelude Data.Semigroup Data.UUID.Types> y

Just (Last {getLast = a1b7bc82-928f-4da8-92d7-2567548a8826})

Prelude Data.Semigroup Data.UUID.Types> Option x <> Option y

Option {getOption = Just (Last {getLast = a1b7bc82-928f-4da8-92d7-2567548a8826})}

Prelude Data.Semigroup Data.UUID.Types> Option x <> mempty

Option {getOption = Just (Last {getLast = 03c2ecdb-ef1d-4603-9de9-4a9994ba3c1e})}

The <> operator is the generic binary operation, and the way Haskell works, it changes behaviour depending on the type upon which it operates. Option is a wrapper around Maybe, and Last represents the last semigroup. When you stack UUID values inside of Option Last, you get the behaviour of selecting the right-most non-empty value.

In fact,

"Any semigroup S may be turned into a monoid simply by adjoining an element e not in S and defining e • s = s = s • e for all s ∈ S."

That's just a mathematical way of saying that if you have a semigroup, you can add an extra value e and make e behave like the identity for the monoid you're creating. That extra value is nothing. The way Haskell's Data.Semigroup module models a monoid over Maybe instances aligns with the underlying mathematics.
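The construction can be sketched once and for all as a higher-order function. The names adjoin, firstS, and lastS are hypothetical; the sketch assumes nothing about the binary operation other than that it's associative.

```haskell
-- Lift any binary operation on a to Maybe a, with Nothing as identity.
adjoin :: (a -> a -> a) -> Maybe a -> Maybe a -> Maybe a
adjoin op (Just x) (Just y) = Just (x `op` y)
adjoin _ (Just x) Nothing = Just x
adjoin _ Nothing y = y

-- The unconstrained first and last semigroups.
firstS, lastS :: a -> a -> a
firstS x _ = x
lastS _ y = y

main :: IO ()
main = do
  print (adjoin lastS (Just "x") (Just "y"))          -- Just "y"
  print (adjoin lastS (Just "x") Nothing)             -- Just "x"
  print (adjoin firstS Nothing (Just "y"))            -- Just "y"
  print (adjoin min (Just 42) (Just (1337 :: Int)))   -- Just 42
  print (adjoin lastS Nothing (Nothing :: Maybe Int)) -- Nothing
```

Passing min, max, or (+) gives you the constrained monoids from the beginning of the article, while firstS and lastS give you the unconstrained ones.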

Conclusion #

Just as there's more than one monoid over numbers, and more than one monoid over Boolean values, there's more than one monoid over Maybe values. The most useful one may be the one that elevates any semigroup to a monoid by adding nothing as the identity, but others exist. While, at first glance, the first and last monoids over Maybes look like operations in their own right, they're just applications of the general rule. They elevate the first and last semigroups to monoids by 'wrapping' them in Maybes, and using nothing as the identity.

Next: Lazy monoids.

The Maybe functor

An introduction to the Maybe functor for object-oriented programmers.

This article is an instalment in an article series about functors.

One of the simplest, and easiest to understand, functors is Maybe. It's also sometimes known as the Maybe monad, but this is not a monad tutorial; it's a functor tutorial. Maybe is many things; one of them is a functor. In F#, Maybe is called option.

Motivation #

Maybe enables you to model a value that may or may not be present. Object-oriented programmers typically have a hard time grasping the significance of Maybe, since it essentially does the same as null in mainstream object-oriented languages. There are differences, however. In languages like C# and Java, most things can be null, which can lead to much defensive coding. What happens more frequently, though, is that programmers forget to check for null, with run-time exceptions as the result.

A Maybe value, on the other hand, makes it explicit that a value may or may not be present. In statically typed languages, it also forces you to deal with the case where no data is present; if you don't, your code will not compile.

Finally, in a language like C#, null has no type, but a Maybe value always has a type.

If you appreciate the tenet that explicit is better than implicit, then you should favour Maybe over null.

Implementation #

If you've read the introduction, then you know that IEnumerable<T> is a functor. In many ways, Maybe is like IEnumerable<T>, but it's a particular type of collection that can only contain zero or one element(s). There are various ways in which you can implement Maybe in an object-oriented language like C#; here's one:

public sealed class Maybe<T>
{
    internal bool HasItem { get; }
    internal T Item { get; }
    public Maybe()
    {
        this.HasItem = false;
    }
    public Maybe(T item)
    {
        if (item == null)
            throw new ArgumentNullException(nameof(item));
        this.HasItem = true;
        this.Item = item;
    }
    public Maybe<TResult> Select<TResult>(Func<T, TResult> selector)
    {
        if (selector == null)
            throw new ArgumentNullException(nameof(selector));
        if (this.HasItem)
            return new Maybe<TResult>(selector(this.Item));
        else
            return new Maybe<TResult>();
    }
    public T GetValueOrFallback(T fallbackValue)
    {
        if (fallbackValue == null)
            throw new ArgumentNullException(nameof(fallbackValue));
        if (this.HasItem)
            return this.Item;
        else
            return fallbackValue;
    }
    public override bool Equals(object obj)
    {
        var other = obj as Maybe<T>;
        if (other == null)
            return false;
        return object.Equals(this.Item, other.Item);
    }
    public override int GetHashCode()
    {
        return this.HasItem ? this.Item.GetHashCode() : 0;
    }
}

This is a generic class with two constructors. The parameterless constructor indicates the case where no value is present, whereas the other constructor overload indicates the case where exactly one value is available. Notice that a guard clause prevents you from accidentally passing null as a value.

The Select method has the correct signature for a functor. If a value is present, it uses the selector method argument to map item to a new value, and return a new Maybe<TResult> value. If no value is available, then a new empty Maybe<TResult> value is returned.

This class also overrides Equals. This isn't necessary in order for it to be a functor, but it makes it easier to compare two Maybe<T> values.

A common question about such generic containers is: how do you get the value out of the container?

The answer depends on the particular container, but in this example, I decided to enable that functionality with the GetValueOrFallback method. The only way to get the item out of a Maybe value is by supplying a fall-back value that can be used if no value is available. This is one way to guarantee that you, as a client developer, always remember to deal with the empty case.

Usage #

It's easy to use this Maybe class:

var source = new Maybe<int>(42);

This creates a new Maybe<int> object that contains the value 42. If you need to change the value inside the object, you can, for example, do this:

Maybe<string> dest = source.Select(x => x.ToString());

Since C# natively understands functors through its query syntax, you could also have written the above translation like this:

Maybe<string> dest = from x in source select x.ToString();

It's up to you and your collaborators whether you prefer one or the other of those alternatives. In both examples, though, dest is a new populated Maybe<string> object containing the string "42".

A more realistic example could be as part of a line-of-business application. Many enterprise developers are familiar with the Repository pattern. Imagine that you'd like to query a repository for a Reservation object. If one is found in the database, you'd like to convert it to a view model, so that you can display it.

var viewModel = repository.Read(id)
    .Select(r => r.ToViewModel())
    .GetValueOrFallback(ReservationViewModel.Null);

The repository's Read method returns Maybe<Reservation>, indicating that it's possible that no object is returned. This will happen if you're querying the repository for an id that doesn't exist in the underlying database.

While you can translate the (potential) Reservation object to a view model (using the ToViewModel extension method), you'll have to supply a default view model to handle the case when the reservation wasn't found.

ReservationViewModel.Null is a static read-only class field implementing the Null Object pattern. Here, it's used for the fall-back value, in case no object was returned from the repository.

Notice that while you need a fall-back value at the end of your fluent interface pipeline, you don't need fall-back values for any intermediate steps. Specifically, you don't need a Null Object implementation for your domain model (Reservation). Furthermore, no defensive coding is required, because Maybe<T> guarantees that the object passed to selector is never null.
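The same pipeline can be sketched in Haskell, where <$> plays the role of Select and fromMaybe plays the role of GetValueOrFallback. All types and functions below (Reservation, ViewModel, readReservation, and so on) are hypothetical stand-ins for the line-of-business code.

```haskell
import Data.Maybe (fromMaybe)

-- Hypothetical domain and view model types.
newtype Reservation = Reservation { guestName :: String }
newtype ViewModel = ViewModel String deriving (Eq, Show)

toViewModel :: Reservation -> ViewModel
toViewModel r = ViewModel ("Reservation for " ++ guestName r)

-- Fall-back value, playing the role of ReservationViewModel.Null.
nullViewModel :: ViewModel
nullViewModel = ViewModel "No reservation"

-- A hypothetical in-memory repository.
readReservation :: Int -> Maybe Reservation
readReservation 1 = Just (Reservation "Joan")
readReservation _ = Nothing

-- The fall-back value is only needed at the end of the pipeline.
viewModelFor :: Int -> ViewModel
viewModelFor rid = fromMaybe nullViewModel (toViewModel <$> readReservation rid)

main :: IO ()
main = do
  print (viewModelFor 1) -- ViewModel "Reservation for Joan"
  print (viewModelFor 2) -- ViewModel "No reservation"
```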

First functor law #

A Select method with the right signature isn't enough to be a functor. It must also obey the functor laws. Maybe obeys both laws, which you can demonstrate with a few examples. Here's some test cases for a populated Maybe:

[Theory]
[InlineData("")]
[InlineData("foo")]
[InlineData("bar")]
[InlineData("corge")]
[InlineData("antidisestablishmentarianism")]
public void PopulatedMaybeObeysFirstFunctorLaw(string value)
{
    Func<string, string> id = x => x;
    var m = new Maybe<string>(value);
    Assert.Equal(m, m.Select(id));
}

This parametrised unit test uses xUnit.net to demonstrate that a populated Maybe value doesn't change when translated with the local id function, since id returns the input unchanged.

The first functor law holds for an empty Maybe as well:

[Fact]
public void EmptyMaybeObeysFirstFunctorLaw()
{
    Func<string, string> id = x => x;
    var m = new Maybe<string>();
    Assert.Equal(m, m.Select(id));
}

When a Maybe starts empty, translating it with id doesn't change that it's empty. It's worth noting, however, that the original and the translated objects are considered equal because Maybe<T> overrides Equals. Even in the case of the empty Maybe, the value returned by Select(id) is a new object, with a memory address different from the original value.

Second functor law #

You can also demonstrate the second functor law with some examples, starting with some test cases for the populated case:

[Theory]
[InlineData("", true)]
[InlineData("foo", false)]
[InlineData("bar", false)]
[InlineData("corge", false)]
[InlineData("antidisestablishmentarianism", true)]
public void PopulatedMaybeObeysSecondFunctorLaw(string value, bool expected)
{
    Func<string, int> g = s => s.Length;
    Func<int, bool> f = i => i % 2 == 0;
    var m = new Maybe<string>(value);
    Assert.Equal(m.Select(g).Select(f), m.Select(s => f(g(s))));
}

In this parametrised test, f and g are two local functions. g returns the length of a string (for example, the length of antidisestablishmentarianism is 28). f evaluates whether or not a number is even.

Whether you decide to first translate m with g, and then translate the return value with f, or you decide to translate the composition of those functions in a single Select method call, the result should be the same.

The second functor law holds for the empty case as well:

[Fact]
public void EmptyMaybeObeysSecondFunctorLaw()
{
    Func<string, int> g = s => s.Length;
    Func<int, bool> f = i => i % 2 == 0;
    var m = new Maybe<string>();
    Assert.Equal(m.Select(g).Select(f), m.Select(s => f(g(s))));
}

Since m is empty, applying the translations doesn't change that fact - it only changes the type of the resulting object, which is an empty Maybe<bool>.

Haskell #

In Haskell, Maybe is built in. You can create a Maybe value containing an integer like this (the type annotations are optional):

source :: Maybe Int
source = Just 42

Mapping source to a String can be done like this:

dest :: Maybe String
dest = fmap show source

The function fmap corresponds to the above C# Select method.

It's also possible to use infix notation:

dest :: Maybe String
dest = show <$> source

The <$> operator is an alias for fmap.

Whether you use fmap or <$>, the resulting dest value is Just "42".

If you want to create an empty Maybe value, you use the Nothing data constructor.
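The functor laws demonstrated above with xUnit.net can be spot-checked for Haskell's built-in Maybe as well. The following is a sketch that reuses the same test strings, with g and f defined as in the C# tests; it's an illustration with a few samples, not a proof.

```haskell
-- First functor law: mapping id changes nothing.
firstLaw :: Maybe String -> Bool
firstLaw m = fmap id m == m

-- Second functor law: mapping a composition equals composing the maps.
secondLaw :: Maybe String -> Bool
secondLaw m = fmap (f . g) m == (fmap f . fmap g) m
  where
    g :: String -> Int
    g = length
    f :: Int -> Bool
    f = even

-- The empty case plus the populated cases from the C# tests.
samples :: [Maybe String]
samples = Nothing : map Just ["", "foo", "bar", "corge", "antidisestablishmentarianism"]

main :: IO ()
main = print (all firstLaw samples && all secondLaw samples) -- True
```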

F# #

Maybe is also a built-in type in F#, but here it's called option instead of Maybe. You create an option containing an integer like this:

// int option
let source = Some 42

While the case where a value is present was denoted with Just in Haskell, in F# it's called Some.

You can translate option values using the map function from the Option module:

// string option
let dest = source |> Option.map string

Finally, if you want to create an empty option value, you can use the None case constructor.

Summary #

Together with a functor called Either, Maybe is one of the workhorses of statically typed functional programming. You aren't going to write much F# or Haskell before you run into it. In C# I've used variations of the above Maybe<T> class for years, with much success.

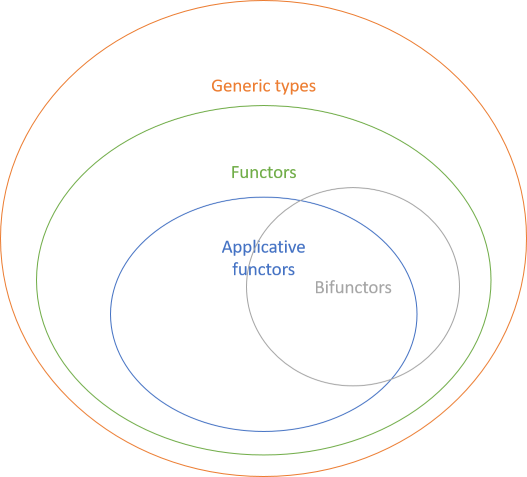

In this article, I only discussed Maybe in its role of being a functor, but it's so much more than that! It's also an applicative functor, a monad, and traversable (enumerable). Not all functors are that rich.

Next: An Either functor.

Comments

I think it's interesting to note that since C# 8.0 we don't require an extra generic type like Maybe<T> anymore in order to implement the maybe functor. Because C# 8.0 added nullable reference types, everything can be nullable now. By adding the right extension methods we can make T? a maybe functor and use its beautifully succinct syntax.

Due to C#'s awkward dichotomy between value and reference types this involves some busy work, so I created a small NuGet package for interested parties.

Robert, thank you for writing. That feature indeed seems like an improvement. I'm currently working in a code base with that feature enabled, and I definitely think that it's better than C# without it, but Maybe<T> it isn't. There are too many edge cases and backwards compatibility issues to make it as good.

Pragmatically, this is now what C# developers have to work with. Since it's now part of the language, it's likely that use of it will become more widespread than we could ever hope that Maybe<T> would be, so that's fine. On the other hand, Maybe<T> is now an impossible sell to C# developers.

Because of all the edge cases that the compiler could overlook, however, I don't see how this is ever going to be as good as a language without null references in the first place.

Functors

A functor is a common abstraction. While typically associated with functional programming, functors exist in C# as well.

This article series is part of a larger series of articles about functors, applicatives, and other mappable containers.

Programming is about abstraction, since you can't manipulate individual sub-atomic particles on your circuit boards. Some abstractions are well-known because they're rooted in mathematics. Particularly, category theory has proven to be fertile ground for functional programming. Some of the concepts from category theory apply to object-oriented programming as well; all you need is generics, which is a feature of both C# and Java.

In previous articles, you got an introduction to the specific Test Data Builder and Test Data Generator functors. Functors are more common than you may realise, although in programming, we usually work with a subset of functors called endofunctors. In daily speak, however, we just call them functors.

In the next series of articles, you'll see plenty of examples of functors, with code examples in C#, F#, and Haskell. These articles are mostly aimed at object-oriented programmers curious about the concept.

- The Maybe functor

- An Either functor

- A Tree functor

- A rose tree functor

- A Visitor functor

- Reactive functor

- The Identity functor

- The Lazy functor

- Asynchronous functors

- The Const functor

- The State functor

- The Reader functor

- The IO functor

- Monomorphic functors

- Set is not a functor

One functor missing from this list is IEnumerable<T>. Since the combination of IEnumerable<T> and query syntax is already well-described, I'm not going to cover it explicitly here.

If you understand how LINQ, IEnumerable<T>, and C# query syntax work, however, all other functors should feel intuitive. That's the power of abstractions.

Overview #

The purpose of this article isn't to give you a comprehensive introduction to the category theory of functors. Rather, the purpose is to give you an opportunity to learn how it translates to object-oriented code like C#. For a great introduction to functors, see Bartosz Milewski's explanation with illustrations.

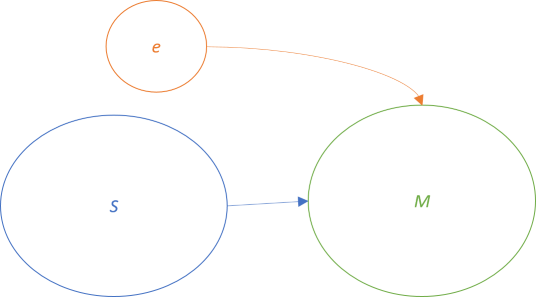

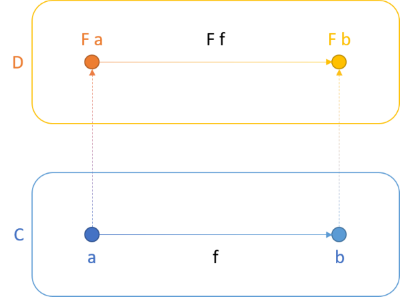

In short, a functor is a mapping between two categories. A functor maps not only objects, but also functions (called morphisms) between objects. For instance, a functor F may be a mapping between the categories C and D:

Not only does F map a from C to F a in D (and likewise for b), it also maps the function f to F f. Functors preserve the structure between objects. You'll often hear the phrase that a functor is a structure-preserving map. One example of this regards lists. You can translate a List<int> to a List<string>, but the translation preserves the structure. This means that the resulting object is also a list, and the order of values within the lists doesn't change.
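The list example can be sketched in Haskell, where map is fmap specialised to lists. The names numbers and texts are made up for this illustration:

```haskell
-- Hypothetical example values for this sketch.
numbers :: [Int]
numbers = [3, 1, 2]

-- map changes the element type from Int to String,
-- but the result is still a list of the same length,
-- with the elements in the same order.
texts :: [String]
texts = map show numbers   -- ["3","1","2"]
```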

In category theory, categories are often named C, D, and so on, but an example of a category could be IEnumerable<T>. If you have a function that translates integers to strings, the source object (that's what it's called, but it's not the same as an OOP object) could be IEnumerable<int>, and the destination object could be IEnumerable<string>. A functor, then, represents the ability to go from IEnumerable<int> to IEnumerable<string>, and since the Select method gives you that ability, IEnumerable<T>.Select is a functor. In this case, you sort of 'stay within' the category of IEnumerable<T>, only you change the generic type argument, so this functor is really an endofunctor (the endo prefix is from Greek, meaning within).

As a rule of thumb, if you have a type with a generic type argument, it's a candidate to be a functor. Such a type is not always a functor, because it also depends on where the generic type argument appears, and some other rules.
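To illustrate why the position of the type argument matters, consider this Haskell sketch. Both types and all function names are hypothetical, defined here only for the example. When the type argument appears as output, you can implement fmap. When it appears only as input, you can't write a law-abiding fmap, but you can map in the opposite direction.

```haskell
-- The type argument appears as an output: values can be mapped.
newtype Producer a = Producer (() -> a)

instance Functor Producer where
  fmap f (Producer g) = Producer (f . g)

runProducer :: Producer a -> a
runProducer (Producer g) = g ()

-- The type argument appears as an input. There's no lawful fmap
-- for this type, but you can map 'backwards', from b to a.
newtype Predicate a = Predicate (a -> Bool)

contramapPredicate :: (b -> a) -> Predicate a -> Predicate b
contramapPredicate f (Predicate p) = Predicate (p . f)

runPredicate :: Predicate a -> a -> Bool
runPredicate (Predicate p) = p
```

For instance, contramapPredicate length (Predicate even) turns a predicate on integers into a predicate on lists, by first measuring the length of the list.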

Fundamentally, you must be able to implement a method for your generic type that looks like this:

public Functor<TResult> Select<TResult>(Func<T, TResult> selector)

Here, I've defined the Select method as an instance method on a class called Functor<T>, but often, as is the case with IEnumerable<T>, the method is instead implemented as an extension method. You don't have to name it Select, but doing so enables query syntax in C#:

var dest = from x in source select x.ToString();

Here, source is a Functor<int> object.

If you don't name the method Select, it could still be a functor, but then query syntax wouldn't work. Instead, normal method-call syntax would be your only option. This is, however, a specific C# language feature. F#, for example, has no particular built-in awareness of functors, although most libraries name the central function map. In Haskell, Functor is a typeclass that defines a function called fmap.

The common trait is that there's an input value (Functor<T> in the above C# code snippet), which, when combined with a mapping function (Func<T, TResult>), returns an output value of the same generic type, but with a different generic type argument (Functor<TResult>).
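In Haskell, the same shape could be sketched as an instance of the Functor typeclass. Box is a hypothetical single-value container that stands in for the Functor<T> class from the C# snippet:

```haskell
-- Box is a hypothetical container, defined only for this sketch.
newtype Box a = Box a deriving (Eq, Show)

-- fmap plays the role of Select: given a function from a to b,
-- it turns a Box a into a Box b.
instance Functor Box where
  fmap f (Box x) = Box (f x)

source :: Box Int
source = Box 42

dest :: Box String
dest = fmap show source   -- Box "42"
```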

Laws #

Defining a Select method isn't enough. The method must also obey the so-called functor laws. These are quite intuitive laws that ensure that a functor behaves correctly.

The first law is that mapping the identity function returns the functor unchanged. The identity function is a function that returns all input unchanged. (It's called the identity function because it's the identity for the endomorphism monoid.) In F# and Haskell, this is simply a built-in function called id.

In C#, you can write a demonstration of the law as a unit test:

[Theory]
[InlineData(-101)]
[InlineData(-1)]
[InlineData(0)]
[InlineData(1)]
[InlineData(42)]
[InlineData(1337)]
public void FunctorObeysFirstFunctorLaw(int value)
{
    Func<int, int> id = x => x;
    var sut = new Functor<int>(value);
    Assert.Equal(sut, sut.Select(id));
}

While this doesn't prove that the first law holds for all values and all generic type arguments, it illustrates what's going on.

Since C# doesn't have a built-in identity function, the test creates a specialised identity function for integers, and calls it id. It simply returns all input values unchanged. Since id doesn't change the value, then Select(id) shouldn't change the functor, either. There's nothing more to the first law than this.
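Since id is built into Haskell, the same illustration is shorter there. This sketch uses the built-in Maybe functor as a stand-in for the hypothetical Functor<T> class; the function names are made up for the example. Like the C# test, it only checks a handful of values and proves nothing.

```haskell
-- Checks the first functor law for one particular Maybe value.
obeysFirstLaw :: Maybe Int -> Bool
obeysFirstLaw m = fmap id m == m

-- The same sample values as the C# test, plus the empty case.
firstLawExamples :: Bool
firstLawExamples =
  all obeysFirstLaw (Nothing : map Just [-101, -1, 0, 1, 42, 1337])
```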

The second law states that if you have two functions, f and g, then mapping over one after the other should be the same as mapping over the composition of f and g. In C#, you can illustrate it like this:

[Theory]
[InlineData(-101)]
[InlineData(-1)]
[InlineData(0)]
[InlineData(1)]
[InlineData(42)]
[InlineData(1337)]
public void FunctorObeysSecondFunctorLaw(int value)
{
    Func<int, string> g = i => i.ToString();
    Func<string, string> f = s => new string(s.Reverse().ToArray());
    var sut = new Functor<int>(value);
    Assert.Equal(sut.Select(g).Select(f), sut.Select(i => f(g(i))));
}

Here, g is a function that translates an int to a string, and f reverses a string. Since g returns string, you can compose it with f, which takes string as input.

As the assertion points out, it shouldn't matter if you call Select piecemeal, first with g and then with f, or if you call Select with the composed function f(g(i)).
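A comparable Haskell sketch, again using Maybe as a stand-in for the hypothetical Functor<T> class, could look like this. The functions g and f mirror the ones in the C# test, and the remaining names are assumptions for this example:

```haskell
-- g translates an Int to a String, like i.ToString() in the C# test.
g :: Int -> String
g = show

-- f reverses a String.
f :: String -> String
f = reverse

-- Mapping piecemeal, first with g and then with f, should give the
-- same result as mapping once with the composition f . g.
obeysSecondLaw :: Maybe Int -> Bool
obeysSecondLaw m = fmap f (fmap g m) == fmap (f . g) m

secondLawExamples :: Bool
secondLawExamples =
  all obeysSecondLaw (Nothing : map Just [-101, -1, 0, 1, 42, 1337])
```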

Summary #

This is not a monad tutorial; it's a functor tutorial. Functors are commonplace, so it's worth keeping an eye out for them. If you already understand how LINQ (or similar concepts in Java) works, then functors should be intuitive, because they are all based on the same underlying maths.

While this article is an overview article, it's also a part of a larger series of articles that explore what object-oriented programmers can learn from category theory.

Next: The Maybe functor.

Comments

As a rule of thumb, if you have a type with a generic type argument, it's a candidate to be a functor.

My guess is that you were thinking of Haskell's Functor typeclass when you wrote that. As Haskell documentation for Functor says,

An abstract datatype f a, which has the ability for its value(s) to be mapped over, can become an instance of the Functor typeclass.

To explain the Haskell syntax for anyone unfamiliar, f is a generic type with type parameter a. So indeed, it is necessary for a Haskell type to be generic to be an instance of the Functor typeclass. However, the concept of a functor in programming is not limited to Haskell's Functor typeclass.

In category theory, categories are often named C, D, and so on, but an example of a category could be IEnumerable<T>. If you have a function that translates integers to strings, the source object (that's what it's called, but it's not the same as an OOP object) could be IEnumerable<int>, and the destination object could be IEnumerable<string>. A functor, then, represents the ability to go from IEnumerable<int> to IEnumerable<string>, and since the Select method gives you that ability, IEnumerable<T>.Select is a functor. In this case, you sort of 'stay within' the category of IEnumerable<T>, only you change the generic type argument, so this functor is really an endofunctor (the endo prefix is from Greek, meaning within).

For any type T including non-generic types, the category containing only a single object representing T can have (nontrivial) endofunctors. For example, consider a record of type R in F# with a field of both name and type F. Then let mapF f r = { r with F = f r.F } is the map function that satisfies the functor laws for the field F for record R. This holds even when F is not a type parameter of R. I would say that R is a functor in F.
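As an illustration of the record-field idea, here's how it might look in Haskell. The Counter record and the mapCount function are hypothetical, invented for this sketch; they aren't taken from Elmish.WPF or any other code base.

```haskell
-- A hypothetical, non-generic record.
data Counter = Counter { count :: Int, label :: String }
  deriving (Eq, Show)

-- A map function for the count field. Counter has no type
-- parameter, so f must go from Int to Int.
mapCount :: (Int -> Int) -> Counter -> Counter
mapCount f r = r { count = f (count r) }

sample :: Counter
sample = Counter { count = 1, label = "clicks" }

-- The two functor laws, checked for a single sample value.
identityHolds :: Bool
identityHolds = mapCount id sample == sample

compositionHolds :: Bool
compositionHolds =
  mapCount (* 2) (mapCount (+ 1) sample) == mapCount ((* 2) . (+ 1)) sample
```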

I have realized within just the past three weeks how valuable it is to have a map function for every field of every record that I define. For example, I was able to greatly simplify the SubModelSeq sample in Elmish.WPF in part because of such map functions. I am especially pleased with the main update function, which heavily uses these map functions. It went from this to this.

In particular, I was able to eta-reduce the Model argument. To be clear, the goal is not point-free style for its own sake. Instead, it is one of perspective. In the previous implementation, the body explicitly depended on both the Msg and Model arguments and explicitly returned a Model instance. In the new implementation, the body only explicitly depends on the Msg argument and explicitly returns a function with type Model -> Model. By only having to pattern match on the Msg argument (and not having to worry about how to exactly map from one Model instance to the next), the implementation is much simpler. Such is the expressive power of higher-order functions.

All this (and much more in my application at work) from realizing that a functor doesn't have to be generic.

I am looking into the possibility of generating these map functions using Myriad.

Tyson, thank you for writing. I think that you're right.

To be honest, I still find the category theory underpinnings of functional programming concepts to be a little hazy. For instance, in category theory, a functor is a mapping between categories. When category theory is translated into Haskell, people usually discuss Haskell as constituting a single large category called Hask. In that light, everything that goes on in Haskell just maps from objects in Hask to objects in Hask. In that light, I still find it difficult to distinguish between morphisms and functors.

I'm going to need to think about this some more, but my intuition is that one can also find 'smaller' categories embedded in Hask, e.g. the category of Boolean values, or the category of strings, and so on.

What I'm trying to get across is that I haven't thought enough about the boundaries of a concept such as functor. It's clear that some containers with generic types form functors, but are generics required? You argue that they aren't required, and I think that you are right.