ploeh blog danish software design

Magmas

A binary operation with no constraints on its behaviour is called a magma. An introduction for object-oriented programmers.

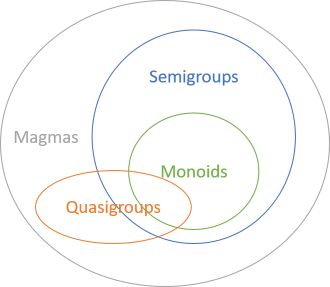

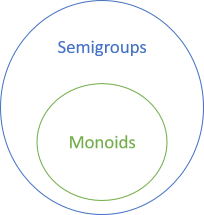

In the overall article series about group-like algebraic structures, you've so far seen examples of monoids, semigroups, and quasigroups. Common to all of these structures is that they are binary operations governed by at least one law. The laws are different for the different categories, but there are rules.

What if you have a binary operation that follows none of those rules?

All binary operations are magmas. If they have additional properties, we may call them quasigroups, or monoids, or some such, depending on the specific properties, but they're still all magmas. This is the most inclusive category.

You've already seen examples of monoids and semigroups, but what about magma examples? In a sense, you've already seen those as well, because all the examples you've seen so far have also been magma examples. After all, since all monoids are magmas, all the monoid examples you've seen have also been magma examples.

Still, it's not that hard to come up with some programming examples of magmas that aren't semi- or quasigroups. In the next articles, you'll see some examples.
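As a quick illustration, here is a minimal sketch of a binary operation that is a magma and nothing more. It is not necessarily one of the examples the following articles use, and the names `Rps`, `RpsMagma` and `Throw` are hypothetical. The operation returns the winning throw in a game of rock-paper-scissors (a tie returns the shared throw):

```csharp
using System;

public enum Rps { Rock, Paper, Scissors }

public static class RpsMagma
{
    // Hypothetical operation: returns the winner of two throws.
    // A tie returns the throw that both players made.
    public static Rps Throw(Rps x, Rps y)
    {
        if (x == y)
            return x;

        // Each throw is beaten by the next one in the cycle
        // Rock -> Paper -> Scissors -> Rock.
        var beatsX = (Rps)(((int)x + 1) % 3);
        return y == beatsX ? y : x;
    }
}
```

Grouping matters here: `Throw(Throw(Rock, Paper), Scissors)` is `Scissors`, while `Throw(Rock, Throw(Paper, Scissors))` is `Rock`, so the operation isn't associative. It isn't invertible either, because no `x` makes `Throw(Rock, x)` return `Scissors`.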

Particularly the second example is fairly realistic, which demonstrates that as programmers, we can benefit from having vocabulary that enables us to describe any binary operation that doesn't obey any particular laws. In fact, establishing a vocabulary has been my primary motivation for writing this article series.

Quasigroups

A brief introduction to quasigroups for object-oriented programmers.

This article is part of a larger series about monoids, semigroups, and other group-like algebraic structures. In this article, you'll get acquainted with the concept of a quasigroup. I don't think it plays that big of a role in software design, but it is a thing, and I thought that I'd cover it briefly with a well-known example.

During all this talk of monoids and semigroups, you've seen that normal arithmetic operations like addition and multiplication form monoids. Perhaps you've been wondering where subtraction fits in.

Subtraction forms a quasigroup.

What's a quasigroup? It's an invertible binary operation.

Inversion #

What does it mean for a binary operation to be invertible? It means that for any two elements a and b, there must exist two other elements x and y that turn a into b.

This is true for subtraction, as this FsCheck-based test demonstrates:

[Property(QuietOnSuccess = true)] public void SubtractionIsInvertible(int a, int b) { var x = a - b; var y = a + b; Assert.True(a - x == b); Assert.True(y - a == b); }

This example uses the FsCheck.Xunit glue library for xUnit.net. Notice that although FsCheck is written in F#, you can also use it from C#. This test (as well as all other tests in this article) passes.

For any a and b generated by FsCheck, we can calculate unique x and y that satisfy a - x == b and y - a == b.

Subtraction isn't the only invertible binary operation. In fact, addition is also invertible:

[Property(QuietOnSuccess = true)] public void AdditionIsInvertible(int a, int b) { var x = b - a; var y = b - a; Assert.True(a + x == b); Assert.True(y + a == b); Assert.Equal(x, y); }

Here I added a third assertion that demonstrates that for addition, the inversion is symmetric; x and y are equal.

Not only is integer addition a monoid - it's also a quasigroup. In fact, it's a group. Being associative or having identity doesn't preclude a binary operation from being a quasigroup, but these properties aren't required.

No identity #

No identity element exists for integer subtraction. For instance, 3 - 0 is 3, but 0 - 3 is not 3. Therefore, subtraction can't be a monoid.

No associativity #

Likewise, subtraction is not an associative operation. You can easily convince yourself of that by coming up with a counter-example, such as (3 - 2) - 1, which is 0, but different from 3 - (2 - 1), which is 2. Therefore, it can't be a semigroup either.
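The two counter-examples above can be written down as code. This is only a sketch; the class and method names are hypothetical:

```csharp
using System;

public static class SubtractionCounterExamples
{
    public static int Sub(int x, int y) => x - y;

    // Grouping changes the result, so Sub is not associative:
    // (3 - 2) - 1 is 0, whereas 3 - (2 - 1) is 2.
    public static bool GroupingMatters() =>
        Sub(Sub(3, 2), 1) != Sub(3, Sub(2, 1));

    // 0 is neutral on the right-hand side only, so it isn't an identity.
    public static bool ZeroIsOnlyNeutralOnTheRight() =>
        Sub(3, 0) == 3 && Sub(0, 3) != 3;
}
```

Both methods return `true`. A single counter-example is enough to rule out a law, so no property-based test is needed here.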

Summary #

A quasigroup is an invertible binary operation. Invertibility is the only required property of a quasigroup (apart from being a binary operation), but if it has other properties (like associativity), it's still a quasigroup.

I haven't had much utility from thinking about software design in terms of quasigroups, but I wanted to include it in case you were wondering how subtraction fits into all of this.

What if, however, you have a binary operation with no other properties?

Next: Magmas.

Semigroups accumulate

You can accumulate an arbitrary, non-zero number of semigroup values to a single value. An article for object-oriented programmers.

This article is part of a series about semigroups. In short, a semigroup is an associative binary operation.

As you've learned in a previous article, you can accumulate an arbitrary number of monoidal values to a single value. A corresponding property holds for semigroups.

Monoid accumulation #

When an instance method Op forms a monoid, you can easily write a function that accumulates an arbitrary number of Foo values:

public static Foo Accumulate(IReadOnlyCollection<Foo> foos) { var acc = Identity; foreach (var f in foos) acc = acc.Op(f); return acc; }

Notice how this generally applicable algorithm starts with the Identity value. One implication of this is that when foos is empty, the return value will be Identity. When Op is a semigroup, however, there's no identity, so this doesn't quite work. You need a value to start the accumulation; something you can return if the collection is empty.

Semigroup accumulation #

From Haskell you can learn that if you have a semigroup, you can accumulate any non-empty collection:

sconcat :: Semigroup a => NonEmpty a -> a

You can read this as: for any generic type a, when a forms a Semigroup, the sconcat function takes a non-empty list of a values, and reduces them to a single a value. NonEmpty a is a list with at least one element.

NotEmptyCollection #

You can also define a NotEmptyCollection<T> class in C#:

    public class NotEmptyCollection<T> : IReadOnlyCollection<T>
    {
        public NotEmptyCollection(T head, params T[] tail)
        {
            if (head == null)
                throw new ArgumentNullException(nameof(head));

            this.Head = head;
            this.Tail = tail;
        }

        public T Head { get; }

        public IReadOnlyCollection<T> Tail { get; }

        public int Count { get => this.Tail.Count + 1; }

        public IEnumerator<T> GetEnumerator()
        {
            yield return this.Head;
            foreach (var item in this.Tail)
                yield return item;
        }

        IEnumerator IEnumerable.GetEnumerator()
        {
            return this.GetEnumerator();
        }
    }

Because of the way the constructor is defined, you must supply at least one element in order to create an instance. You can provide any number of extra elements via the tail array, but one is minimum.

The Count property returns the number of elements in Tail, plus one, because there's always a Head value.

The GetEnumerator method returns an iterator that always starts with the Head value, and proceeds with all the Tail values, if there are any.

Finding the maximum of a non-empty collection #

As you've already learned, Math.Max is a semigroup. Although the .NET Base Class Library has built-in methods for this, you can use a generally applicable algorithm to find the maximum value in a non-empty list of integers.

private static int Accumulate(NotEmptyCollection<int> numbers) { var acc = numbers.Head; foreach (var n in numbers.Tail) acc = Math.Max(acc, n); return acc; }

Notice how similar this algorithm is to monoid accumulation! The only difference is that instead of using Identity to get started, you can use Head, and then loop over all elements of Tail.

You can use it like this:

var nec = new NotEmptyCollection<int>(42, 1337, 123); var max = Accumulate(nec);

Here, max is obviously 1337.

As usual, however, this is much nicer, and more succinct in Haskell:

    Prelude Data.Semigroup Data.List.NonEmpty> getMax $ sconcat $ fmap Max $ 42 :| [1337, 123]
    1337

That's hardly the idiomatic way of getting a maximum element in Haskell, but it does show how you can 'click together' concepts in order to achieve a goal.

Aggregate #

Perhaps the observant reader will, at this point, have recalled to him- or herself that the .NET Base Class Library already includes an Aggregate extension method, with an overload that takes a seed. In general, the simplest Aggregate method doesn't gracefully handle empty collections, but using the overload that takes a seed is more robust. You can rewrite the above Accumulate method using Aggregate:

private static int Accumulate(NotEmptyCollection<int> numbers) { return numbers.Tail.Aggregate( numbers.Head, (x, y) => Math.Max(x, y)); }

Notice how you can pass Head as the seed, and accumulate the Tail using that starting point. The Aggregate method is more like Haskell's sconcat for semigroups than mconcat for monoids.
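The same idea can be sketched for any semigroup, not only Math.Max. The helper below is hypothetical and takes the operation as a parameter. It assumes that `op` is associative, which the compiler can't verify:

```csharp
using System;
using System.Linq;

public static class SemigroupAccumulation
{
    // Hypothetical helper. 'op' is assumed to be associative.
    // Requiring 'head' guarantees at least one value, so no identity is needed.
    public static T Accumulate<T>(Func<T, T, T> op, T head, params T[] tail)
    {
        return tail.Aggregate(head, op);
    }
}
```

With this sketch, `SemigroupAccumulation.Accumulate((x, y) => Math.Max(x, y), 42, 1337, 123)` returns 1337, and calling it with only a head value simply returns that value.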

Summary #

A semigroup operation can be used to reduce values to a single value, just like a monoid can. The only difference is that a semigroup operation can't reduce an empty collection, whereas a monoid can.

Next: Quasigroups

Bounding box semigroup

A semigroup example for object-oriented programmers.

This article is part of a larger series about monoids, semigroups, and other group-like algebraic structures. In this article, you'll see a non-trivial example of a semigroup that's not a monoid. In short, a semigroup is an associative binary operation.

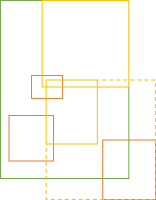

Shapes #

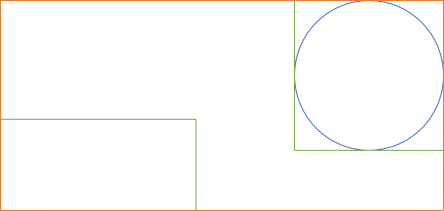

Imagine that you're developing a library of two-dimensional shapes, and that, for various reasons, each shape should have a bounding box. For example, here's a blue circle with a green bounding box:

The code for a circle shape could look like this:

    public class Circle : ICanHasBox
    {
        public int X { get; }
        public int Y { get; }
        public int Radius { get; }

        public Circle(int x, int y, int radius)
        {
            this.X = x;
            this.Y = y;
            this.Radius = Math.Abs(radius);
        }

        public BoundingBox BoundingBox
        {
            get
            {
                return new BoundingBox(
                    this.X - this.Radius,
                    this.Y - this.Radius,
                    this.Radius * 2,
                    this.Radius * 2);
            }
        }
    }

In addition to the Circle class, you could have other shapes, such as rectangles, triangles, or even irregular shapes, each of which have a bounding box.

Bounding box unions #

If you have two shapes, you also have two (green) bounding boxes, but perhaps you'd like to find the (orange) bounding box of the union of both shapes.

That's fairly easy to do:

    public BoundingBox Unite(BoundingBox other)
    {
        var newX = Math.Min(this.X, other.X);
        var newY = Math.Min(this.Y, other.Y);

        var newRightX = Math.Max(this.rightX, other.rightX);
        var newTopY = Math.Max(this.topY, other.topY);

        return new BoundingBox(
            newX,
            newY,
            newRightX - newX,
            newTopY - newY);
    }

The Unite method is an instance method on the BoundingBox class, so it's a binary operation. It's also associative, because for all x, y, and z, isAssociative is true:

var isAssociative = x.Unite(y).Unite(z) == x.Unite(y.Unite(z));

Since the operation is associative, it's at least a semigroup.
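To experiment with this, here is a self-contained sketch. `Box` is a simplified, hypothetical stand-in for the article's BoundingBox, written as a C# 10 record struct so that value equality comes for free. `RightX`, `TopY` and `IsAssociativeFor` are illustrative names:

```csharp
using System;

// Simplified, hypothetical stand-in for BoundingBox (requires C# 10).
public readonly record struct Box(int X, int Y, int Width, int Height)
{
    public int RightX => X + Width;
    public int TopY => Y + Height;

    // Smallest box that contains both this box and the other box.
    public Box Unite(Box other)
    {
        var newX = Math.Min(X, other.X);
        var newY = Math.Min(Y, other.Y);
        var newRightX = Math.Max(RightX, other.RightX);
        var newTopY = Math.Max(TopY, other.TopY);
        return new Box(newX, newY, newRightX - newX, newTopY - newY);
    }
}

public static class BoxDemo
{
    // Checks associativity for one particular triple of boxes.
    public static bool IsAssociativeFor(Box x, Box y, Box z) =>
        x.Unite(y).Unite(z) == x.Unite(y.Unite(z));
}
```

For example, uniting `new Box(0, 0, 2, 2)`, `new Box(5, 5, 1, 1)` and `new Box(-3, 1, 2, 2)` gives `new Box(-3, 0, 9, 6)` regardless of grouping. A check like this only covers the triples you feed it, so a property-based test remains the more convincing demonstration.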

Lack of identity #

Is Unite also a monoid? In order to be a monoid, a binary operation must not only be associative, but also have an identity element. In a previous article, you saw how the union of two convex hulls formed a monoid. A bounding box seems to be conceptually similar to a convex hull, so you'd be excused for thinking that our previous experience applies here as well.

It doesn't.

There's no identity bounding box. The difference between a convex hull and a bounding box is that it's possible to define an empty hull as an empty set of coordinates. A bounding box, on the other hand, always has a coordinate and a size.

    public struct BoundingBox
    {
        private readonly int rightX;
        private readonly int topY;

        public int X { get; }
        public int Y { get; }
        public int Width { get; }
        public int Height { get; }

        // More members, including Unite...
    }

An identity element, if one exists, is one where if you Unite it with another BoundingBox object, the return value will be the other object.

Consider, then, a (green) BoundingBox x. Any other BoundingBox inside of x, including x itself, is a candidate for an identity element:

In a real coordinate system, there are infinitely many candidates contained in x. As long as a candidate is wholly contained within x, then the union of the candidate and x will return x.

In the code example, however, coordinates are 32-bit integers, so for any bounding box x, there's only a finite number of candidates. Even for the smallest possible bounding box, though, the box itself is an identity candidate.

In order to be an identity element, however, the same element must behave as the identity element for all bounding boxes. It is, therefore, trivial to find a counter-example:

Just pick any other BoundingBox y outside of x. Every identity candidate must be within x, and therefore the union of the candidate and y cannot be y.

In code, you can demonstrate the lack of identity with an FsCheck-based test like this:

    [Property(QuietOnSuccess = true)]
    public Property UniteHasNoIdentity(PositiveInt w, PositiveInt h)
    {
        var genCandidate =
            from xi in Gen.Choose(1, w.Get)
            from yi in Gen.Choose(1, h.Get)
            from wi in Gen.Choose(1, w.Get - xi + 1)
            from hi in Gen.Choose(1, h.Get - yi + 1)
            select new BoundingBox(xi, yi, wi, hi);
        return Prop.ForAll(
            genCandidate.ToArbitrary(),
            identityCandidate =>
            {
                var x = new BoundingBox(1, 1, w.Get, h.Get);

                // Show that the candidate behaves like identity for x
                Assert.Equal(x, x.Unite(identityCandidate));
                Assert.Equal(x, identityCandidate.Unite(x));

                // Counter-example
                var y = new BoundingBox(0, 0, 1, 1);
                Assert.NotEqual(y, y.Unite(identityCandidate));
            });
    }

This example uses the FsCheck.Xunit glue library for xUnit.net. Notice that although FsCheck is written in F#, you can also use it from C#. This test passes.

It follows the above 'proof' by first generating an identity candidate for x. This is any BoundingBox contained within x, including x itself. In order to keep the code as simple as possible, x is always placed at the coordinate (1, 1).

The test proceeds to utilise two Guard Assertions to show that identityCandidate does, indeed, behave like an identity for x.

Finally, the test finds a trivial counter-example in y, and verifies that y.Unite(identityCandidate) is not equal to y. Therefore, identityCandidate is not the identity for y.

Unite is a semigroup, but not a monoid, because no identity element exists.

Summary #

This article demonstrates (via an example) that non-trivial semigroups exist in normal object-oriented programming.

Next: Semigroups accumulate.

Comments

Thanks

Yacoub, thank you for writing. The operation used here isn't the intersection, but rather the union of two bounding boxes; that's the reason I called the method Unite.

Hello Mark. I am aware of this, but maybe I did not explain myself correctly.

What I am trying to say is that when coming up with a counter-example, we should choose a BoundingBox y wholly outside of x (not just partially outside of x).

If we choose a BoundingBox y partially outside of x, then the intersection between x and y (the BoundingBox z = the area shared between x and y) is a valid identity element.

Yacoub, I think you're right; sorry about that!

Perhaps I should have written Just pick any other BoundingBox y partially or wholly outside of the candidate. Would that have been correct?

That would be correct. I am not sure though if this is the best way to explain it.

y being wholly outside of x seems better to me.

Yacoub, I've corrected the text in the article. Thank you for the feedback!

Semigroups

Introduction to semigroups for object-oriented programmers.

This article is part of a larger series about monoids, semigroups, and other group-like algebraic structures. In this article, you'll learn what a semigroup is, and what distinguishes it from a monoid.

Semigroups form a superset of monoids. They are associative binary operations. While monoids additionally require that an identity element exists, no such requirement exists for semigroups. In other words, all monoids are semigroups, but not all semigroups are monoids.

This article gives you an overview of semigroups, as well as a few small examples. A supplemental article will show a more elaborate example.

Minimum #

An operation that returns the smallest of two values forms a semigroup. In the .NET Base Class Library, such an operation is already defined for many numbers, for example 32-bit integers. Since associativity is a property of a semigroup, it makes sense to demonstrate it with a property-based test, here using FsCheck:

[Property(QuietOnSuccess = true)] public void IntMinimumIsAssociative(int x, int y, int z) { Assert.Equal( Math.Min(Math.Min(x, y), z), Math.Min(x, Math.Min(y, z))); }

This example uses the FsCheck.Xunit glue library for xUnit.net. Notice that although FsCheck is written in F#, you can also use it from C#. This test (as well as all other tests in this article) passes.

For mathematical integers, no identity element exists, so the minimum operation doesn't form a monoid. In practice, however, .NET 32-bit integers do have an identity element:

[Property(QuietOnSuccess = true)] public void MinimumIntHasIdentity(int x) { Assert.Equal(x, Math.Min(int.MaxValue, x)); Assert.Equal(x, Math.Min(x, int.MaxValue)); }

Int32.MaxValue is the maximum possible 32-bit integer value, so it effectively behaves as the identity for the 32-bit integer minimum operation. All 32-bit numbers are smaller than, or equal to, Int32.MaxValue. This effectively makes Math.Min(int, int) a monoid, but conceptually, it's not.

This may be clearer if, instead of 32-bit integers, you consider BigInteger, which is an arbitrarily large (or small) integer. The minimum operation is still associative:

[Property(QuietOnSuccess = true)] public void BigIntMinimumIsAssociative( BigInteger x, BigInteger y, BigInteger z) { Assert.Equal( BigInteger.Min(BigInteger.Min(x, y), z), BigInteger.Min(x, BigInteger.Min(y, z))); }

No identity element exists, however, because no matter which integer you have, you can always find one that's bigger: no maximum value exists. This makes BigInteger.Min a semigroup, but not a monoid.

Maximum #

Like minimum, the maximum operation forms a semigroup, here demonstrated by BigInteger.Max:

[Property(QuietOnSuccess = true)] public void BigIntMaximumIsAssociative( BigInteger x, BigInteger y, BigInteger z) { Assert.Equal( BigInteger.Max(BigInteger.Max(x, y), z), BigInteger.Max(x, BigInteger.Max(y, z))); }

Again, like minimum, no identity element exists because the set of integers is infinite; you can always find a bigger or smaller number.

Minimum and maximum operations aren't limited to primitive numbers. If values can be compared, you can always find the smallest or largest of two values, here demonstrated with DateTime values:

[Property(QuietOnSuccess = true)] public void DateTimeMaximumIsAssociative( DateTime x, DateTime y, DateTime z) { Func<DateTime, DateTime, DateTime> dtMax = (dt1, dt2) => dt1 > dt2 ? dt1 : dt2; Assert.Equal( dtMax(dtMax(x, y), z), dtMax(x, dtMax(y, z))); }

As was the case with 32-bit integers, however, the presence of DateTime.MinValue effectively makes dtMax a monoid, but conceptually, no identity element exists, because dates are infinite.

First #

Another binary operation simply returns the first of two values:

public static T First<T>(T x, T y) { return x; }

This may seem pointless, but First is associative:

[Property(QuietOnSuccess = true)] public void FirstIsAssociative(Guid x, Guid y, Guid z) { Assert.Equal( First(First(x, y), z), First(x, First(y, z))); }

On the other hand, there's no identity element, because there's no left identity. The left identity is an element e such that First(e, x) == x for any x. Clearly, for any generic type T, no such element exists because First(e, x) will only return x when x is equal to e. (There are, however, degenerate types for which an identity exists for First. Can you find an example?)

Last #

Like First, a binary operation that returns the last (second) of two values also forms a semigroup:

public static T Last<T>(T x, T y) { return y; }

Similar to First, Last is associative:

[Property(QuietOnSuccess = true)] public void LastIsAssociative(String x, String y, String z) { Assert.Equal( Last(Last(x, y), z), Last(x, Last(y, z))); }

As is also the case for First, no identity exists for Last, but here the problem is the lack of a right identity. The right identity is an element e for which Last(x, e) == x for all x. Clearly, Last(x, e) can only be equal to x if e is equal to x.

Aggregation #

Perhaps you think that operations like First and Last seem useless in practice, but when you have a semigroup, you can reduce any non-empty sequence to a single value. In C#, you can use the Aggregate LINQ method for this. For example

var a = new[] { 1, 0, 1337, -10, 42 }.Aggregate(Math.Min);

returns -10, while

var a = new[] { 1, 0, 1337, -10, 42 }.Aggregate(Math.Max);

returns 1337. Notice that the input sequence is the same in both examples, but the semigroup differs. Likewise, you can use Aggregate with First:

var a = new[] { 1, 0, 1337, -10, 42 }.Aggregate(First);

Here, a is 1, while

var a = new[] { 1, 0, 1337, -10, 42 }.Aggregate(Last);

returns 42.

LINQ has specialised methods like Min, Last, and so on, but from the perspective of behaviour, Aggregate would have been enough. While there may be performance reasons why some of those specialised methods exist, you can think of all of them as being based on the same abstraction: that of a semigroup.

Aggregate, and many of the specialised methods, throw an exception if the input sequence is empty. This is because there's no identity element in a semigroup. The method doesn't know how to create a value of the type T from an empty list.

If, on the other hand, you have a monoid, you can return the identity element in case of an empty sequence. Haskell explicitly makes this distinction with sconcat and mconcat, but I'm not going to go into that now.
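The difference is easy to observe with LINQ itself. In the following sketch (the class and method names are hypothetical), the seedless `Aggregate` overload has nothing to return for an empty sequence and throws `InvalidOperationException`, whereas the seeded overload can fall back on the identity of addition:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class EmptyAggregation
{
    // Semigroup-style reduction: no seed, so an empty sequence is an error.
    public static bool MinThrowsOnEmpty()
    {
        try
        {
            Enumerable.Empty<int>().Aggregate((x, y) => Math.Min(x, y));
            return false;
        }
        catch (InvalidOperationException)
        {
            return true;
        }
    }

    // Monoid-style reduction: addition has the identity 0,
    // which becomes the result when the sequence is empty.
    public static int Sum(IEnumerable<int> numbers) =>
        numbers.Aggregate(0, (x, y) => x + y);
}
```

Here `MinThrowsOnEmpty()` returns `true`, and `Sum(Enumerable.Empty<int>())` returns 0.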

Summary #

Semigroups are associative binary operations. In the previous article series about monoids you saw plenty of examples, and since all monoids are semigroups, you've already seen more than one semigroup example. In this article, however, you saw four examples of semigroups that are not monoids.

All four examples in this article are simple, and may not seem like 'real-world' examples. In the next article, then, you'll get a more realistic example of a semigroup that's not a monoid.

Next: Bounding box semigroup.

Monoids accumulate

You can accumulate an arbitrary number of monoidal values to a single value. An article for object-oriented programmers.

This article is part of a series about monoids. In short, a monoid is an associative binary operation with a neutral element (also known as identity).

Recall that a binary operation is an operation involving two arguments of the same type, and returning a value of that type.

public static Foo Op(Foo x, Foo y)

Notice that such an operation reduces two Foo values to a single Foo value.

Accumulation #

Since you have an operation that can reduce two values to a single value, you can use that single value as the input for yet another binary operation. This enables you to accumulate, or aggregate, an arbitrary number of values.

Consider the instance variation of the above Op method:

public Foo Op(Foo foo)

This is another representation of the operation, but instead of being a static method, it's an instance method on the Foo class.

When Op is a monoid, you can easily write a function that accumulates an arbitrary number of Foo values:

public static Foo Accumulate(IReadOnlyCollection<Foo> foos) { var acc = Identity; foreach (var f in foos) acc = acc.Op(f); return acc; }

You start with the Identity value, which also becomes the return value if foos is empty. Then you simply loop over each value in foos and use Op with the value accumulated so far (acc) and the current element in the sequence.

Once you're done looping, you return the accumulator.

This implementation isn't always guaranteed to be the most efficient, but you can always write accumulation like that. Sometimes, a more efficient algorithm exists, but that doesn't change the overall result that you can always reduce an arbitrary number of values whenever a monoid exists for those values.

Generalisation #

You can do this with any monoid. In Haskell, this function is called mconcat, and it has this type:

mconcat :: Monoid a => [a] -> a

The way you can read this is that for any monoid a, mconcat is a function that takes a linked list of a values as input, and returns a single a value as output.

This function seems both more general, and more constrained, than the above C# example. It's more general than the C# example because it works on any monoid, instead of just Foo.Op. On the other hand, it seems more limited because it works only on Haskell lists. The C# example, in contrast, can accumulate any IReadOnlyCollection<Foo>. Could you somehow combine those two generalisations?

Nothing stops you from doing that, but it's already in Haskell's Data.Foldable module:

fold :: (Monoid m, Foldable t) => t m -> m

The way to read this is that there's a function called fold, and it accumulates any monoid m contained in any 'foldable' data container t. That a data container is 'foldable' means that there's a way to somehow fold, or aggregate, the element(s) in the container into a value.

Linked lists, arrays, and other types of sequences are foldable, as are Maybe and trees.

In fact, there's little difference between Haskell's Foldable type class and .NET's IEnumerable<T>, but as the names suggest, their foci are different. In Haskell, the focus is being able to fold, accumulate, or aggregate a data structure, whereas on .NET the focus is on being able to enumerate the values inside the data structure. Ultimately, though, both abstractions afford the same capabilities.

In .NET, the focal abstraction is the Iterator pattern, which enables you to enumerate the values in the data container. On top of that abstraction, you can derive other behaviour, such as the ability to Aggregate data.

In Haskell, the focus is on the ability to fold, but from that central abstraction follows the ability to convert the data container into a linked list, which you can then enumerate.
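A rough C# counterpart to fold could look like the following sketch. It is an assumption-laden illustration rather than an existing library method: C# has no Monoid type class, so the hypothetical `Accumulate` helper receives the identity and the operation as explicit arguments, and it trusts the caller that the two actually form a monoid:

```csharp
using System;
using System.Collections.Generic;

public static class MonoidAccumulation
{
    // Hypothetical helper: 'identity' and 'op' are assumed to form a monoid.
    // Works for any element type T held in any IEnumerable<T>.
    public static T Accumulate<T>(
        T identity,
        Func<T, T, T> op,
        IEnumerable<T> values)
    {
        var acc = identity;
        foreach (var v in values)
            acc = op(acc, v);
        return acc;
    }
}
```

For instance, `MonoidAccumulation.Accumulate(0, (x, y) => x + y, new[] { 1, 2, 3 })` returns 6, and accumulating an empty sequence returns the identity that was passed in.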

Summary #

You can accumulate an arbitrary number of monoidal values as long as they're held in a container that enables you to 'fold' it. This includes all sorts of lists and arrays.

This article concludes the article series about monoids. In the next series of articles, you'll learn about a related category of operations.

Next: Semigroups.

Comments

@ploeh as always I loved your blog post but I don't 100% agree on your comparison of the iterator pattern with Foldable - the iterator pattern allows usually sideeffects and you have Traversable for that - you might also like this: http://www.cs.ox.ac.uk/jeremy.gibbons/publications/iterator.pdf …

(Comment submitted via Twitter)

Endomorphism monoid

A method that returns the same type of output as its input forms a monoid. An article for object-oriented programmers.

This article is part of a series about monoids. In short, a monoid is an associative binary operation with a neutral element (also known as identity). Methods that return the same type of value as their input form monoids over composition. The formal term for such an operation is an endomorphism.

Scheduling example #

Imagine that you have to develop some functionality for scheduling events in the future. As a concrete example, I recently wrote about adjusting dates while taking bank holidays into account. For instance, if you want to find the latest bank day before a given date, you could call the AdjustToLatestPrecedingDutchBankDay method. If you give it a normal bank day (say, a Thursday), it'll simply return the input date, but if you give it a Sunday, it'll return the preceding Friday. That is, unless that particular Friday is a bank holiday, in which case it'll return the Thursday before - as long as that's not also a bank holiday, and so on.

In that previous article, the AdjustToLatestPrecedingDutchBankDay method is an extension method, but you can also model it as an instance method, like this:

public DateTimeOffset Adjust(DateTimeOffset value) { var candidate = value; while (!(IsDutchBankDay(candidate.DateTime))) candidate = candidate.AddDays(-1); return candidate; }

This method would be part of a class that implements an interface:

public interface IDateTimeOffsetAdjustment { DateTimeOffset Adjust(DateTimeOffset value); }

You can make other implementations of this interface. Here's one that adjusts a date and time to business hours:

    public class BusinessHoursAdjustment : IDateTimeOffsetAdjustment
    {
        private readonly static TimeSpan startOfBusiness =
            TimeSpan.FromHours(9);
        private readonly static TimeSpan endOfBusiness =
            TimeSpan.FromHours(17);

        public DateTimeOffset Adjust(DateTimeOffset value)
        {
            // Warning: May not handle DST changes appropriately!
            // It's only example code...
            if (value.TimeOfDay < startOfBusiness)
                return value - value.TimeOfDay + startOfBusiness;
            if (endOfBusiness < value.TimeOfDay)
                return (value - value.TimeOfDay + startOfBusiness).AddDays(1);
            return value;
        }
    }

To keep the example simple, business hours are hard-coded to 9-17.

You could also adapt conversion to UTC:

public class UtcAdjustment : IDateTimeOffsetAdjustment { public DateTimeOffset Adjust(DateTimeOffset value) { return value.ToUniversalTime(); } }

Or add a month:

public class NextMonthAdjustment : IDateTimeOffsetAdjustment { public DateTimeOffset Adjust(DateTimeOffset value) { return value.AddMonths(1); } }

Notice that the Adjust method returns a value of the same type as its input. So far when discussing monoids, we've been looking at binary operations, but Adjust is a unary operation.

An operation that returns the same type as its input is called an endomorphism. Those form monoids.

Composing adjustments #

It's easy to connect two adjustments. Perhaps, for example, you'd like to first use BusinessHoursAdjustment, followed by the bank day adjustment. This will adjust an original input date and time to a date and time that falls on a bank day, within business hours.

You can do this in a general-purpose, reusable way:

    public static IDateTimeOffsetAdjustment Append(
        this IDateTimeOffsetAdjustment x,
        IDateTimeOffsetAdjustment y)
    {
        return new AppendedAdjustment(x, y);
    }

    private class AppendedAdjustment : IDateTimeOffsetAdjustment
    {
        private readonly IDateTimeOffsetAdjustment x;
        private readonly IDateTimeOffsetAdjustment y;

        public AppendedAdjustment(
            IDateTimeOffsetAdjustment x,
            IDateTimeOffsetAdjustment y)
        {
            this.x = x;
            this.y = y;
        }

        public DateTimeOffset Adjust(DateTimeOffset value)
        {
            return y.Adjust(x.Adjust(value));
        }
    }

The Append method takes two IDateTimeOffsetAdjustment values and combines them by wrapping them in a private implementation of IDateTimeOffsetAdjustment. When AppendedAdjustment.Adjust is called, it first calls Adjust on x, and then calls Adjust on y with the return value from the first call.

In order to keep the example simple, I omitted null guards, but apart from that, Append should work with any two implementations of IDateTimeOffsetAdjustment. In other words, it obeys the Liskov Substitution Principle.

Associativity #

The Append method is a binary operation. It takes two IDateTimeOffsetAdjustment values and returns an IDateTimeOffsetAdjustment. It's also associative, as a test like this demonstrates:

private void AppendIsAssociative( IDateTimeOffsetAdjustment x, IDateTimeOffsetAdjustment y, IDateTimeOffsetAdjustment z) { Assert.Equal( x.Append(y).Append(z), x.Append(y.Append(z))); }

As usual in this article series, such a test doesn't prove that Append is associative for all values of IDateTimeOffsetAdjustment, but if you run it as a property-based test, it demonstrates that it's quite likely.

Identity #

In true monoidal fashion, IDateTimeOffsetAdjustment also has an identity element:

    public class IdentityDateTimeOffsetAdjustment : IDateTimeOffsetAdjustment
    {
        public DateTimeOffset Adjust(DateTimeOffset value)
        {
            return value;
        }
    }

This implementation simply returns the input value without modifying it. That's a neutral operation, as a test like this demonstrates:

    private void AppendHasIdentity(IDateTimeOffsetAdjustment x)
    {
        Assert.Equal(
            x.Append(new IdentityDateTimeOffsetAdjustment()), x);
        Assert.Equal(
            new IdentityDateTimeOffsetAdjustment().Append(x), x);
    }

These two assertions verify that left and right identity holds.

Since Append is associative and has identity, it's a monoid.

This holds generally for any method (or function) that returns the same type as it takes as input, i.e. T SomeOperation(T x). This matches the built-in library in Haskell, where Endo is a Monoid.
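As a minimal sketch of that general rule, assuming you represent T SomeOperation(T x) as a Func<T, T>, the composition and its identity could be written generically. The Endo class and its member names are made up for this illustration; they aren't part of the article's code base or of the .NET base class library:

```csharp
using System;

// Hypothetical helper class, invented for this sketch.
public static class Endo
{
    // Compose two endomorphisms: run f first, then pass its result to g.
    public static Func<T, T> Append<T>(this Func<T, T> f, Func<T, T> g)
    {
        return x => g(f(x));
    }

    // The identity element: a function that returns its input unchanged.
    public static Func<T, T> Identity<T>()
    {
        return x => x;
    }
}
```

With Func<int, int> addOne = x => x + 1 and Func<int, int> timesSix = x => x * 6, the expression addOne.Append(timesSix)(1) first adds one and then multiplies by six, while appending Endo.Identity<int>() on either side leaves addOne's behaviour unchanged.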

Conclusion #

A method that returns a value of the same type as its (singular) input argument is called an endomorphism. You can compose two such unary operations together in order to get a composed operation. You simply take the output of the first method and use it as the input argument for the second method. That composition is a monoid. Endomorphisms form monoids.

Next: Maybe monoids.

Comments

Hi, Mark!

I'm really enjoying your series on category theory. I think you're doing a great job in making such abstract concepts accessible to us programmers. However, I found this particular episode puzzling. You claim that any composition of endomorphisms is a monoid, and you also claim to have tested that your Append method is associative. However, it is not hard to come up with a counter-example:

    [Fact]
    public void CounterExample()
    {
        IDateTimeOffsetAdjustment weekend = new WeekendAdjustment();
        IDateTimeOffsetAdjustment nextMonth = new NextMonthAdjustment();

        var a = new AppendedAdjustment(weekend, nextMonth);
        var b = new AppendedAdjustment(nextMonth, weekend);

        var startDate = new DateTimeOffset(2019, 1, 5, 0, 0, 0, TimeSpan.Zero);
        Assert.Equal(a.Adjust(startDate), b.Adjust(startDate));
    }

Here, WeekendAdjustment is just a simplified DutchBankDayAdjustment.

It would also be interesting to see how you can do property based testing on this, i.e. how to automatically generate meaningful instances of IDateTimeOffsetAdjustment. When I try to run your test, I get: "IDateTimeOffsetAdjustment is not handled automatically by FsCheck. Consider using another type or writing and registering a generator for it."

Tor, thank you for writing, and for your kind words. I suppose that the CounterExample test fails when one executes it; you don't explicitly write that, but that's what I expect would happen.

The Append operation is, indeed, not commutative. This is, however, not a requirement for monoids, or even for groups. Some monoids, such as addition and multiplication, are also commutative, while others, like list concatenation or, here, the endomorphism monoid, aren't.
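To make the distinction concrete, here's a small sketch with integer endomorphisms instead of date adjustments. The OrderMatters class and the names Then, AddOne, TimesSix, and MinusThree are invented for this illustration only:

```csharp
using System;

// Hypothetical example class, invented for this sketch.
public static class OrderMatters
{
    // Compose two functions: run first, then feed its result to second.
    public static Func<int, int> Then(Func<int, int> first, Func<int, int> second)
    {
        return x => second(first(x));
    }

    public static readonly Func<int, int> AddOne = x => x + 1;
    public static readonly Func<int, int> TimesSix = x => x * 6;
    public static readonly Func<int, int> MinusThree = x => x - 3;
}
```

Swapping the operands changes the result: Then(AddOne, TimesSix)(1) is 12, whereas Then(TimesSix, AddOne)(1) is 7. Regrouping without reordering doesn't: both Then(Then(AddOne, TimesSix), MinusThree)(1) and Then(AddOne, Then(TimesSix, MinusThree))(1) are 9.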

When it comes to the FsCheck properties, I admit that I cheated slightly with the code listing in the article. I did this because the properties are a bit more complicated than what I show, and I was concerned that the extra infrastructure surrounding those tests would detract from the overall message.

The associativity property in its entirety looks like this:

    [Property(QuietOnSuccess = true)]
    public Property AppendIsAssociative()
    {
        return Prop.ForAll(
            GenerateAdjustment().Three().ToArbitrary(),
            t => AppendIsAssociative(t.Item1, t.Item2, t.Item3));
    }

    private void AppendIsAssociative(
        IDateTimeOffsetAdjustment x,
        IDateTimeOffsetAdjustment y,
        IDateTimeOffsetAdjustment z)
    {
        Assert.Equal(
            x.Append(y).Append(z),
            x.Append(y.Append(z)),
            new DateTimeOffsetAdjustmentComparer());
    }

Where GenerateAdjustment is defined like this:

    private static Gen<IDateTimeOffsetAdjustment> GenerateAdjustment()
    {
        return Gen.Elements<IDateTimeOffsetAdjustment>(
            new IdentityDateTimeOffsetAdjustment(),
            new BusinessHoursAdjustment(),
            new DutchBankDayAdjustment(),
            new NextMonthAdjustment(),
            new UtcAdjustment());
    }

Not only did I omit all that extra scaffolding, I also pretended that IDateTimeOffsetAdjustment objects could be compared using their default implementations of Equals, which they meaningfully can't. Notice that the full property listed here also asserts using a custom equality comparer:

    private class DateTimeOffsetAdjustmentComparer :
        IEqualityComparer<IDateTimeOffsetAdjustment>
    {
        public bool Equals(IDateTimeOffsetAdjustment x, IDateTimeOffsetAdjustment y)
        {
            var rnd = new System.Random();
            var dt = new DateTimeOffset(rnd.Next(), TimeSpan.Zero);
            return x.Adjust(dt) == y.Adjust(dt);
        }

        public int GetHashCode(IDateTimeOffsetAdjustment obj)
        {
            return 0;
        }
    }

The custom comparer's Equals method creates a random number of ticks and uses it to create a DateTimeOffset value. It then calls Adjust on both objects, which are considered equal if they produce the same result.

The test for identity is defined in a similar fashion; it also uses GenerateAdjustment and DateTimeOffsetAdjustmentComparer.

Thank you for such a prompt and thorough reply. You are of course correct, I have been confusing associativity with commutativity. I didn't run into the same mistake during the list concatenation article, though, maybe because list concatenation more obviously is associative and not commutative. In the current article, however, I intuitively felt that the operations needed to be commutative, but your reply clears that up.

It is also helpful to see the extra scaffolding around your property based test. The article itself seemed to imply that instances of IDateTimeOffsetAdjustment could be automatically generated. Your approach to set up such a test will come in handy now that I'm convinced that I should let more of my tests be property based, even in C#!

Tor, I made the same mistake several times when I started researching all of this. I think that there's something intuitive and fundamental about commutativity, so at a superficial glance, it seems odd that we can do without it, while at the same time requiring a less intuitive property like associativity.

When it comes to computation, though, I think that it's exactly the nature of associativity that enables us to decompose big problems into several small problems. The order of how you deal with those small problems doesn't matter, as long as you apply the intermediate results in the correct order.
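As a small illustration of that point, consider string concatenation, which is associative but not commutative. The sketch below uses a made-up Chunking class: it splits an array in two, reduces each half separately, and then combines the two intermediate results in their original order:

```csharp
using System;
using System.Linq;

// Hypothetical example class, invented for this sketch.
public static class Chunking
{
    // Reduce all words in one pass, starting from the identity (the empty string).
    public static string ConcatAll(string[] words)
    {
        return words.Aggregate("", (acc, w) => acc + w);
    }

    // Reduce each half on its own, then combine the two partial results.
    // Associativity guarantees the same answer as ConcatAll,
    // provided that the left result stays to the left of the right result.
    public static string ConcatInTwoHalves(string[] words)
    {
        var mid = words.Length / 2;
        var left = ConcatAll(words.Take(mid).ToArray());
        var right = ConcatAll(words.Skip(mid).ToArray());
        return left + right;
    }
}
```

For the input { "a", "b", "c", "d", "e" }, both methods return "abcde". The two halves could have been computed independently of each other, but had the last line been right + left, the result would have been "cdeab".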

Dear Mark!

These additional explanations and contextual information in the comment sections deserve a place in the original text. To keep the text uncluttered this site uses clickable popups: . If you click on some of the numbers, you'll see what I mean. You might consider adding this feature to the text.

Best regards, Norbert.

Dear Mark,

Although late to the game I really enjoy and appreciate your series here explaining monoids. Your examples are quite helpful in getting to a deeper understanding.

This one however has me puzzled.

As I see it, it's fairly easy to produce a counterexample to your claim (if I understand it correctly) that composition of functions with similar domain and codomain forms a monoid. To make it simple I'll use the domain of integers and define a function f(x) = x + 1 and g(x) = x * 6. They both return the same type as they take as input and yet, the composition is clearly not associative.

The possible explanation I've been able to dig out, is if we assume the set of functions we talk about and the composition operator forms a category. However in this case, it's part of the definition of a category that an identity element exist and composition is associative. But then the claim seems circular.

Am I missing anything here?

Michael, thank you for writing. Can you share a counterexample?

I've tried some casual examples with your f and g functions, but associativity seems to hold:

    Prelude> (f . (g . f)) (-1)
    1
    Prelude> ((f . g) . f) (-1)
    1
    Prelude> (f . (g . f)) 0
    7
    Prelude> ((f . g) . f) 0
    7
    Prelude> (f . (g . f)) 1
    13
    Prelude> ((f . g) . f) 1
    13
    Prelude> (f . (g . f)) 2
    19
    Prelude> ((f . g) . f) 2
    19

Casual experiments with f . f . g also indicate associativity.

I've also tried with QuickCheck:

    Prelude> import Test.QuickCheck
    Prelude Test.QuickCheck> f x = x + 1
    Prelude Test.QuickCheck> g x = x * 6
    Prelude Test.QuickCheck> :{
    Prelude Test.QuickCheck| endoIsAssociative :: Int -> Bool
    Prelude Test.QuickCheck| endoIsAssociative x = ((f . g) . f) x == (f . (g . f)) x
    Prelude Test.QuickCheck| :}
    Prelude Test.QuickCheck> quickCheck $ withMaxSuccess 100000 endoIsAssociative
    +++ OK, passed 100000 tests.

The composition f . g . f doesn't seem to easily produce any counterexamples. I haven't, however, tried all possible permutations of f and g, so that's no proof.

If I recall correctly, however, associativity of endomorphisms is a property that one can prove with equational reasoning, so I'd be surprised if a counterexample exists.

Mark, thanks for a quick and quite elaborate reply.

I'm slightly embarrassed. First of all, my example attempting to disprove associativity should of course contain 3 functions, not 2 as I did. And second of all I could have / should have done exactly what you did and coded up an example - and then seen the error of my assumptions without taking your time. I apologise for the inconvenience. Lesson learned!

I do appreciate your answer - and clearly I was wrong.

Something still bothers my intuition around this. I trust your statement that it actually can be proven, so clearly my intuition has got it wrong. I'll continue to work on understanding this "associativity of endomorphisms", so I can develop a stronger intuition around monoids as well as associativity.

Thank you for your help :)

Michael, being wrong is rarely a pleasant experience, but it might be a symptom that you're learning. Admitting one's mistake in public is, I believe, a sign of maturity and personal integrity. Thank you for doing that.

I don't know if I can help your intuition along, but the proof isn't that hard. I originally learned equational reasoning from this article by Bartosz Milewski, so I'm using that notation. The goal is to prove that:

(f . g) . h == f . (g . h)

for any three (pure) functions f, g, and h, where the binary operation in question is the composition operator (.) given as:

(f . g) x = f (g x)

This is Haskell syntax, where a function call is simply written as f x or g x, meaning that you call the function f or g with the value x. Brackets in Haskell work the same way as brackets in F#, which again work like in mathematics. Thus, (f . g) x means 'the composed function (f . g) called with the argument x'.

The proof might go like this:

    (f . g) . h
    = { eta expansion }
    (\x -> (f . g) x) . h
    = { definition of (.) }
    (\x -> f (g x)) . h
    = { eta expansion }
    \y -> ((\x -> f (g x)) . h) y
    = { definition of (.) }
    \y -> (\x -> f (g x)) (h y)
    = { substitution (x = (h y)) }
    \y -> f (g (h y))
    = { definition of (.) }
    \y -> f ((g . h) y)
    = { definition of (.) }
    \y -> (f . (g . h)) y
    = { eta reduction }
    f . (g . h)

Writing out a proof like this isn't something I do every day, so I may have made a mistake or two. Also, I can't shake the feeling that a simpler proof is available, but on the other hand, I found it a good exercise.

Mark, thank you for the kind words!

And thank you for taking the time to write down a proof. Yes, the proof is indeed helpful. Seeing it made it clear that all function composition is associative. I think your comment about this being about pure functions (which really is quite obvious) also helped. It triggered one of the original insights that has moved me towards functional programming, which is Referential Transparency. Suddenly it was blindingly obvious that functional composition of pure functions really is just term substitution - so of course it's associative.

It feels good to have my intuition up to speed :)

This has been quite a fun and valuable exchange. Thank you for taking your time. I've learned something new and that's always a source of joy.

Onwards and upwards!

Function monoids

Methods are monoids if they return monoids. An article for object-oriented programmers.

This article is part of a series about monoids. In short, a monoid is an associative binary operation with a neutral element (also known as identity).

Functions #

In statically typed C-languages, like C# or Java, methods are typically declared like this:

public Foo Bar(Baz baz, Qux qux)

As you'll see in another article, however, you can refactor any method to a method that takes a single argument as input, and returns a single (possibly complex) value as output. In abstract form, we can write it like this:

public Out1 Op1(In1 arg)

Another way to abstract a method is by using generics:

public T Op1<T1, T>(T1 x)

Another article demonstrates how this is similar to a generic function. In F#, for instance, the type of the function would be written as 'a -> 'b, whereas in Haskell it'd be written a -> b. The way to read this is that the function takes a value of the generic type T1/'a/a as input, and returns a value of the generic type T/'b/b as output. For the rest of this article, I'll favour the Haskell syntax, since it has minimal noise.

To be clear, however, although I favour the Haskell syntax because of its succinctness, I don't mean to imply that the functions that I discuss are exclusively pure - think of an F# function 'a -> 'b which may or may not be pure.

Binary combination of functions #

A function a -> b is a monoid if b is a monoid. This means that you can combine two functions with the same type. In an object-oriented context, it means that you can combine two methods with the same signature into one method as long as the return type forms a monoid.

Consider the following (facetious) C# example. You're trying to establish how secure a GUID is. Primes are important in cryptography, so the more primes a GUID contains, the better... right?

    private const string primes = "2357bd";

    public static int CountPrimes(Guid g)
    {
        return g.ToString("N").Count(primes.Contains);
    }

The CountPrimes method counts the number of prime digits in a given GUID. So far so good, but you also think that hexadecimal notation is more exotic than decimal notation, so surely, the digits A-F are somehow more secure, being more unfamiliar. Thus, you have a method to count those as well:

    private const string letters = "abcdef";

    public static int CountLetters(Guid g)
    {
        return g.ToString("N").Count(letters.Contains);
    }

Good, but both of these numbers are, clearly, excellent indicators of how secure a GUID is. Which one should you choose? Both of them, of course!

Can you combine CountPrimes and CountLetters? Yes, you can, because, while GUIDs don't form a monoid, the return type int forms a monoid over addition. This enables you to write a Combine method:

    public static Func<Guid, int> Combine(
        Func<Guid, int> f,
        Func<Guid, int> g)
    {
        return x => f(x) + g(x);
    }

Notice that Combine takes two Func<Guid, int> values and returns a new Func<Guid, int> value. It's a binary operation. Here's an example of how to use it:

    var calculateSecurity = Combine(CountPrimes, CountLetters);
    var actual = calculateSecurity(new Guid("10763FF5-E3C8-46D1-A70F-6C1D9EDA8120"));
    Assert.Equal(21, actual);

Now you have an excellent measure of the security strength of GUIDs! 21 isn't that good, though, is it?

Antics aside, Combine is a binary function. Is it a monoid?

Monoid laws #

In order to be a monoid, Combine must be associative, and it is, as the following FsCheck property demonstrates:

    [Property(QuietOnSuccess = true)]
    public void CombineIsAssociative(
        Func<Guid, int> f,
        Func<Guid, int> g,
        Func<Guid, int> h,
        Guid guid)
    {
        Assert.Equal(
            Combine(Combine(f, g), h)(guid),
            Combine(f, Combine(g, h))(guid));
    }

In this property-based test, FsCheck generates three functions with the same signature. Since functions don't have structural equality, the easiest way to compare them is to call them and see whether they return the same result. This explains why the assertion invokes both associative combinations with guid. The test passes.

In order to be a monoid, Combine must also have an identity element. It does:

public static Func<Guid, int> FuncIdentity = _ => 0;

This is simply a function that ignores its input and always returns 0, which is the identity value for addition. The following test demonstrates that it behaves as expected:

    [Property(QuietOnSuccess = true)]
    public void CombineHasIdentity(Func<Guid, int> f, Guid guid)
    {
        Assert.Equal(f(guid), Combine(FuncIdentity, f)(guid));
        Assert.Equal(f(guid), Combine(f, FuncIdentity)(guid));
    }

As was the case with CombineIsAssociative, in order to assert that the combined functions behave correctly, you have to call them. This, again, explains why the assertion invokes the combined functions with guid. This test passes as well.

Combine is a monoid.

Generalisation #

While the above C# code is only an example, the general rule is that any function that returns a monoid is itself a monoid. In Haskell, this rule is articulated in the standard library:

instance Monoid b => Monoid (a -> b)

This means that for any monoid b, a function a -> b is also (automatically) a monoid.
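C# can't express that rule as directly as Haskell can, but here's a rough sketch of the same idea, assuming that the monoid for the return type is passed in explicitly as a binary operation and an identity value. The FuncMonoid class and its members are invented for this illustration:

```csharp
using System;

// Hypothetical helper class, invented for this sketch.
public static class FuncMonoid
{
    // Combine two functions with the same signature by calling both with the
    // same input and combining their results with the supplied operation.
    public static Func<A, B> Combine<A, B>(
        Func<B, B, B> append,
        Func<A, B> f,
        Func<A, B> g)
    {
        return x => append(f(x), g(x));
    }

    // The identity function ignores its input and returns the identity value
    // of the return type's monoid (e.g. 0 for addition, "" for concatenation).
    public static Func<A, B> Identity<A, B>(B identity)
    {
        return _ => identity;
    }
}
```

The Guid example above is the special case where A is Guid, B is int, append is addition, and the identity value is 0. The same helper works for other combinations. For instance, FuncMonoid.Combine<string, int>((x, y) => x + y, s => s.Length, s => s.Split(' ').Length) returns a function that adds the length of a string to its number of space-separated words.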

Summary #

A function or method with a return type that forms a monoid is itself a monoid.

Next: Endomorphism monoid.

Tuple monoids

Tuples of monoids form monoids. Data objects of monoids also form monoids. An article for object-oriented programmers.

This article is part of a series about monoids. In short, a monoid is an associative binary operation with a neutral element (also known as identity). This article starts off with some easy-to-understand, but abstract results. Once these are established, however, you'll see how to use them in a relatable example, so keep reading!

Tuples #

A tuple is a group of elements. In statically typed programming languages, each element has a type, and the types don't have to be the same. As an example, in C#, you can create a tuple like this:

Tuple<int, string> pair = Tuple.Create(42, "Foo");

This creates a tuple where the first element must be an int and the second element a string. In the example, I've explicitly declared the type instead of using the var keyword, but this is only to make the type clearer (since you don't have an IDE in which to read the code).

The pair tuple is a two-tuple, which means that it must have exactly two elements, of the types given, but you can also create larger tuples:

Tuple<string, bool, int> triple = Tuple.Create("Bar", false, 42);

This is a three-tuple, but conceptually, tuples can have any size.

Pairs of monoids #

A pair (a two-tuple) forms a monoid if both elements form a monoid. Haskell formalises this by stating:

instance (Monoid a, Monoid b) => Monoid (a, b)

The way to read this is that for any monoid a and any monoid b, the pair (a, b) is also a monoid.

Perhaps this is easiest to understand with a C# example. Consider a tuple of the type Tuple<int, string>. Integers form monoids under both addition and multiplication, and strings are monoids under concatenation. Thus, you can make Tuple<int, string> form a monoid as well. For instance, use the multiplication monoid to define this binary operation:

    public static Tuple<int, string> CombinePair(
        Tuple<int, string> x,
        Tuple<int, string> y)
    {
        return Tuple.Create(x.Item1 * y.Item1, x.Item2 + y.Item2);
    }

For this particular example, I've chosen multiplication as the binary operation for int, and the string concatenation operator + for string. The point is that since both elements are monoids, you can use their respective binary operations to return a new tuple with the combined values.

This operation is associative, as the following FsCheck property demonstrates:

    [Property(QuietOnSuccess = true)]
    public void CombinePairIsAssociative(
        Tuple<int, string> x,
        Tuple<int, string> y,
        Tuple<int, string> z)
    {
        Assert.Equal(
            CombinePair(CombinePair(x, y), z),
            CombinePair(x, CombinePair(y, z)));
    }

This property passes for all the x, y, and z values that FsCheck generates.

The CombinePair operation has identity as well:

public static Tuple<int, string> PairIdentity = Tuple.Create(1, "");

Again, you can use the identity value for each of the elements in the tuple: 1 for the multiplication monoid, and "" for string concatenation.

This value behaves as the identity for CombinePair, at least for all non-null string values:

    [Property(QuietOnSuccess = true)]
    public void CombinePairHasIdentity(Tuple<int, NonNull<string>> seed)
    {
        var x = Tuple.Create(seed.Item1, seed.Item2.Get);

        Assert.Equal(CombinePair(PairIdentity, x), CombinePair(x, PairIdentity));
        Assert.Equal(x, CombinePair(x, PairIdentity));
    }

Again, this test passes for all seed values generated by FsCheck.

The C# code here is only an example, but I hope it's clear how the result generalises.
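One way to sketch the generalisation in C#, assuming that the binary operation for each element is supplied as a delegate, is a generic method like the following. The PairMonoid class is made up for this illustration and isn't part of the article's code base:

```csharp
using System;

// Hypothetical helper class, invented for this sketch.
public static class PairMonoid
{
    // Combine two pairs element by element, using one binary operation
    // for the first elements and another for the second elements.
    public static Tuple<A, B> Combine<A, B>(
        Func<A, A, A> appendA,
        Func<B, B, B> appendB,
        Tuple<A, B> x,
        Tuple<A, B> y)
    {
        return Tuple.Create(
            appendA(x.Item1, y.Item1),
            appendB(x.Item2, y.Item2));
    }
}
```

Passing multiplication and string concatenation gives the same behaviour as CombinePair: PairMonoid.Combine<int, string>((a, b) => a * b, (a, b) => a + b, Tuple.Create(2, "foo"), Tuple.Create(3, "bar")) produces the pair (6, "foobar"). The corresponding identity is simply the pair of the two identity values.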

Triples of monoids #

In the above section, you saw how pairs of monoids form a monoid. Not surprisingly, triples of monoids also form monoids. Here's another C# example:

    public static Tuple<string, bool, int> CombineTriple(
        Tuple<string, bool, int> x,
        Tuple<string, bool, int> y)
    {
        return Tuple.Create(
            x.Item1 + y.Item1,
            x.Item2 || y.Item2,
            x.Item3 * y.Item3);
    }

The CombineTriple method is another binary operation. This time it combines two triples to a single triple. Since string, bool, and int all form monoids, it's possible to combine each element in the two tuples to create a new tuple. There's more than one monoid for integers, and the same goes for Boolean values, but here I've chosen multiplication and Boolean or, so the identity is this:

public static Tuple<string, bool, int> TripleIdentity = Tuple.Create("", false, 1);

This triple simply contains the identities for string concatenation, Boolean or, and multiplication. The operation is associative, but I'm not going to show this with a property-based test. Both tests for associativity and identity are similar to the above tests; you could consider writing them as an exercise, if you'd like.

This triple example only demonstrates a particular triple, but you can find the generalisation in Haskell:

instance (Monoid a, Monoid b, Monoid c) => Monoid (a, b, c)

This simply states that for monoids a, b, and c, the tuple (a, b, c) is also a monoid.

Generalisation #

At this point, it can hardly come as a surprise that quadruples and pentuples of monoids are also monoids. From Haskell:

    instance (Monoid a, Monoid b, Monoid c, Monoid d) => Monoid (a, b, c, d)
    instance (Monoid a, Monoid b, Monoid c, Monoid d, Monoid e) => Monoid (a, b, c, d, e)

The Haskell standard library stops at pentuples (five-tuples), because it has to stop somewhere, but I'm sure you can see how this is a general rule.

Data objects as monoids #

If you're an object-oriented programmer, you probably don't use tuples much in your day-to-day work. I'd even suggest that you shouldn't, because tuples carry too little information to make good domain objects. For example, if you have Tuple<int, string, string>, what do the elements mean? A better design would be to introduce a small Value Object called Customer, with Id, FirstName, and LastName properties.

(In functional programming, you frequently use tuples, because they're useful for 'gluing' generic functions together. A Haskell programmer may instead say that they are useful for composing parametrically polymorphic functions, but the meaning would be the same.)

As an object-oriented developer, then, why should you care that tuples of monoids are monoids?

The reason this is interesting in object-oriented programming is that there's a strong relationship between tuples and data objects (Value Objects or Data Transfer Objects). Consider the Customer examples that I sketched out a few paragraphs above. As you'll learn in a future article, you can refactor a tuple to a class, or a class to a tuple.

Example: Roster #

In Denmark, where I live, learning to swim is a mandatory part of the school curriculum. Teachers take the children to the nearby swimming stadium and teach them to swim. Since this is an activity outside of school, teachers would be prudent to keep a roster of the children. Modelled as a class, it might look like this:

    public class Roster
    {
        public int Girls { get; }
        public int Boys { get; }
        public IReadOnlyCollection<string> Exemptions { get; }

        public Roster(int girls, int boys, params string[] exemptions)
        {
            Girls = girls;
            Boys = boys;
            Exemptions = exemptions;
        }

        // ...
    }

Some children may be temporarily exempt from a swimming lesson, perhaps because of a passing medical condition. This changes from lesson to lesson, so the roster keeps track of them separately. Additionally, the boys will need to go in the men's changing rooms, and the girls in the women's changing rooms. This is the reason the roster keeps track of the number of boys and girls separately.

This, however, presents a logistical problem, because there's only one teacher for a class. The children are small, so need the company of an adult.

The way my children's school solved that problem was to combine two groups of children (in Danish, en klasse, a class), each with their own teacher - one female, and one male.

To model that, the Roster class should have a Combine method:

    public Roster Combine(Roster other)
    {
        return new Roster(
            this.Girls + other.Girls,
            this.Boys + other.Boys,
            this.Exemptions.Concat(other.Exemptions).ToArray());
    }

Clearly, this is easy to implement. Just add the number of girls together, add the number of boys together, and concatenate the two lists of exemptions.

Here's an example of using the method:

    [Fact]
    public void UsageExample()
    {
        var x = new Roster(11, 10, "Susan", "George");
        var y = new Roster(12, 9, "Edward");

        var roster = x.Combine(y);

        var expected =
            new Roster(23, 19, "Susan", "George", "Edward");
        Assert.Equal(expected, roster);
    }

The Combine method is an instance method on the Roster class, taking a second Roster as input, and returning a new Roster value. It's a binary operation. Does it also have identity?

Yes, it does:

public static readonly Roster Identity = new Roster(0, 0);

Notice that the exemptions constructor argument is a params array, so omitting it means passing an empty array as the third argument.

The following properties demonstrate that the Combine operation is both associative and has identity:

    [Property(QuietOnSuccess = true)]
    public void CombineIsAssociative(Roster x, Roster y, Roster z)
    {
        Assert.Equal(
            x.Combine(y).Combine(z),
            x.Combine(y.Combine(z)));
    }

    [Property(QuietOnSuccess = true)]
    public void CombineHasIdentity(Roster x)
    {
        Assert.Equal(x, Roster.Identity.Combine(x));
        Assert.Equal(x, x.Combine(Roster.Identity));
    }

In other words, Combine is a monoid.

This shouldn't surprise us in the least, since we've already established that tuples of monoids are monoids, and that a data class is more or less 'just' a tuple with named elements. Specifically, the Roster class is a 'tuple' of two addition monoids and the sequence concatenation monoid, so it follows that the Combine method is a monoid as well.
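One practical consequence of having both associativity and identity is that you can reduce any number of rosters, including none at all, to a single roster. The following is only a sketch: it repeats a trimmed-down copy of the Roster class so that it compiles on its own, and the CombineAll extension method is a made-up helper:

```csharp
using System.Collections.Generic;
using System.Linq;

// Trimmed-down copy of the Roster class, repeated so the sketch is self-contained.
public class Roster
{
    public int Girls { get; }
    public int Boys { get; }
    public IReadOnlyCollection<string> Exemptions { get; }

    public static readonly Roster Identity = new Roster(0, 0);

    public Roster(int girls, int boys, params string[] exemptions)
    {
        Girls = girls;
        Boys = boys;
        Exemptions = exemptions;
    }

    public Roster Combine(Roster other)
    {
        return new Roster(
            this.Girls + other.Girls,
            this.Boys + other.Boys,
            this.Exemptions.Concat(other.Exemptions).ToArray());
    }
}

// Hypothetical helper, invented for this sketch.
public static class RosterExtensions
{
    // Fold a sequence of rosters into one, starting from the identity.
    // An empty sequence therefore yields Roster.Identity.
    public static Roster CombineAll(this IEnumerable<Roster> rosters)
    {
        return rosters.Aggregate(Roster.Identity, (acc, r) => acc.Combine(r));
    }
}
```

Calling CombineAll on three classes' rosters adds up all the girls and boys and concatenates the exemptions, while calling it on an empty collection returns a roster with no children and no exemptions, rather than throwing an exception.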

Roster isomorphism #

In future articles, you'll learn more about isomorphisms between various representations of objects, but in this context, I think it's relevant to show how the Roster example is isomorphic to a tuple. It's trivial, really:

    public Tuple<int, int, string[]> ToTriple()
    {
        return Tuple.Create(this.Girls, this.Boys, this.Exemptions.ToArray());
    }

    public static Roster FromTriple(Tuple<int, int, string[]> triple)
    {
        return new Roster(triple.Item1, triple.Item2, triple.Item3);
    }

This pair of methods turns a Roster into a triple, and a corresponding triple back into a Roster value. As the following two FsCheck properties demonstrate, these methods form an isomorphism:

    [Property(QuietOnSuccess = true)]
    public void ToTripleRoundTrips(Roster x)
    {
        var triple = x.ToTriple();
        Assert.Equal(x, Roster.FromTriple(triple));
    }

    [Property(QuietOnSuccess = true)]
    public void FromTripleRoundTrips(Tuple<int, int, string[]> triple)
    {
        var roster = Roster.FromTriple(triple);
        Assert.Equal(triple, roster.ToTriple());
    }

This isn't the only possible isomorphism between triples and Roster objects. You could create another one where the string[] element goes first, instead of last; where boys go before girls; and so on.

Summary #

Tuples of monoids are also monoids. This holds for tuples of any size, but all of the elements have to be monoids. By isomorphism, this result also applies to data objects.

Next: Function monoids.

Comments

Hi Mark, I have trouble understanding your usage of the term 'monoid' in this post. You apply it to the types string, bool, and int when you say that a tuple of those "monoids" is a monoid as well. But up to this point you made it very clear, that a type is NOT a monoid. A function can be a monoid. So, would it be more correct to say that a tuple of certain functions, which are monoids, is a monoid as well?

Punkislamist, thank you for writing. You're entirely correct that a monoid is an associative binary operation with identity. It's a function, not a type. If this article is unclear, the fault is all mine.

Not surprisingly, this topic is difficult to write about. The text has to be exact in order to avoid confusion, but since I'm only human, I sometimes make mistakes in how I phrase my explanations. While I've tried to favour the phrase that a type forms a monoid, I can see that I've slipped up once or twice in this article.

Some types form more than a single monoid. Boolean values, for instance, form exactly four monoids. Other types, like integers, form an infinite set of monoids, but the most commonly used integer monoids are addition and multiplication. Other types, particularly unit, only form a single monoid.
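The claim about Boolean values is small enough to check by brute force, since there are only sixteen binary operations on two values. Here's a sketch that enumerates them all and counts those that are associative and have an identity; the BooleanMonoids class and the truth-table encoding are made up for this illustration:

```csharp
using System;
using System.Linq;

// Hypothetical example class, invented for this sketch.
public static class BooleanMonoids
{
    private static readonly bool[] Values = { false, true };

    // Interpret the four lowest bits of table as a truth table:
    // the bit at position 2 * x + y holds the result of op(x, y).
    private static Func<bool, bool, bool> FromTable(int table)
    {
        return (x, y) => ((table >> ((x ? 2 : 0) + (y ? 1 : 0))) & 1) == 1;
    }

    private static bool IsAssociative(Func<bool, bool, bool> op)
    {
        return Values.All(x => Values.All(y => Values.All(z =>
            op(op(x, y), z) == op(x, op(y, z)))));
    }

    private static bool HasIdentity(Func<bool, bool, bool> op)
    {
        return Values.Any(e =>
            Values.All(x => op(e, x) == x && op(x, e) == x));
    }

    // Count the binary operations that satisfy both monoid laws.
    public static int Count()
    {
        return Enumerable.Range(0, 16)
            .Select(t => FromTable(t))
            .Count(op => IsAssociative(op) && HasIdentity(op));
    }
}
```

BooleanMonoids.Count() returns 4. The four operations that pass are and, or, xor, and xnor.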

Why do I talk about types, then? There are at least two reasons. The first is the practical reason that most statically typed languages naturally come with a notion of types embedded. One could argue, I think, that types are a more fundamental concept than functions, since even functions have types (for instance, in Haskell, we'd characterise a binary operation with the type a -> a -> a).

A more abstract reason is that category theory mostly operates with the concepts of objects and morphisms. Such objects aren't objects in the sense of object-oriented programming, but rather correspond to types in programming languages. (Actually, a category theory object is a more fluffy concept than that, but that's the closest analogy that I'm aware of.)

In category theory, a particular monoid is an object in the category of monoids. For example, the integer addition monoid is an object in the category of monoids, as is the string concatenation monoid, etcetera.

When you consider a 'raw' programming language type like C#'s int, you're correct that it's not a monoid. It's just a type. The same goes for Haskell's corresponding Int32 type. As primitive values, we could say that the type of 32-bit integers is an object in some category (for example, the category of number representations). Such an object is not a monoid.

There exists, however, a morphism (a 'map') from the 32-bit integer object to the addition monoid (which is an object in the category of monoids). In Haskell, this morphism is the data constructor Sum:

    Prelude Data.Monoid> :t Sum
    Sum :: a -> Sum a

What this states is that Sum is a function (i.e. a morphism) that takes an object a and turns it into an object Sum a. We have to be careful here, because Sum a is a Haskell type, whereas Sum is the function that 'elevates' an object a to Sum a. The names are similar, but the roles are different. This is a common idiom in Haskell, and has some mnemonic advantages, but it may be confusing until you get the hang of it.

We can think of Sum a as equivalent to the category theory object addition in the category of monoids. That's also how it works in Haskell: Sum a is a monoid:

Prelude Data.Monoid> Sum 40 <> Sum 2

Sum {getSum = 42}

In Haskell, <> is the polymorphic binary operation; exactly what it does depends on the object (that is: the type) on which it operates. When applied to two values of Sum a, the result of combining 40 and 2 is 42.

To be clear, Sum isn't the only morphism from the category of number representations to the category of monoids. Product is another:

Prelude Data.Monoid> :t Product

Product :: a -> Product a

Prelude Data.Monoid> Product 6 <> Product 7

Product {getProduct = 42}
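As a minimal sketch of how one underlying type can give rise to more than one monoid, the following stand-alone program folds the same list through both wrappers. It assumes plain Int rather than Int32, and the sample list xs is made up for illustration.

```haskell
import Data.Monoid (Product (..), Sum (..))

main :: IO ()
main = do
  -- Hypothetical sample data; any list of Int values would do.
  let xs = [1, 2, 3, 4] :: [Int]
  -- The same values, combined with two different monoids.
  print (getSum (foldMap Sum xs))         -- 10
  print (getProduct (foldMap Product xs)) -- 24
  -- Each monoid has its own identity element.
  print (getSum mempty :: Int)            -- 0
  print (getProduct mempty :: Int)        -- 1
```

The wrapper picks the monoid; the underlying numbers stay the same.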

Thus, there is a relationship between types and monoids, but it's most apparent in programming languages that are geared towards that way of thinking (like Haskell). In C#, it's difficult to translate some of these concepts into code, because C#'s type system isn't up to the task. Instead, when we consider a type like int, I think it's pragmatic to state that the type forms one or more monoids. I've also encountered the phrase that it gives rise to a monoid.

While you can represent a monoid with a C# interface, I've so far tried to avoid doing so, as I'm not sure whether or not it's helpful.

Hi Mark, I did not expect to receive such an exhaustive answer. That is incredible, thank you so much! It did clear up my confusion as well. Since most of these terms and concepts are new to me, even a slight inconsistency can be really confusing. But with your additional explanation I think I got a good understanding of the terms again.

Your explanations of these concepts in general are very well written and make it easy for people unfamiliar with this topic to understand the terms and their significance. Thanks again for writing!

Punkislamist, thank you for those kind words. I'm happy to hear that what I wrote made sense to you; it makes sense to me, but I forgot to point out that I'm hardly an expert in category theory. Writing out the above answer helped clarify some things for me as well; as is common wisdom: you only really understand a topic when you teach it.

Convex hull monoid

The union of convex hulls forms a monoid. Yet another non-trivial monoid example, this time in F#.

This article is part of a series about monoids. In short, a monoid is an associative binary operation with a neutral element (also known as identity).

If you're reading the series as an object-oriented programmer, I apologise for the digression, but this article exclusively contains F# code. The next article will return with more C# examples.

Convex hull #

In a past article I've described my adventures with finding convex hulls in F#. The convex hulls I've been looking at form the external convex boundary of a set of two-dimensional points. While you can generalise the concept of convex hulls to n dimensions, we're going to stick to two-dimensional hulls here.

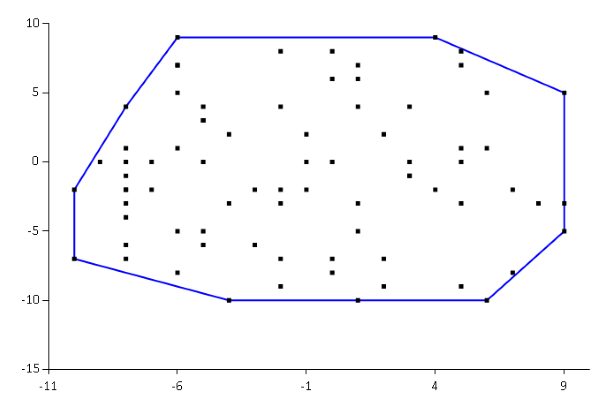

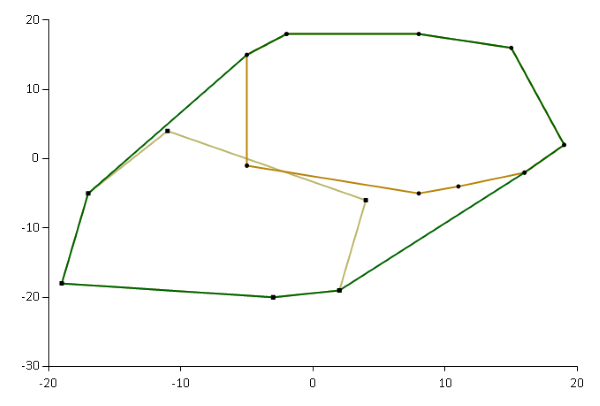

If you have two convex hulls, you can find the convex hull of both:

Here, the dark green outline is the convex hull of the two lighter-coloured hulls.

Finding the convex hull of two other hulls is a binary operation. Is it a monoid?

In order to examine that, I'm going to make some changes to my existing code base, the most important of which is that I'm going to introduce a Hull type. The intent is that if points are contained within this type, then only the convex hull remains. It'd be better if it was possible to make the case constructor private, but if one does that, then the hull function can no longer be inlined and generic.

type Hull<'a> = Hull of ('a * 'a) list

With the addition of the Hull type, you can now add a binary operation:

// Hull<'a> -> Hull<'a> -> Hull<'a>
let inline (+) (Hull x) (Hull y) = hull (x @ y)

This operation explicitly uses the + operator, so I'm clearly anticipating the turn of events here. Nothing much is going on, though. The function pattern-matches the points out of two Hull values. x and y are two lists of points. The + function concatenates the two lists with the @ operator, and finds the convex hull of this new list of points.

Associativity #

My choice of operator strongly suggests that the + operation is a monoid. If you have three hulls, the order in which you find the hulls doesn't matter. One way to demonstrate that property is with property-based testing. In this article, I'm using Hedgehog.

[<Fact>]
let ``Hull addition is associative`` () = Property.check <| property {
    let! (x, y, z) =
        Range.linear -10000 10000
        |> Gen.int
        |> Gen.tuple
        |> Gen.list (Range.linear 0 100)
        |> Gen.tuple3
    (hull x + hull y) + hull z =! hull x + (hull y + hull z) }

This automated test generates three lists of points, x, y, and z. The hull function uses the Graham Scan algorithm to find the hull, and part of that algorithm includes calculating the cross product of three points. For large enough integers, the cross product will overflow, so the property constrains the point coordinates to stay within -10,000 and 10,000. The implication of that is that although + is associative, it's only associative for a subset of all 32-bit integers. I could probably change the internal implementation so that it calculates the cross product using bigint, but I'll leave that as an exercise to you.
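The overflow concern can be sketched in Haskell. The cross function below is a hypothetical stand-in, not the implementation from the convex hull code base; it's written against Num so that the same formula can run with both a 32-bit integer and an arbitrary-precision Integer.

```haskell
import Data.Int (Int32)

-- Cross product of the vectors o->a and o->b.
-- Positive means a counter-clockwise turn, negative a clockwise one.
-- (Hypothetical helper, assumed for illustration only.)
cross :: Num a => (a, a) -> (a, a) -> (a, a) -> a
cross (ox, oy) (ax, ay) (bx, by) =
  (ax - ox) * (by - oy) - (ay - oy) * (bx - ox)

main :: IO ()
main = do
  -- 50,000 * 50,000 = 2,500,000,000, which exceeds the Int32 maximum.
  let narrow = cross (0, 0) (50000, 0) (0, 50000) :: Int32
      wide = cross (0, 0) (50000, 0) (0, 50000) :: Integer
  print narrow -- -1794967296 (wrapped around, so the sign is wrong)
  print wide   -- 2500000000
```

With coordinates between -10,000 and 10,000, each difference is at most 20,000 in magnitude, each product at most 400,000,000, and the difference of two such products at most 800,000,000, which fits comfortably in a 32-bit integer. That's why the chosen range is safe.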

For performance reasons, I also arbitrarily decided to constrain the size of each set of points to between 0 and 100 elements. If I change the maximum count to 1,000, it takes my laptop 9 seconds to run the test.

In addition to Hedgehog, this test also uses xUnit.net, and Unquote for assertions. The =! operator is the Unquote way of saying must equal. It's an assertion.

This property passes, which demonstrates that the + operator for convex hulls is associative.

Identity #

Likewise, you can write a property-based test that demonstrates that an identity element exists for the + operator:

[<Fact>]
let `` Hull addition has identity`` () = Property.check <| property {
    let! x =
        Range.linear -10000 10000
        |> Gen.int
        |> Gen.tuple
        |> Gen.list (Range.linear 0 100)
    let hasIdentity =
        Hull.identity + hull x = hull x + Hull.identity &&
        hull x + Hull.identity = hull x
    test <@ hasIdentity @> }

This test generates a list of integer pairs (x) and applies the + operator to x and Hull.identity. The test passes for all x that Hedgehog generates.

What's Hull.identity?

It's simply the empty hull:

module Hull =
    let identity = Hull []

If you have a set of zero 2D points, then the convex hull is empty as well.

The + operator for convex hulls is a monoid for the set of coordinates where the cross product doesn't overflow.
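For readers who prefer Haskell, here's a rough, self-contained sketch of the same idea. It is not a port of the F# code: it assumes Andrew's monotone chain algorithm instead of a Graham Scan, uses Integer coordinates to sidestep the overflow issue, and all names (Point, cross, convexHull, hull) are made up for the example.

```haskell
import Data.List (group, sort)

type Point = (Integer, Integer)

-- Cross product of the vectors o->a and o->b (positive for a left turn).
cross :: Point -> Point -> Point -> Integer
cross (ox, oy) (ax, ay) (bx, by) =
  (ax - ox) * (by - oy) - (ay - oy) * (bx - ox)

-- Andrew's monotone chain. Returns the hull vertices counter-clockwise,
-- starting from the lexicographically smallest point.
convexHull :: [Point] -> [Point]
convexHull pts
  | length sorted < 3 = sorted
  | otherwise = init lower ++ init upper
  where
    sorted = [p | (p : _) <- group (sort pts)] -- sorted, duplicates removed
    lower = chain sorted
    upper = chain (reverse sorted)
    chain ps = reverse (foldl step [] ps)
    -- Pop the top of the stack while it doesn't make a strict left turn.
    step (b : a : rest) p
      | cross a b p <= 0 = step (a : rest) p
    step acc p = p : acc

newtype Hull = Hull [Point] deriving (Eq, Show)

-- Smart constructor: only the hull of the points is retained.
hull :: [Point] -> Hull
hull = Hull . convexHull

instance Semigroup Hull where
  Hull xs <> Hull ys = hull (xs ++ ys)

instance Monoid Hull where
  mempty = Hull []

main :: IO ()
main = do
  -- Hypothetical sample points.
  let a = hull [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2)]
      b = hull [(3, 3), (6, 3), (6, 6), (3, 6)]
      c = hull [(-2, 1), (1, -3), (2, 8)]
  print a
  print ((a <> b) <> c == a <> (b <> c))   -- associativity on this sample
  print (mempty <> a == a && a <> mempty == a) -- identity on this sample
```

Checking three hand-picked hulls is, of course, much weaker evidence than the property-based tests above. The sketch only shows how the operation and its identity fit into Haskell's Semigroup and Monoid type classes.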

Summary #

If you consider that the Hull type is nothing but a container for a list, it should come as no surprise that a monoid exists. After all, list concatenation is a monoid, and the + operator shown here is a combination of list concatenation (@) and a Graham Scan.

The point of this article was mostly to demonstrate that monoids exist not only for primitive types, but also for (some) more complex types. The + operator shown here is really a set union operation. What about intersections of convex hulls? Is that a monoid as well? I'll leave that as an exercise.

Next: Tuple monoids.

Comments

Is it true that you could replace hull with any other function, and the (+) operator would still be a monoid? Since the operator is based on list concatenation, the "monoidness" is probably derived from there, not from the function implementation.

Mikhail, thank you for writing. You can't replace hull with any other function and expect list concatenation to remain a monoid. I'm sorry if my turn of phrase gave that impression. I can see how one could interpret my summary in that way, but it wasn't my intention to imply that this relationship holds in general. It doesn't, and it's not hard to show, because we only need to come up with a single counter-example.

One counter-example is a function that always removes the first element in a list - unless the list is empty, in which case it simply returns the empty list. In Haskell, we can define a newtype with this behaviour in mind:

Prelude Data.Monoid Data.List> newtype Drop1 a = Drop1 [a] deriving (Show, Eq)

For my own convenience, I wrote the entire counter-example in GHCi (the Haskell REPL), but imagine that the Drop1 data constructor is hidden from clients. The normal way to do that is to not export the data constructor from the module. In GHCi, we can't do that, but just pretend that the Drop1 data constructor is unavailable to clients. Instead, we'll have to use this function:

Prelude Data.Monoid Data.List> drop1 = Drop1 . drop 1

The drop1 function has the type [a] -> Drop1 a; it takes a list, and returns a Drop1 value, which contains the input list, apart from its first element.

We can attempt to make Drop1 a monoid:

Prelude Data.Monoid Data.List> :{

Prelude Data.Monoid Data.List| instance Monoid (Drop1 a) where

Prelude Data.Monoid Data.List|   mempty = drop1 []

Prelude Data.Monoid Data.List|   mappend (Drop1 xs) (Drop1 ys) = drop1 (xs ++ ys)

Prelude Data.Monoid Data.List| :}

Hopefully, you can see that the implementation of mappend is similar to the above F# implementation of + for convex hulls. In F#, the list concatenation operator is @, whereas in Haskell, it's ++.

This compiles, but it's easy to come up with some counter-examples that demonstrate that the monoid laws don't hold. First, associativity:

Prelude Data.Monoid Data.List> (drop1 [1..3] <> drop1 [4..6]) <> drop1 [7..9]

Drop1 [5,6,8,9]

Prelude Data.Monoid Data.List> drop1 [1..3] <> (drop1 [4..6] <> drop1 [7..9])

Drop1 [3,6,8,9]

(The <> operator is an infix alias for mappend.)

Clearly, [5,6,8,9] is different from [3,6,8,9], so the operation isn't associative.

Likewise, identity fails as well:

Prelude Data.Monoid Data.List> mempty <> drop1 [1..3]

Drop1 [3]

Prelude Data.Monoid Data.List> drop1 [1..3]

Drop1 [2,3]

Again, [3] is different from [2,3], so mempty isn't a proper identity element.
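The GHCi session can also be written as a stand-alone program. The sketch below assumes GHC 8.4 or later, where Semigroup is a superclass of Monoid, so the binary operation moves to a Semigroup instance; apart from that, it follows the definitions from the session.

```haskell
newtype Drop1 a = Drop1 [a] deriving (Show, Eq)

-- Wraps a list, discarding its first element (if any).
drop1 :: [a] -> Drop1 a
drop1 = Drop1 . drop 1

-- On GHC 8.4 and later, the binary operation lives in Semigroup.
instance Semigroup (Drop1 a) where
  Drop1 xs <> Drop1 ys = drop1 (xs ++ ys)

instance Monoid (Drop1 a) where
  mempty = drop1 []

main :: IO ()
main = do
  let a = drop1 [1 .. 3 :: Int]
      b = drop1 [4 .. 6]
      c = drop1 [7 .. 9]
  -- Associativity fails: the two groupings disagree.
  print ((a <> b) <> c) -- Drop1 [5,6,8,9]
  print (a <> (b <> c)) -- Drop1 [3,6,8,9]
  -- Identity fails: combining with mempty changes the value.
  print (mempty <> a)   -- Drop1 [3]
  print a               -- Drop1 [2,3]
```

The instances compile without complaint, which underlines that the type checker doesn't verify the monoid laws; that remains up to tests or reasoning.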

It was easy to come up with this counter-example. I haven't attempted to come up with more, but I'd be surprised if I accidentally happened to pick the only counter-example there is. Rather, I conjecture that there are infinitely many counter-examples, each of which proves that there's no general rule about 'wrapped' list operations being monoids.

Comments

Is division over real numbers also a quasigroup? I think so, for any number a and b we have x and y such that:

a / x = b

y / a = b

I think division over integers is not a quasigroup since for 5 and 10 there is no x such that 5 / x = 10 since 0.5 is not an integer.

Onur, thank you for writing. In general, when pondering pure mathematics rather than its programming aspects, I'm no authority. You'd be better off researching somewhere else. The Wikipedia article on quasigroups isn't too bad for that purpose, I find.

According to that article, division of non-zero rational or real numbers is an invertible binary operation.

As far as I can tell (I'm not a mathematician) you're correct that integer division doesn't form a quasigroup. When, as you suggest, you pick a = 5 and b = 10, there's no integer x for which 5 / x = 10.
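Both observations can be explored with a small Haskell sketch. It assumes Rational as a stand-in for the non-zero rational numbers, and the helper names solveRight and solveLeft are invented for the example. The integer search only covers a finite range, so it illustrates the point rather than proving it.

```haskell
-- Solve a / x == b for x. Both a and b must be non-zero.
solveRight :: Rational -> Rational -> Rational
solveRight a b = a / b

-- Solve y / a == b for y. Here, too, a must be non-zero.
solveLeft :: Rational -> Rational -> Rational
solveLeft a b = a * b

main :: IO ()
main = do
  let a = 5
      b = 10
      x = solveRight a b
      y = solveLeft a b
  print x                        -- 1 % 2
  print y                        -- 50 % 1
  print (a / x == b, y / a == b) -- (True,True)
  -- No non-zero integer in this range satisfies 5 / n == 10.
  print [n | n <- [-100 .. 100 :: Integer], n /= 0, a / fromInteger n == b] -- []
```

The only solution to 5 / x = 10 is x = 1/2, which exists among the rationals but not among the integers.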