ploeh blog danish software design

DI and events: Composition

With Reactive Extensions, and a bit of composition, you can publish and subscribe to events in a structurally safe way.

Previously, in my series about Dependency Injection and events, you learned how to connect a publisher and a subscriber via a third party (often the Composition Root).

The problem with that approach is that while it's loosely coupled, it's too easy to forget to connect the publisher and the subscriber. It's also possible to forget to unsubscribe. In neither case can the compiler help you.

However, the advantage of using Reactive Extensions over .NET events is that IObserver<T> is composable. That turns out to be quite an important distinction!

The problem with IObservable<T> #

While I consider IObserver<T> to be an extremely versatile interface, I consider IObservable<T> to be of limited usefulness. Consider its definition:

    public interface IObservable<out T>
    {
        IDisposable Subscribe(IObserver<T> observer);
    }

The idea is that the publisher (the Observable) receives a subscriber (the Observer) via Method Injection. When the method completes, the subscriber is considered subscribed to the publisher's events, until the subscriber disposes of the returned subscription reference.

There are a couple of problems with this design:

- It's too easy to forget to invoke the Subscribe method. This is not a problem if you're writing a system in which subscribers dynamically subscribe to event streams, but it's problematic if your system relies on certain publishers and subscribers being connected.

- It implies mutation in the publisher, because the publisher must somehow keep a list of all its subscribers.

- It breaks Command Query Separation (CQS).

- Since it implies mutation, it's not thread-safe by default.

From Method Injection to Constructor Injection #

As you learned in the last couple of articles, the subscriber should not require any dependency in order to react to events. Yet, if Method Injection via IObservable<T> isn't a good approach either, then what's left?

Good old Constructor Injection.

The important realization is that it's not the subscriber (NeedyClass, in previous examples) that requires a dependency - it's the publisher!

Imagine that until now, you've had a publisher implementing IObservable<T>. In keeping with the running example throughout this series, this was the publisher that originally implemented IDependency. Thus, it's still called RealDependency. For simplicity's sake, assume that its implementation is as simple as this:

    public class RealDependency : IObservable<Unit>
    {
        private readonly Subject<Unit> subject;

        public RealDependency()
        {
            this.subject = new Subject<Unit>();
        }

        public void MakeItHappen()
        {
            this.subject.OnNext(Unit.Default);
        }

        public IDisposable Subscribe(IObserver<Unit> observer)
        {
            return this.subject.Subscribe(observer);
        }
    }

What if, instead of implementing IObservable<Unit>, this class would use Constructor Injection to request an IObserver<Unit>? Then it would look like this:

    public class RealDependency
    {
        private readonly IObserver<Unit> observer;

        public RealDependency(IObserver<Unit> observer)
        {
            this.observer = observer;
        }

        public void MakeItHappen()
        {
            this.observer.OnNext(Unit.Default);
        }
    }

That's much simpler, and you just got rid of an entire type (IObservable<Unit>)! Even better, you've also eliminated all use of IDisposable. Oh, and it also conforms to CQS, and is thread-safe.

Connection #

The names of the concrete classes are completely off by now, but you can connect publisher (RealDependency) with its subscriber (NeedyClass) from a third party (Composition Root):

    var subscriber = new NeedyClass();
    var publisher = new RealDependency(subscriber);

Not only is this easy, the statically typed structure of both classes helps you do the right thing: the compiler will issue an error if you don't supply a subscriber to the publisher.

But wait, you say: now the publisher is forced to have a single observer. Isn't the whole idea about publish/subscribe that you can have an arbitrary number of subscribers for a given publisher? Yes, it is, and that's still possible.

Composition #

More than a single subscriber is easy if you introduce a Composite:

    public class CompositeObserver<T> : IObserver<T>
    {
        private readonly IEnumerable<IObserver<T>> observers;

        public CompositeObserver(IEnumerable<IObserver<T>> observers)
        {
            this.observers = observers;
        }

        public void OnCompleted()
        {
            foreach (var observer in this.observers)
                observer.OnCompleted();
        }

        public void OnError(Exception error)
        {
            foreach (var observer in this.observers)
                observer.OnError(error);
        }

        public void OnNext(T value)
        {
            foreach (var observer in this.observers)
                observer.OnNext(value);
        }
    }

While it looks like a bit of work, this class is so reusable that I wonder why it's not included in the Rx library itself... It enables you to subscribe any number of subscribers to the publisher, e.g. two:

    var sub1 = new NeedyClass();
    var sub2 = new AnotherObserver();
    var publisher = new RealDependency(
        new CompositeObserver<Unit>(
            new IObserver<Unit>[] { sub1, sub2 }));

I'll leave it as an exercise to the reader to figure out how to implement the scenario with no subscribers :)
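One possible answer to that exercise, sketched here under the assumption that the CompositeObserver<T> shown above is used unchanged: a composite over an empty sequence forwards to nobody, so it behaves like a Null Object. The Unit struct below is a local stand-in for the Rx Unit type, added only so that the sketch compiles without the Rx package; the Program class is hypothetical as well.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Assumption: local stand-in for the Rx Unit type, so no Rx package is needed.
public struct Unit
{
    public static readonly Unit Default = new Unit();
}

// Same shape as the CompositeObserver<T> from the article.
public class CompositeObserver<T> : IObserver<T>
{
    private readonly IEnumerable<IObserver<T>> observers;

    public CompositeObserver(IEnumerable<IObserver<T>> observers)
    {
        this.observers = observers;
    }

    public void OnCompleted()
    {
        foreach (var observer in this.observers)
            observer.OnCompleted();
    }

    public void OnError(Exception error)
    {
        foreach (var observer in this.observers)
            observer.OnError(error);
    }

    public void OnNext(T value)
    {
        foreach (var observer in this.observers)
            observer.OnNext(value);
    }
}

// Same shape as the Constructor Injection version of RealDependency.
public class RealDependency
{
    private readonly IObserver<Unit> observer;

    public RealDependency(IObserver<Unit> observer)
    {
        this.observer = observer;
    }

    public void MakeItHappen()
    {
        this.observer.OnNext(Unit.Default);
    }
}

// Hypothetical entry point, for illustration only.
public static class Program
{
    public static void Main()
    {
        // An empty composite has nothing to forward to: a Null Object.
        var publisher = new RealDependency(
            new CompositeObserver<Unit>(
                Enumerable.Empty<IObserver<Unit>>()));

        // With no subscribers, this call has no effect and doesn't throw.
        publisher.MakeItHappen();
    }
}
```

The publisher still requires an IObserver<Unit>, so the compiler keeps enforcing the connection; the decision that nobody listens is made explicitly in the composition code.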

Conclusion #

Sticking to IObserver<T> and simply injecting it into the publishers is much simpler than any other alternative I've described so far. Nonetheless, keep in mind that the reason this simplification works so well is that it assumes that you know all subscribers when you compose your object graph.

There's a reason the IObservable<T> interface exists, and that's to support scenarios where publishers and subscribers come and go during the lifetime of an application. The simplification described here doesn't handle that scenario, but if you don't need that flexibility, you can greatly simplify your eventing infrastructure by disposing of IObservable<T> ;)

DI and events: Reactive Extensions

With Reactive Extensions, you can convert event subscription to something that looks more object-oriented, but all is still not quite as it should be...

In my series about Dependency Injection and events, you previously saw how to let a third party connect publisher and subscriber. I think that approach is valuable in all those cases where you connect publishers and subscribers in a narrow and well-defined area of your application, such as in the Composition Root. However, it's not a good fit if you need to connect and disconnect publishers and subscribers throughout your application's code base.

This article examines alternatives based on Reactive Extensions.

Re-design #

For the rest of this article, I'm going to assume that you're in a position where you can change the design - particularly the design of the IDependency interface. If not, you can always convert a standard .NET event into the appropriate IObservable<T>.
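If you can't change IDependency, the conversion could look something like the following sketch. Rx ships helpers for this (Observable.FromEventPattern), but to keep the example free of package references, it uses a hand-written adapter instead. ItHappenedObservable, FakeDependency, CountingObserver and Program are hypothetical names invented for this illustration, and Unit is a local stand-in for the Rx type.

```csharp
using System;

// Assumption: local stand-in for the Rx Unit type, so no Rx package is needed.
public struct Unit
{
    public static readonly Unit Default = new Unit();
}

public interface IDependency
{
    event EventHandler ItHappened;
}

// Hypothetical adapter: exposes the ItHappened event as an IObservable<Unit>.
public class ItHappenedObservable : IObservable<Unit>
{
    private readonly IDependency dependency;

    public ItHappenedObservable(IDependency dependency)
    {
        if (dependency == null)
            throw new ArgumentNullException("dependency");

        this.dependency = dependency;
    }

    public IDisposable Subscribe(IObserver<Unit> observer)
    {
        if (observer == null)
            throw new ArgumentNullException("observer");

        // Each raised event becomes one OnNext call on the observer.
        EventHandler handler = (s, e) => observer.OnNext(Unit.Default);
        this.dependency.ItHappened += handler;
        return new Subscription(() => this.dependency.ItHappened -= handler);
    }

    private sealed class Subscription : IDisposable
    {
        private Action unsubscribe;

        public Subscription(Action unsubscribe)
        {
            this.unsubscribe = unsubscribe;
        }

        public void Dispose()
        {
            // Detach the handler at most once, even if Dispose is repeated.
            var action = this.unsubscribe;
            this.unsubscribe = null;
            if (action != null)
                action();
        }
    }
}

// Hypothetical test doubles, for illustration only.
public class FakeDependency : IDependency
{
    public event EventHandler ItHappened;

    public void Raise()
    {
        var handler = this.ItHappened;
        if (handler != null)
            handler(this, EventArgs.Empty);
    }
}

public class CountingObserver : IObserver<Unit>
{
    public int Count { get; private set; }
    public void OnCompleted() { }
    public void OnError(Exception error) { }
    public void OnNext(Unit value) { this.Count++; }
}

public static class Program
{
    public static void Main()
    {
        var dependency = new FakeDependency();
        var observer = new CountingObserver();

        var subscription =
            new ItHappenedObservable(dependency).Subscribe(observer);
        dependency.Raise();     // observed
        subscription.Dispose();
        dependency.Raise();     // no longer observed

        Console.WriteLine(observer.Count); // prints 1
    }
}
```

The adapter keeps the event handling in one place, so the rest of the code only ever sees IObservable<Unit>.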

Using small iterations, you can first make IDependency implement IObservable<Unit>:

public interface IDependency : IObservable<Unit> { event EventHandler ItHappened; }

This enables you to change the implementation of NeedyClass to subscribe to IObservable<Unit> instead of the ItHappened event:

    public class NeedyClass : IObserver<Unit>, IDisposable
    {
        private readonly IDisposable subscription;

        public NeedyClass(IDependency dependency)
        {
            if (dependency == null)
                throw new ArgumentNullException("dependency");

            this.subscription = dependency.Subscribe(this);
        }

        public void OnCompleted()
        {
        }

        public void OnError(Exception error)
        {
        }

        public void OnNext(Unit value)
        {
            // Handle event here
        }

        public void Dispose()
        {
            this.subscription.Dispose();
        }
    }

Because IObservable<T>.Subscribe returns an IDisposable, NeedyClass still needs to store that object in a field and dispose of it when it's done, which also means that it must implement IDisposable itself. Thus, you seem to be no better off than with Constructor Subscription. Actually, you're slightly worse off, because now NeedyClass has gained three new public methods (OnCompleted, OnError, and OnNext), compared to a single public method with Constructor Subscription.

What's even worse is that you're still violating Nikola Malovic's 4th law of IoC: Injection Constructors should perform no work.

This doesn't seem promising.

Simplification #

While you seem to have gained little from introducing Reactive Extensions, at least you can simplify IDependency a bit. No classes should have to subscribe to the old-fashioned .NET event, so you can remove that from IDependency:

public interface IDependency : IObservable<Unit> { }

That leaves IDependency degenerate, so you might as well dispense entirely with it and let NeedyClass subscribe directly to IObservable<Unit>:

    public class NeedyClass : IObserver<Unit>, IDisposable
    {
        private readonly IDisposable subscription;

        public NeedyClass(IObservable<Unit> dependency)
        {
            if (dependency == null)
                throw new ArgumentNullException("dependency");

            this.subscription = dependency.Subscribe(this);
        }

        public void OnCompleted()
        {
        }

        public void OnError(Exception error)
        {
        }

        public void OnNext(Unit value)
        {
            // Handle event here
        }

        public void Dispose()
        {
            this.subscription.Dispose();
        }
    }

At least you got rid of a custom type in favor of a well-known abstraction, so that will have to count for something.

Injection disconnect #

If you refer back to the discussion about Constructor Subscription, you may recall an inkling that NeedyClass requests the wrong type of dependency via the constructor. If it's saving an IDisposable as its class field, then why is it requesting an IObservable<Unit>? Shouldn't it simply request an IDisposable?

This sounds promising, but in the end turns out to be a false lead. Still, this actually compiles:

    public class NeedyClass : IObserver<Unit>, IDisposable
    {
        private readonly IDisposable subscription;

        public NeedyClass(IDisposable subscription)
        {
            if (subscription == null)
                throw new ArgumentNullException("subscription");

            this.subscription = subscription;
        }

        public void OnCompleted()
        {
        }

        public void OnError(Exception error)
        {
        }

        public void OnNext(Unit value)
        {
            // Handle event here
        }

        public void Dispose()
        {
            this.subscription.Dispose();
        }
    }

The problem with this is that while it compiles, it doesn't work. Considering the implementation, you should also be suspicious: it's basically a degenerate Decorator of IDisposable. That subscription field doesn't seem to add anything to NeedyClass...

Examining why it doesn't work should be enlightening, though. A third party can attempt to connect NeedyClass with the observable. One attempt might look like this:

    var observable = new FakyDependency();
    var nc = new NeedyClass(observable.Subscribe());

However, this doesn't work, because that overload of the Subscribe method only exists to evaluate an event stream for side effects. The overload you'd need is the Subscribe(IObserver<T>) overload. However, the IObserver<Unit> you'd like to supply is an instance of NeedyClass, but you can't supply an instance of NeedyClass before you've supplied an IDisposable to it (a Catch-22)!

Third-party Connect #

Once more, this suggests that NeedyClass really shouldn't have a dependency in order to react to events. You can simply let it be a stand-alone implementation of IObserver<Unit>:

    public class NeedyClass : IObserver<Unit>
    {
        public void OnCompleted()
        {
        }

        public void OnError(Exception error)
        {
        }

        public void OnNext(Unit value)
        {
            // Handle event here
        }
    }

Once again, you have a nice, stand-alone class you can connect to a publisher:

    var nc = new NeedyClass();
    var subscription = observable.Subscribe(nc);

That pretty much puts you back at the Third-party Connect solution, so that didn't seem to buy you much.

(Preliminary) conclusion #

So far, using Reactive Extensions instead of .NET events seems to have provided little value. You're able to replace a custom IDependency interface with a BCL interface, and that's always good. Apart from that, there seems to be little value to be gained from Reactive Extensions in this scenario.

The greatest advantage gained so far is that, hopefully, you've learned something. You've learned (through two refactoring attempts) that NeedyClass isn't needy at all, and should not have a dependency in order to react to events.

The last advantage gained by using Reactive Extensions may seem like a small thing, but actually turns out to be the most important of them all: by using IObservable<T>/IObserver<T> instead of .NET events, you've converted your code to work with objects. You know, .NET events are not real objects, so they can't be passed around, but IObservable<T> and IObserver<T> instances are real objects. This means that now that you know that NeedyClass shouldn't have a dependency, perhaps some other class should. Remember what I originally said about Inversion of Inversion of Control? In the next article in the series, I'll address that issue, and arrive at a more idiomatic DI solution.

DI and events: Third-party Connect

Instead of using Constructor Injection to subscribe to events on a dependency, you can let a third party connect the subscriber to the publisher.

In the previous article in my series about Dependency Injection and events, you saw an example of how injecting a dependency that raises events violates Nikola Malovic's 4th law of IoC: Injection Constructors should perform no work.

In this article, you'll see the first of several alternatives.

Third-party Connect #

Take a step back and recall why you're using Dependency Injection in the first place. Hopefully, you use Dependency Injection because it provides the decoupling necessary to make your code more maintainable (and thus, you and your colleagues more productive). However, events are already a mechanism for decoupling. In .NET, events are simply a (limited) baked-in implementation of the Observer pattern.

Perhaps it's helpful if we consider alternative names for Dependency Injection. Nat Pryce prefers the term Third-party Connect, and I think that there's much sense in that name. Instead of focusing on the injection part, or even Inversion of Control, the term Third-party Connect focuses on the fact that when you decouple two objects, you need a third party to connect them with each other. In a well-designed application, this third party will often be the Composition Root.

If you already have a third party connecting NeedyClass with IDependency, must you use Constructor Injection?

Further decoupling #

Apparently, if you consider the code in the previous article, NeedyClass is required to do something whenever a particular IDependency instance raises its ItHappened event. What if, instead of injecting IDependency and subscribing a private event handler, you were to expose a public method that implements the same logic as that private event handler?

    public class NeedyClass
    {
        public void DoSomethingInteresting()
        {
            // Handle event here
        }
    }

Notice how much simpler this implementation is, compared with the previous version. Nothing is injected, there are no interfaces in play. Such a class is very easy to unit test as well, so I think this looks very promising.

Doesn't this design break encapsulation? Not more than before. Remember, in the previous implementation, you could always inject an implementation of IDependency that enabled you to raise the ItHappened event, and thereby invoke the private event handler in NeedyClass. This new design just makes it a bit easier to invoke the method. Keep in mind that encapsulation isn't about public versus private members; encapsulation is about invariants.

This version of NeedyClass doesn't actually expose a public 'event handler', since the DoSomethingInteresting method doesn't match the event handler signature. Thus, if you use a static code analysis tool, it's not going to complain about a public event handler. The DoSomethingInteresting method is a method just like any other method. This design is much more decoupled than before, because NeedyClass knows nothing about IDependency. Hopefully, it makes sense as a stand-alone class with its own API. This makes it more reusable.

At this point, NeedyClass is no longer an appropriate name, but let's keep it for now.

Subscription #

In order to connect NeedyClass with IDependency, the third party (e.g. the Composition Root) can subscribe the DoSomethingInteresting method to the ItHappened event:

    var nc = new NeedyClass();
    dependency.ItHappened += (s, e) => nc.DoSomethingInteresting();

The advantage of this design is that it's much more decoupled than before. NeedyClass knows nothing about IDependency, and IDependency knows nothing about NeedyClass. However, one disadvantage is that it's easy to forget to connect these two objects with each other; the compiler no longer offers any help.

If both objects are long-lived objects (i.e. have the Singleton lifetime style), you probably only need to write and invoke that connection code once, in the Composition Root. In this case, one or two Smoke Tests should prevent any regressions.

However, if one or both of these objects have shorter lifetimes, you may want to encapsulate the creation of NeedyClass in some sort of Factory. Still, unless you make the NeedyClass constructor internal or private, programmers may forget to use the Factory, so this can still be a fragile solution.
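As a rough sketch of such a Factory, assuming the IDependency and NeedyClass definitions used in this series: NeedyClassFactory, FakeDependency and Program are hypothetical names, and the Console.WriteLine call only stands in for the real event handling.

```csharp
using System;

public interface IDependency
{
    event EventHandler ItHappened;
}

public class NeedyClass
{
    public void DoSomethingInteresting()
    {
        // Stand-in for the real event handling.
        Console.WriteLine("It happened.");
    }
}

// Hypothetical Factory: creation and connection happen in one place.
public class NeedyClassFactory
{
    private readonly IDependency dependency;

    public NeedyClassFactory(IDependency dependency)
    {
        if (dependency == null)
            throw new ArgumentNullException("dependency");

        this.dependency = dependency;
    }

    public NeedyClass Create()
    {
        var nc = new NeedyClass();
        this.dependency.ItHappened += (s, e) => nc.DoSomethingInteresting();
        return nc;
    }
}

// Hypothetical test double, for illustration only.
public class FakeDependency : IDependency
{
    public event EventHandler ItHappened;

    public void Raise()
    {
        var handler = this.ItHappened;
        if (handler != null)
            handler(this, EventArgs.Empty);
    }
}

public static class Program
{
    public static void Main()
    {
        var dependency = new FakeDependency();
        var factory = new NeedyClassFactory(dependency);

        NeedyClass nc = factory.Create();

        // The instance created by the Factory reacts to the event.
        dependency.Raise();
    }
}
```

Note that this sketch never unsubscribes, so every instance created by the Factory stays reachable from the publisher for as long as the publisher lives.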

Unsubscription #

Another problem that this Third-party Connect solution shares with Constructor Subscription is that you still need to think about disconnecting the two objects, once you're done with them. This isn't hard to do.

    EventHandler handler = (s, e) => nc.DoSomethingInteresting();
    dependency.ItHappened += handler;
    // Do something interesting here
    dependency.ItHappened -= handler;

While not particularly difficult, it does require you to take the extra step of assigning the event handler to a variable you can later use to unsubscribe. Still, the worst part of this attempt at a solution is probably that, just like you'll need to remember to subscribe NeedyClass to the event, you must also remember to unsubscribe it. At least, in this case, there's a better symmetry, because you must remember to both subscribe and unsubscribe, whereas with Constructor Subscription, you only needed to remember to unsubscribe (or dispose, as it were).
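One way to make that symmetry harder to get wrong is to hand the unsubscription back as an IDisposable, so that a using block takes care of it. This is only a sketch of that idea: EventConnection, Unsubscriber, FakeDependency and Program are hypothetical names, and the Invocations counter on NeedyClass exists purely to make the effect observable.

```csharp
using System;

public interface IDependency
{
    event EventHandler ItHappened;
}

public class NeedyClass
{
    // Counter added for illustration only.
    public int Invocations { get; private set; }

    public void DoSomethingInteresting()
    {
        this.Invocations++;
    }
}

// Hypothetical helper: subscribes, and returns the matching unsubscription.
public static class EventConnection
{
    public static IDisposable Connect(
        IDependency dependency, NeedyClass subscriber)
    {
        EventHandler handler = (s, e) => subscriber.DoSomethingInteresting();
        dependency.ItHappened += handler;
        return new Unsubscriber(() => dependency.ItHappened -= handler);
    }

    private sealed class Unsubscriber : IDisposable
    {
        private Action unsubscribe;

        public Unsubscriber(Action unsubscribe)
        {
            this.unsubscribe = unsubscribe;
        }

        public void Dispose()
        {
            // Detach the handler at most once, even if Dispose is repeated.
            var action = this.unsubscribe;
            this.unsubscribe = null;
            if (action != null)
                action();
        }
    }
}

// Hypothetical test double, for illustration only.
public class FakeDependency : IDependency
{
    public event EventHandler ItHappened;

    public void Raise()
    {
        var handler = this.ItHappened;
        if (handler != null)
            handler(this, EventArgs.Empty);
    }
}

public static class Program
{
    public static void Main()
    {
        var dependency = new FakeDependency();
        var nc = new NeedyClass();

        using (EventConnection.Connect(dependency, nc))
        {
            dependency.Raise(); // handled
        }

        dependency.Raise();     // not handled; already unsubscribed

        Console.WriteLine(nc.Invocations); // prints 1
    }
}
```

This doesn't remove the fragility: you still have to remember to call Connect, and to dispose of what it returns.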

Conclusion #

Using Third-party Connect leads to a simpler, but more fragile design. Still, I often find that the extreme simplicity of the involved classes trumps the fragility of the design; if I had to choose between Third-party Connect and Constructor Subscription, I'd select Third-party Connect. Fortunately, these aren't the only options available to you; in future articles, I'll approach a better alternative.

DI and events: Constructor Subscription

Using Constructor Injection, you can subscribe to an event within the constructor, but should you?

This post is the first in a series of articles about Dependency Injection and events.

Imagine that you have an interface that defines an event:

public interface IDependency { event EventHandler ItHappened; }

In order to keep the example simple, the ItHappened event carries no information; it just raises an event with an EventArgs instance. Thus, subscribers can react to the fact that this particular event happened, but there's no data contained in the event. However, the following discussion doesn't change if the event carries information.

A class must react to the events raised by IDependency implementations. A common approach is to subscribe to the event in the constructor. We can call this pattern Constructor Subscription:

    public class NeedyClass
    {
        private readonly IDependency dependency;

        public NeedyClass(IDependency dependency)
        {
            if (dependency == null)
                throw new ArgumentNullException("dependency");

            this.dependency = dependency;
            this.dependency.ItHappened += this.OnItHappened;
        }

        private void OnItHappened(object sender, EventArgs e)
        {
            // Handle event here
        }
    }

The concern is that, by subscribing to an event, the constructor violates Nikola Malovic's 4th law of IoC: Injection Constructors should perform no work.

This rule about Dependency Injection explains why you can compose big object graphs with confidence. Still, the most compelling reason for conforming strictly to the rule is most likely performance considerations. So, what if you subscribe to a single event or two during construction? Will it adversely (and noticeably) affect performance of your overall system?

As always, the answer to such questions is: measure. However, I'd be quite surprised if it turns out that a single event subscription has a huge impact on performance...

Smell #

Consider the implementation of NeedyClass: it contains a design smell. Can you spot it?

Given the definition of the IDependency interface, the ItHappened event is the only member defined by the interface. If this is the case, then why is NeedyClass holding on to a reference of the interface?

You can remove the dependency field from NeedyClass, and nothing is going to break:

    public class NeedyClass
    {
        public NeedyClass(IDependency dependency)
        {
            if (dependency == null)
                throw new ArgumentNullException("dependency");

            dependency.ItHappened += this.OnItHappened;
        }

        private void OnItHappened(object sender, EventArgs e)
        {
            // Handle event here
        }
    }

From the perspective of an outside observer, that's really strange. Why are we required to pass in an object that's not going to be used as is? Just like in a previous discussion about the implications of injecting IEnumerable<T>, if you're injecting an abstraction, and the constructor then starts querying, modifying, examining, or otherwise fidgeting with the injected object, then you're probably injecting the wrong object.

Keep in mind that Constructor Injection is a declaration of requirements. It's the declaring class that advertises to the world: these are (some of) my invariants. If the class can't use the injected dependency as is, it suggests that it requested the wrong object from its clients. The class with the Injection Constructor should declare what it really needs. In this case, it needs to react to events.

In the next post, I'll show you a better (i.e. simpler) design for reacting to events.

Unsubscription (Update, September 9, 2013, 11:48 UTC) #

Some readers have commented that NeedyClass must keep the reference to IDependency in order to unsubscribe from the event. This is true, and something I overlooked when I originally banged together the sample code yesterday evening. Apparently, three unit tests were at least a test too few... :$

From a perspective of basic correctness, NeedyClass works appropriately, but as Geert van Horrik and Claus Nielsen point out, this could easily lead to resource leaks (although in practice, that depends on the effective lifetime of NeedyClass).

What happens when we start taking resource management into account?

Well, NeedyClass must be sure to unsubscribe from the event when it goes out of scope. The most correct way of making sure this happens is to implement IDisposable:

    public class NeedyClass : IDisposable
    {
        private readonly IDependency dependency;

        public NeedyClass(IDependency dependency)
        {
            if (dependency == null)
                throw new ArgumentNullException("dependency");

            this.dependency = dependency;
            this.dependency.ItHappened += this.OnItHappened;
        }

        private void OnItHappened(object sender, EventArgs e)
        {
            // Handle event here
        }

        public void Dispose()
        {
            this.dependency.ItHappened -= this.OnItHappened;
        }
    }

While this weakens the argument that NeedyClass is requesting the wrong kind of dependency, this is a lot of work just to consume an event. Furthermore, it isn't particularly safe, because you'll have to remember to dispose of all of your NeedyClass instances.

Thus, a better approach is still warranted.

Comments

Or if you want to be explicit about the dependencies, then go for something like NeedyClass : IHandle<ItHappened>. Nevertheless, dependency on ItHappened within NeedyClass would still easily be found with static analysis. A few lines of CQLinq with NDepend.

Dependency Injection and events

Overview of articles about Dependency Injection and events.

One of my readers, Kenny Pflug, asks me about subscribing to events in the constructor of a class using Constructor Injection:

I want to register to a plain old .NET event of a dependency that is supplied via constructor injection. I read your book "DI in .NET" and in it you mention that injection constructors should be simple and not perform any other work than guarding for null values and assigning the dependencies to (read-only) fields. I also read on your blog that this is only a pattern and might be broken gently if the need would arise.

[...] do you know and prefer any patterns to solve this "registering to events of dependencies in a constructor" problem?

In short, I think that it's a code smell if a design requires you to subscribe to an event in the constructor; it's a smell that the dependency chain is slightly inverted. Inversion of Inversion of Control - how about that?

In the next series of posts, I'll attempt to provide various perspectives on this situation, starting with the original context.

- DI and events: Constructor Subscription.

- DI and events: Third-party Connect.

- DI and events: Reactive Extensions.

- DI and events: Composition.

In summary, it's probably not going to be a big problem if you subscribe to an event in a constructor, but it's a code smell, because it betrays a design issue.

Running a pure F# Web API on Azure Web Sites

This post explains how to make a pure F# implementation of an ASP.NET Web API run in an Azure Web Site.

In my previous post, I explained how to create an ASP.NET Web API project entirely in F#, without relying on a C# or VB host project. At the end of that article, I had a service which can be launched from Visual Studio 2012 and run in IIS Express, but it didn't run in Azure Web Sites. In this post, I'll explain how to make this possible.

Deploying to Azure #

The first problem to solve is how to even deploy the Web Project to Azure in the first place. Because the project is a 'real' Visual Studio Web Project, it's possible to right-click on the project and select "Publish..." This brings up the dialog for publishing a Web Project to Azure, so that seems promising.

However, actually attempting to do so soon produces this error message:

Exception in executing publishing : The method or operation is not implemented.

Apparently, someone in Microsoft chose to violate the Liskov Substitution Principle...

Another, and, as it turns out, ultimately more productive, deployment option is to deploy via Git. Fortunately, I already kept a Git repository for the code, in order to make it easier for me to back out if my experiments took me in the wrong direction (which did happen a couple of times). Thus, I created the Web Site on the Azure portal, and configured it with a Git repository to which I can push.

This should enable me to simply go

$ git push azure master

in order to deploy to my new Azure Web Site. Unfortunately, it didn't quite work:

    $ git push azure master
    Counting objects: 113, done.
    Delta compression using up to 4 threads.
    Compressing objects: 100% (101/101), done.
    Writing objects: 100% (113/113), 1.41 MiB | 22.00 KiB/s, done.
    Total 113 (delta 34), reused 0 (delta 0)
    remote: Updating branch 'master'.
    remote: Updating submodules.
    remote: Preparing deployment for commit id 'dd501baeaa'.
    remote: Generating deployment script.
    remote: .
    remote: info: Executing command site deploymentscript
    remote: info: Project file path: .\FebApi\FebApi.fsproj
    remote: info: Solution file path: .\FebApi.sln
    remote: info: Generating deployment script for .NET Web Application
    remote: info: Generated deployment script files
    remote: info: site deploymentscript command OK
    remote: Running deployment command...
    remote: Handling .NET Web Application deployment.
    remote: .....
    remote: FebApi -> C:\DWASFiles\Sites\FebApi\VirtualDirectory0\site\repository\FebApi\bin\Release\FebApi.dll
    remote: C:\DWASFiles\Sites\FebApi\VirtualDirectory0\site\repository\FebApi\FebApi.fsproj : error MSB4057: The target "pipelinePreDeployCopyAllFilesToOneFolder" does not exist in the project.
    remote: An error has occurred during web site deployment.
    remote:
    remote: Error - Changes committed to remote repository but your website not updated.
    To https://******@febapi.scm.azurewebsites.net:443/FebApi.git
     * [new branch] master -> master

More work apparently remained.

Import MSBuild projects #

As you can tell from the error message, the target "pipelinePreDeployCopyAllFilesToOneFolder" does not exist in the project. Knowing that Visual Studio project files are actually MSBuild files with another extension, this sounds like an MSBuild issue. To figure out what to do, I opened a C# Web Project and began looking for various Import elements.

After copying a couple of Import elements from a C# Web Project, and a bit of experimentation, I ended up with this in my .fsproj file:

    <Import Project="$(VSToolsPath)\WebApplications\Microsoft.WebApplication.targets" Condition="'$(VSToolsPath)' != ''" />
    <Import Project="$(MSBuildExtensionsPath32)\Microsoft\VisualStudio\v11.0\WebApplications\Microsoft.WebApplication.targets" Condition="true" />

My .fsproj file already had an existing Import element, so I added these two just below the existing element. I haven't experimented with removing one of these elements, so it may be possible to simplify this, or somehow make it more robust. What mattered to me was that this enabled me to move on:

    $ git push azure master
    Counting objects: 7, done.
    Delta compression using up to 4 threads.
    Compressing objects: 100% (4/4), done.
    Writing objects: 100% (4/4), 404 bytes | 0 bytes/s, done.
    Total 4 (delta 3), reused 0 (delta 0)
    remote: Updating branch 'master'.
    remote: Updating submodules.
    remote: Preparing deployment for commit id 'd3625cfef0'.
    remote: Generating deployment script.
    remote: Running deployment command...
    remote: Handling .NET Web Application deployment.
    remote: ....
    remote: FebApi -> C:\DWASFiles\Sites\FebApi\VirtualDirectory0\site\repository\FebApi\bin\Release\FebApi.dll
    remote: Copying all files to temporary location below for package/publish:
    remote: C:\DWASFiles\Sites\FebApi\Temp\7a1a548e-d5fe-48d6-94ec-99146f20676a.
    remote: KuduSync.NET from: 'C:\DWASFiles\Sites\FebApi\Temp\7a1a548e-d5fe-48d6-94ec-99146f20676a' to: 'C:\DWASFiles\Sites\FebApi\VirtualDirectory0\site\wwwroot'
    remote: Deleting file: 'hostingstart.html'
    remote: Copying file: 'bin\FebApi.dll'
    remote: Copying file: 'bin\FebApi.XML'
    remote: Copying file: 'bin\FSharp.Core.dll'
    remote: Copying file: 'bin\Microsoft.Web.Infrastructure.dll'
    remote: Copying file: 'bin\Newtonsoft.Json.dll'
    remote: Copying file: 'bin\System.Net.Http.Formatting.dll'
    remote: Copying file: 'bin\System.Web.Http.dll'
    remote: Copying file: 'bin\System.Web.Http.WebHost.dll'
    remote: Finished successfully.
    remote: Deployment successful.
    To https://ploeh@febapi.scm.azurewebsites.net:443/FebApi.git
       658e859..d3625cf master -> master

Alas, while deployment succeeded, I wasn't out of the woods yet.

Build actions #

Browsing to the (successfully deployed) site gave me this (rather disappointing) message:

You do not have permission to view this directory or page.

After digging around for a while, I discovered that neither Global.asax nor web.config was deployed to the actual site. The way to resolve that is to change the Build Action for these files to Content.

This was the last hurdle. Pushing those changes to the remote Git repository updated the API, which now works! For the time being, you can see it running here, although I will not promise to keep it around forever.

How to create a pure F# ASP.NET Web API project

How to create a single F# project for an ASP.NET Web API, without using an auxiliary C# or VB host project.

Implementing an ASP.NET Web API service in F# is a relatively well-described process, but everywhere I look, it seems that people are relying on a (Humble) C# Web Project to act as a host for a library written in F#. You can use an online Visual Studio template, or you can do this by hand - it's not particularly difficult. Where's the challenge in that?

Partly in a quest to demonstrate that F# is a general-purpose language, and partly just to see if it can be done, I wanted to create a pure F# ASP.NET Web API project, without relying on a C# host project. Most people on Twitter advised against it because there's no F# support for Razor, *.aspx, or *.cshtml. However, that's not a concern for a Web API, since it's not going to generate HTML anyway.

As @dotnetnerd puts it:

"not trivial but doable"

That turns out to be quite an accurate description. Here's how.

Create an F# library project #

The first thing I did was to create a normal F# library project.

As you can see, there's nothing in that project yet.

Turn the project into a Web Project #

In contrast to C# and VB, there's no built-in F# Web Project template. However, in order to work with a web project in Visual Studio 2012, it helps if the project is a 'real' Web Project, because it means that you can launch it in IIS Express, and such things.

Because there's no built-in Web Project template for F#, I'm not aware of a nice way to do this through the Visual Studio UI, but you can open your .fsproj file in a text editor and hand-edit it. In order to turn my project into a Web Project, I added this line of code to my .fsproj file (just below the Project/PropertyGroup/ProjectGuid element):

<ProjectTypeGuids>{349c5851-65df-11da-9384-00065b846f21};{F2A71F9B-5D33-465A-A702-920D77279786}</ProjectTypeGuids>

The 349c5851-65df-11da-9384-00065b846f21 GUID tells Visual Studio that this is a Web Project, while F2A71F9B-5D33-465A-A702-920D77279786 indicates an F# project.

This isn't entirely without drawbacks (that I'll get to in a minute), but you can now reload the project in Visual Studio, and you actually have an F# Web Project.

Turn on IIS Express #

One of the things you can do with a Web Project is to right-click in Solution Explorer and select "Use IIS Express...", so I did that.

Add Web API dependencies #

In order to use the ASP.NET Web API, you'll need to add the appropriate dependencies. I did that by adding the Microsoft.AspNet.WebApi.WebHost NuGet package.

Add a code file #

One of the disadvantages of creating an F# Web Project is that it's an unknown combination, so you can't add anything to the project:

In order to work around this problem, I again hand-edited the .fsproj file, temporarily commenting out the ProjectTypeGuids element I previously added. Then I added an F# code file, and finally added the ProjectTypeGuids element again.

Change the output folder #

The default output folder for the debug build is "bin\Debug". Although I'm not sure whether this is strictly necessary, I changed it to "bin" because that's how C# Web Projects work...

Reference System.Web #

In order to be able to derive a Global class from System.Web.HttpApplication, I added a reference to System.Web. This is a BCL library, so I added it by using the old-fashioned Add reference menu item (instead of using NuGet).

Add Global.asax #

Next, I added a Global.asax file with this content:

<%@ Application Inherits="Ploeh.Samples.FebApi.Global" %>

The class Ploeh.Samples.FebApi.Global doesn't exist yet, so if you try to run the site at this stage, it's going to fail.

Add a Global class #

In order to add the Ploeh.Samples.FebApi.Global class, I wrote the first F# code in the project:

    namespace Ploeh.Samples.FebApi

    open System

    type Global() =
        inherit System.Web.HttpApplication()
        member this.Application_Start (sender : obj) (e : EventArgs) =
            ()

The site still can't run, but at least now it doesn't complain about a missing Global class.

Add a Route #

The next step was to add a default Web API route:

    namespace Ploeh.Samples.FebApi

    open System
    open System.Web.Http

    type HttpRouteDefaults = { Controller : string; Id : obj }

    type Global() =
        inherit System.Web.HttpApplication()
        member this.Application_Start (sender : obj) (e : EventArgs) =
            GlobalConfiguration.Configuration.Routes.MapHttpRoute(
                "DefaultAPI",
                "{controller}/{id}",
                { Controller = "Home"; Id = RouteParameter.Optional }) |> ignore

At this point, you actually have an ASP.NET Web API site, because now, when you attempt to run the site, you get this error message:

No HTTP resource was found that matches the request URI 'http://localhost:64176/'. No type was found that matches the controller named 'Home'.

Which is great, because this is known territory.

Add HomeController #

Adding the missing HomeController class is easy:

    type HomeController() =
        inherit ApiController()

Of course, it doesn't do anything yet, so when you browse to the API, you get this (entirely expected) error message:

The requested resource does not support http method 'GET'.

This problem is easy to solve:

    type HomeRendition() =
        [<DefaultValue>] val mutable Message : string
        [<DefaultValue>] val mutable Time : string

    type HomeController() =
        inherit ApiController()
        member this.Get() =
            this.Request.CreateResponse(
                HttpStatusCode.OK,
                HomeRendition(
                    Message = "Hello from F#",
                    Time = DateTimeOffset.Now.ToString("o")))

Now, when browsing to the API, you get something like this:

    <HomeRendition xmlns="http://schemas.datacontract.org/2004/07/Ploeh.Samples.FebApi">
      <Message>Hello from F#</Message>
      <Time>2013-08-23T10:26:40.8490741+02:00</Time>
    </HomeRendition>

Success, of a sort!

This Web API now runs in IIS Express on my local machine. However, at this point, it still doesn't run in an Azure Web Site (which is something I also wanted to enable), but I'll cover how to do that in a future post.

Update 2013.19.12: It turns out that it's possible to hack the registry to make it possible to add standard F# project items to the project.

Update 2014.02.17: Since I wrote this article, new F# templates are now available for Visual Studio, including a template for F# ASP.NET MVC 5 and Web API 2 projects.

Comments

This is so awesome!

One note: For adding a new file, I simply used Ctrl+N (i.e. File>New) to create a file in the Miscellaneous Files meta solution folder. I saved the file in the physical project folder, then in Solution Explorer I dragged its icon from the Miscellaneous Files folder onto the F# Project node. Voila! New code file. Not as easy as it should be, but easier than unloading the project to edit the fsproj file.

Mike, that's a great tip! Thank you.

Another option is to create the file in the file system (with Windows Explorer, or your preferred shell), and then add the existing file to the project.

Checking math homework with F#

Teaching my daughter F# while checking her math homework.

My daughter Linea is 10 years old, and I've been looking for ways to make programming relevant to her. Last year, I discovered that her math homework was beginning to include simple functions, although they weren't called that. Instead, they were verbal assignments like: "for each of the following numbers, multiply the number by 3, and subtract 2." Still, it got me thinking whether Functional Programming would be a relevant introduction to programming.

During vacations, we've been doing a bit of F# programming, and I actually got so far as to help her implement FizzBuzz in F#. Still, I was struggling to come up with a continuing set of small problems that would enable us to progress.

Until yesterday, that is. Linea actually likes doing her math homework, but she always wants me to check it for her. This is something I already spend some time on, but suddenly I realized that we could do it together - in F#! She would have to check her results by typing in each question, and learn F# as we go along.

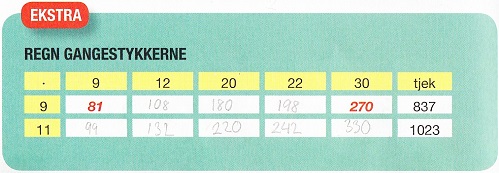

This makes F# relevant to her, and being the lazy programmer that I am, I'm at the same time trying to teach her a few shortcuts we can take here and there. Here's a scan of a small part of her homework:

The Danish text basically says: multiply the numbers. As you can tell, Linea first did her homework the old-fashioned way, filling the numbers into the table. Then we sat down to check her work with F#. Here's a copy of the part of tonight's F# Interactive (FSI, a REPL for F#) session related to that table:

    > let m9 x = 9 * x;;
    val m9 : x:int -> int
    > m9 12;;
    val it : int = 108
    > m9 20;;
    val it : int = 180
    > m9 22;;
    val it : int = 198
    > let m11 x = 11 * x;;
    val m11 : x:int -> int
    > let input = [9;12;20;22;30];;
    val input : int list = [9; 12; 20; 22; 30]
    > Seq.map m11 input;;
    val it : seq<int> = seq [99; 132; 220; 242; ...]
    > List.map m11 input;;
    val it : int list = [99; 132; 220; 242; 330]
    > input |> List.map m11;;
    val it : int list = [99; 132; 220; 242; 330]
    > input |> List.map m11 |> List.sum;;
    val it : int = 1023
    > List.map m9 input;;
    val it : int list = [81; 108; 180; 198; 270]
    > input |> List.map m9;;
    val it : int list = [81; 108; 180; 198; 270]
    > input |> List.map m9 |> List.sum;;
    val it : int = 837
    >

As you can tell, Linea first created a function (m9) in order to multiply a number by 9. This is the function form I taught her back when we were doing FizzBuzz. You could write it more succinctly as let m9 = (*) 9, but I didn't want to confuse her :)

She evaluated the first row in the table using the m9 function one cell at a time, but when it came to the next row, I decided to teach her about List.map. First, I had her create a list (input) of all the column head numbers from the table. Then I taught her to invoke the map function with the input and her m11 function. As you can see, first I had her use the imperative function call style she already knew, and then I had her use the pipe operator. That gave us a list with all the results for the last row of the table, and we could now compare the list with her homework to confirm that her results were correct.

If you look at the table, you'll see that the last column is called tjek (check), and contains a pre-populated sum the pupil can use to check whether her homework is correct. I wanted Linea to use F# to calculate the sum to confirm that everything indeed adds up correctly, so I introduced her to List.sum, and how she could further pipe her result list into the sum function, to get the sum. As you can see from the FSI session, she did that for both rows.

Obviously, I helped her here and there, but she picked up some of the concepts quite easily, and was altogether happy about our session. She felt that she learned a bit of F#, and she could relate to the work we did because she likes math already.

My purpose in posting this was to share the idea of using F# (or another programming language) to teach kids programming. While you could use other languages, I find F# a good fit because its syntax is close to the math syntax Linea learns in school, and she doesn't have to relate to a lot of curly brackets and parentheses. Additionally, the FSI makes this kind of ad hoc work easily approachable.

LINQ versus the LSP

This post examines the conflict between Iterators, LINQ, and the Liskov Substitution Principle.

As a reaction to my previous post on Defensive Coding, one of my readers sent me this question:

"[One] very prominent scenario of defensive coding is used when the input argument is IEnumerable<T>

    public class Publisher
    {
        public Publisher(IEnumerable<Subscriber> subscribers)
        {
            // defensive copy -> good or bad?
            this.subscribers = subscribers.ToArray();
        }

        // …
    }

"You could argue: when the intention is to use IEnumerable<T>, the receiver shall not to believe this sequence is immutable. You could indicate an immutable sequence with ICollection for instance. In the example above, the caller might add a new subscriber silently to its own list and have it automatically injected into the 'publisher' (maybe that's the intention from the perspective of the caller). However, a defensive copy breaks this behavior because the injected sequence is now no longer under control of the caller. This shows how simple an implementation detail can change the behavior on the client.

"The reason you often see code like this is because we like immutable objects and second because of the unknown performance impact of IEnumerable. Once you take a copy of the input you can predict the performance of your class otherwise you can't.

"I tend to say it's 'bad' to take defensive copy (after reading many of your blog posts), but would be happy to hear your opinion on that."

This question warrants an in-depth answer.

Encapsulation #

IEnumerable<T> is one of the most misunderstood interfaces in .NET. It carries very few guarantees, and many method calls on it may actually break the Liskov Substitution Principle (LSP). ToArray is one of them, because it assumes that the sequence produced by the Iterator is finite, which it may not be. Thus, if you invoke ToArray on an infinite Iterator, you will eventually get an exception.

It doesn't really matter whether you call ToArray in the constructor, or in the class method(s) where you use the injected IEnumerable<T>. However, from a Fail First perspective, and in order to protect the class' invariant, if the class requires the sequence to be finite, you could argue that you should invoke ToArray (or ToList) in the constructor. However, this breaks Nikola Malovic's 4th law of IoC: constructors should do no work. This should make you stop and ponder: if you require an array, you should state that requirement up front:

    public class Publisher
    {
        public Publisher(Subscriber[] subscribers)
        {
            this.subscribers = subscribers;
        }

        // …
    }

Notice that instead of requesting IEnumerable<T>, this version requests an array and simply assigns the array to a private field.

However, the problem is that an array isn't quite the same as an Iterator; the most profound difference is that the Publisher class is actually able to mutate the array by replacing elements within the array. This could be a problem if the array is shared with other clients.
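To make the sharing problem concrete, here is a minimal sketch. The Subscriber stub, its Name property, and the ReplaceFirst method are invented for illustration; they are not part of the Publisher shown above.

```csharp
using System;

// Hypothetical stub; the article doesn't define Subscriber.
public class Subscriber
{
    public Subscriber(string name) { Name = name; }
    public string Name { get; }
}

public class Publisher
{
    private readonly Subscriber[] subscribers;

    public Publisher(Subscriber[] subscribers)
    {
        this.subscribers = subscribers;
    }

    // Hypothetical member: nothing in the type system prevents the
    // Publisher from replacing elements in the array it was given.
    public void ReplaceFirst(Subscriber replacement)
    {
        this.subscribers[0] = replacement;
    }
}

public static class SharedArrayDemo
{
    public static string Run()
    {
        var shared = new[] { new Subscriber("first"), new Subscriber("second") };
        var publisher = new Publisher(shared);

        publisher.ReplaceFirst(new Subscriber("replaced"));

        // The caller's own array now holds the replacement.
        return shared[0].Name;
    }

    public static void Main()
    {
        Console.WriteLine(Run()); // prints "replaced"
    }
}
```

Any other client holding a reference to the same array would observe the change as well.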

Another problem is that if the Publisher doesn't need the ability to mutate the array, it now breaks the Robustness Principle, because a finite Iterator would have been good enough for its needs, but still, it puts an unwarranted demand on its clients.

Asking for ICollection<T>, as my reader suggests, is an even greater violation of the Robustness Principle, because that interface adds seven new members on top of IEnumerable<T> (five methods and two properties) - three of which are only about mutation. The rest of the members have been made redundant by LINQ.

LINQ and the LSP #

In a previous post, I've talked about the conflict between IQueryable and the LSP, but even constraining the discussion to LINQ to Objects, it turns out that LINQ has lots of built-in LSP violations.

Recall the essence of the LSP: you should be able to pass any implementation of an interface to a client without changing the correctness of the system. While 'correctness' is application-specific, the lowest common denominator must be that if a method works for one implementation, it mustn't throw exceptions for another implementation. However, consider these two implementations of IEnumerable<string>:

new[] { "foo", "bar", "baz" };

and this one:

    public class InfiniteStrings : IEnumerable<string>
    {
        public IEnumerator<string> GetEnumerator()
        {
            while (true)
                yield return "foo";
        }

        System.Collections.IEnumerator System.Collections.IEnumerable.GetEnumerator()
        {
            return this.GetEnumerator();
        }
    }

As I've already discussed, invoking ToArray (or ToList) on these two implementations changes the correctness of the system, because the second implementation (the infinite Iterator) will cause an exception to be thrown. In fact, as far as I can tell, the only LSP-compliant LINQ methods are:

- Any()

- AsEnumerable()

- Concat(IEnumerable<T>)

- DefaultIfEmpty()

- DefaultIfEmpty(T)

- Distinct (maybe...)

- Distinct(IEqualityComparer<T>) (maybe...)

- ElementAt(int)

- ElementAtOrDefault(int)

- First()

- FirstOrDefault()

- OfType<TResult>()

- Select(Func<TSource, TResult>)

- Select(Func<TSource, int, TResult>)

- SelectMany(Func<TSource, IEnumerable<TResult>>)

- SelectMany(Func<TSource, int, IEnumerable<TResult>>)

- SelectMany(Func<TSource, IEnumerable<TCollection>>, Func<TSource, TCollection, TResult>)

- SelectMany(Func<TSource, int, IEnumerable<TCollection>>, Func<TSource, TCollection, TResult>)

- Single()

- SingleOrDefault()

- Skip(int)

- Take(int)

- Where(Func<TSource, bool>)

- Where(Func<TSource, int, bool>)

- Zip(IEnumerable<TSecond>, Func<TFirst, TSecond, TResult>)

This actually demonstrates a need for a 'Finite Iterator' interface, and I have to admit that, before researching this article, I'd never heard about IReadOnlyCollection<T>, but there it is; it seems to be a new interface in .NET 4.5. I think I'll begin to use this interface some more!
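As a rough sketch of what that could look like for the Publisher class from the reader's question: the empty Subscriber stub and the SubscriberCount property below are assumptions made purely for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stub; the article doesn't define Subscriber.
public class Subscriber { }

public class Publisher
{
    private readonly IReadOnlyCollection<Subscriber> subscribers;

    // The signature states the requirement up front: a finite sequence
    // with a known Count, and no mutating members.
    public Publisher(IReadOnlyCollection<Subscriber> subscribers)
    {
        if (subscribers == null)
            throw new ArgumentNullException(nameof(subscribers));

        this.subscribers = subscribers;
    }

    // Hypothetical member, only here to show that Count is available.
    public int SubscriberCount
    {
        get { return this.subscribers.Count; }
    }
}

public static class ReadOnlyCollectionDemo
{
    public static void Main()
    {
        // On .NET 4.5 and later, both arrays and List<T> implement
        // IReadOnlyCollection<T>, so callers need no adapter.
        var fromArray = new Publisher(new[] { new Subscriber(), new Subscriber() });
        var fromList = new Publisher(new List<Subscriber> { new Subscriber() });

        Console.WriteLine(fromArray.SubscriberCount);
        Console.WriteLine(fromList.SubscriberCount);
    }
}
```

An infinite Iterator such as InfiniteStrings can't be passed to this constructor without first being copied into a finite collection, so the compiler enforces the requirement. Note that the interface only promises a read-only view: a caller that keeps a reference to the underlying List<T> can still change it.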

Summary #

The bottom line is that defensive copying of IEnumerable<T> into arrays should be avoided. If you can get by with the LSP-compliant subset of LINQ, then all is good (but consider writing a couple of unit tests that involve infinite Iterators). If you require a finite sequence and you're on .NET 4.5, require IReadOnlyCollection<T> as an argument instead of IEnumerable<T>. If you require a finite sequence and you're not on .NET 4.5, ask for an array as an argument (and consider adding some unit tests that verify that your implementation doesn't mutate the array).
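A check that involves an infinite Iterator could be sketched like this. The Shouter class is a made-up system under test, and the check is written as a plain console program rather than against any particular unit testing framework.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Linq;

public class InfiniteStrings : IEnumerable<string>
{
    public IEnumerator<string> GetEnumerator()
    {
        while (true)
            yield return "foo";
    }

    IEnumerator IEnumerable.GetEnumerator()
    {
        return this.GetEnumerator();
    }
}

// Hypothetical system under test: it only uses Select and Take, both of
// which are lazy and appear on the list of LSP-compliant methods.
public static class Shouter
{
    public static IEnumerable<string> FirstThreeShouted(IEnumerable<string> source)
    {
        return source.Select(s => s.ToUpperInvariant()).Take(3);
    }
}

public static class InfiniteIteratorCheck
{
    public static void Main()
    {
        var actual = Shouter.FirstThreeShouted(new InfiniteStrings());

        // ToArray is safe here only because Take(3) has already bounded
        // the sequence; on the raw InfiniteStrings it would never finish.
        var materialized = actual.ToArray();

        if (!materialized.SequenceEqual(new[] { "FOO", "FOO", "FOO" }))
            throw new Exception("Unexpected result.");

        Console.WriteLine(string.Join(",", materialized)); // FOO,FOO,FOO
    }
}
```

If FirstThreeShouted were changed to call ToArray or Count on its input before Take, this check would hang or run out of memory, which is exactly the kind of regression such a test is meant to reveal.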

Defensive coding

This post examines the advantages and disadvantages of defensive coding.

One of my readers, Barry Giles, recently wrote me and asked a question that I think is interesting enough to warrant a discussion:

"Recently I came up against an interesting situation at work: I was getting a review and I had put defensive checks in – one for a null argument check against a constructor parameter, one for a null check on a property return value. I also had some assertions for private methods which I tend to use to state my assumptions.

"It seems the prevailing mood in my team is to not have any of the checks and just let it fail. To be honest I’m struggling with this concept as I am so used to developing in this way and thought it was good practice. I’m pretty sure it’s in most tutorials and blog posts.

"Can you advise on why it’s better to code defensively like this instead of just letting it fail and checking the stack trace please?"

Actually, I don't think defensive coding necessarily is better; I also don't think it's worse. As usual, there are different forces in play, so the short answer is the usual: it depends.

That answer is obviously useless unless you can answer the question: on what does it depend? In this article, I'll examine some of the forces at play. However, I'm not going to claim that this will be an exhaustive treatment of the subject.

Letting it fail #

What is the value of defensive coding, if all you're going to do is to immediately throw an exception? Wouldn't it be just as good to let the code fail? Let's look at some code.

    public class ProductDetailsController
    {
        private readonly IUserRepository userRepository;
        private readonly IProductRepository productRepository;
        private readonly ICurrencyExchange exchange;

        public ProductDetailsController(
            IUserRepository userRepository,
            IProductRepository productRepository,
            ICurrencyExchange exchange)
        {
            this.userRepository = userRepository;
            this.productRepository = productRepository;
            this.exchange = exchange;
        }

        public ProductDetailsViewModel GetProductDetails(
            string userId,
            string productId)
        {
            var user = this.userRepository.Get(userId);
            var targetCurrency = user.PreferredCurrency;
            var product = this.productRepository.Get(productId);
            var convertedPrice =
                this.exchange.Convert(product.UnitPrice, targetCurrency);

            return new ProductDetailsViewModel
            {
                Name = product.Name,
                Price = convertedPrice.ToString()
            };
        }
    }

In this simple ProductDetailsController, the GetProductDetails method produces a ProductDetailsViewModel instance by getting user and product information from injected Repositories, converting the product unit price to the user's desired currency, and returning data about the product for display purposes. For the sake of argument, let's focus only on null issues. How many things could go wrong in the GetProductDetails method? How many objects can be null?

Quite a few, it turns out. Even decoupled from its dependencies, this small piece of code can throw a NullReferenceException in at least six different ways. Imagine that you receive an error report from your production system. The stack trace points to the GetProductDetails method, and the exception is a NullReferenceException. Which of the six possible nulls caused the error?

By just letting the system fail, it's hard to answer that question. Keep in mind that this is even a demo example. Most production code I've seen has more than five to six lines of code, so looking for the cause of an error report can quickly become like looking for a needle in a haystack.

Just letting the code fail isn't particularly helpful. Obviously, if you write very short methods (a practice I highly recommend), the problem is less pronounced, but the longer your methods are, the less professional I think 'just letting it fail' sounds.

Defensive coding to the rescue? #

By adding explicit guards against null, you can throw more descriptive exception messages:

    public class ProductDetailsController
    {
        private readonly IUserRepository userRepository;
        private readonly IProductRepository productRepository;
        private readonly ICurrencyExchange exchange;

        public ProductDetailsController(
            IUserRepository userRepository,
            IProductRepository productRepository,
            ICurrencyExchange exchange)
        {
            if (userRepository == null)
                throw new ArgumentNullException("userRepository");
            if (productRepository == null)
                throw new ArgumentNullException("productRepository");
            if (exchange == null)
                throw new ArgumentNullException("exchange");

            this.userRepository = userRepository;
            this.productRepository = productRepository;
            this.exchange = exchange;
        }

        public ProductDetailsViewModel GetProductDetails(
            string userId,
            string productId)
        {
            var user = this.userRepository.Get(userId);
            if (user == null)
                throw new InvalidOperationException("User was null.");
            var targetCurrency = user.PreferredCurrency;
            var product = this.productRepository.Get(productId);
            if (product == null)
                throw new InvalidOperationException("Product was null.");
            var convertedPrice =
                this.exchange.Convert(product.UnitPrice, targetCurrency);
            if (convertedPrice == null)
                throw new InvalidOperationException("Converted price was null.");

            return new ProductDetailsViewModel
            {
                Name = product.Name,
                Price = convertedPrice.ToString()
            };
        }
    }

Is this better? From a troubleshooting perspective it is, because with this version of the code, if you get an error message from your production system, the exception message will tell you exactly which of the six null situations your program encountered. If I were in maintenance mode, I know which of the two versions I'd like to support.

However, from a readability perspective, you're much worse off. The GetProductDetails method grew from five to eleven lines of code. Defensive coding more than doubled the line count! The flow through the method drowns in guard clauses, so it's less readable now. If you're a typical programmer, you read code much more than you write it, so a practice that makes it harder to read code should trigger your code smell sense. No wonder that many programmers consider defensive coding a bad practice.

Robustness #

Is it possible to balance the forces at play here? Yes it is, but in order to understand how, you need to understand the root cause of the problem. The original code example isn't particularly complicated, but even so, there are so many ways it can fail. When it comes to null references, the cause is a language design mistake, but in general, the question is whether or not you can trust input. The return value from invoking IUserRepository.Get is (indirect) input too.

Depending on the environment in which your program is running, you may or may not be able to trust input. Consider, for a moment, the situation where your software is running in the wild. It may be a reusable library or framework. In this case, you can't trust input at all. In fact, you may want to apply the robustness principle and make sure that, not only are you going to be very defensive about input, but you're also going to be careful and nice about the output of your program. In other words, you don't want to pass null (or other evil values) to your collaborators.

The sample code presented here may pass null to its dependencies, e.g. if userId is null, or (more subtly) if user.PreferredCurrency is null. Thus, to apply the robustness principle, you'd have to add even more defensive coding:

    public class ProductDetailsController
    {
        private readonly IUserRepository userRepository;
        private readonly IProductRepository productRepository;
        private readonly ICurrencyExchange exchange;

        public ProductDetailsController(
            IUserRepository userRepository,
            IProductRepository productRepository,
            ICurrencyExchange exchange)
        {
            if (userRepository == null)
                throw new ArgumentNullException("userRepository");
            if (productRepository == null)
                throw new ArgumentNullException("productRepository");
            if (exchange == null)
                throw new ArgumentNullException("exchange");

            this.userRepository = userRepository;
            this.productRepository = productRepository;
            this.exchange = exchange;
        }

        public ProductDetailsViewModel GetProductDetails(
            string userId,
            string productId)
        {
            if (userId == null)
                throw new ArgumentNullException("userId");
            if (productId == null)
                throw new ArgumentNullException("productId");

            var user = this.userRepository.Get(userId);
            if (user == null)
                throw new InvalidOperationException("User was null.");
            if (user.PreferredCurrency == null)
                throw new InvalidOperationException("Preferred currency was null.");
            var targetCurrency = user.PreferredCurrency;
            var product = this.productRepository.Get(productId);
            if (product == null)
                throw new InvalidOperationException("Product was null.");
            if (product.Name == null)
                throw new InvalidOperationException("Product name was null.");
            if (product.UnitPrice == null)
                throw new InvalidOperationException("Unit price was null.");
            var convertedPrice =
                this.exchange.Convert(product.UnitPrice, targetCurrency);
            if (convertedPrice == null)
                throw new InvalidOperationException("Converted price was null.");

            return new ProductDetailsViewModel
            {
                Name = product.Name,
                Price = convertedPrice.ToString()
            };
        }
    }

This is clearly even more horrendous than the previous defensive programming example. Now you're not only defending yourself, but also standing up for your collaborators. Noble, but unreadable.

Still, when I write wildlife code, this is basically what I do, although I'd tend to refactor my code so that first I'd collect and check all the input, and then I'd pass on that input to another class that performs the logic.
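That refactoring might be sketched as follows. This is only one possible shape: the ProductDetailsComposer class, the Money type, and the in-memory fakes are all invented for illustration, because the article doesn't show the real types. One guard, on the return value of the currency exchange, stays next to the call that produces it.

```csharp
using System;

// Hypothetical stand-ins for types the article uses but never defines.
public class Money
{
    public Money(decimal amount, string currency) { Amount = amount; Currency = currency; }
    public decimal Amount { get; }
    public string Currency { get; }
    public override string ToString() { return Amount + " " + Currency; }
}
public class User { public string PreferredCurrency { get; set; } }
public class Product { public string Name { get; set; } public Money UnitPrice { get; set; } }
public class ProductDetailsViewModel { public string Name { get; set; } public string Price { get; set; } }
public interface IUserRepository { User Get(string userId); }
public interface IProductRepository { Product Get(string productId); }
public interface ICurrencyExchange { Money Convert(Money amount, string targetCurrency); }

// Performs the logic. It assumes that its arguments were already checked.
public class ProductDetailsComposer
{
    private readonly ICurrencyExchange exchange;

    public ProductDetailsComposer(ICurrencyExchange exchange)
    {
        if (exchange == null) throw new ArgumentNullException(nameof(exchange));
        this.exchange = exchange;
    }

    public ProductDetailsViewModel Compose(User user, Product product)
    {
        var convertedPrice = this.exchange.Convert(product.UnitPrice, user.PreferredCurrency);
        if (convertedPrice == null)
            throw new InvalidOperationException("Converted price was null.");
        return new ProductDetailsViewModel { Name = product.Name, Price = convertedPrice.ToString() };
    }
}

// Collects and checks all input up front, then delegates to the composer.
public class ProductDetailsController
{
    private readonly IUserRepository userRepository;
    private readonly IProductRepository productRepository;
    private readonly ProductDetailsComposer composer;

    public ProductDetailsController(
        IUserRepository userRepository,
        IProductRepository productRepository,
        ProductDetailsComposer composer)
    {
        if (userRepository == null) throw new ArgumentNullException(nameof(userRepository));
        if (productRepository == null) throw new ArgumentNullException(nameof(productRepository));
        if (composer == null) throw new ArgumentNullException(nameof(composer));
        this.userRepository = userRepository;
        this.productRepository = productRepository;
        this.composer = composer;
    }

    public ProductDetailsViewModel GetProductDetails(string userId, string productId)
    {
        if (userId == null) throw new ArgumentNullException(nameof(userId));
        if (productId == null) throw new ArgumentNullException(nameof(productId));

        var user = this.userRepository.Get(userId);
        if (user == null) throw new InvalidOperationException("User was null.");
        if (user.PreferredCurrency == null)
            throw new InvalidOperationException("Preferred currency was null.");

        var product = this.productRepository.Get(productId);
        if (product == null) throw new InvalidOperationException("Product was null.");
        if (product.Name == null) throw new InvalidOperationException("Product name was null.");
        if (product.UnitPrice == null) throw new InvalidOperationException("Unit price was null.");

        return this.composer.Compose(user, product);
    }
}

// Trivial in-memory fakes, only here to make the sketch runnable.
public class FakeUsers : IUserRepository
{
    public User Get(string userId) { return new User { PreferredCurrency = "DKK" }; }
}
public class FakeProducts : IProductRepository
{
    public Product Get(string productId) { return new Product { Name = "Chair", UnitPrice = new Money(100m, "EUR") }; }
}
public class FixedRateExchange : ICurrencyExchange
{
    public Money Convert(Money amount, string targetCurrency) { return new Money(amount.Amount * 7m, targetCurrency); }
}

public static class Program
{
    public static void Main()
    {
        var controller = new ProductDetailsController(
            new FakeUsers(), new FakeProducts(), new ProductDetailsComposer(new FixedRateExchange()));
        var vm = controller.GetProductDetails("user-1", "product-1");
        Console.WriteLine(vm.Name + ": " + vm.Price);
    }
}
```

The guard clauses are still there, but they are gathered in one place, so the class that carries the logic reads almost like the original, unguarded version.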

Protected enclosures #

What if your code is a zoo animal? What if your code is running in an environment, where all the collaborators are other classes also part of the same code base, written by you and your colleagues? If you can trust each other to follow some consistent rules, you could skip much of the defensive coding.

In most of the teams I work with, I always suggest that we adopt the robustness principle. In practice, that means that null is never an acceptable return value. If a class member returns null, the bug is in that class, not in the consumer. Following that team rule, the code example can be reduced to this:

    public class ProductDetailsController
    {
        private readonly IUserRepository userRepository;
        private readonly IProductRepository productRepository;
        private readonly ICurrencyExchange exchange;

        public ProductDetailsController(
            IUserRepository userRepository,
            IProductRepository productRepository,
            ICurrencyExchange exchange)
        {
            if (userRepository == null)
                throw new ArgumentNullException("userRepository");
            if (productRepository == null)
                throw new ArgumentNullException("productRepository");
            if (exchange == null)
                throw new ArgumentNullException("exchange");

            this.userRepository = userRepository;
            this.productRepository = productRepository;
            this.exchange = exchange;
        }

        public ProductDetailsViewModel GetProductDetails(
            string userId,
            string productId)
        {
            if (userId == null)
                throw new ArgumentNullException("userId");
            if (productId == null)
                throw new ArgumentNullException("productId");

            var user = this.userRepository.Get(userId);
            if (user.PreferredCurrency == null)
                throw new InvalidOperationException("Preferred currency was null.");
            var targetCurrency = user.PreferredCurrency;
            var product = this.productRepository.Get(productId);
            if (product.Name == null)
                throw new InvalidOperationException("Product name was null.");
            if (product.UnitPrice == null)
                throw new InvalidOperationException("Unit price was null.");
            var convertedPrice =
                this.exchange.Convert(product.UnitPrice, targetCurrency);

            return new ProductDetailsViewModel
            {
                Name = product.Name,
                Price = convertedPrice.ToString()
            };
        }
    }

That's better, but not quite good enough yet... but wait: readable properties are return values too, so you shouldn't have to check for those either:

    public class ProductDetailsController
    {
        private readonly IUserRepository userRepository;
        private readonly IProductRepository productRepository;
        private readonly ICurrencyExchange exchange;

        public ProductDetailsController(
            IUserRepository userRepository,
            IProductRepository productRepository,
            ICurrencyExchange exchange)
        {
            if (userRepository == null)
                throw new ArgumentNullException("userRepository");
            if (productRepository == null)
                throw new ArgumentNullException("productRepository");
            if (exchange == null)
                throw new ArgumentNullException("exchange");

            this.userRepository = userRepository;
            this.productRepository = productRepository;
            this.exchange = exchange;
        }

        public ProductDetailsViewModel GetProductDetails(
            string userId,
            string productId)
        {
            if (userId == null)
                throw new ArgumentNullException("userId");
            if (productId == null)
                throw new ArgumentNullException("productId");

            var user = this.userRepository.Get(userId);
            var targetCurrency = user.PreferredCurrency;
            var product = this.productRepository.Get(productId);
            var convertedPrice =
                this.exchange.Convert(product.UnitPrice, targetCurrency);

            return new ProductDetailsViewModel
            {
                Name = product.Name,
                Price = convertedPrice.ToString()
            };
        }
    }

This is pretty good, because now you're almost back where you started. The only differences are the Guard Clauses at the beginning of each member. When following the robustness principle, most members tend to look like that. After a while, you get used to that, and you don't really see them any longer. I consider them a sort of preamble for each member. As a code reader, you can skip the Guard Clauses and concentrate on the program flow, without any interrupting defense checks interfering with the readability of the code.

Encapsulation #

If it's an error to return null, then how does the User class, or the Product class, adhere to the robustness principle? In exactly the same way:

    public class User
    {
        private string preferredCurrency;

        public User()
        {
            this.preferredCurrency = "DKK";
        }

        public string PreferredCurrency
        {
            get { return this.preferredCurrency; }
            set
            {
                if (value == null)
                    throw new ArgumentNullException("value");

                this.preferredCurrency = value;
            }
        }
    }

Notice how the User class protects its invariants. The PreferredCurrency property can never be null. This principle is also known by another name: it's called encapsulation.
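The Product class isn't shown in this article, but a sketch along the same lines could look like this. The constructor signature and the Money type are assumptions; all that can be inferred from the earlier examples is that Product has a Name and a UnitPrice that could, in principle, be null.

```csharp
using System;

// Hypothetical price type; the article doesn't show the real one.
public class Money
{
    public Money(decimal amount, string currency)
    {
        if (currency == null) throw new ArgumentNullException(nameof(currency));
        Amount = amount;
        Currency = currency;
    }

    public decimal Amount { get; }
    public string Currency { get; }
}

public class Product
{
    private string name;
    private Money unitPrice;

    // Assumed constructor: there's no sensible default name or price,
    // so both are required and guarded on the way in.
    public Product(string name, Money unitPrice)
    {
        if (name == null) throw new ArgumentNullException(nameof(name));
        if (unitPrice == null) throw new ArgumentNullException(nameof(unitPrice));

        this.name = name;
        this.unitPrice = unitPrice;
    }

    public string Name
    {
        get { return this.name; }
        set
        {
            if (value == null) throw new ArgumentNullException(nameof(value));
            this.name = value;
        }
    }

    public Money UnitPrice
    {
        get { return this.unitPrice; }
        set
        {
            if (value == null) throw new ArgumentNullException(nameof(value));
            this.unitPrice = value;
        }
    }
}

public static class ProductDemo
{
    public static void Main()
    {
        var product = new Product("Chair", new Money(100m, "EUR"));
        try
        {
            product.Name = null; // rejected by the guard in the setter
        }
        catch (ArgumentNullException e)
        {
            Console.WriteLine("Rejected: " + e.ParamName);
        }
        Console.WriteLine(product.Name); // still "Chair"
    }
}
```

Because every path that can assign Name or UnitPrice is guarded, consumers such as ProductDetailsController can read those properties without checking them for null.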

Summary #

As always, it helps to understand the forces working on your code base. You have to be much more defensive if your code is running in the wild, than if it's running in a protected environment. Still, I generally think that it's a fallacy if you believe that you can get by with writing sloppy code; we should all be Ranger programmers.

Unstructured defensive coding hurts readability; it's just another excuse for writing Spaghetti Code. Conversely, structured defensive coding is encapsulation. I know what I prefer.

Comments

Tony, thank you for writing. That's a good observation, and you're right: if you need to make substantial transformation, filtering, aggregation, and so on, on your event streams, then it would be valuable to be able to leverage Reactive Extensions. Not being able to do that would be a loss.

Using IObserver, as I suggest here, does come with that disadvantage, so as always, it's important to weigh the advantages and disadvantages against each other. If you're doing lots of event stream processing, then it would most likely be best to go with idiomatic Reactive Extensions (and not the solution proposed in this article). If, on the other hand, you mostly need to make sure that some Command is executed once and only once (or at least once, depending on your delivery guarantees), then my proposed solution may be more appropriate.

At its heart, the choice is between pub/sub systems on one side, and point-to-point systems on the other side. If it's important that exactly one destination system receives the messages, it's a point-to-point channel in action. If, on the other hand, zero to any arbitrary number of subscribers are welcome to consume the messages, it's a pub/sub system.

I have been briefly experimenting with this idea over the last couple of days.

In my experiment I found that rather than implement the IObserver interface on my subscribers, it was easier to use Observer.Create and pass in the Action I wanted to call.

This has left me wondering whether I could dispense with the whole IObserver interface and simply pass the Action that I wanted to call into the publisher.

Spencer, thank you for writing. Indeed, delegates are equivalent to single-method interfaces. Once you realise that, functional programming begins to look more and more attractive.

Actions, with their void return type, don't seem particularly functional, but you can build up an entire architecture on that concept.

Personally, in C#, I prefer to stick with interfaces for dependency injection, since I find it more idiomatic, but other people have different opinions on that.
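The equivalence between delegates and single-method interfaces mentioned above can be sketched with a small adapter. IHandler<T> and DelegateHandler<T> are hypothetical names made up for this illustration. IObserver<T> itself declares three methods (OnNext, OnError, and OnCompleted), so the sketch uses a simpler one-method interface.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical single-method interface, used only for this illustration.
public interface IHandler<T>
{
    void Handle(T message);
}

// Adapts a delegate to the interface.
public class DelegateHandler<T> : IHandler<T>
{
    private readonly Action<T> action;

    public DelegateHandler(Action<T> action)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));
        this.action = action;
    }

    public void Handle(T message)
    {
        this.action(message);
    }
}

public static class DelegateEquivalenceDemo
{
    public static List<string> Run()
    {
        var received = new List<string>();

        // Delegate -> interface, via the adapter.
        IHandler<string> handler = new DelegateHandler<string>(received.Add);
        handler.Handle("foo");

        // Interface -> delegate, via a method group conversion.
        Action<string> action = handler.Handle;
        action("bar");

        return received;
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(",", Run())); // foo,bar
    }
}
```

Since each form converts to the other without loss, choosing between them is mostly a question of which reads more idiomatically in the code base at hand.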