ploeh blog danish software design

Testing Container Configurations

Here's a question I often get:

“Should I test my DI Container configuration?”

The motivation for asking mostly seems to be that people want to know whether or not their applications are correctly wired. That makes sense.

A related question I also often get is whether or not a particular container has a self-test feature. In this post I'll attempt to answer both questions.

Container Self-testing

Some DI Containers have a method you can invoke to make the container perform a consistency check on itself. As an example, StructureMap has the AssertConfigurationIsValid method that, according to its documentation, does “a full environment test of the configuration of [the] container.” It will “try to create every configured instance [...]”

Calling the method is really easy:

container.AssertConfigurationIsValid();

Such a self-test can often be an expensive operation (not only for StructureMap, but in general) because it's basically attempting to create an instance of each and every Service registered in the container. If the configuration is large, it's going to take some time, but it's still going to be faster than performing a manual test of the application.

Two questions remain: Is it worth invoking this method? Why don't all containers have such a method?

The quick answer is that such a method is close to worthless, which also explains why many containers don't include one.

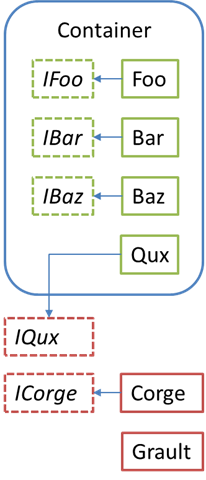

To understand the answer, consider the set of all components contained in the container in this figure:

The container contains the set of components IFoo, IBar, IBaz, Foo, Bar, Baz, and Qux so a self-test will try to create a single instance of each of these seven types. If all seven instances can be created, the test succeeds.

All this accomplishes is to verify that the configuration is internally consistent. Even so, an application could require instances of the ICorge, Corge or Grault types which are completely external to the configuration, in which case resolution would fail.

Even more subtly, resolution would also fail whenever the container is queried for an instance of IQux, since this interface isn't part of the configuration, even though it's related to the concrete Qux type which is registered in the container. A self-test only verifies that the concrete Qux class can be resolved, but it never attempts to create an instance of the IQux interface.

In short, the fact that a container's configuration is internally consistent doesn't guarantee that all services required by an application can be served.
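The gap between internal consistency and what the application needs can be sketched with a toy container. This is a minimal illustration, not StructureMap's API: ToyContainer, its Register and Resolve methods, and SelfTestDemo are hypothetical names invented for the sketch, and only Qux and IQux come from the figure.

```csharp
using System;
using System.Collections.Generic;

public interface IQux { }
public class Qux : IQux { }

// Hypothetical toy container, for illustration only.
public class ToyContainer
{
    private readonly Dictionary<Type, Func<object>> registrations =
        new Dictionary<Type, Func<object>>();

    public void Register<T>(Func<T> factory) where T : class
    {
        this.registrations[typeof(T)] = () => factory();
    }

    public T Resolve<T>() where T : class
    {
        Func<object> factory;
        if (!this.registrations.TryGetValue(typeof(T), out factory))
            throw new InvalidOperationException(
                typeof(T).Name + " is not registered.");
        return (T)factory();
    }

    // The self-test can only visit what has been registered.
    public void AssertConfigurationIsValid()
    {
        foreach (var factory in this.registrations.Values)
            factory();
    }
}

public static class SelfTestDemo
{
    // Returns true when the self-test passes and resolving IQux still fails.
    public static bool SelfTestPassesButIQuxFails()
    {
        var container = new ToyContainer();
        container.Register<Qux>(() => new Qux());

        // Succeeds: the only registered type, Qux, can be created.
        container.AssertConfigurationIsValid();

        try
        {
            // Fails: IQux was never registered, so the self-test never saw it.
            container.Resolve<IQux>();
            return false;
        }
        catch (InvalidOperationException)
        {
            return true;
        }
    }
}
```

In this sketch the self-test has nothing to say about IQux, because it only iterates over the registrations it already knows about.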

Still, you may think that at least a self-test can constitute an early warning system: if the self-test fails, surely it must mean that the configuration is invalid? Unfortunately, that's not true either.

If a container is being configured using Auto-registration/Convention over Configuration to scan one or more assemblies and register certain types contained within, chances are that ‘too many’ types will end up being registered - particularly if one or more of these assemblies are reusable libraries (as opposed to application-specific assemblies). Often, the number of redundant types added is negligible, but they may make the configuration internally inconsistent. However, if the inconsistency only affects the redundant types, it doesn't matter. The container will still be able to resolve everything the current application requires.

Thus, a container self-test method is worthless.

Then how can the container configuration be tested?

Explicit Testing of Container Configuration

Since a container self-test doesn't achieve the desired goal, how can we ensure that an application can be composed correctly?

One option is to write an automated integration test (not a unit test) for each service that the application requires. Still, if done manually, you run the risk of forgetting to write a test for a specific service. A better option is to come up with a convention so that you can identify all the services required by a specific application, and then write a convention-based test to verify that the container can resolve them all.
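A convention-based test of this kind might look like the following sketch. Everything in it is an assumption made for illustration: the convention (concrete classes whose names end with "Controller" are the required services), the CompositionConvention helper, the example controllers, and the resolve delegate, which stands in for whichever container the application actually uses.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical application types that follow the assumed naming convention.
public interface IOrderRepository { }
public class HomeController { }
public class OrderController
{
    public OrderController(IOrderRepository repository) { }
}

public static class CompositionConvention
{
    // Assumed convention: every concrete class whose name ends with
    // "Controller" is a service that the application requires.
    public static IEnumerable<Type> FindRequiredServices(Assembly assembly)
    {
        return assembly.GetTypes()
            .Where(t => t.IsClass && !t.IsAbstract)
            .Where(t => t.Name.EndsWith("Controller"));
    }

    // Returns the services that could not be resolved. The 'resolve'
    // delegate is a stand-in for the container's own resolve method.
    public static IList<Type> FindUnresolvable(
        IEnumerable<Type> services, Func<Type, object> resolve)
    {
        var failures = new List<Type>();
        foreach (var service in services)
        {
            try
            {
                if (resolve(service) == null)
                    failures.Add(service);
            }
            catch (Exception)
            {
                failures.Add(service);
            }
        }
        return failures;
    }
}
```

An integration test would pass the application's assembly to FindRequiredServices, hand the result to FindUnresolvable together with the real container, and assert that the returned list is empty.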

Will this guarantee that the application can be correctly composed?

No, it only guarantees that it can be composed - not that this composition is correct.

Even when a composed instance can be created for each and every service, many things may still be wrong:

- Composition is just plain wrong:

  - Decorators may be missing

  - Decorators may have been added in the wrong order

  - The wrong services are injected into consumers (this is more likely to happen when you follow the Reused Abstractions Principle, since there will be multiple concrete implementations of each interface)

- Configuration values like connection strings and such are incorrect - e.g. while a connection string is supplied to a constructor, it may not contain the correct values.

- Even if everything is correctly composed, the run-time environment may prevent the application from working. As an example, even if an injected connection string is correct, there may not be any connection to the database due to network or security misconfiguration.

In short, a Subcutaneous Test or full System Test is the only way to verify that everything is correctly wired. However, if you have good test coverage at the unit test level, a series of Smoke Tests is all that you need at the System Test level because in general you have good reason to believe that all behavior is correct. The remaining question is whether all this correct behavior can be correctly connected, and that tends to be an all-or-nothing proposition.

Conclusion

While it would be possible to write a set of convention-based integration tests to verify the configuration of a DI Container, the return on investment is too low since it doesn't remove the need for a set of Smoke Tests at the System Test level.

Factory Overload

Recently I received a question from Kelly Sommers about good ways to refactor away from Factory Overload. Basically, she's working in a code base where there's an explosion of Abstract Factories which seems to be counter-productive. In this post I'll take a look at the example problem and propose a set of alternatives.

An Abstract Factory (and its close relative Product Trader) can serve as a solution to various challenges that come up when writing loosely coupled code (chapter 6 of my book describes the most common scenarios). However, introducing an Abstract Factory may be a leaky abstraction, so don't do it blindly. For example, an Abstract Factory is rarely the best approach to address lifetime management concerns. In other words, the Abstract Factory has to make sense as a pure model element.

That's not the case in the following example.

Problem Statement

The question centers around a code base that integrates with a database closely guarded by DBA police. Apparently, every single database access must happen through a set of very fine-grained stored procedures.

For example, to update the first name of a user, a set of stored procedures exist to support this scenario, depending on the context of the current application user:

| User type | Stored procedure | Parameter name |
| --- | --- | --- |
| Admin | update_admin_firstname | adminFirstName |
| Guest | update_guest_firstname | guestFirstName |
| Regular | update_regular_firstname | regularFirstName |
| Restricted | update_restricted_firstname | restrictedFirstName |

As this table demonstrates, not only is there a stored procedure for each user context, but the parameter name differs as well. However, in this particular case it seems as though there's a pattern to the names.

If this pattern is consistent, I think the easiest way to address these variations would be to algorithmically build the strings from a couple of templates.
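As a rough sketch of that templated approach, assuming the naming pattern in the table holds for every user type: the FirstNameProcedureNames class and its method names are hypothetical, and the UserTypes members are taken from the table (with Admin first, since it is later described as the enum's default value).

```csharp
using System;

public enum UserTypes { Admin, Guest, Regular, Restricted }

// Hypothetical helper that derives both names from the user type.
public static class FirstNameProcedureNames
{
    // Template: update_<usertype>_firstname
    public static string StoredProcedureFor(UserTypes userType)
    {
        return string.Format(
            "update_{0}_firstname",
            userType.ToString().ToLowerInvariant());
    }

    // Template: <usertype>FirstName
    public static string ParameterFor(UserTypes userType)
    {
        return string.Format(
            "{0}FirstName",
            userType.ToString().ToLowerInvariant());
    }
}
```

With this in place, FirstNameProcedureNames.StoredProcedureFor(UserTypes.Guest) yields update_guest_firstname and ParameterFor(UserTypes.Guest) yields guestFirstName, matching the table.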

However, this is not the route taken by Kelly's team, so I assume that things are more complicated than that; apparently, a templated approach is not viable, so for the rest of this article I'm going to assume that it's necessary to write at least some code to address each case individually.

The current solution that Kelly's team has implemented is to use an Abstract Factory (Product Trader) to translate the user type into an appropriate IUserFirstNameModifier instance. From the consuming code, it looks like this:

    var modifier = factory.Create(UserTypes.Admin);
    modifier.Commit("first");

where the factory variable is an instance of the IUserFirstNameModifierFactory interface. This is certainly loosely coupled, but looks like a leaky abstraction. Why is a factory needed? It seems that its single responsibility is to translate a UserTypes instance (an enum) into an IUserFirstNameModifier. There's a code smell buried here - try to spot it before you read on :)

Proposed Solution

Kelly herself suggests an alternative involving a concrete Builder which can create instances of a single concrete UserFirstNameModifier with or without an implicit conversion:

    // Implicit conversion.
    UserFirstNameModifier modifier1 =
        builder.WithUserType(UserTypes.Guest);
    // Without implicit conversion.
    var modifier2 = builder
        .WithUserType(UserTypes.Restricted)
        .Create();

While this may seem to reduce the number of classes involved, it has several drawbacks:

- First of all, the Fluent Builder pattern implies that you can forgo invoking any of the WithXyz methods (WithUserType) and just accept all the default values encapsulated in the builder. This again implies that there's a default user type, which may or may not make sense in that particular domain. Looking at Kelly's code, UserTypes is an enum (and thus has a default value), so if WithUserType isn't invoked, the Create method defaults to UserTypes.Admin. That's a bit too implicit for my taste.

- Since all involved classes are now concrete, the proposed solution isn't extensible (and, as a corollary, hard to unit test).

- The builder is essentially a big switch statement.

Both the current implementation and the proposed solution involve passing an enum as a method parameter to a different class. If you've read and memorized Refactoring you should by now have recognized both a code smell and the remedy.

Alternative 1a: Make UserType Polymorphic

The code smell is Feature Envy and a possible refactoring is to replace the enum with a Strategy. In order to do that, an IUserType interface is introduced:

    public interface IUserType
    {
        IUserFirstNameModifier CreateUserFirstNameModifier();
    }

Usage becomes as simple as this:

var modifier = userType.CreateUserFirstNameModifier();

Obviously, more methods can be added to IUserType to support other update operations, but care should be taken to avoid creating a Header Interface.

While this solution is much more object-oriented, I'm still not quite happy with it, because apparently, the context is a CQRS style architecture. Since an update operation is essentially a Command, then why model the implementation along the lines of a Query? Both Abstract Factory and Factory Method patterns represent Queries, so it seems redundant in this case. It should be possible to apply the Hollywood Principle here.

Alternative 1b: Tell, Don't Ask

Why have the user type return a modifier? Why can't it perform the update itself? The IUserType interface should be changed to something like this:

    public interface IUserType
    {
        void CommitUserFirstName(string firstName);
    }

This makes it easier for the consumer to commit the user's first name because it can be done directly on the IUserType instance instead of first creating the modifier.

It also makes it much easier to unit test the consumer because there's no longer a mix of Command and Queries within the same method. From Growing Object-Oriented Software we know that Queries should be modeled with Stubs and Commands with Mocks, and if you've ever tried mixing the two you know that it's a sort of interaction that should be minimized.
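To make the testing argument concrete, here is a small sketch of a consumer that only issues a Command, together with a hand-rolled test double that records it. ProfileEditor, its Rename method, and SpyUserType are hypothetical names; only the IUserType interface, with a single void method for committing the first name, follows the text above.

```csharp
using System;
using System.Collections.Generic;

public interface IUserType
{
    void CommitUserFirstName(string firstName);
}

// Hypothetical consumer: it tells the user type what to do and asks nothing.
public class ProfileEditor
{
    private readonly IUserType userType;

    public ProfileEditor(IUserType userType)
    {
        if (userType == null)
            throw new ArgumentNullException("userType");
        this.userType = userType;
    }

    public void Rename(string firstName)
    {
        this.userType.CommitUserFirstName(firstName);
    }
}

// Hand-rolled test double that records every Command it receives.
public class SpyUserType : IUserType
{
    private readonly List<string> committed = new List<string>();

    public IList<string> Committed
    {
        get { return this.committed; }
    }

    public void CommitUserFirstName(string firstName)
    {
        this.committed.Add(firstName);
    }
}
```

A unit test can create a ProfileEditor around a SpyUserType, call Rename, and verify that exactly one first name was committed. No Stub has to be set up first, because the consumer performs no Query.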

Alternative 2a: Distinguish by Type

While I personally like alternative 1b best, it may not be practical in all situations, so it's always valuable to examine other alternatives.

The root cause of the problem is that there are a lot of stored procedures. I want to reiterate that I still think that the absolutely easiest solution would be to generate a SqlCommand from a string template, but given that this article assumes that this isn't possible or desirable, it follows that code must be written for each stored procedure.

Why not simply define an interface for each one? As an example, to update the user's first name in the context of being an ‘Admin’ user, this Role Interface can be used:

    public interface IUserFirstNameAdminModifier
    {
        void Commit(string firstName);
    }

Similar interfaces can be defined for the other user types, such as IUserFirstNameRestrictedModifier, IUserFirstNameGuestModifier and so on.

This is a very simple solution; it's easy to implement, but risks violating the Reused Abstractions Principle (RAP).

Alternative 2b: Distinguish by Generic Type

The problem with introducing interfaces like IUserFirstNameAdminModifier, IUserFirstNameRestrictedModifier, IUserFirstNameGuestModifier etc. is that they differ only by name. The Commit method is the same for all these interfaces, so this seems to violate the RAP. It'd be better to merge all these interfaces into a single interface, which is what Kelly's team is currently doing. However, the problem with this is that the type carries no information about the role that the modifier is playing.

Another alternative is to turn the modifier interface into a generic interface like this:

    public interface IUserFirstNameModifier<T> where T : IUserType
    {
        void Commit(string firstName);
    }

The IUserType is a Marker Interface, so .NET purists are not going to like this solution, since the .NET Type Design Guidelines recommend against using Marker Interfaces. However, it's impossible to constrain a generic type argument against an attribute, so the party line solution is out of the question.

This solution ensures that consumers can now have dependencies on IUserFirstNameModifier<AdminUserType>, IUserFirstNameModifier<RestrictedUserType>, etc.
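A brief sketch of how such a dependency could look from the consumer's side. AdminFirstNameModifier and AdminConsole are hypothetical names, and the LastCommitted property exists only so the sketch can be observed; a real modifier would presumably invoke the corresponding stored procedure instead.

```csharp
using System;

// Marker Interface and marker classes; they carry no members.
public interface IUserType { }
public class AdminUserType : IUserType { }
public class GuestUserType : IUserType { }

public interface IUserFirstNameModifier<T> where T : IUserType
{
    void Commit(string firstName);
}

// Hypothetical implementation for the admin role.
public class AdminFirstNameModifier : IUserFirstNameModifier<AdminUserType>
{
    public string LastCommitted { get; private set; }

    public void Commit(string firstName)
    {
        // Placeholder for the call to the admin stored procedure.
        this.LastCommitted = firstName;
    }
}

// Hypothetical consumer: the constructor signature states the required role.
public class AdminConsole
{
    private readonly IUserFirstNameModifier<AdminUserType> modifier;

    public AdminConsole(IUserFirstNameModifier<AdminUserType> modifier)
    {
        if (modifier == null)
            throw new ArgumentNullException("modifier");
        this.modifier = modifier;
    }

    public void Rename(string firstName)
    {
        this.modifier.Commit(firstName);
    }
}
```

Passing an IUserFirstNameModifier<GuestUserType> to AdminConsole would not compile, which is the point of encoding the role in the type argument.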

However, the need for a marker interface gives off another odeur.

Alternative 3: Distinguish by Role

The problem at the heart of alternative 2 is that it attempts to use the type of the interfaces as an indicator of the roles that Services play. It seems that making the type distinct works against the RAP, but when the RAP is applied, the type becomes ambiguous.

However, as Ted Neward points out in his excellent series on Multiparadigmatic .NET, the type is only one axis of variability among many. Perhaps, in this case, it may be much easier to use the name of the dependency to communicate its role instead of the type.

Given a single, ambiguous IUserFirstNameModifier interface (just as in the original problem statement), a consumer can distinguish between the various roles of modifiers by their names:

    public partial class SomeConsumer
    {
        private readonly IUserFirstNameModifier adminModifier;
        private readonly IUserFirstNameModifier guestModifier;

        public SomeConsumer(
            IUserFirstNameModifier adminModifier,
            IUserFirstNameModifier guestModifier)
        {
            this.adminModifier = adminModifier;
            this.guestModifier = guestModifier;
        }

        public void DoSomething()
        {
            if (this.UseAdmin)
                this.adminModifier.Commit("first");
            else
                this.guestModifier.Commit("first");
        }
    }

Now it's entirely up to the Composition Root to compose SomeConsumer with the correct modifiers, and while this can be done manually, it's an excellent case for a DI Container and a bit of Convention over Configuration.
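One way such a convention could work, sketched here without any particular DI Container: match each constructor parameter name against a role. The ConventionComposer class, the NamedModifier class, the "&lt;role&gt;Modifier" naming convention, and the public properties on this version of SomeConsumer are all assumptions made so the sketch is self-contained and observable.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public interface IUserFirstNameModifier
{
    void Commit(string firstName);
}

// Hypothetical modifier that remembers which role it was created for.
public class NamedModifier : IUserFirstNameModifier
{
    public NamedModifier(string role) { this.Role = role; }
    public string Role { get; private set; }
    public void Commit(string firstName) { }
}

// Simplified consumer; the properties exist only to inspect the result.
public class SomeConsumer
{
    public SomeConsumer(
        IUserFirstNameModifier adminModifier,
        IUserFirstNameModifier guestModifier)
    {
        this.AdminModifier = adminModifier;
        this.GuestModifier = guestModifier;
    }

    public IUserFirstNameModifier AdminModifier { get; private set; }
    public IUserFirstNameModifier GuestModifier { get; private set; }
}

public static class ConventionComposer
{
    private const string Suffix = "Modifier";

    // Assumed convention: a constructor parameter named "<role>Modifier"
    // receives the modifier registered under the key "<role>".
    public static T Compose<T>(
        IDictionary<string, IUserFirstNameModifier> modifiersByRole)
    {
        ConstructorInfo ctor = typeof(T).GetConstructors().Single();
        object[] args = ctor.GetParameters()
            .Select(p => p.Name.Substring(0, p.Name.Length - Suffix.Length))
            .Select(role => (object)modifiersByRole[role])
            .ToArray();
        return (T)ctor.Invoke(args);
    }
}
```

Given a dictionary with the keys "admin" and "guest", ConventionComposer.Compose&lt;SomeConsumer&gt; supplies the admin modifier to adminModifier and the guest modifier to guestModifier. Most DI Containers offer their own extensibility points for this kind of name-based convention; this reflection-based version only illustrates the idea.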

Conclusion

I'm sure that if I'd spent more time analyzing the problem I could have come up with more alternatives, but this post is becoming long enough already.

Of the alternatives I've suggested here, I prefer 1b or 3, depending on the exact requirements.

Comments

Personally I prefer 1b over 3. The if-statement in option 3 looks a bit suspicious to me as one day it might give rise to some maintenance if more user types have to be supported by the SomeConsumer type, so I am afraid it may violate the open/closed principle. Option 1b looks more straightforward to me.

sorry, way OT

I'm trying to implement a visitor pattern over a list of visitees object, constructed with a factory.

A visitee requires some external services, so, it might be reasonable to have them injected already by IOC in the _factory (VisiteeFactory). isLast is an example of other contextual information, outside of dto, but required to create other visitee types.

Given that is a bounded context, how can I improve this design?

Pseudocode follows:

    IVisiteeFactory
    {
        VisiteeAdapterBase Create(Dto dto, bool isLast);
    }

    VisiteeFactory : IVisiteeFactory
    {
        ctor VisiteeFactory(IExternalService service)

        public VisiteeAdapterBase Create(Dto dto, bool isLast)
        {
            // lot of switches and ifs to determine the type of VisiteeAdapterBase
            if (isLast && dto.Type == "1") {
                return new Type1VisiteeAdapter(... dto props...);
            }
        }
    }

    ConsumerCtor(VisiteeFactory factory, List<Dto> dtoList)
    {
        // guards
        _factory = factory;
        _dtoList = dtoList;
    }

    ConsumerDoStuff
    {
        foreach (var dto in _dtoList) {
            // isLast is additional logic, outside of dto
            var visiteeAdapter = _factory.Create(dto, isLast);
            visiteeAdapter.Accept(visitor);
        }
    }

The whole premise of the rest of the post is that for some reason, it's more complicated than that...

Polymorphic Consistency

Asynchronous message passing combined with eventual consistency makes it possible to build very scalable systems. However, sometimes eventual consistency isn't appropriate in parts of the system, while it's acceptable in other parts. How can a consistent architecture be defined to fit both ACID and eventual consistency? This article provides an answer.

The case of an online game

Last week I visited Pixel Pandemic, a company that produces browser-based MMORPGs. Since each game world has lots of players who can all potentially interact with each other, scalability is very important.

In traditional line of business applications, eventual consistency is often an excellent fit because the application is a projection of the real world. My favorite example is an inventory system: it models what's going on in one or more physical warehouses, but the real world is the ultimate source of truth. A warehouse worker might accidentally drop and damage some of the goods, in which case the application must adjust after the fact.

In other words, the information contained within line of business applications tends to lag behind the real world. It's impossible to guarantee that the application is always consistent with the real world, so eventual consistency is a natural fit.

That's not the case with an online game world. The game world itself is the source of truth, and it must be internally consistent at all times. As an example, in Zombie Pandemic, players fight against zombies and may take damage along the way. Players can heal themselves, but they would prefer (I gather) that the healing action takes place immediately, and not some time in the future where the character might be dead. Similarly, when a player hits a zombie, they'd prefer to apply the damage immediately. (However, I think that even here, eventual consistency might provide some interesting game mechanics, but that's another discussion.)

While discussing these matters with the nice people in Pixel Pandemic, it turned out that while some parts of the game world have to be internally consistent, it's perfectly acceptable to use eventual consistency in other cases. One example is the game's high score table. While a single player should have a consistent view of his or her own score, it's acceptable if the high score table lags a bit.

At this point it seemed clear that this particular online game could use an appropriate combination of ACID and eventual consistency, and I think this conclusion can be generalized. The question now becomes: how can a consistent architecture encompass both types of consistency?

Problem statement

With the above example scenario in mind the problem statement can be generalized:

Given that an application should apply a mix of ACID and eventual consistency, how can a consistent architecture be defined?

Keep in mind that ACID consistency implies that all writes to a transactional resource must take place as a blocking method call. This seems to be at odds with the concept of asynchronous message passing that works so well with eventual consistency.

However, an application architecture where blocking ACID calls are fundamentally different from asynchronous message passing isn't really an architecture at all. Developers will have to decide up-front whether or not a given operation is synchronous, so the ‘architecture’ offers little implementation guidance. The end result is likely to be a heterogeneous mix of Services, Repositories, Units of Work, Message Channels, etc. A uniform principle will be difficult to distill, and the whole thing threatens to devolve into Spaghetti Code.

The solution turns out to be not at all difficult, but it requires that we invert our thinking a bit. Most of us tend to think about synchronous code first. When we think about code performing synchronous work it seems difficult (perhaps even impossible) to retrofit asynchrony to that model. On the other hand, the converse isn't true.

Given an asynchronous API, it's trivial to provide a synchronous, blocking implementation.

Adopting an architecture based on asynchronous message passing (the Pipes and Filters architecture) enables both scenarios. Eventual consistency can be achieved by passing messages around on persistent queues, while ACID consistency can be achieved by handling a message in a blocking call that enlists a (potentially distributed) transaction.

An example seems to be in order here.

Example: keeping score

In the online game world, each player accumulates a score based on his or her actions. From the perspective of the player, the score should always be consistent. When you defeat the zombie boss, you want to see the result in your score right away. That sounds an awful lot like the Player is an Aggregate Root and the score is part of that Entity. ACID consistency is warranted whenever the Player is updated.

On the other hand, each time a score changes it may influence the high score table, but this doesn't need to be ACID consistent; eventual consistency is fine in this case.

Once again, polymorphism comes to the rescue.

Imagine that the application has a GameEngine class that handles updates in the game. Using an injected IChannel<ScoreChangedEvent>, it can update the score for a player as simply as this:

    /* Lots of other interesting things happen
     * here, like calculating the new score... */
    var cmd = new ScoreChangedEvent(this.playerId, score);
    this.pointsChannel.Send(cmd);

The Send method returns void, so it's a good example of a naturally asynchronous API. However, the implementation must do two things:

- Update the Player Aggregate Root in a transaction

- Update the high score table (eventually)

That's two different types of consistency within the same method call.

The first step to enable this is to employ the trusty old Composite design pattern:

    public class CompositeChannel<T> : IChannel<T>
    {
        private readonly IEnumerable<IChannel<T>> channels;

        public CompositeChannel(params IChannel<T>[] channels)
        {
            this.channels = channels;
        }

        public void Send(T message)
        {
            foreach (var c in this.channels)
            {
                c.Send(message);
            }
        }
    }

With a Composite channel it's possible to compose a polymorphic mix of IChannel<T> implementations, some blocking and some asynchronous.

ACID write

To update the Player Aggregate Root a simple Adapter writes the event to a persistent data store. This could be a relational database, a document database, a REST resource or something else - it doesn't really matter exactly which technology is used.

    public class PlayerStoreChannel : IChannel<ScoreChangedEvent>
    {
        private readonly IPlayerStore store;

        public PlayerStoreChannel(IPlayerStore store)
        {
            this.store = store;
        }

        public void Send(ScoreChangedEvent message)
        {
            this.store.Save(message.PlayerId, message);
        }
    }

The important thing to realize is that the IPlayerStore.Save method will be a blocking method call - perhaps wrapped in a distributed transaction. This ensures that updates to the Player Aggregate Root always leave the data store in a consistent state. Either the operation succeeds or it fails during the method call itself.

This takes care of the ACID consistent write, but the application must also update the high score table.

Asynchronous write

Since eventual consistency is acceptable for the high score table, the message can be transmitted over a persistent queue to be picked up by a background process.

A generic class can serve as an Adapter over an IQueue abstraction:

    public class QueueChannel<T> : IChannel<T>
    {
        private readonly IQueue queue;
        private readonly IMessageSerializer serializer;

        public QueueChannel(IQueue queue, IMessageSerializer serializer)
        {
            this.queue = queue;
            this.serializer = serializer;
        }

        public void Send(T message)
        {
            this.queue.Enqueue(
                this.serializer.Serialize(message));
        }
    }

Obviously, the Enqueue method is another void method. In the case of a persistent queue, it'll block while the message is being written to the queue, but that will tend to be a fast operation.

Composing polymorphic consistency

Now all the building blocks are available to compose both channel implementations into the GameEngine via the CompositeChannel. That might look like this:

    var playerConnString = ConfigurationManager
        .ConnectionStrings["player"].ConnectionString;
    var gameEngine = new GameEngine(
        new CompositeChannel<ScoreChangedEvent>(
            new PlayerStoreChannel(
                new DbPlayerStore(playerConnString)),
            new QueueChannel<ScoreChangedEvent>(
                new PersistentQueue("messageQueue"),
                new JsonSerializer())));

When the Send method is invoked on the channel, it'll first invoke a blocking call that ensures ACID consistency for the Player, followed by asynchronous message passing for eventual consistency in other parts of the application.
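One consequence of that ordering can be sketched with two test doubles: if the blocking channel throws (say, because the transaction rolled back), the composite never reaches the queue. FailingChannel and RecordingChannel are hypothetical stand-ins for the player store and the persistent queue; IChannel and CompositeChannel are repeated here only so the sketch is self-contained.

```csharp
using System;
using System.Collections.Generic;

public interface IChannel<T>
{
    void Send(T message);
}

public class CompositeChannel<T> : IChannel<T>
{
    private readonly IEnumerable<IChannel<T>> channels;

    public CompositeChannel(params IChannel<T>[] channels)
    {
        this.channels = channels;
    }

    public void Send(T message)
    {
        foreach (var c in this.channels)
        {
            c.Send(message);
        }
    }
}

// Hypothetical stand-in for a blocking, transactional write that fails.
public class FailingChannel<T> : IChannel<T>
{
    public void Send(T message)
    {
        throw new InvalidOperationException("Transaction rolled back.");
    }
}

// Hypothetical stand-in for the queue; it records what it receives.
public class RecordingChannel<T> : IChannel<T>
{
    private readonly List<T> received = new List<T>();

    public IList<T> Received
    {
        get { return this.received; }
    }

    public void Send(T message)
    {
        this.received.Add(message);
    }
}
```

Composing a FailingChannel before a RecordingChannel and calling Send propagates the exception to the caller and leaves the recording channel empty. In this sketch, at least, placing the ACID write first means that the eventually consistent parts only hear about updates that were actually committed.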

Conclusion

Even when parts of an application must be implemented in a synchronous fashion to ensure ACID consistency, an architecture based on asynchronous message passing provides a flexible foundation that enables you to polymorphically mix both kinds of consistency in a single method call. From the perspective of the application layer, this provides a consistent and uniform architecture because all mutating actions are modeled as commands and events encapsulated in messages.

Comments

We're working on ways to make as many aspects of our games as possible fit with an eventual consistency model by, e.g., simply changing the way we communicate information about the virtual game world state to players (to put them in a frame of mind in which eventual consistency fits naturally with their perception of the game state).

Looking very much forward to meeting with you again soon and discussing more details!

await this.store.Save(message.PlayerId, message);

this.queue.Enqueue(this.serializer.Serialize(message));

Keen to hear your thoughts

Jake

However, while the async CTP makes it easier to write asynchronous code, it doesn't help with blocking calls. It may be more efficient, but not necessarily faster. You can't know whether or not a transaction has committed until it actually happens, so you still need to wait for that before you decide whether or not to proceed.

BTW, F# has had async support since its inception, so it's interesting to look towards what people are doing with that. Agents (the F# word for Actors) seem to fit into that model pretty well, and as far as I can tell, an Agent is simply an in-memory asynchronous worker process.

I do have a question, though. I can see how this works for all future commands, because they will all need to load the aggregate to work on it and that will be ACID at all times. What I'm not sure about is how that translates to the query side of the coin - where the query is *not* the high-score table, but must be immediately available on-screen.

Even if hypothetical, imagine the screen has a typical Heads-Up-Display of relevant information - stuff like 'ammo', 'health' and 'current score'. These are view concerns and will go down the query arm of the CQRS implementation. For example, the view-model backing this HUD could be stored in document storage for the player. This is, then, subject to eventual consistency and is not ACID, right?

I'm clearly not 'getting' this bit of the puzzle at the moment, hopefully you can enlighten me.

TDD improves reusability

There's this meme going around that software reuse is a fallacy. Bollocks! The reuse is a fallacy meme is a fallacy :) To be fair, I'm not claiming that everything can and should be reused, but my claim is that all code produced by Test-Driven Development (TDD) is being reused. Here's why:

When tests are written first, they act as a kind of REPL. Tests tease out the API of the SUT, as well as its behavior. At this point in the development process, the tests serve as a feedback mechanism. Only later, when the tests and the SUT stabilize, will the tests be repurposed (dare I say ‘reused’?) as regression tests. In other words: over time, tests written during TDD have more than one role:

- Feedback mechanism

- Regression test

Each test plays one of these roles at a time, but not both.

While the purpose of TDD is to evolve the SUT, the process produces two types of artifacts:

- Tests

- Production code

Notice how the tests appear before the production code, which is an artifact of the test code.

The unit tests are the first client of the production API.

When the production code is composed into an application, that application becomes the second client, so it reuses the API. This is a very beneficial effect of TDD, and probably one of the main reasons why TDD, if done correctly, produces code of high quality.

A colleague once told me (when referring to scale-out) that the hardest step is to go from one server to two servers, and I've later found that principle to apply much more broadly. Generalizing from a single case to two distinct cases is often the hardest step, and it becomes much easier to generalize further from two to an arbitrary number of cases.

This explains why TDD is such an efficient process. Apart from the beneficial side effect of producing a regression test suite, it also ensures that at the time the API goes into production, it's already being shared (or reused) between at least two distinct clients. If, at a later time, it becomes necessary to add a third client, the hard part is already done.

TDD produces reusable code because the production application reuses the API which was realized by the tests.

Comments

If you are using NUnit for TDD you may find useful NUnit.Snippets NuGet package - "almost every assert is only few keystrokes away" TM ;)

http://writesoft.wordpress.com/2011/08/14/nunit-snippets/

http://nuget.org/List/Packages/NUnit.Snippets

Do you use some test coverage software?

Are there any free test coverage tools that are worth using?

I have found for example "dotcover" from jetbrains - 140 its ok for the company )

I still occasionally use code coverage tools when I work in a team environment, so I have nothing against them. When I do, I just use the coverage tool which is built into Visual Studio. When used with the TestDriven.NET Visual Studio add-in it's quite frictionless.

Independency

Now that my book about Dependency Injection is out, it's only fitting that I also invert my own dependencies by striking out as an independent consultant/advisor. In the future I'm hoping to combine my writing and speaking efforts, as well as my open source interests, with helping out clients write better code.

If you'd like to get help with Dependency Injection or Test-Driven Development, SOLID, API design, application architecture or one of the other topics I regularly cover here on my blog, I'm available as a consultant worldwide.

When it comes to Windows Azure, I'll be renewing my alliance with my penultimate employer Commentor, so you can also hire me as part of a larger deal with Commentor.

In case you are wondering what happened to my employment with AppHarbor, I resigned from my position there because I couldn't make it work with all the other things I also would like to do. I still think AppHarbor is a very interesting project, and I wish my former employers the best of luck with their endeavor.

This has been a message from the blog's sponsor (myself). Soon, regular content will resume.

Comments

Best of luck to you, I'll be reading the blog as always.

BTW - Got the physical book finally, even though I'm no newb of IoC and such, I'd have loved a solid read when I was learning about the options back in the day. ;)

Cheers!

As with all other endeavours you set your mind to, you will for sure also excel as a free agent.

Cheers,

Flemming

Regards,

One of your Fans in USA,

SOLID concrete

Greg Young gave a talk at GOTO Aarhus 2011 titled Developers have a mental disorder, which was (semi-)humorously meant, but still addressed some very real concerns about the cognitive biases of software developers as a group. While I have no intention of providing a complete summary of the talk, Greg said one thing that made me think a bit (more) about SOLID code. To paraphrase, it went something like this:

Developers have a tendency to attempt to solve specific problems with general solutions. This leads to coupling and complexity. Instead of being general, code should be specific.

This sounds correct at first glance, but once again I think that SOLID code offers a solution. Due to the Single Responsibility Principle each SOLID concrete (pardon the pun) class will tend to very specifically address a very narrow problem.

Such a class may implement one (or more) general-purpose interface(s), but the concrete type is specific.

The difference between the generality of an interface and the specificity of a concrete type becomes more and more apparent the better a code base applies the Reused Abstractions Principle. This is best done by defining an API in terms of Role Interfaces, which makes it possible to define a few core abstractions that apply very broadly, while implementations are very specific.

As an example, consider AutoFixture's ISpecimenBuilder interface. This is a very central interface in AutoFixture (in fact, I don't even know just how many implementations it has, and I'm currently too lazy to count them). As an API, it has proven to be very generally useful, but each concrete implementation is still very specific, like the CurrentDateTimeGenerator shown here:

    public class CurrentDateTimeGenerator : ISpecimenBuilder
    {
        public object Create(object request, ISpecimenContext context)
        {
            if (request != typeof(DateTime))
            {
                return new NoSpecimen(request);
            }

            return DateTime.Now;
        }
    }

This is, literally, the entire implementation of the class. I hope we can agree that it's very specific.

In my opinion, SOLID is a set of principles that can help us keep an API general while each implementation is very specific.

In SOLID code all concrete types are specific.

Comments

For one, ctor injection for dependencies makes them explicit and increases readability of a particular class (you should be able to get a general idea of what a class does by looking at what dependencies it has in its ctor). However, if you're taking in more than a handful of dependencies, that is an indication that your class needs refactoring. Yes, you could accept dependencies in the form of concrete classes, but in such cases you are voiding the other benefits of using interfaces.

As far as API writing goes, using interfaces with implementations that are internal is a way to guide a person through what is important in your API and what isn't. Offering up a bunch of instantiatable classes in an API adds to the mental overhead of learning your code - whereas only marking the "entry point" classes as public will guide people to what is important.

Further, as far as API writing goes, there are many instances where Class A may have a concrete dependency on Class B, but you wish to hide the methods that Class A uses to talk to Class B. In this case, you may create an interface (Interface B) with all of the public methods that you wish to expose on Class B and have Class B implement them, then add your "private" methods as simple, non-virtual methods on Class B itself. Class A will have a property of type Interface B, which simply returns a private field of type Class B. Class A can now invoke specific methods on Class B that aren't accessible through the public API, using its private reference to the concrete Class B.

Finally, there are many instances where you want to expose only parts of a class to another class. Let's say that you have an event publisher. You would normally only want to expose the methods that have to do with publishing to other code, yet that same class may include facilities that allow you to register handler objects with it. Using interfaces, you can limit what other classes can and can't do when they accept your objects as dependencies.

These are instances of what things you can do with interfaces that make them a useful construct on their own - but in addition to all of that, you get the ability to swap out implementations without changing code. I know that often times implementations are never swapped out in production (rather, the concrete classes themselves are changed), which is why I mention this last, but in the rare cases where it has to be done, interfaces make this scenario possible.

My ultimate point is that interfaces don't always equal generality or abstraction. They are simply tools that we can use to make code explicit and readable, and allow us to have greater control over method/property accessibility.

It's the same thing with the RAP: how can you know that it's possible to exchange one implementation of an interface with another if you've never tried it? Keep in mind that Test Doubles don't count because you can always create a bogus implementation of any interface. You could have an interface that's one big leaky abstraction: even though it's an interface, you can never meaningfully replace that single implementation with any other meaningful production implementation.

Also: using an interface alone doesn't guarantee the Liskov Substitution Principle. However, by following the RAP, you get a strong indication that you can, indeed, replace one implementation with another without changing the correctness of the code.

If an interface does not satisfy RAP, it does not absolutely mean that interface is invalid. Take the Customer and CustomerImpl types specified in the linked article. Perhaps the Customer interface simply exposes a public, readonly, interface for querying information about a customer. The CustomerImpl class, instantiated and acted upon behind the scenes in the domain services, may specify specific details such as OR/mapping or other behavior that isn't intended to be accessible to client code. Although a slightly contrived example (I would prefer the query model to be event sourced, not mapped to a domain model mapped to an RDBMS), I think this use is valid and should not immediately be thrown out because it does not satisfy RAP.

Your discussion about interfaces fits well with the Interface Segregation Principle and the concept of Role Interfaces, and I've also previously described how interfaces act as access modifiers.

Checking for exactly one item in a sequence using C# and F#

Here's a programming issue that comes up from time to time. A method takes a sequence of items as input, like this:

public void Route(IEnumerable<string> args)

While the signature of the method may be given, the implementation may be concerned with finding out whether there is exactly one element in the sequence. (I'd argue that this would be a violation of the Liskov Substitution Principle, but that's another discussion.) By corollary, we might also be interested in the result sets on each side of that single element: no elements and multiple elements.

Let's assume that we're required to raise the appropriate event for each of these three cases.

Naïve approach in C# #

A naïve implementation would be something like this:

    public void Route(IEnumerable<string> args)
    {
        var countCategory = args.Count();
        switch (countCategory)
        {
            case 0:
                this.RaiseNoArgument();
                return;
            case 1:
                this.RaiseSingleArgument(args.Single());
                return;
            default:
                this.RaiseMultipleArguments(args);
                return;
        }
    }

However, the problem with that is that IEnumerable<string> carries no guarantee that the sequence will ever end. In fact, there's a whole category of implementations that keep iterating forever - these are called Generators. If you pass a Generator to the above implementation, it will never return because the Count method will block forever.

Robust implementation in C# #

A better solution comes from the realization that we're only interested in knowing about which of the three categories the input matches: No elements, a single element, or multiple elements. The last case is covered if we find at least two elements. In other words, we don't have to read more than at most two elements to figure out the category. Here's a more robust solution:

    public void Route(IEnumerable<string> args)
    {
        var countCategory = args.Take(2).Count();
        switch (countCategory)
        {
            case 0:
                this.RaiseNoArgument();
                return;
            case 1:
                this.RaiseSingleArgument(args.Single());
                return;
            default:
                this.RaiseMultipleArguments(args);
                return;
        }
    }

Notice the inclusion of the Take(2) method call, which is the only difference from the first attempt. This will give us at most two elements that we can then count with the Count method.
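To see why reading at most two elements is enough, here is a minimal sketch that classifies a sequence without raising any events. The SequenceDemo class, the Forever generator and the Classify method are hypothetical names invented for this illustration; they are not part of the original code.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class SequenceDemo
{
    // Hypothetical Generator: yields the same value forever and never completes.
    public static IEnumerable<string> Forever(string value)
    {
        while (true)
            yield return value;
    }

    // Take(2) is lazy, so Count() pulls at most two elements from the source.
    // That is why this terminates even when the source is infinite.
    public static string Classify(IEnumerable<string> args)
    {
        switch (args.Take(2).Count())
        {
            case 0:
                return "none";
            case 1:
                return "single";
            default:
                return "multiple";
        }
    }
}
```

Calling SequenceDemo.Classify(SequenceDemo.Forever("x")) returns instead of blocking, whereas a plain Count() on the same generator would never finish.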

While this is better, it still annoys me that a secondary LINQ call (to the Single method) is necessary to extract that single element. Not that it's particularly inefficient, but it still feels like I'm repeating myself here.

(We could also have converted the Take(2) iterator into an array, which would have enabled us to query its Length property, as well as index into it to get the single value, but it basically amounts to the same work.)
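The array variant mentioned in the parenthesis could look roughly like the following sketch. The ArrayRouter class and its LastRaised property are hypothetical stand-ins for the real class and its three events, used here only so the example is self-contained.

```csharp
using System.Collections.Generic;
using System.Linq;

public class ArrayRouter
{
    // Hypothetical stand-in for the three events: records which case was hit.
    public string LastRaised { get; private set; }

    public void Route(IEnumerable<string> args)
    {
        // Materialize at most two elements once; the source is enumerated
        // a single time and no second LINQ query is needed.
        var atMostTwo = args.Take(2).ToArray();
        switch (atMostTwo.Length)
        {
            case 0:
                this.LastRaised = "none";
                return;
            case 1:
                // Index into the array to get the single element.
                this.LastRaised = "single:" + atMostTwo[0];
                return;
            default:
                this.LastRaised = "multiple";
                return;
        }
    }
}
```

The trade-off is an extra array allocation in exchange for not querying the input sequence a second time.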

Implementation in F# #

In F# we can implement the same functionality in a much more compact manner, taking advantage of pattern matching against native F# lists:

    member this.Route args =
        let atMostTwo = args |> Seq.truncate 2 |> Seq.toList
        match atMostTwo with
        | [] -> onNoArgument.Trigger(Unit.Default)
        | [arg] -> onSingleArgument.Trigger(arg)
        | _ -> onMultipleArguments.Trigger(args)

The first thing happening here is that the input is being piped through a couple of functions. The truncate method does the same thing as the Take LINQ method does, and the toList method subsequently converts that sequence of at most two elements into a native F# list.

The beautiful thing about native F# lists is that they support pattern matching, so instead of first figuring out in which category the input belongs, and then subsequently extracting the data in the single element case, we can match and forward the element in a single statement.

Why is this important? I don't know… it's just satisfying on an aesthetic level :)

Comments

    int count = 0;
    string item = null;
    foreach (var current in args)
    {
        item = current;
        count++;
        if (count == 2)
        {
            RaiseMultipleArguments(args);
            return;
        }
    }
    if (count == 1)
        this.RaiseSingleArgument(item);
    else
        RaiseNoArgument();

Weakly-typed versus Strongly-typed Message Channels

Soon after I posted my previous blog post on message dispatching without Service Location I received an email from Jeff Saunders with some great observations. Jeff has been kind enough to allow me to quote his email here on the blog, so here it is:

“I enjoyed your latest blog post about message dispatching. I have to ask, though: why do we want weakly-typed messages? Why can't we just inject an appropriate IConsumer<T> into our services - they know which messages they're going to send or receive.

“A really good example of this is ISubject<T> from Rx. It implements both IObserver<T> (a message consumer) and IObservable<T> (a message producer) and the default implementation Subject<T> routes messages directly from its IObserver side to its IObservable side.

“We can use this with DI quite nicely - I have written an example in .NET Pad: http://dotnetpad.net/ViewPaste/woTkGk6_GEq3P9xTVEJYZg#c9,c26,

“The good thing about this is that we now have access to all of the standard LINQ query operators and the new ones added in Rx, so we can use a select query to map messages between layers, for instance.

“This way we get all the benefits of a weakly-typed IChannel interface, with the added advantages of strong typing for our messages and composability using Rx.

“One potential benefit of weak typing that could be raised is that we can have just a single implementation for IChannel, instead of an ISubject<T> for each message type. I don't think this is really a benefit, though, as we may want different propagation behaviour for each message type - there are other implementations of ISubject<T> that call consumers asynchronously, and we could pass any IObservable<T> or IObserver<T> into a service for testing purposes.”

These are great observations and I think that Rx holds much promise in this space. Basically you can say that in CQRS-style architectures we're already pushing events (and commands) around, so why not build upon what the framework offers?

Even if you find the IObserver<T> interface a bit too clunky with its OnNext, OnError and OnCompleted methods compared to the strongly typed IConsumer<T> interface, the question still remains: why do we want weakly-typed messages?

We don't, necessarily. My previous post wasn't meant as a particular endorsement of a weakly typed messaging channel. It was more an observation that I've seen many variations of this IChannel interface:

public interface IChannel { void Send<T>(T message); }

The most important thing I wanted to point out was that while the generic type argument may create the illusion that this is a strongly typed method, this is all it is: an illusion. IChannel isn't strongly typed because you can invoke the Send method with any type of message - and the code will still compile. This is no different than the mechanical distinction between a Service Locator and an Abstract Factory.

Thus, when defining a channel interface I normally prefer to make this explicit and instead model it like this:

public interface IChannel { void Send(object message); }

This achieves exactly the same and is more honest.
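As a small illustration of that point, the following sketch puts both shapes side by side. Because the two variants of IChannel cannot share one name in a single file, they are renamed here to the hypothetical IGenericChannel and IObjectChannel; RecordingChannel and ChannelDemo are likewise invented for the example.

```csharp
using System.Collections.Generic;

// The generic shape: looks strongly typed, but T can be anything.
public interface IGenericChannel { void Send<T>(T message); }

// The explicit shape: openly weakly typed.
public interface IObjectChannel { void Send(object message); }

// Hypothetical channel that simply records what it receives.
public class RecordingChannel : IGenericChannel, IObjectChannel
{
    public readonly List<object> Sent = new List<object>();

    void IGenericChannel.Send<T>(T message) { this.Sent.Add(message); }

    void IObjectChannel.Send(object message) { this.Sent.Add(message); }
}

public static class ChannelDemo
{
    // Every call below compiles against either interface,
    // whatever the type of the message.
    public static int SendAnything()
    {
        var channel = new RecordingChannel();
        IGenericChannel generic = channel;
        IObjectChannel weak = channel;

        generic.Send("a string");
        generic.Send(42);
        generic.Send(new object());

        weak.Send("a string");
        weak.Send(42);
        weak.Send(new object());

        return channel.Sent.Count;
    }
}
```

The compiler accepts the same set of calls through both interfaces, so the generic type argument adds no compile-time protection.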

Still, this doesn't really answer Jeff's question: is this preferable to one or more strongly typed IConsumer<T> dependencies?

Any high-level application entry point that relies on a weakly typed IChannel can get by with a single IChannel dependency. This is flexible, but (just like with Service Locator), it might hide that the client may have (or (d)evolve) too many responsibilities.

If, instead, the client would rely on strongly typed dependencies it becomes much easier to see if/when it violates the Single Responsibility Principle.
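A rough sketch of what such a client might look like follows. AccountController, CountingConsumer and the parameterless command classes are hypothetical and simplified for the illustration; only the IConsumer<T> interface is taken from the previous post.

```csharp
using System;

public interface IConsumer<T> { void Consume(T message); }

// Hypothetical, simplified messages for this sketch.
public class RegisterUserCommand { }
public class ResetPasswordCommand { }

// Hypothetical client: each message type it sends is visible in the constructor.
public class AccountController
{
    private readonly IConsumer<RegisterUserCommand> registrations;
    private readonly IConsumer<ResetPasswordCommand> resets;

    public AccountController(
        IConsumer<RegisterUserCommand> registrations,
        IConsumer<ResetPasswordCommand> resets)
    {
        if (registrations == null)
            throw new ArgumentNullException("registrations");
        if (resets == null)
            throw new ArgumentNullException("resets");

        this.registrations = registrations;
        this.resets = resets;
    }

    public void Register()
    {
        this.registrations.Consume(new RegisterUserCommand());
    }

    public void ResetPassword()
    {
        this.resets.Consume(new ResetPasswordCommand());
    }
}

// Hypothetical consumer used only to exercise the sketch.
public class CountingConsumer<T> : IConsumer<T>
{
    public int Count { get; private set; }

    public void Consume(T message) { this.Count++; }
}
```

With this shape, a constructor that keeps growing is an immediate visual cue that the class is taking on too much, which a single IChannel dependency would hide.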

In conclusion, I'd tend to prefer strongly typed Datatype Channels instead of a single weakly typed channel, but one shouldn't underestimate the flexibility of a general-purpose channel either.

Comments

Message Dispatching without Service Location

Once upon a time I wrote a blog post about why Service Locator is an anti-pattern, and ever since then, I occasionally receive rebuffs from people who agree with me in principle, but think that, still: in various special cases (the argument goes), Service Locator does have its uses.

Most of these arguments actually stem from mistaking the mechanics for the role of a Service Locator. Still, once in a while a compelling argument seems to come my way. One of the most insistent arguments concerns message dispatching - a pattern which is currently gaining in prominence due to the increasing popularity of CQRS, Domain Events and kindred architectural styles.

In this article I'll first provide a quick sketch of the scenario, followed by a typical implementation based on a ‘Service Locator’, and then conclude by demonstrating why a Service Locator isn't necessary.

Scenario: Message Dispatching #

Appropriate use of message dispatching internally in an application can significantly help decouple the code and make roles explicit. A common implementation utilizes a messaging interface like this one:

public interface IChannel { void Send<T>(T message); }

Personally, I find that the generic typing of the Send method is entirely redundant (not to mention heavily reminiscent of the shape of a Service Locator), but it's very common and not particularly important right now (but more about that later).

An application might use the IChannel interface like this:

    var registerUser = new RegisterUserCommand(
        Guid.NewGuid(),
        "Jane Doe",
        "password",
        "jane@ploeh.dk");
    this.channel.Send(registerUser);

    // ...

    var changeUserName = new ChangeUserNameCommand(
        registerUser.UserId,
        "Jane Ploeh");
    this.channel.Send(changeUserName);

    // ...

    var resetPassword = new ResetPasswordCommand(
        registerUser.UserId);
    this.channel.Send(resetPassword);

Obviously, in this example, the channel variable is an injected instance of the IChannel interface.

On the receiving end, these messages must be dispatched to appropriate consumers, which must all implement this interface:

public interface IConsumer<T> { void Consume(T message); }

Thus, each of the command messages in the example has a corresponding consumer:

    public class RegisterUserConsumer : IConsumer<RegisterUserCommand>
    public class ChangeUserNameConsumer : IConsumer<ChangeUserNameCommand>
    public class ResetPasswordConsumer : IConsumer<ResetPasswordCommand>

This certainly is a very powerful pattern, so it's often used as an argument to prove that Service Locator is, after all, not an anti-pattern.

Message Dispatching using a DI Container #

In order to implement IChannel it's necessary to match messages to their appropriate consumers. One easy way to do this is by employing a DI Container. Here's an example that uses Autofac to implement IChannel, but any other container would do as well:

    private class AutofacChannel : IChannel
    {
        private readonly IComponentContext container;

        public AutofacChannel(IComponentContext container)
        {
            if (container == null)
                throw new ArgumentNullException("container");

            this.container = container;
        }

        public void Send<T>(T message)
        {
            var consumer = this.container.Resolve<IConsumer<T>>();
            consumer.Consume(message);
        }
    }

This class is an Adapter from Autofac's IComponentContext interface to the IChannel interface. At this point I can always see the “Q.E.D.” around the corner: “look! Service Locator isn't an anti-pattern after all! I'd like to see you implement IChannel without a Service Locator.”

While I'll do the latter in just a moment, I'd like to dwell on the DI Container-based implementation for a moment.

- Is it simple? Yes.

- Is it flexible? Yes, although it has shortcomings.

- Would I use it like this? Perhaps. It depends :)

- Is it the only way to implement IChannel? No - see the next section.

- Does it use a Service Locator? No.

While AutofacChannel uses Autofac (a DI Container) to implement the functionality, it's not (necessarily) a Service Locator in action. This was the point I already tried to get across in my previous post about the subject: just because its mechanics look like Service Locator it doesn't mean that it is one. In my implementation, the AutofacChannel class is a piece of pure infrastructure code. I even made it a private nested class in my Composition Root to underscore the point. The container is still not available to the application code, so is never used in the Service Locator role.

One of the shortcomings of the above implementation is that it provides no fallback mechanism. What happens if the container can't resolve the matching consumer? Perhaps there isn't a consumer for the message. That's entirely possible because there are no safeguards in place to ensure that there's a consumer for every possible message.

The shape of the Send method enables the client to send any conceivable message type, and the code still compiles even if no consumer exists. That may look like a problem, but is actually an important insight into implementing an alternative IChannel class.

Message Dispatching using weakly typed matching #

Consider the IChannel.Send method once again:

void Send<T>(T message);

Despite its generic signature it's important to realize that this is, in fact, a weakly typed method (at least when used with type inferencing, as in the above example). Equivalently to a bona fide Service Locator, it's possible for a developer to define a new class (Foo) and send it - and the code still compiles:

this.channel.Send(new Foo());

However, at run-time, this will fail because there's no matching consumer. Despite the generic signature of the Send method, it contains no type safety. This insight can be used to implement IChannel without a DI Container.

Before I go on I should point out that I don't consider the following solution intrinsically superior to using a DI Container. However, readers of my book will know that I consider it a very illuminating exercise to try to implement everything with Poor Man's DI once in a while.

Using Poor Man's DI often helps unearth some important design elements of DI because it helps to think about solutions in terms of patterns and principles instead of in terms of technology.

However, once I have arrived at an appropriate conclusion while considering Poor Man's DI, I still tend to prefer mapping it back to an implementation that involves a DI Container.

Thus, the purpose of this section is first and foremost to outline how message dispatching can be implemented without relying on a Service Locator.

While this alternative implementation isn't allowed to change any of the existing API, it's a pure implementation detail to encapsulate the insight about the weakly typed nature of IChannel into a similarly weakly typed consumer interface:

private interface IConsumer { void Consume(object message); }

Notice that this is a private nested interface of my Poor Man's DI Composition Root - it's a pure implementation detail. However, given this private interface, it's now possible to implement IChannel like this:

    private class PoorMansChannel : IChannel
    {
        private readonly IEnumerable<IConsumer> consumers;

        public PoorMansChannel(params IConsumer[] consumers)
        {
            this.consumers = consumers;
        }

        public void Send<T>(T message)
        {
            foreach (var c in this.consumers)
                c.Consume(message);
        }
    }

Notice that this is another private nested type that belongs to the Composition Root. It loops through all injected consumers, so it's up to each consumer to decide whether or not to do anything about the message.

A final private nested class bridges the generically typed world with the weakly typed world:

    private class Consumer<T> : IConsumer
    {
        private readonly IConsumer<T> consumer;

        public Consumer(IConsumer<T> consumer)
        {
            this.consumer = consumer;
        }

        public void Consume(object message)
        {
            if (message is T)
                this.consumer.Consume((T)message);
        }
    }

This generic class is another Adapter - this time adapting the generic IConsumer<T> interface to the weakly typed (private) IConsumer interface. Notice that it only delegates the message to the adapted consumer if the type of the message matches the consumer.

Each implementer of IConsumer<T> can be wrapped in the (private) Consumer<T> class and injected into the PoorMansChannel class:

    var channel = new PoorMansChannel(
        new Consumer<ChangeUserNameCommand>(
            new ChangeUserNameConsumer(store)),
        new Consumer<RegisterUserCommand>(
            new RegisterUserConsumer(store)),
        new Consumer<ResetPasswordCommand>(
            new ResetPasswordConsumer(store)));

So there you have it: type-based message dispatching without a DI Container in sight. That said, it would be easy to use convention-based configuration to scan an assembly, register all IConsumer<T> implementations, wrap them in Consumer<T> instances and use this list to compose a PoorMansChannel instance. I will leave this as an exercise to the reader (or a later blog post).
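For readers who want a starting point for that exercise, here is one possible sketch based on reflection. It is not the author's implementation. It assumes, unlike the consumers shown earlier (which take a store), that every consumer has a public parameterless constructor. ChannelComposer, PingCommand and PingConsumer are hypothetical names, and the nested private types are made public here so the example stands alone.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public interface IChannel { void Send<T>(T message); }
public interface IConsumer<T> { void Consume(T message); }
public interface IConsumer { void Consume(object message); }

public class Consumer<T> : IConsumer
{
    private readonly IConsumer<T> consumer;

    public Consumer(IConsumer<T> consumer) { this.consumer = consumer; }

    public void Consume(object message)
    {
        if (message is T)
            this.consumer.Consume((T)message);
    }
}

public class PoorMansChannel : IChannel
{
    private readonly IEnumerable<IConsumer> consumers;

    public PoorMansChannel(params IConsumer[] consumers)
    {
        this.consumers = consumers;
    }

    public void Send<T>(T message)
    {
        foreach (var c in this.consumers)
            c.Consume(message);
    }
}

// Hypothetical message and consumer, used only to exercise the sketch.
public class PingCommand { }

public class PingConsumer : IConsumer<PingCommand>
{
    public static int Handled;

    public void Consume(PingCommand message) { Handled++; }
}

public static class ChannelComposer
{
    // Assumption: only consumers with a public parameterless constructor
    // are picked up; anything else is silently skipped.
    public static PoorMansChannel Compose(Assembly assembly)
    {
        var adapters =
            from type in assembly.GetTypes()
            where type.IsClass && !type.IsAbstract && !type.IsGenericTypeDefinition
            where type.GetConstructor(Type.EmptyTypes) != null
            from contract in type.GetInterfaces()
            where contract.IsGenericType
                && contract.GetGenericTypeDefinition() == typeof(IConsumer<>)
            // Close Consumer<> over the message type of the implemented interface.
            let adapterType = typeof(Consumer<>)
                .MakeGenericType(contract.GetGenericArguments())
            select (IConsumer)Activator.CreateInstance(
                adapterType, Activator.CreateInstance(type));

        return new PoorMansChannel(adapters.ToArray());
    }
}
```

A call such as ChannelComposer.Compose(typeof(PingConsumer).Assembly) would then yield a channel that dispatches PingCommand messages to PingConsumer. Consumers with real dependencies would need a different way of being constructed, which is where a DI Container starts to earn its keep.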

My claim still stands #

In conclusion, I find that I can still defend my original claim: Service Locator is an anti-pattern.

That claim, by the way, is falsifiable, so I do appreciate that people take it seriously enough to attempt to disprove it. However, until now, I've yet to be presented with a scenario where I couldn't come up with a better solution that didn't involve a Service Locator.

Keep in mind that a Service Locator is defined by the role it plays - not the shape of the API.

Comments

I'm helping a .NET vendor improve their blog by finding respected developers who will contribute guest posts. Each post will include your byline, URL, book link (with your Amazon affiliate link) and a small honorarium. It can either be a new post or one of your popular older posts.

Being an author myself, I know that getting in front of new audiences boosts sales, generates consulting opportunities and in this case, a little cash. Would you be interested? If so, let me know and I'll set you up.

Cheers,

Bob Walsh

Looking forward to getting your book later this month.

I think it comes down to the definition of Service Locator. I'm not sure that the AutofacChannel example is much different than a common example I come up against, which is a ViewModel factory that more or less wraps a call to the container, and then gets injected as a IViewModelFactory into classes that need to create VMs. I don't feel that this is "wrong," as I only allow this kind of thing in cases where more explicit injection is significantly more painful. However I do still think of it as Service Location, and it does violate the Three Calls Pattern. As long as I limit the number of places this is allowed and everyone is aware of them, I see little risk in doing it this way. Some might argue it's a slippery slope . . .

Whether it is a static dependency or an injected instance, it seems unnatural to me to call a service directly from a domain object. I think I've seen you say the same thing, but I'm not sure in what context.

Anyway, was wondering if you had any additional thoughts on the subject. I've been struggling with it for a while, and have settled (temporarily) on firing events outside of the domain object (i.e. call the domain.Method, then fire the event from the command handler).

Thanks for writing.

It's my experience that in MVVM one definitely needs some kind of factory to create ViewModels. However, there's no reason to define it as a Service Locator. A normal (generic) Abstract Factory is preferable, and achieves the same thing.

Regarding the question about whether or not to raise Domain Events from within Entities: it bothered me for a while until I realized that when you move towards CQRS and Event Sourcing, Commands and Events become first-class citizens and this problem tends to go away because you can keep the logic about which commands raise which events decoupled from the Entities. In this architectural style, Entities are immutable, so nothing ever changes within them.

In CQRS we have consumers that consume Commands and Events, and typically we have a single consumer which is responsible for receiving a Command and (upon validation) converting it to an Event. Such a consumer is a Service which can easily hold other Services, such as a channel upon which Events can be published.

Thanks.

I had that problem previously, but I thought I fixed it months ago. From where I'm sitting, the code looks OK both in Google Reader on the Web, Google Reader on Android as well as FeedDemon. Can you share exactly where and how it looks unreadable?

http://imgur.com/LvCfJ

Thanks.

Thanks.

Why I am posting:

I am currently designing the architecture of a new application and I want to design my domain models to be persistence ignorant but still use them directly in the NHibernate mapping to benefit from lazy loading and to not have near identical entity objects. One part of a PI domain model is that the models might rely on services to do their work which get injected using constructor injection. Now, NHibernate needs a default constructor by default but that can be changed (see: http://fabiomaulo.blogspot.com/2008/11/entities-behavior-injection.html). In the middle of this post there is a class implementation called ReflectionOptimizer that is responsible for creating the entities. It uses an injected container to receive an instance of the requested entity type or falls back to the default implementation of NHibernate if that type is unknown to the container.

Do you think this is using the container in a service locator role?

I think not, because a Poor Man's DI implementation of this class would get a list of factories, one for each supported entity and all of this is pure infrastructure.

The biggest benefit of changing the implementation in a way that it receives factories is that it fails fast: I am constructing all factories along with their dependencies in the composition root.

What is your view on this matter?

It'd be particularly clean if you could inject an Abstract Factory into your NHibernate infrastructure and keep the container itself isolated to the Composition Root. In any case I agree that this sounds like pure infrastructure code, so it doesn't sound like Service Location.

However, I'd think twice about injecting Services into Entities - see also this post from Miško Hevery.

If a Message Bus is used, what about layers? Should it be some kind of "superlayer" (visible to all other layers)?

In which situations do you think a Message Bus should be involved?

Is it better to implement it yourself, or are there some good products? (C#, not a commercial licence)

It's not too hard to implement a message bus on top of a queue system, but it might be worth taking a look at NServiceBus or Rebus.

I have implemented this pattern before and everything is well when the return type is void.

So, for dispatching messages, this is a really useful and flexible pattern.

However, I was looking into implementing the same thing for a query dispatcher. The structure is similar, with the difference that your messages are queries and that the consumer actually returns a result.

I do have a working implementation but I cannot get type inference to work on my query dispatcher. That means that every time I call the query dispatcher I need to specify the return type and the query type

This may seem a bit abstract, but you can check out this question on Stack Overflow: type inference with interfaces and generic constraints.

I'm aware that the way I'm doing it there is not possible with C#, but I was wondering if you'd see a pattern that would allow me to do that.

What is your view on this matter?

Many thanks!

Kenneth, thank you for writing. Your question reminded me of my series about Role Hints, particularly Metadata, Role Interface, and Partial Type Name Role Hints. The examples in those posts aren't generically typed, but I wonder if you might still find those patterns useful?

Hi Mark,

I am also using this pattern as an in-process mediator for commands, queries and events. My mediator (channel in your case) now uses a dependency resolver, which is a pure wrapper around a DI container and only contains resolve methods.

I am now trying to refactor the dependency resolver away by creating separate factories for the command, query and event handlers (in your example these are the consumers). My current code, yours, and dozens of other implementations on the net don’t deal with releasing the handlers. My question is: should this be a responsibility of the mediator (channel)? I think so, because the mediator is the only place that knows about the existence of the handler. The problem I have with my answer is that the release of the handler is always called after a dispatch (send), even though the handler could be used again for sending another command of the same type during the same request (an HTTP request, for example). This implies that the handler’s lifetime is per HTTP request.

Maybe I am thinking in the wrong direction, but I would like to hear your opinion about the problem of releasing handlers.

Many thanks in advance,

Martijn Burgers

Martijn, thank you for writing. The golden rule for decommissioning is that the object responsible for composing the object graph should also be responsible for releasing it. That's what some DI Containers (Castle Windsor, Autofac, MEF) do - typically using a Release method. The reason for that is that only the Composer knows if there are any nodes in the object graph that should be disposed of, so only the Composer can properly decommission an object graph.

You can also implement that Resolve/Release pattern using Pure DI. If you're writing a framework, you may need to define an Abstract Factory with an associated Release method, but otherwise, you can just release the graph when you're done with it.
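A minimal sketch of such an Abstract Factory with an associated Release method might look like the following. The names IHandler, IHandlerFactory, Connection, Handler and HandlerFactory are hypothetical and do not appear in the article; the sketch only assumes that some node in the graph is disposable and that the factory, acting as Composer, is the only class that knows about it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical handler abstraction (a "consumer" in the article's terms).
public interface IHandler
{
    void Handle(string message);
}

// Abstract Factory with an associated Release method.
public interface IHandlerFactory
{
    IHandler Create();
    void Release(IHandler handler);
}

// A disposable node somewhere in the object graph.
public sealed class Connection : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose()
    {
        IsDisposed = true;
    }
}

public sealed class Handler : IHandler
{
    private readonly Connection connection;

    public Handler(Connection connection)
    {
        this.connection = connection;
    }

    public void Handle(string message)
    {
        if (connection.IsDisposed)
            throw new ObjectDisposedException("Connection");
        // Real work would go here.
    }
}

// The Composer: it builds the graph, so it also decommissions it.
public sealed class HandlerFactory : IHandlerFactory
{
    private readonly Dictionary<IHandler, IDisposable> tracked =
        new Dictionary<IHandler, IDisposable>();

    public IHandler Create()
    {
        var connection = new Connection();
        var handler = new Handler(connection);
        tracked.Add(handler, connection); // remember what must be disposed
        return handler;
    }

    public void Release(IHandler handler)
    {
        IDisposable disposable;
        if (tracked.TryGetValue(handler, out disposable))
        {
            tracked.Remove(handler);
            disposable.Dispose();
        }
    }
}
```

A client would call `Create`, use the handler, and call `Release` in a `finally` block. Whether `Release` is invoked after each dispatch or at the end of a request is then a lifetime decision made by whoever owns the factory, not by the handler itself.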

In the present article, you're correct that I haven't addressed the lifetime management aspect. The Composer here is the last code snippet that composes the PoorMansChannel object. As shown here, the entire object graph has the same lifetime as the PoorMansChannel object, but I don't describe whether or not it's a Singleton, Per Graph, Transient, or something else. However, the code that creates the PoorMansChannel object should also be the code that releases it, if that's required.

In my book's chapter 8, I cover decommissioning in much more detail, although I don't cover this particular example. I hope this answer helps; otherwise, please write again.

AutoFixture goes Continuous Delivery with Semantic Versioning

For the last couple of months I've been working on setting up AutoFixture for Continuous Delivery (thanks to the nice people at http://teamcity.codebetter.com/ for supplying the CI service) and I think I've finally succeeded. I've just pushed some code from my local Mercurial repository, and 5 minutes later the release is live on both the CodePlex site and the NuGet Gallery.

The plan for AutoFixture going forward is to maintain Continuous Delivery and switch the versioning scheme from ad hoc to Semantic Versioning. This means that you'll obviously see releases much more often, and version numbers are going to be incremented much more often. Since the previous release, the current release has incidentally ended up at version 2.2.44, but since the versioning scheme has now changed, you can expect to see 2.3, 2.4 etc. in rapid succession.

While I've been mostly focused on setting up Continuous Delivery, Nikos Baxevanis and Enrico Campidoglio have been busy writing new features:

- Support for anonymous delegates

- Heuristics for static factory methods

- Inline AutoData Theories

- More anonymous numbers

Apart from these excellent contributions, other new features are

- Added StableFiniteSequenceCustomization

- Added [FavorArrays], [FavorEnumerables] and [FavorLists] attributes to xUnit.net extension

- Added a Generator<T> class

- Added a completely new project/package called Idioms, which contains convention-based tests (more about this later)

- Probably some other things I've forgotten about…

While you can expect version numbers to increase more rapidly and releases to occur more frequently, I'm also beginning to think about AutoFixture 3.0. This release will streamline some of the API in the root namespace, which, I'll admit, was always a bit haphazard. For those people who care, I have no plans to touch the API in the Ploeh.AutoFixture.Kernel namespace. AutoFixture 3.0 will mainly target the API contained in the Ploeh.AutoFixture namespace itself.

Some of the changes I have in mind will hopefully make the default experience with AutoFixture more pleasant - I'm unofficially thinking about AutoFixture 3.0 as the 'pit of success' release. It will also enable some of the various outstanding feature requests.

Feedback is, as usual, appreciated.

Comments

A failing smoke test won't always tell you exactly what went wrong (while that's a failing of the test, it's not always that easy to fix). Rather than investigating, I'd prefer to have a failing integration test that means the smoke test won't even be run.

I find the most value comes from tests that try and resolve the root of the object graph from the bootstrapped container, i.e. anything I (or a framework) explicitly try and resolve at runtime.
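A container-agnostic sketch of such a test helper could look like this. CompositionRootCheck and FindUnresolvableRoots are hypothetical names, and the resolver is modelled as a plain `Func<Type, object>` so that no particular container API is assumed; in a real test the delegate would wrap the bootstrapped container's own resolve method.

```csharp
using System;
using System.Collections.Generic;

public static class CompositionRootCheck
{
    // Attempts to resolve every root type and collects the failures,
    // instead of stopping at the first one.
    public static IDictionary<Type, Exception> FindUnresolvableRoots(
        Func<Type, object> resolve, IEnumerable<Type> roots)
    {
        var failures = new Dictionary<Type, Exception>();
        foreach (var root in roots)
        {
            try
            {
                if (resolve(root) == null)
                    failures[root] =
                        new InvalidOperationException("Resolved to null.");
            }
            catch (Exception e)
            {
                failures[root] = e;
            }
        }
        return failures;
    }
}
```

The list of roots is whatever the application or a framework explicitly resolves at run-time (controllers, for example). A test would assert that the returned dictionary is empty, and report its keys and exceptions when it isn't.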

Their being green doesn't necessarily mean the application is fine, but their being red means it's definitely broken. It is a duplication of testing, but so are the smoke tests and some of the unit tests (hopefully!).

The correct wiring is already tested by the DI Container itself. An error-prone configuration will become obvious in the first developer test, or at least in the first user test.

So, is this kind of test overhead, useful or even necessary?

I probably wouldn't do these kinds of tests.

Would it be a worthwhile strategy to unit test my container's configuration by mocking the container's resolver? I'd like to be able to run my registration on a container, then assert that the mocked resolver received the correct "Resolve" messages in the correct order. Currently I'm validating this with an integration test, but I was thinking that this would be much simpler, if my container supports it.

I'll go back and think a bit more about how I can test the resulting behaviour instead of the implementation.

Nice post! We have a set of automated developer acceptance tests which start the system in a Machine.Specifications test, execute business commands, shut down the system and do business asserts on the external subsystems, which are stubbed away depending on the feature under test. With that we have an implicit container test: the system boots and behaves as expected. If someone adds new components for that feature which are not properly registered, the tests will fail.

Daniel

While I agree on the principle, one question came to my mind related to the first part. I don't understand why resolving IQux is an issue, because even at runtime it's not required and not registered?

Thanks

Thomas

So, if an application code base requires IQux, the application is going to fail even when the container self-test succeeds.

You have made a very basic and primitive argument to sell a complex but feasible process as pointless:

and without a working example of a better way you explain why you are right. I am an avid reader of your posts and often reference them but IMO this particular argument is not well reasoned.

Your opening argument explains why you may have an issue when using the Service Locator anti-pattern:

Assertions such as the following would ideally be specified in a set of automated tests regardless of the method of composition

&

And I fail to see how Pure DI would make the following into lesser issues

&

My response was prompted by a statement made by you on Stack Overflow. You are most highly regarded when it comes to .NET and DI and I feel statements such as "Some people hope that you can ask a DI Container to self-diagnose, but you can't." are very dangerous when they are out-of-date.

Simple Injector will diagnose a number of issues beyond "do I have a registration for X". I'm not claiming that these tests alone are foolproof validation of your configuration, but they are a set of built-in tests for issues that can occur in any configuration (including a Pure configuration) and these combined tests are far from worthless ...

Your claim that "such a method is close to worthless" may be true for the majority of the available .NET DI Containers but it does not take Simple Injector's diagnostic services into consideration.