ploeh blog danish software design

An example of state-based testing in F#

While F# is a functional-first language, it's okay to occasionally be pragmatic and use mutable state, for example to easily write some sustainable state-based tests.

This article is an instalment in an article series about how to move from interaction-based testing to state-based testing. In the previous article, you saw how to write state-based tests in Haskell. In this article, you'll see how to apply what you've learned in F#.

The code shown in this article is available on GitHub.

A function to connect two users #

This article, like the others in this series, implements an operation to connect two users. I explain the example in detail in my two Clean Coders videos, Church Visitor and Preserved in translation.

Like in the previous Haskell example, in this article we'll start with the implementation, and then see how to unit test it.

    // ('a -> Result<User,UserLookupError>) -> (User -> unit) -> 'a -> 'a -> HttpResponse<User>
    let post lookupUser updateUser userId otherUserId =
        let userRes =
            lookupUser userId |> Result.mapError (function
                | InvalidId -> "Invalid user ID."
                | NotFound  -> "User not found.")
        let otherUserRes =
            lookupUser otherUserId |> Result.mapError (function
                | InvalidId -> "Invalid ID for other user."
                | NotFound  -> "Other user not found.")

        let connect = result {
            let! user = userRes
            let! otherUser = otherUserRes
            addConnection user otherUser |> updateUser
            return otherUser }

        match connect with Ok u -> OK u | Error msg -> BadRequest msg

While the original C# example used Constructor Injection, the above post function uses partial application for Dependency Injection. The two function arguments lookupUser and updateUser represent interactions with a database. Since functions are polymorphic, however, it's possible to replace them with Test Doubles.

A Fake database #

Like in the Haskell example, you can implement a Fake database in F#. It's also possible to implement the State monad in F#, but there's less need for it. F# is a functional-first language, but you can also write mutable code if need be. You could, then, choose to be pragmatic and base your Fake database on mutable state.

    type FakeDB () =
        let users = Dictionary<int, User> ()

        member val IsDirty = false with get, set

        member this.AddUser user =
            this.IsDirty <- true
            users.Add (user.UserId, user)

        member this.TryFind i =
            match users.TryGetValue i with
            | false, _ -> None
            | true,  u -> Some u

        member this.LookupUser s =
            match Int32.TryParse s with
            | false, _ -> Error InvalidId
            | true, i ->
                match users.TryGetValue i with
                | false, _ -> Error NotFound
                | true, u -> Ok u

        member this.UpdateUser u =
            this.IsDirty <- true
            users.[u.UserId] <- u

This FakeDB type is a class that wraps a mutable dictionary. While it 'implements' LookupUser and UpdateUser, it also exposes what xUnit Test Patterns calls a Retrieval Interface: an API that tests can use to examine the state of the object.

Immutable values normally have structural equality. This means that two values are considered equal if they contain the same constituent values, and have the same structure. Mutable objects, on the other hand, typically have reference equality. This makes it harder to compare two objects, which is, however, what almost all unit testing is about. You compare expected state with actual state.
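The difference can be sketched in Haskell, the language of the previous article. This is only an illustration under stated assumptions: the Map of strings is a made-up stand-in for a database, and IORef plays the role of a mutable object, since its Eq instance compares references rather than contents.

```haskell
import Data.IORef (newIORef, readIORef)
import qualified Data.Map as Map

main :: IO ()
main = do
  -- Immutable values: equal when their contents are equal.
  let expected = Map.fromList [(1 :: Integer, "foo")]
      actual   = Map.insert 1 "foo" Map.empty
  print $ expected == actual

  -- Mutable references: Eq on IORef compares identity, not contents.
  r1 <- newIORef expected
  r2 <- newIORef expected
  print $ r1 == r2

  -- Comparing contents requires reading the references first.
  v1 <- readIORef r1
  v2 <- readIORef r2
  print $ v1 == v2
```

The first and last comparisons print True, while the comparison of the two references prints False, even though both references hold the same value.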

In the previous article, the Fake database was simply an immutable dictionary. This meant that tests could easily compare expected and actual values, since they were immutable. When you use a mutable object, like the above dictionary, this is harder. Instead, what I chose to do here was to introduce an IsDirty flag. This enables easy verification of whether or not the database changed.

Happy path test case #

This is all you need in terms of Test Doubles. You now have test-specific LookupUser and UpdateUser methods that you can pass to the post function.

Like in the previous article, you can start by exercising the happy path where a user successfully connects with another user:

    [<Fact>]
    let ``Users successfully connect`` () = Property.check <| property {
        let! user = Gen.user
        let! otherUser = Gen.withOtherId user
        let db = FakeDB ()
        db.AddUser user
        db.AddUser otherUser

        let actual = post db.LookupUser db.UpdateUser (string user.UserId) (string otherUser.UserId)

        test <@
                db.TryFind user.UserId
                |> Option.exists
                    (fun u -> u.ConnectedUsers |> List.contains otherUser) @>
        test <@ isOK actual @> }

All tests in this article use xUnit.net 2.3.1, Unquote 4.0.0, and Hedgehog 0.7.0.0.

This test first adds two valid users to the Fake database db. It then calls the post function, passing the db.LookupUser and db.UpdateUser methods as arguments. Finally, it verifies that the 'first' user's ConnectedUsers now contains the otherUser. It also verifies that actual represents a 200 OK HTTP response.

Missing user test case #

While there's one happy-path test case, there are four other test cases left. One of these is when the first user doesn't exist:

    [<Fact>]
    let ``Users don't connect when user doesn't exist`` () = Property.check <| property {
        let! i = Range.linear 1 1_000_000 |> Gen.int
        let! otherUser = Gen.user
        let db = FakeDB ()
        db.AddUser otherUser
        db.IsDirty <- false
        let uniqueUserId = string (otherUser.UserId + i)

        let actual = post db.LookupUser db.UpdateUser uniqueUserId (string otherUser.UserId)

        test <@ not db.IsDirty @>
        test <@ isBadRequest actual @> }

This test adds one valid user to the Fake database. Once it's done with configuring the database, it sets IsDirty to false. The AddUser method sets IsDirty to true, so it's important to reset the flag before the act phase of the test. You could consider this a bit of a hack, but I think it makes the intent of the test clear. This is, however, a position I'm ready to reassess should the tests evolve to make this design awkward.

As explained in the previous article, this test case requires an ID of a user that doesn't exist. Since this is a property-based test, there's a risk that Hedgehog might generate a number i equal to otherUser.UserId. One way to get around that problem is to add the two numbers together. Since i is generated from the range 1 - 1,000,000, uniqueUserId is guaranteed to be different from otherUser.UserId.

The test verifies that the state of the database didn't change (that IsDirty is still false), and that actual represents a 400 Bad Request HTTP response.

Remaining test cases #

You can write the remaining three test cases in the same vein:

    [<Fact>]
    let ``Users don't connect when other user doesn't exist`` () = Property.check <| property {
        let! i = Range.linear 1 1_000_000 |> Gen.int
        let! user = Gen.user
        let db = FakeDB ()
        db.AddUser user
        db.IsDirty <- false
        let uniqueOtherUserId = string (user.UserId + i)

        let actual = post db.LookupUser db.UpdateUser (string user.UserId) uniqueOtherUserId

        test <@ not db.IsDirty @>
        test <@ isBadRequest actual @> }

    [<Fact>]
    let ``Users don't connect when user Id is invalid`` () = Property.check <| property {
        let! s = Gen.alphaNum |> Gen.string (Range.linear 0 100) |> Gen.filter isIdInvalid
        let! otherUser = Gen.user
        let db = FakeDB ()
        db.AddUser otherUser
        db.IsDirty <- false

        let actual = post db.LookupUser db.UpdateUser s (string otherUser.UserId)

        test <@ not db.IsDirty @>
        test <@ isBadRequest actual @> }

    [<Fact>]
    let ``Users don't connect when other user Id is invalid`` () = Property.check <| property {
        let! s = Gen.alphaNum |> Gen.string (Range.linear 0 100) |> Gen.filter isIdInvalid
        let! user = Gen.user
        let db = FakeDB ()
        db.AddUser user
        db.IsDirty <- false

        let actual = post db.LookupUser db.UpdateUser (string user.UserId) s

        test <@ not db.IsDirty @>
        test <@ isBadRequest actual @> }

All tests inspect the state of the Fake database after calling the post function. The exact interactions between post and db aren't specified. Instead, these tests rely on setting up the initial state, exercising the System Under Test, and verifying the final state. These are all state-based tests that avoid over-specifying the interactions.

Summary #

While the previous Haskell example demonstrated that it's possible to write state-based unit tests in a functional style, when using F#, it sometimes makes sense to leverage the object-oriented features already available in the .NET framework, such as mutable dictionaries. It would have been possible to write purely functional state-based tests in F# as well, by porting the Haskell examples, but here, I wanted to demonstrate that this isn't required.

I tend to be of the opinion that it's only possible to be pragmatic if you know how to be dogmatic, but now that we know how to write state-based tests in a strictly functional style, I think it's fine to be pragmatic and use a bit of mutable state in F#. The benefit of this is that it now seems clear how to apply what we've learned to the original C# example.

The programmer as decision maker

As a programmer, your job is to make technical decisions. Make some more.

When I speak at conferences, people often come and talk to me. (I welcome that, BTW.) Among all the conversations I've had over the years, there's a pattern to some of them. The attendee will start by telling me how inspired (s)he is by the talk I just gave, or something I've written. That's gratifying, and a good way to start a conversation, but is often followed up like this:

Attendee: "I just wish that we could do something like that in our organisation..."

Let's just say that here we're talking about test-driven development, or perhaps just unit testing. Nothing too controversial. I'd typically respond,

Me: "Why can't you?"

Attendee: "Our boss won't let us..."

That's unfortunate. If your boss has explicitly forbidden you to write and run unit tests, then there's not much you can do. Let me make this absolutely clear: I'm not going on record saying that you should actively disobey a direct order (unless it's unethical, that is). I do wonder, however:

Why is the boss even involved in that decision?

It seems to me that programmers often defer too much authority to their managers.

A note on culture #

I'd like to preface the rest of this article with my own context. I've spent most of my programming career in Danish organisations. Even when I worked for Microsoft, I worked for Danish subsidiaries, with Danish managers.

The power distance in Denmark is (in)famously short. It's not unheard of for individual contributors to question their superiors' decisions; sometimes to their face, and sometimes even when other people witness this. When done respectfully (which it often is), this can be extremely efficient. Managers are as fallible as the rest of us, and often their subordinates know of details that could impact a decision that a manager is about to make. Immediately discussing such details can help ensure that good decisions are made, and bad decisions are cancelled.

This helps managers make better decisions, so enlightened managers welcome feedback.

In general, Danish employees also tend to have a fair degree of autonomy. What I'll suggest in this article is unlikely to get you fired in Denmark. Please use your own judgement if you consider transplanting the following to your own culture.

Technical decisions #

If your job is programmer, software developer, or similar, the value you add to the team is that you bring technical expertise. Maybe some of your colleagues are programmers as well, but together, you are the people with the technical expertise.

Even if the project manager or other superiors used to program, unless they're also writing code for the current code base, they only have general technical expertise, but not specific expertise related to the code base you're working with. The people with most technical expertise are you and your colleagues.

You are decision makers.

Whenever you interact with your code base, you make technical decisions.

In order to handle incoming HTTP requests to a /reservations resource, you may first decide to create a new file called ReservationsController.cs. You'd most likely also decide to open that file and start adding code to it.

Perhaps you add a method called Post that takes a Reservation argument. Perhaps you decide to inject an IMaîtreD dependency.

At various steps along the way, you may decide to compile the code.

Once you think that you've made enough changes to address your current work item, you may decide to run the program to see if it works. For a web-based piece of software, that typically involves starting up a browser and somehow interacting with the service. If your program is a web site, you may start at the front page, log in, click around, and fill in some forms. If your program is a REST API, you may interact with it via Fiddler or Postman (I prefer curl or Furl, but most people I've met still prefer something they can click on, it seems).

What often happens is that your changes don't work the first time around, so you'll have to troubleshoot. Perhaps you decide to use a debugger.

How many decisions is that?

I just described seven or eight types of the sort of decisions you make as a programmer. You make such decisions all the time. Do you ask your manager's permission before you start a debugging session? Before you create a new file? Before you name a variable?

Of course you don't. You're the technical expert. There's no-one better equipped than you or your team members to make those decisions.

Decide to add unit tests #

If you want to add unit tests, why don't you just decide to add them? If you want to apply test-driven development, why don't you just do so?

A unit test is one or more code files. You're already authorised to make decisions about adding files.

You can run a test suite instead of launching the software every time you want to interact with it. It's likely to be faster, even.

Why should you ask permission to do that?

Decide to refactor #

Another complaint I hear is that people aren't allowed to refactor.

Why are you even asking permission to refactor?

Refactoring means reorganising the code without changing the behaviour of the system. Another word for that is editing the code. It's okay. You're already permitted to edit code. It's part of your job description.

I think I know what the underlying problem is, though...

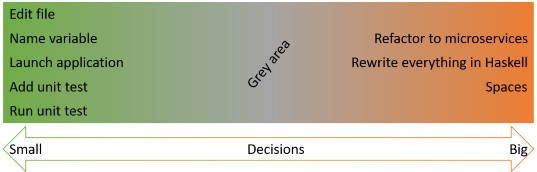

Make technical decisions in the small #

As an individual contributor, you're empowered to make small-scale technical decisions. These are decisions that are unlikely to impact schedules or allocation of programmers, including new hires. Big decisions probably should involve your manager.

I have an inkling of why people feel that they need permission to refactor. It's because the refactoring they have in mind is going to take weeks. Weeks in which nothing else can be done. Weeks where perhaps the code doesn't even compile.

Many years ago (but not as many as I'd like it to be), my colleague and I had what Eric Evans in DDD calls a breakthrough. We wanted to refactor towards deeper insight. What prompted the insight was a new feature that we had to add, and we'd been throwing design ideas back and forth for some time before the new insight arrived.

We could implement the new feature if we changed one of the core abstractions in our domain model, but it required substantial changes to the existing code base. We informed our manager of our new insight and our plan, estimating that it would take less than a week to make the changes and implement the new feature. Our manager agreed with the plan.

Two weeks later our code hadn't been in a compilable state for a week. Our manager pulled me away to tell me, quietly and equitably, that he was not happy with our lack of progress. I could only concur.

After more heroic work, we finally managed to complete the changes and implement the new feature. Nonetheless, blocking all other development for two-three weeks in order to make a change isn't acceptable.

That sort of change is a big decision because it impacts other team members, schedules, and perhaps overall business plans. Don't make those kinds of decisions without consulting with stakeholders.

This still leaves, I believe, lots of room for individual decision-making in the small. What I learned from the experience I just recounted was not to engage in big changes to a code base. Learn how to make multiple incremental changes instead. In case that's completely impossible, add the new model side-by-side with the old model, and incrementally change over. That's what I should have done those many years ago.

Don't be sneaky #

When I give talks about the blessings of functional programming, I sometimes get into another type of discussion.

Attendee: It's so inspiring how beautiful and simple complex domain models become in F#. How can we do the same in C#?

Me: You can't. If you're already using C#, you should strongly consider F# if you wish to do functional programming. Since it's also a .NET language, you can gradually introduce F# code and mix the compiled code with your existing C# code.

Attendee: Yes... [already getting impatient with me] But we can't do that...

Me: Why not?

Attendee: Because our manager will not allow it.

Based on the suggestions I've already made here, you may expect me to say that that's another technical decision that you should make without asking permission. Like the previous example about blocking refactorings, however, this is another large-scale decision.

Your manager may be concerned that it'd be hard to find new employees if the code base is written in some niche language. I tend to disagree with that position, but I do understand why a manager would take that position. While I think it suboptimal to restrict an entire development organisation to a single language (whether it's C#, Java, C++, Ruby, etc.), I'll readily accept that language choice is a strategic decision.

If every programmer got to choose the programming language they prefer the most that day, you'd have code bases written in dozens of different languages. While you can train bright new hires to learn a new language or two, it's unrealistic that a new employee will be able to learn thirty different languages in a short while.

I find it reasonable that a manager has the final word on the choice of language, even when I often disagree with the decisions.

The outcome usually is that people are stuck with C# (or Java, or...). Hence the question: How can we do functional programming in C#?

I'll give the answer that I often give here on the blog: mu (unask the question). You can, in fact, translate functional concepts to C#, but the result is so non-idiomatic that only the syntax remains of C#:

    public static IReservationsInstruction<TResult> Select<T, TResult>(
        this IReservationsInstruction<T> source,
        Func<T, TResult> selector)
    {
        return source.Match<IReservationsInstruction<TResult>>(
            isReservationInFuture: t =>
                new IsReservationInFuture<TResult>(
                    new Tuple<Reservation, Func<bool, TResult>>(
                        t.Item1,
                        b => selector(t.Item2(b)))),
            readReservations: t =>
                new ReadReservations<TResult>(
                    new Tuple<DateTimeOffset, Func<IReadOnlyCollection<Reservation>, TResult>>(
                        t.Item1,
                        d => selector(t.Item2(d)))),
            create: t =>
                new Create<TResult>(
                    new Tuple<Reservation, Func<int, TResult>>(
                        t.Item1,
                        r => selector(t.Item2(r)))));
    }

Keep in mind the manager's motivation for standardising on C#. It's often related to concerns about being able to hire new employees, or move employees from project to project.

If you write 'functional' C#, you'll end up with code like the above, or the following real-life example:

    return await sendRequest(
            ApiMethodNames.InitRegistration,
            new GSObject())
        .Map(r => ValidateResponse.Validate(r)
            .MapFailure(_ => ErrorResponse.RegisterErrorResponse()))
        .Bind(r => r.RetrieveField("regToken"))
        .BindAsync(token =>
            sendRequest(
                ApiMethodNames.RegisterAccount,
                CreateRegisterRequest(
                    mailAddress,
                    password,
                    token))
            .Map(ValidateResponse.Validate)
            .Bind(response => getIdentity(response)
                .ToResult(ErrorResponse.ExternalServiceResponseInvalid)))
        .Map(id => GigyaIdentity.CreateNewSiteUser(id.UserId, mailAddress));

(I'm indebted to Rune Ibsen for this example.)

A new hire can have ten years of C# experience and still have no chance in a code base like that. You'll first have to teach him or her functional programming. If you can do that, you might as well also teach a new language, like F#.

It's my experience that learning the syntax of a new language is easy, and usually doesn't take much time. The hard part is learning a new way to think.

Writing 'functional' C# makes it doubly hard on new team members. Not only do they have to learn a new paradigm (functional programming), but they have to learn it in a language unsuited for that paradigm.

That's why I think you should unask the question. If your manager doesn't want to allow F#, then writing 'functional' C# is just being sneaky. That'd be obeying the letter of the law while breaking the spirit of it. That is, in my opinion, immoral. Don't be sneaky.

Summary #

As a professional programmer, your job is to be a technical expert. In normal circumstances (at least the ones I know from my own career), you have agency. In order to get anything done, you make small decisions all the time, such as editing code. That's not only okay, but expected of you.

Some decisions, on the other hand, can have substantial ramifications. Choosing to write code in an unsanctioned language tends to fall on the side where a manager should be involved in the decision.

In between is a grey area.

I don't even consider adding unit tests to be in the grey area, but some refactorings may be.

"It's easier to ask forgiveness than it is to get permission."

To navigate grey areas you need a moral compass.

I'll let you be the final judge of what you can get away with, but I consider it both appropriate and ethical to make the decision to add unit tests, and to continually improve code bases. You shouldn't have to ask permission to do that.

An example of state-based testing in Haskell

How do you do state-based testing when state is immutable? You use the State monad.

This article is an instalment in an article series about how to move from interaction-based testing to state-based testing. In the previous article, you saw an example of an interaction-based unit test written in C#. The problem that this article series attempts to address is that interaction-based testing can lead to what xUnit Test Patterns calls Fragile Tests, because the tests get coupled to implementation details, instead of overall behaviour.

My experience is that functional programming is better aligned with unit testing because functional design is intrinsically testable. While I believe that functional programming is no panacea, it still seems to me that we can learn many valuable lessons about programming from it.

People often ask me about F# programming: How do I know that my F# code is functional?

I sometimes wonder that myself, about my own F# code. One can certainly choose to ignore such a question as irrelevant, and I sometimes do, as well. Still, in my experience, asking such questions can create learning opportunities.

The best answer that I've found is: Port the F# code to Haskell.

Haskell enforces referential transparency via its compiler. If Haskell code compiles, it's functional. In this article, then, I take the problem from the previous article and port it to Haskell.

The code shown in this article is available on GitHub.

A function to connect two users #

In the previous article, you saw implementation and test coverage of a piece of software functionality to connect two users with each other. This was a simplification of the example running through my two Clean Coders videos, Church Visitor and Preserved in translation.

In contrast to the previous article, we'll start with the implementation of the System Under Test (SUT).

    post :: Monad m =>
            (a -> m (Either UserLookupError User)) ->
            (User -> m ()) ->
            a ->
            a ->
            m (HttpResponse User)
    post lookupUser updateUser userId otherUserId = do
      userRes <- first (\case
          InvalidId -> "Invalid user ID."
          NotFound -> "User not found.")
        <$> lookupUser userId
      otherUserRes <- first (\case
          InvalidId -> "Invalid ID for other user."
          NotFound -> "Other user not found.")
        <$> lookupUser otherUserId

      connect <- runExceptT $ do
        user <- ExceptT $ return userRes
        otherUser <- ExceptT $ return otherUserRes
        lift $ updateUser $ addConnection user otherUser
        return otherUser

      return $ either BadRequest OK connect

This is as direct a translation of the C# code as makes sense. If I'd only been implementing the desired functionality in Haskell, without having to port existing code, I'd have designed the code differently.

This post function uses partial application as an analogy to dependency injection, but in order to enable potentially impure operations to take place, everything must happen inside of some monad. While the production code must ultimately run in the IO monad in order to interact with a database, tests can choose to run in another monad.

In the C# example, two dependencies are injected into the class that defines the Post method. In the above Haskell function, these two dependencies are instead passed as function arguments. Notice that both functions return values in the monad m.

The intent of the lookupUser argument is that it'll query a database with a user ID. It'll return the user if present, but it could also return a UserLookupError, which is a simple sum type:

data UserLookupError = InvalidId | NotFound deriving (Show, Eq)

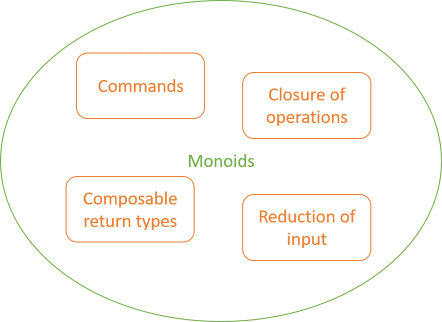

If both users are found, the function connects the users and calls the updateUser function argument. The intent of this 'dependency' is that it updates the database. This is recognisably a Command, since its return type is m () - unit (()) is equivalent to void.

State-based testing #

How do you unit test such a function? How do you use Mocks and Stubs in Haskell? You don't; you don't have to. While the post function can be impure (when m is IO), it doesn't have to be. Functional design is intrinsically testable, but that proposition depends on purity. Thus, it's worth figuring out how to keep the post function pure in the context of unit testing.

While IO implies impurity, most common monads are pure. Which one should you choose? You could attempt to entirely 'erase' the monadic quality of the post function with the Identity monad, but if you do that, you can't verify whether or not updateUser was invoked.

While you could write an ad-hoc Mock using, for example, the Writer monad, it might be a better choice to investigate if something closer to state-based testing would be possible.
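To make the Writer idea concrete, here's a minimal sketch of what such an ad-hoc Mock might look like. The User record and the recordUpdate and updatedUsers functions are simplified, hypothetical stand-ins invented for this illustration; they aren't taken from the article's code base.

```haskell
import Control.Monad.Writer (Writer, execWriter, tell)

-- Hypothetical, simplified stand-in for the article's User type.
data User = User { userId :: Integer, connectedUsers :: [User] }
  deriving (Show, Eq)

-- A Mock-like updateUser: instead of touching a database, it logs its argument.
recordUpdate :: User -> Writer [User] ()
recordUpdate user = tell [user]

-- Runs an action and returns the list of users it tried to update.
updatedUsers :: Writer [User] a -> [User]
updatedUsers = execWriter

main :: IO ()
main = do
  let alice = User 1 []
  -- The log contains exactly the interactions that took place.
  print $ updatedUsers (recordUpdate alice) == [alice]
  -- No call means no log entries.
  print $ null $ updatedUsers (return ())
```

A test built on such a log asserts on which calls were made, so it would still be a kind of interaction-based test.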

In an object-oriented context, state-based testing implies that you exercise the SUT, which mutates some state, and then you verify that the (mutated) state matches your expectations. You can't do that when you test a pure function, but you can examine the state of the function's return value. The State monad is an obvious choice, then.
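The contrast between Identity and State can be sketched with a miniature example. The save function below is hypothetical and much simpler than post; it only serves to show that the same monad-polymorphic function leaves no trace in Identity, but leaves an observable final state in State.

```haskell
import Control.Monad.State (execState, modify)
import Data.Functor.Identity (runIdentity)

-- Hypothetical miniature of a function that takes a Command as an argument.
save :: Monad m => (Int -> m ()) -> Int -> m String
save update x = do
  update x
  return "saved"

main :: IO ()
main = do
  -- In Identity, the Command can only be a no-op, so only the result is observable.
  print $ runIdentity (save (\_ -> return ()) 42)
  -- In State, the same function leaves a trace in the final state.
  print $ execState (save (\x -> modify (x :)) 42) []
```

The second expression evaluates to the list containing 42, which is the kind of final state that a state-based test can compare against an expected value.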

A Fake database #

Haskell's State monad is parametrised on the state type as well as the normal 'value type', so in order to be able to test the post function, you'll have to figure out what type of state to use. The interactions implied by the post function's lookupUser and updateUser arguments are those of database interactions. A Fake database seems an obvious choice.

For the purposes of testing the post function, an in-memory database implemented using a Map is appropriate:

type DB = Map Integer User

This is simply a dictionary keyed by Integer values and containing User values. You can implement compatible lookupUser and updateUser functions with State DB as the Monad. The updateUser function is the easiest one to implement:

    updateUser :: User -> State DB ()
    updateUser user = modify $ Map.insert (userId user) user

This simply inserts the user into the database, using the userId as the key. The type of the function is compatible with the general requirement of User -> m (), since here, m is State DB.

The lookupUser Fake implementation is a bit more involved:

    lookupUser :: String -> State DB (Either UserLookupError User)
    lookupUser s = do
      let maybeInt = readMaybe s :: Maybe Integer
      let eitherInt = maybe (Left InvalidId) Right maybeInt
      db <- get
      return $ eitherInt >>= maybe (Left NotFound) Right . flip Map.lookup db

First, consider the type. The function takes a String value as an argument and returns a State DB (Either UserLookupError User). The requirement is a function compatible with the type a -> m (Either UserLookupError User). This works when a is String and m is, again, State DB.

The entire function is written in do notation, where the inferred Monad is, indeed, State DB. The first line attempts to parse the String into an Integer. Since the built-in readMaybe function returns a Maybe Integer, the next line uses the maybe function to handle the two possible cases, converting the Nothing case into the Left InvalidId value, and the Just case into a Right value.

It then uses the State module's get function to access the database db, and finally attempts a lookup against that Map. Again, maybe is used to convert the Maybe value returned by Map.lookup into an Either value.
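The two Fakes can be tried out in isolation. The following is a self-contained sketch; the User record is a simplified assumption (the real type lives in the article's repository), and the use of execState and evalState to drive the functions is just one way to exercise them.

```haskell
import Control.Monad.State (State, evalState, execState, get, modify)
import Data.Map (Map)
import qualified Data.Map as Map
import Text.Read (readMaybe)

-- Hypothetical, simplified stand-in for the article's User type.
data User = User { userId :: Integer, connectedUsers :: [User] }
  deriving (Show, Eq)

data UserLookupError = InvalidId | NotFound deriving (Show, Eq)

type DB = Map Integer User

updateUser :: User -> State DB ()
updateUser user = modify $ Map.insert (userId user) user

lookupUser :: String -> State DB (Either UserLookupError User)
lookupUser s = do
  let maybeInt = readMaybe s :: Maybe Integer
  let eitherInt = maybe (Left InvalidId) Right maybeInt
  db <- get
  return $ eitherInt >>= maybe (Left NotFound) Right . flip Map.lookup db

main :: IO ()
main = do
  let alice = User 42 []
      -- execState returns the final state: a database containing alice.
      db = execState (updateUser alice) Map.empty
  print $ evalState (lookupUser "42") db   -- the user is found
  print $ evalState (lookupUser "7") db    -- well-formed ID, but no such user
  print $ evalState (lookupUser "foo") db  -- not a number
```

The three lookups exercise the Right case, the NotFound case, and the InvalidId case, respectively.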

Happy path test case #

This is all you need in terms of Test Doubles. You now have test-specific lookupUser and updateUser functions that you can pass to the post function.

Like in the previous article, you can start by exercising the happy path where a user successfully connects with another user:

    testProperty "Users successfully connect" $ \
      user otherUser -> runStateTest $ do

      put $ Map.fromList [toDBEntry user, toDBEntry otherUser]

      actual <- post lookupUser updateUser (show $ userId user) (show $ userId otherUser)

      db <- get
      return $
        isOK actual &&
        any (elem otherUser . connectedUsers) (Map.lookup (userId user) db)

Here I'm inlining test cases as anonymous functions - this time expressing the tests as QuickCheck properties. I'll later return to the runStateTest helper function, but first I want to focus on the test body itself. It's written in do notation, and specifically, it runs in the State DB monad.

user and otherUser are input arguments to the property. These are both User values, since the test also defines Arbitrary instances for that type (not shown in this article; see the source code repository for details).

The first step in the test is to 'save' both users in the Fake database. This is easily done by converting each User value to a database entry:

    toDBEntry :: User -> (Integer, User)
    toDBEntry = userId &&& id

Recall that the Fake database is nothing but an alias over Map Integer User, so the only operation required to turn a User into a database entry is to extract the key.
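If the &&& operator from Control.Arrow is unfamiliar, this small sketch shows what it does when applied to functions. The User record is again a simplified assumption, and toDBEntry' is a hypothetical, point-wise equivalent added only for comparison.

```haskell
import Control.Arrow ((&&&))
import qualified Data.Map as Map

-- Hypothetical, simplified stand-in for the article's User type.
data User = User { userId :: Integer, connectedUsers :: [User] }
  deriving (Show, Eq)

-- For functions, (f &&& g) x is (f x, g x).
toDBEntry :: User -> (Integer, User)
toDBEntry = userId &&& id

-- The same function, written without the operator.
toDBEntry' :: User -> (Integer, User)
toDBEntry' user = (userId user, user)

main :: IO ()
main = do
  let alice = User 1 []
      bob = User 2 []
  print $ toDBEntry alice == toDBEntry' alice
  -- The entries can be passed directly to Map.fromList.
  print $ Map.keys (Map.fromList [toDBEntry alice, toDBEntry bob])
```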

The next step in the test is to exercise the SUT, passing the test-specific lookupUser and updateUser Test Doubles to the post function, together with the user IDs converted to String values.

In the assert phase of the test, it first extracts the current state of the database, using the State library's built-in get function. It then verifies that actual represents a 200 OK value, and that the user entry in the database now contains otherUser as a connected user.

Missing user test case #

While there's one happy-path test case, there are four other test cases left. One of these is when the first user doesn't exist:

    testProperty "Users don't connect when user doesn't exist" $ \
      (Positive i) otherUser -> runStateTest $ do

      let db = Map.fromList [toDBEntry otherUser]
      put db
      let uniqueUserId = show $ userId otherUser + i

      actual <- post lookupUser updateUser uniqueUserId (show $ userId otherUser)

      assertPostFailure db actual

What ought to trigger this test case is that the 'first' user doesn't exist, even if the otherUser does exist. For this reason, the test inserts the otherUser into the Fake database.

Since the test is a QuickCheck property, i could be any positive Integer value - including the userId of otherUser. In order to properly exercise the test case, however, you'll need to call the post function with a uniqueUserId - that is: an ID which is guaranteed to not be equal to the userId of otherUser. There are several options for achieving this guarantee (including, as you'll see soon, the ==> operator), but a simple way is to add a non-zero number to the number you need to avoid.

You then exercise the post function and, as a verification, call a reusable assertPostFailure function:

    assertPostFailure :: (Eq s, Monad m) => s -> HttpResponse a -> StateT s m Bool
    assertPostFailure stateBefore resp = do
      stateAfter <- get
      let stateDidNotChange = stateBefore == stateAfter
      return $ stateDidNotChange && isBadRequest resp

This function verifies that the state of the database didn't change, and that the response value represents a 400 Bad Request HTTP response. Unlike the tests in the previous article, it doesn't verify that the error message associated with the BadRequest case is the expected message. Adding that check would, however, involve a fairly trivial change to the code.

Missing other user test case #

Similar to the above test case, users will also fail to connect if the 'other user' doesn't exist. The property is almost identical:

    testProperty "Users don't connect when other user doesn't exist" $ \
      (Positive i)
      user
      -> runStateTest $ do

      let db = Map.fromList [toDBEntry user]
      put db

      let uniqueOtherUserId = show $ userId user + i
      actual <- post lookupUser updateUser (show $ userId user) uniqueOtherUserId

      assertPostFailure db actual

Since this test body is so similar to the previous test, I'm not going to give you a detailed walkthrough. I did, however, promise to describe the runStateTest helper function:

    runStateTest :: State (Map k a) b -> b
    runStateTest = flip evalState Map.empty

Since this is a one-liner, you could also write all the tests by simply in-lining that little expression, but I thought that it made the tests more readable to give this function an explicit name.

It takes any State (Map k a) b and runs it with an empty map. Thus, all State-valued functions, like the tests, must explicitly put data into the state. This is also what the tests do.

Notice that all the tests return State values. For example, the assertPostFailure function returns StateT s m Bool, of which State s Bool is an alias. This fits State (Map k a) b when s is Map k a, which again is aliased to DB. Reducing all of this, the tests are simply functions that return Bool.

Invalid user ID test cases #

Finally, you can also cover the two test cases where one of the user IDs is invalid:

    testProperty "Users don't connect when user Id is invalid" $ \
      s
      otherUser
      -> isIdInvalid s ==> runStateTest $ do

      let db = Map.fromList [toDBEntry otherUser]
      put db

      actual <- post lookupUser updateUser s (show $ userId otherUser)

      assertPostFailure db actual
    ,
    testProperty "Users don't connect when other user Id is invalid" $ \
      s
      user
      -> isIdInvalid s ==> runStateTest $ do

      let db = Map.fromList [toDBEntry user]
      put db

      actual <- post lookupUser updateUser (show $ userId user) s

      assertPostFailure db actual

Both of these properties take a String value s as input. When QuickCheck generates a String, that could be any String value. Both tests require that the value is an invalid user ID. Specifically, it mustn't be possible to parse the string into an Integer. If you don't constrain QuickCheck, it'll generate various strings, including e.g. "8" and other strings that can be parsed as numbers.

In the above "Users don't connect when user doesn't exist" test, you saw how one way to explicitly model constraints on data is to project a seed value in such a way that the constraint always holds. Another way is to use QuickCheck's built-in ==> operator to filter out undesired values. In this example, both tests employ the isIdInvalid function:

    isIdInvalid :: String -> Bool
    isIdInvalid s =
      let userInt = readMaybe s :: Maybe Integer
      in isNothing userInt

Using isIdInvalid with the ==> operator guarantees that s is an invalid ID.

Summary #

While state-based testing may, at first, sound incompatible with strictly functional programming, it's not only possible with the State monad, but even, with good language support, easily done.

The tests shown in this article aren't concerned with the interactions between the SUT and its dependencies. Instead, they compare the initial state with the state after exercising the SUT. Comparing values, even complex data structures such as maps, tends to be trivial in functional programming. Immutable values typically have built-in structural equality (in Haskell signified by the automatic Eq type class), which makes comparing them trivial.

Now that we know that state-based testing is possible even with Haskell's enforced purity, it should be clear that we can repeat the feat in F#.

Code quality isn't software quality

A trivial observation made explicit.

You'd think that it's evident that code quality and software quality are two different things. Yet, I often see or hear arguments about one or the other that indicate to me that some people don't make that distinction. I wonder why; I do.

Software quality #

There's a school of thought leaders who advocate that, ultimately, we write code to solve problems, or to improve life, for people. I have nothing against that line of reasoning; it's just not one that I pursue much. Why should I use my energy on this message when someone like Dan North does it so much better than I could?

Dan North is far from the only person making the point that our employers, or clients, or end-users don't care about the code; he is, in my opinion, one of the best communicators in that field. It makes sense that, with that perspective on software development, you'd invent something like behaviour-driven development.

The evaluation criterion used in this discourse is one of utility. Does the software serve a purpose? Does it do it well?

In that light, quality software is software that serves its purpose beyond expectation. It rarely, if ever, crashes. It's easy to use. It's sufficiently responsive. It's pretty. It works both on-line and off-line. Attributes like that are externally observable qualities.

You can write quality software in many different languages, using various styles. When you evaluate the externally observable qualities of software, the code is invisible. It's not part of the evaluation.

It seems to me that some people try to draw an erroneous conclusion from this premise. They'd say that since no employer, client, or end user evaluates the software based on the code that produced it, then no one cares about the code.

Code quality #

It's easy to refute that argument. All you have to do is to come up with a counter-example. You just have to find one person who cares about the code. That's easy.

You care about the code.

Perhaps you react negatively to that assertion. Perhaps you say: "No! I'm not one of those effete aesthetes who only program in Plankalkül." Fine. Maybe you're not the type who likes to polish the code; maybe you're the practical, down-to-earth type who just likes to get stuff done, so that your employer/client/end-user is happy.

Even so, I think that you still care about the code. Have you ever looked with bewilderment at a piece of code and thought: "Who the hell wrote this piece of shit!?" How many WTFs/m is your code?

I think every programmer cares about their code bases; if not in an active manner, then at least in a passive way. Bad code can seriously impede progress. I've seen more than one organisation effectively go out of business because of bad legacy code.

Code quality is when you care about the readability and malleability of the code. It's when you care about the code's ability to sustain the business, not only today, but also in the future.

Sustainable code #

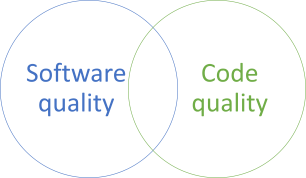

I often get the impression that some people look at code quality and software quality as a (false) dichotomy.

Such arguments often seem to imply that you can't have one without sacrificing the other. You must choose.

The reality is, of course, that you can do both.

At the intersection between software and code quality the code sustains the business both now, and in the future.

Yes, you should write code such that it produces software that provides value here and now, but you should also do your best to enable it to provide value in the future. This is sustainable code. It's code that can sustain the organisation during its lifetime.

No gold-plating #

To be clear: this is not a call for gold plating or speculative generality. You probably can't predict the future needs of the stake-holders.

Quality code doesn't have to be able to perfectly address all future requirements. In order to be sustainable, though, it should be easy to modify in the future, or perhaps just easy to throw away and rewrite. I think a good start is to write humane code; code that fits in your brain.

At least, do your best to avoid writing legacy code.

Summary #

Software quality and code quality can co-exist. You can write quality code that compiles to quality software, but one doesn't imply the other. These are two independent quality dimensions.

An example of interaction-based testing in C#

An example of using Mocks and Stubs for unit testing in C#.

This article is an instalment in an article series about how to move from interaction-based testing to state-based testing. In this series, you'll be presented with some alternatives to interaction-based testing with Mocks and Stubs. Before we reach the alternatives, however, we need to establish an example of interaction-based testing, so that you have something against which you can compare those alternatives. In this article, I'll present a simple example, in the form of C# code.

The code shown in this article is available on GitHub.

Connect two users #

For the example, I'll use a simplified version of the example that runs through my two Clean Coders videos, Church Visitor and Preserved in translation.

The desired functionality is simple: implement a REST API that enables one user to connect to another user. You could imagine some sort of social media platform, or essentially any sort of online service where users might be interested in connecting with, or following, other users.

In essence, you could imagine that a user interface makes an HTTP POST request against our REST API:

    POST /connections/42 HTTP/1.1
    Content-Type: application/json

    {
        "otherUserId": 1337
    }

Let's further imagine that we implement the desired functionality with a C# method with this signature:

public IHttpActionResult Post(string userId, string otherUserId)

We'll return to the implementation later, but I want to point out a few things.

First, notice that both userId and otherUserId are string arguments. While the above example encodes both IDs as numbers, essentially, both URLs and JSON are text-based. Following Postel's law, the method should also accept JSON like { "otherUserId": "1337" }. That's the reason the Post method takes string arguments instead of int arguments.

Second, the return type is IHttpActionResult. Don't worry if you don't know that interface. It's just a way to model HTTP responses, such as 200 OK or 400 Bad Request.

Depending on the input values, and the state of the application, several outcomes are possible:

| User | Other user: Found | Other user: Not found | Other user: Invalid |
|---|---|---|---|
| Found | Other user | "Other user not found." | "Invalid ID for other user." |
| Not found | "User not found." | "User not found." | "User not found." |
| Invalid | "Invalid user ID." | "Invalid user ID." | "Invalid user ID." |

Although this is a 3x3 matrix, there are only five distinct outcomes. If the first user ID is invalid (e.g. a string like "foo" that doesn't represent a number), then it doesn't matter if the other user exists. Likewise, even if the first user ID is well-formed, it might still be the case that no user with that ID exists in the database.

The assumption here is that the underlying user database uses integers as row IDs.
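To see how the nine cells collapse into five outcomes, here's a minimal sketch of the table as a pure function. The LookupStatus enum and the OutcomeMatrix class are hypothetical names invented for this illustration; they aren't part of the example code base. The string "Other user" simply stands in for the successful response that returns the other user.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical names: LookupStatus and OutcomeMatrix are illustrative only
// and are not part of the article's code base.
public enum LookupStatus { Found, NotFound, Invalid }

public static class OutcomeMatrix
{
    // Maps a pair of lookup statuses to the outcome listed in the table.
    // The first user's status is inspected first, so a failure there wins
    // regardless of the other user's status.
    public static string Outcome(LookupStatus user, LookupStatus otherUser)
    {
        switch (user)
        {
            case LookupStatus.Invalid: return "Invalid user ID.";
            case LookupStatus.NotFound: return "User not found.";
        }
        switch (otherUser)
        {
            case LookupStatus.Invalid: return "Invalid ID for other user.";
            case LookupStatus.NotFound: return "Other user not found.";
            default: return "Other user"; // placeholder for the success case
        }
    }

    // Enumerates all nine cells of the 3x3 matrix and keeps the distinct outcomes.
    public static IReadOnlyCollection<string> DistinctOutcomes()
    {
        var statuses = (LookupStatus[])Enum.GetValues(typeof(LookupStatus));
        return statuses
            .SelectMany(u => statuses.Select(o => Outcome(u, o)))
            .Distinct()
            .ToList();
    }
}
```

Two of the three rows contain a single message each, while the top row contributes three different outcomes, which is where the count of five comes from.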

When both users are found, the other user should be returned in the HTTP response, like this:

    HTTP/1.1 200 OK
    Content-Type: application/json

    {
        "id": 1337,
        "name": "ploeh",
        "connections": [{
            "id": 42,
            "name": "fnaah"
        }, {
            "id": 2112,
            "name": "ndøh"
        }]
    }

The intent is that when the first user (e.g. the one with the 42 ID) successfully connects to user 1337, a user interface can show the full details of the other user, including the other user's connections.

Happy path test case #

Since there are five distinct outcomes, you ought to write at least five test cases. You could start with the happy-path case, where both user IDs are well-formed and the users exist.

All tests in this article use xUnit.net 2.3.1, Moq 4.8.1, and AutoFixture 4.1.0.

    [Theory, UserManagementTestConventions]
    public void UsersSuccessfullyConnect(
        [Frozen]Mock<IUserReader> readerTD,
        [Frozen]Mock<IUserRepository> repoTD,
        User user,
        User otherUser,
        ConnectionsController sut)
    {
        readerTD
            .Setup(r => r.Lookup(user.Id.ToString()))
            .Returns(Result.Success<User, IUserLookupError>(user));
        readerTD
            .Setup(r => r.Lookup(otherUser.Id.ToString()))
            .Returns(Result.Success<User, IUserLookupError>(otherUser));

        var actual = sut.Post(user.Id.ToString(), otherUser.Id.ToString());

        var ok = Assert.IsAssignableFrom<OkNegotiatedContentResult<User>>(
            actual);
        Assert.Equal(otherUser, ok.Content);
        repoTD.Verify(r => r.Update(user));
        Assert.Contains(otherUser.Id, user.Connections);
    }

To be clear, as far as Overspecified Software goes, this isn't a bad test. It only has two Test Doubles, readerTD and repoTD. My current habit is to name any Test Double with the TD suffix (for Test Double), instead of explicitly naming them readerStub and repoMock. The latter would have been more correct, though, since the Mock<IUserReader> object is consistently used as a Stub, whereas the Mock<IUserRepository> object is used only as a Mock. This is as it should be, because it follows the rule that you should use Mocks for Commands, Stubs for Queries.

IUserRepository.Update is, indeed, a Command:

    public interface IUserRepository
    {
        void Update(User user);
    }

Since the method returns void, unless it doesn't do anything at all, the only thing it can do is to somehow change the state of the system. The test verifies that IUserRepository.Update was invoked with the appropriate input argument.

This is fine.

I'd like to emphasise that this isn't the biggest problem with this test. A Mock like this verifies that a desired interaction took place. If IUserRepository.Update isn't called in this test case, it would constitute a defect. The software wouldn't have the desired behaviour, so the test ought to fail.

The signature of IUserReader.Lookup, on the other hand, implies that it's a Query:

    public interface IUserReader
    {
        IResult<User, IUserLookupError> Lookup(string id);
    }

In C# and most other languages, you can't be sure that implementations of the Lookup method have no side effects. If, however, we assume that the code base in question obeys the Command Query Separation principle, then, by elimination, this must be a Query (since it's not a Command, because the return type isn't void).

For a detailed walkthrough of the IResult<S, E> interface, see my Preserved in translation video. It's just an Either with different terminology, though. Right is equivalent to SuccessResult, and Left corresponds to ErrorResult.
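If Either is unfamiliar, the following is a minimal sketch of the idea. It is not the IResult<S, E> interface from the code base (that API is explained in the video); the Either class and its Match method are assumptions made up for this illustration.

```csharp
using System;

// Hypothetical, minimal Either-like type. It is NOT the IResult<S, E> interface
// from the article's code base; it only illustrates the Success/Error idea.
public abstract class Either<L, R>
{
    // Collapses both cases into a single value: onLeft handles the error
    // case and onRight handles the success case.
    public abstract T Match<T>(Func<L, T> onLeft, Func<R, T> onRight);

    public static Either<L, R> Left(L value) => new LeftCase(value);
    public static Either<L, R> Right(R value) => new RightCase(value);

    private sealed class LeftCase : Either<L, R>
    {
        private readonly L value;
        public LeftCase(L value) { this.value = value; }
        public override T Match<T>(Func<L, T> onLeft, Func<R, T> onRight) => onLeft(value);
    }

    private sealed class RightCase : Either<L, R>
    {
        private readonly R value;
        public RightCase(R value) { this.value = value; }
        public override T Match<T>(Func<L, T> onLeft, Func<R, T> onRight) => onRight(value);
    }
}
```

A value is either a Left or a Right, never both, and the only way to get anything out is to supply a handler for each case. That is what makes it a convenient return type for a lookup that can fail.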

The test configures the IUserReader Stub twice. It's necessary to give the Stub some behaviour, but unfortunately you can't just use Moq's It.IsAny<string>() for configuration, because in order to model the test case, the reader should return two different objects for two different inputs.

This starts to look like Overspecified Software.

Ideally, a Stub should just be present to 'make happy noises' in case the SUT decides to interact with the dependency, but with these two Setup calls, the interaction is overspecified. The test is tightly coupled to how the SUT is implemented. If you change the interaction implemented in the Post method, you could break the test.

In any case, what the test does specify is that when the UserReader returns a Success object for both user lookups, the Post method returns a 200 OK result and calls the Update method with user.

Invalid user ID test case #

If the first user ID is invalid (i.e. not an integer) then the return value should represent 400 Bad Request and the message body should indicate as much. This test verifies that this is the case:

    [Theory, UserManagementTestConventions]
    public void UsersFailToConnectWhenUserIdIsInvalid(
        [Frozen]Mock<IUserReader> readerTD,
        [Frozen]Mock<IUserRepository> repoTD,
        string userId,
        User otherUser,
        ConnectionsController sut)
    {
        Assert.False(int.TryParse(userId, out var _));
        readerTD
            .Setup(r => r.Lookup(userId))
            .Returns(Result.Error<User, IUserLookupError>(
                UserLookupError.InvalidId));

        var actual = sut.Post(userId, otherUser.Id.ToString());

        var err = Assert.IsAssignableFrom<BadRequestErrorMessageResult>(actual);
        Assert.Equal("Invalid user ID.", err.Message);
        repoTD.Verify(r => r.Update(It.IsAny<User>()), Times.Never());
    }

This test starts with a Guard Assertion that userId isn't an integer. This is mostly an artefact of using AutoFixture. Had you used specific example values, then this wouldn't have been necessary. On the other hand, had you written the test case as a property-based test, it would have been even more important to explicitly encode such a constraint.

Perhaps a better design would have been to use a domain-specific method to check for the validity of the ID, but there's always room for improvement.
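As a rough sketch of what such a domain-specific check could look like, consider the following. The UserId class and its IsValid method are hypothetical, and the rule that a valid ID is any string that parses as a 32-bit integer is an assumption based on the int.TryParse Guard Assertion above.

```csharp
using System.Globalization;

// Hypothetical helper; the article's code base may model this differently.
public static class UserId
{
    // Assumption: an ID is valid when the whole string parses as a
    // 32-bit integer, independent of the current culture.
    public static bool IsValid(string candidate)
    {
        return int.TryParse(
            candidate,
            NumberStyles.Integer,
            CultureInfo.InvariantCulture,
            out _);
    }
}
```

With a method like that, the Guard Assertion could read Assert.False(UserId.IsValid(userId)), and the test would no longer need to know how the validity rule is implemented.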

This test is more brittle than it looks. It only defines what should happen when IUserReader.Lookup is called with the invalid userId. What happens if IUserReader.Lookup is called with the Id associated with otherUser?

This currently doesn't matter, so the test passes.

The test relies, however, on an implementation detail. This test implicitly assumes that the implementation short-circuits as soon as it discovers that userId is invalid. What if, however, you'd made some performance measurements, and you'd discovered that in most cases, the software would run faster if you Lookup both users in parallel?

Such an innocuous performance optimisation could break the test, because the behaviour of readerTD is unspecified for all other cases than for userId.
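To make that failure mode concrete, here's a deliberately simplified sketch. Nothing in it comes from the code base or from Moq: StrictReaderStub, Setup, LookupError, and the Posts class are hypothetical, a lookup is reduced to 'an error message, or null for success', and the stub is assumed to throw for any ID it wasn't configured for.

```csharp
using System;
using System.Collections.Generic;

// All names are hypothetical and heavily simplified; this is neither Moq
// nor the article's ConnectionsController.
public sealed class StrictReaderStub
{
    private readonly Dictionary<string, string> answers =
        new Dictionary<string, string>();

    // Configures the answer for one specific ID: an error message,
    // or null to signal a successful lookup.
    public void Setup(string id, string errorOrNull) { answers[id] = errorOrNull; }

    // Assumption: the stub is strict, so any ID it wasn't configured for
    // makes it throw instead of quietly returning a default value.
    public string LookupError(string id)
    {
        if (answers.TryGetValue(id, out var error))
            return error;
        throw new InvalidOperationException("No setup for ID " + id);
    }
}

public static class Posts
{
    // Stops at the first failing lookup, so the second ID is never queried.
    public static string ShortCircuit(StrictReaderStub reader, string userId, string otherUserId)
    {
        var userError = reader.LookupError(userId);
        if (userError != null) return userError;
        return reader.LookupError(otherUserId) ?? "OK";
    }

    // Queries both IDs up front, as an eager or parallel variant might,
    // and only then decides which result to report.
    public static string Eager(StrictReaderStub reader, string userId, string otherUserId)
    {
        var userError = reader.LookupError(userId);
        var otherError = reader.LookupError(otherUserId);
        return userError ?? otherError ?? "OK";
    }
}
```

When the stub knows both IDs, the two variants return the same answers. Configured only with Setup("foo", "Invalid user ID."), ShortCircuit still returns the error message, while Eager throws because the stub has no answer for the other ID. The behaviour didn't change; only the interaction did.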

Invalid ID for other user test case #

What happens if the other user ID is invalid? This unit test exercises that test case:

    [Theory, UserManagementTestConventions]
    public void UsersFailToConnectWhenOtherUserIdIsInvalid(
        [Frozen]Mock<IUserReader> readerTD,
        [Frozen]Mock<IUserRepository> repoTD,
        User user,
        string otherUserId,
        ConnectionsController sut)
    {
        Assert.False(int.TryParse(otherUserId, out var _));
        readerTD
            .Setup(r => r.Lookup(user.Id.ToString()))
            .Returns(Result.Success<User, IUserLookupError>(user));
        readerTD
            .Setup(r => r.Lookup(otherUserId))
            .Returns(Result.Error<User, IUserLookupError>(
                UserLookupError.InvalidId));

        var actual = sut.Post(user.Id.ToString(), otherUserId);

        var err = Assert.IsAssignableFrom<BadRequestErrorMessageResult>(actual);
        Assert.Equal("Invalid ID for other user.", err.Message);
        repoTD.Verify(r => r.Update(It.IsAny<User>()), Times.Never());
    }

Notice how the test configures readerTD twice: once for the Id associated with user, and once for otherUserId. Why does this test look different from the previous test?

Why is the first Setup required? Couldn't the arrange phase of the test just look like the following?

    Assert.False(int.TryParse(otherUserId, out var _));
    readerTD
        .Setup(r => r.Lookup(otherUserId))
        .Returns(Result.Error<User, IUserLookupError>(
            UserLookupError.InvalidId));

If you wrote the test like that, it would resemble the previous test (UsersFailToConnectWhenUserIdIsInvalid). The problem, though, is that if you remove the Setup for the valid user, the test fails.

This is another example of how the use of interaction-based testing makes the tests brittle. The tests are tightly coupled to the implementation.

Missing users test cases #

I don't want to belabour the point, so here are the two remaining tests:

    [Theory, UserManagementTestConventions]
    public void UsersDoNotConnectWhenUserDoesNotExist(
        [Frozen]Mock<IUserReader> readerTD,
        [Frozen]Mock<IUserRepository> repoTD,
        string userId,
        User otherUser,
        ConnectionsController sut)
    {
        readerTD
            .Setup(r => r.Lookup(userId))
            .Returns(Result.Error<User, IUserLookupError>(
                UserLookupError.NotFound));

        var actual = sut.Post(userId, otherUser.Id.ToString());

        var err = Assert.IsAssignableFrom<BadRequestErrorMessageResult>(actual);
        Assert.Equal("User not found.", err.Message);
        repoTD.Verify(r => r.Update(It.IsAny<User>()), Times.Never());
    }

    [Theory, UserManagementTestConventions]
    public void UsersDoNotConnectWhenOtherUserDoesNotExist(
        [Frozen]Mock<IUserReader> readerTD,
        [Frozen]Mock<IUserRepository> repoTD,
        User user,
        int otherUserId,
        ConnectionsController sut)
    {
        readerTD
            .Setup(r => r.Lookup(user.Id.ToString()))
            .Returns(Result.Success<User, IUserLookupError>(user));
        readerTD
            .Setup(r => r.Lookup(otherUserId.ToString()))
            .Returns(Result.Error<User, IUserLookupError>(
                UserLookupError.NotFound));

        var actual = sut.Post(user.Id.ToString(), otherUserId.ToString());

        var err = Assert.IsAssignableFrom<BadRequestErrorMessageResult>(actual);
        Assert.Equal("Other user not found.", err.Message);
        repoTD.Verify(r => r.Update(It.IsAny<User>()), Times.Never());
    }

Again, notice the asymmetry of these two tests. The top one passes with only one Setup of readerTD, whereas the bottom test requires two in order to pass.

You can add a second Setup to the top test to make the two tests equivalent, but people often forget to take such precautions. The result is Fragile Tests.

Post implementation #

In the spirit of test-driven development, I've shown you the tests before the implementation.

    public class ConnectionsController : ApiController
    {
        public ConnectionsController(
            IUserReader userReader,
            IUserRepository userRepository)
        {
            UserReader = userReader;
            UserRepository = userRepository;
        }

        public IUserReader UserReader { get; }
        public IUserRepository UserRepository { get; }

        public IHttpActionResult Post(string userId, string otherUserId)
        {
            var userRes = UserReader.Lookup(userId).SelectError(
                error => error.Accept(UserLookupError.Switch(
                    onInvalidId: "Invalid user ID.",
                    onNotFound: "User not found.")));
            var otherUserRes = UserReader.Lookup(otherUserId).SelectError(
                error => error.Accept(UserLookupError.Switch(
                    onInvalidId: "Invalid ID for other user.",
                    onNotFound: "Other user not found.")));

            var connect =
                from user in userRes
                from otherUser in otherUserRes
                select Connect(user, otherUser);

            return connect.SelectBoth(Ok, BadRequest).Bifold();
        }

        private User Connect(User user, User otherUser)
        {
            user.Connect(otherUser);
            UserRepository.Update(user);

            return otherUser;
        }
    }

This is a simplified version of the code shown towards the end of my Preserved in translation video, so I'll refer you there for a detailed explanation.

Summary #

The premise of Refactoring is that in order to be able to refactor, the "precondition is [...] solid tests". In reality, many development organisations have the opposite experience. When programmers attempt to make changes to how their code is organised, tests break. In xUnit Test Patterns this problem is called Fragile Tests, and the cause is often Overspecified Software. This means that tests are tightly coupled to implementation details of the System Under Test (SUT).

It's easy to inadvertently fall into this trap when you use Mocks and Stubs, even when you follow the rule of using Mocks for Commands and Stubs for Queries. In my experience, it's often the explicit configuration of Stubs that tends to make tests brittle. A Command represents an intentional side effect, and you want to verify that such a side effect takes place. A Query, on the other hand, has no side effect, so a black-box test shouldn't be concerned with any interactions involving Queries.

Yet, using an 'isolation framework' such as Moq, FakeItEasy, NSubstitute, and so on, will pull you towards overspecifying the interactions the SUT has with its Query dependencies.

How can we improve? One strategy is to move towards a more functional design, which is intrinsically testable. In the next article, you'll see how to rewrite both tests and implementation in Haskell.

Comments

Hi Mark,

I think I came to the same conclusion (maybe not the same solution), meaning you can't write solid tests when mocking all the dependencies interaction : all these dependencies interaction are implementation details (even the database system you chose). For writing solid tests I chose to write my tests like this : start all the services I can in test environment (database, queue ...), mock only things I have no choice (external PSP or Google Captcha), issue command (using MediatR) and check the result with a query. You can find some of my work here . The work is not done on all the tests but this is the way I want to go. Let me know what you think about it.

I could have launched the tests at the Controller level but I chose Command and Query handler.

Can't wait to see your solution

Rémi, thank you for writing. Hosting services as part of a test run can be a valuable addition to an overall testing or release pipeline. It's reminiscent of the approach taken in GOOS. I've also touched on this option in my Pluralsight course Outside-In Test-Driven Development. This is, however, a set of tests I would identify as belonging towards the top of a Test Pyramid. In my experience, such tests tend to run (an order of magnitude) slower than unit tests.

That doesn't preclude their use. Depending on circumstances, I still prefer having tests like that. I think that I've written a few applications where tests like that constituted the main body of unit tests.

I do, however, also find this style of testing too limiting in many situations. I tend to prefer 'real' unit tests, since they tend to be easier to write, and they execute faster.

Apart from performance and maintainability concerns, one problem that I often see with integration tests is that it's practically impossible to cover all edge cases. This tends to lead to either bug-ridden software, or unmaintainable test suites.

Still, I think that, ultimately, having enough experience with different styles of testing enables one to make an informed choice. That's my purpose with these articles: to point out that alternatives exist.

From interaction-based to state-based testing

Indiscriminate use of Mocks and Stubs can lead to brittle test suites. A more functional design can make state-based testing easier, leading to more robust test suites.

The original premise of Refactoring was that in order to refactor, you must have a trustworthy suite of unit tests, so that you can be confident that you didn't break any functionality.

"to refactor, the essential precondition is [...] solid tests"

The idea is that you can change how the code is organised, and as long as you don't break any tests, all is good. The experience that most people seem to have, though, is that when they change something in the code, tests break.

This is a well-known test smell. In xUnit Test Patterns this is called Fragile Test, and it's often caused by Overspecified Software. Even if you follow the proper practice of using Mocks for Commands, Stubs for Queries, you can still end up with a code base where the tests are highly coupled to implementation details of the software.

The cause is often that when relying on Mocks and Stubs, test verification hinges on how the System Under Test (SUT) interacts with its dependencies. For that reason, we can call such tests interaction-based tests. For more information, watch my Pluralsight course Advanced Unit Testing.

Lessons from functional programming #

Another way to verify the outcome of a test is to inspect the state of the system after exercising the SUT. We can, quite naturally, call this state-based testing. In object-oriented design, this can lead to other problems. Nat Pryce has pointed out that state-based testing breaks encapsulation.

Interestingly, in his article, Nat Pryce concludes:

"I have come to think of object oriented programming as an inversion of functional programming. In a lazy functional language data is pulled through functions that transform the data and combine it into a single result. In an object oriented program, data is pushed out in messages to objects that transform the data and push it out to other objects for further processing."

That's an impressively perceptive observation to make in 2004. I wish I was that perspicacious, but I only reached a similar conclusion ten years later.

Functional programming is based on the fundamental principle of referential transparency, which, among other things, means that data must be immutable. Thus, no objects change state. Instead, functions can return data that contains immutable state. In unit tests, you can verify that return values are as expected. Functional design is intrinsically testable; we can consider it a kind of state-based testing, although the states you'd be verifying are immutable return values.
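As a small illustration of verifying immutable return values, here's a sketch in C#. The Connections class and its Add method are hypothetical; they aren't taken from the code base of this article series.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not taken from the article series' code base.
public static class Connections
{
    // Pure function: returns a new list with otherUserId appended and
    // leaves the input list untouched.
    public static IReadOnlyList<int> Add(IReadOnlyList<int> connections, int otherUserId)
    {
        return connections.Concat(new[] { otherUserId }).ToList();
    }
}
```

A test of such a function needs no Test Doubles. It calls Add with a list containing 42 and the ID 1337, and then compares the return value with the expected list of 42 and 1337. The 'state' being verified is just the returned value.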

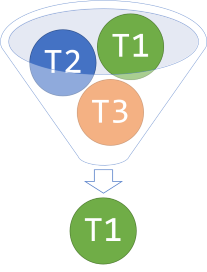

In this article series, you'll see three different styles of testing, from interaction-based testing with Mocks and Stubs in C#, over strictly functional state-based testing in Haskell, to pragmatic state-based testing in F#, finally looping back to C# to apply the lessons from functional programming.

- An example of interaction-based testing in C#

- An example of state-based testing in Haskell

- An example of state based-testing in F#

- An example of state-based testing in C#

- A pure Test Spy

Summary #

Adopting a more functional design, even in a fundamentally object-oriented language like C#, can, in my experience, lead to a more sustainable code base. Various maintenance tasks become easier, including unit tests. Functional programming, however, is no panacea. My intent with this article series is only to inspire; to show alternatives to the ways things are normally done. Adopting one of those alternatives could lead to better code, but you must still exercise context-specific judgement.

Asynchronous Injection

How to combine asynchronous programming with Dependency Injection without leaky abstractions.

C# has decent support for asynchronous programming, but it ultimately leads to leaky abstractions. This is often conspicuous when combined with Dependency Injection (DI). This leads to frequently asked questions around the combination of DI and asynchronous programming. This article outlines the problem and suggests an alternative.

The code base supporting this article is available on GitHub.

A synchronous example #

In this article, you'll see various stages of a small sample code base that pretends to implement the server-side behaviour of an on-line restaurant reservation system (my favourite example scenario). In the first stage, the code uses DI, but no asynchronous I/O.

At the boundary of the application, a Post method receives a Reservation object:

    public class ReservationsController : ControllerBase
    {
        public ReservationsController(IMaîtreD maîtreD)
        {
            MaîtreD = maîtreD;
        }

        public IMaîtreD MaîtreD { get; }

        public IActionResult Post(Reservation reservation)
        {
            int? id = MaîtreD.TryAccept(reservation);
            if (id == null)
                return InternalServerError("Table unavailable");
            return Ok(id.Value);
        }
    }

The Reservation object is just a simple bundle of properties:

    public class Reservation
    {
        public DateTimeOffset Date { get; set; }
        public string Email { get; set; }
        public string Name { get; set; }
        public int Quantity { get; set; }
        public bool IsAccepted { get; set; }
    }

In a production code base, I'd favour a separation of DTOs and domain objects with proper encapsulation, but in order to keep the code example simple, here the two roles are combined.

The Post method simply delegates most work to an injected IMaîtreD object, and translates the return value to an HTTP response.

The code example is overly simplistic, to the point where you may wonder what the point of DI is, since it seems that the Post method doesn't perform any work itself. A slightly more realistic example includes some input validation and mapping between layers.

The IMaîtreD implementation is this:

    public class MaîtreD : IMaîtreD
    {
        public MaîtreD(int capacity, IReservationsRepository repository)
        {
            Capacity = capacity;
            Repository = repository;
        }

        public int Capacity { get; }
        public IReservationsRepository Repository { get; }

        public int? TryAccept(Reservation reservation)
        {
            var reservations = Repository.ReadReservations(reservation.Date);
            int reservedSeats = reservations.Sum(r => r.Quantity);

            if (Capacity < reservedSeats + reservation.Quantity)
                return null;

            reservation.IsAccepted = true;
            return Repository.Create(reservation);
        }
    }

The protocol for the TryAccept method is that it returns the reservation ID if it accepts the reservation. If the restaurant has too little remaining Capacity for the requested date, it instead returns null. Regular readers of this blog will know that I'm no fan of null, but this keeps the example realistic. I'm also no fan of state mutation, but the example does that as well, by setting IsAccepted to true.

Introducing asynchrony #

The above example is entirely synchronous, but perhaps you wish to introduce some asynchrony. For example, the IReservationsRepository implies synchrony:

    public interface IReservationsRepository
    {
        Reservation[] ReadReservations(DateTimeOffset date);
        int Create(Reservation reservation);
    }

In reality, though, you know that the implementation of this interface queries and writes to a relational database. Perhaps making this communication asynchronous could improve application performance. It's worth a try, at least.

How do you make something asynchronous in C#? You change the return type of the methods in question. Therefore, you have to change the IReservationsRepository interface:

    public interface IReservationsRepository
    {
        Task<Reservation[]> ReadReservations(DateTimeOffset date);
        Task<int> Create(Reservation reservation);
    }

The Repository methods now return Tasks. This is the first leaky abstraction. From the Dependency Inversion Principle it follows that

"clients [...] own the abstract interfaces"

The MaîtreD class is the client of the IReservationsRepository interface, which should be designed to support the needs of that class. MaîtreD doesn't need IReservationsRepository to be asynchronous.

The change of the interface has nothing to do with what MaîtreD needs, but rather with a particular implementation of the IReservationsRepository interface. Because this implementation queries and writes to a relational database, this implementation detail leaks into the interface definition. It is, therefore, a leaky abstraction.
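To see why the Task return types say more about one implementation than about the client, consider a hypothetical in-memory implementation. It isn't part of the code base supporting this article; the InMemoryReservationsRepository name, the same-day filter, and the use of the list count as an ID are all assumptions made for illustration. Nothing in it is asynchronous, yet it has to wrap its results with Task.FromResult in order to satisfy the interface:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public class Reservation
{
    public DateTimeOffset Date { get; set; }
    public string Email { get; set; }
    public string Name { get; set; }
    public int Quantity { get; set; }
    public bool IsAccepted { get; set; }
}

public interface IReservationsRepository
{
    Task<Reservation[]> ReadReservations(DateTimeOffset date);
    Task<int> Create(Reservation reservation);
}

// Hypothetical in-memory implementation, for illustration only.
public class InMemoryReservationsRepository : IReservationsRepository
{
    private readonly List<Reservation> reservations = new List<Reservation>();

    public Task<Reservation[]> ReadReservations(DateTimeOffset date)
    {
        // Assumption: reservations match when they fall on the same day.
        var result = reservations
            .Where(r => r.Date.Date == date.Date)
            .ToArray();
        // No I/O happens here; the Task only satisfies the interface.
        return Task.FromResult(result);
    }

    public Task<int> Create(Reservation reservation)
    {
        reservations.Add(reservation);
        // Assumption: the new count stands in for a database-generated ID.
        return Task.FromResult(reservations.Count);
    }
}
```

The interface forces every implementation to pay for the needs of the one that talks to a database.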

On a more practical level, accommodating the change is easily done. Just add async and await keywords in appropriate places:

    public async Task<int?> TryAccept(Reservation reservation)
    {
        var reservations =
            await Repository.ReadReservations(reservation.Date);
        int reservedSeats = reservations.Sum(r => r.Quantity);

        if (Capacity < reservedSeats + reservation.Quantity)
            return null;

        reservation.IsAccepted = true;
        return await Repository.Create(reservation);
    }

In order to compile, however, you also have to fix the IMaîtreD interface:

    public interface IMaîtreD
    {
        Task<int?> TryAccept(Reservation reservation);
    }

This is the second leaky abstraction, and it's worse than the first. Perhaps you could successfully argue that it was conceptually acceptable to model IReservationsRepository as asynchronous. After all, a Repository conceptually represents a data store, and these are generally out-of-process resources that require I/O.

The IMaîtreD interface, on the other hand, is a domain object. It models how business is done, not how data should be accessed. Why should business logic be asynchronous?

It's hardly news that async and await are infectious. Once you introduce Tasks, it's async all the way!

That doesn't mean that asynchrony isn't one big leaky abstraction. It is.

You've probably already realised what this means in the context of the little example. You must also patch the Post method:

    public async Task<IActionResult> Post(Reservation reservation)
    {
        int? id = await MaîtreD.TryAccept(reservation);
        if (id == null)
            return InternalServerError("Table unavailable");
        return Ok(id.Value);
    }

Pragmatically, I'd be ready to accept the argument that this isn't a big deal. After all, you just replace all return values with Tasks, and add async and await keywords where they need to go. This hardly impacts the maintainability of a code base.

In C#, I'd be inclined to just acknowledge that, hey, there's a leaky abstraction. Moving on...

On the other hand, sometimes people imply that it has to be like this. That there is no other way.

Falsifiable claims like that often get my attention. Oh, really?!

Move impure interactions to the boundary of the system #

We can pretend that Task<T> forms a functor. It's also a monad. Monads are those incredibly useful programming abstractions that have been propagating from their origin in statically typed functional programming languages to more mainstream languages like C#.
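As a rough illustration of the functor claim, here's a sketch of a Select extension method for Task<T>. The TaskFunctor class name is an assumption, and nothing like it is required by the code in this article:

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper class, for illustration only.
public static class TaskFunctor
{
    // Maps the eventual result of a Task without blocking on it.
    public static async Task<TResult> Select<T, TResult>(
        this Task<T> source,
        Func<T, TResult> selector)
    {
        return selector(await source);
    }
}
```

With this in scope, Task.FromResult(21).Select(i => i * 2) is a Task<int> that completes with 42.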

In functional programming, impure interactions happen at the boundary of the system. Taking inspiration from functional programming, you can move the impure interactions to the boundary of the system.

In the interest of keeping the example simple, I'll only move the impure operations one level out: from MaîtreD to ReservationsController. The approach can be generalised, although you may have to look into how to handle pure interactions.

Where are the impure interactions in MaîtreD? They are in the two interactions with IReservationsRepository. The ReadReservations method is non-deterministic, because the same input value can return different results, depending on the state of the database when you call it. The Create method causes a side effect to happen, because it creates a row in the database. This is one way in which the state of the database could change, which makes ReadReservations non-deterministic. Additionally, Create also violates Command Query Separation (CQS) by returning the ID of the row it creates. This, again, is non-deterministic, because the same input value will produce a new return value every time the method is called. (Incidentally, you should design Create methods so that they don't violate CQS.)
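One possible way to keep a Create method within CQS, sketched here under the assumption that the caller can supply the identity, is to pass in a Guid and return nothing. The IReservationsCommands and InMemoryReservationsCommands names are hypothetical and not part of the article's code base, and the Reservation class is reduced to a stand-in:

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the article's Reservation class, reduced to one property.
public class Reservation
{
    public int Quantity { get; set; }
}

// Hypothetical Command-only interface: Create returns no value.
public interface IReservationsCommands
{
    void Create(Guid id, Reservation reservation);
}

// Hypothetical in-memory implementation, for illustration only.
public class InMemoryReservationsCommands : IReservationsCommands
{
    public Dictionary<Guid, Reservation> Rows { get; } =
        new Dictionary<Guid, Reservation>();

    public void Create(Guid id, Reservation reservation)
    {
        // The caller chose the ID, so there's nothing to return.
        Rows.Add(id, reservation);
    }
}
```

Create is still impure, because it has a side effect, but it no longer doubles as a Query.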

Move reservations to a method argument #

The first refactoring is the easiest. Move the ReadReservations method call to the application boundary. In the above state of the code, the TryAccept method unconditionally calls Repository.ReadReservations to populate the reservations variable. Instead of doing this from within TryAccept, just pass reservations as a method argument:

    public async Task<int?> TryAccept(
        Reservation[] reservations,
        Reservation reservation)
    {
        int reservedSeats = reservations.Sum(r => r.Quantity);

        if (Capacity < reservedSeats + reservation.Quantity)
            return null;

        reservation.IsAccepted = true;
        return await Repository.Create(reservation);
    }

This no longer compiles until you also change the IMaîtreD interface:

    public interface IMaîtreD
    {
        Task<int?> TryAccept(Reservation[] reservations, Reservation reservation);
    }

You probably think that this is a much worse leaky abstraction than returning a Task. I'd be inclined to agree, but trust me: ultimately, this will matter not at all.

When you move an impure operation outwards, it means that when you remove it from one place, you must add it to another. In this case, you'll have to query the Repository from the ReservationsController, which also means that you need to add the Repository as a dependency there:

    public class ReservationsController : ControllerBase
    {
        public ReservationsController(
            IMaîtreD maîtreD,
            IReservationsRepository repository)
        {
            MaîtreD = maîtreD;
            Repository = repository;
        }

        public IMaîtreD MaîtreD { get; }
        public IReservationsRepository Repository { get; }

        public async Task<IActionResult> Post(Reservation reservation)
        {
            var reservations =
                await Repository.ReadReservations(reservation.Date);
            int? id = await MaîtreD.TryAccept(reservations, reservation);
            if (id == null)
                return InternalServerError("Table unavailable");
            return Ok(id.Value);
        }
    }

This is a refactoring in the true sense of the word. It just reorganises the code without changing the overall behaviour of the system. Now the Post method has to query the Repository before it can delegate the business decision to MaîtreD.

Separate decision from effect #

As far as I can tell, the main reason to use DI is that some impure interactions are conditional. This is also the case for the TryAccept method. Only if there's sufficient remaining capacity does it call Repository.Create. If it detects that there's too little remaining capacity, it immediately returns null and doesn't call Repository.Create.

In object-oriented code, DI is the most common way to decouple decisions from effects. Imperative code reaches a decision and calls a method on an object based on that decision. The effect of calling the method can vary because of polymorphism.

In functional programming, you typically use a functor like Maybe or Either to separate decisions from effects. You can do the same here.

The protocol of the TryAccept method already communicates the decision reached by the method. An int value is the reservation ID; this implies that the reservation was accepted. On the other hand, null indicates that the reservation was declined.

You can use the same sort of protocol, but instead of returning a Nullable<int>, you can return a Maybe<Reservation>:

    public async Task<Maybe<Reservation>> TryAccept(
        Reservation[] reservations,
        Reservation reservation)
    {
        int reservedSeats = reservations.Sum(r => r.Quantity);

        if (Capacity < reservedSeats + reservation.Quantity)
            return Maybe.Empty<Reservation>();

        reservation.IsAccepted = true;
        return reservation.ToMaybe();
    }

This completely decouples the decision from the effect. By returning Maybe<Reservation>, the TryAccept method communicates the decision it made, while leaving further processing entirely up to the caller.

In this case, the caller is the Post method, which can now compose the result of invoking TryAccept with Repository.Create:

    public async Task<IActionResult> Post(Reservation reservation)
    {
        var reservations =
            await Repository.ReadReservations(reservation.Date);
        Maybe<Reservation> m =
            await MaîtreD.TryAccept(reservations, reservation);
        return await m
            .Select(async r => await Repository.Create(r))
            .Match(
                nothing: Task.FromResult(InternalServerError("Table unavailable")),
                just: async id => Ok(await id));
    }

Notice that the Post method never attempts to extract 'the value' from m. Instead, it injects the desired behaviour (Repository.Create) into the monad. The result of calling Select with an asynchronous lambda expression like that is a Maybe<Task<int>>, which is an awkward combination. You can fix that later.

The Match method is the catamorphism for Maybe. It looks exactly like the Match method on the Church-encoded Maybe. It handles both the case when m is empty, and the case when m is populated. In both cases, it returns a Task<IActionResult>.
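The Maybe<T> type itself isn't shown in this article. As a minimal sketch of what it could look like, assuming only the members used above (Maybe.Empty, ToMaybe, Select, and Match), consider the following. The supporting code base may well define it differently:

```csharp
using System;

// Hypothetical minimal Maybe, for illustration only.
public sealed class Maybe<T>
{
    private readonly bool hasItem;
    private readonly T item;

    // Creates an empty Maybe.
    public Maybe()
    {
        hasItem = false;
        item = default(T);
    }

    // Creates a populated Maybe; null is rejected.
    public Maybe(T item)
    {
        if (item == null)
            throw new ArgumentNullException(nameof(item));

        hasItem = true;
        this.item = item;
    }

    // Functor map: applies selector only when a value is present.
    public Maybe<TResult> Select<TResult>(Func<T, TResult> selector)
    {
        return hasItem
            ? new Maybe<TResult>(selector(item))
            : new Maybe<TResult>();
    }

    // Catamorphism: reduces both cases to a single TResult value.
    public TResult Match<TResult>(TResult nothing, Func<T, TResult> just)
    {
        return hasItem ? just(item) : nothing;
    }
}

public static class Maybe
{
    public static Maybe<T> Empty<T>()
    {
        return new Maybe<T>();
    }

    public static Maybe<T> ToMaybe<T>(this T source)
    {
        return new Maybe<T>(source);
    }
}
```

With these definitions, 21.ToMaybe().Select(i => i * 2).Match(nothing: "none", just: i => i.ToString()) evaluates to "42", while the same pipeline starting from Maybe.Empty<int>() evaluates to "none". There's no member that hands out the contained value directly, which is why callers have to go through Select and Match.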

Synchronous domain logic #

At this point, you have a compiler warning in your code:

Warning CS1998 This async method lacks 'await' operators and will run synchronously. Consider using the 'await' operator to await non-blocking API calls, or 'await Task.Run(...)' to do CPU-bound work on a background thread.

Indeed, the current incarnation of TryAccept is synchronous, so remove the async keyword and change the return type:

    public Maybe<Reservation> TryAccept(
        Reservation[] reservations,
        Reservation reservation)
    {
        int reservedSeats = reservations.Sum(r => r.Quantity);

        if (Capacity < reservedSeats + reservation.Quantity)
            return Maybe.Empty<Reservation>();

        reservation.IsAccepted = true;
        return reservation.ToMaybe();
    }

This requires a minimal change to the Post method: it no longer has to await TryAccept:

    public async Task<IActionResult> Post(Reservation reservation)
    {
        var reservations =
            await Repository.ReadReservations(reservation.Date);
        Maybe<Reservation> m = MaîtreD.TryAccept(reservations, reservation);
        return await m
            .Select(async r => await Repository.Create(r))
            .Match(
                nothing: Task.FromResult(InternalServerError("Table unavailable")),
                just: async id => Ok(await id));
    }

Apart from that, this version of Post is the same as the one above.

Notice that at this point, the domain logic (TryAccept) is no longer asynchronous. The leaky abstraction is gone.

Redundant abstraction #

The overall work is done, but there's some tidying up remaining. If you review the TryAccept method, you'll notice that it no longer uses the injected Repository. You might as well simplify the class by removing the dependency:

    public class MaîtreD : IMaîtreD
    {
        public MaîtreD(int capacity)
        {
            Capacity = capacity;
        }

        public int Capacity { get; }

        public Maybe<Reservation> TryAccept(
            Reservation[] reservations,
            Reservation reservation)
        {
            int reservedSeats = reservations.Sum(r => r.Quantity);

            if (Capacity < reservedSeats + reservation.Quantity)
                return Maybe.Empty<Reservation>();

            reservation.IsAccepted = true;
            return reservation.ToMaybe();
        }
    }

The TryAccept method is now deterministic. The same input will always return the same output. This is not yet a pure function, because it still has a single side effect: it mutates the state of reservation by setting IsAccepted to true. You could, however, without too much trouble refactor Reservation to an immutable Value Object.