ploeh blog danish software design

Rose tree bifunctor

A rose tree forms a bifunctor. An article for object-oriented developers.

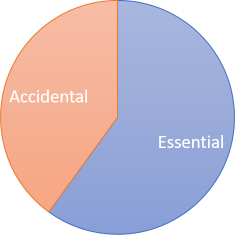

This article is an instalment in an article series about bifunctors. While the overview article explains that there are essentially two practically useful bifunctors, here's a third one: rose trees.

Mapping both dimensions #

Like in the previous article on the Either bifunctor, I'll start by implementing the simultaneous two-dimensional translation SelectBoth:

    public static IRoseTree<N1, L1> SelectBoth<N, N1, L, L1>(
        this IRoseTree<N, L> source,
        Func<N, N1> selectNode,
        Func<L, L1> selectLeaf)
    {
        return source.Cata(
            node: (n, branches) => new RoseNode<N1, L1>(selectNode(n), branches),
            leaf: l => (IRoseTree<N1, L1>)new RoseLeaf<N1, L1>(selectLeaf(l)));
    }

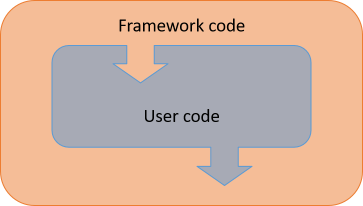

This article uses the previously shown Church-encoded rose tree and its catamorphism Cata.

In the leaf case, the l argument received by the lambda expression is an object of the type L, since the source tree is an IRoseTree<N, L> object; i.e. a tree with leaves of the type L and nodes of the type N. The selectLeaf argument is a function that converts an L object to an L1 object. Since l is an L object, you can call selectLeaf with it to produce an L1 object. You can use this resulting object to create a new RoseLeaf<N1, L1>. Keep in mind that while the RoseLeaf class requires two type arguments, it never requires an object of its N type argument, which means that you can create an object with any node type argument, including N1, even if you don't have an object of that type.

In the node case, the lambda expression receives two objects: n and branches. The n object has the type N, while the branches object has the type IEnumerable<IRoseTree<N1, L1>>. In other words, the branches have already been translated to the desired result type. That's how the catamorphism works. This means that you only have to figure out how to translate the N object n to an N1 object. The selectNode function argument can do that, so you can then create a new RoseNode<N1, L1> and return it.

This works as expected:

    > var tree = RoseTree.Node("foo", new RoseLeaf<string, int>(42), new RoseLeaf<string, int>(1337));
    > tree
    RoseNode<string, int>("foo", IRoseTree<string, int>[2] { 42, 1337 })
    > tree.SelectBoth(s => s.Length, i => i.ToString())
    RoseNode<int, string>(3, IRoseTree<int, string>[2] { "42", "1337" })

This C# Interactive example shows how to convert a tree with internal string nodes and integer leaves to a tree of internal integer nodes and string leaves. The strings are converted to numbers by counting their Length, while the integers are turned into strings using the standard ToString method available on all objects.
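To inspect the result of SelectBoth outside of C# Interactive, it can help to have a textual rendering of a tree. The following is a minimal, self-contained sketch. The interface, the two classes, Node, Cata, and SelectBoth follow the definitions used in this article series (with the Equals and GetHashCode overrides left out for brevity), while Render is a hypothetical helper that isn't part of the original code base.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;
    public RoseLeaf(L value) { this.value = value; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => leaf(value);
}

public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;
    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    { this.value = value; this.branches = branches; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => node(value, branches);
}

public static class RoseTree
{
    public static IRoseTree<N, L> Node<N, L>(N value, params IRoseTree<N, L>[] branches) =>
        new RoseNode<N, L>(value, branches);

    // The catamorphism: every branch is reduced before the node function sees it.
    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf) =>
        tree.Match(
            node: (n, bs) => node(n, bs.Select(t => t.Cata(node, leaf))),
            leaf: leaf);

    public static IRoseTree<N1, L1> SelectBoth<N, N1, L, L1>(
        this IRoseTree<N, L> source,
        Func<N, N1> selectNode,
        Func<L, L1> selectLeaf) =>
        source.Cata(
            node: (n, bs) => new RoseNode<N1, L1>(selectNode(n), bs),
            leaf: l => (IRoseTree<N1, L1>)new RoseLeaf<N1, L1>(selectLeaf(l)));

    // Hypothetical helper (an assumption of this sketch): renders a tree as text.
    public static string Render<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata(
            node: (n, bs) => $"Node({n}: {string.Join(", ", bs)})",
            leaf: l => $"Leaf({l})");
}
```

With these definitions, calling SelectBoth(s => s.Length, i => i.ToString()) on the tree from the session above and then Render on the result produces the text Node(3: Leaf(42), Leaf(1337)).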

Mapping nodes #

When you have SelectBoth, you can trivially implement the translations for each dimension in isolation. For tuple bifunctors, I called these methods SelectFirst and SelectSecond, while for Either bifunctors, I chose to name them SelectLeft and SelectRight. Continuing the trend of naming the translations after what they translate, instead of their positions, I'll name the corresponding methods here SelectNode and SelectLeaf. In Haskell, the functions associated with Data.Bifunctor are always called first and second. There, these functions are part of the Bifunctor type class, so the abstract names serve an actual purpose. This isn't the case in C#, so there's no reason to retain the abstract names. You might as well use names that communicate intent, which is what I've tried to do here.

If you want to map only the internal nodes, you can implement a SelectNode method based on SelectBoth:

    public static IRoseTree<N1, L> SelectNode<N, N1, L>(
        this IRoseTree<N, L> source,
        Func<N, N1> selector)
    {
        return source.SelectBoth(selector, l => l);
    }

This simply uses the l => l lambda expression as an ad-hoc identity function, while passing selector as the selectNode argument to the SelectBoth method.

You can use this to map the above tree to a tree made entirely of numbers:

    > var tree = RoseTree.Node("foo", new RoseLeaf<string, int>(42), new RoseLeaf<string, int>(1337));
    > tree.SelectNode(s => s.Length)
    RoseNode<int, int>(3, IRoseTree<int, int>[2] { 42, 1337 })

Such a tree is, incidentally, isomorphic to a 'normal' tree. It might be a good exercise, if you need one, to demonstrate the isomorphism by writing functions that convert a Tree<T> into an IRoseTree<T, T>, and vice versa.

Mapping leaves #

Similar to SelectNode, you can also trivially implement SelectLeaf:

    public static IRoseTree<N, L1> SelectLeaf<N, L, L1>(
        this IRoseTree<N, L> source,
        Func<L, L1> selector)
    {
        return source.SelectBoth(n => n, selector);
    }

This is another one-liner calling SelectBoth, with the difference that the identity function n => n is passed as the first argument, instead of as the last. This ensures that only RoseLeaf values are mapped:

    > var tree = RoseTree.Node("foo", new RoseLeaf<string, int>(42), new RoseLeaf<string, int>(1337));
    > tree.SelectLeaf(i => i % 2 == 0)
    RoseNode<string, bool>("foo", IRoseTree<string, bool>[2] { true, false })

In the above C# Interactive session, the leaves are mapped to Boolean values, indicating whether they're even or odd.

Identity laws #

Rose trees obey all the bifunctor laws. While it's formal work to prove that this is the case, you can get an intuition for it via examples. Often, I use a property-based testing library like FsCheck or Hedgehog to demonstrate (not prove) that laws hold, but in this article, I'll keep it simple and only cover each law with a parametrised test.

    private static T Id<T>(T x) => x;

    public static IEnumerable<object[]> BifunctorLawsData
    {
        get
        {
            yield return new[] { new RoseLeaf<int, string>("") };
            yield return new[] { new RoseLeaf<int, string>("foo") };
            yield return new[] { RoseTree.Node<int, string>(42) };
            yield return new[] { RoseTree.Node(42, new RoseLeaf<int, string>("bar")) };
            yield return new[] { exampleTree };
        }
    }

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void SelectNodeObeysFirstFunctorLaw(IRoseTree<int, string> t)
    {
        Assert.Equal(t, t.SelectNode(Id));
    }

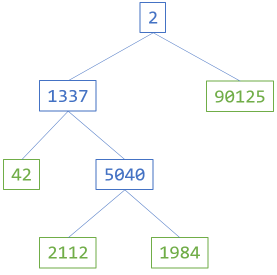

This test uses xUnit.net's [Theory] feature to supply a small set of example input values. The input values are defined by the BifunctorLawsData property, since I'll reuse the same values for all the bifunctor law demonstration tests. The exampleTree object is the tree shown in Church-encoded rose tree.

The tests also use the identity function implemented as a private function called Id, since C# doesn't come equipped with such a function in the Base Class Library.

For all the IRoseTree<int, string> objects t, the test simply verifies that the original tree t is equal to the tree projected over the first axis with the Id function.

Likewise, the first functor law applies when translating over the second dimension:

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void SelectLeafObeysFirstFunctorLaw(IRoseTree<int, string> t)
    {
        Assert.Equal(t, t.SelectLeaf(Id));
    }

This is the same test as the previous test, with the only exception that it calls SelectLeaf instead of SelectNode.

Both SelectNode and SelectLeaf are implemented by SelectBoth, so the real test is whether this method obeys the identity law:

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void SelectBothObeysIdentityLaw(IRoseTree<int, string> t)
    {
        Assert.Equal(t, t.SelectBoth(Id, Id));
    }

Projecting over both dimensions with the identity function does, indeed, return an object equal to the input object.

Consistency law #

In general, it shouldn't matter whether you map with SelectBoth or a combination of SelectNode and SelectLeaf:

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void ConsistencyLawHolds(IRoseTree<int, string> t)
    {
        DateTime f(int i) => new DateTime(i);
        bool g(string s) => string.IsNullOrWhiteSpace(s);

        Assert.Equal(t.SelectBoth(f, g), t.SelectLeaf(g).SelectNode(f));
        Assert.Equal(
            t.SelectNode(f).SelectLeaf(g),
            t.SelectLeaf(g).SelectNode(f));
    }

This example creates two local functions f and g. The first function, f, creates a new DateTime object from an integer, using one of the DateTime constructor overloads. The second function, g, just delegates to string.IsNullOrWhiteSpace, although I want to stress that this is just an example. The law should hold for any two (pure) functions.

The test then verifies that you get the same result from calling SelectBoth as when you call SelectNode followed by SelectLeaf, or the other way around.

Composition laws #

The composition laws insist that you can compose functions, or translations, and that again, the choice to do one or the other doesn't matter. Along each of the axes, it's just the second functor law applied. This parametrised test demonstrates that the law holds for SelectNode:

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void SecondFunctorLawHoldsForSelectNode(IRoseTree<int, string> t)
    {
        char f(bool b) => b ? 'T' : 'F';
        bool g(int i) => i % 2 == 0;

        Assert.Equal(
            t.SelectNode(x => f(g(x))),
            t.SelectNode(g).SelectNode(f));
    }

Here, f is a local function that returns the character 'T' for true, and 'F' for false; g is the even function. The second functor law states that mapping f(g(x)) in a single step is equivalent to first mapping over g and then mapping the result of that using f.

The same law applies if you fix the first dimension and translate over the second:

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void SecondFunctorLawHoldsForSelectLeaf(IRoseTree<int, string> t)
    {
        bool f(int x) => x % 2 == 0;
        int g(string s) => s.Length;

        Assert.Equal(
            t.SelectLeaf(x => f(g(x))),
            t.SelectLeaf(g).SelectLeaf(f));
    }

Here, f is the even function, whereas g is a local function that returns the length of a string. Again, the test demonstrates that the output is the same whether you map over an intermediary step, or whether you map using only a single step.

This generalises to the composition law for SelectBoth:

    [Theory, MemberData(nameof(BifunctorLawsData))]
    public void SelectBothCompositionLawHolds(IRoseTree<int, string> t)
    {
        char f(bool b) => b ? 'T' : 'F';
        bool g(int x) => x % 2 == 0;
        bool h(int x) => x % 2 == 0;
        int i(string s) => s.Length;

        Assert.Equal(
            t.SelectBoth(x => f(g(x)), y => h(i(y))),
            t.SelectBoth(g, i).SelectBoth(f, h));
    }

Again, whether you translate in one or two steps shouldn't affect the outcome.

As all of these tests demonstrate, the bifunctor laws hold for rose trees. The tests only showcase five examples, but I hope they give you an intuition for how any rose tree is a bifunctor. After all, the SelectNode, SelectLeaf, and SelectBoth methods are all generic, and they behave the same for all generic type arguments.
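If you'd like more than five examples without taking a dependency on a property-based testing library, one option is to generate pseudo-random trees and check the laws against those. What follows is only a sketch under several assumptions: LawChecks, RandomTree, and Render are hypothetical names that don't appear in the original code, the tree types are repeated without their Equals overrides so that the listing is self-contained, and comparing rendered text is used as a simple stand-in for structural equality. Like the tests above, it demonstrates the laws for some inputs. It proves nothing.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;
    public RoseLeaf(L value) { this.value = value; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => leaf(value);
}

public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;
    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    { this.value = value; this.branches = branches; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => node(value, branches);
}

public static class RoseTree
{
    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf) =>
        tree.Match(
            node: (n, bs) => node(n, bs.Select(t => t.Cata(node, leaf))),
            leaf: leaf);

    public static IRoseTree<N1, L1> SelectBoth<N, N1, L, L1>(
        this IRoseTree<N, L> source,
        Func<N, N1> selectNode,
        Func<L, L1> selectLeaf) =>
        source.Cata(
            node: (n, bs) => new RoseNode<N1, L1>(selectNode(n), bs),
            leaf: l => (IRoseTree<N1, L1>)new RoseLeaf<N1, L1>(selectLeaf(l)));

    // Hypothetical helper: a textual rendering used as a stand-in for equality.
    public static string Render<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata(
            node: (n, bs) => $"Node({n}: {string.Join(", ", bs)})",
            leaf: l => $"Leaf({l})");
}

// Hypothetical law checks over pseudo-random trees; not part of the original tests.
public static class LawChecks
{
    public static IRoseTree<int, string> RandomTree(Random rnd, int depth)
    {
        if (depth == 0 || rnd.Next(3) == 0)
            return new RoseLeaf<int, string>(new string('x', rnd.Next(4)));
        // ToList fixes the branches, so repeated enumeration sees the same tree.
        var branches = Enumerable.Range(0, rnd.Next(4))
            .Select(_ => RandomTree(rnd, depth - 1))
            .ToList();
        return new RoseNode<int, string>(rnd.Next(100), branches);
    }

    public static bool IdentityHolds(IRoseTree<int, string> t) =>
        t.SelectBoth(n => n, l => l).Render() == t.Render();

    public static bool ConsistencyHolds(IRoseTree<int, string> t)
    {
        Func<int, int> f = n => n * 2;
        Func<string, bool> g = string.IsNullOrWhiteSpace;
        var nodesThenLeaves = t.SelectBoth(f, l => l).SelectBoth(n => n, g);
        var leavesThenNodes = t.SelectBoth(n => n, g).SelectBoth(f, l => l);
        return t.SelectBoth(f, g).Render() == nodesThenLeaves.Render()
            && nodesThenLeaves.Render() == leavesThenNodes.Render();
    }

    public static bool CompositionHolds(IRoseTree<int, string> t)
    {
        Func<int, bool> g = x => x % 2 == 0;
        Func<bool, char> f = b => b ? 'T' : 'F';
        Func<string, int> i = s => s.Length;
        Func<int, bool> h = x => x % 2 == 0;
        return t.SelectBoth(x => f(g(x)), y => h(i(y))).Render()
            == t.SelectBoth(g, i).SelectBoth(f, h).Render();
    }
}
```

A test could then loop over, say, a hundred trees created with LawChecks.RandomTree(new Random(42), 4) and assert that all three checks return true. Seeding the Random instance keeps the run repeatable.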

Summary #

Rose trees are bifunctors. You can translate the node and leaf dimension of a rose tree independently of each other, and the bifunctor laws hold for any pure translation, no matter how you compose the projections.

As always, there can be performance differences between the various compositions, but the outputs will be the same regardless of composition.

A functor, and by extension, a bifunctor, is a structure-preserving map. This means that any projection preserves the structure of the underlying container. For rose trees this means that the shape of the tree remains the same. The number of leaves remains the same, as does the number of internal nodes.
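One way to make the structure-preservation claim concrete is to count internal nodes and leaves before and after a projection. The sketch below assumes the tree types and the Cata and SelectBoth methods as described in this article series (repeated here without equality overrides so that the listing stands alone). Shape is a hypothetical helper introduced only for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;
    public RoseLeaf(L value) { this.value = value; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => leaf(value);
}

public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;
    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    { this.value = value; this.branches = branches; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => node(value, branches);
}

public static class RoseTree
{
    public static IRoseTree<N, L> Node<N, L>(N value, params IRoseTree<N, L>[] branches) =>
        new RoseNode<N, L>(value, branches);

    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf) =>
        tree.Match(
            node: (n, bs) => node(n, bs.Select(t => t.Cata(node, leaf))),
            leaf: leaf);

    public static IRoseTree<N1, L1> SelectBoth<N, N1, L, L1>(
        this IRoseTree<N, L> source,
        Func<N, N1> selectNode,
        Func<L, L1> selectLeaf) =>
        source.Cata(
            node: (n, bs) => new RoseNode<N1, L1>(selectNode(n), bs),
            leaf: l => (IRoseTree<N1, L1>)new RoseLeaf<N1, L1>(selectLeaf(l)));

    // Hypothetical helper: counts internal nodes and leaves in a single pass.
    public static (int Nodes, int Leaves) Shape<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata<N, L, (int Nodes, int Leaves)>(
            node: (_, bs) => bs.Aggregate(
                (Nodes: 1, Leaves: 0),
                (acc, b) => (acc.Nodes + b.Nodes, acc.Leaves + b.Leaves)),
            leaf: _ => (0, 1));
}
```

For any tree t and any pair of pure functions f and g, t.Shape() and t.SelectBoth(f, g).Shape() should be the same pair of numbers, because SelectBoth only replaces the values and never adds or removes a branch.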

Next: Contravariant functors.

Rose tree catamorphism

The catamorphism for a tree with different types of nodes and leaves is made up from two functions.

This article is part of an article series about catamorphisms. A catamorphism is a universal abstraction that describes how to digest a data structure into a potentially more compact value.

This article presents the catamorphism for a rose tree, as well as how to identify it. The beginning of this article presents the catamorphism in C#, with examples. The rest of the article describes how to deduce the catamorphism. This part of the article presents my work in Haskell. Readers not comfortable with Haskell can just read the first part, and consider the rest of the article as an optional appendix.

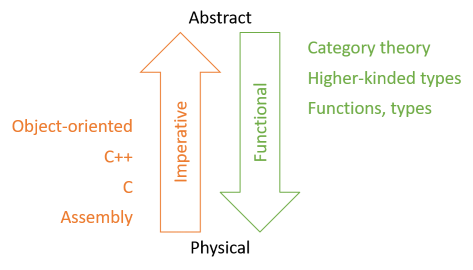

A rose tree is a general-purpose data structure where each node in a tree has an associated value. Each node can have an arbitrary number of branches, including none. What distinguishes a rose tree from just any tree is that internal nodes can hold values of a different type than leaf values.

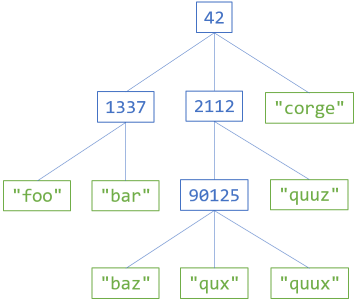

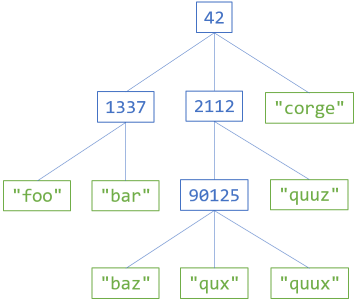

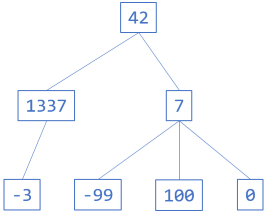

The diagram shows an example of a tree of internal integers and leaf strings. All internal nodes contain integer values, and all leaves contain strings. Each node can have an arbitrary number of branches.

C# catamorphism #

As a C# representation of a rose tree, I'll use the Church-encoded rose tree I've previously described. The catamorphism is this extension method:

    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf)
    {
        return tree.Match(
            node: (n, branches) => node(n, branches.Select(t => t.Cata(node, leaf))),
            leaf: leaf);
    }

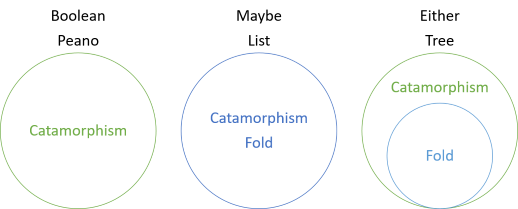

Like most of the other catamorphisms shown in this article series, this one consists of two functions: one that handles the leaf case, and one that handles the partially reduced node case. Compare it with the tree catamorphism: notice that the rose tree catamorphism's node function is identical to the tree catamorphism. The leaf function, however, is new.

In previous articles, you've seen other examples of catamorphisms for Church-encoded types. The most common pattern has been that the Church encoding (the Match method) was also the catamorphism, with the Peano catamorphism being the only exception so far. When it comes to the Peano catamorphism, however, I'm not entirely confident that the difference between Church encoding and catamorphism is real, or whether it's just an artefact of the way I originally designed the Church encoding.

When it comes to the present rose tree, however, notice that the catamorphism is distinctly different from the Church encoding. That's the reason I called the method Cata instead of Match.

The method simply delegates the leaf handler to Match, while it adds behaviour to the node case. It works the same way as for the 'normal' tree catamorphism.
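The difference between Match and Cata shows up in the type of the second argument to the node function. With Match, the branches are still trees. With Cata, each branch has already been reduced to a result. The following sketch illustrates this with two hypothetical helpers, DirectBranchCount and InternalNodeCount, neither of which is part of the original code. The tree types are repeated in abbreviated form so that the example is self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;
    public RoseLeaf(L value) { this.value = value; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => leaf(value);
}

public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;
    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    { this.value = value; this.branches = branches; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => node(value, branches);
}

public static class RoseTree
{
    public static IRoseTree<N, L> Node<N, L>(N value, params IRoseTree<N, L>[] branches) =>
        new RoseNode<N, L>(value, branches);

    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf) =>
        tree.Match(
            node: (n, bs) => node(n, bs.Select(t => t.Cata(node, leaf))),
            leaf: leaf);

    // Match stops at the top level: the branches are still unprocessed trees.
    public static int DirectBranchCount<N, L>(this IRoseTree<N, L> tree) =>
        tree.Match(
            node: (_, branches) => branches.Count(),
            leaf: _ => 0);

    // Cata has already reduced each branch, so counts holds one int per branch.
    public static int InternalNodeCount<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata(
            node: (_, counts) => 1 + counts.Sum(),
            leaf: _ => 0);
}
```

For a chain of three nested nodes ending in a single leaf, DirectBranchCount returns 1, since the root has one branch, while InternalNodeCount returns 3, since the recursion visits every level.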

Examples #

You can use Cata to implement most other behaviour you'd like IRoseTree<N, L> to have. In a future article, you'll see how to turn the rose tree into a bifunctor and functor, so here, we'll look at some other, more ad hoc, examples. As is also the case for the 'normal' tree, you can calculate the sum of all nodes, if you can associate a number with each node.

Consider the example tree in the above diagram. You can create it as an IRoseTree<int, string> object like this:

    IRoseTree<int, string> exampleTree =
        RoseTree.Node(42,
            RoseTree.Node(1337,
                new RoseLeaf<int, string>("foo"),
                new RoseLeaf<int, string>("bar")),
            RoseTree.Node(2112,
                RoseTree.Node(90125,
                    new RoseLeaf<int, string>("baz"),
                    new RoseLeaf<int, string>("qux"),
                    new RoseLeaf<int, string>("quux")),
                new RoseLeaf<int, string>("quuz")),
            new RoseLeaf<int, string>("corge"));

If you want to calculate a sum for a tree like that, you can use the integers for the internal nodes, and perhaps the length of the strings of the leaves. That hardly makes much sense, but is technically possible:

    > exampleTree.Cata((x, xs) => x + xs.Sum(), x => x.Length)
    93641

Perhaps slightly more useful is to count the number of leaves:

    > exampleTree.Cata((_, xs) => xs.Sum(), _ => 1)
    7

A leaf node has, by definition, exactly one leaf node, so the leaf lambda expression always returns 1. In the node case, xs contains the partially summed leaf node count, so just Sum those together while ignoring the value of the internal node.

You can also measure the maximum depth of the tree:

    > exampleTree.Cata((_, xs) => 1 + xs.Max(), _ => 0)
    3

Consistent with the example for 'normal' trees, you can arbitrarily decide that the depth of a leaf node is 0, so again, the leaf lambda expression just returns a constant value. The node lambda expression takes the Max of the partially reduced xs and adds 1, since an internal node represents another level of depth in a tree.
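One caveat with the depth expression is that Enumerable.Max throws an InvalidOperationException when called on an empty sequence of integers, which is what happens for an internal node without branches, such as RoseTree.Node<int, string>(42). If such trees can occur, a variation like the following sketch avoids the exception. Depth is a hypothetical method name, and the decision to count a node without branches as one level is an arbitrary assumption made for this example, just like the decision that a leaf has depth 0.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;
    public RoseLeaf(L value) { this.value = value; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => leaf(value);
}

public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;
    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    { this.value = value; this.branches = branches; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => node(value, branches);
}

public static class RoseTree
{
    public static IRoseTree<N, L> Node<N, L>(N value, params IRoseTree<N, L>[] branches) =>
        new RoseNode<N, L>(value, branches);

    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf) =>
        tree.Match(
            node: (n, bs) => node(n, bs.Select(t => t.Cata(node, leaf))),
            leaf: leaf);

    // Hypothetical variation of the depth example.
    // Assumption: a node without branches counts as one level, so the empty
    // sequence of branch depths is replaced with a single 0 before taking Max.
    public static int Depth<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata(
            node: (_, xs) => 1 + xs.DefaultIfEmpty(0).Max(),
            leaf: _ => 0);
}
```

For trees where every internal node has at least one branch, this returns the same number as the expression in the C# Interactive session above.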

Rose tree F-Algebra #

As in the previous article, I'll use Fix and cata as explained in Bartosz Milewski's excellent article on F-Algebras.

As always, start with the underlying endofunctor:

    data RoseTreeF a b c =
        NodeF { nodeValue :: a, nodes :: ListFix c }
      | LeafF { leafValue :: b }
      deriving (Show, Eq, Read)

    instance Functor (RoseTreeF a b) where
      fmap f (NodeF x ns) = NodeF x $ fmap f ns
      fmap _ (LeafF x) = LeafF x

Instead of using Haskell's standard list ([]) for the nodes, I've used ListFix from the article on list catamorphism. This should, hopefully, demonstrate how you can build on already established definitions derived from first principles.

As usual, I've called the 'data' types a and b, and the carrier type c (for carrier). The Functor instance as usual translates the carrier type; the fmap function has the type (c -> c1) -> RoseTreeF a b c -> RoseTreeF a b c1.

As was the case when deducing the recent catamorphisms, Haskell isn't too happy about defining instances for a type like Fix (RoseTreeF a b). To address that problem, you can introduce a newtype wrapper:

    newtype RoseTreeFix a b =
      RoseTreeFix { unRoseTreeFix :: Fix (RoseTreeF a b) } deriving (Show, Eq, Read)

You can define Bifunctor, Bifoldable, Bitraversable, etc. instances for this type without resorting to any funky GHC extensions. Keep in mind that ultimately, the purpose of all this code is just to figure out what the catamorphism looks like. This code isn't intended for actual use.

A pair of helper functions make it easier to define RoseTreeFix values:

    roseLeafF :: b -> RoseTreeFix a b
    roseLeafF = RoseTreeFix . Fix . LeafF

    roseNodeF :: a -> ListFix (RoseTreeFix a b) -> RoseTreeFix a b
    roseNodeF x = RoseTreeFix . Fix . NodeF x . fmap unRoseTreeFix

roseLeafF creates a leaf node:

Prelude Fix List RoseTree> roseLeafF "ploeh"

RoseTreeFix {unRoseTreeFix = Fix (LeafF "ploeh")}

roseNodeF is a helper function to create internal nodes:

Prelude Fix List RoseTree> roseNodeF 6 (consF (roseLeafF 0) nilF)

RoseTreeFix {unRoseTreeFix = Fix (NodeF 6 (ListFix (Fix (ConsF (Fix (LeafF 0)) (Fix NilF)))))}

Even with helper functions, construction of RoseTreeFix values is cumbersome, but keep in mind that the code shown here isn't meant to be used in practice. The goal is only to deduce catamorphisms from more basic universal abstractions, and you now have all you need to do that.

Haskell catamorphism #

At this point, you have two out of three elements of an F-Algebra. You have an endofunctor (RoseTreeF a b), and an object c, but you still need to find a morphism RoseTreeF a b c -> c. Notice that the algebra you have to find is the function that reduces the functor to its carrier type c, not any of the 'data types' a or b. This takes some time to get used to, but that's how catamorphisms work. This doesn't mean, however, that you get to ignore a or b, as you'll see.

As in the previous articles, start by writing a function that will become the catamorphism, based on cata:

    roseTreeF = cata alg . unRoseTreeFix
      where alg (NodeF x ns) = undefined
            alg (LeafF x) = undefined

While this compiles, with its undefined implementations, it obviously doesn't do anything useful. I find, however, that it helps me think. How can you return a value of the type c from the LeafF case? You could pass a function argument to the roseTreeF function and use it with x:

    roseTreeF fl = cata alg . unRoseTreeFix
      where alg (NodeF x ns) = undefined
            alg (LeafF x) = fl x

While you could, technically, pass an argument of the type c to roseTreeF and then return that value from the LeafF case, that would mean that you would ignore the x value. This would be incorrect, so instead, make the argument a function and call it with x. Likewise, you can deal with the NodeF case in the same way:

    roseTreeF :: (a -> ListFix c -> c) -> (b -> c) -> RoseTreeFix a b -> c
    roseTreeF fn fl = cata alg . unRoseTreeFix
      where alg (NodeF x ns) = fn x ns
            alg (LeafF x) = fl x

This works. Since cata has the type Functor f => (f a -> a) -> Fix f -> a, that means that alg has the type f a -> a. In the case of RoseTreeF, the compiler infers that the alg function has the type RoseTreeF a b c -> c, which is just what you need!

You can now see what the carrier type c is for. It's the type that the algebra extracts, and thus the type that the catamorphism returns.

This, then, is the catamorphism for a rose tree. As has been the most common pattern so far, it's a pair, made from two functions. It's still not the only possible catamorphism, since you could trivially flip the arguments to roseTreeF, or the arguments to fn.

I've chosen the representation shown here because it's similar to the catamorphism I've shown for a 'normal' tree, just with the added function for leaves.

Basis #

You can implement most other useful functionality with roseTreeF. Here's the Bifunctor instance:

    instance Bifunctor RoseTreeFix where
      bimap f s = roseTreeF (roseNodeF . f) (roseLeafF . s)

Notice how naturally the catamorphism implements bimap.

From that instance, the Functor instance trivially follows:

    instance Functor (RoseTreeFix a) where
      fmap = second

You could probably also add Applicative and Monad instances, but I find those hard to grasp, so I'm going to skip them in favour of Bifoldable:

    instance Bifoldable RoseTreeFix where
      bifoldMap f = roseTreeF (\x xs -> f x <> fold xs)

The Bifoldable instance enables you to trivially implement the Foldable instance:

    instance Foldable (RoseTreeFix a) where
      foldMap = bifoldMap mempty

You may find the presence of mempty puzzling, since bifoldMap takes two functions as arguments. Is mempty a function?

Yes, mempty can be a function. Here, it is. There's a Monoid instance for any function a -> m, where m is a Monoid instance, and mempty is the identity for that monoid. That's the instance in use here.

Just as RoseTreeFix is Bifoldable, it's also Bitraversable:

    instance Bitraversable RoseTreeFix where
      bitraverse f s =
        roseTreeF
          (\x xs -> roseNodeF <$> f x <*> sequenceA xs)
          (fmap roseLeafF . s)

You can comfortably implement the Traversable instance based on the Bitraversable instance:

    instance Traversable (RoseTreeFix a) where
      sequenceA = bisequenceA . first pure

That rose trees are Traversable turns out to be useful, as a future article will show.

Relationships #

As was the case for 'normal' trees, the catamorphism for rose trees is more powerful than the fold. There are operations that you can express with the Foldable instance, but other operations that you can't. Consider the tree shown in the diagram at the beginning of the article. This is also the tree that the above C# examples use. In Haskell, using RoseTreeFix, you can define that tree like this:

    exampleTree =
      roseNodeF 42 (
        consF (
          roseNodeF 1337 (
            consF (roseLeafF "foo") $
            consF (roseLeafF "bar") nilF)) $
        consF (
          roseNodeF 2112 (
            consF (
              roseNodeF 90125 (
                consF (roseLeafF "baz") $
                consF (roseLeafF "qux") $
                consF (roseLeafF "quux") nilF)) $
            consF (roseLeafF "quuz") nilF)) $
        consF (
          roseLeafF "corge")
        nilF)

You can trivially calculate the sum of string lengths of all leaves, using only the Foldable instance:

Prelude RoseTree> sum $ length <$> exampleTree

25

You can also fairly easily calculate a sum of all nodes, using the length of the strings as in the above C# example, but that requires the Bifoldable instance:

Prelude Data.Bifoldable Data.Semigroup RoseTree> bifoldMap Sum (Sum . length) exampleTree

Sum {getSum = 93641}

Fortunately, we get the same result as above.

Counting leaves, or measuring the depth of a tree, on the other hand, is impossible with the Foldable instance, but interestingly, it turns out that counting leaves is possible with the Bifoldable instance:

    countLeaves :: (Bifoldable p, Num n) => p a b -> n
    countLeaves = getSum . bifoldMap (const $ Sum 0) (const $ Sum 1)

This works well with the example tree:

Prelude RoseTree> countLeaves exampleTree

7

Notice, however, that countLeaves works for any Bifoldable instance. Does that mean that you can 'count the leaves' of a tuple? Yes, it does:

Prelude RoseTree> countLeaves ("foo", "bar")

1

Prelude RoseTree> countLeaves (1, 42)

1

Or what about EitherFix:

Prelude RoseTree Either> countLeaves $ leftF "foo"

0

Prelude RoseTree Either> countLeaves $ rightF "bar"

1

Notice that 'counting the leaves' of tuples always returns 1, while 'counting the leaves' of Either always returns 0 for Left values, and 1 for Right values. This is because countLeaves considers the left, or first, data type to represent internal nodes, and the right, or second, data type to indicate leaves.

You can further follow that train of thought to realise that you can convert both tuples and EitherFix values to small rose trees:

    fromTuple :: (a, b) -> RoseTreeFix a b
    fromTuple (x, y) = roseNodeF x (consF (roseLeafF y) nilF)

    fromEitherFix :: EitherFix a b -> RoseTreeFix a b
    fromEitherFix = eitherF (`roseNodeF` nilF) roseLeafF

The fromTuple function creates a small rose tree with one internal node and one leaf. The label of the internal node is the first value of the tuple, and the label of the leaf is the second value. Here's an example:

Prelude RoseTree> fromTuple ("foo", 42)

RoseTreeFix {unRoseTreeFix = Fix (NodeF "foo" (ListFix (Fix (ConsF (Fix (LeafF 42)) (Fix NilF)))))}

The fromEitherFix function turns a left value into an internal node with no leaves, and a right value into a leaf. Here are some examples:

Prelude RoseTree Either> fromEitherFix $ leftF "foo"

RoseTreeFix {unRoseTreeFix = Fix (NodeF "foo" (ListFix (Fix NilF)))}

Prelude RoseTree Either> fromEitherFix $ rightF 42

RoseTreeFix {unRoseTreeFix = Fix (LeafF 42)}

While counting leaves can be implemented using Bifoldable, that's not the case for measuring the depths of trees (I think; leave a comment if you know of a way to do this with one of the instances shown here). You can, however, measure tree depth with the catamorphism:

    treeDepth :: RoseTreeFix a b -> Integer
    treeDepth = roseTreeF (\_ xs -> 1 + maximum xs) (const 0)

The implementation is similar to the implementation for 'normal' trees. I've arbitrarily decided that leaves have a depth of zero, so the function that handles leaves always returns 0. The function that handles internal nodes receives xs as a partially reduced list of depths below the node in question. Take the maximum of those and add 1, since each internal node has a depth of one.

Prelude RoseTree> treeDepth exampleTree

3

This, hopefully, illustrates that the catamorphism is more capable, and that the fold is just a (list-biased) specialisation.
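The same point can be illustrated with the C# catamorphism from the first part of the article. A fold over leaves only ever sees the leaves in order, so two trees with the same leaves in the same order are indistinguishable to it, even when their depths differ. In the sketch below, Leaves and Depth are hypothetical helper names (Leaves plays the role of the Haskell toList, and Depth mirrors the depth example above). The tree types are repeated in abbreviated form to keep the listing self-contained.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;
    public RoseLeaf(L value) { this.value = value; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => leaf(value);
}

public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;
    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    { this.value = value; this.branches = branches; }
    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf) => node(value, branches);
}

public static class RoseTree
{
    public static IRoseTree<N, L> Node<N, L>(N value, params IRoseTree<N, L>[] branches) =>
        new RoseNode<N, L>(value, branches);

    public static TResult Cata<N, L, TResult>(
        this IRoseTree<N, L> tree,
        Func<N, IEnumerable<TResult>, TResult> node,
        Func<L, TResult> leaf) =>
        tree.Match(
            node: (n, bs) => node(n, bs.Select(t => t.Cata(node, leaf))),
            leaf: leaf);

    // Hypothetical helper: all leaf values, left to right. This is what a
    // leaf-only fold gets to work with.
    public static IEnumerable<L> Leaves<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata(
            node: (_, bs) => bs.SelectMany(x => x),
            leaf: l => (IEnumerable<L>)new[] { l });

    // Depth as in the C# example; assumes every node has at least one branch.
    public static int Depth<N, L>(this IRoseTree<N, L> tree) =>
        tree.Cata(
            node: (_, xs) => 1 + xs.Max(),
            leaf: _ => 0);
}
```

Consider a node with the two leaves "a" and "b" as direct branches, and another node where "a" is wrapped in an extra internal node. Both have the leaves "a" and "b" in that order, but the first has depth 1 and the second depth 2. Any function defined only in terms of Leaves must return the same result for both trees, so it can't compute the depth.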

Summary #

The catamorphism for rose trees is a pair of functions. One function transforms internal nodes with their partially reduced branches, while the other function transforms leaves.

For a realistic example of using a rose tree in a real program, see Picture archivist in Haskell.

This article series has so far covered progressively more complex data structures. The first examples (Boolean catamorphism and Peano catamorphism) were neither functors, applicatives, nor monads. All subsequent examples, on the other hand, are all of these, and more. The next example presents a functor that's neither applicative nor monad, yet still foldable. Obviously, what functionality it offers is still based on a catamorphism.

Church-encoded rose tree

A rose tree is a tree with leaf nodes of one type, and internal nodes of another.

This article is part of a series of articles about Church encoding. In the previous articles, you've seen how to implement a Maybe container, and how to implement an Either container. Through these examples, you've learned how to model sum types without explicit language support. In this article, you'll see how to model a rose tree.

A rose tree is a general-purpose data structure where each node in a tree has an associated value. Each node can have an arbitrary number of branches, including none. What distinguishes a rose tree from just any tree is that internal nodes can hold values of a different type than leaf values.

The diagram shows an example of a tree of internal integers and leaf strings. All internal nodes contain integer values, and all leaves contain strings. Each node can have an arbitrary number of branches.

Contract #

In C#, you can represent the fundamental structure of a rose tree with a Church encoding, starting with an interface:

    public interface IRoseTree<N, L>
    {
        TResult Match<TResult>(
            Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
            Func<L, TResult> leaf);
    }

The structure of a rose tree includes two mutually exclusive cases: internal nodes and leaf nodes. Since there are two cases, the Match method takes two arguments, one for each case.

The interface is generic, with two type arguments: N (for node) and L (for leaf). Any consumer of an IRoseTree<N, L> object must supply two functions when calling the Match method: a function that turns a node into a TResult value, and a function that turns a leaf into a TResult value.

Both cases must have a corresponding implementation.

Leaves #

The leaf implementation is the simplest:

    public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
    {
        private readonly L value;

        public RoseLeaf(L value)
        {
            this.value = value;
        }

        public TResult Match<TResult>(
            Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
            Func<L, TResult> leaf)
        {
            return leaf(value);
        }

        public override bool Equals(object obj)
        {
            if (!(obj is RoseLeaf<N, L> other))
                return false;

            return Equals(value, other.value);
        }

        public override int GetHashCode()
        {
            return value.GetHashCode();
        }
    }

The RoseLeaf class is an Adapter over a value of the generic type L. As is always the case with Church encoding, it implements the Match method by unconditionally calling one of the arguments, in this case the leaf function, with its adapted value.

While it doesn't have to do this, it also overrides Equals and GetHashCode. This is an immutable class, so it's a great candidate to be a Value Object. Making it a Value Object makes it easier to compare expected and actual values in unit tests, among other benefits.

Nodes #

The node implementation is slightly more complex:

    public sealed class RoseNode<N, L> : IRoseTree<N, L>
    {
        private readonly N value;
        private readonly IEnumerable<IRoseTree<N, L>> branches;

        public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
        {
            this.value = value;
            this.branches = branches;
        }

        public TResult Match<TResult>(
            Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
            Func<L, TResult> leaf)
        {
            return node(value, branches);
        }

        public override bool Equals(object obj)
        {
            if (!(obj is RoseNode<N, L> other))
                return false;

            return Equals(value, other.value)
                && Enumerable.SequenceEqual(branches, other.branches);
        }

        public override int GetHashCode()
        {
            // Combine the hash codes of the individual branches instead of the
            // hash code of the collection object, so that nodes that are equal
            // according to Equals also produce equal hash codes.
            return branches.Aggregate(
                value.GetHashCode(),
                (acc, b) => acc ^ b.GetHashCode());
        }
    }

A node contains both a value (of the type N) and a collection of sub-trees, or branches. The class implements the Match method by unconditionally calling the node function argument with its constituent values.

Again, it overrides Equals and GetHashCode for the same reasons as RoseLeaf. This isn't required to implement Church encoding, but makes comparison and unit testing easier.

Usage #

You can use the RoseLeaf and RoseNode constructors to create new trees, but it sometimes helps to have a static helper method to create values. It turns out that there's little value in a helper method for leaves, but for nodes, it's marginally useful:

    public static IRoseTree<N, L> Node<N, L>(N value, params IRoseTree<N, L>[] branches)
    {
        return new RoseNode<N, L>(value, branches);
    }

This enables you to create tree objects, like this:

    IRoseTree<string, int> tree =
        RoseTree.Node("foo",
            new RoseLeaf<string, int>(42),
            new RoseLeaf<string, int>(1337));

That's a single node with the label "foo" and two leaves with the values 42 and 1337, respectively. You can create the tree shown in the above diagram like this:

    IRoseTree<int, string> exampleTree =
        RoseTree.Node(42,
            RoseTree.Node(1337,
                new RoseLeaf<int, string>("foo"),
                new RoseLeaf<int, string>("bar")),
            RoseTree.Node(2112,
                RoseTree.Node(90125,
                    new RoseLeaf<int, string>("baz"),
                    new RoseLeaf<int, string>("qux"),
                    new RoseLeaf<int, string>("quux")),
                new RoseLeaf<int, string>("quuz")),
            new RoseLeaf<int, string>("corge"));

You can add various extension methods to implement useful functionality. In later articles, you'll see some more compelling examples, so here, I'm only going to show a few basic examples. One of the simplest features you can add is a method that will tell you if an IRoseTree<N, L> object is a node or a leaf:

    public static IChurchBoolean IsLeaf<N, L>(this IRoseTree<N, L> source)
    {
        return source.Match<IChurchBoolean>(
            node: (_, __) => new ChurchFalse(),
            leaf: _ => new ChurchTrue());
    }

    public static IChurchBoolean IsNode<N, L>(this IRoseTree<N, L> source)
    {
        return new ChurchNot(source.IsLeaf());
    }

Since this article is part of the overall article series on Church encoding, and the purpose of that article series is also to show how basic language features can be created from Church encodings, these two methods return Church-encoded Boolean values instead of the built-in bool type. I'm sure you can imagine how you could change the type to bool if you'd like.

You can use these methods like this:

    > IRoseTree<Guid, double> tree = new RoseLeaf<Guid, double>(-3.2);
    > tree.IsLeaf()
    ChurchTrue { }
    > tree.IsNode()
    ChurchNot(ChurchTrue)
    > IRoseTree<long, string> tree = RoseTree.Node<long, string>(42);
    > tree.IsLeaf()
    ChurchFalse { }
    > tree.IsNode()
    ChurchNot(ChurchFalse)
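If you'd rather work with the built-in bool type, a minimal sketch could look like the following. To compile on its own, it restates the IRoseTree interface and abbreviated versions of the two classes (without Equals and GetHashCode). The names RoseTreeBool, IsLeafBool, and IsNodeBool are made up for this illustration and aren't part of the article's code base.

```csharp
using System;
using System.Collections.Generic;

public interface IRoseTree<N, L>
{
    TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf);
}

// Abbreviated leaf: always calls the leaf function.
public sealed class RoseLeaf<N, L> : IRoseTree<N, L>
{
    private readonly L value;

    public RoseLeaf(L value) { this.value = value; }

    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf)
    {
        return leaf(value);
    }
}

// Abbreviated node: always calls the node function.
public sealed class RoseNode<N, L> : IRoseTree<N, L>
{
    private readonly N value;
    private readonly IEnumerable<IRoseTree<N, L>> branches;

    public RoseNode(N value, IEnumerable<IRoseTree<N, L>> branches)
    {
        this.value = value;
        this.branches = branches;
    }

    public TResult Match<TResult>(
        Func<N, IEnumerable<IRoseTree<N, L>>, TResult> node,
        Func<L, TResult> leaf)
    {
        return node(value, branches);
    }
}

public static class RoseTreeBool
{
    // Hypothetical bool-returning counterpart to IsLeaf.
    public static bool IsLeafBool<N, L>(this IRoseTree<N, L> source)
    {
        return source.Match<bool>(
            node: (_, __) => false,
            leaf: _ => true);
    }

    // Hypothetical bool-returning counterpart to IsNode.
    public static bool IsNodeBool<N, L>(this IRoseTree<N, L> source)
    {
        return !source.IsLeafBool();
    }
}
```

With these definitions, IsLeafBool returns true for a RoseLeaf and false for a RoseNode, and IsNodeBool returns the opposite.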

In a future article, you'll see some more compelling examples.

Terminology #

It's not entirely clear what to call a tree like the one shown here. The Wikipedia entry doesn't state one way or the other whether internal node types ought to be distinguishable from leaf node types, but there are indications that this could be the case. At least, it seems that the term isn't well-defined, so I took the liberty to retcon the name rose tree to the data structure shown here.

In the paper that introduces the rose tree term, Meertens writes:

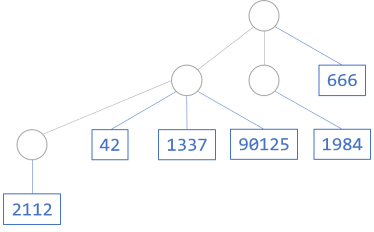

"We consider trees whose internal nodes may fork into an arbitrary (natural) number of sub-trees. (If such a node has zero descendants, we still consider it internal.) Each external node carries a data item. No further information is stored in the tree; in particular, internal nodes are unlabelled."

Unlabelled internal nodes can be modelled with a unit type, that is, a type with exactly one value. While the concept is foreign in C#, you can trivially introduce a unit data type:

    public class Unit
    {
        public readonly static Unit Instance = new Unit();

        private Unit() { }
    }

This enables you to create a rose tree according to Meertens' definition:

    IRoseTree<Unit, int> meertensTree =
        RoseTree.Node(Unit.Instance,
            RoseTree.Node(Unit.Instance,
                RoseTree.Node(Unit.Instance,
                    new RoseLeaf<Unit, int>(2112)),
                new RoseLeaf<Unit, int>(42),
                new RoseLeaf<Unit, int>(1337),
                new RoseLeaf<Unit, int>(90125)),
            RoseTree.Node(Unit.Instance,
                new RoseLeaf<Unit, int>(1984)),
            new RoseLeaf<Unit, int>(666));

Visually, you could draw it like this:

Thus, the tree structure shown here seems to be a generalisation of Meertens' original definition.

I'm not a mathematician, so I may have misunderstood some things. If you have a better name than rose tree for the data structure shown here, please leave a comment.

Yeats #

Now that we're on the topic of rose tree as a term, you may, as a bonus, enjoy a similarly-titled poem:

THE ROSE TREE

"O words are lightly spoken"

Said Pearse to Connolly,

"Maybe a breath of politic words

Has withered our Rose Tree;

Or maybe but a wind that blows

Across the bitter sea."

"It needs to be but watered,"

James Connolly replied,

"To make the green come out again

And spread on every side,

And shake the blossom from the bud

To be the garden's pride."

"But where can we draw water"

Said Pearse to Connolly,

"When all the wells are parched away?

O plain as plain can be

There's nothing but our own red blood

Can make a right Rose Tree."

As far as I can tell, though, Yeats' metaphor is dissimilar to Meertens'.

Summary #

You may occasionally find use for a tree that distinguishes between internal and leaf nodes. You can model such a tree with a Church encoding, as shown in this article.

Next: Catamorphisms.

Comments

If you have a better name than rose tree for the data structure shown here, please leave a comment.

I would consider using heterogeneous rose tree.

In your linked Twitter thread, Keith Battocchi shared a link to the thesis of Jeremy Gibbons (which is titled Algebras for Tree Algorithms). In his thesis, he defines rose tree as you have and then derives from that (on page 45) the special cases that he calls unlabelled rose tree, leaf-labeled rose tree, branch-labeled rose tree, and homogeneous rose tree.

The advantage of Jeremy's approach is that the name of each special case is formed from the name of the general case by adding an adjective. The disadvantage is the ambiguity that comes from being inconsistent with the definition of rose tree compared to both previous works and current usage (as shown by your numerous links).

The goal of naming is communication, and the name rose tree risks miscommunication, which is worse than no communication at all. Miscommunication would result if (for example) you called this heterogeneous rose tree a rose tree, and someone who knows the rose tree definition in Haskell skimmed over your definition, thinking that they already knew it. However, I think you did a good job with this article and made that unlikely.

The advantage of heterogeneous rose tree is that the name is not overloaded and heterogeneous clearly indicates the intended variant. If a reader has heard of a rose tree, then they probably know there are several variants and can infer the correct one from this additional adjective.

In the end though, I think using the name rose tree as you did was a good choice. You have now written several articles involving rose trees and they all use the same variant. Since you always use the same variant, it would be a bit verbose to always include an additional adjective to specify the variant.

The only thing I would have considered changing is the first mention of rose tree in this article. It is common in academic writing to start with the general definition and then give shorter alternatives for brevity. This is one way it could have been written in this article.

A heterogeneous rose tree is a tree with leaf nodes of one type, and internal nodes of another.

This article is part of a series of articles about Church encoding. In the previous articles, you've seen how to implement a Maybe container, and how to implement an Either container. Through these examples, you've learned how to model sum types without explicit language support. In this article, you'll see how to model a heterogeneous rose tree.

A heterogeneous rose tree is a general-purpose data structure where each node in a tree has an associated value. Each node can have an arbitrary number of branches, including none. The distinguishing feature between a heterogeneous rose tree and just any tree is that internal nodes can hold values of a different type than leaf values. For brevity, we will omit heterogeneous and simply call this data structure a rose tree.

Tyson, thank you for writing. I agree that specificity is desirable. I haven't read the Gibbons paper, so I'll have to reflect on your summary. If I understand you correctly, a type like IRoseTree shown in this article constitutes the general case. In Haskell, I simply named it Tree a b, which is probably too general, but may help to illustrate the following point.

As far as I remember, C# doesn't have type aliases, so Haskell makes the point more succinct. If I understand you correctly, then, you could define a heterogeneous rose tree as:

type HeterogeneousRoseTree = Tree

Furthermore, I suppose that a leaf-labeled rose tree is this:

type LeafLabeledRoseTree b = Tree () b

Would the following be a branch-labeled rose tree?

type BranchLabeledRoseTree a = Tree a ()

And this is, I suppose, a homogeneous rose tree:

type HomogeneousRoseTree a = Tree a a

I can't imagine what an unlabelled rose tree is, unless it's this:

type UnlabelledRoseTree = Tree () ()

I don't see how that'd be of much use, but I suppose that's just my lack of imagination.

Chain of Responsibility as catamorphisms

The Chain of Responsibility design pattern can be viewed as a list fold over the First monoid, followed by a Maybe fold.

This article is part of a series of articles about specific design patterns and their category theory counterparts. In it, you'll see how the Chain of Responsibility design pattern is equivalent to a succession of catamorphisms. First, you apply the First Maybe monoid over the list catamorphism, and then you conclude the reduction with the Maybe catamorphism.

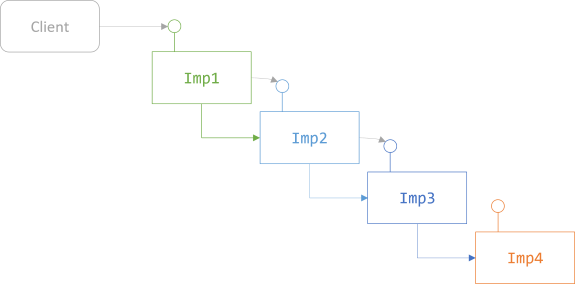

Pattern #

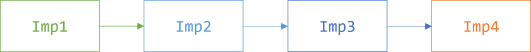

The Chain of Responsibility design pattern gives you a way to model cascading conditionals with an object structure. It's a chain (or linked list) of objects that all implement the same interface (or base class). Each object (apart from the last) has a reference to the next object in the list.

A client (some other code) calls a method on the first object in the list. If that object can handle the request, it does so, and the interaction ends there. If the method returns a value, the object returns the value.

If the first object determines that it can't handle the method call, it calls the next object in the chain. It only knows the next object as the interface, so the only way it can delegate the call is by calling the same method as the first one. In the above diagram, Imp1 can't handle the method call, so it calls the same method on Imp2, which also can't handle the request and delegates responsibility to Imp3. In the diagram, Imp3 can handle the method call, so it does so and returns a result that propagates back up the chain. In that particular example, Imp4 never gets involved.

You'll see an example below.

One of the advantages of the pattern is that you can rearrange the chain to change its behaviour. You can even do this at run time, if you'd like, since all objects implement the same interface.

User icon example #

Consider an online system that maintains user profiles for users. A user is modelled with the User class:

public User(int id, string name, string email, bool useGravatar, bool useIdenticon)

While I only show the signature of the class' constructor, it should be enough to give you an idea. If you need more details, the entire example code base is available on GitHub.

Apart from an id, a name and email address, a user also has two flags. One flag tracks whether the user wishes to use his or her Gravatar, while another flag tracks if the user would like to use an Identicon. Obviously, both flags could be true, in which case the current business rule states that the Gravatar should take precedence.

If none of the flags are set, users might still have a picture associated with their profile. This could be a picture that they've uploaded to the system, and is being tracked by a database.

If no user icon can be found or generated, the system should ultimately fall back to a default icon.

To summarise, the current rules are:

- Use Gravatar if flag is set.

- Use Identicon if flag is set.

- Use uploaded picture if available.

- Use default icon.

To request an icon, a client can use the IIconReader interface:

    public interface IIconReader
    {
        Icon ReadIcon(User user);
    }

The Icon class is just a Value Object wrapper around a URL. The idea is that such a URL can be used in an img tag to show the icon. Again, the full source code is available on GitHub if you'd like to investigate the details.

The various rules for icon retrieval can be implemented using this interface.

Gravatar reader #

Although you don't have to implement the classes in the order in which you are going to compose them, it seems natural to do so, starting with the Gravatar implementation.

    public class GravatarReader : IIconReader
    {
        private readonly IIconReader next;

        public GravatarReader(IIconReader next)
        {
            this.next = next;
        }

        public Icon ReadIcon(User user)
        {
            if (user.UseGravatar)
                return new Icon(new Gravatar(user.Email).Url);

            return next.ReadIcon(user);
        }
    }

The GravatarReader class both implements the IIconReader interface and decorates another object of the same polymorphic type. If user.UseGravatar is true, it generates the appropriate Gravatar URL based on the user's Email address; otherwise, it delegates the work to the next object in the Chain of Responsibility.

The Gravatar class contains the implementation details to generate the Gravatar Url. Again, please refer to the GitHub repository if you're interested in the details.

Identicon reader #

When you compose the chain, according to the above business logic, the next type of icon you should attempt to generate is an Identicon. It's natural to implement the Identicon reader next, then:

    public class IdenticonReader : IIconReader
    {
        private readonly IIconReader next;

        public IdenticonReader(IIconReader next)
        {
            this.next = next;
        }

        public Icon ReadIcon(User user)
        {
            if (user.UseIdenticon)
                return new Icon(new Uri(baseUrl, HashUser(user)));

            return next.ReadIcon(user);
        }

        // Implementation details go here...
    }

Again, I'm omitting implementation details in order to focus on the Chain of Responsibility design pattern. If user.UseIdenticon is true, the IdenticonReader generates the appropriate Identicon and returns the URL for it; otherwise, it delegates the work to the next object in the chain.

Database icon reader #

The DBIconReader class attempts to find an icon ID in a database. If it succeeds, it creates a URL corresponding to that ID. The assumption is that that resource exists; either it's a file on disk, or it's an image resource generated on the spot based on binary data stored in the database.

    public class DBIconReader : IIconReader
    {
        private readonly IUserRepository repository;
        private readonly IIconReader next;

        public DBIconReader(IUserRepository repository, IIconReader next)
        {
            this.repository = repository;
            this.next = next;
        }

        public Icon ReadIcon(User user)
        {
            if (!repository.TryReadIconId(user.Id, out string iconId))
                return next.ReadIcon(user);

            var parameters = new Dictionary<string, string>
            {
                { "iconId", iconId }
            };
            return new Icon(urlTemplate.BindByName(baseUrl, parameters));
        }

        private readonly Uri baseUrl = new Uri("https://example.com");
        private readonly UriTemplate urlTemplate =
            new UriTemplate("users/{iconId}/icon");
    }

This class demonstrates some variations in the way you can implement the Chain of Responsibility design pattern. The above GravatarReader and IdenticonReader classes both follow the same implementation pattern of checking a condition, and then performing work if the condition is true. The delegation to the next object in the chain happens, in those two classes, outside of the if statement.

The DBIconReader class, on the other hand, reverses the structure of the code. It uses a Guard Clause to detect whether to exit early, which is done by delegating work to the next object in the chain.

If TryReadIconId returns true, however, the ReadIcon method proceeds to create the appropriate icon URL.

Another variation on the Chain of Responsibility design pattern demonstrated by the DBIconReader class is that it takes a second dependency, apart from next. The repository is the usual misapplication of the Repository design pattern that everyone thinks they use correctly. Here, it's used in the common sense to provide access to a database. The main point, though, is that you can add as many other dependencies to a link in the chain as you'd like. All links, apart from the last, however, must have a reference to the next link in the chain.

Default icon reader #

Like linked lists, a Chain of Responsibility has to ultimately terminate. You can use the following DefaultIconReader for that.

    public class DefaultIconReader : IIconReader
    {
        public Icon ReadIcon(User user)
        {
            return Icon.Default;
        }
    }

This class unconditionally returns the Default icon. Notice that it doesn't have any next object it delegates to. This terminates the chain. If no previous implementation of the IIconReader has returned an Icon for the user, this one does.

Chain composition #

With four implementations of IIconReader, you can now compose the Chain of Responsibility:

    IIconReader reader =
        new GravatarReader(
            new IdenticonReader(
                new DBIconReader(repo,
                    new DefaultIconReader())));

The first link in the chain is a GravatarReader object that contains an IdenticonReader object as its next link, and so on. Referring back to the source code of GravatarReader, notice that its next dependency is declared as an IIconReader. Since the IdenticonReader class implements that interface, you can compose the chain like this, but if you later decide to change the order of the objects, you can do so simply by changing the composition. You could remove objects altogether, or add new classes, and you could even do this at run time, if required.

The DBIconReader class requires an extra IUserRepository dependency, here simply an existing object called repo.

The DefaultIconReader takes no other dependencies, so this effectively terminates the chain. If you try to pass another IIconReader to its constructor, the code doesn't compile.
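To see the precedence rules play out, here's a stripped-down sketch of the same chain. It's an illustration under simplifying assumptions, not the article's code base: User only carries an email address and the two flags, icons are plain strings instead of Icon objects, the database link is left out, and the ChainDemo class is made up for the example.

```csharp
using System;

// Simplified stand-in for the article's User class.
public sealed class User
{
    public User(string email, bool useGravatar, bool useIdenticon)
    {
        Email = email;
        UseGravatar = useGravatar;
        UseIdenticon = useIdenticon;
    }

    public string Email { get; }
    public bool UseGravatar { get; }
    public bool UseIdenticon { get; }
}

// Same shape as the article's interface, but returning a string.
public interface IIconReader
{
    string ReadIcon(User user);
}

public sealed class GravatarReader : IIconReader
{
    private readonly IIconReader next;

    public GravatarReader(IIconReader next) { this.next = next; }

    public string ReadIcon(User user)
    {
        if (user.UseGravatar)
            return "gravatar:" + user.Email;

        return next.ReadIcon(user);
    }
}

public sealed class IdenticonReader : IIconReader
{
    private readonly IIconReader next;

    public IdenticonReader(IIconReader next) { this.next = next; }

    public string ReadIcon(User user)
    {
        if (user.UseIdenticon)
            return "identicon:" + user.Email;

        return next.ReadIcon(user);
    }
}

// Terminates the chain: it has no next link to delegate to.
public sealed class DefaultIconReader : IIconReader
{
    public string ReadIcon(User user) { return "default"; }
}

public static class ChainDemo
{
    // Gravatar takes precedence over Identicon, which takes
    // precedence over the default icon.
    public static IIconReader Compose()
    {
        return new GravatarReader(
            new IdenticonReader(
                new DefaultIconReader()));
    }
}
```

With this composition, a user with both flags set gets the "gravatar:" result, a user with only UseIdenticon set gets the "identicon:" result, and a user with neither flag set gets "default". Swapping the two outer constructors in Compose would reverse the precedence without touching any of the classes.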

Haskell proof of concept #

When evaluating whether a design is a functional architecture, I often port the relevant parts to Haskell. You can do the same with the above example, and put it in a form where it's clearer that the Chain of Responsibility pattern is equivalent to two well-known catamorphisms.

Readers not comfortable with Haskell can skip the next few sections. The object-oriented example continues below.

User and Icon types are defined by types equivalent to above. There's no explicit interface, however. Creation of Gravatars and Identicons are both pure functions with the type User -> Maybe Icon. Here's the Gravatar function, but the Identicon function looks similar:

    gravatarUrl :: String -> String
    gravatarUrl email =
      "https://www.gravatar.com/avatar/" ++ show (hashString email :: MD5Digest)

    getGravatar :: User -> Maybe Icon
    getGravatar u =
      if useGravatar u
        then Just $ Icon $ gravatarUrl $ userEmail u
        else Nothing

Reading an icon ID from a database, however, is an impure operation, so the function to do this has the type User -> IO (Maybe Icon).

Lazy I/O in Haskell #

Notice that the database icon-querying function has the return type IO (Maybe Icon). In the introduction you read that the Chain of Responsibility design pattern is a sequence of catamorphisms - the first one over a list of First values. While First is, in itself, a Semigroup instance, it gives rise to a Monoid instance when combined with Maybe. Thus, to showcase the abstractions being used, you could create a list of Maybe (First Icon) values. This forms a Monoid, so is easy to fold.

The problem with that, however, is that IO is strict under evaluation, so while it works, it's no longer lazy. You can combine IO (Maybe (First Icon)) values, but it leads to too much I/O activity.

You can solve this problem with a newtype wrapper:

    newtype FirstIO a = FirstIO (MaybeT IO a)
      deriving (Functor, Applicative, Monad, Alternative)

    firstIO :: IO (Maybe a) -> FirstIO a
    firstIO = FirstIO . MaybeT

    getFirstIO :: FirstIO a -> IO (Maybe a)
    getFirstIO (FirstIO (MaybeT x)) = x

    instance Semigroup (FirstIO a) where
      (<>) = (<|>)

    instance Monoid (FirstIO a) where
      mempty = empty

This uses the GeneralizedNewtypeDeriving GHC extension to automatically make FirstIO Functor, Applicative, Monad, and Alternative. It also uses the Alternative instance to implement Semigroup and Monoid. You may recall from the documentation that Alternative is already a "monoid on applicative functors."

Alignment #

You now have three functions with different types: two pure functions with the type User -> Maybe Icon and one impure database-bound function with the type User -> IO (Maybe Icon). In order to have a common abstraction, you should align them so that all types match. At first glance, User -> IO (Maybe (First Icon)) seems like a type that fits all implementations, but that causes too much I/O to take place, so instead, use User -> FirstIO Icon. Here's how to lift the pure getGravatar function:

    getGravatarIO :: User -> FirstIO Icon
    getGravatarIO = firstIO . return . getGravatar

You can lift the other functions in similar fashion, to produce getGravatarIO, getIdenticonIO, and getDBIconIO, all with the mutual type User -> FirstIO Icon.

Haskell composition #

The goal of the Haskell proof of concept is to compose a function that can provide an Icon for any User - just like the above C# composition that uses Chain of Responsibility. There's, however, no way around impurity, because one of the steps involves a database, so the aim is a composition with the type User -> IO Icon.

While a more compact composition is possible, I'll show it in a way that makes the catamorphisms explicit:

    getIcon :: User -> IO Icon
    getIcon u = do
      let lazyIcons = fmap (\f -> f u) [getGravatarIO, getIdenticonIO, getDBIconIO]
      m <- getFirstIO $ fold lazyIcons
      return $ fromMaybe defaultIcon m

The getIcon function starts with a list of all three functions. For each of them, it calls the function with the User value u. This may seem inefficient and redundant, because all three function calls may not be required, but since the return values are FirstIO values, all three function calls are lazily evaluated - even under IO. The result, lazyIcons, is a [FirstIO Icon] value; i.e. a lazily evaluated list of lazily evaluated values.

This first step is just to put the potential values in a form that's recognisable. You can now fold the lazyIcons to a single FirstIO Icon value, and then use getFirstIO to unwrap it. Due to do notation, m is a Maybe Icon value.

This is the first catamorphism. Granted, the generalisation that fold offers is not really required, since lazyIcons is a list; mconcat would have worked just as well. I did, however, choose to use fold (from Data.Foldable) to emphasise the point. While the fold function itself isn't the catamorphism for lists, we know that it's derived from the list catamorphism.

The final step is to utilise the Maybe catamorphism to reduce the Maybe Icon value to an Icon value. Again, the getIcon function doesn't use the Maybe catamorphism directly, but rather the derived fromMaybe function. The Maybe catamorphism is the maybe function, but you can trivially implement fromMaybe with maybe.

For golfers, it's certainly possible to write this function in a more compact manner. Here's a point-free version:

    getIcon :: User -> IO Icon
    getIcon =
      fmap (fromMaybe defaultIcon) . getFirstIO . fold [getGravatarIO, getIdenticonIO, getDBIconIO]

This alternative version utilises that a -> m is a Monoid instance when m is a Monoid instance. That's the reason that you can fold a list of functions. The more explicit version above doesn't do that, but the behaviour is the same in both cases.

That's all the Haskell code we need to discern the universal abstractions involved in the Chain of Responsibility design pattern. We can now return to the C# code example.

Chains as lists #

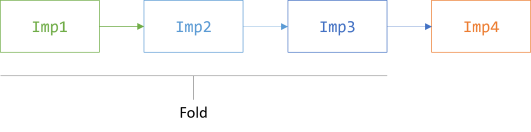

The Chain of Responsibility design pattern is often illustrated like above, in a staircase-like diagram. There's, however, no inherent requirement to do so. You could also flatten the diagram:

This looks a lot like a linked list.

The difference is, however, that the terminator of a linked list is usually empty. Here, however, you have two types of objects. All objects apart from the rightmost object represent a potential. Each object may, or may not, handle the method call and produce an outcome; if an object can't handle the method call, it'll delegate to the next object in the chain.

The rightmost object, however, is different. This object can't delegate any further, but must handle the method call. In the icon reader example, this is the DefaultIconReader class.

Once you start to see most of the list as a list of potential values, you may realise that you'll be able to collapse it into a single potential value. This is possible because a list of values where you pick the first non-empty value forms a monoid. This is sometimes called the First monoid.

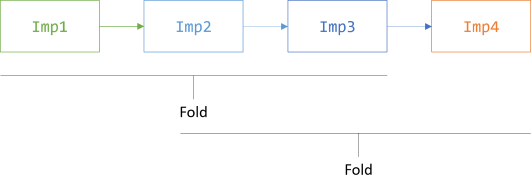

In other words, you can reduce, or fold, all of the list, except the rightmost value, to a single potential value:

When you do that, however, you're left with a single potential value. The result of folding most of the list is that you get the leftmost non-empty value in the list. There's no guarantee, however, that that value is non-empty. If all the values in the list are empty, the result is also empty. This means that you somehow need to combine a potential value with a value that's guaranteed to be present: the terminator.

You can do that with another fold:

This second fold isn't a list fold, but rather a Maybe fold.
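The two folds can be sketched in a few lines of C#. This is only a rough illustration: it assumes that null stands in for an empty value (the article instead introduces a Maybe class for that purpose), it's eager rather than lazy, and the FirstFoldDemo class and its method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FirstFoldDemo
{
    // Binary operation of the First monoid: keep the leftmost
    // non-empty value. Here, null plays the role of 'empty'.
    public static string First(string x, string y)
    {
        return x ?? y;
    }

    // List fold: reduce all potential values to a single potential
    // value, using null (the identity) as the seed.
    public static string FoldFirst(IEnumerable<string> candidates)
    {
        return candidates.Aggregate((string)null, (acc, x) => First(acc, x));
    }

    // Second fold: combine the single potential value with a
    // terminator that's guaranteed to be present.
    public static string Resolve(
        IEnumerable<string> candidates,
        string terminator)
    {
        return FoldFirst(candidates) ?? terminator;
    }
}
```

For the candidates null, "identicon", and "db" with the terminator "default", Resolve returns "identicon". If all candidates are null, it returns "default".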

Maybe #

The First monoid is a monoid over Maybe, so add a Maybe class to the code base. In Haskell, the catamorphism for Maybe is called maybe, but that's not a good method name in object-oriented design. Another option is some variation of fold, but in C#, this functionality tends to be called Aggregate, at least for IEnumerable<T>, so I'll reuse that terminology:

    public TResult Aggregate<TResult>(TResult @default, Func<T, TResult> func)
    {
        if (func == null)
            throw new ArgumentNullException(nameof(func));

        return hasItem ? func(item) : @default;
    }

You can implement another, more list-like Aggregate overload from this one, but for this article, you don't need it.
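Since only the Aggregate method is shown, here's a minimal sketch of a Maybe class that it could belong to. The hasItem and item fields are taken from the method above, and the two constructors match how Maybe objects are created elsewhere in this article. Everything else, including the MaybeDemo class, is an assumption made for illustration.

```csharp
using System;

public sealed class Maybe<T>
{
    private readonly bool hasItem;
    private readonly T item;

    // Creates an empty Maybe.
    public Maybe()
    {
        hasItem = false;
    }

    // Creates a populated Maybe.
    public Maybe(T item)
    {
        if (item == null)
            throw new ArgumentNullException(nameof(item));

        hasItem = true;
        this.item = item;
    }

    // The Maybe catamorphism: returns @default if empty; otherwise
    // the result of calling func with the contained item.
    public TResult Aggregate<TResult>(TResult @default, Func<T, TResult> func)
    {
        if (func == null)
            throw new ArgumentNullException(nameof(func));

        return hasItem ? func(item) : @default;
    }
}

public static class MaybeDemo
{
    // Hypothetical usage example: reduce a Maybe<int> to a string.
    public static string Describe(Maybe<int> m)
    {
        return m.Aggregate("nothing", i => "just " + i);
    }
}
```

Here, Describe(new Maybe<int>(42)) returns "just 42", while Describe(new Maybe<int>()) returns "nothing".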

From TryReadIconId to Maybe #

In the above code examples, DBIconReader depends on IUserRepository, which defines this method:

bool TryReadIconId(int userId, out string iconId);

From Tester-Doer isomorphisms we know, however, that such a design is isomorphic to returning a Maybe value, and since that's more composable, do that:

Maybe<string> ReadIconId(int userId);

This requires you to refactor the DBIconReader implementation of the ReadIcon method:

    public Icon ReadIcon(User user)
    {
        Maybe<string> mid = repository.ReadIconId(user.Id);
        Lazy<Icon> lazyResult = mid.Aggregate(
            @default: new Lazy<Icon>(() => next.ReadIcon(user)),
            func: id => new Lazy<Icon>(() => CreateIcon(id)));
        return lazyResult.Value;
    }

A few things are worth a mention. Notice that the above Aggregate method (the Maybe catamorphism) requires you to supply a @default value (to be used if the Maybe object is empty). In the Chain of Responsibility design pattern, however, the fallback value is produced by calling the next object in the chain. If you do this unconditionally, however, you perform too much work. You're only supposed to call next if the current object can't handle the method call.

The solution is to aggregate the mid object to a Lazy<Icon> and then return its Value. The @default value is now a lazy computation that calls next only if its Value is read. When mid is populated, on the other hand, the lazy computation calls the private CreateIcon method when Value is accessed. The private CreateIcon method contains the same logic as before the refactoring.

This change of DBIconReader isn't strictly necessary in order to change the overall Chain of Responsibility to a pair of catamorphisms, but serves, I think, as a nice introduction to the use of the Maybe catamorphism.
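To convince yourself that the lazy @default really is skipped when the Maybe is populated, you could count how often the fallback runs. The following sketch does that with strings instead of Icon objects. It restates a compact Maybe class so that it compiles on its own; LazyFallbackDemo, Fallback, and FallbackCalls are hypothetical names used only here.

```csharp
using System;

// Compact restatement of Maybe, just enough for this example.
public sealed class Maybe<T>
{
    private readonly bool hasItem;
    private readonly T item;

    public Maybe() { }

    public Maybe(T item)
    {
        hasItem = true;
        this.item = item;
    }

    public TResult Aggregate<TResult>(TResult @default, Func<T, TResult> func)
    {
        return hasItem ? func(item) : @default;
    }
}

public static class LazyFallbackDemo
{
    // Counts how many times the fallback computation actually ran.
    public static int FallbackCalls = 0;

    // Stands in for next.ReadIcon(user).
    private static string Fallback()
    {
        FallbackCalls++;
        return "fallback";
    }

    public static string Resolve(Maybe<string> m)
    {
        // Both branches only describe work to be done. Nothing runs
        // until Value is read on the Lazy object that was selected.
        Lazy<string> lazyResult = m.Aggregate(
            @default: new Lazy<string>(() => Fallback()),
            func: id => new Lazy<string>(() => "icon:" + id));
        return lazyResult.Value;
    }
}
```

Calling Resolve with a populated Maybe leaves FallbackCalls at 0, because the Lazy object passed as @default is created but never forced. Only when the Maybe is empty does the fallback run.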

Optional icon readers #

Previously, the IIconReader interface required each implementation to return an Icon object:

    public interface IIconReader
    {
        Icon ReadIcon(User user);
    }

When you have an object like GravatarReader that may or may not return an Icon, this requirement leads toward the Chain of Responsibility design pattern. You can, however, shift the responsibility of what to do next by changing the interface:

    public interface IIconReader
    {
        Maybe<Icon> ReadIcon(User user);
    }

An implementation like GravatarReader becomes simpler:

    public class GravatarReader : IIconReader
    {
        public Maybe<Icon> ReadIcon(User user)
        {
            if (user.UseGravatar)
                return new Maybe<Icon>(new Icon(new Gravatar(user.Email).Url));

            return new Maybe<Icon>();
        }
    }

No longer do you have to pass in a next dependency. Instead, you just return an empty Maybe<Icon> if you can't handle the method call. The same change applies to the IdenticonReader class.

Since Maybe is a functor, and the DBIconReader already works on a Maybe<string> value, its implementation is greatly simplified:

    public Maybe<Icon> ReadIcon(User user)
    {
        return repository.ReadIconId(user.Id).Select(CreateIcon);
    }

Since ReadIconId returns a Maybe<string>, you can simply use Select to transform the icon ID to an Icon object if the Maybe is populated.
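The Select method itself isn't shown here. One possible way to define it, sketched below, is in terms of the Aggregate method: an empty Maybe stays empty, and a populated one is repopulated with the transformed item. Treat this as an assumption about how it could look, not necessarily how the example code base does it; the compact Maybe class and the MaybeFunctor name are restated or made up for the sake of a self-contained example.

```csharp
using System;

// Compact restatement of Maybe, just enough for this example.
public sealed class Maybe<T>
{
    private readonly bool hasItem;
    private readonly T item;

    public Maybe() { }

    public Maybe(T item)
    {
        hasItem = true;
        this.item = item;
    }

    public TResult Aggregate<TResult>(TResult @default, Func<T, TResult> func)
    {
        return hasItem ? func(item) : @default;
    }
}

public static class MaybeFunctor
{
    // Functor mapping derived from the catamorphism.
    public static Maybe<TResult> Select<T, TResult>(
        this Maybe<T> source,
        Func<T, TResult> selector)
    {
        return source.Aggregate(
            @default: new Maybe<TResult>(),
            func: x => new Maybe<TResult>(selector(x)));
    }
}
```

For example, new Maybe<string>("42").Select(id => "users/" + id + "/icon") produces a populated Maybe<string> containing "users/42/icon", whereas calling Select on new Maybe<string>() produces another empty Maybe.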

Coalescing Composite #

As an intermediate step, you can compose the various readers using a Coalescing Composite:

    public class CompositeIconReader : IIconReader
    {
        private readonly IIconReader[] iconReaders;

        public CompositeIconReader(params IIconReader[] iconReaders)
        {
            this.iconReaders = iconReaders;
        }

        public Maybe<Icon> ReadIcon(User user)
        {
            foreach (var iconReader in iconReaders)
            {
                var mIcon = iconReader.ReadIcon(user);
                if (IsPopulated(mIcon))
                    return mIcon;
            }

            return new Maybe<Icon>();
        }

        private static bool IsPopulated<T>(Maybe<T> m)
        {
            return m.Aggregate(false, _ => true);
        }
    }

I prefer a more explicit design over this one, so this is just an intermediate step. This IIconReader implementation composes an array of other IIconReader objects and queries each in order to return the first populated Maybe value it finds. If it doesn't find any populated value, it returns an empty Maybe object.

You can now compose your IIconReader objects into a Composite:

    IIconReader reader = new CompositeIconReader(
        new GravatarReader(),
        new IdenticonReader(),
        new DBIconReader(repo));

While this gives you a single object on which you can call ReadIcon, the return value of that method is still a Maybe<Icon> object. You still need to reduce the Maybe<Icon> object to an Icon object. You can do this with a Maybe helper method:

    public T GetValueOrDefault(T @default)
    {
        return Aggregate(@default, x => x);
    }

Given a User object named user, you can now use the composition and the GetValueOrDefault method to get an Icon object:

Icon icon = reader.ReadIcon(user).GetValueOrDefault(Icon.Default);

First you use the composed reader to produce a Maybe<Icon> object, and then you use the GetValueOrDefault method to reduce the Maybe<Icon> object to an Icon object.

The latter of these two steps, GetValueOrDefault, is already based on the Maybe catamorphism, but the first step is still too implicit to clearly show the nature of what's actually going on. The next step is to refactor the Coalescing Composite to a list of monoidal values.

First #

While not strictly necessary, you can introduce a First<T> wrapper:

    public sealed class First<T>
    {
        public First(T item)
        {
            if (item == null)
                throw new ArgumentNullException(nameof(item));

            Item = item;
        }

        public T Item { get; }

        public override bool Equals(object obj)
        {
            if (!(obj is First<T> other))
                return false;

            return Equals(Item, other.Item);
        }

        public override int GetHashCode()
        {
            return Item.GetHashCode();
        }
    }

In this particular example, the First<T> class adds no new capabilities, so it's technically redundant. You could add to it methods to combine two First<T> objects into one (since First forms a semigroup), and perhaps a method or two to accumulate multiple values, but in this article, none of those are required.

While the class as shown above doesn't add any behaviour, I like that it signals intent, so I'll use it in that role.
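If you did want First<T> to carry its semigroup operation, a sketch could look like the following. The method name Combine is hypothetical; it isn't part of the class shown above, and the Equals and GetHashCode overrides are left out for brevity.

```csharp
using System;

public sealed class First<T>
{
    public First(T item)
    {
        if (item == null)
            throw new ArgumentNullException(nameof(item));

        Item = item;
    }

    public T Item { get; }

    // Hypothetical semigroup operation: always keep the leftmost value.
    // It's associative, because any grouping of a, b, and c returns a.
    public First<T> Combine(First<T> other)
    {
        if (other == null)
            throw new ArgumentNullException(nameof(other));

        return this;
    }
}
```

Since the operation simply ignores its argument, there's little to gain from adding it here, which is why the article gets by without it.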

Lazy I/O in C# #

Like in the above Haskell code, you'll need to be able to combine two First<T> objects in a lazy fashion, in such a way that if the first object is populated, the I/O associated with producing the second value never happens. In Haskell I addressed that concern with a newtype that, among other abstractions, is a monoid. You can do the same in C# with an extension method:

    public static Lazy<Maybe<First<T>>> FindFirst<T>(
        this Lazy<Maybe<First<T>>> m,
        Lazy<Maybe<First<T>>> other)
    {
        if (m.Value.IsPopulated())
            return m;

        return other;
    }

    private static bool IsPopulated<T>(this Maybe<T> m)
    {
        return m.Aggregate(false, _ => true);
    }

The FindFirst method returns the first (leftmost) non-empty object of two options. It's a lazy version of the First monoid, and that's still a monoid. It's truly lazy because it never accesses the Value property on other. While it has to force evaluation of the first lazy computation, m, it doesn't have to evaluate other. Thus, whenever m is populated, other can remain non-evaluated.

Since monoids accumulate, you can also write an extension method to implement that functionality:

    public static Lazy<Maybe<First<T>>> FindFirst<T>(
        this IEnumerable<Lazy<Maybe<First<T>>>> source)
    {
        var identity = new Lazy<Maybe<First<T>>>(() => new Maybe<First<T>>());
        return source.Aggregate(identity, (acc, x) => acc.FindFirst(x));
    }

This overload just uses the earlier FindFirst extension method to fold an arbitrary number of lazy First<T> objects into one. Notice that Aggregate is the C# name for the list catamorphism.
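The following sketch is one way to convince yourself that the fold stays lazy. It repeats the two FindFirst overloads, but uses pared-down stand-ins for Maybe<T> and First<T>. Those stand-ins, as well as the LazyFirst and LazyFirstDemo class names, are assumptions made to keep the example self-contained. The demo records which candidates actually get evaluated.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Minimal stand-ins (assumptions) for the article's Maybe<T> and First<T>.
public sealed class Maybe<T>
{
    private readonly bool hasItem;
    private readonly T item;

    public Maybe() { }

    public Maybe(T item) { this.item = item; hasItem = true; }

    public TResult Aggregate<TResult>(TResult @default, Func<T, TResult> func)
    {
        return hasItem ? func(item) : @default;
    }
}

public sealed class First<T>
{
    public First(T item) { Item = item; }

    public T Item { get; }
}

public static class LazyFirst
{
    // Binary operation: forces m, but never touches other.Value.
    public static Lazy<Maybe<First<T>>> FindFirst<T>(
        this Lazy<Maybe<First<T>>> m,
        Lazy<Maybe<First<T>>> other)
    {
        if (m.Value.IsPopulated())
            return m;

        return other;
    }

    // Fold: starts from the empty identity and combines left to right.
    public static Lazy<Maybe<First<T>>> FindFirst<T>(
        this IEnumerable<Lazy<Maybe<First<T>>>> source)
    {
        var identity = new Lazy<Maybe<First<T>>>(() => new Maybe<First<T>>());
        return source.Aggregate(identity, (acc, x) => acc.FindFirst(x));
    }

    private static bool IsPopulated<T>(this Maybe<T> m)
    {
        return m.Aggregate(false, _ => true);
    }
}

public static class LazyFirstDemo
{
    // Returns the winning value and the names of the candidates
    // whose lazy computations were actually forced.
    public static (string Winner, List<string> Evaluated) Run()
    {
        var evaluated = new List<string>();

        Lazy<Maybe<First<string>>> Candidate(string name, bool populated) =>
            new Lazy<Maybe<First<string>>>(() =>
            {
                evaluated.Add(name);
                return populated
                    ? new Maybe<First<string>>(new First<string>(name))
                    : new Maybe<First<string>>();
            });

        var candidates = new[]
        {
            Candidate("a", false),
            Candidate("b", true),
            Candidate("c", true)
        };

        var winner = candidates.FindFirst().Value.Aggregate("none", f => f.Item);
        return (winner, evaluated);
    }
}
```

In this sketch, the fold has to force the empty candidate a and the populated candidate b, but once b has been found, candidate c is passed over without its computation ever running.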

You can now compose the desired functionality using the basic building blocks of monoids, functors, and catamorphisms.

Composition from universal abstractions #

The goal is still a function that takes a User object as input and produces an Icon object as output. While you could compose that functionality directly in-line where you need it, I think it may be helpful to package the composition in a Facade object.

    public class IconReaderFacade
    {
        private readonly IReadOnlyCollection<IIconReader> readers;

        public IconReaderFacade(IUserRepository repository)
        {
            readers = new IIconReader[]
                {
                    new GravatarReader(),
                    new IdenticonReader(),
                    new DBIconReader(repository)
                };
        }

        public Icon ReadIcon(User user)
        {
            IEnumerable<Lazy<Maybe<First<Icon>>>> lazyIcons = readers
                .Select(r =>
                    new Lazy<Maybe<First<Icon>>>(() =>
                        r.ReadIcon(user).Select(i => new First<Icon>(i))));
            Lazy<Maybe<First<Icon>>> m = lazyIcons.FindFirst();
            return m.Value.Aggregate(Icon.Default, fi => fi.Item);
        }
    }

When you initialise an IconReaderFacade object, it creates an array of the desired readers. Whenever ReadIcon is invoked, it first transforms all those readers to a sequence of potential icons. All the values in the sequence are lazily evaluated, so in this step, nothing actually happens, even though it looks as though all readers' ReadIcon method gets called. The Select method is a structure-preserving map, so all readers are still potential producers of Icon objects.

You now have an IEnumerable<Lazy<Maybe<First<Icon>>>>, which must be a good candidate for the prize for the most nested generic .NET type of 2019. It fits, though, the input type for the above FindFirst overload, so you can call that. The result is a single potential value m. That's the list catamorphism applied.

Finally, you force evaluation of the lazy computation and apply the Maybe catamorphism (Aggregate). The @default value is Icon.Default, which gets returned if m turns out to be empty. When m is populated, you pull the Item out of the First object. In either case, you now have an Icon object to return.

This composition has exactly the same behaviour as the initial Chain of Responsibility implementation, but is now composed from universal abstractions.

Summary #

The Chain of Responsibility design pattern describes a flexible way to implement conditional logic. Instead of relying on keywords like if or switch, you can compose the conditional logic from polymorphic objects. This gives you several advantages. One is that you get better separation of concerns, which will tend to make it easier to refactor the code. Another is that it's possible to change the behaviour at run time, by moving the objects around.

You can achieve a similar design, with equivalent advantages, by composing polymorphically similar functions in a list, mapping the functions to a list of potential values, and then using the list catamorphism to reduce many potential values to one. Finally, you apply the Maybe catamorphism to produce a value, even if the potential value is empty.

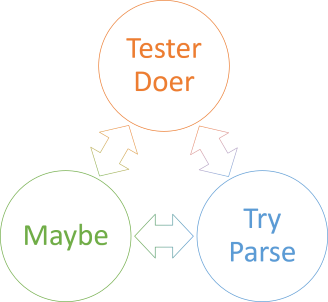

Tester-Doer isomorphisms

The Tester-Doer pattern is equivalent to the Try-Parse idiom; both are equivalent to Maybe.

This article is part of a series of articles about software design isomorphisms. An isomorphism is when a bi-directional lossless translation exists between two representations. Such translations exist between the Tester-Doer pattern and the Try-Parse idiom. Both can also be translated into operations that return Maybe.

Given an implementation that uses one of those three idioms or abstractions, you can translate your design into one of the other options. This doesn't imply that each is of equal value. When it comes to composability, Maybe is superior to the two other alternatives, and Tester-Doer isn't thread-safe.

Tester-Doer #

The first time I explicitly encountered the Tester-Doer pattern was in the Framework Design Guidelines, which is from where I've taken the name. The pattern is, however, older. The idea that you can query an object about whether a given operation would be possible, and then you only perform it if the answer is affirmative, is almost a leitmotif in Object-Oriented Software Construction. Bertrand Meyer often uses linked lists and stacks as examples, but I'll instead use the example that Krzysztof Cwalina and Brad Abrams use:

    ICollection<int> numbers = // ...
    if (!numbers.IsReadOnly)
        numbers.Add(1);

The idea with the Tester-Doer pattern is that you test whether an intended operation is legal, and only perform it if the answer is affirmative. In the example, you only add to the numbers collection if IsReadOnly is false. Here, IsReadOnly is the Tester, and Add is the Doer.

As Jeffrey Richter points out in the book, this is a dangerous pattern:

"The potential problem occurs when you have multiple threads accessing the object at the same time. For example, one thread could execute the test method, which reports that all is OK, and before the doer method executes, another thread could change the object, causing the doer to fail."

In other words, the pattern isn't thread-safe. While multi-threaded programming was always supported in .NET, this was less of a concern when the guidelines were first published (2006) than it is today. The guidelines were in internal use at Microsoft years before they were published, and there weren't many multi-core processors in use back then.

Another problem with the Tester-Doer pattern is with discoverability. If you're looking for a way to add an element to a collection, you'd usually consider your search over once you find the Add method. Even if you wonder "Is this operation safe? Can I always add an element to a collection?", you might consider looking for a CanAdd method, but not an IsReadOnly property. Most people don't even ask the question in the first place, though.

From Tester-Doer to Try-Parse #

You could refactor such a Tester-Doer API to a single method, which is both thread-safe and discoverable. One option is a variation of the Try-Parse idiom (discussed in detail below). Using it could look like this:

    ICollection<int> numbers = // ...
    bool wasAdded = numbers.TryAdd(1);

In this special case, you may not need the wasAdded variable, because the original Add operation never returned a value. If, on the other hand, you do care whether or not the element was added to the collection, you'd have to figure out what to do in each of the two cases, where the return value is true and false, respectively.
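ICollection<T> has no TryAdd method, so to try out the above interaction you'd have to add one yourself. Here's a minimal sketch of such an extension method. The CollectionEnvy class name is hypothetical, and the implementation assumes that a read-only collection throws NotSupportedException from Add, as the read-only wrappers in the base class library do.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper class; not part of the base class library.
public static class CollectionEnvy
{
    // Attempts the operation directly instead of testing IsReadOnly first,
    // so there's no gap between a test and the subsequent Add call.
    public static bool TryAdd<T>(this ICollection<T> source, T item)
    {
        if (source == null)
            throw new ArgumentNullException(nameof(source));

        try
        {
            source.Add(item);
            return true;
        }
        catch (NotSupportedException)
        {
            // Assumption: read-only collections signal failure this way.
            return false;
        }
    }
}
```

This only closes the gap between the test and the operation. Whether concurrent calls to Add are safe still depends on the underlying collection, so a genuinely thread-safe TryAdd would probably have to be implemented by the collection itself.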

Compared to the more idiomatic example of the Try-Parse idiom below, you may have noticed that the TryAdd method shown here takes no out parameter. This is because the original Add method returns void; there's nothing to return. From unit isomorphisms, however, we know that unit is isomorphic to void, so we could, more explicitly, have defined a TryAdd method with this signature:

public bool TryAdd(T item, out Unit unit)

There's no point in doing this, however, apart from demonstrating that the isomorphism holds.

From Tester-Doer to Maybe #

You can also refactor the add-to-collection example to return a Maybe value, although in this degenerate case, it makes little sense. If you automate the refactoring process, you'd arrive at an API like this:

public Maybe<Unit> TryAdd(T item)

Using it would look like this:

    ICollection<int> numbers = // ...
    Maybe<Unit> m = numbers.TryAdd(1);

The contract is consistent with what Maybe implies: You'd get an empty Maybe<Unit> object if the add operation 'failed', and a populated Maybe<Unit> object if the add operation succeeded. Even in the populated case, though, the value contained in the Maybe object would be unit, which carries no further information than its existence.

To be clear, this isn't close to a proper functional design because all the interesting action happens as a side effect. Does the design have to be functional? No, and it clearly isn't in this case, but Maybe is a concept that originated in functional programming, so you could be misled to believe that I'm trying to pass this particular design off as functional. It's not.

A functional version of this API could look like this:

public Maybe<ICollection<T>> TryAdd(T item)

An implementation wouldn't mutate the object itself, but rather return a new collection with the added item, in case that was possible. This is, however, always possible, because you can always concatenate item to the front of the collection. In other words, this particular line of inquiry is increasingly veering into the territory of the absurd. This isn't, however, a counter-example of my proposition that the isomorphism exists; it's just a result of the initial example being degenerate.

Try-Parse #

Another idiom described in the Framework Design Guidelines is the Try-Parse idiom. This seems to be a coding idiom more specific to the .NET framework, which is the reason I call it an idiom instead of a pattern. (Perhaps it is, after all, a pattern... I'm sure many of my readers are better informed about how problems like these are solved in other languages, and can enlighten me.)

A better name might be Try-Do, since the idiom doesn't have to be constrained to parsing. The example that Cwalina and Abrams supply, however, relates to parsing a string into a DateTime value. Such an API is already available in the base class library. Using it looks like this:

bool couldParse = DateTime.TryParse(candidate, out DateTime dateTime);

Since DateTime is a value type, the out parameter will never be null, even if parsing fails. You can, however, examine the return value couldParse to determine whether the candidate could be parsed.

In the running commentary in the book, Jeffrey Richter likes this much better:

"I like this guideline a lot. It solves the race-condition problem and the performance problem."

I agree that it's better than Tester-Doer, but that doesn't mean that you can't refactor such a design to that pattern.

From Try-Parse to Tester-Doer #

While I see no compelling reason to design parsing attempts with the Tester-Doer pattern, it's possible. You could create an API that enables interaction like this:

    DateTime dateTime = default(DateTime);
    bool canParse = DateTimeEnvy.CanParse(candidate);
    if (canParse)
        dateTime = DateTime.Parse(candidate);

You'd need to add a new CanParse method with this signature:

public static bool CanParse(string candidate)

In this particular example, you don't have to add a Parse method, because it already exists in the base class library, but in other examples, you'd have to add such a method as well.

This example doesn't suffer from issues with thread safety, since strings are immutable, but in general, that problem is always a concern with the Tester-Doer anti-pattern. Discoverability still suffers in this example.
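As a sketch of the translation, one way to implement CanParse is to delegate to the existing TryParse method and throw away the parsed value. This is an assumption about how DateTimeEnvy could be written, not necessarily how you'd want to do it in production code, since a caller that subsequently calls Parse ends up parsing the string twice.

```csharp
using System;

public static class DateTimeEnvy
{
    // The Tester: reports whether DateTime.Parse would succeed for the
    // candidate, using the current culture just like DateTime.TryParse.
    public static bool CanParse(string candidate)
    {
        return DateTime.TryParse(candidate, out _);
    }
}
```

That one idiom can be implemented this directly in terms of the other is an indication that the translation loses no information.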

From Try-Parse to Maybe #

While the Try-Parse idiom is thread-safe, it isn't composable. Every time you run into an API modelled over this template, you have to stop what you're doing and check the return value. Did the operation succeed? What should the code do if it didn't?

Maybe, on the other hand, is composable, so is a much better way to model problems such as parsing. Typically, methods or functions that return Maybe values are still prefixed with Try, but there's no longer any out parameter. A Maybe-based TryParse function could look like this:

public static Maybe<DateTime> TryParse(string candidate)

You could use it like this:

Maybe<DateTime> m = DateTimeEnvy.TryParse(candidate);

If the candidate was successfully parsed, you get a populated Maybe<DateTime>; if the string was invalid, you get an empty Maybe<DateTime>.

A Maybe object composes much better with other computations. Contrary to the Try-Parse idiom, you don't have to stop and examine a Boolean return value. You don't even have to deal with empty cases at the point where you parse. Instead, you can defer the decision about what to do in case of failure until a later time, where it may be more obvious what to do in that case.
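Here's a sketch of what that deferral could look like. The Maybe<T> class is a pared-down stand-in with only Select and Aggregate, the TryParse implementation wraps the built-in DateTime.TryParse, and ParsingDemo is a hypothetical class; all three are assumptions made to keep the example self-contained.

```csharp
using System;

// Minimal stand-in (assumption) for the article's Maybe<T>.
public sealed class Maybe<T>
{
    private readonly bool hasItem;
    private readonly T item;

    public Maybe() { }

    public Maybe(T item) { this.item = item; hasItem = true; }

    // Functor map: transforms the item if there is one.
    public Maybe<TResult> Select<TResult>(Func<T, TResult> selector)
    {
        return hasItem
            ? new Maybe<TResult>(selector(item))
            : new Maybe<TResult>();
    }

    // Catamorphism: the only place where the empty case is handled.
    public TResult Aggregate<TResult>(TResult @default, Func<T, TResult> func)
    {
        return hasItem ? func(item) : @default;
    }
}

public static class DateTimeEnvy
{
    public static Maybe<DateTime> TryParse(string candidate)
    {
        if (DateTime.TryParse(candidate, out var dateTime))
            return new Maybe<DateTime>(dateTime);

        return new Maybe<DateTime>();
    }
}

public static class ParsingDemo
{
    public static string Describe(string candidate)
    {
        return DateTimeEnvy.TryParse(candidate)
            .Select(d => d.Year)              // no check for failure here
            .Select(y => "Year " + y)         // nor here
            .Aggregate("Not a date", s => s); // the decision is made last
    }
}
```

Notice that Describe contains no branching on success or failure. The empty case is only addressed in the final call to Aggregate.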

Maybe #