ploeh blog danish software design

Functional architecture is Ports and Adapters

Functional architecture tends to fall into a pit of success that looks a lot like Ports and Adapters.

In object-oriented architecture, we often struggle towards the ideal of the Ports and Adapters architecture, although we often call it something else: layered architecture, onion architecture, hexagonal architecture, and so on. The goal is to decouple the business logic from technical implementation details, so that we can vary each independently.

This creates value because it enables us to manoeuvre nimbly, responding to changes in business or technology.

Ports and Adapters #

The idea behind the Ports and Adapters architecture is that ports make up the boundaries of an application. A port is something that interacts with the outside world: user interfaces, message queues, databases, files, command-line prompts, etcetera. While the ports constitute the interface to the rest of the world, adapters translate between the ports and the application model.

The word adapter is aptly chosen, because the role of the Adapter design pattern is exactly to translate between two different interfaces.

You ought to arrive at some sort of variation of Ports and Adapters if you apply Dependency Injection, as I've previously attempted to explain.

The problem with this architecture, however, is that it seems to take a lot of explaining:

- My book about Dependency Injection is 500 pages long.

- Robert C. Martin's book about the SOLID principles, package and component design, and so on is 700 pages long.

- Domain-Driven Design is 500 pages long.

- and so on...

It's possible to implement a Ports and Adapters architecture with object-oriented programming, but it takes so much effort. Does it have to be that difficult?

Haskell as a learning aid #

Someone recently asked me: how do I know I'm being sufficiently Functional?

I was wondering that myself, so I decided to learn Haskell. Not that Haskell is the only Functional language out there, but it enforces purity in a way that neither F#, Clojure, nor Scala does. In Haskell, a function must be pure, unless its type indicates otherwise. This forces you to be deliberate in your design, and to separate pure functions from functions with (side) effects.

If you don't know Haskell, the short version is that code with side effects can only happen inside of a particular 'context' called IO. It's a monadic type, but that's not the most important point. The point is that you can tell by a function's type whether or not it's pure. A function with the type ReservationRendition -> Either Error Reservation is pure, because IO appears nowhere in the type. On the other hand, a function with the type ConnectionString -> ZonedTime -> IO Int is impure because its return type is IO Int. This means that the return value is an integer, but that this integer originates from a context where it could change between function calls.

There's a fundamental distinction between a function that returns Int, and one that returns IO Int. Any function that returns Int is, in Haskell, referentially transparent. This means that you're guaranteed that the function will always return the same value given the same input. On the other hand, a function returning IO Int doesn't provide such a guarantee.

In Haskell programming, you should strive towards maximising the number of pure functions you write, pushing the impure code to the edges of the system. A good Haskell program has a big core of pure functions, and a shell of IO code. Does that sound familiar?

It basically means that Haskell's type system enforces the Ports and Adapters architecture. The ports are all your IO code. The application's core is all your pure functions. The type system automatically creates a pit of success.

Haskell is a great learning aid, because it forces you to explicitly make the distinction between pure and impure functions. You can even use it as a verification step to figure out whether your F# code is 'sufficiently Functional'. F# is a Functional-first language, but it also allows you to write object-oriented or imperative code. If you write your F# code in a Functional manner, though, it's easy to translate to Haskell. If your F# code is difficult to translate to Haskell, it's probably because it isn't Functional.

Here's an example.

Accepting reservations in F#, first attempt #

In my Test-Driven Development with F# Pluralsight course (a free, condensed version is also available), I demonstrate how to implement an HTTP API that accepts reservation requests for an on-line restaurant booking system. One of the steps when handling the reservation request is to check whether the restaurant has enough remaining capacity to accept the reservation. The function looks like this:

    // int
    // -> (DateTimeOffset -> int)
    // -> Reservation
    // -> Result<Reservation,Error>
    let check capacity getReservedSeats reservation =
        let reservedSeats = getReservedSeats reservation.Date
        if capacity < reservation.Quantity + reservedSeats
        then Failure CapacityExceeded
        else Success reservation

As the comment suggests, the second argument, getReservedSeats, is a function of the type DateTimeOffset -> int. The check function calls this function to retrieve the number of already reserved seats on the requested date.

When unit testing, you can supply a pure function as a Stub; for example:

    let getReservedSeats _ = 0
    let actual = Capacity.check capacity getReservedSeats reservation

When finally composing the application, instead of using a pure function with a hard-coded return value, you can compose with an impure function that queries a database for the desired information:

    let imp =
        Validate.reservation
        >> bind (Capacity.check 10 (SqlGateway.getReservedSeats connectionString))
        >> map (SqlGateway.saveReservation connectionString)

Here, SqlGateway.getReservedSeats connectionString is a partially applied function, the type of which is DateTimeOffset -> int. In F#, you can't tell by its type that it's impure, but I know that this is the case because I wrote it. It queries a database, so isn't referentially transparent.

This works well in F#, where it's up to you whether a particular function is pure or impure. Since that imp function is composed in the application's Composition Root, the impure functions SqlGateway.getReservedSeats and SqlGateway.saveReservation are only pulled in at the edge of the system. The rest of the system is nicely protected against side-effects.
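To make the point about types concrete, here's a minimal sketch that uses only the F# core library. The names getReservedSeatsStub, fakeDatabase, and getReservedSeatsFromFake are hypothetical, and the mutable cell is only a stand-in for a real database. Both functions have the type DateTimeOffset -> int, yet only one of them is referentially transparent:

```fsharp
open System

// Pure: the result never depends on anything but the (ignored) argument.
let getReservedSeatsStub (_ : DateTimeOffset) : int = 0

// Hypothetical stand-in for a database: a mutable cell that can change
// between two calls.
let fakeDatabase = ref 0

// Impure: reads mutable state, but has exactly the same type as the stub.
let getReservedSeatsFromFake (_ : DateTimeOffset) : int = fakeDatabase.Value

// Both functions fit the same slot; the type can't tell them apart.
let candidates : (DateTimeOffset -> int) list =
    [ getReservedSeatsStub; getReservedSeatsFromFake ]

let date = DateTimeOffset (2016, 3, 18, 0, 0, 0, TimeSpan.Zero)
let before = getReservedSeatsFromFake date
fakeDatabase.Value <- 7
let after = getReservedSeatsFromFake date
```

Calling getReservedSeatsFromFake twice with the same date gives two different answers once the cell has changed, and nothing in the type warns you about it.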

It feels Functional, but is it?

Feedback from Haskell #

In order to answer that question, I decided to re-implement the central parts of this application in Haskell. My first attempt to check the capacity was this direct translation:

    checkCapacity :: Int
                  -> (ZonedTime -> Int)
                  -> Reservation
                  -> Either Error Reservation
    checkCapacity capacity getReservedSeats reservation =
      let reservedSeats = getReservedSeats $ date reservation
      in if capacity < quantity reservation + reservedSeats
          then Left CapacityExceeded
          else Right reservation

This compiles, and at first glance seems promising. The type of the getReservedSeats function is ZonedTime -> Int. Since IO appears nowhere in this type, Haskell guarantees that it's pure.

On the other hand, when you need to implement the function to retrieve the number of reserved seats from a database, this function must, by its very nature, be impure, because the return value could change between two function calls. In order to enable that in Haskell, the function must have this type:

getReservedSeatsFromDB :: ConnectionString -> ZonedTime -> IO Int

While you can partially apply the first ConnectionString argument, the return value is IO Int, not Int.

A function with the type ZonedTime -> IO Int isn't the same as ZonedTime -> Int. Even when executing inside of an IO context, you can't convert ZonedTime -> IO Int to ZonedTime -> Int.

You can, on the other hand, call the impure function inside of an IO context, and extract the Int from the IO Int. That doesn't quite fit with the above checkCapacity function, so you'll need to reconsider the design. While it was 'Functional enough' for F#, it turns out that this design isn't really Functional.

If you consider the above checkCapacity function, though, you may wonder why it's necessary to pass in a function in order to determine the number of reserved seats. Why not simply pass in this number instead?

    checkCapacity :: Int -> Int -> Reservation -> Either Error Reservation
    checkCapacity capacity reservedSeats reservation =
      if capacity < quantity reservation + reservedSeats
      then Left CapacityExceeded
      else Right reservation

That's much simpler. At the edge of the system, the application executes in an IO context, and that enables you to compose the pure and impure functions:

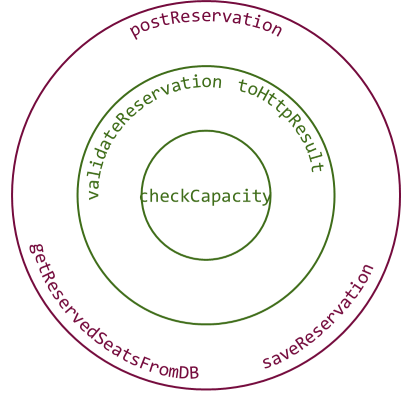

    import Control.Monad.Trans (liftIO)
    import Control.Monad.Trans.Either (EitherT(..), hoistEither)

    postReservation :: ReservationRendition -> IO (HttpResult ())
    postReservation candidate = fmap toHttpResult $ runEitherT $ do
      r <- hoistEither $ validateReservation candidate
      i <- liftIO $ getReservedSeatsFromDB connStr $ date r
      hoistEither $ checkCapacity 10 i r
      >>= liftIO . saveReservation connStr

(Complete source code is available here.)

Don't worry if you don't understand all the details of this composition. The highlights are these:

The postReservation function takes a ReservationRendition (think of it as a JSON document) as input, and returns an IO (HttpResult ()) as output. The use of IO informs you that this entire function is executing within the IO monad. In other words: it's impure. This shouldn't be surprising, since this is the edge of the system.

Furthermore, notice that the function liftIO is called twice. You don't have to understand exactly what it does, but it's necessary to use in order to 'pull out' a value from an IO type; for example pulling out the Int from an IO Int. This makes it clear where the pure code is, and where the impure code is: the liftIO function is applied to the functions getReservedSeatsFromDB and saveReservation. This tells you that these two functions are impure. By exclusion, the rest of the functions (validateReservation, checkCapacity, and toHttpResult) are pure.

It's interesting to observe how you can interleave pure and impure functions. If you squint, you can almost see how the data flows from the pure validateReservation function, to the impure getReservedSeatsFromDB function, and then both output values (r and i) are passed to the pure checkCapacity function, and finally to the impure saveReservation function. All of this happens within an (EitherT Error IO) () do block, so if any of these functions returns Left, the function short-circuits right there and returns the resulting error. See e.g. Scott Wlaschin's excellent article on railway-oriented programming for a lucid, visual introduction to the Either monad.

The value from this expression is composed with the built-in runEitherT function, and again with this pure function:

    toHttpResult :: Either Error () -> HttpResult ()
    toHttpResult (Left (ValidationError msg)) = BadRequest msg
    toHttpResult (Left CapacityExceeded) = StatusCode Forbidden
    toHttpResult (Right ()) = OK ()

The entire postReservation function is impure, and sits at the edge of the system, since it handles IO. The same is the case of the getReservedSeatsFromDB and saveReservation functions. I deliberately put the two database functions at the bottom of the diagram, in order to make it look more familiar to readers used to looking at layered architecture diagrams. You can imagine that there's a cylinder-shaped figure below the circles, representing a database.

You can think of the validateReservation and toHttpResult functions as belonging to the application model. While pure functions, they translate between the external and internal representation of data. Finally, the checkCapacity function is part of the application's Domain Model, if you will.

Most of the design from my first F# attempt survived, apart from the Capacity.check function. Re-implementing the design in Haskell has taught me an important lesson that I can now go back and apply to my F# code.

Accepting reservations in F#, even more Functionally #

Since the required change is so little, it's easy to apply the lesson learned from Haskell to the F# code base. The culprit was the Capacity.check function, which ought to instead be implemented like this:

    let check capacity reservedSeats reservation =
        if capacity < reservation.Quantity + reservedSeats
        then Failure CapacityExceeded
        else Success reservation

This simplifies the implementation, but makes the composition slightly more involved:

    let imp =
        Validate.reservation
        >> map (fun r ->
            SqlGateway.getReservedSeats connectionString r.Date, r)
        >> bind (fun (i, r) -> Capacity.check 10 i r)
        >> map (SqlGateway.saveReservation connectionString)

This almost looks more complicated than the Haskell function. Haskell has the advantage that you can automatically use any type that implements the Monad typeclass inside of a do block, and since (EitherT Error IO) () is a Monad instance, the do syntax is available for free.

You could do something similar in F#, but then you'd have to implement a custom computation expression builder for the Result type. Perhaps I'll do this in a later blog post...
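As a rough idea of what that could look like, here's a minimal sketch of such a builder. Everything in it is an assumption made for illustration: the shape of the Result, Error, and Reservation types, the bind and map definitions, and the stand-in functions validate, getReservedSeats, and saveReservation, which replace Validate.reservation and the SqlGateway functions with in-memory fakes. It isn't the code from the actual code base.

```fsharp
// Assumed shape of the two-case Result type used in this article.
type Result<'a, 'e> =
    | Success of 'a
    | Failure of 'e

type Error = ValidationError of string | CapacityExceeded
type Reservation = { Quantity : int }

// Assumed definitions of the bind and map functions used by imp.
let bind f = function
    | Success x -> f x
    | Failure e -> Failure e

let map f = function
    | Success x -> Success (f x)
    | Failure e -> Failure e

// Minimal builder: let! unwraps a Success, or short-circuits on Failure.
type ResultBuilder () =
    member this.Bind (x, f) = bind f x
    member this.Return x = Success x

let result = ResultBuilder ()

let check capacity reservedSeats reservation =
    if capacity < reservation.Quantity + reservedSeats
    then Failure CapacityExceeded
    else Success reservation

// Hypothetical in-memory stand-ins for validation and the database.
let validate candidate =
    if candidate.Quantity > 0
    then Success candidate
    else Failure (ValidationError "Quantity must be positive")
let getReservedSeats () = 4
let saveReservation (_ : Reservation) = ()

// The same flow as imp, written with the builder instead of >>.
let imp candidate =
    result {
        let! r = validate candidate
        let i = getReservedSeats ()
        let! accepted = check 10 i r
        return saveReservation accepted
    }
```

With these stand-ins, a reservation for three seats is accepted, one for seven seats fails with CapacityExceeded, and one for zero seats fails validation before the fake database is ever consulted.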

Summary #

Good Functional design is equivalent to the Ports and Adapters architecture. If you use Haskell as a yardstick for 'ideal' Functional architecture, you'll see how its explicit distinction between pure and impure functions creates a pit of success. Unless you write your entire application to execute within the IO monad, Haskell will automatically enforce the distinction, and push all communication with the external world to the edges of the system.

Some Functional languages, like F#, don't explicitly enforce this distinction. Still, in F#, it's easy to informally make the distinction and compose applications with impure functions pushed to the edges of the system. While this isn't enforced by the type system, it still feels natural.

Ad-hoc Arbitraries - now with pipes

Slightly improved syntax for defining ad-hoc, in-line Arbitraries for FsCheck.Xunit.

Last year, I described how to define and use ad-hoc, in-line Arbitraries with FsCheck.Xunit. The final example code looked like this:

    [<Property(QuietOnSuccess = true)>]
    let ``Any live cell with > 3 live neighbors dies`` (cell : int * int) =
        let nc = Gen.elements [4..8] |> Arb.fromGen
        Prop.forAll nc (fun neighborCount ->
            let liveNeighbors =
                cell
                |> findNeighbors
                |> shuffle
                |> Seq.take neighborCount
                |> Seq.toList

            let actual : State =
                calculateNextState (cell :: liveNeighbors |> shuffle |> set) cell

            Dead =! actual)

There's one tiny problem with this way of expressing properties using Prop.forAll: the use of brackets to demarcate the anonymous multi-line function. Notice how the opening bracket appears to the left of the fun keyword, while the closing bracket appears eleven lines down, after the end of the expression Dead =! actual.

While it isn't pretty, I haven't found it that bad for readability, either. When you're writing the property, however, this syntax is cumbersome.

Issues writing the code #

After having written Prop.forAll nc, you start writing (fun neighborCount -> ). After you've typed the closing bracket, you have to move your cursor one place to the left. When entering new lines, you have to stay diligent, making sure that the closing bracket is always to the right of your cursor.

If you ever need to use a nested level of brackets within that anonymous function, your vigilance will be tested to its utmost. When I wrote the above property, for example, when I reached the point where I wanted to call the calculateNextState function, I wrote:

    let actual : State =
        calculateNextState (

The editor detects that I'm writing an opening bracket, but since I have the closing bracket to the immediate right of the cursor, this is what happens:

    let actual : State =
        calculateNextState ()

What normally happens when you type an opening bracket is that the editor automatically inserts a closing bracket to the right of your cursor, but in this case, it doesn't do that. There's already a closing bracket immediately to the right of the cursor, and the editor assumes that this bracket belongs to the opening bracket I just typed. What it really should have done was this:

    let actual : State =
        calculateNextState ())

The editor doesn't have a chance, though, because at this point, I'm still typing, and the code doesn't compile. None of the alternatives compile at this point. You can't really blame the editor.

To make a long story short, enclosing a multi-line anonymous function in brackets is a source of errors. If only there was a better alternative.

Backward pipe #

The solution started to dawn on me because I've been learning Haskell for the last half year, and in Haskell, you often use the $ operator to get rid of unwanted brackets. F# has an equivalent operator: <|, the backward pipe operator.

I rarely use the <| operator in F#, but in this case, it works really well:

    [<Property(QuietOnSuccess = true)>]
    let ``Any live cell with > 3 live neighbors dies`` (cell : int * int) =
        let nc = Gen.elements [4..8] |> Arb.fromGen
        Prop.forAll nc <| fun neighborCount ->
            let liveNeighbors =
                cell
                |> findNeighbors
                |> shuffle
                |> Seq.take neighborCount
                |> Seq.toList

            let actual : State =
                calculateNextState (cell :: liveNeighbors |> shuffle |> set) cell

            Dead =! actual

Notice that there's no longer any need for a closing bracket after Dead =! actual.

Summary #

When the last argument passed to a function is another function, you can replace the brackets with a single application of the <| operator. I only use this operator sparingly, but in the case of in-line ad-hoc FsCheck Arbitraries, I find it useful.
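The same trick works outside of FsCheck. Here's a small sketch with a hypothetical helper, forAllIn, which (like Prop.forAll) takes a function as its last argument. It only uses the F# core library, so you can paste it into F# Interactive:

```fsharp
// Hypothetical helper whose last argument is a function, like Prop.forAll.
let forAllIn (values : int list) (predicate : int -> bool) =
    List.forall predicate values

// With brackets: the closing bracket trails the last line of the function.
let withBrackets =
    forAllIn [4..8] (fun n ->
        let doubled = n * 2
        doubled > n)

// With the backward pipe: no closing bracket to keep track of.
let withBackwardPipe =
    forAllIn [4..8] <| fun n ->
        let doubled = n * 2
        doubled > n
```

Both values are computed by the same anonymous function; only the way the function is passed differs.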

Addendum 2016-05-17: You can get rid of the nc value with TIE fighter infix notation.

Types + Properties = Software: other properties

Even simple functions may have properties that can be expressed independently of the implementation.

This article is the eighth in a series of articles that demonstrate how to develop software using types and properties. In previous articles, you've seen an extensive example of how to solve the Tennis Kata with type design and Property-Based Testing. Specifically, in the third article, you saw the introduction of a function called other. In that article we didn't cover that function with automatic tests, but this article rectifies that omission.

The source code for this article series is available on GitHub.

Implementation duplication #

The other function is used to find the opponent of a player. The implementation is trivial:

let other = function PlayerOne -> PlayerTwo | PlayerTwo -> PlayerOne

Unless you're developing software where lives, or extraordinary amounts of money, are at stake, you probably need not test such a trivial function. I'm still going to show you how you could do this, mostly because it's a good example of how Property-Based Testing can sometimes help you test the behaviour of a function, instead of the implementation.

Before I show you that, however, I'll show you why example-based unit tests are inadequate for testing this function.

You could test this function using examples, and because the space of possible input is so small, you can even cover it in its entirety:

    [<Fact>]
    let ``other than playerOne returns correct result`` () =
        PlayerTwo =! other PlayerOne

    [<Fact>]
    let ``other than playerTwo returns correct result`` () =
        PlayerOne =! other PlayerTwo

The =! operator is a custom operator defined by Unquote, an assertion library. You can read the first expression as player two must equal other than player one.

The problem with these tests, however, is that they don't state anything not already stated by the implementation itself. Basically, what these tests state is that if the input is PlayerOne, the output is PlayerTwo, and if the input is PlayerTwo, the output is PlayerOne.

That's exactly what the implementation does.

The only important difference is that the implementation of other states this relationship more succinctly than the tests.

It's as though the tests duplicate the information already in the implementation. How does that add value?

Sometimes, this can be the only way to cover functionality with tests, but in this case, there's an alternative.

Behaviour instead of implementation #

Property-Based Testing inspires you to think about the observable behaviour of a system, rather than the implementation. Which properties does the other function have?

If you call it with a Player value, you'd expect it to return a Player value that's not the input value:

    [<Property>]
    let ``other returns a different player`` player = player <>! other player

The <>! operator is another custom operator defined by Unquote. You can read the expression as player must not equal other player.

This property alone doesn't completely define the behaviour of other, but combined with the next property, it does:

    [<Property>]
    let ``other other returns same player`` player = player =! other (other player)

If you call other, and then you take the output of that call and use it as input to call other again, you should get the original Player value. This property is an excellent example of what Scott Wlaschin calls there and back again.

These two properties, together, describe the behaviour of the other function, without going into details about the implementation.
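If you don't have FsCheck and Unquote at hand, you can still try out both properties. Because there are only two Player values, the following sketch simply checks every one of them with List.forall. The Player type is repeated here so that the snippet stands on its own, and the names allPlayers, returnsDifferentPlayer, and thereAndBackAgain are made up for this illustration:

```fsharp
type Player = PlayerOne | PlayerTwo

let other = function PlayerOne -> PlayerTwo | PlayerTwo -> PlayerOne

// The entire input space, so checking all values is exhaustive.
let allPlayers = [PlayerOne; PlayerTwo]

// Property 1: other never returns its input.
let returnsDifferentPlayer =
    allPlayers |> List.forall (fun p -> p <> other p)

// Property 2: applying other twice gets you back where you started.
let thereAndBackAgain =
    allPlayers |> List.forall (fun p -> p = other (other p))
```

Neither check mentions PlayerOne or PlayerTwo in its body, which is exactly what distinguishes them from the example-based tests.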

Summary #

You've seen a simple example of how to describe the properties of a function without resorting to duplicating the implementation in the tests. You may not always be able to do this, but it always feels right when you can.

If you're interested in learning more about Property-Based Testing, you can watch my introduction to Property-based Testing with F# Pluralsight course.

Types + Properties = Software: finite state machine

How to compose the tennis game code into a finite state machine.

This article is the seventh in a series of articles that demonstrate how to develop software using types and properties. In the previous article, you saw how to define the initial state of a tennis game. In this article, you'll see how to define the tennis game as a finite state machine.

The source code for this article series is available on GitHub.

Scoring a sequence of balls #

Previously, you saw how to score a game in an ad-hoc fashion:

    > let firstBall = score newGame PlayerTwo;;

    val firstBall : Score = Points {PlayerOnePoint = Love;
                                    PlayerTwoPoint = Fifteen;}

    > let secondBall = score firstBall PlayerOne;;

    val secondBall : Score = Points {PlayerOnePoint = Fifteen;
                                     PlayerTwoPoint = Fifteen;}

You'll quickly get tired of that, so you may think that you can do something like this instead:

    > newGame |> (fun s -> score s PlayerOne) |> (fun s -> score s PlayerTwo);;

    val it : Score = Points {PlayerOnePoint = Fifteen;
                             PlayerTwoPoint = Fifteen;}

That seems a little clumsy, and it isn't really what you need either. What you'd probably appreciate more is to be able to calculate the score given a sequence of wins. A sequence of wins is a list of Player values; for instance, [PlayerOne; PlayerOne; PlayerTwo] means that player one won the first two balls, and then player two won the third ball.

Since we already know the initial state of a game, and how to calculate the score for each ball, it's a one-liner to calculate the score for a sequence of balls:

let scoreSeq wins = Seq.fold score newGame wins

This function has the type Player seq -> Score. It uses newGame as the initial state of a left fold over the wins. The aggregator function is the score function.

You can use it with a sequence of wins, like this:

    > scoreSeq [PlayerOne; PlayerOne; PlayerTwo];;

    val it : Score = Points {PlayerOnePoint = Thirty;
                             PlayerTwoPoint = Fifteen;}

Since player one won the first two balls, and player two then won the third ball, the score at that point is thirty-fifteen.
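If you don't have the code from the previous articles at hand, the following self-contained sketch lets you experiment with the fold. Be aware that the types and the score function below are my condensed reconstruction for illustration purposes; they're assumptions, and may differ in detail from the code in the GitHub repository. Only newGame and scoreSeq are taken directly from this article.

```fsharp
type Player = PlayerOne | PlayerTwo
type Point = Love | Fifteen | Thirty
type PointsData = { PlayerOnePoint : Point; PlayerTwoPoint : Point }
type FortyData = { Player : Player; OtherPlayerPoint : Point }
type Score =
    | Points of PointsData
    | Forty of FortyData
    | Deuce
    | Advantage of Player
    | Game of Player

// The next point, or None when the next step would be forty.
let incrementPoint = function
    | Love -> Some Fifteen
    | Fifteen -> Some Thirty
    | Thirty -> None

// Assumed transition function: current score and ball winner to new score.
let score current winner =
    match current with
    | Points p ->
        let winnerPoint, loserPoint =
            match winner with
            | PlayerOne -> p.PlayerOnePoint, p.PlayerTwoPoint
            | PlayerTwo -> p.PlayerTwoPoint, p.PlayerOnePoint
        match incrementPoint winnerPoint, winner with
        | Some np, PlayerOne -> Points { p with PlayerOnePoint = np }
        | Some np, PlayerTwo -> Points { p with PlayerTwoPoint = np }
        | None, _ -> Forty { Player = winner; OtherPlayerPoint = loserPoint }
    | Forty f when f.Player = winner -> Game winner
    | Forty f ->
        match incrementPoint f.OtherPlayerPoint with
        | Some np -> Forty { f with OtherPlayerPoint = np }
        | None -> Deuce
    | Deuce -> Advantage winner
    | Advantage p when p = winner -> Game winner
    | Advantage _ -> Deuce
    | Game p -> Game p

let newGame = Points { PlayerOnePoint = Love; PlayerTwoPoint = Love }

// The state machine: a left fold over the wins, starting at newGame.
let scoreSeq wins = Seq.fold score newGame wins

let thirtyFifteen = scoreSeq [PlayerOne; PlayerOne; PlayerTwo]
let quickGame = scoreSeq (List.replicate 4 PlayerTwo)
```

Here, thirtyFifteen is the thirty-fifteen score from the example above, and quickGame is a game won by player two in four straight balls.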

Properties for the state machine #

Can you think of any properties for the scoreSeq function?

There are quite a few, it turns out. It may be a good exercise if you pause reading for a couple of minutes, and try to think of some properties.

If you have a list of properties, you can compare them with the ones I thought of.

Before we start, you may find the following helper functions useful:

    let isPoints = function Points _ -> true | _ -> false
    let isForty = function Forty _ -> true | _ -> false
    let isDeuce = function Deuce -> true | _ -> false
    let isAdvantage = function Advantage _ -> true | _ -> false
    let isGame = function Game _ -> true | _ -> false

These can be useful to express some properties, but I don't think that they are generally useful, so I put them together with the test code.

Limited win sequences #

If you look at short win sequences, you can already say something about them. For instance, it takes at least four balls to finish a game, so you know that if you have fewer than four wins, the game must still be on-going:

    [<Property>]
    let ``A game with less then four balls isn't over`` (wins : Player list) =
        let actual : Score = wins |> Seq.truncate 3 |> scoreSeq
        test <@ actual |> (not << isGame) @>

The built-in function Seq.truncate returns only the n (in this case: 3) first elements of a sequence. If the sequence is shorter than that, then the entire sequence is returned. The use of Seq.truncate 3 guarantees that the sequence passed to scoreSeq is at most three elements long, no matter what FsCheck originally generated.

Likewise, you can't reach deuce in less than six balls:

    [<Property>]
    let ``A game with less than six balls can't be Deuce`` (wins : Player list) =
        let actual = wins |> Seq.truncate 5 |> scoreSeq
        test <@ actual |> (not << isDeuce) @>

This is similar to the previous property. There's one more in that family:

    [<Property>]
    let ``A game with less than seven balls can't have any player with advantage``
        (wins : Player list) =
        let actual = wins |> Seq.truncate 6 |> scoreSeq
        test <@ actual |> (not << isAdvantage) @>

It takes at least seven balls before the score can reach a state where one of the players has the advantage.
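All three properties rely on Seq.truncate being forgiving about short input. As a small sketch, using only the F# core library, you can compare it with Seq.take, which throws an exception if the sequence doesn't contain enough elements. The value names are only for illustration:

```fsharp
// Seq.truncate returns at most three elements...
let firstThree = [1; 2; 3; 4; 5] |> Seq.truncate 3 |> Seq.toList

// ...and returns the entire sequence if it's shorter than that.
let allOfIt = [1; 2] |> Seq.truncate 3 |> Seq.toList

// Seq.take, on the other hand, fails when there are too few elements.
let takeThrows =
    try
        [1; 2] |> Seq.take 3 |> Seq.toList |> ignore
        false
    with
    | :? System.InvalidOperationException -> true
```

Since FsCheck also generates empty and very short lists, Seq.truncate is the safer choice for these properties.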

Longer win sequences #

Conversely, you can also express some properties related to win sequences of a minimum size. This one is more intricate to express with FsCheck, though. You'll need a sequence of Player values, but the sequence should be guaranteed to have a minimum length. There's no built-in function for that in FsCheck, so you need to define it yourself:

    // int -> Gen<Player list>
    let genListLongerThan n =
        let playerGen = Arb.generate<Player>
        let nPlayers = playerGen |> Gen.listOfLength (n + 1)
        let morePlayers = playerGen |> Gen.listOf
        Gen.map2 (@) nPlayers morePlayers

This function takes an exclusive minimum size, and returns an FsCheck generator that will generate Player lists longer than n. It does that by combining two of FsCheck's built-in generators. Gen.listOfLength generates lists of the requested length, and Gen.listOf generates all sorts of lists, including the empty list.

Both nPlayers and morePlayers are values of the type Gen<Player list>. Using Gen.map2, you can concatenate these two list generators using F#'s built-in list concatenation operator @. (All operators in F# are also functions. The (@) function has the type 'a list -> 'a list -> 'a list.)
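The idea of gluing a mandatory prefix onto an optional tail isn't specific to FsCheck. The following sketch mimics it with System.Random instead of generators, just to show why the result is always longer than n. The function listLongerThan and its parameters are hypothetical, and it's no substitute for a real FsCheck generator, since it offers no shrinking or size control:

```fsharp
// (@) used as an ordinary two-argument function.
let joined = (@) [1; 2] [3]

// Hypothetical analogue of genListLongerThan, driven by System.Random.
let listLongerThan n (rnd : System.Random) (makeItem : System.Random -> 'a) =
    // Always n + 1 elements, like Gen.listOfLength (n + 1).
    let required = List.init (n + 1) (fun _ -> makeItem rnd)
    // Zero to nine extra elements, like Gen.listOf (possibly empty).
    let extra = List.init (rnd.Next(10)) (fun _ -> makeItem rnd)
    required @ extra

let rnd = System.Random(42)
let sample = listLongerThan 4 rnd (fun (r : System.Random) -> r.Next(2))
```

Whatever the random tail turns out to be, sample has at least five elements, because the prefix alone already exceeds the exclusive minimum of four.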

With the genListLongerThan function, you can now express properties related to longer win sequences. As an example, if the players have played more than four balls, the score can't be a Points case:

    [<Property>]
    let ``A game with more than four balls can't be Points`` () =
        let moreThanFourBalls = genListLongerThan 4 |> Arb.fromGen
        Prop.forAll moreThanFourBalls (fun wins ->
            let actual = scoreSeq wins
            test <@ actual |> (not << isPoints) @>)

As previously explained, Prop.forAll expresses a property that must hold for all lists generated by moreThanFourBalls.

Correspondingly, if the players have played more than five balls, the score can't be a Forty case:

    [<Property>]
    let ``A game with more than five balls can't be Forty`` () =
        let moreThanFiveBalls = genListLongerThan 5 |> Arb.fromGen
        Prop.forAll moreThanFiveBalls (fun wins ->
            let actual = scoreSeq wins
            test <@ actual |> (not << isForty) @>)

This property is, as you can see, similar to the previous example.

Shortest completed game #

Do you still have your list of tennis game properties? Let's see if you thought of this one. The shortest possible way to complete a tennis game is when one of the players wins four balls in a row. This property should hold for all (two) players:

    [<Property>]
    let ``A game where one player wins all balls is over in four balls`` (player) =
        let fourWins = Seq.init 4 (fun _ -> player)
        let actual = scoreSeq fourWins
        let expected = Game player
        expected =! actual

This test first defines fourWins, of the type Player seq. It does that by using the player argument created by FsCheck, and repeating it four times, using Seq.init.

The =! operator is a custom operator defined by Unquote, an assertion library. You can read the expression as expected must equal actual.

Infinite games #

The tennis scoring system turns out to be a rich domain. There are still more properties. We'll look at a final one, because it showcases another way to use FsCheck's API, and then we'll call it a day.

An interesting property of the tennis scoring system is that a game isn't guaranteed to end. It may, theoretically, continue forever, if players alternate winning balls:

    [<Property>]
    let ``A game where players alternate never ends`` firstWinner =
        let alternateWins =
            firstWinner
            |> Gen.constant
            |> Gen.map (fun p -> [p; other p])
            |> Gen.listOf
            |> Gen.map List.concat
            |> Arb.fromGen
        Prop.forAll alternateWins (fun wins ->
            let actual = scoreSeq wins
            test <@ actual |> (not << isGame) @>)

This property starts by creating alternateWins, an Arbitrary<Player list>. It creates lists with alternating players, like [PlayerOne; PlayerTwo], or [PlayerTwo; PlayerOne; PlayerTwo; PlayerOne; PlayerTwo; PlayerOne]. It does that by using the firstWinner argument (of the type Player) as a constant starting value. It's only constant within each test, because firstWinner itself varies.

Using that initial Player value, it then proceeds to map that value to a list with two elements: the player, and the other player, using the other function. This creates a Gen<Player list>, but piped into Gen.listOf, it becomes a Gen<Player list list>. An example of the sort of list that this would generate could be [[PlayerTwo; PlayerOne]; [PlayerTwo; PlayerOne]]. Obviously, you need to flatten such lists, which you can do with List.concat; you have to do it inside of a Gen, though, so it's Gen.map List.concat. That gives you a Gen<Player list>, which you can finally turn into an Arbitrary<Player list> with Arb.fromGen.

This enables you to use Prop.forAll with alternateWins to express that regardless of the size of such alternating win lists, the game never reaches a Game state.
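To see the shape of the data without involving FsCheck, you can mimic the pipeline with plain lists. In this sketch, the hypothetical alternate function takes the number of pairs as an explicit argument, where Gen.listOf would pick a random length. The Player type and the other function are repeated so that the snippet stands on its own:

```fsharp
type Player = PlayerOne | PlayerTwo

let other = function PlayerOne -> PlayerTwo | PlayerTwo -> PlayerOne

// Hypothetical list-based analogue of the alternateWins pipeline.
let alternate firstWinner pairs =
    [firstWinner; other firstWinner]   // Player list: one pair of wins
    |> List.replicate pairs            // Player list list: repeated pairs
    |> List.concat                     // Player list: flattened again

let nested = List.replicate 2 [PlayerTwo; other PlayerTwo]
let wins = alternate PlayerTwo 2
```

The nested value corresponds to the list-of-lists example above, and wins is the flattened result: player two, player one, player two, player one.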

Summary #

In this article, you saw how to implement a finite state machine for calculating tennis scores. It's a left fold over the score function, always starting in the initial state where both players are at love. You also saw how you can express various properties that hold for a game of tennis.

In this article series, you've seen an extensive example of how to design with types and properties. You may have noticed that out of the six example articles so far, only the first one was about designing with types, and the next five articles were about Property-Based Testing. It looks as though not much is gained from designing with types.

On the contrary, much is gained by designing with types, but you don't see it, exactly because it's efficient. If you don't design with types in order to make illegal states unrepresentable, you'd have to write even more automated tests in order to test what happens when input is invalid. Also, if illegal states were representable, it would have been much harder to write Property-Based Tests. Instead of trusting that FsCheck generates only valid values based exclusively on the types, you'd have to write custom Generators or Arbitraries for each type of input. That would have been even more work than you've seen in this article series.

Designing with types makes Property-Based Testing a comfortable undertaking. Together, they enable you to develop software that you can trust.

If you're interested in learning more about Property-Based Testing, you can watch my introduction to Property-based Testing with F# Pluralsight course.

Types + Properties = Software: initial state

How to define the initial state in a tennis game, using F#.

This article is the sixth in a series of articles that demonstrate how to develop software using types and properties. In the previous article, you saw how to compose a function that returns a new score based on a previous score, and information about which player won the ball. In this article, you'll see how to define the initial state of a tennis game.

The source code for this article series is available on GitHub.

Initial state #

You may recall from the article on designing with types that a Score is a discriminated union. One of the union cases is the Points case, which you can use to model the case where both players have either Love, Fifteen, or Thirty points.

The game starts with both players at love. You can define this as a value:

let newGame = Points { PlayerOnePoint = Love; PlayerTwoPoint = Love }

Since this is a value (that is: not a function), it would make no sense to attempt to test it. Thus, you can simply add it, and move on.

You can use this value to calculate ad-hoc scores from the beginning of a game, like this:

> let firstBall = score newGame PlayerTwo;;
val firstBall : Score = Points {PlayerOnePoint = Love;
                                PlayerTwoPoint = Fifteen;}
> let secondBall = score firstBall PlayerOne;;
val secondBall : Score = Points {PlayerOnePoint = Fifteen;
                                 PlayerTwoPoint = Fifteen;}

You'll soon get tired of defining firstBall, secondBall, thirdBall, and so on, so a more general way to handle and calculate scores is warranted.

To be continued... #

In this article, you saw how to define the initial state for a tennis game. There's nothing to it, but armed with this value, you now have half of the requirements needed to turn the tennis score function into a finite state machine. You'll see how to do that in the next article.

Types + Properties = Software: composition

In which a general transition function is composed from specialised transition functions.

This article is the fifth in a series of articles that demonstrate how to develop software using types and properties. In the previous article, you witnessed the continued walk-through of the Tennis Kata done with Property-Based Test-Driven Development. In these articles, you saw how to define small, specific functions that model the transition out of particular states. In this article, you'll see how to compose these functions to a more general function.

The source code for this article series is available on GitHub.

Composing the general function #

If you recall the second article in this series, what you need to implement is a state transition of the type Score -> Player -> Score. What you have so far are the following functions:

scoreWhenPoints : PointsData -> Player -> Score
scoreWhenForty : FortyData -> Player -> Score
scoreWhenDeuce : Player -> Score
scoreWhenAdvantage : Player -> Player -> Score
scoreWhenGame : Player -> Score

These five functions are all the building blocks you need to implement the desired function of the type Score -> Player -> Score. You may recall that Score is a discriminated union defined as:

type Score =
    | Points of PointsData
    | Forty of FortyData
    | Deuce
    | Advantage of Player
    | Game of Player

Notice how these cases align with the five functions above. That's not a coincidence. The driving factor behind the design of these five functions was to match them with the five cases of the Score type. In another article series, I've previously shown this technique, applied to a different problem.

You can implement the desired function by clicking the pieces together:

let score current winner =
    match current with
    | Points p -> scoreWhenPoints p winner
    | Forty f -> scoreWhenForty f winner
    | Deuce -> scoreWhenDeuce winner
    | Advantage a -> scoreWhenAdvantage a winner
    | Game g -> scoreWhenGame g

There's not a lot to it, apart from matching on current. If, for example, current is a Forty value, the match is the Forty case, and f represents the FortyData value in that case. The destructured f can be passed as an argument to scoreWhenForty, together with winner. The scoreWhenForty function returns a Score value, so the score function has the type Score -> Player -> Score - exactly what you want!

Here's an example of using the function:

> score Deuce PlayerOne;;
val it : Score = Advantage PlayerOne

When the score is deuce and player one wins the ball, the resulting score is advantage to player one.

Properties for the score function #

Can you express some properties for the score function? Yes and no. You can't state particularly interesting properties about the function in isolation, but you can express meaningful properties about sequences of scores. We'll return to that in a later article. For now, let's focus on the function in itself.

You can, for example, state that the function can handle all input:

[<Property>]
let ``score returns a value`` (current : Score) (winner : Player) =
    let actual : Score = score current winner
    true // Didn't crash - this is mostly a boundary condition test

This property isn't particularly interesting. It's mostly a smoke test that I added because I thought that it might flush out boundary issues, if any exist. That doesn't seem to be the case.

You can also add properties that examine each case of input:

[<Property>]
let ``score Points returns correct result`` points winner =
    let actual = score (Points points) winner
    let expected = scoreWhenPoints points winner
    expected =! actual

Such a property is unlikely to be of much use, because it mostly reproduces the implementation details of the score function. Unless you're writing high-stakes software (e.g. for medical purposes), such properties are likely to have little or negative value. After all, tests are also code; do you trust the test code more than the production code? Sometimes, you may, but if you look at the source code for the score function, it's easy to review.

You can write four other properties, similar to the one above, but I'm going to skip them here. They are in the source code repository, though, so you're welcome to look there if you want to see them.

To be continued... #

In this article, you saw how to compose the five specific state transition functions into an overall state transition function. This is only a single function that calculates a score based on another score. In order to turn this function into a finite state machine, you must define an initial state and a way to transition based on a sequence of events.

In the next article, you'll see how to define the initial state, and in the article beyond that, you'll see how to move through a sequence of transitions.

If you're interested in learning more about designing with types, you can watch my Type-Driven Development with F# Pluralsight course.

Types + Properties = Software: properties for the Forties

An example of how to constrain generated input with FsCheck.

This article is the fourth in a series of articles that demonstrate how to develop software using types and properties. In the previous article, you saw how to express properties for a simple state transition: finding the next tennis score when the current score is advantage to a player. These properties were simple, because they had to hold for all input of the given type (Player). In this article, you'll see how to constrain the input that FsCheck generates, in order to express properties about tennis scores where one of the players has forty points.

The source code for this article series is available on GitHub.

Winning the game #

When one of the players has forty points, there are three possible outcomes of the next ball:

- If the player with forty points wins the ball, (s)he wins the game.

- If the other player has thirty points, and wins the ball, the score is deuce.

- If the other player has less than thirty points, and wins the ball, his or her points increase to the next level (from love to fifteen, or from fifteen to thirty).

You may recall that when one of the players has forty points, you express that score with the FortyData record type:

type FortyData = { Player : Player; OtherPlayerPoint : Point }

Since Point is defined as Love | Fifteen | Thirty, it's clear that the Player who has forty points has a higher score than the other player - regardless of the OtherPlayerPoint value. This means that if that player wins, (s)he wins the game. It's easy to express that property with FsCheck:

[<Property>]
let ``Given player: 40 when player wins then score is correct``
    (current : FortyData) =
    let actual = scoreWhenForty current current.Player
    let expected = Game current.Player
    expected =! actual

Notice that this test function takes a single function argument called current, of the type FortyData. Since illegal states are unrepresentable, FsCheck can only generate legal values of that type.

The scoreWhenForty function is a function that explicitly models what happens when the score is in the Forty case. The first argument is the data associated with the current score: current. The second argument is the winner of the next ball. In this test case, you want to express the case where the winner is the player who already has forty points: current.Player.

The expected outcome of this is always that current.Player wins the game.

The =! operator is a custom operator defined by Unquote, an assertion library. You can read the expression as expected must equal actual.

In order to pass this property, you must implement a scoreWhenForty function. This is the simplest implementation that could possibly work:

let scoreWhenForty current winner = Game winner

While this passes all tests, it's obviously not a complete implementation.

Getting even #

Another outcome of a Forty score is that if the other player has thirty points, and wins the ball, the new score is deuce. Expressing this as a property is only slightly more involved:

[<Property>]
let ``Given player: 40 - other: 30 when other wins then score is correct``
    (current : FortyData) =
    let current = { current with OtherPlayerPoint = Thirty }
    let actual = scoreWhenForty current (other current.Player)
    Deuce =! actual

In this test case, the other player specifically has thirty points. There's no variability involved, so you can simply set OtherPlayerPoint to Thirty.

Notice that instead of creating a new FortyData value from scratch, this property takes current (which is generated by FsCheck) and uses a copy-and-update expression to explicitly bind OtherPlayerPoint to Thirty. This ensures that all other values of current can vary, but OtherPlayerPoint is fixed.

The first line of code shadows the current argument by binding the result of the copy-and-update expression to a new value, also called current. Shadowing means that the original current argument is no longer available in the rest of the scope. This is exactly what you want, because the function argument isn't guaranteed to model the test case where the other player has thirty points. Instead, it can be any FortyData value. You can think of the argument provided by FsCheck as a seed used to arrange the Test Fixture.
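If shadowing combined with copy-and-update is new to you, this small sketch isolates the idea. The seed value stands in for whatever FsCheck might generate, and both seed and arrange are hypothetical names used only for this illustration.

```fsharp
type Player = PlayerOne | PlayerTwo
type Point = Love | Fifteen | Thirty
type FortyData = { Player : Player; OtherPlayerPoint : Point }

// Hypothetical stand-in for a value that FsCheck might generate.
let seed = { Player = PlayerTwo; OtherPlayerPoint = Love }

let arrange (current : FortyData) =
    // Shadow the argument: below this line, 'current' refers to the copy
    // in which OtherPlayerPoint is pinned to Thirty.
    let current = { current with OtherPlayerPoint = Thirty }
    current

let pinned = arrange seed
```

The Player field still comes from the seed, so pinned is { Player = PlayerTwo; OtherPlayerPoint = Thirty }, while seed itself is unchanged, because records are immutable.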

The property proceeds to invoke the scoreWhenForty function with the current score, and indicating that the other player wins the ball. You saw the other function in the previous article, but it's so small that it can be repeated here without taking up much space:

let other = function PlayerOne -> PlayerTwo | PlayerTwo -> PlayerOne

Finally, the property asserts that deuce must equal actual. In other words, the expected result is deuce.

This property fails until you fix the implementation:

let scoreWhenForty current winner =
    if current.Player = winner
    then Game winner
    else Deuce

This is a step in the right direction, but still not the complete implementation. If the other player has only love or fifteen points, and wins the ball, the new score shouldn't be deuce.

Incrementing points #

The last test case is where it gets interesting. The situation you need to test is that one of the players has forty points, and the other player has either love or fifteen points. This feels like the previous case, but has more variable parts. In the previous test case (above), the other player always had thirty points, but in this test case, the other player's points can vary within a constrained range.

Perhaps you've noticed that so far, you haven't seen any examples of using FsCheck's API. Until now, we've been able to express properties from values generated by FsCheck without constraints. This is no longer possible, but fortunately, FsCheck comes with an excellent API that enables you to configure it. Here, you'll see how to configure it to create values from a proper subset of all possible values:

[<Property>]
let ``Given player: 40 - other: < 30 when other wins then score is correct``
    (current : FortyData) =
    let opp = Gen.elements [Love; Fifteen] |> Arb.fromGen
    Prop.forAll opp (fun otherPlayerPoint ->
        let current = { current with OtherPlayerPoint = otherPlayerPoint }
        let actual = scoreWhenForty current (other current.Player)
        let expected =
            incrementPoint current.OtherPlayerPoint
            |> Option.map (fun np -> { current with OtherPlayerPoint = np })
            |> Option.map Forty
        expected =! Some actual)

That's only nine lines of code, and some of it is similar to the previous property you saw (above). Still, F# code can have a high degree of information density, so I'll walk you through it.

FsCheck's API is centred around Generators and Arbitraries. Ultimately, when you need to configure FsCheck, you'll need to define an Arbitrary<'a>, but you'll often use a Gen<'a> value to do that. In this test case, you need to tell FsCheck to use only the values Love and Fifteen when generating Point values.

This is done in the first line of code. Gen.elements creates a Generator that creates random values by drawing from a sequence of possible values. Here, we pass it a list of two values: Love and Fifteen. Because both of these values are of the type Point, the result is a value of the type Gen<Point>. This Generator is piped to Arb.fromGen, which turns it into an Arbitrary (for now, you don't have to worry about exactly what that means). Thus, opp is a value of type Arbitrary<Point>.

You can now take that Arbitrary and state that, for all values created by it, a particular property must hold. This is what Prop.forAll does. The first argument passed is opp, and the second argument is a function that expresses the property. When the test runs, FsCheck calls this function 100 times (by default), each time passing a random value generated by opp.

The next couple of lines are similar to code you've seen before. As in the case where the other player had thirty points, you can shadow the current argument with a new value where the other player's points are set to a value drawn from opp; that is, Love or Fifteen.

Notice how the original current value comes from an argument to the containing test function, whereas otherPlayerPoint comes from the opp Arbitrary. FsCheck is smart enough to enable this combination, so you still get the variation implied by combining these two sources of random data.

The actual value is bound to the result of calling scoreWhenForty with the current score, and indicating that the other player wins the ball.

The expected outcome is a new Forty value that originates from current, but with the other player's points incremented. There is, however, something odd-looking going on with Option.map - and where did that incrementPoint function come from?

In the previous article, you saw how sometimes, a test triggers the creation of a new helper function. Sometimes, such a helper function is of such general utility that it makes sense to put it in the 'production code'. Previously, it was the other function. Now, it's the incrementPoint function.

Before I show you the implementation of the incrementPoint function, I would like to suggest that you reflect on it. The purpose of this function is to return the point that comes after a given point. Do you remember how, in the article on designing with types, we quickly realised that love, fifteen, thirty, and forty are mere labels; that we don't need to do arithmetic on these values?

There's one piece of 'arithmetic' you need to do with these values, after all: you must be able to 'add one' to a value, in order to get the next value. That's the purpose of the incrementPoint function: given Love, it'll return Fifteen, and so on - but with a twist!

What should it return when given Thirty as input? Forty? That's not possible. There's no Point value higher than Thirty. Forty doesn't exist.

The object-oriented answer would be to throw an exception, but in functional programming, we don't like such arbitrary jump statements in our code. GOTO is, after all, considered harmful.

Instead, we can return None, but that means that we must wrap all the other return values in Some:

let incrementPoint = function
    | Love -> Some Fifteen
    | Fifteen -> Some Thirty
    | Thirty -> None

This function has the type Point -> Point option, and it behaves like this:

> incrementPoint Love;;
val it : Point option = Some Fifteen
> incrementPoint Fifteen;;
val it : Point option = Some Thirty
> incrementPoint Thirty;;
val it : Point option = None

Back to the property: the expected value is that the other player's points are incremented, and this new points value (np, for New Points) is bound to OtherPlayerPoint in a copy-and-update expression, using current as the original value. In other words, this expression returns the current score, with the only change that OtherPlayerPoint now has the incremented Point value.

This has to happen inside of an Option.map, though, because incrementPoint may return None. Furthermore, the new value created from current is of the type FortyData, but you need a Forty value. This can be achieved by piping the option value into another map that composes the Forty case constructor.

The expected value has the type Score option, so in order to be able to compare it to actual, which is 'only' a Score value, you need to make actual a Score option value as well. This is the reason expected is compared to Some actual.
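If you want to try the expected-value pipeline in isolation, the following sketch wraps it in a function. The name expectedFor is made up for this illustration; everything else is taken from the code shown in this article series.

```fsharp
type Player = PlayerOne | PlayerTwo
type Point = Love | Fifteen | Thirty
type PointsData = { PlayerOnePoint : Point; PlayerTwoPoint : Point }
type FortyData = { Player : Player; OtherPlayerPoint : Point }
type Score =
    | Points of PointsData
    | Forty of FortyData
    | Deuce
    | Advantage of Player
    | Game of Player

let incrementPoint = function
    | Love -> Some Fifteen
    | Fifteen -> Some Thirty
    | Thirty -> None

// Hypothetical helper: the 'expected' pipeline from the property, as a function.
let expectedFor (current : FortyData) =
    incrementPoint current.OtherPlayerPoint                          // Point option
    |> Option.map (fun np -> { current with OtherPlayerPoint = np }) // FortyData option
    |> Option.map Forty                                              // Score option

let fromLove = expectedFor { Player = PlayerOne; OtherPlayerPoint = Love }
let fromThirty = expectedFor { Player = PlayerOne; OtherPlayerPoint = Thirty }
```

Here, fromLove is Some (Forty { Player = PlayerOne; OtherPlayerPoint = Fifteen }), whereas fromThirty is None, because there's no Point after Thirty. The property never hits the None case, since opp only generates Love and Fifteen.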

One implementation that passes this and all previous properties is:

let scoreWhenForty current winner =
    if current.Player = winner
    then Game winner
    else
        match incrementPoint current.OtherPlayerPoint with
        | Some p -> Forty { current with OtherPlayerPoint = p }
        | None -> Deuce

Notice that the implementation also uses the incrementPoint function.

To be continued... #

In this article, you saw how, even when illegal states are unrepresentable, you may need to further constrain the input into a property in order to express a particular test case. The FsCheck combinator library can be used to do that. It's flexible and well thought-out.

While I could go on and show you how to express properties for more state transitions, you've now seen the most important techniques. If you want to see more of the tennis state transitions, you can always check out the source code accompanying this article series.

In the next article, instead, you'll see how to compose all these functions into a system that implements the tennis scoring rules.

If you're interested in learning more about Property-Based Testing, you can watch my introduction to Property-based Testing with F# Pluralsight course.

Types + Properties = Software: properties for the advantage state

An example of Property-Based Test-Driven Development.

This article is the third in a series of articles that demonstrate how to develop software using types and properties. In the previous article, you saw how to get started with Property-Based Testing, using a Test-Driven Development tactic. In this article, you'll see the previous Tennis Kata example continued. This time, you'll see how to express properties for the state when one of the players has the advantage.

The source code for this article series is available on GitHub.

Winning the game #

When one of the players has the advantage in tennis, the result can go one of two ways: either the player with the advantage wins the ball, in which case he or she wins the game, or the other player wins, in which case the next score is deuce. This implies that you'll have to write at least two properties: one for each situation. Let's start with the case where the advantaged player wins the ball. Using FsCheck, you can express that property like this:

[<Property>]
let ``Given advantage when advantaged player wins then score is correct``
    (advantagedPlayer : Player) =
    let actual : Score = scoreWhenAdvantage advantagedPlayer advantagedPlayer
    let expected = Game advantagedPlayer
    expected =! actual

As explained in the previous article, FsCheck will interpret this function and discover that it'll need to generate arbitrary Player values for the advantagedPlayer argument. Because illegal states are unrepresentable, you're guaranteed valid values.

This property calls the scoreWhenAdvantage function (that doesn't yet exist), passing advantagedPlayer as argument twice. The first argument is an indication of the current score. The scoreWhenAdvantage function only models how to transition out of the Advantage case. The data associated with the Advantage case is the player currently having the advantage, so passing in advantagedPlayer as the first argument describes the current state to the function.

The second argument is the winner of the ball. In this test case, the same player wins again, so advantagedPlayer is passed as the second argument as well. The exact value of advantagedPlayer doesn't matter; this property holds for all (two) players.

The =! operator is a custom operator defined by Unquote, an assertion library. You can read the expression as expected must equal actual.

In order to pass this property, you can implement the function in the simplest way that could possibly work:

let scoreWhenAdvantage advantagedPlayer winner = Game advantagedPlayer

Here, I've arbitrarily chosen to return Game advantagedPlayer, but as an alternative, Game winner also passes the test.

Back to deuce #

The above implementation of scoreWhenAdvantage is obviously incorrect, because it always claims that the advantaged player wins the game, regardless of who wins the ball. You'll need to describe the other test case as well, which is slightly more involved, yet still easy:

[<Property>]
let ``Given advantage when other player wins then score is correct``
    (advantagedPlayer : Player) =
    let actual = scoreWhenAdvantage advantagedPlayer (other advantagedPlayer)
    Deuce =! actual

The first argument to the scoreWhenAdvantage function describes the current score. The test case is that the other player wins the ball. In order to figure out who the other player is, you can call the other function.

Which other function?

The function you only now create for this express purpose:

let other = function PlayerOne -> PlayerTwo | PlayerTwo -> PlayerOne

As the name suggests, this function returns the other player, for any given player. In the previous article, I promised to avoid point-free style, but here I broke that promise. This function is equivalent to this:

let other player =
    match player with
    | PlayerOne -> PlayerTwo
    | PlayerTwo -> PlayerOne

I decided to place this function in the same module as the scoreWhenGame function, because it seemed like a generally useful function, more than a test-specific function. It turns out that the tennis score module, indeed, does need this function later.

Since the other function is part of the module being tested, shouldn't you test it as well? For now, I'll leave it uncovered by directed tests, because it's so simple that I'm confident that it works, just by looking at it. Later, I can return to it in order to add some properties.
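For the curious, properties for the other function could look something like the following sketch. It doesn't use FsCheck; since Player has only two values, it simply enumerates them. The names allPlayers, otherIsDifferent, and otherTwiceIsIdentity are made up for this illustration.

```fsharp
type Player = PlayerOne | PlayerTwo

let other = function PlayerOne -> PlayerTwo | PlayerTwo -> PlayerOne

// Player has exactly two cases, so all inputs can be listed explicitly.
let allPlayers = [PlayerOne; PlayerTwo]

// The other player is never the same as the given player.
let otherIsDifferent =
    allPlayers |> List.forall (fun p -> other p <> p)

// Asking for the other player twice returns the original player.
let otherTwiceIsIdentity =
    allPlayers |> List.forall (fun p -> other (other p) = p)
```

Both values are true for the implementation shown above.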

With the other function in place, the new property fails, so you need to change the implementation of scoreWhenAdvantage in order to pass all tests:

let scoreWhenAdvantage advantagedPlayer winner =
    if advantagedPlayer = winner
    then Game winner
    else Deuce

This implementation deals with all combinations of input.
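Because both arguments are Player values, there are only four combinations of input. As a sanity check, you could list them all; the following is a minimal sketch in which transitions is a name made up for this illustration.

```fsharp
type Player = PlayerOne | PlayerTwo
type Point = Love | Fifteen | Thirty
type PointsData = { PlayerOnePoint : Point; PlayerTwoPoint : Point }
type FortyData = { Player : Player; OtherPlayerPoint : Point }
type Score =
    | Points of PointsData
    | Forty of FortyData
    | Deuce
    | Advantage of Player
    | Game of Player

let scoreWhenAdvantage advantagedPlayer winner =
    if advantagedPlayer = winner
    then Game winner
    else Deuce

// Enumerate every (advantaged player, ball winner) pair with its resulting score.
let transitions =
    [ for advantaged in [PlayerOne; PlayerTwo] do
        for winner in [PlayerOne; PlayerTwo] do
            yield (advantaged, winner), scoreWhenAdvantage advantaged winner ]
```

When the two players are the same, the result is Game for that player; otherwise it's Deuce.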

It turned out that, in this case, two properties are all we need in order to describe which tennis score comes after advantage.

To be continued... #

In this article, you saw how to express the properties associated with the advantage state of a tennis game. These properties were each simple. You can express each of them based on any arbitrary input of the given type, as shown here.

Even when all test input values are guaranteed to be valid, sometimes you need to manipulate an arbitrary test value in order to describe a particular test case. You'll see how to do this in the next article.

If you're interested in learning more about Property-Based Testing, you can watch my introduction to Property-based Testing with F# Pluralsight course.

Types + Properties = Software: state transition properties

Specify valid state transitions as properties.

This article is the second in a series of articles that demonstrate how to develop software using types and properties. In the previous article, you saw how to design with types so that illegal states are unrepresentable. In this article, you'll see an example of how to express properties for transitions between legal states.

The source code for this article series is available on GitHub.

This article continues the Tennis Kata example from the previous article. It uses FsCheck to generate test values, and xUnit.net as the overall test framework. It also uses Unquote for assertions.

State transitions #

While the types defined in the previous article make illegal states unrepresentable, they don't enforce any rules about how to transition from one state into another. There's yet no definition of what a state transition is, in the tennis domain. Let's make a definition, then.

A state transition should be a function that takes a current Score and the winner of a ball and returns a new Score. More formally, it should have the type Score -> Player -> Score.

(If you're thinking that all this terminology sounds like we're developing a finite state machine, you're bang on; that's exactly the case.)

For a simple domain like tennis, it'd be possible to define properties directly for such a function, but I often prefer to define a smaller function for each case, and test the properties of each of these functions. When you have all these small functions, you can easily combine them into the desired state transition function. This is the strategy you'll see in use here.

Deuce property #

The tennis types defined in the previous article guarantee that when you ask FsCheck to generate values, you will get only legal values. This makes it easy to express properties for transitions. Let's write the property first, and let's start with the simplest state transition: the transition out of deuce.

[<Property>]
let ``Given deuce when player wins then score is correct``
    (winner : Player) =
    let actual : Score = scoreWhenDeuce winner
    let expected = Advantage winner
    expected =! actual

This test exercises a transition function called scoreWhenDeuce. The case of deuce is special, because there's no further data associated with the state; when the score is deuce, it's deuce. This means that when calling scoreWhenDeuce, you don't have to supply the current state of the game; it's implied by the function itself.

You do need, however, to pass a Player argument in order to state which player won the ball. Instead of coming up with some hard-coded examples, the test simply requests Player values from FsCheck (by requiring the winner function argument).

Because the Player type makes illegal states unrepresentable, you're guaranteed that only valid Player values will be passed as the winner argument.

(In this particular example, Player can only be the values PlayerOne and PlayerTwo. FsCheck will, because of its default settings, run the property function 100 times, which means that it will generate 100 Player values. With an even distribution, that means that it will generate approximately 50 PlayerOne values, and 50 PlayerTwo values. Wouldn't it be easier, and faster, to use a [<Theory>] that deterministically generates only those two values, without duplication? Yes, in this case it would. This sometimes happens, and it's okay. In this example, though, I'm going to keep using FsCheck, because I think this entire example is a good stand-in for a more complex business problem.)

Regardless of the value of the winner argument, the property should hold that the return value of the scoreWhenDeuce function is that the winner now has the advantage.

The =! operator is a custom operator defined by Unquote. You can read it as a must equal operator: expected must equal actual.

Red phase #

When you apply Test-Driven Development, you should follow the Red/Green/Refactor cycle. In this example, we're doing just that, but at the moment the code doesn't even compile, because there's no scoreWhenDeuce function.

In the Red phase, it's important to observe that the test fails as expected before moving on to the Green phase. In order to make that happen, you can create this temporary implementation of the function:

let scoreWhenDeuce winner = Deuce

With this definition, the code compiles, and when you run the test, you get this test failure:

Test 'Ploeh.Katas.TennisProperties.Given deuce when player wins then score is correct' failed:
FsCheck.Xunit.PropertyFailedException :
Falsifiable, after 1 test (0 shrinks) (StdGen (427100699,296115298)):
Original:
PlayerOne
---- Swensen.Unquote.AssertionFailedException : Test failed:
Advantage PlayerOne = Deuce
false

This test failure is the expected failure, so you should now feel confident that the property is correctly expressed.

Green phase #

With the Red phase properly completed, it's time to move on to the Green phase: make the test(s) pass. For deuce, this is trivial:

let scoreWhenDeuce winner = Advantage winner

This passes 'all' tests:

Output from Ploeh.Katas.TennisProperties.Given deuce when player wins then score is correct:
  Ok, passed 100 tests.

The property holds.

Refactor #

When you follow the Red/Green/Refactor cycle, you should now refactor the implementation, but there's little to do at this point.

You could, in fact, perform an eta reduction:

let scoreWhenDeuce = Advantage

This would be idiomatic in Haskell, but not quite so much in F#. In my experience, many people find the point-free style less readable, so I'm not going to pursue this type of refactoring for the rest of this article series.

To be continued... #

In this article, you've seen how to express the single property that when the score is deuce, the winner of the next ball will have the advantage. Because illegal states are unrepresentable, you can declaratively state the type of value(s) the property requires, and FsCheck will have no choice but to give you valid values.

In the next article, you'll see how to express properties for slightly more complex state transitions. In this article, I took care to spell out each step in the process, but in the next article, I promise to increase the pace.

If you're interested in learning more about Property-Based Testing, you can watch my introduction to Property-based Testing with F# Pluralsight course.

Types + Properties = Software: designing with types

Design types so that illegal states are unrepresentable.

This article is the first in a series of articles that demonstrate how to develop software using types and properties. In this article, you'll see an example of how to design with algebraic data types, and in future articles, you'll see how to specify properties that must hold for the system. This example uses F#, but you can design similar types in Haskell.

The source code for this article series is available on GitHub.

Tennis #

The example used in this series of articles is the Tennis Kata. Although a tennis match consists of multiple sets that again are played as several games, in the kata, you only have to implement the scoring system for a single game:

- Each player can have either of these points in one game: Love, 15, 30, 40.

- If you have 40 and you win the ball, you win the game. There are, however, special rules.

- If both have 40, the players are deuce.

- If the game is in deuce, the winner of a ball will have advantage and game ball.

- If the player with advantage wins the ball, (s)he wins the game.

- If the player without advantage wins, they are back at deuce.

You can take on this problem in many different ways, but in this article, you'll see how F#'s type system can be used to make illegal states unrepresentable. Perhaps you think this is overkill for such a simple problem, but think of the Tennis Kata as a stand-in for a more complex domain problem.

Players #

In tennis, there are two players, which we can easily model with a discriminated union:

type Player = PlayerOne | PlayerTwo

When designing with types, I often experiment with values in FSI (the F# REPL) to figure out if they make sense. For such a simple type as Player, that's not strictly necessary, but I'll show you anyway, to illustrate the point:

> PlayerOne;;

val it : Player = PlayerOne

> PlayerTwo;;

val it : Player = PlayerTwo

> PlayerZero;;

PlayerZero;;

^^^^^^^^^^

error FS0039: The value or constructor 'PlayerZero' is not defined

> PlayerThree;;

PlayerThree;;

^^^^^^^^^^^

error FS0039: The value or constructor 'PlayerThree' is not defined

As you can see, both PlayerOne and PlayerTwo are values inferred to be of the type Player, whereas both PlayerZero and PlayerThree are illegal expressions.

Not only is it possible to represent all valid values, but illegal values are unrepresentable. Success.

Naive point attempt with a type alias #

If you're unfamiliar with designing with types, you may briefly consider using a type alias to model players' points:

type Point = int

This easily enables you to model some of the legal point values:

> let p : Point = 15;;

val p : Point = 15

> let p : Point = 30;;

val p : Point = 30

It looks good so far, but how do you model love? It's not really an integer.

Still, both players start with love, so it's intuitive to try to model love as 0:

> let p : Point = 0;;

val p : Point = 0

It's a hack, but it works.

Are illegal values unrepresentable? Hardly:

> let p : Point = 42;;

val p : Point = 42

> let p : Point = -1337;;

val p : Point = -1337

With a type alias, it's possible to assign every value that the 'real' type supports. A 32-bit integer has 4,294,967,296 possible values, which means that we have four legal representations (0, 15, 30, 40), and 4,294,967,292 illegal representations of a tennis point. Clearly this doesn't meet the goal of making illegal states unrepresentable.
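To make the problem concrete, here is a minimal sketch of a function that is meant to accept tennis points only. The describe function is a hypothetical name that isn't part of the article's code base. Because Point is only another name for int, the compiler accepts any integer as an argument, so the function is forced to carry a catch-all branch for values that should never have existed:

```fsharp
// A type alias is only another name for int.
type Point = int

// Hypothetical function that is meant to accept tennis points only.
let describe (p : Point) : string =
    match p with
    | 0 -> "love"
    | 15 -> "fifteen"
    | 30 -> "thirty"
    | 40 -> "forty"
    | _ -> "not a tennis point"

// All of these calls compile, because any int is also a Point.
printfn "%s" (describe 30)
printfn "%s" (describe 42)
printfn "%s" (describe (-1337))
```

The wildcard case is the price of the alias: the illegal values can only be detected at run time.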

Second point attempt with a discriminated union #

If you think about the problem for a while, you're likely to come to the realisation that love, 15, 30, and 40 aren't numbers, but rather labels. No arithmetic is performed on them. It's easy to constrain the domain of points with a discriminated union:

type Point = Love | Fifteen | Thirty | Forty

You can play around with values of this Point type in FSI if you will, but there should be no surprises.

A Point value isn't a score, though. A score is a representation of a state in the game, with (amongst other options) a point to each player. You can model this with a record:

type PointsData = { PlayerOnePoint : Point; PlayerTwoPoint : Point }

You can experiment with this type in FSI, like you can with the other types:

> { PlayerOnePoint = Love; PlayerTwoPoint = Love };;

val it : PointsData = {PlayerOnePoint = Love;

PlayerTwoPoint = Love;}

> { PlayerOnePoint = Love; PlayerTwoPoint = Thirty };;

val it : PointsData = {PlayerOnePoint = Love;

PlayerTwoPoint = Thirty;}

> { PlayerOnePoint = Thirty; PlayerTwoPoint = Forty };;

val it : PointsData = {PlayerOnePoint = Thirty;

PlayerTwoPoint = Forty;}

That looks promising. What happens if players are evenly matched?

> { PlayerOnePoint = Forty; PlayerTwoPoint = Forty };;

val it : PointsData = {PlayerOnePoint = Forty;

PlayerTwoPoint = Forty;}

That works as well, but it shouldn't!

Forty-forty isn't a valid tennis score; it's called deuce.

Does it matter? you may ask. Couldn't we 'just' say that forty-forty 'means' deuce and get on with it?

You could. You should try. I've tried doing this (I've done the Tennis Kata several times, in various languages), and it turns out that it's possible, but it makes the code more complicated. One of the reasons is that deuce is more than a synonym for forty-forty. The score can also be deuce after advantage to one of the players. You can, for example, have a score progression like this:

- ...

- Forty-thirty

- Deuce

- Advantage to player one

- Deuce

- Advantage to player two

- Deuce

- ...

Additionally, if you're into Domain-Driven Design, you prefer using the ubiquitous language of the domain. When the tennis domain language says that it's not called forty-forty, but deuce, the code should reflect that.

Final attempt at a point type #

Fortunately, you don't have to throw away everything you've done so far. The love-love, fifteen-love, etc. values that you can represent with the above PointsData type are all valid. Only when you approach the boundary value of forty do problems appear.

A solution is to remove the offending Forty case from Point. The updated definition of Point is this:

type Point = Love | Fifteen | Thirty

You can still represent the 'early' scores using PointsData:

> { PlayerOnePoint = Love; PlayerTwoPoint = Love };;

val it : PointsData = {PlayerOnePoint = Love;

PlayerTwoPoint = Love;}

> { PlayerOnePoint = Love; PlayerTwoPoint = Thirty };;

val it : PointsData = {PlayerOnePoint = Love;

PlayerTwoPoint = Thirty;}

Additionally, the illegal forty-forty state is now unrepresentable:

> { PlayerOnePoint = Forty; PlayerTwoPoint = Forty };;

{ PlayerOnePoint = Forty; PlayerTwoPoint = Forty };;

-------------------^^^^^

error FS0039: The value or constructor 'Forty' is not defined

This is much better, apart from the elephant in the room: you also lost the ability to model valid states where only one of the players has forty points:

> { PlayerOnePoint = Thirty; PlayerTwoPoint = Forty };;

{ PlayerOnePoint = Thirty; PlayerTwoPoint = Forty };;

--------------------------------------------^^^^^

error FS0039: The value or constructor 'Forty' is not defined

Did we just throw the baby out with the bathwater?

Not really, because we weren't close to being done anyway. While we were making progress on modelling the score as a pair of Point values, remaining work includes modelling deuce, advantage and winning the game.

Life begins at forty #

At this point, it may be helpful to recap what we have:

type Player = PlayerOne | PlayerTwo

type Point = Love | Fifteen | Thirty

type PointsData = { PlayerOnePoint : Point; PlayerTwoPoint : Point }

While this enables you to keep track of the score when both players have less than forty points, the following phases of a game still remain:

- One of the players has forty points.

- Deuce.

- Advantage to one of the players.

- One of the players won the game.

You can model the first of these phases with another record type:

type FortyData = { Player : Player; OtherPlayerPoint : Point }

This is a record that specifically keeps track of the situation where one of the players has forty points. The Player element keeps track of which player has forty points, and the OtherPlayerPoint element keeps track of the other player's score. For instance, this value indicates that PlayerTwo has forty points, and PlayerOne has thirty:

> { Player = PlayerTwo; OtherPlayerPoint = Thirty };;

val it : FortyData = {Player = PlayerTwo;

OtherPlayerPoint = Thirty;}

This is a legal score. Other values of this type exist, but none of them are illegal.
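Since the type is so small, you can list every value it admits and inspect them all. The following is a minimal sketch of that; allFortyData is a hypothetical helper name introduced here for illustration:

```fsharp
type Player = PlayerOne | PlayerTwo

type Point = Love | Fifteen | Thirty

type FortyData = { Player : Player; OtherPlayerPoint : Point }

// Hypothetical helper: every value of the FortyData type,
// built from two players times three points.
let allFortyData =
    [ for player in [ PlayerOne; PlayerTwo ] do
        for point in [ Love; Fifteen; Thirty ] do
            yield { Player = player; OtherPlayerPoint = point } ]

allFortyData |> List.iter (printfn "%A")
printfn "%d values in total" (List.length allFortyData)
```

The list contains six values, and each of them corresponds to a score that can occur in a real game: one player at forty, and the other at love, fifteen, or thirty.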

Score #

Now you have two distinct types, PointsData and FortyData, that keep track of the score at two different phases of a tennis game. You still need to model the remaining three phases, and somehow turn all of these into a single type. This is an undertaking that can be surprisingly complicated in C# or Java, but is trivial to do with a discriminated union:

type Score =
    | Points of PointsData
    | Forty of FortyData
    | Deuce
    | Advantage of Player
    | Game of Player

This Score type states that a score is a value in one of these five cases. As an example, the game starts with both players at (not necessarily in) love:

> Points { PlayerOnePoint = Love; PlayerTwoPoint = Love };;

val it : Score = Points {PlayerOnePoint = Love;

PlayerTwoPoint = Love;}

If, for example, PlayerOne has forty points, and PlayerTwo has thirty points, you can create this value:

> Forty { Player = PlayerOne; OtherPlayerPoint = Thirty };;

val it : Score = Forty {Player = PlayerOne;

OtherPlayerPoint = Thirty;}

Notice how both values are of the same type (Score), even though they don't share the same data structure. Other examples of valid values are:

> Deuce;;

val it : Score = Deuce

> Advantage PlayerTwo;;

val it : Score = Advantage PlayerTwo

> Game PlayerOne;;

val it : Score = Game PlayerOne

This model of the tennis score system enables you to express all legal values, while making illegal states unrepresentable.

It's impossible to express that the score is seven-eleven:

> Points { PlayerOnePoint = Seven; PlayerTwoPoint = Eleven };;

Points { PlayerOnePoint = Seven; PlayerTwoPoint = Eleven };;

--------------------------^^^^^

error FS0039: The value or constructor 'Seven' is not defined

It's impossible to state that the score is fifteen-thirty-fifteen:

> Points { PlayerOnePoint = Fifteen; PlayerTwoPoint = Thirty; PlayerThreePoint = Fifteen };;

Points { PlayerOnePoint = Fifteen; PlayerTwoPoint = Thirty; PlayerThreePoint = Fifteen };;

------------------------------------------------------------^^^^^^^^^^^^^^^^

error FS1129: The type 'PointsData' does not contain a field 'PlayerThreePoint'

It's impossible to express that both players have forty points:

> Points { PlayerOnePoint = Forty; PlayerTwoPoint = Forty };;

Points { PlayerOnePoint = Forty; PlayerTwoPoint = Forty };;

--------------------------^^^^^

error FS0001: This expression was expected to have type

Point

but here has type

FortyData -> Score

> Forty { Player = PlayerOne; OtherPlayerPoint = Forty };;

Forty { Player = PlayerOne; OtherPlayerPoint = Forty };;

-----------------------------------------------^^^^^

error FS0001: This expression was expected to have type

Point

but here has type

FortyData -> Score

In the above example, I even attempted to express forty-forty in two different ways, but neither of them works.
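One practical consequence is that a function over Score needs no defensive branch for invalid input. As a minimal sketch, here is a function that renders a score as text; pointToString, playerToString and scoreToString are hypothetical names chosen for this illustration, and aren't part of the article's code base:

```fsharp
type Player = PlayerOne | PlayerTwo
type Point = Love | Fifteen | Thirty
type PointsData = { PlayerOnePoint : Point; PlayerTwoPoint : Point }
type FortyData = { Player : Player; OtherPlayerPoint : Point }
type Score =
    | Points of PointsData
    | Forty of FortyData
    | Deuce
    | Advantage of Player
    | Game of Player

// Hypothetical rendering helpers for this sketch.
let pointToString = function
    | Love -> "love"
    | Fifteen -> "fifteen"
    | Thirty -> "thirty"

let playerToString = function
    | PlayerOne -> "player one"
    | PlayerTwo -> "player two"

// Every case is handled explicitly; there is no wildcard branch,
// because there are no illegal values to catch.
let scoreToString score =
    match score with
    | Points p ->
        sprintf "%s-%s" (pointToString p.PlayerOnePoint) (pointToString p.PlayerTwoPoint)
    | Forty { Player = PlayerOne; OtherPlayerPoint = p } ->
        sprintf "forty-%s" (pointToString p)
    | Forty { Player = PlayerTwo; OtherPlayerPoint = p } ->
        sprintf "%s-forty" (pointToString p)
    | Deuce -> "deuce"
    | Advantage player -> sprintf "advantage %s" (playerToString player)
    | Game player -> sprintf "game %s" (playerToString player)

printfn "%s" (scoreToString (Forty { Player = PlayerOne; OtherPlayerPoint = Thirty }))
```

If you were to add a case to Score, or remove one of the branches, the compiler would warn about an incomplete pattern match, so the types keep guiding you after the initial design is done.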

Summary #

You now have a model of the domain that enables you to express valid values, but that makes illegal states unrepresentable. This is half the battle won, without any moving parts. These types govern what can be stated in the domain, but they don't provide any rules for how values can transition from one state to another.

This is a task you can take on with Property-Based Testing. Since all values of these types are valid, it's easy to express the properties of a tennis game score. In the next article, you'll see how to start that work.

If you're interested in learning more about designing with types, you can watch my Type-Driven Development with F# Pluralsight course.

Comments

Great post as always. However, I saw that you are using IO monad. I wonder if you shouldn't use IO action instead? To be honest, I'm not proficient in Haskell. But according to that post they are encouraging to use IO action rather than IO monad if we are not talking about monadic properties.

Arkadiusz, thank you for writing. It's the first time I see that post, but if I understand the argument correctly, it argues against the (over)use of the monad terminology when talking about IO actions that aren't intrinsically monadic in nature. As far as I can tell, it doesn't argue against the use of the IO type in Haskell.

It seems a reasonable point that it's unhelpful to newcomers to throw them straight into a discussion about monads, when all they want to see is a hello world example.

As some of the comments to that post point out, however, you'll soon need to compose IO actions in Haskell, and it's the monadic nature of the