ploeh blog danish software design

Composition Root Reuse

A Composition Root is application-specific. It makes no sense to reuse it across code bases.

At regular intervals, I get questions about how to reuse Composition Roots. The short answer is: you don't.

Since it appears to be a Frequently Asked Question, it seems that a more detailed answer is warranted.

Composition Roots define applications #

A Composition Root is application-specific; it's what defines a single application. After having written nice, decoupled code throughout your code base, the Composition Root is where you finally couple everything, from data access to (user) interfaces.

Here's a simplified example:

    public class CompositionRoot : IHttpControllerActivator
    {
        public IHttpController Create(
            HttpRequestMessage request,
            HttpControllerDescriptor controllerDescriptor,
            Type controllerType)
        {
            if(controllerType == typeof(ReservationsController))
            {
                var ctx = new ReservationsContext();
                var repository = new SqlReservationRepository(ctx);
                request.RegisterForDispose(ctx);
                request.RegisterForDispose(repository);
                return new ReservationsController(
                    new ApiValidator(),
                    repository,
                    new MaîtreD(10),
                    new ReservationMapper());
            }
            // Handle more controller types here...

            throw new ArgumentException(
                "Unknown Controller type: " + controllerType,
                "controllerType");
        }
    }

This is an excerpt of a Composition Root for an ASP.NET Web API service, but the hosting framework isn't particularly important. What's important is that this piece of code pulls in objects from all over an application's code base in order to compose the application.

The ReservationsContext class derives from DbContext; an instance of it is injected into a SqlReservationRepository object. From this, it's clear that this application uses SQL Server for its data persistence. This decision is hard-coded into the Composition Root. You can't change this unless you recompile the Composition Root.

At another boundary of this hexagonal/onion/whatever architecture is a ReservationsController, which derives from ApiController. From this, it's clear that this application is a REST API that uses ASP.NET Web API for its implementation. This decision, too, is hard-coded into the Composition Root.

From this, it should be clear that the Composition Root is what defines the application. It combines all the loosely coupled components into an application: software that can be executed in an OS process.

It makes no more sense to attempt to reuse a Composition Root than it does to attempt to 'reuse' an application.

Container-based Composition Roots #

The above example uses Pure DI in order to explain how a Composition Root is equivalent to an application definition. What about DI Container-based Composition Roots, then?

It's essentially the same story, but with a twist. Imagine that you want to use Castle Windsor to compose your Web API; I've previously explained how to do that, but here's the IHttpControllerActivator implementation repeated:

    public class WindsorCompositionRoot : IHttpControllerActivator
    {
        private readonly IWindsorContainer container;

        public WindsorCompositionRoot(IWindsorContainer container)
        {
            this.container = container;
        }

        public IHttpController Create(
            HttpRequestMessage request,
            HttpControllerDescriptor controllerDescriptor,
            Type controllerType)
        {
            var controller =
                (IHttpController)this.container.Resolve(controllerType);

            request.RegisterForDispose(
                new Release(
                    () => this.container.Release(controller)));

            return controller;
        }

        private class Release : IDisposable
        {
            private readonly Action release;

            public Release(Action release)
            {
                this.release = release;
            }

            public void Dispose()
            {
                this.release();
            }
        }
    }

Indeed, there's no application-specific code there; this class is completely reusable. However, this reusability is accomplished because the container is injected into the object. The container itself must be configured in order to work, and configuration code is just as application-specific as the above Pure DI example.

Thus, with a DI Container, you may be able to decouple and reuse the part of the Composition Root that performs the run-time composition. However, the design-time composition is where you put the configuration code that selects which concrete types should be used in which cases. This is the part that specifies the application, and that's not reusable.

Summary #

Composition Roots aren't reusable.

Sometimes, you might be building a suite of applications that share a substantial subset of dependencies. Imagine, for example, that you're building a REST API and a batch job that together solve a business problem. The Domain Model and the Data Access Layer may be shared between these two applications, but the boundary isn't. The batch job isn't going to need any Controllers, and the REST API isn't going to need whatever is going to kick off the batch job (e.g. a scheduler). These two applications may have a lot in common, but they're still different. Thus, they can't share a Composition Root.
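As a minimal sketch of that situation, here are two Pure DI Composition Roots that share a repository but compose different boundaries. All type names (IReservationRepository, InMemoryReservationRepository, NightlyReport, and the two Composition Root classes) are hypothetical stand-ins invented for this illustration; the ReservationsController here is a simplified class, not a real ASP.NET Web API controller.

```csharp
using System;
using System.Collections.Generic;

// Shared library: a simplified stand-in for the Domain Model and
// Data Access Layer that both applications reuse.
public interface IReservationRepository
{
    IReadOnlyCollection<string> ReadReservations();
}

public class InMemoryReservationRepository : IReservationRepository
{
    public IReadOnlyCollection<string> ReadReservations()
    {
        return new[] { "Alice", "Bob" };
    }
}

// Boundary of the REST API: a Controller, but nothing that starts a batch.
public class ReservationsController
{
    private readonly IReservationRepository repository;

    public ReservationsController(IReservationRepository repository)
    {
        this.repository = repository;
    }

    public int Get()
    {
        return this.repository.ReadReservations().Count;
    }
}

// Boundary of the batch job: something a scheduler could kick off,
// but no Controllers.
public class NightlyReport
{
    private readonly IReservationRepository repository;

    public NightlyReport(IReservationRepository repository)
    {
        this.repository = repository;
    }

    public string Run()
    {
        return "Reservations: " +
            string.Join(", ", this.repository.ReadReservations());
    }
}

// Each application has its own Composition Root. They overlap in one line,
// but each one composes a different application.
public static class RestApiCompositionRoot
{
    public static ReservationsController Compose()
    {
        return new ReservationsController(
            new InMemoryReservationRepository());
    }
}

public static class BatchJobCompositionRoot
{
    public static NightlyReport Compose()
    {
        return new NightlyReport(new InMemoryReservationRepository());
    }
}
```

The only duplicated code is the construction of the repository. Extracting it into a shared 'composition helper' would couple the two applications at exactly the place where they are most likely to diverge.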

What about the DRY principle, then?

Different applications have differing Composition Roots. Even if they start with substantial amounts of duplicated Composition Root code, in my experience, the 'common' code tends to diverge the more the applications are allowed to evolve. That duplication may be accidental, and attempting to eliminate it may increase coupling. It's better to understand why you want to avoid duplication in the first place.

When it comes to Composition Roots, duplication tends to be accidental. Composition Roots aren't reusable.

Placement of Abstract Factories

Where should you define an Abstract Factory? Where should you implement it? Not where you'd think.

An Abstract Factory is one of the workhorses of Dependency Injection, although it's a somewhat blunt instrument. As I've described in chapter 6 of my book, whenever you need to map a run-time value to an abstraction, you can use an Abstract Factory. However, often there are more sophisticated options available.

Still, it can be a useful pattern, but you have to understand where to define it, and where to implement it. One of my readers asks:

These are good questions that deserve a more thorough treatment than a tweet in reply.

Situation #

Based on the above questions, I imagine that the situation can be depicted like this:

Two or more applications share a common library. These applications may be a web service and a batch job, a web site and a mobile app, or any other combination that makes sense to you.

Defining the Abstract Factory #

Where should an Abstract Factory be defined? In order to answer this question, first you must understand what an Abstract Factory is. Essentially, it's an interface (or Abstract Base Class - it's not important) that looks like this:

public interface IFactory<T> { T Create(object context); }

Sometimes, the Abstract Factory is a non-generic interface; sometimes it takes more than a single parameter; often, the parameter(s) have stronger types than object.

Where do interfaces go?

From Agile Principles, Patterns, and Practices, chapter 11, we know that the Dependency Inversion Principle means that "clients [...] own the abstract interfaces". This makes sense, if you think about it.

Imagine that the (shared) library defines a concrete class Foo that takes three values in its constructor:

public Foo(Guid bar, int baz, string qux)

Why would the library ever need to define an interface like the following?

public interface IFooFactory { Foo Create(Guid bar, int baz, string qux); }

It could, but what would be the purpose? The library itself doesn't need the interface, because the Foo class has a nice constructor it can use.

Often, the Abstract Factory pattern is most useful if you have some of the values available at composition time, but can't fully compose the object because you're waiting for one of the values to materialize at run-time. If you think this sounds abstract, my article series on Role Hints contains some realistic examples - particularly the articles Metadata Role Hint, Role Interface Role Hint, and Partial Type Name Role Hint.

A client may, for example, wait for the bar value before it can fully compose an instance of Foo. Thus, the client can define an interface like this:

public interface IFooFactory { Foo Create(Guid bar); }

This makes sense to the client, but not to the library. From the library's perspective, what's so special about bar? Why should the library define the above Abstract Factory, but not the following?

public interface IFooFactory { Foo Create(int baz); }

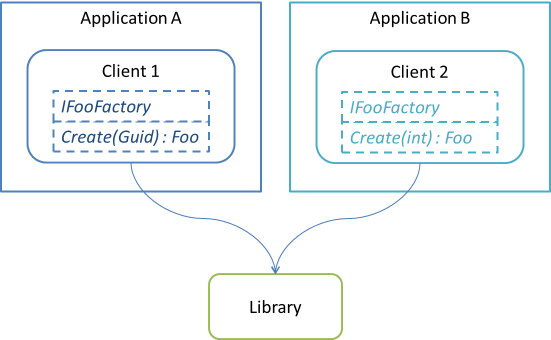

Such Abstract Factories make no sense to the library; they are meaningful only to their clients. Since the client owns the interfaces, they should be defined together with their clients. A more detailed diagram illustrates these relationships:

As you can see, although both definitions of IFooFactory depend on the shared library (since they both return instances of Foo), they are two different interfaces. In Client 1, apparently, the run-time value to be mapped to Foo is bar (a Guid), whereas in Client 2, the run-time value to be mapped to Foo is baz (an int).

The bottom line is: by default, libraries shouldn't define Abstract Factories for their own concrete types. Clients should define the Abstract Factories they need (if any).

Implementing the Abstract Factory #

While I've previously described how to implement an Abstract Factory, I may then have given short shrift to the topic of where to put the implementation.

Perhaps a bit surprisingly, this (at least partially) depends on the return type of the Abstract Factory's Create method. In the case of both IFooFactory definitions above, the return type of the Create methods is the concrete Foo class, defined in the shared library. This means that both Client 1 and Client 2 already depend on the shared library. In such situations, they can each implement their Abstract Factories as Manually Coded Factories. They don't have to do this, but at least it's an option.
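For illustration, a Manually Coded Factory in Client 1 could look like the following sketch. The Foo constructor and the IFooFactory interface follow the signatures above; the FooFactory class, the property names on Foo, and the assumption that baz and qux are known at composition time are made up for this example.

```csharp
using System;

// Shared library: the concrete class with the three-argument constructor.
public class Foo
{
    public Foo(Guid bar, int baz, string qux)
    {
        this.Bar = bar;
        this.Baz = baz;
        this.Qux = qux;
    }

    public Guid Bar { get; private set; }
    public int Baz { get; private set; }
    public string Qux { get; private set; }
}

// Client 1: defines the Abstract Factory it needs.
public interface IFooFactory
{
    Foo Create(Guid bar);
}

// Client 1: a Manually Coded Factory. It is assumed that baz and qux are
// available at composition time, while bar only materializes at run-time.
public class FooFactory : IFooFactory
{
    private readonly int baz;
    private readonly string qux;

    public FooFactory(int baz, string qux)
    {
        this.baz = baz;
        this.qux = qux;
    }

    public Foo Create(Guid bar)
    {
        return new Foo(bar, this.baz, this.qux);
    }
}
```

Client 2 would define its own IFooFactory taking an int baz, together with its own implementation that captures bar and qux instead.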

On the other hand, if the return type of an Abstract Factory is another interface defined by the client itself, it's a different story. Imagine, as an alternative scenario, that a client depends on an IPloeh interface that it has defined by itself. It may also define an Abstract Factory like this:

public interface IPloehFactory { IPloeh Create(Guid bar); }

This has nothing to do with the library that defines the Foo class. However, another library, somewhere else, may implement an Adapter of IPloeh over Foo. If this is the case, that implementing third party could also implement IPloehFactory. In such cases, the library that defines IPloeh and IPloehFactory must not implement either interface, because that creates the coupling it works so hard to avoid.

The third party that ultimately implements these interfaces is often the Composition Root. If this is the case, other implementation options are Container-based Factories or Dynamic Proxies.
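A rough sketch of that arrangement follows, with a manually coded implementation living in the third party. Only Foo's constructor signature and the IPloehFactory interface come from the text above; the Greet and Describe members, and the FooPloehAdapter and FooPloehFactory classes, are hypothetical names chosen for the example.

```csharp
using System;

// Shared library: knows nothing about IPloeh.
public class Foo
{
    private readonly Guid bar;
    private readonly int baz;
    private readonly string qux;

    public Foo(Guid bar, int baz, string qux)
    {
        this.bar = bar;
        this.baz = baz;
        this.qux = qux;
    }

    public string Describe()
    {
        return this.qux + ":" + this.baz;
    }
}

// Client library: owns both abstractions, but implements neither.
public interface IPloeh
{
    string Greet();
}

public interface IPloehFactory
{
    IPloeh Create(Guid bar);
}

// Third party (for example the Composition Root): an Adapter of IPloeh
// over Foo. This is the only place that references both libraries.
public class FooPloehAdapter : IPloeh
{
    private readonly Foo foo;

    public FooPloehAdapter(Foo foo)
    {
        this.foo = foo;
    }

    public string Greet()
    {
        return "Ploeh over " + this.foo.Describe();
    }
}

// Third party: implements the client's Abstract Factory on top of the Adapter.
public class FooPloehFactory : IPloehFactory
{
    private readonly int baz;
    private readonly string qux;

    public FooPloehFactory(int baz, string qux)
    {
        this.baz = baz;
        this.qux = qux;
    }

    public IPloeh Create(Guid bar)
    {
        return new FooPloehAdapter(new Foo(bar, this.baz, this.qux));
    }
}
```

Neither the library that defines Foo nor the library that defines IPloeh references the other; only the third party knows about both.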

Summary #

In order to answer the initial question: my default approach would be to implement the Abstract Factory multiple times, favouring decoupling over DRY. These days I prefer Pure DI, so I'd tend to go with the Manually Coded Factory.

Still, this answer of mine presupposes that Abstract Factory is the correct answer to a design problem. Often, it's not. It can lead to horrible interfaces like IFooManagerFactoryStrategyFactoryFactory, so I consider Abstract Factory as a last resort. Often, the Metadata, Role Interface, or Partial Type Name Role Hints are better options. In the degenerate case where there's no argument to the Abstract Factory's Create method, a Decoraptor is worth considering.

This article explains the matter in terms of relatively simple dependency graphs. In general, dependency graphs should be shallow, but if you want to learn about principles for composing more complex dependency graphs, Agile Principles, Patterns, and Practices contains a chapter on Principles of Package and Component Design (chapter 28) that I recommend.

Exception messages are for programmers

Exception messages should be aimed at other developers, not end users.

Once in a while, I come across fellow programmers with uncertain understanding of the purpose of exception messages. In my experience, when it comes to writing exception messages, most developers approach the job with one of these mindsets:

- Write the shortest possible exception message; if possible, ignore good grammar, punctuation, and proper spelling.

- Write lovingly crafted error messages for the end users.

Damn the torpedoes #

As far as I can tell, the most common approach to writing error messages is to get it over with as quickly as possible. Apparently, writing a good exception message is such tedious work that a developer can't possibly be bothered with it. Here are some examples:

throw new Exception("Unknown FaileType");

or:

throw new Exception("Unecpected workingDirectory");

These aren't particularly helpful. At the very least, is it too much to ask that they apply correct spelling and punctuation? Would it have been a horrible experience for the developer to write "Unknown FailureType.", or "Unexpected workingDirectory."?

Even if spelling, grammar, and punctuation are corrected, these exception messages aren't particularly helpful to anyone. Fair enough that the FailureType or workingDirectory values were unknown or unexpected, but what were they, then?

If you're seeing such exceptions while working with the code in your IDE, you may be able to attach a debugger and figure out what was the wrong value, but if you're looking at an error log from a production system, you'd really like to know the exact value that was unexpected.

Unfortunately, most developers apparently can't be bothered with supplying even such rudimentary information.

Exception messages for end users? #

Developers who do care about exception messages often seem to have end users in mind. They may spell exception messages correctly, but shy away from providing technical information in the messages, because they are considering the user experience for the end users. They would argue that the "Unknown FailureType" shouldn't be included in the exception message, because it's too technical.

It is, indeed, too technical for end users, but the misconception here is that exception messages should be exposed to end users at all. These developers seem to have a plan for exception handling, which essentially involves catching all exceptions at the boundary of the application, and simply showing the exception message to the end user.

That's unlikely to provide a good user experience.

It should be clear that "Unknown FailureType." isn't particularly helpful to a fellow programmer, but it's even more useless to a non-technical end user. Thus, in an effort to shield end users from the technical failure modes of our program, we make exception messages useless to end users and fellow programmers alike.

Security #

Another argument I often encounter is one of security - in this case Information Disclosure. According to the argument, exception messages shouldn't include run-time values, because that may leak information about how the system works. Again, this argument seems to hinge on the implied usage of exception messages shown to end users.

Language #

Exception messages aren't for end users. End users don't speak your language. They don't speak 'Technical'. They may not even speak English. What if your target demographic is all Dutch? Are you going to write all of your exception messages in Dutch? Even if you do, what about exceptions thrown by your Base Class Library or third-party libraries? These are likely to be in English, so if you catch and display all exceptions, you may occasionally display an English message to your Dutch users.

What if your application is designed for a Swiss audience? In which language are you going to write your exception messages? German? French? Italian? Romansh?

Conceptual coupling #

All of the above arguments even implicitly assume that you're writing exception messages for a known system. What if you're writing a reusable library? If you're writing a reusable library, you don't know the context in which it's going to be used. If you don't know the context, then how can you write an appropriate message for an end user?

It should be clear that when writing a reusable library, you're unlikely to know the language of the end user, but it's worse than that: you don't know anything about the end user. You don't even know if there's going to be an end user at all; your library may as well be running inside a batch job.

The converse is true as well. Even if you aren't writing a reusable library, end user-targeted exception messages increase the coupling in the system, because exception messages would be coupled to a particular user interface. This sort of coupling doesn't occur in the type system, but is conceptual. Exactly because it's not tied to any type system, an automated tool can't detect it, so it's much harder to notice, and more insidious as a result.

Exception messages are not for end users. Applications can catch known exception types and translate them to context-aware error messages, but this is a user interface concern - not a technical concern.
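One way to picture that separation is sketched below: a library throws a specific exception type with a developer-facing message, and the application boundary catches that type and chooses its own wording. The types UnknownFailureTypeException, FailureParser, and UserInterface, as well as the failure codes, are hypothetical and exist only for this example.

```csharp
using System;

// Library: a specific exception type. The message is written for
// programmers, and the offending value is also exposed as a property.
public class UnknownFailureTypeException : Exception
{
    public UnknownFailureTypeException(string failureType)
        : base(
            "Unknown FailureType. The value was: \"" + failureType + "\". " +
            "Expected one of: \"Timeout\", \"Disconnected\".")
    {
        this.FailureType = failureType;
    }

    public string FailureType { get; private set; }
}

public static class FailureParser
{
    public static int Parse(string failureType)
    {
        switch (failureType)
        {
            case "Timeout":
                return 1;
            case "Disconnected":
                return 2;
            default:
                throw new UnknownFailureTypeException(failureType);
        }
    }
}

// Application boundary: translating a known exception type into a
// context-aware message is a user interface concern. The text shown to the
// user is independent of the exception message, so it can be localized.
public static class UserInterface
{
    public static string Describe(string failureType)
    {
        try
        {
            return "Failure code: " + FailureParser.Parse(failureType);
        }
        catch (UnknownFailureTypeException)
        {
            // A real application would also log the exception here.
            return "Sorry, something went wrong. Please contact support.";
        }
    }
}
```

The exception message stays technical and precise for whoever reads the log, while the user sees only what the user interface decides to show.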

Exception messages for client developers #

Now that you understand that exception messages shouldn't be aimed at end users, who's left? Your fellow programmers, including your future self. As I explain in my encapsulation course, exception messages can be used as documentation of the system.

Imagine that you're using a new library that you don't yet know well. Which exception message would you, as a programmer, prefer to encounter?

"Unecpected workingDirectory"

or this:

"You tried to provide a working directory string that doesn't represent a working directory. It's not your fault, because it wasn't possible to design the FileStore class in such a way that this is a statically typed pre-condition, but please supply a valid path to an existing directory.

"The invalid value was: "fllobdedy"."

How do those two messages make you feel?

The first type of exception message often makes me feel that I suck. The second is mostly an annoyance that my program doesn't work, followed by delight that the object I'm using makes it easy for me to solve my problem.

If you encounter the first exception message, you'll often need to attach a debugger to figure out what was wrong, or at least open the offending code to understand what made it fail. In the second example, the object you're using does its best to provide you with all the information you need in order to solve the problem.

Call to action #

Do write useful exception messages aimed at other developers. Use correct grammar, spelling, and punctuation. Try to phrase the message in a non-blaming way. Supply information about the context or the offending value. If possible, suggest how to resolve the error.
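A guard clause that follows this advice might look like the sketch below. The FileStore name and the message text are taken from the example above, but the constructor, the WorkingDirectory property, and the use of Directory.Exists as the validity check are assumptions made for the illustration, not the actual implementation of any particular FileStore class.

```csharp
using System;
using System.IO;

public class FileStore
{
    private readonly string workingDirectory;

    public FileStore(string workingDirectory)
    {
        if (workingDirectory == null)
            throw new ArgumentNullException("workingDirectory");

        // Assumption: a 'valid' working directory is one that exists.
        if (!Directory.Exists(workingDirectory))
            throw new ArgumentException(
                "You tried to provide a working directory string that " +
                "doesn't represent a working directory. Please supply a " +
                "valid path to an existing directory. " +
                "The invalid value was: \"" + workingDirectory + "\".",
                "workingDirectory");

        this.workingDirectory = workingDirectory;
    }

    public string WorkingDirectory
    {
        get { return this.workingDirectory; }
    }
}
```

The message is non-blaming, includes the offending value, and suggests a resolution, so a reader of a production log has what they need without attaching a debugger.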

It will take you an extra five minutes to write such an exception message, but will save your colleagues, your clients, and your future self hours of troubleshooting and debugging.

Comments

Exceptions with exceptionally great error messages is a pet peeve of mine, and I've always had the personal philosophy that exceptions should not be a slap in the face; they should be a step forward. Therefore, I usually follow the rule that exceptions must provide both a) details about the error (including, of course, the wrong value and information about the expected domain), and b) one or more suggestions for a way forward (which may have been done implicitly already by elaborating on the domain, but may sometimes require extra effort like e.g. helping with coming up with a proper value).

I have a question for you though, regarding exceptions: I usually introduce a special exception when I'm building software for clients: a DomainException! As you might have guessed, it's a special exception that is meant to be consumed by humanoids, i.e. the end user. This means that it is crafted to be displayed without a stack trace, providing all the necessary context through its Message alone. This is the exception that the domain model may throw from deep down, telling the user intimate details about a failed validation that could only be checked at that time and place.

As far as I can see, there's two problems with my DomainException: 1) It's not that exceptional since it's used as a fallback validation that can be used when the UI is too lazy to provide the validation (or has higher prioritized work to do), and 2) I wouldn't know how to introduce a second language in my application with this approach.

The 1st "problem" isn't really a problem in my book - I get that it's silly to use exceptions for control flow, but I bet there's no way you can provide a clearer and more succinct way of guarding the domain model's integrity AND provide an excellent error message at the same time than you can by throwing an appropriate DomainException.

The 2nd problem could be a problem though - luckily, I haven't yet tried building something that didn't use either Danish or English as its only language, but I guess I could make the DomainException localizable somehow...

I'm curious, though, as to how other people solve the problem of deep-domain validation, and now - since you wrote this blog post - I'm asking you: how do you do it? :)

I wholeheartedly agree with you, Mark. Users shouldn't see technical error messages and they really don't care about them. They just want it to work and when it doesn't, they want to feel sure that somebody is looking into it.

Exceptions and error handling are, obviously, tightly connected and I have written about this. It's a long piece, but the gist of it is that there are three general classes of errors:

- Unrecoverable errors - the computers and the users involved cannot do anything about them.

- Recoverable errors requiring user intervention - e.g. spelling mistakes.

- Recoverable errors not requiring user intervention - e.g. time outs, which can be automatically retried.

What is important for an exception is for it to contain enough information for the UI-layer (be it web, desktop, whatever), to assure the user that somebody is actually on the go to solve the problem. It could be something as simple as a link to where the user can be updated about progress. This of course means that exceptions should be logged and meticulously followed up upon, which takes time and resources.

This leads on to the general concept of application logging, which I have not written about, yet ;)

To Mogens: I think your DomainException should not contain any text, per se, just the data. The text should then be stored in resources, the same way as any other localizable text should be.

I sympathize with Mogens. I, too, have found situations where an exception wants to be able to provide a user-facing message. However, I think I've found a better pattern for this that avoids a lot of problems. I posted about it here.

It's a good article on a topic that is mostly forgotten by developers. The points are well delivered. I also had some of those badly formed exceptions, which I just fixed after reading this article.

As for Mogens, I kinda agree with Torben. The DomainException may contain text or raw data, and let the top-level exception handler at your application handle the message format for you. It can decrease coupling, as you can adapt the message to different UIs (batch, web, desktop, mobile). It also provides more properly formatted text.

Mogens, thank you for writing. I know that you have a passion for well-written exception messages; in fact, you are one of the many people who inspired me to make exception messages more and more helpful.

How do I deal with 'deep' exceptions? First of all, I try hard to avoid them in the first place. One of the ways to do that is to validate input as close to the application boundary as possible, and transform it into something that properly protects its invariants; I recently wrote an article with a simple example of doing that (you may also want to read the comments, which contain a few gems).

Even if you can eliminate many 'deep' exceptions, you can't get rid of all of them. Particularly if you integrate with legacy systems, it can be difficult to validate everything up front, because the 'rules' of the legacy system may not be clear or public. In such cases, I prefer to throw custom exceptions. In each place where I need to throw an exception, I create a new Exception class and throw that. This means that any client can catch that particular exception type, and convert it to a friendly user message. If you need to provide additional information to the user, the custom Exception class can have extra properties with the required information.

Typically, if you start with the approach of validating input, and protecting invariants of objects, you aren't going to need a ton of custom Exception classes, so I find this approach manageable, although still not 'nice'.

The solution described by James Jensen above is one that I've never seen before, but it sounds like a good alternative.

As an overall comment, it's true that we shouldn't use exceptions for control flow, but unfortunately, most object-oriented languages give us no nice alternatives. However, in languages with Sum Types (Discriminated Unions in F#), there are much better options for error handling. That's what I use when I write F# code, which is my preferred language these days.

I agree with this post at a basic level. Starting out, this is the right way to handle exceptions. The thing is, you haven't really addressed the problems this restriction leads on to.

Internally caught exceptions are for the programmer to handle and write code for. You may want different types to indicate the handling. For example, I have a piece of code that has to communicate over an unreliable network connection. When this fails, it tends to be more efficient to catch the exception and make certain decisions about retrying than to have the error bounce all the way back up the chain of commands where all that will happen in most circumstances anyway is that a retry attempt will be made.

What happens when the programmer doesn't catch the exception? Why can't it be for the user? What if there is something more you can do to help the user beyond "computer said no"? There might be a reason that meme exists. Something unexpected can happen in the code and IO, the user is one of those components. Why can't it be handled there or in another system the exception propagates to? This is a weird one if you think about it. For any other IO fault I can just throw an exception saying what is wrong but when it comes to the user, I can't use exceptions for this?

I believe that in any large, multiple-interface project, exceptions can and potentially should be for everyone. However I do not believe this should be ambiguous.

I solve this by creating special "UserDoneBadExceptions" so to speak (not literally called that). A few other names and approaches come to mind such as safe exception and friendly exception (hi *wave*). I'll stick to safe exception from now on. Different languages or frameworks will influence which approach for this is best. Personally I've found myself having to monkey patch some nasty kludges for this.

If something reaches the top level without being caught, then it will be forwarded to the next system if it is a safe exception; otherwise the current exception will be consumed (such as to the log) and a safe exception will be sent. A safe exception is no different really to those special exceptions that help tell the programmer how to handle them or what to do (retry, fallback, log and ignore, abort, reopen, reconnect, clean up in a certain way, etc). I tend to describe exceptions that need to be converted as give up, complain to support, pray, retry, figure out a workaround, do what you want or shrug exceptions. Basically they're an ouch, the error has been logged, the developer *may* look at the logs and fix it at some date but if it's really important, workaround, go to support, etc.

Establishing that it might be a good idea for an exception system to indicate action at every level raises the question of what to indicate for an exception exiting the system to another system. You always assume at some point it will reach a user so the default is typically that a safe exception triggered by any other exception should explain to the user that an error has occurred, not much can be done, perhaps a link to support, definitely some means of linking to the error log (at least a timestamp) and in extreme cases the error log encrypted so they can copy and paste it to support.

There is a repeat ambiguity with safe exceptions because the receiving system may be able to handle the exception. In fact it may be handled both in how it deals with the user and through automation. It is common to extend safe exceptions so that they contain action for the user in the sense of providing them with feedback about what they can do as well as sufficient data for the system so that if it is capable it can take action. I've found the two most common cases for this are either to be able to provide auxiliary updated data if the front end is out of date or to indicate a redirect (which can double as a refresh, be careful, very careful). A simple way to look at it is if you cared for using HTTP status codes then which codes and messages should your exceptions indicate the use of?

What this really comes down to is that you need to define separate domains for your exceptions. Are they internal or external? Are they some combination of both (if you are poking your own RPC service then it's technically internal external but really just internal, you can propagate it as-is)? The concept could be called intersystem exceptions. The benefit I find for this is better code reuse and generally less code needed. I can use all of my permissions systems for RPC for anything with sometimes a thin layer of conversion for different output types and it works pretty well as a one size fits all. Whether my exceptions are poking out into AJAX, websocket XML RPC, JSON RPC, HTML full page load, etc in each case a thin layer of conversion, handling, etc manages it all.

With that it is important to make special safe exceptions reasonably abstract avoiding specifics unless specifics are needed or work better. It's something to think about. Should a login required safe exception simply be a redirect exception to the login page or should it be down to the system to interpret the error type/code and to then be self aware about where the login prompt should be and where to display it? I would personally opt for the latter in a lot of cases but it really depends on what systems you're dealing with. This approach won't be for everyone. Not that many projects become large enough to justify architecting a complex intersystem exception system.

Good times with F#

Some operations take time to perform, and you may want to capture that information. This post describes one way to do it.

Occasionally, you may find yourself in the situation where your software must make a decision based on how much time it has left. Is there time to handle one more message before shutting down? How much time did the last operation take? What was the time when it started?

It can be tempting to simply use DateTime.Now or DateTimeOffset.Now, but the problem is that it couples your code to the machine clock. It also tends to interleave timing logic with other logic, so that it becomes difficult to vary these independently. Incidentally, it also tends to make it difficult to unit test the time-dependent code.

Timed<'a> #

One alternative to DateTimeOffset.Now that I've recently found useful is to define a Timed<'a> generic type, like this:

    type Timed<'a> =
        {
            Started : DateTimeOffset
            Stopped : DateTimeOffset
            Result : 'a
        }
        member this.Duration = this.Stopped - this.Started

A value of Timed<'a> not only carries with it the result of some computation, but also information about when it started and stopped. It also has a calculated property called Duration, which you can use to examine how long the operation took.

Untimed transformations #

Timed<'a> looks like a standard monadic type, and you can also implement standard Bind and Map functions for it:

    module Untimed =
        let bind f x =
            let t = f x.Result
            { t with Started = x.Started }

        let map f x =
            { Started = x.Started; Stopped = x.Stopped; Result = f x.Result }

Notice, though, that these functions only transform the Result (and, in the case of Bind, Stopped), but no actual time measurement is taking place; that's the reason I put both functions in a module called Untimed.

In the case of the Bind function, the time measurement is implicitly taking place when the f function is executed, because it returns a Timed<'b>. In the Map function, on the other hand, no time measurement takes place at all. The function simply maps Result from one value to another using the f function.

So far, I've found no use for the Bind function, and only occasional use for the Untimed.map function. What really matters, after all, is to measure time.

Timing functions #

Ultimately, Timed<'a> is only interesting if you measure the time computations take. Here are a few functions I've found useful to do that:

    module Timed =
        let capture clock x =
            let now = clock()
            { Started = now; Stopped = now; Result = x }

        let map clock f x =
            let result = f x.Result
            let stopped = clock ()
            { Started = x.Started; Stopped = stopped; Result = result }

Notice that both functions take a clock argument. This is a function with the signature unit -> DateTimeOffset, enabling the capture and map functions to get the time. As the name indicates, the capture function is used to capture a value and 'promote' it to a Timed<'a> value. This value can subsequently be used with the map function to measure the time it took to execute the f function.

Clocks #

Using the computer's clock is easy:

let machineClock () = DateTimeOffset.Now

As you can tell, this function returns the value of DateTimeOffset.Now every time it's invoked.

You can also implement other clocks, e.g. for unit testing purposes. Here's one (not particularly Functional) way of doing it:

let qlock (q : System.Collections.Generic.Queue<DateTimeOffset>) () = q.Dequeue()

Obviously, since this clock is based on a Queue, I decided to call it qlock. Using that, you can define an easier-to-use function based on a sequence of DateTimeOffset values:

let seqlock (l : DateTimeOffset seq) = qlock (System.Collections.Generic.Queue<DateTimeOffset>(l))

Here's a fake clock that counts up all the days before Christmas in December (so obviously, I had to name it xlock):

    let xlock =
        [1 .. 24]
        |> List.map (fun d -> DateTimeOffset(DateTime(2014, 12, d)))
        |> seqlock

The first time you execute xlock:

(xlock ()).ToString("d")

you get "01.12.2014", the next time you get "02.12.2014", the third time "03.12.2014", and so on.

Such fake clocks are mostly useful for testing, but you could also use them to perform simulations in accelerated time - or perhaps even move time backwards! (Last time I used it, WorldWide Telescope enabled you to look at the solar system in accelerated time. I don't know how that feature is implemented, but it sounds like a good case for a custom clock. The point of this digression is that depending on your application's need, being able to manipulate time may not be as exotic as it first sounds.)

Measuring the time of execution #

These are all the building blocks you need to measure the time it takes to perform an operation. There are various ways to do it, but given a clock of the type unit -> DateTimeOffset, you can close over the clock like this:

let time f x = x |> Timed.capture clock |> Timed.map clock f

The time function has the type ('a -> 'b) -> 'a -> Timed<'b>, so, if for example you have a function called readCustomerFromDb of the type int -> Customer option, you can time it like this:

let c = time readCustomerFromDb 42

This might give you a result like this:

    {Started = 17.12.2014 11:24:38 +01:00;
     Stopped = 17.12.2014 11:24:39 +01:00;
     Result = Some {Id = 42; Name = "Foo";};}

As you can tell, the result of querying the database for customer 42 is the customer data, as well as information about when the operation started and stopped.
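For readers who work mostly in C#, the same building blocks can be approximated there. The following is only a rough sketch, not code from this post: the names Timed.Capture, Map, and Clocks.FromSequence are made up for the illustration, and the clock is assumed to be a plain Func<DateTimeOffset>.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical C# counterpart of the F# Timed<'a> record.
public sealed class Timed<T>
{
    public Timed(DateTimeOffset started, DateTimeOffset stopped, T result)
    {
        Started = started;
        Stopped = stopped;
        Result = result;
    }

    public DateTimeOffset Started { get; }
    public DateTimeOffset Stopped { get; }
    public T Result { get; }
    public TimeSpan Duration => Stopped - Started;
}

public static class Timed
{
    // Mirrors Timed.capture: read the clock once and use it for both timestamps.
    public static Timed<T> Capture<T>(Func<DateTimeOffset> clock, T value)
    {
        var now = clock();
        return new Timed<T>(now, now, value);
    }

    // Mirrors Timed.map: run f first, then read the clock to set Stopped.
    public static Timed<TResult> Map<T, TResult>(
        this Timed<T> source,
        Func<DateTimeOffset> clock,
        Func<T, TResult> f)
    {
        var result = f(source.Result);
        var stopped = clock();
        return new Timed<TResult>(source.Started, stopped, result);
    }
}

public static class Clocks
{
    // Fake clock that replays a fixed sequence of instants, like seqlock.
    public static Func<DateTimeOffset> FromSequence(
        IEnumerable<DateTimeOffset> instants)
    {
        var queue = new Queue<DateTimeOffset>(instants);
        return () => queue.Dequeue();
    }
}
```

With a fake clock built from two instants one second apart, Timed.Capture(clock, 42).Map(clock, i => i.ToString()) would carry the result "42" and a Duration of one second, without ever touching the machine clock.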

Concluding remarks #

The nice quality of Timed<'a> is that it decouples timing of operations from the result of those operations. This enables you to compose functions without explicitly having to consider how to measure their execution times.

It also helps with unit testing, because if you want to test what happens in a function if a previous function ran on a Sunday, or if it took more than three seconds to run, you can simply create a value of Timed<'a> that captures that information:

    let c' =
        {
            Started = DateTimeOffset(DateTime(2014, 12, 14, 11, 43, 11))
            Stopped = DateTimeOffset(DateTime(2014, 12, 14, 11, 43, 15))
            Result = Some { Id = 1337; Name = "Ploeh" }
        }

This value can now be used in a unit test to verify the behaviour in that particular case.

In a future post, I will demonstrate how to compose more complex time-sensitive behaviour using Timed<'a> and the associated modules.

This post is number 17 in the 2014 F# Advent Calendar.

Update 2017-05-25: A more recent treatment, including a more realistic usage scenario, is available in the article Type Driven Development.

Name this operation

A type of operation seems to be repeatedly appearing in my code, but I can't figure out what to call it; can you help me?

Across various code bases that I work with, it seems like operations (methods or functions) like these keep appearing:

    public IDictionary<string, string> Foo(
        IDictionary<string, string> bar,
        Baz baz)

In order to keep the example general, I've renamed the method to Foo, and the arguments to bar and baz; throughout this article, I'll make heavy use of metasyntactic variables. The types involved aren't particularly important; in this example they are IDictionary<string, string> and Baz, but they could be anything. The crux of the matter is that the output type is the same as one of the inputs (in this case, IDictionary<string, string> is both input and output).

Another C# example could be:

public int Qux(int corge, string grault)

Notice that the types involved don't have to be interfaces or complex types. The only recurring motif seems to be that the type of one of the arguments is identical to the return type.

Although the return value shares its type with one of the input arguments, the implementation doesn't have to echo the value as output. In fact, this is often not the case.

At this point, there's also no implicit assumption that the operation is referentially transparent. Thus, we may have an implementation where some database is queried. Another possibility is that the operation is a closure over additional values. Here's an F# example:

    let sign calculateSignature issuer (expiry : DateTimeOffset) claims =
        claims |> List.append [
            { Type = "signature"; Value = calculateSignature claims }
            { Type = "issuer"; Value = issuer }
            { Type = "expiry"; Value = expiry.ToString "o" }]

You could imagine that the purpose of this function is to sign a set of security claims, by calculating a signature and adding this and other issuer claims to the input list of claims. The signature of this particular function is (Claim list -> string) -> string -> DateTimeOffset -> Claim list -> Claim list. However, the leftmost arguments aren't going to vary much, so it would make sense to partially apply the function. Imagine that you have a correctly implemented signature function called calculateHMacSha, and that the name of the issuer should be "Ploeh":

let sign' = sign calculateHMacSha "Ploeh"

The type of the sign' function is DateTimeOffset -> Claim list -> Claim list, which is equivalent to the above Foo and Qux methods.

The question is: What should we call such an operation?

Why care?

In its general form, such an operation would have the shape

    public interface IGrault<T1, T2>
    {
        T1 Garply(T1 waldo, T2 fred);
    }

in C# syntax, or the type 'a -> 'b -> 'b in F# syntax.

Why should you care about operations like these?

It turns out that they are rather composable. For instance, it's trivial to implement the Null Object pattern, because you can always simply return the matching argument. Often, it also makes sense to make Composites out of them, or chain them in various ways.
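To make that concrete, here's a minimal sketch of a Null Object and a Composite over the IGrault<T1, T2> shape. The class names NullGrault, CompositeGrault, and AppendNumber are hypothetical, invented purely for this illustration; the interface is repeated only so that the example stands on its own.

```csharp
using System.Collections.Generic;
using System.Linq;

public interface IGrault<T1, T2>
{
    T1 Garply(T1 waldo, T2 fred);
}

// Null Object: simply returns the matching argument unchanged.
public sealed class NullGrault<T1, T2> : IGrault<T1, T2>
{
    public T1 Garply(T1 waldo, T2 fred) => waldo;
}

// Composite: threads the value through each inner implementation in order,
// passing the same fred to all of them.
public sealed class CompositeGrault<T1, T2> : IGrault<T1, T2>
{
    private readonly IReadOnlyList<IGrault<T1, T2>> graults;

    public CompositeGrault(params IGrault<T1, T2>[] graults)
    {
        this.graults = graults;
    }

    public T1 Garply(T1 waldo, T2 fred) =>
        graults.Aggregate(waldo, (acc, g) => g.Garply(acc, fred));
}

// Hypothetical leaf implementation: appends the number to the string.
public sealed class AppendNumber : IGrault<string, int>
{
    public string Garply(string waldo, int fred) => waldo + fred;
}
```

Because the output type equals the type of the first input, the result of one Garply call can always be fed into the next, and an empty Composite behaves like the Null Object.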

In Functional Programming, you may just do this by composing functions together (while not particularly considering the terminology of what you're doing), but in Object-Oriented languages like C#, we have to name the abstraction before we can use it (because we have to define the type).

If T2 or 'a is a predicate, the operation might be a filter, but as illustrated with the above sign' function, the operation may also enrich the input. In some cases, it may even replace the original input and return a completely different value (of the same type). Thus, such an operation could be a 'filter', an 'expansion', or a 'replacement'; those are quite vague properties, so any name for such operations is likely to be vague or broad as well.

My question to you is: what do we call this type of operation?

While I do have a short-list of candidate names, I encounter these constructs so often that I'd be surprised if such operations don't already have a conceptual name. If so, what is it? If not, what should we call them?

(I'd hoped that this type of operation was already a 'thing' in functional programming, so I asked on Twitter, but the context is often lost on Twitter, so therefore this blog post.)

Comments

Hey Mark,

In a mathematical sense, I would say that this operation is an accumulation: you take a (complex or simple) value and according to the second parameter (and possible other contextual information), this value is transformed, which could mean that either subvalues are changed on the (mutable) target or a new value (which is probably immutable) is created from the given information. This whole process could imply that information is added or stripped from the first parameter.

What I found interesting is that there is always exactly one other parameter (not two or more), and it feels like the information from two is incorporated into parameter one.

Thus I would call this operation a Junction or Consolidation because information of parameter 2 is joined with parameter 1.

Kenny, thank you for your suggestions. Others have pointed out the similarities with accumulation, so that looks like the most popular candidate. Junction and Consolidation are new to me, but I've added both to my list.

When it comes to the second argument, I decided to present the problem with only a second argument in order to keep it simple. You could also have variations with two or three arguments, but since you can rephrase any parameter list to a Parameter Object, it's not particularly important.

Dear Mark,

I choose Junction over accumulation because it is a word that (almost) everybody is familiar with: a junction of rivers. When two or more rivers meet, they form a new river or the tributary rivers flow into the main river. I think this natural phenomenon is a reasonable metaphor for the operation you're describing in your post.

Accumulation in contrast is specific to mathematics and IMHO I don't think everybody would understand the concept immediately (especially when lacking academic math education). Thus I think the name Junction can be grasped more easily - especially if you think of other OOP patterns like Factory, Command, Memento or Decorator: the pattern name provides a metaphor for a thing or phenomenon (almost) everybody knows, which makes them easy to learn and remember.

It was never my intention to imply that I necessarily considered Accumulation the best option, but it has been mentioned independently multiple times.

At first, I didn't make the connection to river junctions, but now that you've explained the metaphor, I really like it. It's like a tributary joining a major river. The Danube is still the Danube after the Inn has joined it. Thank you.

Sorry, I didn't want to sound like a teacher :-)

Not sure if you came to a conclusion here. Here are some relevant terms I've witnessed other places.

- In F#, these kinds of functions are often composed by piping (|>), so maybe IPipeable

- Clojure has the threading macro. Unfortunately, thread has interference with scheduling threads

- The Clojure library Pedestal operates around a pattern that is effectively lists of strategies. They call it interceptor, but that has interference with the more AOP concept of interceptor

I'm curious, did you experiment with this pattern in a language without partial application? It seems like shepherding operations into the shared type would be verbose.

Spencer, thank you for writing. You're certainly reaching back into the past!

Identifying a name for this kind of API was something that I struggled with at least as far back as 2010, where, for lack of better terms at the time I labelled them Closure of Operations and Reduction of Input. As I learned much later, these are essentially endomorphisms.

I've learned a lot from your content! I decided to start at the beginning to experience your evolution of thought over time. I love how deeply you think and your clear explanations of complex ideas. Thank you for sharing your experience!

Endomorphism makes sense. I got too focused on my notion of piping and forgot the key highlighted value was closure.

The IsNullOrWhiteSpace trap

The IsNullOrWhiteSpace method may seem like a useful utility method, but it poisons your design perspective.

The string.IsNullOrWhiteSpace method, together with its older sibling string.IsNullOrEmpty, may seem like useful utility methods. In reality, they aren't. In fact, they trick your mind into thinking that null is equivalent to white space, which it isn't.

Null isn't equivalent to anything; it's the absence of a value.

Various empty and white space strings ("", " ", etc), on the other hand, are values, although, perhaps, not particularly interesting values.

Example: search canonicalization #

Imagine that you have to write a simple search canonicalization algorithm for a music search service. The problem you're trying to solve is that when users search for music, they may use variations of upper and lower case letters, as well as type the artist name before the song title, or vice versa. In order to make your system as efficient as possible, you may want to cache popular search results, but it means that you'll need to transform each search term into a canonical form.

In order to keep things simple, let's assume that you only need to convert all letters to upper case, and order words alphabetically.

Here are five test cases, represented as a Parameterized Test:

    [Theory]
    [InlineData("Seven Lions Polarized"  , "LIONS POLARIZED SEVEN"  )]
    [InlineData("seven lions polarized"  , "LIONS POLARIZED SEVEN"  )]
    [InlineData("Polarized seven lions"  , "LIONS POLARIZED SEVEN"  )]
    [InlineData("Au5 Crystal Mathematics", "AU5 CRYSTAL MATHEMATICS")]
    [InlineData("crystal mathematics au5", "AU5 CRYSTAL MATHEMATICS")]
    public void CanonicalizeReturnsCorrectResult(
        string searchTerm,
        string expected)
    {
        string actual = SearchTerm.Canonicalize(searchTerm);
        Assert.Equal(expected, actual);
    }

Here's one possible implementation that passes all five test cases:

    public static string Canonicalize(string searchTerm)
    {
        return searchTerm
            .Split(new[] { ' ' })
            .Select(x => x.ToUpper())
            .OrderBy(x => x)
            .Aggregate((x, y) => x + " " + y);
    }

This implementation uses the space character to split the string into an array, then converts each sub-string to upper case letters, sorts the sub-strings in ascending order, and finally concatenates them all together to a single string, which is returned.

Continued example: making the implementation more robust #

The above implementation is quite naive, because it doesn't properly canonicalize if the user entered extra white space, such as in these extra test cases:

    [InlineData("Seven  Lions   Polarized", "LIONS POLARIZED SEVEN")]
    [InlineData(" Seven Lions Polarized ", "LIONS POLARIZED SEVEN")]

Notice that these new test cases don't pass with the above implementation, because it doesn't properly remove all the white spaces. Here's a more robust implementation that passes all test cases:

    public static string Canonicalize(string searchTerm)
    {
        return searchTerm
            .Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(x => x.ToUpper())
            .OrderBy(x => x)
            .Aggregate((x, y) => x + " " + y);
    }

Notice the addition of StringSplitOptions.RemoveEmptyEntries.

Testing for null #

If you consider the above implementation, does it have any other problems?

One, fairly obvious, problem is that if searchTerm is null, the method is going to throw a NullReferenceException, because you can't invoke the Split method on null.

Therefore, in order to protect the invariants of the method, you must test for null:

    [Fact]
    public void CanonicalizeNullThrows()
    {
        Assert.Throws<ArgumentNullException>(
            () => SearchTerm.Canonicalize(null));
    }

In this case, you've decided that null is simply invalid input, and I agree. Searching for null (the absence of a value) isn't meaningful; it must be a defect in the calling code.

Often, I see programmers implement their null checks like this:

    public static string Canonicalize(string searchTerm)
    {
        if (string.IsNullOrWhiteSpace(searchTerm))
            throw new ArgumentNullException("searchTerm");

        return searchTerm
            .Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(x => x.ToUpper())
            .OrderBy(x => x)
            .Aggregate((x, y) => x + " " + y);
    }

Notice the use of IsNullOrWhiteSpace. While it passes all tests so far, it's wrong for a number of reasons.

Problems with IsNullOrWhiteSpace #

The first problem with this use of IsNullOrWhiteSpace is that it may give client programmers wrong messages. For example, if you pass the empty string ("") as searchTerm, you'll still get an ArgumentNullException. This is misleading, because it gives the wrong message: it states that searchTerm was null when it wasn't (it was "").

You may then argue that you could change the implementation to throw an ArgumentException.

    if (string.IsNullOrWhiteSpace(searchTerm))
        throw new ArgumentException("Empty or null.", "searchTerm");

This isn't incorrect per se, but it's not as explicit as it could have been. In other words, it's not as helpful to the client developer as it could have been. While it may not seem like a big deal in a single method like this, it's sloppy code like this that eventually wears client developers down; it's death by a thousand paper cuts.
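If a method truly couldn't handle white space, a more explicit alternative would be to split the guard in two, so that each failure gets its own exception type and message. The following is only a sketch under that assumption; the Guard class and its method name are hypothetical and not part of the example code base.

```csharp
using System;

public static class Guard
{
    // Hypothetical helper: reports null and white space as distinct failures.
    public static void AgainstNullOrWhiteSpace(string value, string paramName)
    {
        // Null is the absence of a value, so it gets ArgumentNullException.
        if (value == null)
            throw new ArgumentNullException(paramName);

        // Empty or white space strings are values, so the more general
        // ArgumentException with a precise message is used instead.
        if (value.Trim().Length == 0)
            throw new ArgumentException(
                "Value was empty or consisted only of white space.",
                paramName);
    }
}
```

This tells the caller exactly what was wrong with the argument, although it still rejects white space strings.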

Moreover, this implementation doesn't follow the Robustness Principle. Is there any rational reason to reject white space strings?

Actually, with a minor tweak, we can make the implementation work with white space as well. Consider these new test cases:

    [InlineData("", "")]
    [InlineData(" ", "")]
    [InlineData("  ", "")]

These currently fail because of the use of IsNullOrWhiteSpace, but they ought to succeed.

The correct implementation of the Canonicalize method is this:

    public static string Canonicalize(string searchTerm)
    {
        if (searchTerm == null)
            throw new ArgumentNullException("searchTerm");

        return searchTerm
            .Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(x => x.ToUpper())
            .OrderBy(x => x)
            .Aggregate("", (x, y) => x + " " + y)
            .Trim();
    }

First of all, the correct Guard Clause is to test only for null; null is the only invalid value. Second, the method uses another overload of the Aggregate method where an initial seed (in this case "") is used to initialize the Fold operation. Third, the final call to the Trim method ensures that there's no leading or trailing white space.
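As an aside, the seed and the Trim call are only there to cope with an empty sequence of words. A variation, which is not what this article uses, is to concatenate with string.Join, since it returns the empty string for an empty sequence. The class name SearchTermAlternative is made up for this sketch:

```csharp
using System;
using System.Linq;

public static class SearchTermAlternative
{
    public static string Canonicalize(string searchTerm)
    {
        // Same Guard Clause as before: null is the only invalid input.
        if (searchTerm == null)
            throw new ArgumentNullException("searchTerm");

        var words = searchTerm
            .Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(x => x.ToUpper())
            .OrderBy(x => x);

        // string.Join yields "" when there are no words,
        // so neither a seed value nor Trim is needed.
        return string.Join(" ", words);
    }
}
```

Under these assumptions, white space input such as "  " canonicalizes to "", while null still throws an ArgumentNullException.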

The IsNullOrWhiteSpace mental model #

The overall problem with IsNullOrWhiteSpace and IsNullOrEmpty is that they give you the impression that null is equivalent to white space strings. This is the wrong mental model: white space strings are proper string values that you can very often manipulate just as well as any other string.

If you insist on the mental model that white space strings are equivalent to null, you'll tend to put them in the same bucket of 'invalid' data. However, if you take a hard look at the preconditions for your classes, methods, or functions, you'll find that often, a white space string is going to be perfectly acceptable input. Why reject input you can understand? That will only make your code more difficult to use.

In testing terms, it's my experience that null rarely falls in the same Equivalence Class as white space strings. Therefore, it's wrong to implicitly treat them as if they do.

The IsNullOrWhiteSpace and IsNullOrEmpty methods imply that null and white space strings are equivalent, and this will often give you the wrong mental model of the boundary cases of your software. Be careful when using these methods.

Comments

I agree if this is used in a code library that will be used by other programmers. However, when used directly at the application layer, it is common to use them, at least IsNullOrEmpty, and they are quite powerful. I don't find any problem in using them there.

"Dog" means "DOG" means "dog"

I'm pretty sure you are hating ("hate-ing") on the wrong statement in this code, and have taken the idea past the point of practicality.

    if (string.IsNullOrWhiteSpace(searchTerm)) //this is not the part that is wrong (in this context*)
        throw new ArgumentNullException("searchTerm"); //this is

It's wrong for two (or three) reasons...

- It is throwing an exception type that does not match the conditional statement that it comes from. The condition could be null or whitespace, but the exception is specific to null

- The message it tells the caller is misinforming them about the problem

- * The lack of bracing is kind of wrong too - it's so easy to avoid potential bugs and unintended side effects by just adding braces. More on this later

That code should look more like this, where the ex and message match the conditional, and aligns with both how humans (even programmers) understand written language and one of your versions of the function (but with braces =D)

    if (string.IsNullOrWhiteSpace(searchTerm))
    {
        throw new ArgumentException("A search term must be provided");
    }

For most (if not all) human written languages, there is no semantic difference between words based on casing of letters. "Dog" means "DOG" means "dog". Likewise, for a function that accepts user input in order to arrange it for use in a search, there is no need to differentiate between values like null, "", " ", "\r\n\t", <GS>, and other unprintable whitespace characters. None of those inputs are acceptable except for very rare and specific use cases (not when the user is expected to provide some search criteria), so we only need to inform the caller correctly and not mislead them.

It is definitely useful to have null and empty be different values so that we can represent "not known" or "not set" for datum, but that does not apply in this case as the user has set the value by providing the function's argument. And I get what you are trying to say about robustness but in most cases the end result is the same for the user - they get no results. Catching the bad inputs earlier would save the resources needed to execute a futile search and would avoid wasting the user's time waiting on search results for data entry that was most likely a mistake.

RE: Bracing

If you object to the bracing being needed and do want to shorten the above further, use an extension method like this..

    public static string NullIfWhiteSpace(this string value)
    {
        return string.IsNullOrWhiteSpace(value) ? null : value;
    }

... then your function could look like this...

    public static string Canonicalize(string searchTerm)
    {
        _ = searchTerm.NullIfWhiteSpace() ?? throw new ArgumentException("A search term must be provided");
        // etc...

This can be made into a Template (resharper) or snippet so you can have even less to type.

StingyJack, thank you for writing. You bring up more than one point. I'll try to address them in order.

You seem to infer from the example that the showcased Canonicalize function implements the search functionality. It doesn't. It canonicalises a search term. A question a library designer must consider is this: What is the contract of the Canonicalize function? What are the preconditions? What are the postconditions? Are there any invariants?

The point that I found most interesting about A Philosophy of Software Design was to favour generality over specialisation. As I also hint at in my review of that book, I think that I identified a connection with Postel's law that isn't explicitly mentioned there. The upshot, in any case, is that it's a good idea to make a function like Canonicalize as robust as possible.

When considering preconditions, then, what are the minimum requirements for it to be able to produce an output?

It turns out that it can, indeed, canonicalise whitespace strings. Since this is possible, it would artificially constrain the function to disallow that. This seems redundant.

When considering Postel's law, there's often a tension between allowing degenerate input and also allowing null input. It wouldn't be hard to handle a null searchTerm by also returning the empty string in that case. This would arguably be more robust, so why not do that?

That's never easy to decide, and someone might be able to convince me that this would, in fact, be more appropriate. On the other hand, one might ask: Is there a conceivable scenario where the search term is validly null?

In most code bases, I'd tend to consider null values as symptomatic of a bug in the calling code. If so, silently accepting null input might entail that defects go undetected. Thus, I tend to favour throwing exceptions on null input.

The bottom line is that there's a fundamental difference between null strings and whitespace strings, just like there's a fundamental difference between null integers and zero integers. It's often dangerous to conflate the two.

Regarding the bracing style, I'm well aware of the argument that omitting the braces can be dangerous. See e.g. the discussion on page 749 in Code Complete.

Impressed by such arguments, I used to insist on always including curly braces even for one-liners, until one day, someone (I believe it was Robert C. Martin) pointed out that these kinds of potential bugs are easily detected by unit tests. Since I routinely have test coverage of my code, I consider the extra braces redundant safety. If there was no cost to having them, I'd leave them, but in practice, they take up space.

Unit Testing methods with the same implementation

How do you comprehensibly unit test two methods that are supposed to have the same behaviour?

Occasionally, you may find yourself in situations where you need to have two (or more) public methods with the same behaviour. How do you properly cover them with unit tests?

The obvious answer is to copy and paste all the tests, but that leads to test duplication. People with a superficial knowledge of DAMP (Descriptive And Meaningful Phrases) would argue that test duplication isn't a problem, but it is, simply because it's more code that you need to maintain.

Another approach may be to simply ignore one of those methods, effectively not testing it, but if you're writing Wildlife Software, you have to have mechanisms in place that can protect you from introducing breaking changes.

This article outlines one approach you can use if your unit testing framework supports Parameterized Tests.

Example problem #

Recently, I was working on a class with a complex AppendAsync method:

    public Task AppendAsync(T @event)
    {
        // Lots of stuff going on here...
    }

To incrementally define the behaviour of that AppendAsync method, I had used Test-Driven Development to implement it, ending with 13 test methods. None of those test methods were simple one-liners; they ranged from 3 to 18 statements, and averaged 8 statements per test.

After having fully implemented the AppendAsync method, I wanted to make the containing class implement IObserver<T>, with the OnNext method implemented like this:

    public void OnNext(T value)
    {
        this.AppendAsync(value).Wait();
    }

That was my plan, but how could I provide appropriate coverage with unit tests of that OnNext implementation, given that I didn't want to copy and paste those 13 test methods?

Parameterized Tests with varied behaviour #

In this code base, I was already using xUnit.net with its nice support for Parameterized Tests; in fact, I was using AutoFixture.Xunit with attribute-based test input, so my tests looked like this:

    [Theory, AutoAtomData]
    public void AppendAsyncFirstEventWritesPageBeforeIndex(
        [Frozen(As = typeof(ITypeResolver))]TestEventTypeResolver dummyResolver,
        [Frozen(As = typeof(IContentSerializer))]XmlContentSerializer dummySerializer,
        [Frozen(As = typeof(IAtomEventStorage))]SpyAtomEventStore spyStore,
        AtomEventObserver<XmlAttributedTestEventX> sut,
        XmlAttributedTestEventX @event)
    {
        sut.AppendAsync(@event).Wait();

        var feed = Assert.IsAssignableFrom<AtomFeed>(
            spyStore.ObservedArguments.Last());
        Assert.Equal(sut.Id, feed.Id);
    }

This is the sort of test I needed to duplicate, but instead of calling sut.AppendAsync(@event).Wait(); I needed to call sut.OnNext(@event);. The only variation in the test should be in the exercise SUT phase of the test. How can you do that, while maintaining the terseness of using attributes for defining test cases? The problem with using attributes is that you can only supply primitive values as input.

First, I briefly experimented with applying the Template Method pattern, and while I got it working, I didn't like having to rely on inheritance. Instead, I devised this little, test-specific type hierarchy:

    public interface IAtomEventWriter<T>
    {
        void WriteTo(AtomEventObserver<T> observer, T @event);
    }

    public enum AtomEventWriteUsage
    {
        AppendAsync = 0,
        OnNext
    }

    public class AppendAsyncAtomEventWriter<T> : IAtomEventWriter<T>
    {
        public void WriteTo(AtomEventObserver<T> observer, T @event)
        {
            observer.AppendAsync(@event).Wait();
        }
    }

    public class OnNextAtomEventWriter<T> : IAtomEventWriter<T>
    {
        public void WriteTo(AtomEventObserver<T> observer, T @event)
        {
            observer.OnNext(@event);
        }
    }

    public class AtomEventWriterFactory<T>
    {
        public IAtomEventWriter<T> Create(AtomEventWriteUsage use)
        {
            switch (use)
            {
                case AtomEventWriteUsage.AppendAsync:
                    return new AppendAsyncAtomEventWriter<T>();
                case AtomEventWriteUsage.OnNext:
                    return new OnNextAtomEventWriter<T>();
                default:
                    // The first argument is the parameter name; the second is the message.
                    throw new ArgumentOutOfRangeException("use", "Unexpected value.");
            }
        }
    }

This is test-specific code that I added to my unit tests, so there's no test-induced damage here. These types are defined in the unit test library, and are not available in the SUT library at all.

Notice that I defined an interface to abstract how to use the SUT for this particular test purpose. There are two small classes implementing that interface: one using AppendAsync, and one using OnNext. However, this small polymorphic type hierarchy can't be supplied from attributes, so I also added an enum, and a concrete factory to translate from the enum to the writer interface.
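The restriction comes from the C# language itself: attribute arguments must be constant expressions, typeof expressions, or one-dimensional arrays of those. An enum member qualifies, whereas a newly created object doesn't. Here's a minimal, framework-free sketch of that rule; WriteUsage, UsageCaseAttribute, and Demo are hypothetical names that have nothing to do with xUnit.net or AutoFixture.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Hypothetical enum standing in for AtomEventWriteUsage.
public enum WriteUsage { AppendAsync = 0, OnNext }

[AttributeUsage(AttributeTargets.Method, AllowMultiple = true)]
public sealed class UsageCaseAttribute : Attribute
{
    public UsageCaseAttribute(WriteUsage usage)
    {
        Usage = usage;
    }

    public WriteUsage Usage { get; }
}

public class Demo
{
    // Enum members are constants, so they are legal attribute arguments.
    // An attribute taking a writer instance, used as
    // [WriterCase(new OnNextAtomEventWriter<T>())], would not compile,
    // because an object creation expression is not a constant.
    [UsageCase(WriteUsage.AppendAsync)]
    [UsageCase(WriteUsage.OnNext)]
    public void Test() { }

    // Reads the declared usages back via reflection, sorted for a stable order.
    public static WriteUsage[] DeclaredUsages()
    {
        return typeof(Demo)
            .GetMethod("Test")
            .GetCustomAttributes<UsageCaseAttribute>()
            .Select(a => a.Usage)
            .OrderBy(u => u)
            .ToArray();
    }
}
```

A test framework can read such enum values from the attributes and hand them to the test method, which is where a factory can translate them into real objects.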

This test-specific type system enabled me to refactor my unit tests to something like this:

    [Theory]
    [InlineAutoAtomData(AtomEventWriteUsage.AppendAsync)]
    [InlineAutoAtomData(AtomEventWriteUsage.OnNext)]
    public void WriteFirstEventWritesPageBeforeIndex(
        AtomEventWriteUsage usage,
        [Frozen(As = typeof(ITypeResolver))]TestEventTypeResolver dummyResolver,
        [Frozen(As = typeof(IContentSerializer))]XmlContentSerializer dummySerializer,
        [Frozen(As = typeof(IAtomEventStorage))]SpyAtomEventStore spyStore,
        AtomEventWriterFactory<XmlAttributedTestEventX> writerFactory,
        AtomEventObserver<XmlAttributedTestEventX> sut,
        XmlAttributedTestEventX @event)
    {
        writerFactory.Create(usage).WriteTo(sut, @event);

        var feed = Assert.IsAssignableFrom<AtomFeed>(
            spyStore.ObservedArguments.Last());
        Assert.Equal(sut.Id, feed.Id);
    }

Notice that this is the same test case as before, but because of the two occurrences of the [InlineAutoAtomData] attribute, the test method is executed twice: once with AppendAsync, and once with OnNext.

This gave me coverage of both methods, and protection against breaking changes to either of them, without causing any test-induced damage.

The entire source code in this article is available here. The commit just before the refactoring is this one, and the commit with the completed refactoring is this one.

Comments

Ricardo, thank you for writing. xUnit.net's equivalent to [TestCaseSource] is to combine the [Theory] attribute with one of the built-in [PropertyData], [ClassData], etc. attributes. However, in this particular case, there's a couple of reasons that I didn't find those attractive:

- When supplying test data in this way, you can write any code you'd like, but you have to supply values for all the parameters for all the test cases. In the above example, I wanted to vary the System Under Test (SUT), while I didn't care about varying any of the other parameters. The above approach enabled me to do so in a fairly DRY manner.

- In general, I don't like using [PropertyData], [ClassData] (or NUnit's [TestCaseSource]) unless I truly have a set of reusable test cases. Having a member or type encapsulating a set of test cases implies that these test cases are reusable - but they rarely are.

That's an interesting take on test reuse. Exude also reminds me of F#'s Expecto. I'd be curious what you think of a few other reuse approaches.

First, I've found Expecto's composable test lists make reuse quite intuitive.

Second, some dev friends of mine have been contemplating test reuse.

The root idea came from a friend thinking about the fragile test problem. He concluded that tests should be decoupled from the system. Effectively, the test creates its own anti-corruption layer, which allows tests to stay clean even if the system is messy. This pushes tests to reflect requirements over implementations, and requirements are much more stable. Initially skeptical, I've found significant value in the approach. Systems with role-based interfaces (e.g. ports and adapters) can write one core test suite verifying behaviors for all implementations of that interface.

I've been using this paradigm to map test reuse back to C#. The test list is a class with facts/theories and a constructor that takes the test list's anti-corruption abstraction as a constructor argument. Then I can make implementation-specific tests by inheriting the list and passing an implementation. The same could be done against a role interface instead of a test-specific abstraction if desired.

Taking it even further, I've been wondering if such tests could practically act as acceptance tests between teams as a lower cost alternative to gherkin.

That ended up long. Any depth of feedback would be appreciated.

Spencer, thank you for writing. Tests as first-class values are certainly much to be preferred. That's how I write parametrised tests in Haskell, because HUnit models tests as values. I'm aware that Expecto does the same.

I also agree with you that decoupling tests from implementation details is beneficial. People tend to think of this as only possible with Acceptance Tests or BDD-style tests, but I find that it's also possible in the small. In general, I've found property-based testing to be useful as a technique to add some distance between the test and the system under test.

The idea about using Acceptance Tests between teams sounds like a technique that Ian Robinson calls Consumer-Driven Contracts.

Thank you for your feedback. I'm glad you've also found value in tests as values and decoupling tests from implementations.

Consumer-Driven Contracts does seem to share a lot in common with my idea for using implementation-decoupled tests as acceptance tests. Ian's idea starts from thinking about the provider and how it can manage message compatibility with consumers. Mine started from the consumer and testing multiple implementations of a port abstraction. Then I realized that those tests could also be reused by external consumers (extension contributors, upstream teams).

I think the test-focused approach is able to partially mitigate a few of his concerns. First, the overall added work may be less because the tests already need to be defined for the consumer whether or not there is a complex externally-owned provider. Second, package systems have reached general adoption since his post and greatly improve coordination of shared code. Unit tests [can already be shared with a package manager](https://spencerfarley.com/2021/11/05/acceptance-test-logisitcs/), though there is definitely room for improvement.

I'm a smidge sad because I thought I'd had an original idea, but I'm very glad to discover another author to follow. Thanks for connecting me!

AtomEventStore

Announcing AtomEventStore: an open source, server-less .NET Event Store.

Grean has kindly open-sourced a library we've used internally for several projects: AtomEventStore. It's pretty nice, if I may say so.

It comes with quite a bit of documentation, and is also available on NuGet.

The original inspiration came from Yves Reynhout's article Your EventStream is a linked list.

Faking a continuously Polling Consumer with scheduled tasks

How to (almost) continuously poll a queue when you can only run a task at discrete intervals.

In a previous article, I described how you set up Azure Web Jobs to run only a single instance of a background process. However, the shortest time interval you can currently configure is to run a scheduled task every minute. If you want to use this trick to set up a Polling Consumer, it may seem limiting that you'll have to wait up to a minute before the scheduled task can pull new messages off the queue.

The problem #

The problem is this: a Web Job is a command-line executable you can schedule. The most frequent schedule you can set up is once per minute. Thus, once per minute, the executable can start and query a queue in order to see if there are new messages since it last looked.

If there are new messages, it can pull these messages off the queue and handle them one by one, until the queue is empty.

If there are no new messages, the executable exits. It will then be up to a minute before it will run again. This means that if a message arrives just after the executable exits, it will sit in the queue up to a minute before being handled.

At least, that's the naive implementation.

A more sophisticated approach #

Instead of exiting immediately, what if the executable was to wait for a small period, and then check again? This means that you'd be able to increase the polling frequency to run much faster than once per minute.

If the executable also keeps track of when it started, it can gracefully exit slightly before the minute is up, enabling the task scheduler to start a new process soon after.

Example: a recursive F# implementation #

You can implement the above strategy in any language. Here's an F# version. The example code below is the main 'loop' of the program, but a few things have been put into place before that. Most of these are configuration values pulled from the configuration system:

- timeout is a TimeSpan value that specifies for how long the executable should run before exiting. This value comes from the configuration system. Since the minimum schedule frequency for Azure Web Jobs is 1 minute, it makes sense to set this value to 1 minute.

- stopBefore is essentially DateTimeOffset.Now + timeout.

- estimatedDuration is a TimeSpan containing your (conservative) estimate of how long it takes to handle a single message. It's only used if there are no messages to be handled in the queue, as the algorithm then has no statistics about the average execution time for each message. This value comes from the configuration system. In a recent system, I just arbitrarily set it to 2 seconds.

- toleranceFactor is a decimal used as a multiplier in order to produce a margin for when the executable should exit, so that it can exit before stopBefore, instead of idling for too long. This value comes from the configuration system. You'll have to experiment a bit with this value. When I originally deployed the code below, I had it set to 2, but it seems like the Azure team changed how Web Jobs are initialized, so currently I have it set to 5.

- idleTime is a TimeSpan that controls how long the executable should sit idly waiting, if there are no messages in the queue. This value comes from the configuration system. In a recent system, I set it to 5 seconds, which means that you may experience up to 5 seconds of delay from when a message arrives on an empty queue until it's picked up and handled.

- dequeue is the function that actually pulls a message off the queue. It has the signature unit -> (unit -> unit) option. That looks pretty weird, but it means that it's a function that takes no input arguments. If there's a message in the queue, it returns a handler function, which is used to handle the message; otherwise, it returns None.
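If you want to try out the listing that follows, here's a minimal sketch of how the values listed above could be bound. The literals and the in-memory queue are assumptions made for illustration; in the system described here, the values come from the configuration system, and dequeue talks to a real queue.

```fsharp
open System
open System.Collections.Generic

// Hard-coded stand-ins for values that would normally come from configuration.
let timeout = TimeSpan.FromMinutes 1.0
let stopBefore = DateTimeOffset.Now + timeout
let estimatedDuration = TimeSpan.FromSeconds 2.0
let toleranceFactor = 2M
let idleTime = TimeSpan.FromSeconds 5.0

// Hypothetical in-memory queue; a real system would poll a message queue here.
let queue = Queue<string>()

// unit -> (unit -> unit) option
// Returns a handler for the next message, or None if the queue is empty.
let dequeue () =
    if queue.Count > 0 then
        let msg = queue.Dequeue()
        Some (fun () -> printfn "Handled %s" msg)
    else
        None
```

Returning a handler function instead of the message itself means that the polling loop doesn't need to know anything about the message type; it only has to invoke whatever it gets back.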

    let rec handleUntilTimedOut (durations : TimeSpan list) =
        let avgDuration =
            match durations with
            | [] -> estimatedDuration
            | _ ->
                (durations |> List.sumBy (fun x -> x.Ticks)) /
                    (int64 durations.Length)
                |> TimeSpan.FromTicks
        let margin =
            (decimal avgDuration.Ticks) * toleranceFactor
            |> int64
            |> TimeSpan.FromTicks

        if DateTimeOffset.Now + margin < stopBefore then
            match dequeue() with
            | Some handle ->
                let before = DateTimeOffset.Now
                handle()
                let after = DateTimeOffset.Now
                let duration = after - before
                let newDurations = duration :: durations
                handleUntilTimedOut newDurations
            | _ ->
                if DateTimeOffset.Now + idleTime < stopBefore then
                    Async.Sleep (int idleTime.TotalMilliseconds)
                    |> Async.RunSynchronously
                    handleUntilTimedOut durations

    handleUntilTimedOut []

As you can see, the first thing the handleUntilTimedOut function does is to attempt to calculate the average duration of handling a message. Calculating the average is easy enough if you have at least one observation, but if you have no observations at all, you can't calculate the average. This is the reason the algorithm needs the estimatedDuration to get started.

Based on the average (or estimated) duration, the algorithm next calculates a safety margin. This margin is intended to give the executable a chance to exit in time: if it can see that it's getting too close to the timeout, it'll exit instead of attempting to handle another message, since handling another message may take so much time that it may push it over the timeout limit.
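As a worked example of that arithmetic, assume two observed durations of 1 and 3 seconds and a tolerance factor of 5. The average is 2 seconds, so the margin comes out at 10 seconds, and the executable stops dequeuing once fewer than 10 seconds remain. The helper names average and marginFor below are introduced only for this sketch; they aren't part of the original program.

```fsharp
open System

// Average of the observed durations, falling back to an estimate
// when there are no observations yet.
let average (estimate : TimeSpan) (durations : TimeSpan list) =
    match durations with
    | [] -> estimate
    | _ ->
        (durations |> List.sumBy (fun x -> x.Ticks)) / (int64 durations.Length)
        |> TimeSpan.FromTicks

// Scale the average by the tolerance factor to get the safety margin.
let marginFor (toleranceFactor : decimal) (avgDuration : TimeSpan) =
    (decimal avgDuration.Ticks) * toleranceFactor
    |> int64
    |> TimeSpan.FromTicks

let observed = [ TimeSpan.FromSeconds 1.0; TimeSpan.FromSeconds 3.0 ]
let margin = observed |> average (TimeSpan.FromSeconds 2.0) |> marginFor 5M
// margin is 10 seconds: (1 s + 3 s) / 2 = 2 s, multiplied by 5
```

With a one-minute timeout, such a margin would leave roughly 50 seconds in which new messages can still be picked up.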

If it decides that, based on the margin, it still has time to handle a message, it'll attempt to dequeue a message.

If there's a message, it'll measure the time it takes to handle the message, prepend the measured duration to the list of already observed durations, and recursively call itself.

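If there's no message in the queue, the algorithm will idle for the configured period, provided that doing so doesn't take it past stopBefore, and then call itself recursively. If there isn't enough time left to idle, it simply returns, and the executable can exit.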

Finally, the handleUntilTimedOut [] expression kicks off the polling cycle with an empty list of observed durations.
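To watch the loop run without waiting a full minute, here's a self-contained sketch of the same algorithm with its inputs passed as arguments instead of being captured from the enclosing scope. That change, the name pollUntil, the in-memory queue, and the short time values are all assumptions made for this example; they're not how the original program is wired up.

```fsharp
open System
open System.Collections.Generic

// Same steps as handleUntilTimedOut, but with explicit parameters
// (a change made only for this sketch).
let rec pollUntil
    (stopBefore : DateTimeOffset)
    (estimatedDuration : TimeSpan)
    (toleranceFactor : decimal)
    (idleTime : TimeSpan)
    (dequeue : unit -> (unit -> unit) option)
    (durations : TimeSpan list) : unit =
    let avgDuration =
        match durations with
        | [] -> estimatedDuration
        | _ ->
            (durations |> List.sumBy (fun x -> x.Ticks)) / (int64 durations.Length)
            |> TimeSpan.FromTicks
    let margin =
        (decimal avgDuration.Ticks) * toleranceFactor
        |> int64
        |> TimeSpan.FromTicks

    if DateTimeOffset.Now + margin < stopBefore then
        match dequeue () with
        | Some handle ->
            // Time the handler and remember the observation.
            let before = DateTimeOffset.Now
            handle ()
            let duration = DateTimeOffset.Now - before
            pollUntil stopBefore estimatedDuration toleranceFactor idleTime dequeue (duration :: durations)
        | None ->
            // Only idle if there's still time to do so before the deadline.
            if DateTimeOffset.Now + idleTime < stopBefore then
                Async.Sleep (int idleTime.TotalMilliseconds)
                |> Async.RunSynchronously
                pollUntil stopBefore estimatedDuration toleranceFactor idleTime dequeue durations

// Hypothetical in-memory queue holding three messages.
let queue = Queue<string>([ "a"; "b"; "c" ])
let handled = ResizeArray<string>()
let dequeue () =
    if queue.Count > 0 then
        let msg = queue.Dequeue()
        Some (fun () -> handled.Add msg)
    else
        None

// Run for about one second, idling 100 ms at a time once the queue is empty.
pollUntil
    (DateTimeOffset.Now + TimeSpan.FromSeconds 1.0)
    (TimeSpan.FromMilliseconds 10.0)
    2M
    (TimeSpan.FromMilliseconds 100.0)
    dequeue
    []
```

When this returns, all three messages have been handled in order, and the loop has spent the rest of its second idling in 100 millisecond steps before giving up shortly before the deadline.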

Observations #

When appropriately configured, a typical Azure Web Job log sequence looks like this:

- 1 minute ago (55 seconds running time)

- 2 minutes ago (55 seconds running time)

- 3 minutes ago (56 seconds running time)

- 4 minutes ago (55 seconds running time)

- 5 minutes ago (56 seconds running time)

- 6 minutes ago (58 seconds running time)

- 7 minutes ago (55 seconds running time)

- 8 minutes ago (55 seconds running time)

- 9 minutes ago (58 seconds running time)

- 10 minutes ago (56 seconds running time)

Summary #

If you have a situation where you need to run a scheduled job (as opposed to a continuously running service or daemon), but you want it to behave like it was a continuously running service, you can make it wait until just before a new scheduled job is about to start, and then exit. This is an approach you can use anywhere you find yourself in that situation - not only on Azure.

Update 2015 August 11: See my article about Type Driven Development for an example of how to approach this problem in a well-designed, systematic, iterative fashion.

Comments

Indeed, this technique really shines when the task runs at discrete intervals and the actual job takes less time than each interval.

For example, if a job takes 20 seconds to complete, then inside a single task of 1 minute it's possible to run 2 jobs, using the technique described in this article. – This is very nice!

FWIW, this talk and this post seem to be related with the technique shown in this article.

OTOH, in the context of Windows Services, or similar, it's possible to run a task at even smaller intervals than 1 minute.

In this case, the trick is to measure how much time it takes to run the actual job. – Then, schedule the task to run in any period less than the measured time.

As an example, if a job takes 1 second to complete, schedule the task to run every 0.5 seconds on a background thread while blocking the foreground thread:

open System.Threading

open System.Threading.Tasks

module Scheduler =

let run job (period : TimeSpan) =

// Start

let cancellationTokenSource =

new CancellationTokenSource()

(new Task(fun () ->

let token = cancellationTokenSource.Token