ploeh blog danish software design

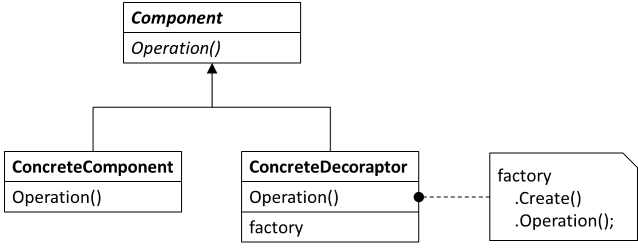

Decoraptor

A Dependency Injection design pattern

This article describes a Dependency Injection design pattern called Decoraptor. It can be used as a solution to the problem:

How can you address lifetime mismatches without introducing a Leaky Abstraction?

By adding a combination of a Decorator and Adapter between the Client and its Service.

Sometimes, when a Client wishes to talk to a Service (Component in the figure; in practice often an interface), the Client may have a long lifetime, whereas, because of technical constraints in the implementation, the Service must only have a short lifetime. If not addressed, this may lead to a Captive Dependency: the long-lived Client holds onto the Service for too long.

One solution to this is a Decoraptor - a combination of a Decorator and an Adapter. A Decoraptor is a long-lived object that adapts an Abstract Factory, and uses the factory to create a short-lived instance of the interface that it, itself, implements.

How it works #

A Decoraptor is an Adapter that looks almost like a Decorator. It implements an interface (Component in the above figure) by adapting an Abstract Factory. The factory creates other instances of the implemented interface, so the Decoraptor implements each operation defined by the interface by

- Invoking the factory to create a new instance of the same interface

- Delegating the operation to the new instance

While not strictly a Decorator, a Decoraptor is closely related because it ultimately delegates all implementation to another implementation of the interface it, itself, implements. The only purpose of a Decoraptor is to match two otherwise incompatible lifetimes.

When to use it #

While a Decoraptor is a fairly simple piece of infrastructure code, it still adds complexity to a code base, so it should only be used if necessary. The simplest solution to the Captive Dependency problem is to align the Client's lifetime to the Service's lifetime; in other words, make the Client's lifetime as short as the Service's lifetime. This doesn't require a Decoraptor: it only requires you to compose the Client/Service object graph every time you want to create a new instance of the Service.

Another alternative to a Decoraptor is to consider whether it's possible to refactor the Service so that it can have a longer lifetime. Often, the reason why a Service should have a short life span is because it isn't thread-safe. Sometimes, making a Service thread-safe isn't that difficult; in such cases, this may be a better solution (but sometimes, making something thread-safe is extremely difficult, in which case Decoraptor may be a much simpler solution).

Still, there may be cases where creating a new Client every time you want to use it isn't desirable, or even possible. The most common concern is often performance, although in my experience, that's rarely a real problem. More substantial is a concern that sometimes, due to technical constraints, it isn't possible to make the Client shorter-lived. In these cases, a Decoraptor is a good solution.

A Decoraptor is a better alternative to injecting an Abstract Factory directly into the Client, because doing that is a Leaky Abstraction. The Client is programming against an interface, and, according to the Dependency Inversion Principle,

Therefore, it would be a Leaky Abstraction if the Client were to define the interface based on the requirements of a particular implementation of that interface. A Decoraptor is a better alternative, because it enables the Client to define the interface it needs, and the Service to implement the interface as it can, and the Decoraptor's single responsibility is to make those two ends meet."clients [...] own the abstract interfaces"

- Agile Principles, Patterns, and Practices, chapter 11

Implementation details #

Let the Client define the interface it needs, without taking lifetime issues into consideration. Implement the interface in a separate class, again disregarding specific lifetime issues.

Define an Abstract Factory that creates new instances of the interface required by the Client. Create a new class that implements the interface, but takes the factory as a dependency. This is the Decoraptor. In the Decoraptor, implement each method by invoking the factory to create the short-lived instance of the interface, and delegate the method implementation to that instance.

Create a class that implements the Abstract Factory by creating new instances of the short-lived Service.

Inject the factory into the Decoraptor, and inject the Decoraptor into the long-lived Client. The Decoraptor has the same lifetime as the Client, but each Service instance only exists for the duration of a single method call.

Motivating example #

When using Passive Attributes with ASP.NET Web API, the Filter containing all the behaviour must be added to the overall collection of Filters:

var filter = new MeteringFilter(observer); config.Filters.Add(filter);

Due to the way ASP.NET Web API works, this configuration happens during Application_Start, so there's only going to be a single instance of MeteringFilter around. In other work, the MeteringFilter is a long-lived Client; it effectively has Singleton lifetime scope.

MeteringFilter depends on IObserver<MeterRecord> (See the article about Passive Attributes for the full code of MeteringFilter). The observer injected into the MeteringFilter object will have the same lifetime as the MeteringFilter object. The Filter will be used from concurrent threads, and while MeteringFilter itself is thread-safe (it has immutable state), the injected observer may not be.

To be more concrete, this question was recently posted on Stack Overflow:

"The filter I'm implementing has a dependency on a repository, which itself has a dependency on a custom DbContext. [...] I'm not sure how to implement this, while taking advantage of the DI container's lifetime management capabilities (so that a new DbContext will be used per request)."It sounds like the DbContext in question is probably an Entity Framework DbContext, which makes sense: last time I looked, DbContext wasn't thread-safe. Imagine that the IObserver<MeterRecord> implementation looks like this:

public class SqlMeter : IObserver<MeterRecord> { private readonly MeteringContext ctx; public SqlMeter(MeteringContext ctx) { this.ctx = ctx; } public void OnNext(MeterRecord value) { this.ctx.Records.Add(value); this.ctx.SaveChanges(); } public void OnCompleted() { } public void OnError(Exception error) { } }

The SqlMeter class here takes the place of the Service in the more abstract language used above. In the Stack Overflow question, it sounds like there's a Repository between the Client and the DbContext, but I think this example captures the essence of the problem.

This is exactly the issue outlined above. Due to technical constraints, the MeteringFilter must be a Singleton, while (again due to technical constraints) each Observer must be either Transient or scoped to each request.

Refactoring notes #

In order to resolve a problem like the example above, you can introduce a Decoraptor, which sits between the Client (MeteringFilter) and the Service (SqlMeter). The Decoraptor relies on an Abstract Factory to create new instances of SqlMeter:

public interface IFactory<T> { T Create(); }

In this case, the Abstract Factory is a generic interface, but it could also be a non-generic interface that only creates instances of IObserver<MeterRecord>. Some people prefer delegates instead, so would use e.g. Func<IObserver<MeterRecord>>. You could also define an Abstract Base Class instead of an interface, if that's more to your liking.

This particular example also disregards decommissioning concerns. Effectively, the Abstract Factory will create instances of SqlMeter, but since these instances simply go out of scope, the contained MeteringContext isn't being disposed of in a deterministic manner. In a future article, I will remedy this situation.

Example: generic Decoraptor for Observers #

In order to resolve the lifetime mismatch between MeteringFilter and SqlMeter you can introduce a generic Decoraptor:

public class Observeraptor<T> : IObserver<T> { private readonly IFactory<IObserver<T>> factory; public Observeraptor(IFactory<IObserver<T>> factory) { this.factory = factory; } public void OnCompleted() { this.factory.Create().OnCompleted(); } public void OnError(Exception error) { this.factory.Create().OnError(error); } public void OnNext(T value) { this.factory.Create().OnNext(value); } }

Notice that Observeraptor<T> can adapt any IObserver<T> because it depends on the generic IFactory<IObserver<T>> interface. In each method defined by IObserver<T>, it first uses the factory to create an instance of a short-lived IObserver<T>, and then delegates the implementation to that object.

Since MeteringFilter depends on IObserver<MeterRecord>, you also need an implementation of IFactory<IObserver<MeterRecord>> in order to compose the MeteringFilter object graph:

public class SqlMeterFactory : IFactory<IObserver<MeterRecord>> { public IObserver<MeterRecord> Create() { return new SqlMeter(new MeteringContext()); } }

This implementation simply creates a new instance of SqlMeter and MeteringContext every time the Create method is invoked; hardly surprising, I should hope.

You now have all building blocks to correctly compose MeteringFilter in Application_Start:

var factory = new SqlMeterFactory(); var observer = new Observeraptor<MeterRecord>(factory); var filter = new MeteringFilter(observer); config.Filters.Add(filter);

Because SqlMeterFactory creates new instances of SqlMeter every time MeteringFilter invokes IObserver<MeterRecord>.OnNext, the lifetime requirements of the DbContext are satisfied. Moreover, MeteringFilter only depends on IObserver<MeterRecord>, so is perfectly shielded from that implementation detail, so no Leaky Abstraction was introduced.

Known examples #

Questions related to the issue of lifetime mismatches are regularly being asked on Stack Overflow, and I've had a bit of success recommending Decoraptor as a solution, although at that time, I had yet to come up with the name:

- Dependency Injection - new instance required in several of a classes methods

- IOC DI Multi-Threaded Lifecycle Scoping in Background Tasks

CQS versus server generated IDs

How can you both follow Command Query Separation and assign unique IDs to Entities when you save them? This post examines some options.

In my Encapsulation and SOLID Pluralsight course, I explain why Command Query Separation (CQS) is an important component of Encapsulation. When first exposed to CQS, many people start to look for edge cases, so a common question is something like this:

What would you do if you had a service that saves to a database and returns the ID (which is set by the database)? The Save operation is a Command, but if it returns void, how do I get the ID?This is actually an exercise I've given participants when I've given the course as a workshop, so before you read my proposed solutions, consider taking a moment to see if you can come up with a solution yourself.

Typical, CQS-violating design #

The problem is that in many code bases, you occasionally need to save a brand-new Entity to persistent storage. Since the object is an Entity (as defined in Domain-Driven Design), it must have a unique ID. If your system is a concurrent system, you can't just pick a number and expect it to be unique... or can you?

Instead, many programmers rely on their underlying database to add a unique ID to the Entity when it saves it, and then return the ID to the client. Accordingly, they introduce designs like this:

public interface IRepository<T> { int Create(T item); // other members }

The problem with this design, obviously, is that it violates CQS. The Create method ought to be a Command, but it returns a value. From the perspective of Encapsulation, this is problematic, because if we look only at the method signature, we could be tricked into believing that since it returns a value, the Create method is a Query, and therefore has no side-effects - which it clearly has. That makes it difficult to reason about the code.

It's also a leaky abstraction, because most developers or architects implicitly rely on a database implementation to provide the ID. What if an implementation doesn't have the ability to come up with a unique ID? This sounds like a Liskov Substitution Principle violation just waiting to happen!

The easiest solution #

When I wrote "you can't just pick a number and expect it to be unique", I almost gave away the easiest solution to this problem:

public interface IRepository<T> { void Create(Guid id, T item); // other members }

Just change the ID from an integer to a GUID (or UUID, if you prefer). This does enable clients to come up with a unique ID for the Entity - after all, that's the whole point of GUIDs.

Notice how, in this alternative design, the Create method returns void, making it a proper Command. From an encapsulation perspective, this is important because it enables you to immediately identify that this is a method with side-effects - just by looking at the method signature.

But wait: what if you do need the ID to be an integer? That's not a problem; there's also a solution for that.

A solution with integer IDs #

You may not like the easy solution above, but it is a good solution that fits in a wide variety in situations. Still, there can be valid reasons that using a GUID isn't an acceptable solution:

- Some databases (e.g. SQL Server) don't particularly like if you use GUIDs as table keys. You can, but integer keys often perform better, and they are also shorter.

- Sometimes, the Entity ID isn't only for internal use. Once, I worked on a customer support ticket system, and my suggestion of using a GUID as an ID wasn't met with enthusiasm. When a customer calls on the phone about an existing support case, it isn't reasonable to ask him or her to read an entire GUID aloud; it's simply too error-prone.

Here's my modified solution:

public interface IRepository<T> { void Create(Guid id, T item); int GetHumanReadableId(Guid id); // other members }

Notice that the Create method is the same as before. The client must still supply a GUID for the Entity, and that GUID may or may not be the 'real' ID of the Entity. However, in addition to the GUID, the system also associates a (human-readable) integer ID with the Entity. Which one is the 'real' ID isn't particularly important; the important part is that there's another method you can use to get the integer ID associated with the GUID: the GetHumanReadableId method.

The Create method is a Command, which is only proper, because creating (or saving) the Entity mutates the state of the system. The GetHumanReadableId method, on the other hand, is a Query: you can call it as many times as you like: it doesn't change the state of the system.

If you want to store your Entities in a database, you can still do that. When you save the Entity, you save it with the GUID, but you can also let the database engine assign an integer ID; it might even be a monotonically increasing ID (1, 2, 3, 4, etc.). At the same time, you could have a secondary index on the GUID.

When a client invokes the GetHumanReadableId method, the database can use the secondary index on the GUID to quickly find the Entity and return its integer ID.

Performance #

"But," you're likely to say, "I don't like that! It's bad for performance!"

Perhaps. My first impulse is to quote Donald Knuth at you, but in the end, I may have to yield that my proposed design may result in two out-of-process calls instead of one. Still, I never promised that my solution wouldn't involve a trade-off. Most software design decisions involve trade-offs, and so does this one. You gain better encapsulation for potentially worse performance.

Still, the performance drawback may not involve problems as such. First, while having to make two round trips to the database may perform worse than a single one, it may still be fast enough. Second, even if you need to know the (human-readable) integer ID, you may not need to know it when you create it. Sometimes you can get away with saving the Entity in one action, and then you may only need to know what the ID is seconds or minutes later. In this case, separating reads and writes may actually turn out to be an advantage.

Must all code be designed like this? #

Even if you disregard your concerns about performance, you may find this design overly complex, and more difficult to use. Do I really recommend that all code should be designed like that?

No, I don't recommend that all code should be designed according to the CQS principle. As Martin Fowler points out, in Object-Oriented Software Construction, Bertrand Meyer explains how to design an API for a Stack with CQS. It involves a Pop Command, and a Top Query: in order to use it, you would first have to invoke the Top Query to get the item on the top of the stack, and then subsequently invoke the Pop Command to modify the state of the stack.

One of the problems with such a design is that it isn't thread-safe. It's also more unwieldy to use than e.g. the standard Stack<T>.Pop method.

My point with all of this isn't to dictate to you that you should always follow CQS. The point is that you can always come up with a design that does.

In my career, I've met many programmers who write a poorly designed class or interface, and then put a little apology on top of it:

public interface IRepository<T> { // CQS is impossible here, because we need the ID int Create(T item); // other members }

Many times, we don't know of a better way to do things, but it doesn't mean that such a way doesn't exist; we just haven't learned about it yet. In this article, I've shown you how to solve a seemingly impossible design challenge with CQS.

Knowing that even a design problem such as this can be solved with CQS should put you in a better position to make an informed decision. You may still decide to violate CQS and define a Create method that returns an integer, but if you've done that, it's because you've weighed the alternatives; not because you thought it impossible.

Summary #

It's possible to apply CQS to most problems. As always, there are trade-offs involved, but knowing how to apply CQS, even if, at first glance, it seems impossible, is an important design skill.

Personally, I tend to adhere closely to CQS when I do Object-Oriented Design, but I also, occasionally, decide to break that principle. When I do, I do so realising that I'm making a trade-off, and I don't do it lightly.

Comments

POST /api/users

This should return the Location of the created url. Would you suggest, the location be the status of the create user command.

GET /api/users/createtoken/abc123

UI Developers do not like this pattern. It creates extra work for them.

(Bear in mind I'm pretty ignorant when it comes to CQRS but I figured that it would get around not being able to return anything directly from a command)

But then again I suppose you'd still need the GetHumanReadableId method for later on...

Tony, thank you for writing. What you suggest is one way to model it. Essentially, if a client starts with:

POST /api/users

One option for a response is, as you suggest, something like:

HTTP/1.1 202 Accepted Location: /api/status/1234

A client can poll on that URL to get the status of the task. When the resource is ready, it will include a link to the user resource that was finally created. This is essentially a workflow just like the RESTbucks example in REST in Practice. You'll also see an example of this approach in my Pluralsight course on Functional Architecture, and yes, this makes it more complicated to implement the client. One example is the publicly available sample client code for that course.

While it puts an extra burden on the client developer, it's a very robust implementation because it's based on asynchronous workflows, which not only makes it horizontally scalable, but also makes it much easier to implement robust occasionally connected clients.

However, this isn't the only RESTful option. Here's an alternative. The request is the same as before:

POST /api/users

But now, the response is instead:

HTTP/1.1 201 Created

Location: /api/users/8765

{ "name" : "Mark Seemann" }

Notice that this immediately creates the resource, as well as returns a representation of it in the response (consider the JSON in the example a placeholder). This should be easier on the poor UI Developers :)

In any case, this discussion is entirely orthogonal to CQS, because at the boundaries, applications aren't Object-Oriented - thus, CQS doesn't apply to REST API design. Both POST, PUT, and DELETE verbs imply the intention to modify a resource's state, yet the HTTP specification describes how such requests should return proper responses. These verbs violate CQS because they both involve state mutation and returning a response.

Steve, thank you for writing. Yes, raising an event from a Command is definitely valid, since a Command is all about side-effects, and an event is also a side effect. The corollary of this is that you can't raise events from Queries if you want to adhere to CQS. In my Pluralsight course on encapsulation, I briefly touch on this subject in the Encapsulation module (module 2), in the clip called Queries, at 1:52.

(BTW, in this article, and the course as well, I'm discussing CQS (Command Query Separation), not CQRS (Command Query Responsibility Segregation). Although these ideas are related, it's important to realise that they aren't synonymous. CQS dates back to the 1980s, while CQRS is a more recent invention, from the 2000s - the earliest dated publication that's easy to find is from 2010, but it discusses CQRS as though people already know what it is, so the idea must be a bit older than that.)

Mark, thank you for this post and sorry for my late response, but I've got some questions about it.

First of all, I would not have designed the repository the way you showed at the beginning of your post - instead I would have opted for something like this:

public interface IRepository<T> { T CreateEntity(); void AttachEntity(T entity); }

The CreateEntity method behaves like a factory in that it creates a new instance of the specified entity without an ID assigned (the entity is also not attached to the repository). The AttachEntity method takes an existing entity and assigns a valid ID to it. After a call to this method, the client can get the new ID by using the corresponding get method on the entity. This way the repository acts like a service facade as it provides creational, querying and persistance services to the client for a single type of entities.

What do you think about this? From my point of view, the AttachEntity method is clearly a command, but what about the CreateEntity method? I would say it is a query because it does not change the state of the underlying repository when it is called - but is this really the case? If not, could we say that factories always violate CQS? What about a CreateEntity implementation that attaches the newly created entity to the repository?

Kenny, thank you for writing. FWIW, I wouldn't have designed a Repository like I show in the beginning of the article either, but I've seen lots of Repositories designed like that.

Your proposed solution looks like it respects CQS as well, so that's another alternative to my suggested solutions. Based on your suggested method signatures, at least, CreateEntity looks like a Query. If a factory only creates a new object and returns it, it doesn't change the observable state of the system (no class fields mutated, no files were written to disk, no bytes were sent over the network, etc.).

If, on the other hand, the CreateEntity method also attaches the newly created entity to the repository, then it would violate CQS, because that would change the observable state of the system.

Your design isn't too far from the one I suggest in this very article - I just don't include the CreateEntity method in my interface definition, because I consider the creation of an Entity (in memory) to be a separate concern from being able to persist it.

Then, if you omit the CreateEntity method, you'll see that your AttachEntity method plays the same role is my Create method; the only difference is that I keep the ID separate from the Entity, while you have the ID as a writable property on the Entity. Instead of invoking a separate Query (GetHumanReadableId) to figure out what the server-generated ID is, you invoke a Query attached to the Entity (its ID property).

The reason I didn't chose this design is because it messes with the invariants of the Entity. If you consider an Entity as defined in Domain-Driven Design, an Entity is an object that has a long-lasting identity. This is most often implemented by giving the Entity an Id property; I do that too, but make sure that the id is mandatory by requiring clients to supply it through the constructor. As I explain in my Pluralsight course about encapsulation, a very important part about encapsulation is to make sure that it's impossible (or at least difficult) to put an object into an invalid state. If you allow an Entity to be put into a state where it has no ID, I would consider that an invalid state.

Thank you for your comprehensive answer, Mark. I also like the idea of injecting the ID of an entity directly into its constructor - but I haven't actually applied this 'pattern' in my projects yet. Although it's a bit off-topic, I have to ask: the ID injection would result in something like this:

public abstract class Entity<T> { private readonly T _id; protected Entity(T id) { _id = id; } public T ID { get { return _id; } } public override int GetHashCode() { return _id.GetHashCode(); } public override bool Equals(object other) { var otherEntity = other as Entity; if (otherEntity == null) return false; return _id == otherEntity._id; } }

I know it is not perfect, so please think about it as a template.

Anyway, I prefer this design but often this results in unnecessarily complicated code, e.g. when the user is able to create an entity object temporarily in a dialog and he or she can choose whether to add it or discard of it at the end of the dialog. In this case I would use a DTO / View Model etc. that only exists because I cannot assign a valid ID to an entity yet.

Do you have a pattern that circumvents this problem? E.g. clone an existing entity with an temporary / invalid ID and assign a valid one to it (which I would find very reasonable)?

Kenny, thank you for writing. Yes, you could model it like that Entity<T> class, although there are various different concerns mixed here. Whether or not you want to override Equals and GetHashCode is independent of the discussion about protecting invariants. This may already be clear to you, but I just wanted to get that out of the way.

The best answer to your question, then, is to make it simpler, if at all possible. The first question you should ask yourself is: can the ID really be any type? Can you have an Entity<object>? Entity<Random>? Entity<UriBuilder>? That seems strange to me, and probably not what you had in mind.

The simplest solution to the problem you pose is simply to settle on GUIDs as IDs. That would make an Entity look like this:

public class Foo

{

private readonly Guid id;

public Foo(Guid id)

{

if (id == Guid.Empty)

throw new ArgumentException("Empty GUIDs not allowed.", "id");

this.id = id;

}

public Guid Id

{

get { return this.id; }

}

// other members go here...

}

Obviously, this makes the simplification that the ID is a GUID, which makes it easy to create a 'temporary' instance if you need to do that. You'll also note that it protects its invariants by guarding against the empty GUID.

(Although not particularly relevant for this discussion, you'll also notice that I made this a concrete class. In general, I favour composition over inheritance, and I see no reason to introduce an abstract base class only in order to take and expose an ID - such code is unlikely to change much. There's no behaviour here because I also don't override Equals or GetHashCode, because I've come to realise that doing so with the implementation you suggest is counter-productive... but that, again, is another discussion.)

Then what if you can't use a GUID. Well, you could still resort to the solution that I outline above, and still use a GUID, and then have another Query that can give you an integer that corresponds to the GUID.

However, ultimately, this isn't how I tend to architect my systems these days. As Greg Young has pointed out in a white paper draft (sadly) no longer available on the internet, you can't really expect to make Distributed Domain-Driven Design work with the 'classic' n-layer architecture. However, once you separate reads and writes (AKA CQRS) you no longer have the sort of problem you ask about, because your write model should be modelling commands and events, instead of Entities. FWIW, in my Functional Architecture with F# Pluralsight course, I also touch on some of those concepts.

It's a great topic. There's another approach used for this problem in some ORMs, particularly in NHibernate: Hi/Lo algorithm.

It is possible to preload a batch of IDs and distribute them among new objects. I've written a blog post about it: link

In the proposed solution that uses both integer IDs and Guids, would you expect child tables to use GUIDs as foreign keys also?

If the aggregate is passed in its entirety into a stored procedure, then scope_identity() can come into play. When a merge/replication scenario is not of concern, then you would be able to make use of integer IDs everywhere internally.

Irfan, thank you for writing. To the degree you choose to use GUIDs as described in this article, it's in order to obey the CQS principle. Thus, it's entirely a concern of the client(s). How you relate tables inside of a relational database is an implementation detail.

If by 'aggregate' you mean an Aggregate Root as described in DDD, then the root is the root of a tree. Translated to typical relational database design, it'd be a row in a table. By definition, any children of such a root don't have individual identity, so I don't see how it'd make sense to associate a GUID with the children; you'd never query them individually, because if you do, then the Aggregate Root is no longer an Aggregate Root.

How you associate the children with the root is an implementation detail. Again, in typical, normalised database design, you'd typically find children via foreign key relationship. Since this is an implementation detail, you can use any key type you'd like for that purpose; most likely, that'll be some sort of integer.

Hi Mark. I noticed in a comment to one of Kenny's questions you said you would not design a repository the way it was originally designed in this example. How would you design a repository layer? I've always found this type of repository design to not quite feel right but see it all over the place.

Thanks,

Ben

Ben, thank you for writing. To be clear, the Repository pattern is one of the most misunderstood and misrepresented design patterns that I can think of. It was originally described in Martin Fowler's book Patterns of Enterprise Application Architecture, but if you look up the pattern description there, it has nothing to do with how people normally use it. It's a prime example of design patterns being ambiguous and open not only to interpretation, but to semantic shift.

Regardless of the interpretation, I don't use the Repository design pattern. Following the Dependency Inversion Principle, "clients […] own the abstract interfaces" (APPP, chapter 11), so if I need to, say, read some data from a database, I define a data reader interface in the client library. Following other SOLID principles as well, I'd typically end up with most interfaces having only a single member. These days, though, if I do Dependency Injection at all, I mostly use partial application of higher-order functions.

Mark, when you get to choose the database design, do most of your tables include both a (supplied) Guid ID and a (database-generated) int ID?

Tyson, thank you for writing. There are a couple of options.

You could just use a GUID as the table key. DBAs typically dislike that option because, if you use that as the table's clustered index, it'll force the database to physically rearrange the table every time you insert a row (since GUIDs aren't, in general, increasing). Notice, however, that this argument is entirely concerned with performance. Using a GUID as a table key has no impact on database design in general. It is, after all, just an integer.

Thus, if the table in question is likely to remain small (i.e. have few rows) and with only the occasional insert, I see no reason to have the standard INT IDENTITY column.

For busier tables, you can have both columns. For example, the code base I'm currently working with defines its Reservations table like this:

CREATE TABLE [dbo].[Reservations] ( [Id] INT NOT NULL IDENTITY, [At] DATETIME2 (7) NOT NULL, [Name] NVARCHAR (50) NOT NULL, [Email] NVARCHAR (50) NOT NULL, [Quantity] INT NOT NULL, [PublicId] UNIQUEIDENTIFIER NOT NULL UNIQUE, [RestaurantId] INT NOT NULL PRIMARY KEY CLUSTERED ([Id] ASC) )

The Id column exclusively exists to keep the database happy. The code that interacts with the database never uses it; it uses PublicId.

To be honest, I used to have a decent grasp on database design, SQL Server, and related performance considerations, but it's about a decade back. I'm sure you could produce some cases where such a design would be detrimental, but on the other hand, I believe that the majority of code out there isn't even near the extremes where you need to compromise on the design.

Hello, Mark! Thank you for the encyclopedia of knowledge and experience you have shared and continue to share with us through this blog and your books!

I've recently read Domain Modeling Made Functional by Scott Wlaschin and am currently reading your new book, Code That Fits in Your Head. I've taken quite a liking to the idea that not just entities, but also events should be modeled within a domain. In addition to common events such as queries/commands, any expected error or success cases can also be wrapped in a type. This manner of extensive typing seems to help improve the "What you see is all there is" factor when learning/understanding a code base. Given that we have some expected events that occur when interacting with a database through commands and queries, it might make sense to encapsulate that domain model in the return types of these methods.

The query portion is straightforward. As you've discussed in your book, we can use Maybe<T> to indicate a successful or unsuccessful query. We might also be so crazy as to use an Either<T, TException> to propagate some exception message/data to be logged/returned at the bread-end of our impure/pure workflow.

Would it violate CQS to do the same for commands? We're doing a side effect on the database, no question. We're also probably returning some data that is relevant to the incoming command parameter within the resulting message from either the IOSuccess or IOFailure. A pattern I've been using for a web API project is something similar to the following:

public sealed record IOSuccess(string Value); public abstract record Failure(string Value); public sealed record ValidationFailure(string Value) : Failure(Value); public sealed record IOFailure(string Value) : Failure(Value); public sealed record GetBalloonQuery(BalloonId Id); public sealed record UpdateBalloonCommand(Balloon Balloon); public sealed record PopBalloonCommand(BalloonId Id, IPointyObject Needle); public interface IBalloonRepository { Task<Either<IReadOnlyCollection<Balloon>, IOFailure>> GetBalloonsAsync(); Task<Either<Balloon, IOFailure>> GetBalloonAsync(GetBalloonQuery query); Task<Either<IOSuccess, IOFailure>> UpdateBalloonAsync(UpdateBalloonCommand command); Task<Either<IOSuccess, IOFailure>> DeleteBalloonAsync(PopBalloonCommand command); }

I think this fits quite well with the idea that we can XXX out the name of the method and the types will tell the full story, no question at all. I also like that this seems to follow from Domain Driven Design, where we are being super explicit about the domain event because of the specificity of the object parameters we're sending down the wire.

Does this idea hold any merit when looking to design APIs that follow the CQS philosophy? Where might you direct a greenhorn developer to learn more about this topic?

Zach, thank you for writing.

As I write above, it's up to you if you want to follow CQS. If you do, however, you have to play by the rules, and the rules are that Commands don't return data.

We may have to make some concessions to the language in which we work. In C#, for example, we have to accept that Task (without a generic type argument) is 'asynchronous void', even though it looks like a return value.

If you want to be explicit about errors, then yes, there's always a risk that a state mutation may fail. Being explicit about that, too, might compel you to model a Command as having a return type like Task<Either<MyError, Unit>> (customarily, for Either values, errors go to the left, contrary to the isomorphic Result, where they go to the right). I don't, however, design side-effecting actions like that in C#.

Even so, in none of these variations does a Command return data in the happy-path scenario.

The API you suggest violate the rule that Commands mustn't return data. You are, of course, free to design APIs as you like, but CQS it isn't. As a counterexample, UpdateBalloonAsync returns an IOSuccess in the happy-path scenario, and that value again contains a string.

If you removed the string Value from IOSuccess it'd be isomorphic to unit. Transitively, then, the return type of UpdateBalloonAsync would be isomorphic to Task<Either<IOFailure, Unit>> (again, errors go to the left, because 'success is right'). If you allow Commands to throw exceptions, that again would be isomorphic to Task, with the implied understanding that the Command may throw an exception.

Where would I direct someone who want to learn more about the topic?

That's not that easy to answer. As Bertrand Meyer writes:

"Side-effect-producing functions, which have been elevated by some languages (C seems to be the most extreme example) to the status of an institution, conflict with the classical notion of function in mathematics."

As far as I can tell, that 'institution' continues with most mainstream languages like C#, Java, JavaScript, etcetera. This implies that you're unlikely to find strong treatment of the topic of CQS in 'traditional' object-oriented material.

As the above quote also implies by its use of the word function, you'll find that this distinction between pure functions and impure actions is more prevalent and explicit in functional programming.

Why DRY?

Code duplication is often harmful - except when it isn't. Learn how to think about the trade-offs involved.

Good programmers know that code duplication should be avoided. There's a cost associated with duplicated code, so we have catchphrases like Don't Repeat Yourself (DRY) in order to remind ourselves that code duplication is evil.

It seems to me that some programmers see themselves as Terminators: out to eliminate all code duplication with extreme prejudice; sometimes, perhaps, without even considering the trade-offs involved. Every time you remove duplicated code, you add a level of indirection, and as you've probably heard before, all problems in computer science can be solved by another level of indirection, except for the problem of too many levels of indirection.

Removing code duplication is important, but it tends to add a cognitive overhead. Therefore, it's important to understand why code duplication is harmful - or rather: when it's harmful.

Rates of change #

Imagine that you copy a piece of code and paste it into ten other code bases, and then never touch that piece of code again. Is that harmful?

Probably not.

Here's one of my favourite examples. When protecting the invariants of objects, I always add Guard Clauses against nulls:

if (subject == null) throw new ArgumentNullException("subject");

In fact, I have a Visual Studio code snippet for this; I've been using this code snippet for years, which means that I have code like this Guard Clause duplicated all over my code bases. Most likely, there are thousands of examples of such Guard Clauses on my hard drive, with the only variation being the name of the parameter. I don't mind, because, in my experience, these two lines of code never change.

Yet many programmers see that as a violation of DRY, so instead, they introduce something like this:

Guard.AgainstNull(subject, "subject");

The end result of this is that you've slightly increased the cognitive overhead, but what have you gained? As far as I can tell: nothing. The code still has the same number of Guard Clauses. Instead of idiomatic if statements, they are now method calls, but it's hardly DRY when you have to repeat those calls to Guard.AgainstNull all over the place. You'd still be repeating yourself.

The point here is that DRY is a catchphrase, but shouldn't be an excuse for avoiding thinking explicitly about any given problem.

If the duplicated code is likely to change a lot, the cost of duplication is likely to be high, because you'll have to spend time making the same change in lots of different places - and if you forget one, you'll be introducing bugs in the system. If the duplicated code is unlikely to change, perhaps the cost is low. As with all other risk management, you conceptually multiply the risk of the adverse event happening with the cost of the damage associated with that event. If the product is low, don't bother addressing the risk.

The Rule of Three #

It's not a new observation that unconditional elimination of duplicated code can be harmful. The Rule of Three exists for this reason:

- Write a piece of code.

- Write the same piece of code again. Resist the urge to generalise.

- Write the same piece of code again. Now you are allowed to consider generalising it.

Another reason is that even if the duplication is 'real' (and not coincidental), you may not have enough examples to enable you to make the correct refactoring. Often, even duplicated code comes with small variations:

- The logic is the same, but a string value differs.

- The logic is almost the same, but one duplicate performs an extra small step.

- The logic looks similar, but operates on two different types of object.

- etc.

If you refactor too prematurely, you may perform the wrong refactoring. Often, people introduce helper methods, and then when they realize that the axis of variability was not what they expected, they add more and more parameters to the helper method, and more and more complexity to its implementation. This leads to ripple effects. Ripple effects lead to thrashing. Thrashing leads to poor maintainability. Poor maintainability leads to low productivity.

This is, in my experience, the most important reason to follow the Rule of Three: wait, until you have more facts available to you. You don't have to take the rule literally either. You can wait until you have four, five, or six examples of the duplication, if the rate of change is low.

The parallel to statistics #

If you've ever taken a course in statistics, you would have learned that the less data you have, the less confidence you can have in any sort of analysis. Conversely, the more samples you have, the more confidence can you have if you are trying to find or verify some sort of correlation.

The same holds true for code duplication, I believe. The more samples you have of duplicated code, the better you understand what is truly duplicated, and what varies. The better you understand the axes of variability, the better a refactoring you can perform in order to get rid of the duplication.

Summary #

Code duplication is costly - but only if the code changes. The cost of code duplication, thus, is C*p, where C is the cost incurred, when you need to change the code, and p is the probability that you'll need to change the code. In my experience, for example, the Null Guard Clause in this article has a cost of duplication of 0, because the probability that I'll need to change it is 0.

There's a cost associated with removing duplication - particularly if you make the wrong refactoring. Thus, depending on the values of C and p, you may be better off allowing a bit of duplication, instead of trying to eradicate it as soon as you see it.

You may not be able to quantify C and p (I'm not), but you should be able to estimate whether these values are small or large. This should help you decide if you need to eliminate the duplication right away, or if you'd be better off waiting to see what happens.

Comments

I would add one more aspect to this article: Experience.

The more experience you gain the more likely you will perceive parts of your code which could possibly benefit from a generalization; but not yet because it would be premature.

I try to keep these places in mind and try to simplify the potential later refactoring. When your code meets the rule of three, you’re able to adopt your preparations to efficiently refactor your code. This leads to better code even if the code isn't repeated for the third time.

Needless to say that experience is always crucial but I like to show people, who are new to programing, which decisions are based on experience and which are based on easy to follow guidelines like the rule of three.

Markus, thank you for writing. Yes, I agree that experience is always helpful.

Wonderful article but I'd like to say that I prefer sticking to the Zero one infinity rule instead of the Rule Of Three

That way I do overcome my laziness of searching for duplicates and extracting them to a procedure. Also it does help to keep code DRY without insanity to use it only on 2 or more lines of code. If it's a non-ternary oneliner I'm ok with that. If it's 2 lines of code I may consider making a procedure out of it and 3 lines and more are extracted every time I need to repeat them.

I generally agree with the points raised here, but I'd like to point out another component to the example used of argument validation. I realize this post is fairly old now, so I'm not entirely sure if you'd agree or not considering new development since then.

While it is fair to say that argument validation is simple enough not to warrant some sort of encapsulation like the Guard class, this can quickly change once you add other factors into the mix, like number of arguments, and project-specific style rules. Take this sample code as an example:

if (subject1 == null) { throw new ArgumentNullException("subject1"); } if (subject2 == null) { throw new ArgumentNullException("subject2"); } if (subject3 == null) { throw new ArgumentNullException("subject3"); } if (subject4 == null) { throw new ArgumentNullException("subject4"); } if (subject5 == null) { throw new ArgumentNullException("subject5"); }

This uses "standard" StyleCop rules with 5 parameters (probably the very limit of what is acceptable before having to refactor the code). Every block has the explicit scope added, and a line break after the scope terminator, as per style guidelines. This can get incredibly verbose and distract from the main logic of the method, which in cases like this, can become smaller than the argument validation code itself! Now, compare that to a more modern approach using discards:

_ = subject1 ?? throw new ArgumentNullException("subject1"); _ = subject2 ?? throw new ArgumentNullException("subject2"); _ = subject3 ?? throw new ArgumentNullException("subject3"); _ = subject4 ?? throw new ArgumentNullException("subject4"); _ = subject5 ?? throw new ArgumentNullException("subject5");

Or even better, using C#6 expression name deduction and the new, native guard method:

ArgumentNullException.ThrowIfNull(subject1); ArgumentNullException.ThrowIfNull(subject2); ArgumentNullException.ThrowIfNull(subject3); ArgumentNullException.ThrowIfNull(subject4); ArgumentNullException.ThrowIfNull(subject5);

Now, even that can be improved upon, and C# is moving in the direction of making contract checks like this even easier.

I only wanted to make the point that, in this particular example, there are other benefits to the refactor besides just DRY, and those can be quite important as well since we read code much more than we write it: any gains in clarity and conciseness can net substantial productivity improvements.

Juliano, thank you for writing. I agree that the first example is too verbose, but when addressing it, I'd start from the position that it's just a symptom. The underlying problem, here, is that the style guide is counterproductive. For the same reason, I don't write null guards that way.

I'm not sure that the following was your intent, so please excuse me as I take this topic in the direction of one of my pet peeves. In general, I think that 'we' have a tendency towards action bias. Instead of taking a step back and consider whether a problem even ought to be a problem, we often launch directly into producing a solution.

In the spirit of five whys, we may first find that the underlying explanation of the problem is the style guide.

Asking one more time, we may begin to consider the need for null guards altogether. Why do we need them? Because objects may be null. Is there a way to avoid that? In modern C#, you can turn on the nullable reference types feature. Other languages like Haskell famously have no nulls.

I realise that this line of inquiry is unproductive when you have existing C# code bases.

Your point, however, remains valid. It's perfectly fine to extract a helper method when the motivation is to make the code more readable. And while my book Code That Fits in Your Head is an attempt to instrumentalise readability to some extend, a subjective component will always linger.

I don't agree that the style guide is counter productive per se. There are fair justifications for prefering the explicit braces and the blank lines in the StyleCop documentation. They might not be the easiest in the eyes in this particular scenario, but we have to take into consideration the ease of enforcement in the entire project of a particular style. If you deviate from the style only in certain scenarios, it becomes much harder to manage on a big team. Applying the single rule everywhere is much easier. I do understand however that this is fairly subjective, so I won't pursue the argument here.

Now, regarding nullable reference types, I'd just like to point out that one cannot only rely on that feature for argument validation. As you are aware, that's an optional feature, that might not be enabled on consumer code. If you are writing a reusable library for example, and you rely solely on Nullable Reference Types as your argument validation strategy, this will still cause runtime exceptions if a consumer that does not have NRTs enabled calls you passing null, and this would be a very bad developer experience for them as the stack trace will be potentially very cryptic. For that reason, it is a best practice to keep the runtime checks in place even when using nullable reference types.

Juliano, thank you for writing. I'm sure that there are reasons for that style guide. I admit that I haven't read them (you didn't link to them), but I can think of some arguments myself. Unless you're interested in that particular discussion, I'll leave it at that. Ultimately, this blog post is about DRY in general, and not particularly about Guard Clauses.

I'm aware of the limitations of nullable reference types. This once again highlights the difference between developing and maintaining a reusable library versus a complete application.

Understanding the forces that apply in a particular context is, I believe, a key to making good decisions.

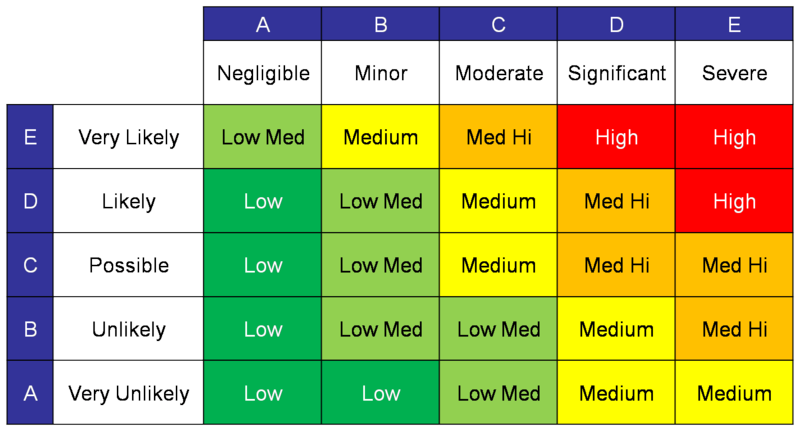

I love the call out of risk * probability. I use threat matrixes to guide decisions all the time.

I'd also like to elaborate that duplication is determined not just by similar code, but by similar forces that change the code. If code looks similar, but changes for different reasons, then centralizing will cause tension between the different cases.

Using the example above, guard clauses are unlikely to change together. Most likely, the programmmer changes a parameter and just the single guard clause is effected. Since the clauses don't change for the same reason, the effect of duplication is very low.

Different forces might effect all guard clauses and justify centralizing. For example, if a system wanted to change its global null handling strategy at debug-time versus production.

However, I often see semantically separate code struggling to share an implementation. I think header interfaces and conforming container-like library wrappers are companion smells of this scenario. For example, I frequently see multiple flows trying to wrap email notifications behind some generic email notification abstraction. The parent flow is still coupled to the idea of email, the two notifications still change separately, and there's an extra poor abstraction between us and sending emails.

Encapsulation and SOLID Pluralsight course

My latest Pluralsight course is now available. This time it's about fundamental programming techniques.

Most programmers I meet know about encapsulation and Object-Oriented Design (OOD) - at least, until I start drilling them on specifics. Apparently, the way most people have been taught OOD is at odds with my view on the subject. For a long time, I've wanted to teach OOD the way I think it should be taught. My perspective is chiefly based on Bertrand Meyer's Object-Oriented Software Construction and Robert C. Martin's SOLID principles (presented, among other sources, in Agile Principles, Patterns, and Practices in C#). My focus is on Command Query Separation, protection of invariants, and favouring Composition over Inheritance.

In my new Pluralsight course, I'm happy to be able to teach OOD the way I think it should be taught. The course is aimed at professional developers with a couple of years of experience, but it's based on decades of experience (mine and others'), so I hope that even seasoned programmers can learn a thing or two from watching it.

What you will learn #

How do you make your code easily usable for other people? By following the actionable, measurable rules laid forth by Command/Query Separation as well as Postel’s law.

Learn how to write maintainable software that can easily respond to changing requirements, using object-oriented design principles. First, you'll learn about the fundamental object-oriented design principle of Encapsulation, and then you'll learn about the five SOLID principles - also known as the principles of object-oriented design. There are plenty of code examples along the way; they are in C#, but written in such a way that they should be easily understandable to readers of Java, or other curly-brace-based languages.

Is OOD still relevant? #

Functional Programming is becoming increasingly popular, and regular readers of this blog will have noticed that I, myself, write a fair amount of F# code. In light of this, is OOD still relevant?

There's a lot of Object-Oriented code out there, or at least, code written in a nominally Object-Oriented language, and it's not going to go away any time soon. Getting better at maintaining and evolving Object-Oriented code is, in my opinion, still important.

Some of the principles I cover in this course are also relevant in Functional Programming.

Comments

Dan, thank you for writing. You are talking about this implementation, I assume:

public bool TryRead(int id, out string message) { message = null; var path = this.GetFileName(id); if (!File.Exists(path)) return false; message = File.ReadAllText(path); return true; }

This implementation isn't thread-safe, because File.Exists(path) may return true, and then, before File.ReadAllText(path) is invoked, another thread or process could delete the file, causing an exception to be thrown when File.ReadAllText(path) is invoked.

It's possible to make the TryRead method thread-safe (I know of at least two alternatives), but I usually leave that as an exercise for the reader :)

The only safe implementation that I am aware of would be something along the lines of:

public bool TryRead(int id, out string message) { var path = this.GetFileName(id); try { message = File.ReadAllText(path); return true; } catch { message = null; return false; } }

Am I missing something?

Bart, no, you aren't missing anything; that looks about right :) The other alternative is just a variation on your solution.

Drain

A Drain is a filter abstraction over an Iterator, with the purpose of making out-of-process queries more efficient.

For some years now, the Reused Abstractions Principle has pushed me towards software architectures based upon fewer and fewer abstractions, where each abstraction, on the other hand, is reused over and over again.

In my Pluralsight course about A Functional Architecture with F#, I describe how to build an entire mainstream application based on only two abstractions:

More specifically, I use Reactive Extensions for Commands, and the Seq module for Queries (but if you're on C#, you can use LINQ instead).The problem #

It turns out that these two abstractions are enough to build an entire, mainstream system, but in practice, there's a performance problem. If you have only Iterators, you'll have to read all your data into memory, and then filter in memory. If you have lots of data on storage, this is obviously going to be prohibitively slow, so you'll need a way to select only a subset out of your persistent store.

This problem should be familiar to every student of software development. Pure abstractions tend not to survive contact with reality (there are examples in both Object-Oriented, Functional, and Logical or Relational programming), but we should still strive towards keeping abstractions as pure as possible.

One proposed solution to the Query Problem is to use something like IQueryable, but unfortunately, IQueryable is an extremely poor abstraction (and so are F# query expressions, too).

In my experience, the most important feature of IQueryable is the ability to filter before loading data; normally, you can perform projections in memory, but when you read from persistent storage, you need to select your desired subset before loading it into memory.

Inspired by talks by Bart De Smet, in my Pluralsight course, I define custom filter interfaces like:

type IReservations = inherit seq<Envelope<Reservation>> abstract Between : DateTime -> DateTime -> seq<Envelope<Reservation>>

or

type INotifications = inherit seq<Envelope<Notification>> abstract About : Guid -> seq<Envelope<Notification>>

Both of these interfaces derive from IEnumerable<T> and add a single extra method that defines a custom filter. Storage-aware implementations can implement this method by returning a new sequence of only those items on storage that match the filter. Such a method may

- make a SQL query against a database

- make a query against a document database

- read only some files from the file system

- etc.

Generalized interface #

The custom interfaces shown above follow a common template: the interface derives from IEnumerable<T> and adds a single 'filter' method, which filters the sequence based on the input argument(s). In the above examples, IReservations define a Between method with two arguments, while INotifications defines an About method with a single argument.

In order to generalize, it's necessary to find a common name for the interface and its single method, as well as deal with variations in method arguments.

All the really obvious names like Filter, Query, etc. are already 'taken', so I hit a thesaurus and settled on the name Drain. A Drain can potentially drain a sequence of elements to a smaller sequence.

When it comes to variations in input arguments, the solution is to use generics. The Between method that takes two arguments could also be modelled as a method taking a single tuple argument. Eventually, I've arrived at this general definition:

module Drain = type IDrainable<'a, 'b> = inherit seq<'a> abstract On : 'b -> seq<'a> let on x (d : IDrainable<'a, 'b>) = d.On x

As you can see, I decided to name the extra method On, as well as add an on function, which enables clients to use a Drain like this:

match tasks |> Drain.on id |> Seq.toList with

In the above example, tasks is defined as IDrainable<TaskRendition, string>, and id is a string, so the result of draining on the ID is a sequence of TaskRendition records.

Here's another example:

match statuses |> Drain.on(id, conversationId) |> Seq.toList with

Here, statuses is defined as IDrainable<string * Guid, string * string> - not the most well-designed instance, I admit: I should really introduce some well-named records instead of those tuples, but the point is that you can also drain on multiple values by using a tuple (or a record type) as the value on which to drain.

In-memory implementation #

One of the great features of Drains is that an in-memory implementation is easy, so you can add this function to the Drain module:

let ofSeq areEqual s = { new IDrainable<'a, 'b> with member this.On x = s |> Seq.filter (fun y -> areEqual y x) member this.GetEnumerator() = s.GetEnumerator() member this.GetEnumerator() = (this :> 'a seq).GetEnumerator() :> System.Collections.IEnumerator }

This enables you to take any IEnumerable<T> (seq<'a>) and turn it into an in-memory Drain by supplying an equality function. Here's an example:

let private toDrainableTasks (tasks : TaskRendition seq) = tasks |> Drain.ofSeq (fun x y -> x.Id = y)

This little helper function takes a sequence of TaskRendition records and defines the equality function as a comparison on each TaskRendition record's Id property. The result is a drain that you can use to select one or more TaskRendition records based on their IDs.

I use this a lot for unit testing.

Empty Drains #

It's also easy to define an empty drain, by adding this value to the Drain module:

let empty<'a, 'b> = Seq.empty<'a> |> ofSeq (fun x (y : 'b) -> false)

Here's a usage example:

let mappedUsers = Drain.empty<UserMapped, string>

Again, this can be handy when unit testing.

Other implementations #

While the in-memory implementation is useful when unit testing, the entire purpose of the Drain abstraction is to enable various implementations to implement the On method to perform a custom selection against a well-known data source. As an example, you could imagine an implementation that translates the input arguments of the On method into a SQL query.

If you want to see examples of this, my Pluralsight course demonstrates how to implement IReservations and INotifications with various data stores - I trust you can extrapolate from those examples.

Summary #

You can base an entire mainstream application on the two abstractions of Iterator and Observer. However, the problem when it comes to Iterators is that conceptually, you'll need to iterate over all potentially relevant elements in your system - and that may be millions of records!

However impure it is to introduce a third interface into the mix, I still prefer to introduce a single generic interface, instead of multiple custom interfaces, because once you and your co-workers understand the Drain abstraction, the cognitive load is still quite low. A Drain is an Iterator with a twist, so in the end, you'll have a system built on 2½ abstractions.

P.S. 2018-06-20. While this article is a decent attempt to generalise the query side of a fundamentally object-oriented approach to software architecture, I've later realised that dependency injection, even when disguised as partial application, isn't functional. The problem is that querying a database, reading files, and so on, is essentially non-deterministic, even when no side effects are incurred. The functional approach is to altogether reject the notion of dependencies.

Comments

Hire me

July-October 2014 I have some time available, if you'd like to hire me.

Since I became self-employed in 2011, I've been as busy as always, but it looks like I have some time available in the next months. If you'd like to hire me for small or big tasks, please contact me. See here for details.

Passive Attributes

Passive Attributes are Dependency Injection-friendly.

In my article about Dependency Injection-friendly frameworks, towards the end I touched on the importance of defining attributes without behaviour, but I didn't provide a constructive example of how to do this. In this article, I'll outline how to write a Dependency Injection-friendly attribute for use with ASP.NET Web API, but as far as I recall, you can do something similar with ASP.NET MVC.

Problem statement #

In ASP.NET Web API, you can adorn your Controllers and their methods with various Filter attributes, which is a way to implement cross-cutting concerns, such as authorization or error handling. The problem with this approach is that attribute instances are created by the run-time, so you can't use proper Dependency Injection (DI) patterns such as Constructor Injection. If an attribute defines behaviour (which many of the Web API attributes do), the most common attempt at writing loosely coupled code is to resort to a static Service Locator (an anti-pattern).

This again seems to lead framework designers towards attempting to make their frameworks 'DI-friendly' by introducing a Conforming Container (another anti-pattern).

The solution is simple: define attributes without behaviour.

Metering example #

Common examples of cross-cutting concerns are authentication, authorization, error handling, logging, and caching. In these days of multi-tenant on-line services, another example would be metering, so that you can bill each user based on consumption.

Imagine that you're writing an HTTP API where some actions must be metered, whereas others shouldn't. It might be nice to adorn the metered actions with an attribute to indicate this:

[Meter] public IHttpActionResult Get(int id)

Metering is a good example of a cross-cutting concern with behaviour, because, in order to be useful, you'd need to store the metering records somewhere, so that you can bill your users based on these records.

A passive Meter attribute would simply look like this:

[AttributeUsage(AttributeTargets.Method, AllowMultiple = false)] public class MeterAttribute : Attribute { }

In order to keep the example simple, this attribute defines no data, and can only be used on methods, but nothing prevents you from adding (primitive) values to it, or extend its usage to classes as well as methods.

As you can tell from the example, the MeterAttribute has no behaviour.

In order to implement a metering cross-cutting concern, you'll need to define an IActionFilter implementation, but that's a 'normal' class that can take dependencies:

public class MeteringFilter : IActionFilter { private readonly IObserver<MeterRecord> observer; public MeteringFilter(IObserver<MeterRecord> observer) { if (observer == null) throw new ArgumentNullException("observer"); this.observer = observer; } public Task<HttpResponseMessage> ExecuteActionFilterAsync( HttpActionContext actionContext, CancellationToken cancellationToken, Func<Task<HttpResponseMessage>> continuation) { var meterAttribute = actionContext .ActionDescriptor .GetCustomAttributes<MeterAttribute>() .SingleOrDefault(); if (meterAttribute == null) return continuation(); var operation = actionContext.ActionDescriptor.ActionName; var user = actionContext.RequestContext.Principal.Identity.Name; var started = DateTimeOffset.Now; return continuation().ContinueWith(t => { var completed = DateTimeOffset.Now; var duration = completed - started; var record = new MeterRecord { Operation = operation, User = user, Started = started, Duration = duration }; this.observer.OnNext(record); return t.Result; }); } public bool AllowMultiple { get { return true; } } }

This MeteringFilter class implements IActionFilter. It looks for the [Meter] attribute. If it doesn't find the attribute on the method, it immediately returns; otherwise, it starts collecting data about the invoked action:

- From

actionContext.ActionDescriptorit retrieves the name of the operation. If you try this out for yourself, you may find thatActionNamealone doesn't provide enough information to uniquely identify the method - it basically just contains the value "Get". However, theactionContextcontains enough information about the action that you can easily build up a better string; I just chose to skip doing that in order to keep the example simple. - From

actionContext.RequestContext.Principalyou can get information about the current user. In order to be useful, the user must be authenticated, but if you need to meter the usage of your service, you'll probably not allow anonymous access. - Before invoking the

continuation, the MeteringFilter records the current time. - After the

continuationhas completed, the MeteringFilter again records the current time and calculates the duration. - Finally, it publishes a MeterRecord to an injected dependency.

Configuring the service #

MeteringFilter is a normal class with behaviour, which you can register as a cross-cutting concern in your Web API service as easily as this:

var filter = new MeteringFilter(observer); config.Filters.Add(filter);

where observer is your preferred implementation of IObserver<MeterRecord>. This example illustrates the Pure DI approach, but if you rather prefer to resolve MeteringFilter with your DI Container of choice, you can obviously do this as well.

The above code typically goes into your Global.asax file, or at least a class directly or indirectly invoked from Application_Start. This constitutes (part of) the Composition Root of your service.

Summary #

Both ASP.NET Web API and ASP.NET MVC supports cross-cutting concerns in the shape of filters that you can add to the service. Such filters can look for passive attributes in order to decide whether or not to trigger. The advantage of this approach is that you can use normal Constructor Injection with these filters, which completely eliminates the need for a Service Locator or Conforming Container.

The programming model remains the same as with active attributes: if you want a particular cross-cutting concern to apply to a particular method or class, you adorn it with the appropriate attribute. Passive attributes have all the advantages of active attributes, but none of the disadvantages.

Comments

What if the dependency I'm injecting needs to be a transient dependency? Injecting a transient service into a singleton (the filter), would cause issues. My initial idea is to create an abstract factory as a dependency, then when the filter action executes, create the transient dependency, use it, and dispose. Do you have any better ideas?

Jonathan, thank you for writing. Does this article on the Decoraptor pattern (and this Stack Overflow answer) answer your question?

Yes, that's a good solution for this situation. Thanks!

Reading through Asp.Net Core Type and Service Filters, do you think it's sufficient to go that way (I know that TypeFilter is a bit clumsy), but let's assume that I need simple injection in my filter - ServiceFilterAttribute looks promising. Or you still would recomment to implement logic via traditional filter pipeline: `services.AddMvc(o => o.Filters.Add(...));`?

Valdis, thank you for writing. I haven't looked into the details of ASP.NET Core recently, but even so: on a more basic level, I don't understand the impulse to put behaviour into attributes. An attribute is an annotation. It's a declaration that a method, or type, is somehow special. Why not keep the annotation decoupled from the behaviour it 'triggers'? This would enable you to reuse the behaviour in other scenarios than by putting an attribute on a method.

I got actually very similar feelings, just wanted to get your opinion. And by the way - there is a catch, you can mismatch type and will notice that only during runtime. For instance: `[ServiceFilter(typeof(HomeController))]` will generate exception, because given type is not derived from `IFilterMetadata`

Indeed, one of the most problematic aspects of container-based DI (as opposed to Pure DI) is the loss of compile-time safety - to little advantage, I might add.

I'm concerned with using attributes for AOP at all in these cases. Obviously using attributes that define behaviors that rely on external depedencies is a bad idea as you and others have already previously covered. But is using attributes that define metadata for custom behaviors all that much better? For example, if one provides a framework with libraries containing common controllers, and another pulls those controllers into their composition root in order to host them, there is no indication at compile time that these custom attributes may be present. Would it not be better to require that behavior be injected into the constructor and simply consume the behavior at the relevant points within the controller? Or if attributes must be used, would it not be better for the component that implements the behavior to somehow be injected into the controller and given the opportunity to intercept requests to the controller earlier in the execution pipeline so that it can check for the attributes? Due to the nature of the Web API and .Net MVC engines, attributes defined with behavior can enforce their behaviors to be executed by default. And while attributes without behavior do indicate the need for a behavior to be executed for the class they are decorating, it does not appear that they can enforce said behavior to be executed by default. They are too easy to miss or ignore. There has got to be a better way. I have encountered this problem while refactoring some code that I'm working on right now (retro fitting said code with more modern, DI based code). I'm hoping to come up with a solution that informs the consuming developer in the composition root that this behavior is required, and still be able enforce the behavior with something closer to a decoration rather than a function call.

Tyree, thank you for writing. That's a valid concern, but I don't think it's isolated to passive attributes. The problem you outline is also present if you attempt to address cross-cutting concerns with the Decorator design pattern, or with dynamic interception as I describe in chapter 9 of my book. You also have this problem with the Composite pattern, because you can't have any compile-time guarantee that you've remembered to compose all required elements into the Composite, or that they're defined in the appropriate order (if that matters).

In fact, you can extend this argument to any use of polymorphism: how can you guarantee, at compile-time, that a particular polymorphic object contains the behaviour that you desire, instead of, say, being a Null Object? You can't. That's the entire point of polymorphism.

Even with attributes, how can you guarantee that the attributes stay there? What if another developer comes by and removes an attribute? The code is still going to compile.

Ultimately, code exists in order to implement some desired behaviour. There are guarantees you can get from the type system, but static typing can't provide all guarantees. If it could, you be in the situation where, 'if it compiles, it works'. No programming language I've heard of provides that guarantee, although there's a spectrum of languages with stronger or weaker type systems. Instead, we'll have to get feedback from multiple sources. Attributes often define cross-cutting concerns, and I find that these are often best verified with a set of integration tests.

As always in software development, you have to find the balance that's right for a particular scenario. In some cases, it's catastrophic if something is incorrectly configured; in other cases, it's merely unfortunate. More rigorous verification is required in the first case.

Thanks for the post, I tried to do the same for a class attribute (AttributeTargets.Class) and I am getting a null object every time I get the custom attributes. Does this only work for Methods? Or how can I make it work with classes? Thanks.

Cristian, thank you for writing. The example code shown in this article only looks in the action context's ActionDescriptor, which is an object that describes the action method. If you want to look for the attribute on the class, you should look in the action context's ControllerDescriptor instead, like this:

var meterAttribute = actionContext .ControllerContext .ControllerDescriptor .GetCustomAttributes<MeterAttribute>() .SingleOrDefault();

Obviously, if you want to support putting the attribute both on the class and the method, you'd need to look in both places, and decide which one to use if you find more than one.

I'm really struggling to get everything hooked up so it's initialised in the correct order, with one DbContext created per web request or scheduled task. The action filter is my biggest sticking point.