ploeh blog danish software design

Continuous delivery without a CI server

An illustrative example.

More than a decade ago, I worked on a small project. It was a small single-page application (SPA) with a REST API backend, deployed to Azure. As far as I recall, the REST API used blob storage, so all in all it wasn't a complex system.

We were two developers, and although we wanted to do continuous delivery (CD), we didn't have much development infrastructure. This was a little startup, and back then, there weren't a lot of free build services available. We were using GitHub, but it was before it had any free services to compile your code and run tests.

Given those constraints, we figured out a simple way to do CD, even though we didn't have a continuous integration (CI) server.

I'll tell you how we did this.

Shining an extraordinary light on the mundane #

The reason I'm relating this little story isn't to convince you that you, too, should do it that way. Rather, it's a didactic device. By doing something extreme, we can sometimes learn about the ordinary.

You can only be pragmatic if you know how to be dogmatic.

From what I hear and read, it seems that there are a lot of organizations that believe that they're doing CI (or perhaps even CD) because they have a CI server. What the following tale will hopefully highlight is that, while build servers are useful, they aren't a requirement for CI or CD.

Distributed CD #

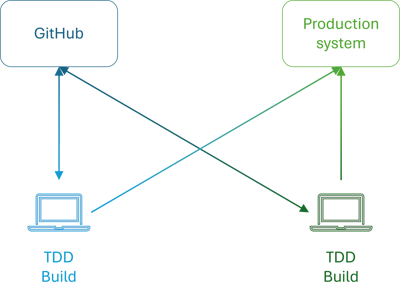

Dramatis personae: My colleague and me. Scene: One small SPA project with a REST API and blob storage, to be deployed to Azure. Code base in GitHub. Two laptops. Remote work.

One of us (let's say me) would start on implementing a feature, or fixing a bug. I'd use test-driven development (TDD) to get feedback on API ideas, as well as to accumulate a suite of regression tests. After a few hours of effective work, I'd send a pull request to my colleague.

Since we were only two people on the team, the responsibility was clear. It was the other person's job to review the pull request. It was also clear that the longer the reviewer dawdled, the less efficient the process would be. For that reason, we'd typically have agile pull requests with a good turnaround time.

While we were taking advantage of GitHub as a central coordination hub for pull requests, Git itself is famously distributed. Thus, we wondered whether it'd be possible to make the CD process distributed as well.

Yes, apart from GitHub, what we did was already distributed.

A little more automation #

Since we were both doing TDD, we already had automated tests. Due to the simple setup of the system, we'd already automated more than 80% of our process. It wasn't much of a stretch to automate whatever else needed automation. Such as deployment.

We agreed on a few simple rules:

- Every part of our process should be automated.

- Reviewing a pull request included running all tests.

When people review pull requests, they often just go to GitHub and look around before issuing an LGTM.

But, you do realize that this is Git, right? You can pull down the proposed changes and run them.

What if you're already in the middle of something, working on the same code base? Stash your changes and pull down the code.

The consequence of this process was that every time a pull request was accepted, we already knew that it passed all automated tests on two physical machines. We actually didn't need a server to run the tests a third time.

After a merge, the final part of the development process mandated that the original author should deploy to production. We had a Bash script that did that.
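A process like this boils down to an ordered list of commands that stops at the first failure. What follows is not the script we used, only a minimal Python sketch of the idea. The `run_steps` helper, the branch name, and the test command are all assumptions made for illustration.

```python
import subprocess
import sys


def run_steps(steps):
    """Run (name, argv) steps in order and stop at the first failure.

    Returns the name of the failing step, or None if every step succeeded.
    """
    for name, argv in steps:
        completed = subprocess.run(argv)
        if completed.returncode != 0:
            return name
    return None


# Hypothetical steps for reviewing a pull request on your own machine.
# The branch name and the test command are placeholders.
REVIEW_STEPS = [
    ("stash", ["git", "stash"]),
    ("fetch", ["git", "fetch", "origin"]),
    ("checkout", ["git", "checkout", "feature-branch"]),
    ("test", [sys.executable, "-m", "unittest", "discover"]),
]
```

Calling `run_steps(REVIEW_STEPS)` inside a repository would run the review, and a deployment step could be appended to a similar list for the author to run after the merge.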

Simplicity #

This process came with some built-in advantages. First of all, it was simple. There weren't a lot of moving parts, so there weren't many steps that could break.

Have you ever had the pleasure of troubleshooting a build? The code works on your machine, but not on the build server.

It sometimes turns out that there's a configuration mismatch with the compiler or test tools. Thus, the problem with the build server doesn't mean that you prevented a dangerous defect from being deployed to production. No, the code just didn't compile on the build server, but would actually have run fine on the production system.

It's much easier troubleshooting issues on your own machine than on some remote server.

I've also seen build servers that were set up to run tests, but along the way, something had failed and the tests didn't run. And no-one was looking at logs or warning emails from the build system because that system would already be sending hundreds of warnings a day.

By agreeing to manually(!) run the automated tests as part of the review process, we were sure that they were exercised.

Finally, by keeping the process simple, we could focus on what mattered: Delivering value to our customer. We didn't have to waste time learning how a proprietary build system worked.

Does it scale? #

I know what you're going to say: This may have worked because the overall requirements were so simple. This will never work in a 'real' development organization, with a 'real' code base.

I understand. I never claimed that it would.

The point of this story is to highlight what CI and CD are. They describe a way of working where you continuously integrate your code with everyone else's code, and where you continuously deploy changes to production.

In reality, having a dedicated build system for that can be useful. These days, such systems tend to be services that integrate with GitHub or other sites, rather than an actual server that you have to care for. Even so, having such a system doesn't mean that your organization makes use of CI or CD.

(Oh, and for the mathematically inclined: In this context continuous doesn't mean actually continuous. It just means arbitrarily often.)

Conclusion #

CI and CD are processes that describe how we work with code, and how we work together.

Continuous integration means that you often integrate your code with everyone else's code. How often? More than once a day.

Continuous deployment means that you often deploy code changes to production. How often? Every time new code is integrated.

A build system can be convenient to help along such processes, but it's strictly speaking not required.

Fundamentals

How to stay current with technology progress.

A long time ago, I landed my dream job. My new employer was a consulting company, and my role was to be the resident Azure expert. Cloud computing was still in its infancy, and there was a good chance that I might be able to establish myself as a leading regional authority on the topic.

As part of the role, I was supposed to write articles and give presentations showing how to solve various problems with Azure. I dug in with fervour, writing sample code bases and even an MSDN Magazine article. To my surprise, after half a year I realized that I was bored.

At that time I'd already spent more than a decade learning new technology, and I knew that I was good at it. For instance, I worked five years for Microsoft Consulting Services, and a dirty little secret of that kind of role is that, although you're sold as an expert in some new technology, you're often only a few weeks ahead of your customer. For example, I was once engaged as a Windows Workflow Foundation expert at a time when it was still in beta. No-one had years of experience with that technology, but I was still expected to know much more about it than my customer.

I had lots of engagements like that, and they usually went well. I've always been good at cramming, and as a consultant you're also unencumbered by all the daily responsibilities and politics that often occupy the time and energy of regular employees. The point being that while I'm decent at learning new stuff, the role of being a consultant also facilitates that sort of activity.

After more than a decade of learning new frameworks, new software libraries, new programming languages, new tools, new online services, it turned out that I was ready for something else. After spending a few months learning Azure, I realized that I'd lost interest in that kind of learning. When investigating a new Azure SDK, I'd quickly come to the conclusion that, oh, this is just another object-oriented library. There are these objects, and you call this method to do that, etc. That's not to say that learning a specific technology is a trivial undertaking. The worse the design, the more difficult it is to learn.

Still, after years of learning new technologies, I'd started recognizing certain patterns. Perhaps, I thought, well-designed technologies are based on some fundamental ideas that may be worth learning instead.

Staying current #

A common lament among software developers is that the pace of technology is so overwhelming that they can't keep up. This is true. You can't keep up.

There will always be something that you don't know. In fact, most things you don't know. This isn't a condition isolated only to technology. The sum total of all human knowledge is so vast that you can't know it all. What you will learn, even after a lifetime of diligent study, will be a nanoscopic fraction of all human knowledge - even of everything related to software development. You can't stay current. Get used to it.

A more appropriate question is: How do I keep my skill set relevant?

Assuming that you wish to stay employable in some capacity, it's natural to be concerned with how your mad Flash skillz will land you the next gig.

Trying to keep abreast of all new technologies in your field is likely to lead to burnout. Rather, put yourself in a position so that you can quickly learn necessary skills, just in time.

Study fundamentals, rather than specifics #

Those many years ago, I realized that it'd be a better investment of my time to study fundamentals. Often, once you have some foundational knowledge, you can apply it in many circumstances. Your general knowledge will enable you to get quickly up to speed with specific technologies.

Success isn't guaranteed, but knowing fundamentals increases your chances.

This may still seem too abstract. Which fundamentals should you learn?

In the remainder of this article, I'll give you some examples. The following collection of general programmer knowledge spans software engineering, computer science, broad ideas, but also specific tools. I only intend this set of examples to serve as inspiration. The list isn't complete, nor does it constitute a minimum of what you should learn.

If you have other interests, you may put together your own research programme. What follows here are just some examples of fundamentals that I've found useful during my career.

A criterion, however, for constituting foundational knowledge is that you should be able to apply that knowledge in a wide variety of contexts. The fundamental should not be tied to a particular programming language, platform, or operating system.

Design patterns #

Perhaps the first foundational notion that I personally encountered was that of design patterns. As the Gang of Four (GoF) wrote in the book, a design pattern is an abstract description of a solution that has been observed 'in the wild', more than once, independently evolved.

Please pay attention to the causality. A design pattern isn't prescriptive, but descriptive. It's an observation that a particular code organisation tends to solve a particular problem.

There are lots of misconceptions related to design patterns. One of them is that the 'library of patterns' is finite, and more or less constrained to the patterns included in the original book.

There are, however, many more patterns. To illustrate how much wider this area is, here's a list of some patterns books in my personal library:

- Design Patterns

- Pattern Languages of Program Design 3

- Patterns of Enterprise Application Architecture

- Enterprise Integration Patterns

- xUnit Test Patterns

- Service Design Patterns

- Implementation Patterns

- RESTful Web Services Cookbook

- AntiPatterns

In addition to these, there are many more books in my library that are patterns-adjacent, including one of my own. The point is that software design patterns is a vast topic, and it pays to know at least the most important ones.

A design pattern fits the criterion that you can apply the knowledge independently of technology. The original GoF book has examples in C++ and Smalltalk, but I've found that they apply well to C#. Other people employ them in their Java code.
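To illustrate that technology independence, here's a minimal sketch of the GoF Composite pattern in Python, a language the book never mentions. The `Notifier` classes are hypothetical names invented for this example. The point is only that the shape of the pattern survives the change of language.

```python
from abc import ABC, abstractmethod


class Notifier(ABC):
    """Component: the interface shared by leaves and composites."""

    @abstractmethod
    def notify(self, message):
        ...


class RecordingNotifier(Notifier):
    """Leaf: remembers every message it receives."""

    def __init__(self):
        self.messages = []

    def notify(self, message):
        self.messages.append(message)


class CompositeNotifier(Notifier):
    """Composite: forwards each message to all of its children."""

    def __init__(self, *children):
        self.children = children

    def notify(self, message):
        for child in self.children:
            child.notify(message)
```

Because a `CompositeNotifier` is itself a `Notifier`, composites can be nested, and client code doesn't need to know whether it talks to one object or many.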

Knowing design patterns not only helps you design solutions. That knowledge also enables you to recognize patterns in existing libraries and frameworks. It's this fundamental knowledge that makes it easier to learn new technologies.

Often (although not always) successful software libraries and frameworks tend to follow known patterns, so if you're aware of these patterns, it becomes easier to learn such technologies. Again, be aware of the causality involved. I'm not claiming that successful libraries are explicitly designed according to published design patterns. Rather, some libraries become successful because they offer good solutions to certain problems. It's not surprising if such a good solution falls into a pattern that other people have already observed and recorded. It's like parallel evolution.

This was my experience when I started to learn the details of Azure. Many of those SDKs and APIs manifested various design patterns, and once I'd recognized a pattern it became much easier to learn the rest.

The idea of design patterns, particularly object-oriented design patterns, has its detractors, too. Let's visit that as the next set of fundamental ideas.

Functional programming abstractions #

As I'm writing this, yet another Twitter thread pokes fun at object-oriented design (OOD) patterns as being nothing but a published collection of workarounds for the shortcomings of object orientation. The people who most zealously pursue that agenda tend to be functional programmers.

Well, I certainly like functional programming (FP) better than OOD too, but rather than poking fun at OOD, I'm more interested in how design patterns relate to universal abstractions. I also believe that FP has shortcomings of its own, but I'll have more to say about that in a future article.

Should you learn about monoids, functors, monads, catamorphisms, and so on?

Yes you should, because these ideas also fit the criterion that the knowledge is technology-independent. I've used my knowledge of these topics in Haskell (hardly surprising) and F#, but also in C# and Python. The various LINQ methods are really just well-known APIs associated with, you guessed it, functors, monads, monoids, and catamorphisms.

Once you've learned these fundamental ideas, it becomes easier to learn new technologies. This has happened to me multiple times, for example in contexts as diverse as property-based testing and asynchronous message-passing architectures. Once I realize that an API gives rise to a monad, say, I know that certain functions must be available. I also know how I should best compose larger code blocks from smaller ones.
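As a small illustration of 'certain functions must be available', here's a sketch in Python that treats ordinary lists as a functor, a monad, and the subject of a monoidal fold. The function names `fmap`, `bind`, and `mconcat` are borrowed from Haskell vocabulary and are assumptions of this example. In LINQ, the corresponding methods would roughly be `Select`, `SelectMany`, and `Aggregate`.

```python
from functools import reduce
from itertools import chain


def fmap(f, xs):
    """Functor map: apply f to every element, keeping the list structure."""
    return [f(x) for x in xs]


def bind(xs, f):
    """Monadic bind (flat map): f returns a list per element; flatten them."""
    return list(chain.from_iterable(f(x) for x in xs))


def mconcat(values, combine, identity):
    """Monoid fold: combine all values, starting from the identity element."""
    return reduce(combine, values, identity)


lengths = fmap(len, ["foo", "ba"])                 # [3, 2]
pairs = bind([1, 2], lambda x: [x, x * 10])        # [1, 10, 2, 20]
total = mconcat([1, 2, 3], lambda a, b: a + b, 0)  # 6
```

Once you recognize these three shapes, you tend to look for them, under whatever names, in any new collection or stream API.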

Must you know all of these concepts before learning, say, F#? No, not at all. Rather, a language like F# is a great vehicle for learning such fundamentals. There's a first time for learning anything, and you need to start somewhere. Rather, the point is that once you know these concepts, it becomes easier to learn the next thing.

If, for example, you already know what a monad is when learning F#, picking up the idea behind computation expressions is easy once you realize that it's just a compiler-specific way to enable syntactic sugaring of monadic expressions. You can learn how computation expressions work without that knowledge, too; it's just harder.

This is a recurring theme with many of these examples. You can learn a particular technology without knowing the fundamentals, but you'll have to put in more time to do that.

On to the next example.

SQL #

Which object-relational mapper (ORM) should you learn? Hibernate? Entity Framework?

How about learning SQL? I learned SQL in 1999, I believe, and it's served me well ever since. I consider raw SQL to be more productive than using an ORM. Once more, SQL is largely technology-independent. While each database typically has its own SQL dialect, the fundamentals are the same. I'm most well-versed in the SQL Server dialect, but I've also used my SQL knowledge to interact with Oracle and PostgreSQL. Once you know one SQL dialect, you can quickly solve data problems in one of the other dialects.

It doesn't matter much whether you're interacting with a database from .NET, Haskell, Python, Ruby, or another language. SQL is not only universal, the core of the language is stable. What I learned in 1999 is still useful today. Can you say the same about your current ORM?
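As a small illustration, here's a sketch that runs plain SQL from Python against an in-memory SQLite database. SQLite is only a stand-in chosen because it ships with Python, and the `orders` table and its rows are made up. The `?` parameter placeholders are specific to this driver, but the statements themselves are the kind of core SQL that carries over between engines.

```python
import sqlite3

# In-memory SQLite database; the table and data are made up for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (customer TEXT NOT NULL, amount INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30), ("bob", 20), ("alice", 12)],
)

# Plain SELECT ... GROUP BY ... ORDER BY: total amount per customer.
totals = conn.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM orders GROUP BY customer ORDER BY total DESC"
).fetchall()
conn.close()
```

Here `totals` ends up as `[('alice', 42), ('bob', 20)]`. Only the connection code would need to change to run the same query against another database.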

Most programmers prefer learning the newest, most cutting-edge technology, but that's a risky gamble. Once upon a time Silverlight was a cutting-edge technology, and more than one of my contemporaries went all-in on it.

Conversely, most programmers find old stuff boring. It turns out, though, that it may be worthwhile learning some old technologies like SQL. Be aware of the Lindy effect. If it's been around for a long time, it's likely to still be around for a long time. This is true for the next example as well.

HTTP #

The HTTP protocol has been around since 1991. It's an effectively text-based protocol, and you can easily engage with a web server on a near-protocol level. This is true for other older protocols as well.

In my first IT job in the late 1990s, one of my tasks was to set up and maintain Exchange Servers. It was also my responsibility to make sure that email could flow not only within the organization, but that we could exchange email with the rest of the internet. In order to test my mail servers, I would often just telnet into them on port 25 and type in the correct, text-based instructions to send a test email.

Granted, it's not that easy to telnet into a modern web server on port 80, but a ubiquitous tool like curl accomplishes the same goal. I recently wrote how knowing curl is better than knowing Postman. While this wasn't meant as an attack on Postman specifically, neither was it meant as a facile claim that curl is the only tool useful for ad-hoc interaction with HTTP-based APIs. Sometimes you only realize an underlying truth when you write about a thing and then other people find fault with your argument. The underlying truth, I think, is that it pays to understand HTTP and to be able to engage with an HTTP-based web service at that level of abstraction.

Preferably in an automatable way.
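To see how text-based the protocol is, the following sketch writes an HTTP request by hand over a plain socket, much like typing into a telnet session. So that it's self-contained, it talks to a throwaway server on localhost. The names `HelloHandler`, `raw_get`, and `demo` are assumptions made for this example, not part of any real API.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """A tiny local server so the example needs no external network."""

    def do_GET(self):
        body = b"hello\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def raw_get(host, port, path="/"):
    """Send a hand-written GET request and return the raw response text."""
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )
    with socket.create_connection((host, port)) as conn:
        conn.sendall(request.encode("ascii"))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:
                break  # the server closed the connection
            chunks.append(data)
    return b"".join(chunks).decode("iso-8859-1")


def demo():
    """Start the local server on a free port, query it, and shut it down."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        return raw_get("127.0.0.1", server.server_address[1])
    finally:
        server.shutdown()
        server.server_close()
```

The string returned by `demo()` is the response exactly as it travelled over the wire: a status line, some headers, a blank line, and the body.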

Shells and scripting #

The reason I favour curl over other tools to interact with HTTP is that I already spend quite a bit of time at the command line. I typically have a little handful of terminal windows open on my laptop. If I need to test an HTTP server, curl is already available.

Many years ago, an employer introduced me to Git. Back then, there were no good graphical tools to interact with Git, so I had to learn to use it from the command line. I'm eternally grateful that it turned out that way. I still use Git from the command line.

When you install Git, by default you also install Git Bash. Since I was already using that shell to interact with Git, it began to dawn on me that it's a full-fledged shell, and that I could do all sorts of other things with it. It also struck me that learning Bash would be a better investment of my time than learning PowerShell. At the time, there was no indication that PowerShell would ever be relevant outside of Windows, while Bash was already available on most systems. Even today, knowing Bash strikes me as more useful than knowing PowerShell.

It's not that I do much Bash-scripting, but I could. Since I'm a programmer, if I need to automate something, I naturally reach for something more robust than shell scripting. Still, it gives me confidence to know that, since I already know Bash, Git, curl, etc., I could automate some tasks if I needed to.

Many a reader will probably complain that the Git CLI has horrible developer experience, but I will, again, postulate that it's not that bad. It helps if you understand some fundamentals.

Algorithms and data structures #

Git really isn't that difficult to understand once you realize that a Git repository is just a directed acyclic graph (DAG), and that branches are just labels that point to nodes in the graph. There are basic data structures that it's just useful to know. DAGs, trees, graphs in general, adjacency lists or adjacency matrices.
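A toy model may make this concrete. The sketch below is not how Git stores anything internally; it only assumes that each commit knows its parents and that a branch is a label pointing at one commit. The commit ids and branch names are made up.

```python
# Each commit maps to the list of its parents; a merge commit has two.
# The commit ids and branch names are made up for illustration.
COMMITS = {
    "a1": [],
    "b2": ["a1"],
    "c3": ["b2"],
    "d4": ["b2"],
    "e5": ["c3", "d4"],  # merge commit
}

# A branch is only a label that points at one commit.
BRANCHES = {"main": "e5", "feature": "d4"}


def history(branch):
    """Return every commit reachable from the branch tip, tip first."""
    seen = []
    pending = [BRANCHES[branch]]
    while pending:
        commit = pending.pop()
        if commit not in seen:
            seen.append(commit)
            pending.extend(COMMITS[commit])
    return seen
```

In this model, 'creating a branch' is adding one entry to `BRANCHES`, and a branch's history is whatever you can reach by following parent links from its label.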

Knowing that such data structures exist is, however, not that useful if you don't know what you can do with them. If you have a graph, you can find a minimum spanning tree or a shortest-path tree, which sometimes turn out to be useful. Adjacency lists or matrices give you ways to represent graphs in code, which is why they are useful.

Contrary to certain infamous interview practices, you don't need to know these algorithms by heart. It's usually enough to know that they exist. I can't remember Dijkstra's algorithm off the top of my head, but if I encounter a problem where I need to find the shortest path, I can look it up.
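If you do look it up, the result might resemble the following sketch of Dijkstra's algorithm over an adjacency list. The graph and the function name `shortest_distances` are made up for illustration, and the sketch assumes non-negative edge weights.

```python
import heapq

# Adjacency list: each node maps to (neighbour, edge weight) pairs.
# The graph is made up for illustration.
GRAPH = {
    "A": [("B", 7), ("C", 2)],
    "B": [("D", 1)],
    "C": [("B", 3), ("D", 8)],
    "D": [],
}


def shortest_distances(graph, source):
    """Dijkstra's algorithm for non-negative edge weights."""
    distances = {source: 0}
    queue = [(0, source)]
    while queue:
        distance, node = heapq.heappop(queue)
        if distance > distances.get(node, float("inf")):
            continue  # stale queue entry; a shorter path was already found
        for neighbour, weight in graph[node]:
            candidate = distance + weight
            if candidate < distances.get(neighbour, float("inf")):
                distances[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return distances
```

For this graph, `shortest_distances(GRAPH, "A")` finds that the cheapest route to `B` goes via `C` (cost 5 rather than 7), and that `D` is reachable at cost 6.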

Or, if presented with the problem of constructing current state from an Event Store, you may realize that it's just a left fold over a linked list. (This isn't my own realization; I first heard it from Greg Young in 2011.)

Now we're back at one of the first examples, that of FP knowledge. A list fold is its catamorphism. Again, these things are much easier to learn if you already know some fundamentals.
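Here's a minimal sketch of that idea in Python, where `functools.reduce` plays the role of the left fold. The bank-account events and the `apply_event` function are hypothetical, chosen only to keep the example short.

```python
from functools import reduce

# Hypothetical events for a bank account, oldest first.
EVENTS = [
    ("deposited", 100),
    ("withdrew", 30),
    ("deposited", 5),
]


def apply_event(balance, event):
    """Fold step: combine the state so far with the next event."""
    kind, amount = event
    if kind == "deposited":
        return balance + amount
    if kind == "withdrew":
        return balance - amount
    raise ValueError(f"Unknown event: {kind}")


# reduce is a left fold: it starts from 0 and applies each event in order.
current_balance = reduce(apply_event, EVENTS, 0)  # 75
```

The initial state (`0`) and the step function are all you supply; the fold takes care of the traversal.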

What to learn #

These examples may seem overwhelming. Do you really need to know all of that before things become easier?

No, that's not the point. I didn't start out knowing all these things, and some of them, I'm still not very good at. The point is rather that if you're wondering how to invest your limited time so that you can remain up to date, consider pursuing general-purpose knowledge rather than learning a specific technology.

Of course, if your employer asks you to use a particular library or programming language, you need to study that, if you're not already good at it. If, on the other hand, you decide to better yourself, you can choose what to learn next.

Ultimately, if you're learning for your own sake, the most important criterion may be: Choose something that interests you. If no-one forces you to study, it's too easy to give up if you lose interest.

If, however, you have the choice between learning Noun.js or design patterns, may I suggest the latter?

For life #

When are you done, you ask?

Never. There's more stuff than you can learn in a lifetime. I've met a lot of programmers who finally give up on the grind to keep up, and instead become managers.

As if there's nothing to learn when you're a manager. I'm fortunate that, before I went solo, I mainly had good managers. I'm under no illusion that they automatically became good managers. All I've heard said about management is that there's a lot to learn in that field, too. Really, it'd be surprising if that wasn't the case.

I can understand, however, how continually learning the next library, the next framework, the next tool becomes tiring. As I've already outlined, I hit that wall more than a decade ago.

On the other hand, there are so many wonderful fundamentals that you can learn. You can do self-study, or you can enrol in a more formal programme if you have the opportunity. I'm currently following a course on compiler design. It's not that I expect to pivot to writing compilers for the rest of my career, but rather,

- "It is considered a topic that you should know in order to be "well-cultured" in computer science.

- "A good craftsman should know his tools, and compilers are important tools for programmers and computer scientists.

- "The techniques used for constructing a compiler are useful for other purposes as well.

- "There is a good chance that a programmer or computer scientist will need to write a compiler or interpreter for a domain-specific language."

That's good enough for me, and so far, I'm enjoying the course (although it's also hard work).

You may not find this particular topic interesting, but then hopefully you can find something else that you fancy. 3D rendering? Machine learning? Distributed systems architecture?

Conclusion #

Technology moves at a pace with which it's impossible to keep up. It's not just you who's falling behind. Everyone is. Even the best-paid GAMMA programmer knows next to nothing of all there is to know in the field. They may have superior skills in certain areas, but there will be so much other stuff that they don't know.

You may think of me as a thought leader if you will. If nothing else, I tend to be a prolific writer. Perhaps you even think I'm a good programmer. I should hope so. Who fancies themselves bad at something?

You should, however, have seen me struggle with C programming during a course on computer systems programming. There's a thing I'm happy if I never have to revisit.

You can't know it all. You can't keep up. But you can focus on learning the fundamentals. That tends to make it easier to learn specific technologies that build on those foundations.

Gratification

Some thoughts on developer experience.

Years ago, I was introduced to a concept called developer ergonomics. Despite the name, it's not about good chairs, standing desks, or multiple monitors. Rather, the concept was related to how easy it'd be for a developer to achieve a certain outcome. How easy is it to set up a new code base in a particular language? How much work is required to save a row in a database? How hard is it to read rows from a database and display the data on a web page? And so on.

These days, we tend to discuss developer experience rather than ergonomics, and that's probably a good thing. This term more immediately conveys what it's about.

I've recently had some discussions about developer experience (DevEx, DX) with one of my customers, and this has led me to reflect more explicitly on this topic than previously. Most of what I'm going to write here are opinions and beliefs that go back a long time, but apparently, it's only recently that these notions have congealed in my mind under the category name developer experience.

This article may look like your usual old-man-yells-at-cloud article, but I hope that I can avoid that. It's not the case that I yearn for some lost past where 'we' wrote Plankalkül in Edlin. That, in fact, sounds like a horrible developer experience.

The point, rather, is that most attractive things come with consequences. For anyone who has been reading this blog even once in a while, this should come as no surprise.

Instant gratification #

Fatty foods, cakes, and wine can be wonderful, but can be detrimental to your health if you overindulge. It can, however, be hard to resist a piece of chocolate, and even if we think that we shouldn't, we often fail to restrain ourselves. The temptation of instant gratification is simply too great.

There are other examples like this. The most obvious are the use of narcotics, lack of exercise, smoking, and dropping out of school. It may feel good in the moment, but can have long-term consequences.

Small children are notoriously bad at delaying gratification, and we often associate the ability to delay gratification with maturity. We all, however, give in from time to time. Food and wine are my weak spots, while I don't do drugs, and I didn't drop out of school.

It strikes me that we often talk about ideas related to developer experience in a way where we treat developers as children. To be fair, many developers also act like children. I don't know how many times I've heard something like, "I don't want to write tests/go through a code review/refactor! I just want to ship working code now!"

Fine, so do I.

Even if wine is bad for me, it makes life worth living. As the saying goes, even if you don't smoke, don't drink, exercise rigorously, eat healthily, don't do drugs, and don't engage in dangerous activities, you're not guaranteed to live until ninety, but you're guaranteed that it's going to feel that long.

Likewise, I'm aware that doing everything right can sometimes take so long that by the time we've deployed the software, it's too late. The point isn't to always or never do certain things, but rather to be aware of the consequences of our choices.

Developer experience #

I've no problem with aiming to make the experience of writing software as good as possible. Some developer-experience thought leaders talk about the importance of documentation, predictability, and timeliness. Neither do I mind that a development environment looks good, completes my words, or helps me refactor.

To return to the analogy of human vices, not everything that feels good is ultimately bad for you. While I do like wine and chocolate, I also love sushi, white asparagus, turbot, chanterelles, lumpfish roe caviar, true morels, Norway lobster, and various other foods that tend to be categorized as healthy.

A good IDE with refactoring support, statement completion, type information, test runner, etc. is certainly preferable to writing all code in Notepad.

That said, there's a certain kind of developer tooling and language features that strikes me as more akin to candy. These are typically tools and technologies that tend to demo well. Recent examples include OpenAPI, GitHub Copilot, C# top-level statements, code generation, and Postman. Not all of these are unequivocally bad, but they strike me as mostly aiming at immature developers.

The point of this article isn't to single out these particular products, standards, or language features, but on the other hand, in order to make a point, I do have to at least outline why I find them problematic. They're just examples, and I hope that by explaining what is on my mind, you can see the pattern and apply it elsewhere.

OpenAPI #

A standard like OpenAPI, for example, looks attractive because it automates or standardizes much work related to developing and maintaining REST APIs. Frameworks and tools that leverage that standard automatically create a machine-readable schema and contract, which can be used to generate client code. Furthermore, an OpenAPI-aware framework can also autogenerate an entire web-based graphical user interface, which developers can use for ad-hoc testing.

I've worked with clients who also published these OpenAPI user interfaces to their customers, so that it was easy to get started with the APIs. Easy onboarding.

Instant gratification.

What's the problem with this? There are clearly enough apparent benefits that I usually have a hard time talking my clients out of pursuing this strategy. What are the disadvantages? Essentially, OpenAPI locks you into level 2 APIs. No hypermedia controls, no smooth conneg-based versioning, no HATEOAS. In fact, most of what makes REST flexible is lost. What remains is an ad-hoc, informally-specified, bug-ridden, slow implementation of half of SOAP.
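To make 'hypermedia controls' a little more concrete: in a level 3 API (in the terms of the Richardson Maturity Model), a client follows links that the server includes in each response, instead of assembling URLs from a published template. The following is only a sketch of that idea. The representation, the `rel` names, the URLs, and the `find_link` helper are all invented for this illustration.

```python
# A hypothetical JSON representation with hypermedia controls (links).
# The rel names and URLs are invented for this illustration.
ORDER = {
    "id": 42,
    "status": "pending",
    "links": [
        {"rel": "self", "href": "https://example.com/orders/42"},
        {"rel": "cancel", "href": "https://example.com/orders/42/cancellation"},
    ],
}


def find_link(representation, rel):
    """Return the href for a relationship type, or None if it isn't offered."""
    for link in representation.get("links", []):
        if link["rel"] == rel:
            return link["href"]
    return None
```

A client written this way only depends on the relationship name `cancel`. The server remains free to change the URL structure, or to omit the link when cancellation is no longer possible.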

I've previously described my misgivings about Copilot, and while I actually still use it, I don't want to repeat all of that here. Let's move on to another example.

Top-level statements #

Among many other language features, C# 9 got top-level statements. This means that you don't need to write a Main method in a static class. Rather, you can have a single C# code file where you can immediately start executing code.

It's not that I consider this language feature particularly harmful, but it also solves what seems to me a non-problem. It demos well, though. If I understand the motivation right, the feature exists because 'modern' developers are used to languages like Python where you can, indeed, just create a .py file and start adding code statements.

In an attempt to make C# more attractive to such an audience, it, too, got that kind of developer experience enabled.

You may argue that this is a bid to remove some of the ceremony from the language, but I'm not convinced that this moves that needle much. The level of ceremony that a language like C# has is much deeper than that. That's not to target C# in particular. Java is similar, and don't even get me started on C or C++! Did anyone say header files?

Do 'modern' developers choose Python over C# because they can't be arsed to write a Main method? If that's the only reason, it strikes me as incredibly immature. I want instant gratification, and writing a Main method is just too much trouble!

If developers do, indeed, choose Python or JavaScript over C# and Java, I hope and believe that it's for other reasons.

This particular C# feature doesn't bother me, but I find it symptomatic of a kind of 'innovation' where language designers target instant gratification.

Postman #

Let's consider one more example. You may think that I'm now attacking a company that, for all I know, makes a decent product. I don't really care about that, though. What I do care about is the developer mentality that makes a particular tool so ubiquitous.

I've met web service developers who would be unable to interact with the HTTP APIs that they are themselves developing if they didn't have Postman. Likewise, there are innumerable questions on Stack Overflow where people ask about HTTP APIs and post screenshots of Postman sessions.

It's okay if you don't know how to interact with an HTTP API. After all, there's a first time for everything, and there was a time when I didn't know how to do this either. Apparently, however, it's easier to install an application with a graphical user interface than it is to use curl.

Do yourself a favour and learn curl instead of using Postman. Curl is a command-line tool, which means that you can use it for both ad-hoc experimentation and automation. It takes five to ten minutes to learn the basics. It's also free.

It still seems to me that many people are of a mind that it's easier to use Postman than to learn curl. Ultimately, I'd wager that for any task you do with some regularity, it's more productive to learn the text-based tool than the point-and-click tool. In a situation like this, I'd suggest that delayed gratification beats instant gratification.

CV-driven development #

It is, perhaps, easy to get the wrong impression from the above examples. I'm not pointing fingers at just any 'cool' new technology. There are techniques, languages, frameworks, and so on, which people pick up because they're exciting for other reasons. Often, such technologies solve real problems in their niches, but are then applied for the sole reason that people want to get on the bandwagon. Examples include Kubernetes, mocks, DI Containers, reflection, AOP, and microservices. All of these have legitimate applications, but we also hear about many examples where people use them just to use them.

That's a different problem from the one I'm discussing in this article. Usually, learning about such advanced techniques requires delaying gratification. There's nothing wrong with learning new skills, but part of that process is also gaining the understanding of when to apply the skill, and when not to. That's a different discussion.

Innovation is fine #

The point of this article isn't that every innovation is bad. Contrary to Charles Petzold, I don't really believe that Visual Studio rots the mind, although I once did publish an article that navigated the same waters.

Despite my misgivings, I haven't uninstalled GitHub Copilot, and I do enjoy many of the features in both Visual Studio (VS) and Visual Studio Code (VS Code). I also welcome and use many new language features in various languages.

I can certainly appreciate how an IDE makes many things easier. Every time I have to begin a new Haskell code base, I long for the hand-holding offered by Visual Studio when creating a new C# project.

And although I don't use the debugger much, the built-in debuggers in VS and VS Code sure beat GDB. It even works in Python!

There's even tooling that I wish for, but apparently never will get.

Simple made easy #

In Simple Made Easy Rich Hickey follows his usual look-up-a-word-in-the-dictionary-and-build-a-talk-around-the-definition style to contrast simple with easy. I find his distinction useful. A tool or technique that's close at hand is easy. This certainly includes many of the above instant-gratification examples.

An easy technique is not, however, necessarily simple. It may or may not be. Rich Hickey defines simple as the opposite of complex. Something that is complex is assembled from parts, whereas a simple thing is, ideally, single and indivisible. In practice, truly simple ideas and tools may not be available, and instead we may have to settle for things that are less complex than their alternatives.

Once you start looking for things that make simple things easy, you see them in many places. A big category that I personally favour contains all the language features and tools that make functional programming (FP) easier. FP tends to be simpler than object-oriented or procedural programming, because it explicitly distinguishes between and separates predictable code from unpredictable code. This does, however, in itself tend to make some programming tasks harder. How do you generate a random number? Look up the system time? Write a record to a database?

Several FP languages have special features that make even those difficult tasks easy. F# has computation expressions and Haskell has do notation.

Let's say you want to call a function that consumes a random number generator. In Haskell (as in .NET) random number generators are actually deterministic, as long as you give them the same seed. Generating a random seed, on the other hand, is non-deterministic, so has to happen in IO.

Without do notation, you could write the action like this:

    rndSelect :: Integral i => [a] -> i -> IO [a]
    rndSelect xs count = (\rnd -> rndGenSelect rnd xs count) <$> newStdGen

(The type annotation is optional.) While terse, this is hardly readable, and the developer experience also leaves something to be desired. Fortunately, however, you can rewrite this action with do notation, like this:

    rndSelect :: Integral i => [a] -> i -> IO [a]
    rndSelect xs count = do
      rnd <- newStdGen
      return $ rndGenSelect rnd xs count

Now we can clearly see that the action first creates the rnd random number generator and then passes it to rndGenSelect. That's what happened before, but it was buried in a lambda expression and Haskell's right-to-left causality. Most people would find the first version (without do notation) less readable, and more difficult to write.

Related to developer ergonomics, though, do notation makes the simple code (i.e. code that separates predictable code from unpredictable code) easy (that is, at hand).

F# computation expressions offer the same kind of syntactic sugar, making it easy to write simple code.

Delay gratification #

While it's possible to set up a development context in such a way that it nudges you to work in a way that's ultimately good for you, temptation is everywhere.

Not only may new language features, IDE functionality, or frameworks entice you to do something that may be disadvantageous in the long run. There may also be actions you don't take because it just feels better to move on.

Do you take the time to write good commit messages? Not just a single-line heading, but a proper message that explains your context and reasoning?

Most people I've observed working with source control 'just want to move on', and can't be bothered to write a useful commit message.

I hear about the same mindset when it comes to code reviews, particularly pull request reviews. Everyone 'just wants to write code', and no-one wants to review other people's code. Yet, in a shared code base, you have to live with the code that other people write. Why not review it so that you have a chance to decide what that shared code base should look like?

Delay your own gratification a bit, and reap the rewards later.

Conclusion #

The only goal I have with this article is to make you think about the consequences of new and innovative tools and frameworks. Particularly if they are immediately compelling, they may be empty calories. Consider if there may be disadvantages to adopting a new way of doing things.

Some tools and technologies give you instant gratification, but may be unhealthy in the long run. This is, like most other things, context-dependent. In the long run your company may no longer be around. Sometimes, it pays to deliberately do something that you know is bad, in order to reach a goal before your competition. That was the original technical debt metaphor.

Often, however, it pays to delay gratification. Learn curl instead of Postman. Learn to design proper REST APIs instead of relying on OpenAPI. If you need to write ad-hoc scripts, use a language suitable for that.

Conservative codomain conjecture

An API design heuristic.

For a while now, I've been wondering whether, in the language of Postel's law, one should favour being liberal in what one accepts over being conservative in what one sends. Yes, according to the design principle, a protocol or API should do both, but sometimes, you can't do that. Instead, you'll have to choose. I've recently reached the tentative conclusion that it may be a good idea to favour being conservative in what one sends.

Good API design explicitly considers contracts. What are the preconditions for invoking an operation? What are the postconditions? Are there any invariants? These questions are relevant far beyond object-oriented design. They are equally important in Functional Programming, as well as in service-oriented design.

If you have a type system at your disposal, you can often model pre- and postconditions as types. In practice, however, it frequently turns out that there's more than one way of doing that. You can model an additional precondition with an input type, but you can also model potential errors as a return type. Which option is best?

That's what this article is about, and my conjecture is that constraining the input type may be preferable, thus being conservative about what is returned.

An average example #

That's all quite abstract, so for the rest of this article, I'll discuss this kind of problem in the context of an example. We'll revisit the good old example of calculating an average value. This example, however, is only a placeholder for any kind of API design problem. This article is only superficially about designing an API for calculating an average. More generally, this is about API design. I like the average example because it's easy to follow, and it does exhibit some characteristics that you can hopefully extrapolate from.

In short, what is the contract of the following method?

    public static TimeSpan Average(this IEnumerable<TimeSpan> timeSpans)
    {
        var sum = TimeSpan.Zero;
        var count = 0;
        foreach (var ts in timeSpans)
        {
            sum += ts;
            count++;
        }
        return sum / count;
    }

What are the preconditions? What are the postconditions? Are there any invariants?

Before I answer these questions, I'll offer equivalent code in two other languages. Here it is in F#:

    let average (timeSpans : TimeSpan seq) =
        timeSpans
        |> Seq.averageBy (_.Ticks >> double)
        |> int64
        |> TimeSpan.FromTicks

And in Haskell:

    average :: (Fractional a, Foldable t) => t a -> a
    average xs = sum xs / fromIntegral (length xs)

These three examples have somewhat different implementations, but the same externally observable behaviour. What is the contract?

It seems straightforward: If you input a sequence of values, you get the average of all of those values. Are there any preconditions? Yes, the sequence can't be empty. Given an empty sequence, all three implementations throw an exception. (The Haskell version is a little more nuanced than that, but given an empty list of NominalDiffTime, it does throw an exception.)
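
To make that Haskell nuance concrete, here's a small sketch that only uses types from base. It assumes Rational as a stand-in for NominalDiffTime (which lives in the time package), and the names emptyDoubleIsNaN and emptyRationalThrows are hypothetical helpers that only exist for this illustration:

```haskell
import Control.Exception (ArithException, evaluate, try)

-- The same definition as in the article.
average :: (Fractional a, Foldable t) => t a -> a
average xs = sum xs / fromIntegral (length xs)

-- With Double, the empty case computes 0 / 0, which is NaN; nothing is thrown.
emptyDoubleIsNaN :: Bool
emptyDoubleIsNaN = isNaN (average ([] :: [Double]))

-- With an exact type such as Rational (an assumed stand-in for
-- NominalDiffTime), the same division throws an ArithException.
emptyRationalThrows :: IO Bool
emptyRationalThrows = do
  r <- try (evaluate (average ([] :: [Rational])))
         :: IO (Either ArithException Rational)
  return (either (const True) (const False) r)
```

Whether the empty case throws or quietly produces NaN thus depends on the number type, but in neither case do you get a meaningful average, so the precondition stands.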

Any other preconditions? At least one more: The sequence must be finite. All three functions allow infinite streams as input, but if given one, they will fail to return an average.

Are there any postconditions? I can only think of a statement that relates to the preconditions: If the preconditions are fulfilled, the functions will return the correct average value (within the precision allowed by floating-point calculations).

All of this, however, is just warming up. We've been over this ground before.

Modelling contracts #

Keep in mind that this average function is just an example. Think of it as a stand-in for a procedure that's much more complicated. Think of the most complicated operation in your code base.

Not only do real code bases have many complicated operations. Each comes with its own contract, different from the other operations, and if the team isn't explicitly thinking in terms of contracts, these contracts may change over time, as the team adds new features and fixes bugs.

It's difficult work to keep track of all those contracts. As I argue in Code That Fits in Your Head, it helps if you can automate away some of that work. One way is having good test coverage. Another is to leverage a static type system, if you're fortunate enough to work in a language that has one. As I've also already covered, you can't replace all rules with types, but it doesn't mean that using the type system is ineffectual. Quite the contrary. Every part of a contract that you can offload to the type system frees up your brain to think about something else - something more important, hopefully.

Sometimes there's no good way to model a precondition with a type, or perhaps it's just too awkward. At other times, there's really only a single way to address a concern. When it comes to the precondition that you can't pass an infinite sequence to the average function, change the type so that it takes some finite collection instead. That's not what this article is about, though.

Assuming that you've already dealt with the infinite-sequence issue, how do you address the other precondition?

Error-handling #

A typical object-oriented move is to introduce a Guard Clause:

    public static TimeSpan Average(this IReadOnlyCollection<TimeSpan> timeSpans)
    {
        if (!timeSpans.Any())
            throw new ArgumentOutOfRangeException(
                nameof(timeSpans),
                "Can't calculate the average of an empty collection.");

        var sum = TimeSpan.Zero;
        foreach (var ts in timeSpans)
            sum += ts;
        return sum / timeSpans.Count;
    }

You could do the same in F#:

    let average (timeSpans : TimeSpan seq) =
        if Seq.isEmpty timeSpans then
            raise (
                ArgumentOutOfRangeException(
                    nameof timeSpans,
                    "Can't calculate the average of an empty collection."))

        timeSpans
        |> Seq.averageBy (_.Ticks >> double)
        |> int64
        |> TimeSpan.FromTicks

You could also replicate such behaviour in Haskell, but it'd be highly unidiomatic. Instead, I'd rather discuss one idiomatic solution in Haskell, and then back-port it.

While you can throw exceptions in Haskell, you typically handle predictable errors with a sum type. Here's a version of the Haskell function equivalent to the above C# code:

    average :: (Foldable t, Fractional a) => t a -> Either String a
    average xs =
      if null xs
        then Left "Can't calculate the average of an empty collection."
        else Right $ sum xs / fromIntegral (length xs)

For the readers that don't know the Haskell base library by heart, null is a predicate that checks whether or not a collection is empty. It has nothing to do with null pointers.

This variation returns an Either value. In practice you shouldn't just return a String as the error value, but rather a strongly-typed value that other code can deal with in a robust manner.
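
As a minimal sketch of what such a strongly-typed error could look like, consider the following variation. The AverageError type and the describe function are hypothetical names introduced here for illustration; they aren't part of the original example:

```haskell
-- AverageError is a hypothetical error type, introduced for illustration.
-- It enumerates the error conditions that the function can detect.
data AverageError = EmptyCollection
  deriving (Eq, Show)

average :: (Foldable t, Fractional a) => t a -> Either AverageError a
average xs =
  if null xs
    then Left EmptyCollection
    else Right $ sum xs / fromIntegral (length xs)

-- A caller can pattern-match on the error case instead of parsing a message.
describe :: Either AverageError Double -> String
describe (Left EmptyCollection) = "no data"
describe (Right x) = "average: " ++ show x
```

With a dedicated error type, the compiler can warn about unhandled cases if you later add more constructors, which a String can't offer.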

On the other hand, in this particular example, there's really only one error condition that the function is able to detect, so you often see a variation where instead of a single error message, such a function just doesn't return anything:

    average :: (Foldable t, Fractional a) => t a -> Maybe a
    average xs =
      if null xs then Nothing else Just $ sum xs / fromIntegral (length xs)

This iteration of the function returns a Maybe value, indicating that a return value may or may not be present.

Liberal domain #

We can back-port this design to F#, where I'd also consider it idiomatic:

    let average (timeSpans : IReadOnlyCollection<TimeSpan>) =
        if timeSpans.Count = 0 then None else
            timeSpans
            |> Seq.averageBy (_.Ticks >> double)
            |> int64
            |> TimeSpan.FromTicks
            |> Some

This version returns a TimeSpan option rather than just a TimeSpan. While this may seem to put the burden of error-handling on the caller, nothing has really changed. The fundamental situation is the same. Now the function is just being more explicit (more honest, you could say) about the pre- and postconditions. The type system also now insists that you deal with the possibility of error, rather than just hoping that the problem doesn't occur.

In C# you can expand the codomain by returning a nullable TimeSpan value, but such an option may not always be available at the language level. Keep in mind that the Average method is just an example standing in for something that may be more complicated. If the original return type is a reference type rather than a value type, only recent versions of C# allow statically-checked nullable reference types. What if you're working in an older version of C#, or another language that doesn't have that feature?

In that case, you may need to introduce an explicit Maybe class and return that:

    public static Maybe<TimeSpan> Average(this IReadOnlyCollection<TimeSpan> timeSpans)
    {
        if (timeSpans.Count == 0)
            return new Maybe<TimeSpan>();

        var sum = TimeSpan.Zero;
        foreach (var ts in timeSpans)
            sum += ts;
        return new Maybe<TimeSpan>(sum / timeSpans.Count);
    }

Two things are going on here; one is obvious while the other is more subtle. Clearly, all of these alternatives change the static type of the function in order to make the pre- and postconditions more explicit. So far, they've all been loosening the codomain (the return type). This suggests a connection with Postel's law: be conservative in what you send, be liberal in what you accept. These variations are all liberal in what they accept, but it seems that the API design pays the price by also having to widen the set of possible return values. In other words, such designs aren't conservative in what they send.

Do we have other options?

Conservative codomain #

Is it possible to instead design the API in such a way that it's conservative in what it returns? Ideally, we'd like it to guarantee that it returns a number. This is possible by making the preconditions even more explicit. I've also covered that alternative already, so I'm just going to repeat the C# code here without further comments:

    public static TimeSpan Average(this NotEmptyCollection<TimeSpan> timeSpans)
    {
        var sum = timeSpans.Head;
        foreach (var ts in timeSpans.Tail)
            sum += ts;
        return sum / timeSpans.Count;
    }

This variation promotes another precondition to a type. The precondition that the input collection mustn't be empty can be explicitly modelled with a type. This enables us to be conservative about the codomain. The method now guarantees that it will return a value.

This idea is also easily ported to F#:

    type NonEmpty<'a> = { Head : 'a; Tail : IReadOnlyCollection<'a> }

    let average (timeSpans : NonEmpty<TimeSpan>) =
        [ timeSpans.Head ] @ List.ofSeq timeSpans.Tail
        |> List.averageBy (_.Ticks >> double)
        |> int64
        |> TimeSpan.FromTicks

The average function now takes a NonEmpty collection as input, and always returns a proper TimeSpan value.

Haskell already comes with a built-in NonEmpty collection type, and while it oddly doesn't come with an average function, it's easy enough to write:

    import qualified Data.List.NonEmpty as NE

    average :: Fractional a => NE.NonEmpty a -> a
    average xs = sum xs / fromIntegral (NE.length xs)

You can find a recent example of using a variation of that function here.

Choosing between the two alternatives #

While Postel's law recommends having liberal domains and conservative codomains, in the case of the average API, we can't have both. If we design the API with a liberal input type, the output type has to be liberal as well. If we design with a restrictive input type, the output can be guaranteed. In my experience, you'll often find yourself in such a conundrum. The average API examined in this article is just an example, while the problem occurs often.

Given such a choice, what should you choose? Is it even possible to give general guidance on this sort of problem?

For decades, I considered such a choice a toss-up. After all, these solutions seem to be equivalent. Perhaps even isomorphic?

When I recently began to explore this isomorphism more closely, it dawned on me that there's a small asymmetry in the isomorphism that favours the conservative codomain option.

Isomorphism #

An isomorphism is a two-way translation between two representations. You can go back and forth between the two alternatives without loss of information.

Is this possible with the two alternatives outlined above? For example, if you have the conservative version, can you create the liberal alternative? Yes, you can:

    average' :: Fractional a => [a] -> Maybe a
    average' = fmap average . NE.nonEmpty

Not surprisingly, this is trivial in Haskell. If you have the conservative version, you can just map it over a more liberal input.

In F# it looks like this:

    module NonEmpty =
        let tryOfSeq xs =
            if Seq.isEmpty xs then None
            else Some { Head = Seq.head xs; Tail = Seq.tail xs |> List.ofSeq }

    let average' (timeSpans : IReadOnlyCollection<TimeSpan>) =
        NonEmpty.tryOfSeq timeSpans |> Option.map average

In C# we can create a liberal overload that calls the conservative method:

    public static TimeSpan? Average(this IReadOnlyCollection<TimeSpan> timeSpans)
    {
        if (timeSpans.Count == 0)
            return null;

        var arr = timeSpans.ToArray();
        return new NotEmptyCollection<TimeSpan>(arr[0], arr[1..]).Average();
    }

Here I just used a Guard Clause and explicit construction of the NotEmptyCollection. I could also have added a NotEmptyCollection.TryCreate method, like in the F# and Haskell examples, but I chose the above slightly more imperative style in order to demonstrate that my point isn't tightly coupled to the concept of functors, mapping, and other Functional Programming trappings.

These examples highlight how you can trivially make a conservative API look like a liberal API. Is it possible to go the other way? Can you make a liberal API look like a conservative API?

Yes and no.

Consider the liberal Haskell version of average, shown above; that's the one that returns Maybe a. Can you make a conservative function based on that?

    average' :: Fractional a => NE.NonEmpty a -> a
    average' xs = fromJust $ average xs

Yes, this is possible, but only by resorting to the partial function fromJust. I'll explain why that is a problem once we've covered examples in the two other languages, such as F#:

    let average' (timeSpans : NonEmpty<TimeSpan>) =
        [ timeSpans.Head ] @ List.ofSeq timeSpans.Tail |> average |> Option.get

In this variation, average is the liberal version shown above; the one that returns a TimeSpan option. In order to make a conservative version, the average' function can call the liberal average function, but has to resort to the partial function Option.get.

The same issue repeats a third time in C#:

    public static TimeSpan Average(this NotEmptyCollection<TimeSpan> timeSpans)
    {
        return timeSpans.ToList().Average().Value;
    }

This time, the partial function is the unsafe Value property, which throws an InvalidOperationException if there's no value.

This even violates Microsoft's own design guidelines:

"AVOID throwing exceptions from property getters."

I've cited Cwalina and Abrams as the authors, since this rule can be found in my 2006 edition of Framework Design Guidelines. This isn't a new insight.

While the two alternatives are 'isomorphic enough' that we can translate both ways, the translations are asymmetric in the sense that one is safe, while the other has to resort to an inherently unsafe operation to make it work.

Encapsulation #

I've called the operations fromJust, Option.get, and Value partial, and only just now used the word unsafe. You may protest that none of the three examples is unsafe in practice, since we know that the input is never empty. Thus, we know that the liberal function will always return a value, and therefore it's safe to call a partial function, even though these operations are unsafe in the general case.

While that's true, consider how the burden shifts. When you want to promote a conservative variant to a liberal variant, you can rely on all the operations being total. On the other hand, if you want to make a liberal variant look conservative, the onus is on you. None of the three type systems on display here can perform that analysis for you.

This may not be so bad when the example is as simple as taking the average of a collection of numbers, but does it scale? What if the operation you're invoking is much more complicated? Can you still be sure that you safely invoke a partial function on the return value?

As Code That Fits in Your Head argues, procedures quickly become so complicated that they no longer fit in your head. If you don't have well-described and patrolled contracts, you don't know what the postconditions are. You can't trust the return values from method calls, or even the state of the objects you passed as arguments. This tends to lead to defensive coding, where you write code that checks the state of everything all too often.

The remedy is, as always, good old encapsulation. In this case, check the preconditions at the beginning, and capture the result of that check in an object or type that is guaranteed to be always valid. This goes beyond making illegal states unrepresentable because it also works with predicative types. Once you're past the Guard Clauses, you don't have to check the preconditions again.
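
As a rough sketch of that idea, assume a scenario where raw input arrives as an ordinary list. The names parseReadings, spread, and report are hypothetical, chosen only for this illustration. The emptiness check happens once, at the boundary, and the NonEmpty type carries the result of that check through the rest of the code:

```haskell
import Data.List.NonEmpty (NonEmpty)
import qualified Data.List.NonEmpty as NE

-- Check the precondition once, at the boundary (hypothetical function).
parseReadings :: [Double] -> Either String (NonEmpty Double)
parseReadings xs = maybe (Left "No readings supplied.") Right (NE.nonEmpty xs)

-- Past the check, functions can be conservative: they always return a value.
average :: Fractional a => NonEmpty a -> a
average xs = sum xs / fromIntegral (NE.length xs)

-- maximum and minimum are safe here, because a NonEmpty is never empty.
spread :: NonEmpty Double -> Double
spread xs = maximum xs - minimum xs

-- The only place that has to deal with the possibility of empty input.
report :: [Double] -> String
report raw = case parseReadings raw of
  Left err -> err
  Right xs -> "average " ++ show (average xs) ++ ", spread " ++ show (spread xs)
```

Neither average nor spread needs a Guard Clause of its own, since the type already guarantees what parseReadings verified.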

This kind of thinking illustrates why you need a multidimensional view on API design. As useful as Postel's law sometimes is, it doesn't address all problems. In fact, it turned out to be unhelpful in this context, while another perspective proves more fruitful. Encapsulation is the art and craft of designing APIs in such a way that they suggest or even compel correct interactions. The more I think of this, the more it strikes me that a ranking is implied: Preconditions are more important than postconditions, because if the preconditions are unfulfilled, you can't trust the postconditions, either.

Mapping #

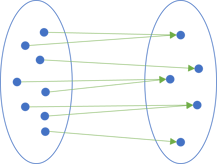

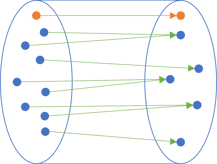

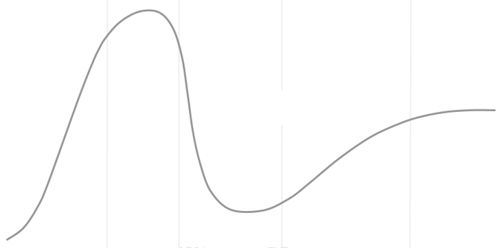

What's going on here? One perspective is to view types as sets. In the average example, the function maps from one set to another:

Which sets are they? We can think of the average function as a mapping from the set of non-empty collections of numbers to the set of real numbers. In programming, we can't represent real numbers, so instead, the left set is going to be the set of all the non-empty collections the computer or the language can represent and hold in (virtual) memory, and the right-hand set is the set of all the possible numbers of whichever type you'd like (32-bit signed integers, 64-bit floating-point numbers, 8-bit unsigned integers, etc.).

In reality, the left-hand set is much larger than the set to the right.

Drawing all those arrows quickly becomes awkward, so instead, we may draw each mapping as a pipe. Such a pipe also corresponds to a function. Here's an intermediate step in such a representation:

One common element is, however, missing from the left set. Which one?

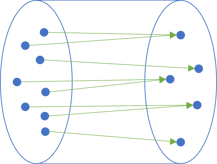

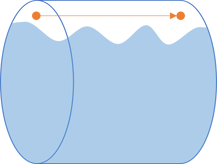

Pipes #

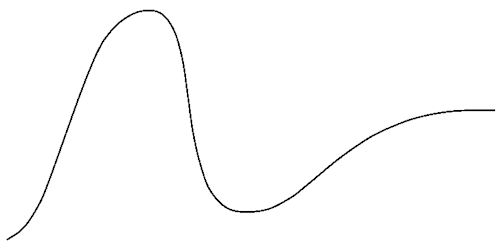

The above mapping corresponds to the conservative variation of the function. It's a total function that maps all values in the domain to a value in the codomain. It accomplishes this trick by explicitly constraining the domain to only those elements on which it's defined. Due to the preconditions, that excludes the empty collection, which is therefore absent from the left set.

What if we also want to allow the empty collection to be a valid input?

Unless we find ourselves in some special context where it makes sense to define a 'default average value', we can't map an empty collection to any meaningful number. Rather, we'll have to map it to some special value, such as Nothing, None, or null:

This extra pipe is free, because it's supplied by the Maybe functor's mapping (Select, map, fmap).

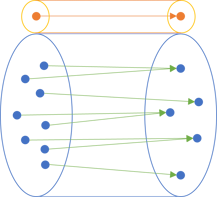

What happens if we need to go the other way? If the function is the liberal variant that also maps the empty collection to a special element that indicates a missing value?

In this case, it's much harder to disentangle the mappings. If you imagine that a liquid flows through the pipes, we can try to be careful and avoid 'filling up' the pipe.

The liquid represents the data that we do want to transmit through the pipe. As this illustration suggests, we now have to be careful that nothing goes wrong. In order to catch just the right outputs on the right side, you need to know how high the liquid may go, and attach a 'flat-top' pipe to it:

As this illustration tries to get across, this kind of composition is awkward and error-prone. What's worse is that you need to know how high the liquid is going to get on the right side. This depends on what actually goes on inside the pipe, and what kind of input goes into the left-hand side.

This is a metaphor. The longer the pipe is, the more difficult it gets to keep track of that knowledge. The stubby little pipe in these illustrations may correspond to the average function, which is an operation that easily fits in our heads. It's not too hard to keep track of the preconditions, and how they map to postconditions.

Thus, turning such a small liberal function into a conservative function is possible, but already awkward. If the operation is complicated, you can no longer keep track of all the details of how the inputs relate to the outputs.

Additive extensibility #

This really shouldn't surprise us. Most programming languages come with all sorts of facilities that enable extensibility: The ability to add more functionality, more behaviour, more capabilities, to existing building blocks. Conversely, few languages come with removability facilities. You can't, commonly, declare that an object is an instance of a class, except one method, or that a function is just like another function, except that it doesn't accept a particular subset of input.

This explains why we can safely make a conservative function liberal, but why it's difficult to make a liberal function conservative. This is because making a conservative function liberal adds functionality, while making a liberal function conservative attempts to remove functionality.

Conjecture #

All this leads me to the following conjecture: When faced with a choice between two versions of an API, where one has a liberal domain, and the other a conservative codomain, choose the design with the conservative codomain.

If you need the liberal version, you can create it from the conservative operation. The converse need not be true.
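
To illustrate that the safe direction doesn't depend on anything specific to averages, here's a small sketch of a general-purpose adapter in Haskell. The names liberalise, averageMaybe, and largest are hypothetical, made up for this example:

```haskell
import Data.List.NonEmpty (NonEmpty)
import qualified Data.List.NonEmpty as NE

-- Turn any conservative function on non-empty input into a liberal one.
-- Only total operations are involved: NE.nonEmpty and fmap.
liberalise :: (NonEmpty a -> b) -> [a] -> Maybe b
liberalise f = fmap f . NE.nonEmpty

-- The conservative average from the article.
average :: Fractional a => NonEmpty a -> a
average xs = sum xs / fromIntegral (NE.length xs)

-- Liberal variants come for free.
averageMaybe :: [Double] -> Maybe Double
averageMaybe = liberalise average

largest :: [Int] -> Maybe Int
largest = liberalise maximum
```

I'm not aware of a comparably total adapter that goes the other way; as shown earlier, that direction needs something like fromJust.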

Conclusion #

Postel's law encourages us to be liberal with what we accept, but conservative with what we return. This is a good design heuristic, but sometimes you're faced with mutually exclusive alternatives. If you're liberal with what you accept, you'll also need to be too loose with what you return, because there are input values that you can't handle. On the other hand, sometimes the only way to be conservative with the output is to also be restrictive when it comes to input.

Given two such alternatives, which one should you choose?

This article conjectures that you should choose the conservative alternative. This isn't a political statement, but simply a result of the conservative design being the smaller building block. From a small building block, you can compose something bigger, whereas from a bigger unit, you can't easily extract something smaller that's still robust and useful.

Service compatibility is determined based on policy

A reading of the fourth Don Box tenet, with some commentary.

This article is part of a series titled The four tenets of SOA revisited. In each of these articles, I'll pull one of Don Box's four tenets of service-oriented architecture (SOA) out of the original MSDN Magazine article and add some of my own commentary. If you're curious why I do that, I cover that in the introductory article.

In this article, I'll go over the fourth tenet, quoting from the MSDN Magazine article unless otherwise indicated.

Service compatibility is determined based on policy #

The fourth tenet is the forgotten one. I could rarely remember exactly what it included, but it does give me an opportunity to bring up a few points about compatibility. The article said:

Object-oriented designs often confuse structural compatibility with semantic compatibility. Service-orientation deals with these two axes separately. Structural compatibility is based on contract and schema and can be validated (if not enforced) by machine-based techniques (such as packet-sniffing, validating firewalls). Semantic compatibility is based on explicit statements of capabilities and requirements in the form of policy.

Every service advertises its capabilities and requirements in the form of a machine-readable policy expression. Policy expressions indicate which conditions and guarantees (called assertions) must hold true to enable the normal operation of the service. Policy assertions are identified by a stable and globally unique name whose meaning is consistent in time and space no matter which service the assertion is applied to. Policy assertions may also have parameters that qualify the exact interpretation of the assertion. Individual policy assertions are opaque to the system at large, which enables implementations to apply simple propositional logic to determine service compatibility.

As you can tell, this description is the shortest of the four. This is also the point where I begin to suspect that my reading of the third tenet may deviate from what Don Box originally had in mind.

This tenet is also the most baffling to me. As I understand it, the motivation behind the four tenets was to describe assumptions about the kind of systems that people would develop with Windows Communication Foundation (WCF), or SOAP in general.

While I worked with WCF for a decade, the above description doesn't ring a bell. Reading it now, the description of policy sounds more like a system such as clojure.spec, although that's not something I know much about either. I don't recall WCF ever having a machine-readable policy subsystem, and if it had, I never encountered it.

It does seem, however, as though what I interpret as contract, Don Box called policy.

Despite my confusion, the word compatibility is worth discussing, regardless of whether that was what Don Box meant. A well-designed service is one where you've explicitly considered forwards and backwards compatibility.

Versioning #

Planning for forwards and backwards compatibility does not imply that you're expected to be able to predict the future. It's fine if you have so much experience developing and maintaining online systems that you may have enough foresight to plan for certain likely changes that you may have to make in the future, but that's not what I have in mind.

Rather, what you should do is to have a system that enables you to detect breaking changes before you deploy them. Furthermore, you should have a strategy for how to deal with the perceived necessity to introduce breaking changes.

The most effective strategy that I know of is to employ explicit versioning, particularly message versioning. You can version an entire service as one indivisible block, but I often find it more useful to version at the message level. If you're designing a REST API, for example, you can take advantage of Content Negotiation.

If you like, you can use Semantic Versioning as a versioning scheme, but for services, the thing that mostly matters is the major version. Thus, you may simply label your messages with the version numbers 1, 2, etc.

If you already have a published service without explicit message version information, then you can still retrofit versioning afterwards. Imagine that your existing data looks like this:

{
  "singleTable": {
    "capacity": 16,
    "minimalReservation": 10
  }
}

This JSON document has no explicit version information, but you can interpret that as implying that the document has the 'default' version, which is always 1:

{
  "singleTable": {
    "version": 1,
    "capacity": 16,
    "minimalReservation": 10
  }
}

If you later realize that you need to make a breaking change, you can do that by increasing the (major) version:

{
  "singleTable": {
    "version": 2,
    "id": 12,
    "capacity": 16,
    "minimalReservation": 10
  }
}

Recipients can now look for the version property to learn how to interpret the rest of the message, and failing to find it, infer that this is version 1.
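A recipient could implement that rule along these lines. This is only a sketch: the function name `read_single_table` and the shape of the returned dictionary are assumptions made for illustration, not part of any real API.

```python
import json


def read_single_table(text):
    """Parse a singleTable document, treating a missing version as 1."""
    table = json.loads(text)["singleTable"]
    version = table.get("version", 1)  # No version property implies version 1.
    if version == 1:
        # Version 1 documents carry no id.
        return {"id": None,
                "capacity": table["capacity"],
                "minimalReservation": table["minimalReservation"]}
    if version == 2:
        return {"id": table["id"],
                "capacity": table["capacity"],
                "minimalReservation": table["minimalReservation"]}
    raise ValueError(f"Unsupported version: {version}")


legacy = '{"singleTable": {"capacity": 16, "minimalReservation": 10}}'
v2 = ('{"singleTable": {"version": 2, "id": 12, '
      '"capacity": 16, "minimalReservation": 10}}')

print(read_single_table(legacy))
print(read_single_table(v2))
```

Rejecting unknown versions outright is one design choice among several. A more lenient recipient might instead fall back to the newest version it understands.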

As Don Box wrote, in a service-oriented system, you can't just update all systems in a single coordinated release. Therefore, you must never break compatibility. Versioning enables you to move forward in a way that does break with the past, but without breaking existing clients.

Ultimately, you may attempt to retire old service versions, but be ready to keep them around for a long time.

For more of my thoughts about backwards compatibility, see Backwards compatibility as a profunctor.

Conclusion #

The fourth tenet is the most nebulous, and I wonder if it was ever implemented. If it was, I'm not aware of it. Even so, compatibility is an important component of service design, so I took the opportunity to write about that. In most cases, it pays to think explicitly about message versioning.

I have the impression that Don Box had something in mind more akin to what I call contract. Whether you call it one thing or another, it stands to reason that you often need to attach extra rules to simple types. The schema may define an input value as a number, but the service does require that this particular number is a natural number. Or that a string is really a proper encoding of a date. Perhaps you call that policy. I call it contract. In any case, clearly communicating such expectations is important for systems to be compatible.

Fitting a polynomial to a set of points

The story of a fiasco.

This is the second in a small series of articles titled Trying to fit the hype cycle. In the introduction, I've described the exercise I had in mind: Determining a formula, or at least a piecewise function, for the Gartner hype cycle. This, to be clear, is an entirely frivolous exercise with little practical application.

In the previous article, I extracted a set of (x, y) coordinates from a bitmap. In this article, I'll showcase my failed attempt at fitting the data to a polynomial.

Failure #

I've already revealed that I failed to accomplish what I set out to do. Why should you read on, then?

You don't have to, and I can't predict the many reasons my readers have for occasionally swinging by. Therefore, I can't tell you why you should keep reading, but I can tell you why I'm writing this article.

This blog is partly a collection of articles that I write because readers ask me interesting questions, and partly my personal research-and-development log. In that mode, I write about things that I've learned, and I write in order to learn. One can learn from failure as well as from success.

I'm not that connected to 'the' research community (if such a thing exists), but I'm getting the sense that there's a general tendency in academia that researchers rarely publish their negative results. This could be a problem, because this means that the rest of us never learn about the thousands of ways that don't work.

Additionally, in 'the' programming community, we also tend to boast of our victories and hide our failures. More than one podcast (sorry about the weasel words, but I don't remember which ones) has discussed how this gives young programmers the wrong impression of what programming is like. It is, indeed, a process of much trial and error, but usually, we only publish our polished, final result.

Well, I did manage to produce code to fit a polynomial to the Gartner hype cycle, but I never managed to get a good fit.

The big picture #

I realize that I have a habit of burying the lede when I write technical articles. I don't know if I've picked up that tendency from F#, which does demand that you define a value or function before you can use it. This, by the way, is a good feature.

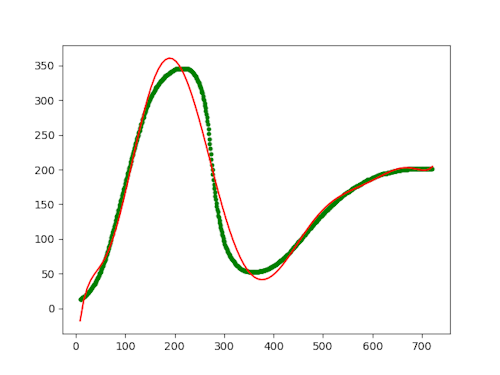

Here, I'll try to do it the other way around, and start with the big picture:

data = numpy.loadtxt('coords.txt', delimiter=',')
x = data[:, 0]
t = data[:, 1]
w = fit_polynomial(x, t, 9)
plot_fit(x, t, w)

This, by the way, is a Python script, and it opens with these imports:

import numpy
import matplotlib.pyplot as plt

The first line of code reads the CSV file into the data variable. The first column in that file contains all the x values, and the second column the y values. The book that I've been following uses t for the data, rather than y. (Now that I think about it, I believe that this may only be because it works from an example in which the data to be fitted are 100 m dash times, denoted t.)

Once the script has extracted the data, it calls the fit_polynomial function to produce a set of weights w. The constant 9 is the degree of polynomial to fit, although I think that I've made an off-by-one error so that the result is only an eighth-degree polynomial.

Finally, the code plots the original data together with the polynomial:

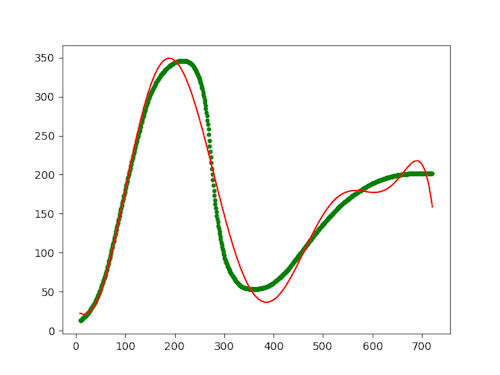

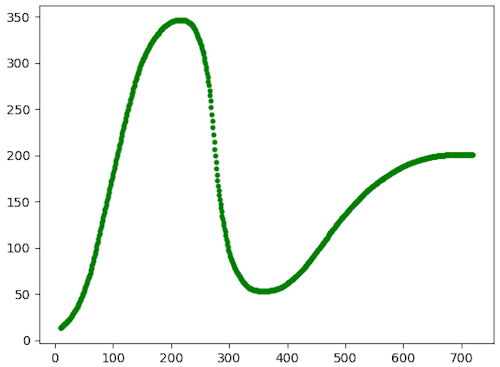

The green dots are the (x, y) coordinates that I extracted in the previous article, while the red curve is the fitted eighth-degree polynomial. Even though we're definitely in the realm of over-fitting, it doesn't reproduce the Gartner hype cycle.

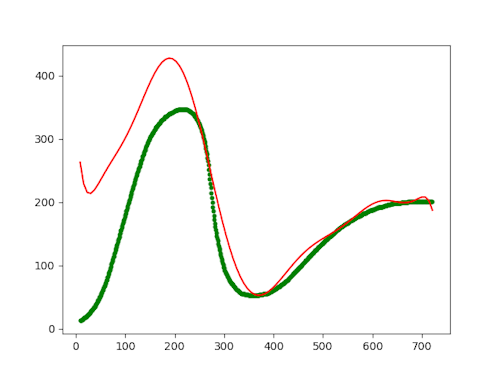

I've even arrived at the value 9 after some trial and error. After all, I wasn't trying to do any real science here, so over-fitting is definitely allowed. Even so, 9 seems to be the best fit I can achieve. With lower values, like 8, below, the curve deviates too much:

The value 10 looks much like 9, but above that (11), the curve completely disconnects from the data, it seems:

I'm not sure why it does this, to be honest. I would have thought that the more degrees you added, the more (over-)fitted the curve would be. Apparently, this is not so, or perhaps I made a mistake in my code.
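One possible explanation, offered as an assumption rather than something verified against this data set, is numerical. The matrix that the least-squares formula inverts tends to become badly conditioned as the degree grows, so an explicit inverse can lose most of its precision. The following sketch uses made-up x values, and numpy.vander in place of the expand function, to show how quickly the condition number grows. It also shows numpy.linalg.lstsq, which solves the same least-squares problem without forming the inverse.

```python
import numpy

# Hypothetical sample positions; not the coordinates from the article.
x = numpy.linspace(0, 10, 50)


def condition_number(degree):
    # Columns are x**0, x**1, ..., x**degree.
    X = numpy.vander(x, degree + 1, increasing=True)
    return numpy.linalg.cond(X.T @ X)


for degree in (3, 6, 9, 11):
    print(degree, condition_number(degree))

# lstsq solves the least-squares problem without explicitly inverting X.T @ X.
t = numpy.sin(x)  # Made-up target values.
X = numpy.vander(x, 10, increasing=True)
w, residuals, rank, singular_values = numpy.linalg.lstsq(X, t, rcond=None)
print(w)
```

Whether this accounts for the behaviour at degree 11 would have to be checked against the real coordinates. Rescaling x to a small interval before expanding it is another common way to keep the powers from growing too large.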

Calculating the weights #

The fit_polynomial function calculates the polynomial coefficients using a linear algebra formula that I've found in at least two textbooks. Numpy makes it easy to invert, transpose, and multiply matrices, so the formula itself is just a one-liner. Here it is in the entire context of the function, though:

def fit_polynomial(x, t, degree):
    """
    Fits a polynomial to the given data.

    Parameters
    ----------
    x : Array of shape [n_samples]
    t : Array of shape [n_samples]
    degree : degree of the polynomial

    Returns
    -------
    w : Array of shape [degree + 1]
    """

    # This expansion creates a matrix, so we name that with an upper-case letter
    # rather than a lower-case letter, which is used for vectors.
    X = expand(x.reshape((len(x), 1)), degree)

    return numpy.linalg.inv(X.T @ X) @ X.T @ t

This may look daunting, but is really just two lines of code. The rest is docstring and a comment.

The above-mentioned formula is the last line of code. The one before that expands the input data x from a simple one-dimensional array to a matrix of those values squared, cubed, etc. That's how you use the least squares method if you want to fit the data to a polynomial of arbitrary degree.
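As a quick sanity check of that formula, here's a small sketch that fits made-up data generated from a known quadratic. The data are an assumption for illustration only. The two functions are the ones from this article, repeated without docstrings so that the example is self-contained.

```python
import numpy


def expand(x, degree):
    # Columns are x**0, x**1, ..., x**degree.
    Xp = numpy.ones((len(x), 1))
    for i in range(1, degree + 1):
        Xp = numpy.hstack((Xp, numpy.power(x, i)))
    return Xp


def fit_polynomial(x, t, degree):
    X = expand(x.reshape((len(x), 1)), degree)
    return numpy.linalg.inv(X.T @ X) @ X.T @ t


# Made-up data generated from t = 1 + 2x + 3x^2.
x = numpy.array([0.0, 1.0, 2.0, 3.0, 4.0])
t = 1 + 2 * x + 3 * x ** 2

w = fit_polynomial(x, t, 2)
print(w)
```

Since the data lie exactly on a second-degree polynomial, the weights come out as approximately 1, 2, and 3, lowest power first.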

Expansion #

The expand function looks like this:

def expand(x, degree):
    """
    Expands the given array to polynomial elements of the given degree.

    Parameters
    ----------
    x : Array of shape [n_samples, 1]
    degree : degree of the polynomial

    Returns
    -------
    Xp : Array of shape [n_samples, degree + 1]
    """
    Xp = numpy.ones((len(x), 1))
    for i in range(1, degree + 1):
        Xp = numpy.hstack((Xp, numpy.power(x, i)))
    return Xp

The function begins by creating a column vector of ones, here illustrated with only three rows:

>>> Xp = numpy.ones((3, 1))
>>> Xp
array([[1.],
       [1.],
       [1.]])

It then proceeds to loop over as many degrees as you've asked it to, each time adding a column to the Xp matrix. Here's an example of doing that up to a power of three, on example input [1,2,3]:

>>> x = numpy.array([1,2,3]).reshape((3, 1))
>>> x
array([[1],
       [2],
       [3]])
>>> Xp = numpy.hstack((Xp, numpy.power(x, 1)))
>>> Xp
array([[1., 1.],
       [1., 2.],
       [1., 3.]])
>>> Xp = numpy.hstack((Xp, numpy.power(x, 2)))
>>> Xp
array([[1., 1., 1.],
       [1., 2., 4.],
       [1., 3., 9.]])
>>> Xp = numpy.hstack((Xp, numpy.power(x, 3)))
>>> Xp
array([[ 1.,  1.,  1.,  1.],
       [ 1.,  2.,  4.,  8.],
       [ 1.,  3.,  9., 27.]])

Once it's done looping, the expand function returns the resulting Xp matrix.
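The resulting matrix is what's usually called a Vandermonde matrix. As far as I can tell, NumPy's built-in numpy.vander function produces the same columns when called with increasing=True. The following sketch compares the two on the example input from above. The expand function is repeated here only to keep the example self-contained.

```python
import numpy


def expand(x, degree):
    # Columns are x**0, x**1, ..., x**degree.
    Xp = numpy.ones((len(x), 1))
    for i in range(1, degree + 1):
        Xp = numpy.hstack((Xp, numpy.power(x, i)))
    return Xp


x = numpy.array([1, 2, 3])

by_loop = expand(x.reshape((3, 1)), 3)
# vander takes the one-dimensional array and the number of columns.
by_vander = numpy.vander(x, 4, increasing=True)

print(numpy.allclose(by_loop, by_vander))
```

Note that numpy.vander takes the number of columns rather than the degree, so a degree of 3 corresponds to 4 columns.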

Plotting #

Finally, here's the plot_fit procedure: