ploeh blog danish software design

What's a sandwich?

Ultimately, it's more about programming than food.

The Sandwich was named after John Montagu, 4th Earl of Sandwich because of his fondness for this kind of food. As popular story has it, he found it practical because it enabled him to eat without greasing the cards he often played.

A few years ago, a corner of the internet erupted in good-natured discussion about exactly what constitutes a sandwich. For instance, is the Danish smørrebrød a sandwich? It comes in two incarnations: Højtbelagt, the luxury version which is only consumable with knife and fork, and the more modest, everyday håndmad (literally hand food), which, while open-faced, can usually be consumed without cutlery.

If we consider the 4th Earl of Sandwich's motivation as a yardstick, then the depicted højtbelagte smørrebrød is hardly a sandwich, while I believe a case can be made that a håndmad is:

Obviously, you need a different grip on a håndmad than on a sandwich. The bread (rugbrød) is much denser than wheat bread, and structurally more rigid. You eat it with your thumb and index finger on each side, and remaining fingers supporting it from below. The bottom line is this: A single piece of bread with something on top can also solve the original problem.

What if we go in the other direction? How about a combo consisting of bread, meat, bread, meat, and bread? I believe that I've seen burgers like that. Can you eat that with one hand? I think that this depends more on how greasy and overfilled it is, than on the structure.

What if you had five layers of meat and six layers of bread? This is unlikely to work with traditional Western leavened bread which, being a foam, will lose structural integrity when cut too thin. Imagining other kinds of bread, though, and thin slices of meat (or other 'content'), I don't see why it couldn't work.

FP sandwiches #

As regular readers may have picked up over the years, I do like food, but this is, after all, a programming blog.

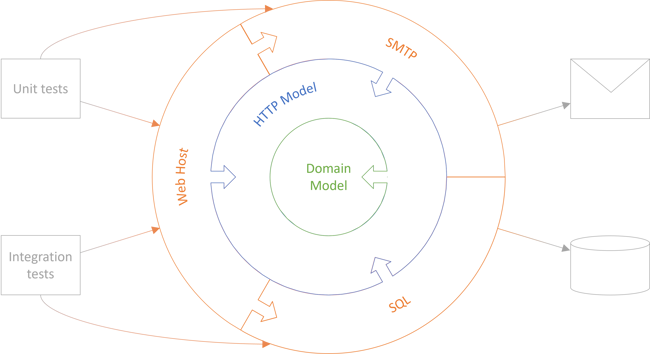

A few years ago I presented a functional-programming design pattern named Impureim sandwich. It argues that it's often beneficial to structure a code base according to the functional core, imperative shell architecture.

The idea, in a nutshell, is that at every entry point (Main method, message handler, Controller action, etcetera) you first perform all impure actions necessary to collect input data for a pure function, then you call that pure function (which may be composed of many smaller functions), and finally you perform one or more impure actions based on the function's return value. That's the impure-pure-impure sandwich.
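As a minimal sketch of that shape (not code from the original article; SandwichSketch, Handle, and the two delegates are hypothetical names, and reservations are reduced to plain quantities), the three phases might look like this in C#:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SandwichSketch
{
    // Pure: the decision depends only on the arguments.
    // Returns the quantity to save, or null if the request is rejected.
    public static int? TryAccept(
        int capacity,
        IReadOnlyCollection<int> reservedQuantities,
        int requested)
    {
        var reserved = reservedQuantities.Sum();
        return reserved + requested <= capacity ? requested : (int?)null;
    }

    // The sandwich: impure read, pure decision, impure write.
    // Both delegates are hypothetical stand-ins for real data access.
    public static bool Handle(
        Func<IReadOnlyCollection<int>> readReservations,
        Action<int> saveReservation,
        int capacity,
        int requested)
    {
        var existing = readReservations();                        // impure
        var decision = TryAccept(capacity, existing, requested);  // pure
        if (decision is int quantity)
        {
            saveReservation(quantity);                            // impure
            return true;
        }
        return false;
    }
}
```

Passing the impure operations as delegates is only a convenience that keeps the sketch self-contained. The point is the order of the phases, not the mechanism.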

My experience with this pattern is that it's surprisingly often possible to apply it. Not always, but more often than you think.

Sometimes, however, it demands a looser interpretation of the word sandwich.

Even the examples from the article aren't standard sandwiches, once you dissect them. Consider, first, the Haskell example, here recoloured:

    tryAcceptComposition :: Reservation -> IO (Maybe Int)
    tryAcceptComposition reservation = runMaybeT $
      liftIO (DB.readReservations connectionString $ date reservation)
      >>= MaybeT . return . flip (tryAccept 10) reservation
      >>= liftIO . DB.createReservation connectionString

The date function is a pure accessor that retrieves the date and time of the reservation. In C#, it's typically a read-only property:

    public async Task<IActionResult> Post(Reservation reservation)
    {
        return await Repository.ReadReservations(reservation.Date)
            .Select(rs => maîtreD.TryAccept(rs, reservation))
            .SelectMany(m => m.Traverse(Repository.Create))
            .Match(InternalServerError("Table unavailable"), Ok);
    }

Perhaps you don't think of a C# property as a function. After all, it's just an idiomatic grouping of language keywords:

public DateTimeOffset Date { get; }

Besides, a function takes input and returns output. What's the input in this case?

Keep in mind that a C# read-only property like this is only syntactic sugar for a getter method. In Java it would have been a method called getDate(). From Function isomorphisms we know that an instance method is isomorphic to a function that takes the object as input:

public static DateTimeOffset GetDate(Reservation reservation)

In other words, the Date property is an operation that takes the object itself as input and returns DateTimeOffset as output. The operation has no side effects, and will always return the same output for the same input. In other words, it's a pure function, and that's the reason I've now coloured it green in the above code examples.

The layering indicated by the examples may, however, be deceiving. The green colour of reservation.Date is adjacent to the green colour of the Select expression below it. You might interpret this as though the pure middle part of the sandwich partially expands to the upper impure phase.

That's not the case. The reservation.Date expression executes before Repository.ReadReservations, and only then does the pure Select expression execute. Perhaps this, then, is a more honest depiction of the sandwich:

    public async Task<IActionResult> Post(Reservation reservation)
    {
        var date = reservation.Date;
        return await Repository.ReadReservations(date)
            .Select(rs => maîtreD.TryAccept(rs, reservation))
            .SelectMany(m => m.Traverse(Repository.Create))
            .Match(InternalServerError("Table unavailable"), Ok);
    }

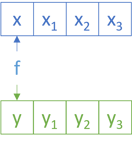

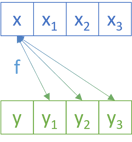

The corresponding 'sandwich diagram' looks like this:

If you want to interpret the word sandwich narrowly, this is no longer a sandwich, since there's 'content' on top. That's the reason I started this article discussing Danish smørrebrød, also sometimes called open-faced sandwiches. Granted, I've never seen a håndmad with two slices of bread with meat both between and on top. On the other hand, I don't think that having a smidgen of 'content' on top is a showstopper.

Initial and eventual purity #

Why is this important? Whether or not reservation.Date is a little light of purity in the otherwise impure first slice of the sandwich actually doesn't concern me that much. After all, my concern is mostly cognitive load, and there's hardly much gained by extracting the reservation.Date expression to a separate line, as I did above.

The reason this interests me is that in many cases, the first step you may take is to validate input, and validation is a composed set of pure functions. While pure, and a solved problem, validation may be a sufficiently significant step that it warrants explicit acknowledgement. It's not just a property getter, but complex enough that bugs could hide there.

Even if you follow the functional core, imperative shell architecture, you'll often find that the first step is pure validation.

Likewise, once you've performed impure actions in the second impure phase, you can easily have a final thin pure translation slice. In fact, the above C# example contains an example of just that:

    public IActionResult Ok(int value)
    {
        return new OkActionResult(value);
    }

    public IActionResult InternalServerError(string msg)
    {
        return new InternalServerErrorActionResult(msg);
    }

These are two tiny pure functions used as the final translation in the sandwich:

    public async Task<IActionResult> Post(Reservation reservation)
    {
        var date = reservation.Date;
        return await Repository.ReadReservations(date)
            .Select(rs => maîtreD.TryAccept(rs, reservation))
            .SelectMany(m => m.Traverse(Repository.Create))
            .Match(InternalServerError("Table unavailable"), Ok);
    }

On the other hand, I didn't want to paint the Match operation green, since it's essentially a continuation of a Task, and if we consider task asynchronous programming as an IO surrogate, we should, at least, regard it with scepticism. While it might be pure, it probably isn't.

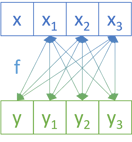

Still, we may be left with an inverted 'sandwich' that looks like this:

Can we still claim that this is a sandwich?

At the metaphor's limits #

This latest development seems to strain the sandwich metaphor. Can we maintain it, or does it fall apart?

What seems clear to me, at least, is that this ought to be the limit of how much we can stretch the allegory. If we add more tiers we get a Dagwood sandwich which is clearly a gimmick of little practicality.

But again, I'm appealing to a dubious metaphor, so instead, let's analyse what's going on.

In practice, it seems that you can rarely avoid the initial (pure) validation step. Why not? Couldn't you move validation to the functional core and do the impure steps without validation?

The short answer is no, because validation done right is actually parsing. At the entry point, you don't even know if the input makes sense.

A more realistic example is warranted, so I now turn to the example code base from my book Code That Fits in Your Head. One blog post shows how to implement applicative validation for posting a reservation.

A typical HTTP POST may include a JSON document like this:

    {
      "id": "bf4e84130dac451b9c94049da8ea8c17",
      "at": "2024-11-07T20:30",
      "email": "snomob@example.com",
      "name": "Snow Moe Beal",
      "quantity": 1
    }

In order to handle even such a simple request, the system has to perform a set of impure actions. One of them is to query its data store for existing reservations. After all, the restaurant may not have any remaining tables for that day.

Which day, you ask? I'm glad you asked. The data access API comes with this method:

    Task<IReadOnlyCollection<Reservation>> ReadReservations(
        int restaurantId, DateTime min, DateTime max);

You can supply min and max values to indicate the range of dates you need. How do you determine that range? You need the desired date of the reservation. In the above example it's 20:30 on November 7 2024. We're in luck, the data is there, and understandable.

Notice, however, that due to limitations of wire formats such as JSON, the date is a string. The value might be anything. If it's sufficiently malformed, you can't even perform the impure action of querying the database, because you don't know what to query it about.

To keep the sandwich metaphor untarnished, you might decide to push the parsing responsibility to an impure action, but why make something impure that has a well-known pure solution?
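To make the point concrete, here is a minimal sketch of such a pure parsing step. It isn't taken from the book's code base: ReservationParsing, TryParseAt, and DayRange are hypothetical names, the exact yyyy-MM-ddTHH:mm format is an assumption based on the JSON example above, and treating the range as the whole calendar day is likewise only an assumption about how min and max could be derived.

```csharp
using System;
using System.Globalization;

public static class ReservationParsing
{
    // Pure: the same string always yields the same result.
    // An exact format and the invariant culture avoid any dependence
    // on the machine's clock, culture, or time zone.
    public static DateTime? TryParseAt(string candidate)
    {
        return DateTime.TryParseExact(
            candidate,
            "yyyy-MM-dd'T'HH:mm",
            CultureInfo.InvariantCulture,
            DateTimeStyles.None,
            out var at)
            ? at
            : (DateTime?)null;
    }

    // Pure: the first and last instant of the calendar day containing 'at'.
    public static (DateTime min, DateTime max) DayRange(DateTime at)
    {
        var min = at.Date;
        var max = min.AddDays(1).AddTicks(-1);
        return (min, max);
    }
}
```

Only when TryParseAt returns a value does it make sense to compute the range and proceed to the impure ReadReservations query. Otherwise, the entry point can reject the request without touching the database.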

A similar argument applies when performing a final, pure translation step in the other direction.

So it seems that we're stuck with implementations that don't quite fit the ideal of the sandwich metaphor. Is that enough to abandon the metaphor, or should we keep it?

The layers in layered application architecture aren't really layers, and neither are vertical slices really slices. All models are wrong, but some are useful. This is the case here, I believe. You should still keep the Impureim sandwich in mind when structuring code: Keep impure actions at the application boundary - in the 'Controllers', if you will; have only two phases of impurity - the initial and the ultimate; and maximise use of pure functions for everything else. Keep most of the pure execution between the two impure phases, but realistically, you're going to need a pure validation phase in front, and a slim translation layer at the end.

Conclusion #

Despite the prevalence of food imagery, this article about functional programming architecture has avoided any mention of burritos. Instead, it examines the tension between an ideal, the Impureim sandwich, and real-world implementation details. When you have to deal with concerns such as input validation or translation to egress data, it's practical to add one or two more thin slices of purity.

In functional architecture you want to maximise the proportion of pure functions. Adding more pure code is hardly a problem.

The opposite is not the case. We shouldn't be cavalier about adding more impure slices to the sandwich. Thus, the adjusted definition of the Impureim sandwich seems to be that it may have at most two impure phases, but from one to three pure slices.

Dependency Whac-A-Mole

AKA Framework Whac-A-Mole, Library Whac-A-Mole.

I have now three times used the name Whac-A-Mole about a particular kind of relationship that may evolve with some dependencies. According to the rule of three, I can now extract the explanation to a separate article. This is that article.

Architecture smell #

Dependency Whac-A-Mole describes the situation when you're spending too much time investigating, learning, troubleshooting, and overall satisfying the needs of a dependency (i.e. library or framework) instead of delivering value to users.

Examples include Dependency Injection containers, object-relational mappers, validation frameworks, dynamic mock libraries, and perhaps the Gherkin language.

From the above list it does not follow that those examples are universally bad. I can think of situations where some of them make sense. I might even use them myself.

Rather, the Dependency Whac-A-Mole architecture smell occurs when a given dependency causes more trouble than the benefit it was supposed to provide.

Causes #

We rarely set out to do the wrong thing, but we often make mistakes in good faith. You may decide to take a dependency on a library or framework because

- it worked well for you in a previous context

- it looks as though it'll address a major problem you had in a previous context

- you've heard good things about it

- you saw a convincing demo

- you heard about it in a podcast, conference talk, YouTube video, etc.

- a FAANG company uses it

- it's the latest tech

- you want it on your CV

There could be other motivations as well, and granted, some of those I listed aren't really good reasons. Even so, I don't think anyone chooses a dependency with ill intent.

And what might work in one context may turn out to not work in another. You can't always predict such consequences, so I imply no judgement on those who choose the 'wrong' dependency. I've done it, too.

It is, however, important to be aware that this risk is always there. You picked a library with the best of intentions, but it turns out to slow you down. If so, acknowledge the mistake and kill your darlings.

Background #

Whenever you use a library or framework, you need to learn how to use it effectively. You have to learn its concepts, abstractions, APIs, pitfalls, etc. Not only that, but you need to stay abreast of changes and improvements.

Microsoft, for example, is usually good at maintaining backwards compatibility, but even so, things don't stand still. They evolve libraries and frameworks the same way I would do it: Don't introduce breaking changes, but do introduce new, better APIs going forward. This is essentially the Strangler pattern that I also write about in Code That Fits in Your Head.

While it's a good way to evolve a library or framework, the point remains: Even if you trust a supplier to prioritise backwards compatibility, it doesn't mean that you can stop learning. You have to stay up to date with all your dependencies. If you don't, sooner or later, the way that you use something like, say, Entity Framework is 'the old way', and it's not really supported any longer.

In order to be able to move forward, you'll have to rewrite those parts of your code that depend on that old way of doing things.

Each dependency comes with benefits and costs. As long as the benefits outweigh the costs, it makes sense to keep it around. If, on the other hand, you spend more time dealing with it than it would take you to do the work yourself, consider getting rid of it.

Symptoms #

Perhaps the infamous left-pad incident is too easy an example, but it does highlight the essence of this tension. Do you really need a third-party package to pad a string, or could you have done it yourself?

You can spend much time figuring out how to fit a general-purpose library or framework to your particular needs. How do you make your object-relational mapper (ORM) fit a special database schema? How do you annotate a class so that it produces validation messages according to the requirements in your jurisdiction? How do you configure an automatic mapping library so that it correctly projects data? How do you tell a Dependency Injection (DI) Container how to compose a Chain of Responsibility where some objects also take strings or integers in their constructors?

Do such libraries or frameworks save time, or could you have written the corresponding code quicker? To be clear, I'm not talking about writing your own ORM, your own DI Container, your own auto-mapper. Rather, instead of using a DI Container, Pure DI is likely easier. As an alternative to an ORM, what's the cost of just writing SQL? Instead of an ad-hoc, informally-specified, bug-ridden validation framework, have you considered applicative validation?
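As an illustration of the Chain of Responsibility question, here is a minimal Pure DI sketch. Everything in it is invented for the example: IShippingRule, the three rule classes, and CompositionRoot are hypothetical names, and the numbers are arbitrary. The point is only that constructors taking integers or decimals pose no special problem when you compose the graph with plain code.

```csharp
public interface IShippingRule
{
    decimal Cost(int weightInKg);
}

// First link: handles light parcels, otherwise delegates to the next link.
public sealed class FreeBelow : IShippingRule
{
    private readonly int limitInKg;
    private readonly IShippingRule next;

    public FreeBelow(int limitInKg, IShippingRule next)
    {
        this.limitInKg = limitInKg;
        this.next = next;
    }

    public decimal Cost(int weightInKg) =>
        weightInKg < limitInKg ? 0m : next.Cost(weightInKg);
}

// Second link: handles heavy parcels, otherwise delegates to the next link.
public sealed class HeavyRate : IShippingRule
{
    private readonly int thresholdInKg;
    private readonly decimal rate;
    private readonly IShippingRule next;

    public HeavyRate(int thresholdInKg, decimal rate, IShippingRule next)
    {
        this.thresholdInKg = thresholdInKg;
        this.rate = rate;
        this.next = next;
    }

    public decimal Cost(int weightInKg) =>
        weightInKg >= thresholdInKg ? rate : next.Cost(weightInKg);
}

// Terminal link: always answers.
public sealed class FlatRate : IShippingRule
{
    private readonly decimal rate;

    public FlatRate(decimal rate)
    {
        this.rate = rate;
    }

    public decimal Cost(int weightInKg) => rate;
}

public static class CompositionRoot
{
    // Pure DI: the object graph is composed with 'new', so primitive
    // constructor arguments are just ordinary arguments.
    public static IShippingRule Compose() =>
        new FreeBelow(1,
            new HeavyRate(20, 50m,
                new FlatRate(10m)));
}
```

If you rename a constructor parameter or change the order of the links, the compiler tells you right away. No container configuration is involved.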

Things become really insidious if your chosen library never really solves all problems. Every time you figure out how to use it for one exotic corner case, your 'solution' causes a new problem to arise.

A symptom of Dependency Whac-A-Mole is when you have to advertise for people skilled in a particular technology.

Again, it's not necessarily a problem. If you're getting tremendous value out of, say, Entity Framework, it makes sense to list expertise as a job requirement. If, on the other hand, you have to list a litany of libraries and frameworks as necessary skills, it might pay to stop and reconsider. You can call it your 'tech stack' all you will, but is it really an inadvertent case of vendor lock-in?

Anecdotal evidence #

I've used the term Whac-A-Mole a couple of times to describe the kind of situation where you feel that you're fighting a technology more than it's helping you. It seems to resonate with people other than me.

Here are the original articles where I used the term:

These are only the articles where I explicitly use the term. I do, however, think that the phenomenon is more common. I'm particularly sensitive to it when it comes to Dependency Injection, where I generally believe that DI Containers make the technique harder than it has to be. Composing object graphs is easily done with code.

Conclusion #

Sometimes a framework or library makes it more difficult to get things done. You spend much time kowtowing to its needs, researching how to do things 'the xyz way', learning its intricate extensibility points, keeping up to date with its evolving API, and engaging with its community to lobby for new features.

Still, you feel that it makes you compromise. You might have liked to organise your code in a different way, but unfortunately you can't, because it doesn't fit the way the dependency works. As you solve issues with it, new ones appear.

These are symptoms of Dependency Whac-A-Mole, an architecture smell that indicates that you're using the wrong tool for the job. If so, get rid of the dependency in favour of something better. Often, the better alternative is just plain vanilla code.

Comments

The most obvious example of this for me is definitely AutoMapper. I used to think it was great and saved so much time, but more often than not, the mapping configuration ended up being more complex (and fragile) than just mapping the properties manually.

I could imagine. AutoMapper is not, however, a library I've used enough to evaluate.

The moment I lost any faith in AutoMapper was after trying to debug a mapping that was silently failing on a single property. Three of us were looking at it for a good amount of time before one of us noticed a single character typo on the destination property. As the names did not match, no mapping occurred. It is unfortunately a black box, and obfuscated a problem that a manual mapping would have handled gracefully.

Mark, it is interesting that you mention Gherkin as potentially one of these moles. It is something I've been evaluating in the hopes of making our tests more business focused, but considering it again now, you can achieve a lot of what Gherkin offers with well defined namespaces, classes and methods in your test assemblies, something like:

- Namespace: GivenSomePrecondition

- TestClass: WhenCarryingOutAnAction

- TestMethod: ThenTheExpectedPostConditionResults

Callum, I was expecting someone to comment on including Gherkin on the list.

I don't consider all my examples as universally problematic. Rather, they often pop up in contexts where people seem to be struggling with a concept or a piece of technology with no apparent benefit.

I'm sure that when Dan North came up with the idea of BDD and Gherkin, he actually used it. When used in the way it was originally intended, I can see it providing value.

Apart from Dan himself, however, I'm not aware that I've ever met anyone who has used BDD and Gherkin in that way. On the contrary, I've had more than one discussion that went like this:

Interlocutor: "We use BDD and Gherkin. It's great! You should try it."

Me: "Why?"

Interlocutor: "It enables us to organise our tests."

Me: "Can't you do that with the AAA pattern?"

Interlocutor: "..."

Me: "Do any non-programmers ever look at your tests?"

Interlocutor: "No..."

If only programmers look at the test code, then why impose an artificial constraint? Given-when-then is just arrange-act-assert with different names, but free of Gherkin and the tooling that typically comes with it, you're free to write test code that follows normal good coding practices.

(As an aside, yes: Sometimes constraints liberate, but what I've seen of Gherkin-based test code, this doesn't seem to be one of those cases.)

Finally, to be quite clear, although I may be repeating myself: If you're using Gherkin to interact with non-programmers on a regular basis, it may be beneficial. I've just never been in that situation, or met anyone other than Dan North who has.

The case of the mysterious comparison

A ploeh mystery.

I was recently playing around with the example code from my book Code That Fits in Your Head, refactoring the Table class to use a predicative NaturalNumber wrapper to represent a table's seating capacity.

Originally, the Table constructor and corresponding read-only data looked like this:

    private readonly bool isStandard;
    private readonly Reservation[] reservations;

    public int Capacity { get; }

    private Table(bool isStandard, int capacity, params Reservation[] reservations)
    {
        this.isStandard = isStandard;
        Capacity = capacity;
        this.reservations = reservations;
    }

Since I wanted to show an example of how wrapper types can help make preconditions explicit, I changed it to this:

    private readonly bool isStandard;
    private readonly Reservation[] reservations;

    public NaturalNumber Capacity { get; }

    private Table(bool isStandard, NaturalNumber capacity, params Reservation[] reservations)
    {
        this.isStandard = isStandard;
        Capacity = capacity;
        this.reservations = reservations;
    }

The only thing I changed was the type of Capacity and capacity.

As I did that, two tests failed.

Evidence #

Both tests failed in the same way, so I only show one of the failures:

    Ploeh.Samples.Restaurants.RestApi.Tests.MaitreDScheduleTests.Schedule
      Source: MaitreDScheduleTests.cs line 16
      Duration: 340 ms

      Message:
        FsCheck.Xunit.PropertyFailedException :
        Falsifiable, after 2 tests (0 shrinks) (StdGen (48558275,297233133)):
        Original:
        <null>
        (Ploeh.Samples.Restaurants.RestApi.MaitreD, [|Ploeh.Samples.Restaurants.RestApi.Reservation|])

        ---- System.InvalidOperationException : Failed to compare two elements in the array.
        -------- System.ArgumentException : At least one object must implement IComparable.

      Stack Trace:
        ----- Inner Stack Trace -----
        GenericArraySortHelper`1.Sort(T[] keys, Int32 index, Int32 length, IComparer`1 comparer)
        Array.Sort[T](T[] array, Int32 index, Int32 length, IComparer`1 comparer)
        EnumerableSorter`2.QuickSort(Int32[] keys, Int32 lo, Int32 hi)
        EnumerableSorter`1.Sort(TElement[] elements, Int32 count)
        OrderedEnumerable`1.ToList()
        Enumerable.ToList[TSource](IEnumerable`1 source)
        MaitreD.Allocate(IEnumerable`1 reservations) line 91
        <>c__DisplayClass21_0.<Schedule>b__4(<>f__AnonymousType7`2 <>h__TransparentIdentifier1) line 114
        <>c__DisplayClass2_0`3.<CombineSelectors>b__0(TSource x)
        SelectIPartitionIterator`2.GetCount(Boolean onlyIfCheap)
        Enumerable.Count[TSource](IEnumerable`1 source)
        MaitreDScheduleTests.ScheduleImp(MaitreD sut, Reservation[] reservations) line 31
        <>c.<Schedule>b__0_2(ValueTuple`2 t) line 22
        ForAll@15.Invoke(Value arg00)
        Testable.evaluate[a,b](FSharpFunc`2 body, a a)
        ----- Inner Stack Trace -----
        Comparer.Compare(Object a, Object b)
        ObjectComparer`1.Compare(T x, T y)
        EnumerableSorter`2.CompareAnyKeys(Int32 index1, Int32 index2)
        ComparisonComparer`1.Compare(T x, T y)
        ArraySortHelper`1.SwapIfGreater(T[] keys, Comparison`1 comparer, Int32 a, Int32 b)
        ArraySortHelper`1.IntroSort(T[] keys, Int32 lo, Int32 hi, Int32 depthLimit, Comparison`1 comparer)
        GenericArraySortHelper`1.Sort(T[] keys, Int32 index, Int32 length, IComparer`1 comparer)

The code highlighted with red is user code (i.e. my code). The rest comes from .NET or FsCheck.

While a stack trace like that can look intimidating, I usually navigate to the top stack frame of my own code. As I reproduce my investigation, see if you can spot the problem before I did.

Understand before resolving #

Before starting the investigation proper, we might as well acknowledge what seems evident. I had a fully passing test suite, then I edited two lines of code, which caused the above error. The two nested exception messages contain obvious clues: Failed to compare two elements in the array, and At least one object must implement IComparable.

The only edit I made was to change an int to a NaturalNumber, and NaturalNumber didn't implement IComparable. It seems straightforward to just make NaturalNumber implement that interface and move on, and as it turns out, that is the solution.

As I describe in Code That Fits in Your Head, when troubleshooting, first seek to understand the problem. I've seen too many people go immediately into 'action mode' when faced with a problem. It's often a suboptimal strategy.

First, if the immediate solution turns out not to work, you can waste much time thrashing, trying various 'fixes' without understanding the problem.

Second, even if the resolution is easy, as is the case here, if you don't understand the underlying cause and effect, you can easily build a cargo cult-like 'understanding' of programming. This could become one such experience: All wrapper types must implement IComparable, or some nonsense like that.

Unless people are getting hurt or you are bleeding money because of the error, seek first to understand, and only then fix the problem.

First clue #

The top user stack frame is the Allocate method:

    private IEnumerable<Table> Allocate(
        IEnumerable<Reservation> reservations)
    {
        List<Table> allocation = Tables.ToList();
        foreach (var r in reservations)
        {
            var table = allocation.Find(t => t.Fits(r.Quantity));
            if (table is { })
            {
                allocation.Remove(table);
                allocation.Add(table.Reserve(r));
            }
        }

        return allocation;
    }

The stack trace points to line 91, which is the first line of code, where it calls Tables.ToList(). This is also consistent with the stack trace, which indicates that the exception is thrown from ToList.

I am, however, not used to ToList throwing exceptions, so I admit that I was nonplussed. Why would ToList try to sort the input? It usually doesn't do that.

Now, I did notice the OrderedEnumerable`1 on the stack frame above Enumerable.ToList, but this early in the investigation, I failed to connect the dots.

What does the caller look like? It's that scary DisplayClass21...

Immediate caller #

The code that calls Allocate is the Schedule method, the System Under Test:

    public IEnumerable<TimeSlot> Schedule(
        IEnumerable<Reservation> reservations)
    {
        return
            from r in reservations
            group r by r.At into g
            orderby g.Key
            let seating = new Seating(SeatingDuration, g.Key)
            let overlapping = reservations.Where(seating.Overlaps)
            select new TimeSlot(g.Key, Allocate(overlapping).ToList());
    }

While it does orderby, it doesn't seem to be sorting the input to Allocate. While overlapping is a filtered subset of reservations, the code doesn't sort reservations.

Okay, moving on, what does the caller of that method look like?

Test implementation #

The caller of the Schedule method is this test implementation:

    private static void ScheduleImp(
        MaitreD sut,
        Reservation[] reservations)
    {
        var actual = sut.Schedule(reservations);

        Assert.Equal(
            reservations.Select(r => r.At).Distinct().Count(),
            actual.Count());
        Assert.Equal(
            actual.Select(ts => ts.At).OrderBy(d => d),
            actual.Select(ts => ts.At));
        Assert.All(actual, ts => AssertTables(sut.Tables, ts.Tables));
        Assert.All(
            actual,
            ts => AssertRelevance(reservations, sut.SeatingDuration, ts));
    }

Notice how the first line of code calls Schedule, while the rest is 'just' assertions.

Because I had noticed that OrderedEnumerable`1 on the stack, I was on the lookout for an expression that would sort an IEnumerable<T>. The ScheduleImp method surprised me, though, because the reservations parameter is an array. If there was any problem sorting it, it should have blown up much earlier.

I really should be paying more attention, but despite my best resolution to proceed methodically, I was chasing the wrong clue.

Which line of code throws the exception? The stack trace says line 31. That's not the sut.Schedule(reservations) call. It's the first assertion following it. I failed to notice that.

Property #

I was stumped, and not knowing what to do, I looked at the fourth and final piece of user code in that stack trace:

    [Property]
    public Property Schedule()
    {
        return Prop.ForAll(
            (from rs in Gens.Reservations
             from m in Gens.MaitreD(rs)
             select (m, rs)).ToArbitrary(),
            t => ScheduleImp(t.m, t.rs));
    }

No sorting there. What's going on?

In retrospect, I'm struggling to understand what was going on in my mind. Perhaps you're about to lose patience with me. I was chasing the wrong 'clue', just as I said above that 'other' people do, but surely, it's understood, that I don't.

WYSIATI #

In Code That Fits in Your Head I spend some time discussing how code relates to human cognition. I'm no neuroscientist, but I try to read books on other topics than programming. I was partially inspired by Thinking, Fast and Slow in which Daniel Kahneman (among many other topics) presents how System 1 (the inaccurate fast thinking process) mostly works with what's right in front of it: What You See Is All There Is, or WYSIATI.

That OrderedEnumerable`1 in the stack trace had made me look for an IEnumerable<T> as the culprit, and in the source code of the Allocate method, one parameter is clearly what I was looking for. I'll repeat that code here for your benefit:

    private IEnumerable<Table> Allocate(
        IEnumerable<Reservation> reservations)
    {
        List<Table> allocation = Tables.ToList();
        foreach (var r in reservations)
        {
            var table = allocation.Find(t => t.Fits(r.Quantity));
            if (table is { })
            {
                allocation.Remove(table);
                allocation.Add(table.Reserve(r));
            }
        }

        return allocation;
    }

Where's the IEnumerable<T> in that code?

reservations, right?

Revelation #

As WYSIATI 'predicts', the brain gloms on to what's prominent. I was looking for IEnumerable<T>, and it's right there in the method declaration as the parameter IEnumerable<Reservation> reservations.

As covered in multiple places (my book, The Programmer's Brain), the human brain has limited short-term memory. Apparently, while chasing the IEnumerable<T> clue, I'd already managed to forget another important datum.

Which line of code throws the exception? This one:

List<Table> allocation = Tables.ToList();

The IEnumerable<T> isn't reservations, but Tables.

While the code doesn't explicitly say IEnumerable<Table> Tables, that's just what it is.

Yes, it took me way too long to notice that I'd been barking up the wrong tree all along. Perhaps you immediately noticed that, but have pity on me. I don't think this kind of human error is uncommon.

The culprit #

Where do Tables come from? It's a read-only property originally injected via the constructor:

public MaitreD(
    TimeOfDay opensAt,
    TimeOfDay lastSeating,
    TimeSpan seatingDuration,
    IEnumerable<Table> tables)
{
    OpensAt = opensAt;
    LastSeating = lastSeating;
    SeatingDuration = seatingDuration;
    Tables = tables;
}

Okay, in the test then, where does it come from? That's the m in the above property, repeated here for your convenience:

[Property]
public Property Schedule()
{
    return Prop.ForAll(
        (from rs in Gens.Reservations
         from m in Gens.MaitreD(rs)
         select (m, rs)).ToArbitrary(),
        t => ScheduleImp(t.m, t.rs));
}

The m variable is generated by Gens.MaitreD, so let's follow that clue:

internal static Gen<MaitreD> MaitreD(
    IEnumerable<Reservation> reservations)
{
    return
        from seatingDuration in Gen.Choose(1, 6)
        from tables in Tables(reservations)
        select new MaitreD(
            TimeSpan.FromHours(18),
            TimeSpan.FromHours(21),
            TimeSpan.FromHours(seatingDuration),
            tables);
}

We're not there yet, but close. The tables variable is generated by this Tables helper function:

/// <summary>
/// Generate a table configuration that can at minimum accommodate all
/// reservations.
/// </summary>
/// <param name="reservations">The reservations to accommodate</param>
/// <returns>A generator of valid table configurations.</returns>
private static Gen<IEnumerable<Table>> Tables(
    IEnumerable<Reservation> reservations)
{
    // Create a table for each reservation, to ensure that all
    // reservations can be allotted a table.
    var tables = reservations.Select(r => Table.Standard(r.Quantity));
    return
        from moreTables in Gen.Choose(1, 12)
            .Select(i => Table.Standard(new NaturalNumber(i))).ArrayOf()
        let allTables =
            tables.Concat(moreTables).OrderBy(t => t.Capacity)
        select allTables.AsEnumerable();
}

And there you have it: OrderBy(t => t.Capacity)!

The Capacity property was exactly the property I changed from int to NaturalNumber - the change that made the test fail.

As expected, the fix was to let NaturalNumber implement IComparable<NaturalNumber>.
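As a rough illustration of what such a fix could look like, here's a minimal sketch. It's an assumption-laden stand-in, not the actual type from the code base: the field name, the guard clause, the explicit conversion, and the choice of a class rather than a struct are all made up for the example.

```csharp
#nullable enable
using System;
using System.Linq;

// Hypothetical sketch; the real NaturalNumber type may look different.
public sealed class NaturalNumber : IComparable<NaturalNumber>
{
    private readonly int value;

    public NaturalNumber(int candidate)
    {
        if (candidate < 1)
            throw new ArgumentOutOfRangeException(
                nameof(candidate),
                "A natural number must be greater than zero.");
        value = candidate;
    }

    // With this method in place, the default comparer, and thereby
    // OrderBy, can sort by NaturalNumber keys.
    public int CompareTo(NaturalNumber? other)
    {
        return other is null ? 1 : value.CompareTo(other.value);
    }

    public static explicit operator int(NaturalNumber number)
    {
        return number.value;
    }
}

public static class Program
{
    public static void Main()
    {
        var numbers = new[] { new NaturalNumber(3), new NaturalNumber(1) };
        // Sorting now succeeds, because the keys are comparable.
        var sorted = numbers.OrderBy(n => n).Select(n => (int)n);
        Console.WriteLine(string.Join(", ", sorted));
    }
}
```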

Conclusion #

I thought this little troubleshooting session was interesting enough to write down. I spent perhaps twenty minutes on it before I understood what was going on. Not disastrously long, but enough time that I was relieved when I figured it out.

Apart from the obvious (look for the problem where it is), there is one other useful lesson to be learned, I think.

Deferred execution can confuse even the most experienced programmer. It took me some time before it dawned on me that even though the MaitreD constructor had run and the object was 'safely' initialised, it actually wasn't.

The implication is that there's a 'disconnect' between the constructor and the Allocate method. The error actually happens during initialisation (i.e. in the caller of the constructor), but it only manifests when you run the method.
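To make that disconnect concrete, here's a small, self-contained sketch. The Seats and Holder types are hypothetical stand-ins (for a non-comparable key type and for MaitreD, respectively); they aren't part of the code base. The example catches the general Exception type, because the exact exception type may depend on the runtime.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical key type that, like NaturalNumber before the fix,
// doesn't implement IComparable.
public sealed class Seats
{
    public Seats(int count) { Count = count; }
    public int Count { get; }
}

// Hypothetical stand-in for MaitreD: it stores the sequence as given.
public sealed class Holder
{
    public Holder(IEnumerable<Seats> tables) { Tables = tables; }
    public IEnumerable<Seats> Tables { get; }
}

public static class DeferredDemo
{
    // Returns true if the failure only shows up on enumeration.
    public static bool FailsOnlyOnEnumeration()
    {
        var seats = new[] { new Seats(4), new Seats(2) };

        // No exception here: OrderBy only builds a query object,
        // so the constructor completes normally.
        var holder = new Holder(seats.OrderBy(s => s));

        try
        {
            // The sort runs now, and comparing two Seats objects fails.
            holder.Tables.ToList();
            return false;
        }
        catch (Exception)
        {
            return true;
        }
    }

    public static void Main()
    {
        Console.WriteLine(FailsOnlyOnEnumeration());
    }
}
```

Had the hypothetical Holder constructor required an IReadOnlyCollection<Seats> instead, the caller would have had to materialise the sequence (for example with ToList) before calling it, and the exception would have surfaced at the call site.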

Ever since I discovered the IReadOnlyCollection<T> interface in 2013 I've resolved to favour it over IEnumerable<T>. This is one example of why that's a good idea.

Despite my best intentions, I, too, cut corners from time to time. I've done it here, by accepting IEnumerable<Table> instead of IReadOnlyCollection<Table> as a constructor parameter. I really should have known better, and now I've paid the price.

This is particularly ironic because I also love Haskell so much. Haskell is lazy by default, so you'd think that I run into such issues all the time. An expression like OrderBy(t => t.Capacity), however, wouldn't have compiled in Haskell unless the sort key implemented the Ord type class. Even C#'s type system can express that a generic type must implement an interface, but OrderBy doesn't do that.
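As a sketch of what such a constraint could look like in C#, consider the following extension method. OrderByComparable is a hypothetical helper invented for this example; it isn't part of LINQ. It simply delegates to OrderBy, but adds a generic constraint on the key type.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ConstrainedOrdering
{
    // Hypothetical helper, not part of LINQ: it behaves like OrderBy,
    // except that the key type must be comparable to itself.
    public static IOrderedEnumerable<TSource> OrderByComparable<TSource, TKey>(
        this IEnumerable<TSource> source,
        Func<TSource, TKey> keySelector)
        where TKey : IComparable<TKey>
    {
        return source.OrderBy(keySelector);
    }
}

public static class Program
{
    public static void Main()
    {
        var words = new[] { "pear", "fig", "banana" };

        // int implements IComparable<int>, so this compiles.
        var sorted = words.OrderByComparable(w => w.Length);

        // prints: fig, pear, banana
        Console.WriteLine(string.Join(", ", sorted));
    }
}
```

With a helper like this, a key type that doesn't implement IComparable<TKey> is rejected by the compiler instead of failing at run time.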

This problem could have been caught at compile-time, but unfortunately it wasn't.

Comments

I made a pull request describing the issue.

As this is likely a breaking change I don't have high hopes for it to be fixed, though…

Do ORMs reduce the need for mapping?

With some Entity Framework examples in C#.

In a recent comment, a reader asked me to expand on my position on object-relational mappers (ORMs), which is that I'm not a fan:

I consider ORMs a waste of time: they create more problems than they solve.

While I acknowledge that only a Sith deals in absolutes, I favour clear assertions over guarded language. I don't really mean it that categorically, but I do stand by the general sentiment. In this article I'll attempt to describe why I don't reach for ORMs when querying or writing to a relational database.

As always, any exploration of such a kind is made in a context, and this article is no exception. Before proceeding, allow me to delineate the scope. If your context differs from mine, what I write may not apply to your situation.

Scope #

It's been decades since I last worked on a system where the database 'came first'. The last time that happened, the database was hidden behind an XML-based RPC API that tunnelled through HTTP. Not a REST API by a long shot.

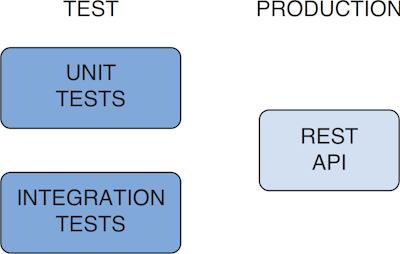

Since then, I've worked on various systems. Some used relational databases, some document databases, some worked with CSV, or really old legacy APIs, etc. Common to these systems was that they were not designed around a database. Rather, they were developed with an eye to the Dependency Inversion Principle, keeping storage details out of the Domain Model. Many were developed with test-driven development (TDD).

When I evaluate whether or not to use an ORM in situations like these, the core application logic is my main design driver. As I describe in Code That Fits in Your Head, I usually develop (vertical) feature slices one at a time, utilising an outside-in TDD process, during which I also figure out how to save or retrieve data from persistent storage.

Thus, in systems like these, storage implementation is an artefact of the software architecture. If a relational database is involved, the schema must adhere to the needs of the code; not the other way around.

To be clear, then, this article doesn't discuss typical CRUD-heavy applications that are mostly forms over relational data, with little or no application logic. If you're working with such a code base, an ORM might be useful. I can't really tell, since I last worked with such systems at a time when ORMs didn't exist.

The usual suspects #

The most common criticism of ORMs (that I've come across) is typically related to the queries they generate. People who are skilled in writing SQL by hand, or who are concerned about performance, may look at the SQL that an ORM generates and dislike it for that reason.

It's my impression that ORMs have come a long way over the decades, but frankly, the generated SQL is not really what concerns me. It never was.

In the abstract, Ted Neward already outlined the problems in the seminal article The Vietnam of Computer Science. That problem description may, however, be too theoretical to connect with most programmers, so I'll try a more example-driven angle.

Database operations without an ORM #

Once more I turn to the trusty example code base that accompanies Code That Fits in Your Head. In it, I used SQL Server as the example database, and ADO.NET as the data access technology.

I considered this more than adequate for saving and reading restaurant reservations. Here, for example, is the code that creates a new reservation row in the database:

public async Task Create(int restaurantId, Reservation reservation)
{
    if (reservation is null)
        throw new ArgumentNullException(nameof(reservation));
    using var conn = new SqlConnection(ConnectionString);
    using var cmd = new SqlCommand(createReservationSql, conn);
    cmd.Parameters.AddWithValue("@Id", reservation.Id);
    cmd.Parameters.AddWithValue("@RestaurantId", restaurantId);
    cmd.Parameters.AddWithValue("@At", reservation.At);
    cmd.Parameters.AddWithValue("@Name", reservation.Name.ToString());
    cmd.Parameters.AddWithValue("@Email", reservation.Email.ToString());
    cmd.Parameters.AddWithValue("@Quantity", reservation.Quantity);
    await conn.OpenAsync().ConfigureAwait(false);
    await cmd.ExecuteNonQueryAsync().ConfigureAwait(false);
}
private const string createReservationSql = @"
    INSERT INTO [dbo].[Reservations] (
        [PublicId], [RestaurantId], [At], [Name], [Email], [Quantity])
    VALUES (@Id, @RestaurantId, @At, @Name, @Email, @Quantity)";

Yes, there's mapping, even if it's 'only' from a Domain Object to command parameter strings. As I'll argue later, if there's a way to escape such mapping, I'm not aware of it. ORMs don't seem to solve that problem.

This, however, seems to be the reader's main concern:

"I can work with raw SQL ofcourse... but the mapping... oh the mapping..."

It's not a concern that I share, but again I'll remind you that if your context differs substantially from mine, what doesn't concern me could reasonably concern you.

You may argue that the above example isn't representative, since it only involves a single table. No foreign key relationships are involved, so perhaps the example is artificially easy.

In order to work with a slightly more complex schema, I decided to port the read-only in-memory restaurant database (the one that keeps track of the restaurants - the tenants - of the system) to SQL Server.

Restaurants schema #

In the book's sample code base, I'd only stored restaurant configurations as JSON config files, since I considered it out of scope to include an online tenant management system. Converting to a relational model wasn't hard, though. Here's the database schema:

CREATE TABLE [dbo].[Restaurants] (
    [Id]               INT            NOT NULL,
    [Name]             NVARCHAR (50)  NOT NULL UNIQUE,
    [OpensAt]          TIME           NOT NULL,
    [LastSeating]      TIME           NOT NULL,
    [SeatingDuration]  TIME           NOT NULL
    PRIMARY KEY CLUSTERED ([Id] ASC)
)
CREATE TABLE [dbo].[Tables] (
    [Id]               INT            NOT NULL IDENTITY,
    [RestaurantId]     INT            NOT NULL REFERENCES [dbo].[Restaurants](Id),
    [Capacity]         INT            NOT NULL,
    [IsCommunal]       BIT            NOT NULL
    PRIMARY KEY CLUSTERED ([Id] ASC)
)

This little subsystem requires two database tables: One that keeps track of the overall restaurant configuration, such as name, opening and closing times, and another database table that lists all a restaurant's physical tables.

You may argue that this is still too simple to realistically capture the intricacies of existing database systems, but conversely I'll remind you that the scope of this article is the sort of system where you develop and design the application first; not a system where you're given a relational database upon which you must create an application.

Had I been given this assignment in a realistic setting, a relational database probably wouldn't have been my first choice. Some kind of document database, or even blob storage, strikes me as a better fit. Still, this article is about ORMs, so I'll pretend that there are external circumstances that dictate a relational database.

To test the system, I also created a script to populate these tables. Here's part of it:

INSERT INTO [dbo].[Restaurants] ([Id], [Name], [OpensAt], [LastSeating], [SeatingDuration])
VALUES (1, N'Hipgnosta', '18:00', '21:00', '6:00')
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (1, 10, 1)
INSERT INTO [dbo].[Restaurants] ([Id], [Name], [OpensAt], [LastSeating], [SeatingDuration])
VALUES (2112, N'Nono', '18:00', '21:00', '6:00')
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (2112, 6, 1)
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (2112, 4, 1)
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (2112, 2, 0)
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (2112, 2, 0)
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (2112, 4, 0)
INSERT INTO [dbo].[Tables] ([RestaurantId], [Capacity], [IsCommunal])
VALUES (2112, 4, 0)

There are more rows than this, but this should give you an idea of what data looks like.

Reading restaurant data without an ORM #

Due to the foreign key relationship, reading restaurant data from the database is a little more involved than reading from a single table.

public async Task<Restaurant?> GetRestaurant(string name)
{
    using var cmd = new SqlCommand(readByNameSql);
    cmd.Parameters.AddWithValue("@Name", name);
    var restaurants = await ReadRestaurants(cmd);
    return restaurants.SingleOrDefault();
}
private const string readByNameSql = @"
    SELECT [Id], [Name], [OpensAt], [LastSeating], [SeatingDuration]
    FROM [dbo].[Restaurants]
    WHERE [Name] = @Name
    SELECT [RestaurantId], [Capacity], [IsCommunal]
    FROM [dbo].[Tables]
    JOIN [dbo].[Restaurants]
    ON [dbo].[Tables].[RestaurantId] = [dbo].[Restaurants].[Id]
    WHERE [Name] = @Name";

There is more than one option when deciding how to construct the query. You could make one query with a join, in which case you'd get rows with repeated data, and you'd then need to detect duplicates, or you could do as I've done here: Query each table to get multiple result sets.

I'm not claiming that this is better in any way. I only chose this option because I found the code that I had to write less offensive.
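For comparison, the single-join alternative could be sketched like this. The tuple shapes and the Group function are hypothetical, made up for the illustration; the sketch only shows the in-memory step of collapsing the repeated restaurant data that a join returns. (Note that an inner join, unlike the two-result-set query, would omit restaurants without tables.)

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class JoinedRows
{
    // Groups flat rows, shaped as a JOIN might return them, by restaurant.
    // The tuple shapes are hypothetical; they're not from the code base.
    public static IReadOnlyList<(int Id, string Name, IReadOnlyList<int> Capacities)> Group(
        IEnumerable<(int RestaurantId, string Name, int Capacity)> rows)
    {
        return rows
            .GroupBy(r => (r.RestaurantId, r.Name))
            .Select(g => (
                Id: g.Key.RestaurantId,
                Name: g.Key.Name,
                Capacities: (IReadOnlyList<int>)g.Select(r => r.Capacity).ToList()))
            .ToList();
    }

    public static void Main()
    {
        // Restaurant data repeats once per table, as in a join result.
        var rows = new[]
        {
            (RestaurantId: 1, Name: "Hipgnosta", Capacity: 10),
            (RestaurantId: 2112, Name: "Nono", Capacity: 6),
            (RestaurantId: 2112, Name: "Nono", Capacity: 4),
        };

        foreach (var r in Group(rows))
            Console.WriteLine($"{r.Name}: {r.Capacities.Count} table(s)");
    }
}
```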

Since the IRestaurantDatabase interface defines three different kinds of queries (GetAll(), GetRestaurant(int id), and GetRestaurant(string name)), I invoked the rule of three and extracted a helper method:

private async Task<IEnumerable<Restaurant>> ReadRestaurants(SqlCommand cmd)
{
    using var conn = new SqlConnection(ConnectionString);
    cmd.Connection = conn;
    await conn.OpenAsync();
    using var rdr = await cmd.ExecuteReaderAsync();
    var restaurants = Enumerable.Empty<Restaurant>();
    while (await rdr.ReadAsync())
        restaurants = restaurants.Append(ReadRestaurantRow(rdr));
    if (await rdr.NextResultAsync())
        while (await rdr.ReadAsync())
            restaurants = ReadTableRow(rdr, restaurants);
    return restaurants;
}

The ReadRestaurants method does the overall work of opening the database connection, executing the query, and moving through rows and result sets. Again, we'll find mapping code hidden in helper methods:

private static Restaurant ReadRestaurantRow(SqlDataReader rdr)
{
    return new Restaurant(
        (int)rdr["Id"],
        (string)rdr["Name"],
        new MaitreD(
            new TimeOfDay((TimeSpan)rdr["OpensAt"]),
            new TimeOfDay((TimeSpan)rdr["LastSeating"]),
            (TimeSpan)rdr["SeatingDuration"]));
}

As the name suggests, ReadRestaurantRow reads a row from the Restaurants table and converts it into a Restaurant object. At this time, however, it creates each MaitreD object without any tables. This is possible because one of the MaitreD constructors takes a params array as the last parameter:

public MaitreD(
    TimeOfDay opensAt,
    TimeOfDay lastSeating,
    TimeSpan seatingDuration,
    params Table[] tables) :
    this(opensAt, lastSeating, seatingDuration, tables.AsEnumerable())
{
}

Only when the ReadRestaurants method moves on to the next result set can it add tables to each restaurant:

private static IEnumerable<Restaurant> ReadTableRow(
    SqlDataReader rdr,
    IEnumerable<Restaurant> restaurants)
{
    var restaurantId = (int)rdr["RestaurantId"];
    var capacity = (int)rdr["Capacity"];
    var isCommunal = (bool)rdr["IsCommunal"];
    var table = isCommunal ? Table.Communal(capacity) : Table.Standard(capacity);
    return restaurants.Select(r => r.Id == restaurantId ? AddTable(r, table) : r);
}

As was also the case in ReadRestaurantRow, this method uses string-based indexers on the rdr to extract the data. I'm no fan of stringly-typed code, but at least I have automated tests that exercise these methods.

Could an ORM help by creating strongly-typed classes that model database tables? To a degree; I'll discuss that later.

In any case, since the entire code base follows the Functional Core, Imperative Shell architecture, the entire Domain Model is made of immutable data types with pure functions. Thus, ReadTableRow has to iterate over all restaurants and add the table when the Id matches. AddTable does that:

private static Restaurant AddTable(Restaurant restaurant, Table table)
{
    return restaurant.Select(m => m.WithTables(m.Tables.Append(table).ToArray()));
}

I can think of other ways to solve the overall mapping task when using ADO.NET, but this was what made most sense to me.

Reading restaurants with Entity Framework #

Does an ORM like Entity Framework (EF) improve things? To a degree, but not enough to outweigh the disadvantages it also brings.

In order to investigate, I followed the EF documentation to scaffold code from a database I'd set up for only that purpose. For the Tables table it created the following Table class and a similar Restaurant class.

public partial class Table
{
    public int Id { get; set; }
    public int RestaurantId { get; set; }
    public int Capacity { get; set; }
    public bool IsCommunal { get; set; }
    public virtual Restaurant Restaurant { get; set; } = null!;
}

Hardly surprising. Also, hardly object-oriented, but more about that later, too.

Entity Framework didn't, by itself, add a Tables collection to the Restaurant class, so I had to do that by hand, as well as modify the DbContext-derived class to tell it about this relationship:

entity.OwnsMany(r => r.Tables, b =>
{
    b.Property<int>(t => t.Id).ValueGeneratedOnAdd();
    b.HasKey(t => t.Id);
});

I thought that such a simple foreign key relationship would be something an ORM would help with, but apparently not.

With that in place, I could now rewrite the above GetRestaurant method to use Entity Framework instead of ADO.NET:

public async Task<Restaurants.Restaurant?> GetRestaurant(string name)
{
    using var db = new RestaurantsContext(ConnectionString);
    var dbRestaurant = await db.Restaurants.FirstOrDefaultAsync(r => r.Name == name);
    if (dbRestaurant == null)
        return null;
    return ToDomainModel(dbRestaurant);
}

The method now queries the database, and EF automatically returns a populated object. This would be nice if it was the right kind of object, but alas, it isn't. GetRestaurant still has to call a helper method to convert to the correct Domain Object:

private static Restaurants.Restaurant ToDomainModel(Restaurant restaurant)
{
    return new Restaurants.Restaurant(
        restaurant.Id,
        restaurant.Name,
        new MaitreD(
            new TimeOfDay(restaurant.OpensAt),
            new TimeOfDay(restaurant.LastSeating),
            restaurant.SeatingDuration,
            restaurant.Tables.Select(ToDomainModel).ToList()));
}

While this helper method converts an EF Restaurant object to a proper Domain Object (Restaurants.Restaurant), it also needs another helper to convert the table objects:

private static Restaurants.Table ToDomainModel(Table table)
{
    if (table.IsCommunal)
        return Restaurants.Table.Communal(table.Capacity);
    else
        return Restaurants.Table.Standard(table.Capacity);
}

As should be clear by now, using vanilla EF doesn't reduce the need for mapping.

Granted, the mapping code is a bit simpler, but you still need to remember to map restaurant.Name to the right constructor parameter, restaurant.OpensAt and restaurant.LastSeating to their correct places, table.Capacity to a constructor argument, and so on. If you make changes to the database schema or the Domain Model, you'll need to edit this code.

Encapsulation #

This is the point where more than one reader wonders: Can't you just..?

In short, no, I can't just.

The most common reaction is most likely that I'm doing this all wrong. I'm supposed to use the EF classes as my Domain Model.

But I can't, and I won't. I can't because I already have classes in place that serve that purpose. I also will not, because it would violate the Dependency Inversion Principle. As I recently described, the architecture is Ports and Adapters, or, if you will, Clean Architecture. The database Adapter should depend on the Domain Model; the Domain Model shouldn't depend on the database implementation.

Okay, but couldn't I have generated the EF classes in the Domain Model? After all, a class like the above Table is just a POCO Entity. It doesn't depend on the Entity Framework. I could have those classes in my Domain Model, put my DbContext in the data access layer, and have the best of both worlds. Right?

The code shown so far hints at a particular API afforded by the Domain Model. If you've read my book, you already know what comes next. Here's the Table Domain Model's API:

public sealed class Table
{
    public static Table Standard(int seats)
    public static Table Communal(int seats)
    public int Capacity { get; }
    public int RemainingSeats { get; }
    public Table Reserve(Reservation reservation)
    public T Accept<T>(ITableVisitor<T> visitor)
}

A couple of qualities of this design should be striking: There's no visible constructor - not even one that takes parameters. Instead, the type affords two static creation functions. One creates a standard table, the other a communal table. My book describes the difference between these types, and so does the Maître d' kata.

This isn't some frivolous design choice of mine, but rather quite deliberate. That Table class is a Visitor-encoded sum type. You can debate whether I should have modelled a table as a sum type or a polymorphic object, but now that I've chosen a sum type, it should be explicit in the API design.

"Explicit is better than implicit."

When we program, we make many mistakes. It's important to discover the mistakes as soon as possible. With a compiled language, the first feedback you get is from the compiler. I favour leveraging the compiler, and its type system, to prevent as many mistakes as possible. That's what Hillel Wayne calls constructive data. Make illegal states unrepresentable.
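For readers unfamiliar with Visitor-encoded sum types, here's a deliberately simplified sketch of the idea. SimpleTable, ISimpleTableVisitor<T>, and DescribeVisitor are hypothetical names invented for this example; the actual Table class and ITableVisitor<T> interface carry more information (such as reservations) than this illustration does.

```csharp
using System;

// Hypothetical visitor interface: one method per case of the sum type.
public interface ISimpleTableVisitor<T>
{
    T VisitStandard(int seats);
    T VisitCommunal(int seats);
}

public sealed class SimpleTable
{
    private readonly bool isCommunal;
    private readonly int seats;

    // Private constructor: the static creation functions are the only
    // way to make a value, so every value is exactly one of the two cases.
    private SimpleTable(bool isCommunal, int seats)
    {
        this.isCommunal = isCommunal;
        this.seats = seats;
    }

    public static SimpleTable Standard(int seats)
    {
        return new SimpleTable(false, seats);
    }

    public static SimpleTable Communal(int seats)
    {
        return new SimpleTable(true, seats);
    }

    // Clients must handle both cases, because the visitor interface
    // requires an implementation of each method.
    public T Accept<T>(ISimpleTableVisitor<T> visitor)
    {
        if (visitor is null)
            throw new ArgumentNullException(nameof(visitor));
        return isCommunal
            ? visitor.VisitCommunal(seats)
            : visitor.VisitStandard(seats);
    }
}

// Example visitor that turns either case into a description.
public sealed class DescribeVisitor : ISimpleTableVisitor<string>
{
    public string VisitStandard(int seats)
    {
        return $"standard table for {seats}";
    }

    public string VisitCommunal(int seats)
    {
        return $"communal table with {seats} seats";
    }
}

public static class Program
{
    public static void Main()
    {
        var table = SimpleTable.Communal(10);
        // prints: communal table with 10 seats
        Console.WriteLine(table.Accept(new DescribeVisitor()));
    }
}
```

A class generated from a database schema, with public setters for every column, can't express this kind of either-or guarantee.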

I could, had I thought of it at the time, have introduced a predicative natural-number wrapper of integers, in which case I could have strengthened the contract of Table even further:

public sealed class Table
{
    public static Table Standard(NaturalNumber capacity)
    public static Table Communal(NaturalNumber capacity)
    public NaturalNumber Capacity { get; }
    public int RemainingSeats { get; }
    public Table Reserve(Reservation reservation)
    public T Accept<T>(ITableVisitor<T> visitor)
}

The point is that I take encapsulation seriously, and my interpretation of the concept is heavily inspired by Bertrand Meyer's Object-Oriented Software Construction. This view of encapsulation emphasises contracts (preconditions, invariants, postconditions) rather than information hiding.

As I described in a previous article, you can't model all preconditions and invariants with types, but you can still let the type system do much heavy lifting.

This principle applies to all classes that are part of the Domain Model; not only Table, but also Restaurant:

public sealed class Restaurant
{
    public Restaurant(int id, string name, MaitreD maitreD)
    public int Id { get; }
    public string Name { get; }
    public MaitreD MaitreD { get; }
    public Restaurant WithId(int newId)
    public Restaurant WithName(string newName)
    public Restaurant WithMaitreD(MaitreD newMaitreD)
    public Restaurant Select(Func<MaitreD, MaitreD> selector)
}

While this class does have a public constructor, it makes use of another design choice that Entity Framework doesn't support: It nests one rich object (MaitreD) inside another. Why does it do that?

Again, this is far from a frivolous design choice I made just to be difficult. Rather, it's a result of a need-to-know principle (which strikes me as closely related to the Single Responsibility Principle): A class should only contain the information it needs in order to perform its job.

The MaitreD class does all the heavy lifting when it comes to deciding whether or not to accept reservations, how to allocate tables, etc. It doesn't, however, need to know the id or name of the restaurant in order to do that. Keeping that information out of MaitreD, and instead in the Restaurant wrapper, makes the code simpler and easier to use.

The bottom line of all this is that I value encapsulation over 'easy' database mapping.

Limitations of Entity Framework #

The promise of an object-relational mapper is that it automates mapping between objects and database. Is that promise realised?

In its current incarnation, it doesn't look as though Entity Framework supports mapping to and from the Domain Model. With the above tweaks, it supports the database schema that I've described, but only via 'Entity classes'. I still have to map to and from the 'Entity objects' and the actual Domain Model. Not much is gained.

One should, of course, be careful not to draw too strong inferences from this example. First, proving anything impossible is generally difficult. Just because I can't find a way to do what I want, I can't conclude that it's impossible. That a few other people tell me, too, that it's impossible still doesn't constitute strong evidence.

Second, even if it's impossible today, it doesn't follow that it will be impossible forever. Perhaps Entity Framework will support my Domain Model in the future.

Third, we can't conclude that just because Entity Framework (currently) doesn't support my Domain Model it follows that no object-relational mapper (ORM) does. There might be another ORM out there that perfectly supports my design, but I'm just not aware of it.

Based on my experience and what I see, read, and hear, I don't think any of that likely. Things might change, though.

Net benefit or drawback? #

Perhaps, despite all of this, you still prefer ORMs. You may compare my ADO.NET code to my Entity Framework code and conclude that the EF code still looks simpler. After all, when using ADO.NET I have to jump through some hoops to load the correct tables associated with each restaurant, whereas EF automatically handles that for me. The EF version requires fewer lines of code.

In isolation, the fewer lines of code the better. This seems like an argument for using an ORM after all, even if the original promise remains elusive. Take what you can get.

On the other hand, when you take on a dependency, there's usually a cost that comes along. A library like Entity Framework isn't free. While you don't pay a licence fee for it, it comes with other costs. You have to learn how to use it, and so do your colleagues. You also have to keep up to date with changes.

Every time some exotic requirement comes up, you'll have to spend time investigating how to address it with that ORM's API. This may lead to a game of Whac-A-Mole where every tweak to the ORM leads you further down the rabbit hole, and couples your code tighter with it.

You can only keep up with so many things. What's the best investment of your time, and the time of your team mates? Learning and knowing SQL, or learning and keeping up to date with a particular ORM?

I learned SQL decades ago, and that knowledge is still useful. On the other hand, I don't even know how many library and framework APIs I've both learned and forgotten about.

As things currently stand, it looks to me as though the net benefit of using a library like Entity Framework is negative. Yes, it might save me a few lines of code, but I'm not ready to pay the costs just outlined.

This balance could tip in the future, or my context may change.

Conclusion #

For the kind of applications that I tend to become involved with, I don't find object-relational mappers particularly useful. When you have a rich Domain Model where the first design priority is encapsulation, assisted by the type system, it looks as though mapping is unavoidable.

While you can ask automated tools to generate code that mirrors a database schema (or the other way around), only classes with poor encapsulation are supported. As soon as you do something out of the ordinary like static factory methods or nested objects, apparently Entity Framework gives up.

Can we extrapolate from Entity Framework to other ORMs? Can we infer that Entity Framework will never be able to support objects with proper encapsulation, just because it currently doesn't?

I can't say, but I'd be surprised if things change soon, if at all. If, on the other hand, it eventually turns out that I can have my cake and eat it too, then why shouldn't I?

Until then, however, I don't find that the benefits of ORMs trump the costs of using them.

Comments

One project I worked on was (among other things) mapping data from database to rich domain objects in a way similar to what is described in this article. These objects knew how to do a lot of things but were dependent on related objects, and so everything needed to be loaded in advance from the database in order to ensure correctness. So having an Order, OrderLine, Person, Address and City, all the rows needed to be loaded in advance, mapped to objects and references set to create the object graph to be able to, say, display shipping costs based on person's address.

The mapping step involved cumbersome manual coding and was error prone because it was easy to forget to load some list or set some reference. Reflecting on that experience, it seems to me that sacrificing a bit of purity wrt domain modelling and leaning on an ORM to lazily load the references would have been much more efficient and correct.

But I guess it all depends on the context..?

Thanks. I've been following recent posts, but I was too lazy to go through the whole PRing things to reply. Maybe that's a good thing, since it forces you to think how to reply, instead of throwing a bunch of words together quickly. Anyways, back to business.

I'm not trying to sell one way or the other, because I'm seriously conflicted with both. Since most people on the web tend to fall into the ORM category (in the .NET world at least), I was simply looking for another perspective, from someone more knowledgeable than me.

The following is just my thinking out loud...

You've used DB-first approach and scaffolding classes from DB schema. With EF core, the usual thing to do, is the opposite. Write classes to scaffold DB schema. Now, this doesn't save us from writing those "relational properties", but it allows us to generate DB update scripts. So if you have a class like:

class SomeTable
{
    public int Id;
    public string Name;
}

and you add a field:

class SomeTable
{
    public int Id;
    public string Name;
    public DateTime Birthday;
}

you can run

add-migration MyMigration // generate migration file
update-database // execute it

This gives you a nice way to track DB changes via Git, but it can also introduce conflicts. Two devs cannot edit the same class/table. You have to be really careful when making commits. Another painful thing to do this way is creating DB views and stored procedures. I've honestly never seen a good solution for it. Maybe trying to do these things is a futile effort in the first place.

The whole

readByNameSql = @"SELECT [Id], [Name], [OpensAt], [LastSeating], [SeatingDuration]...

is giving me heebie jeebies. It is easy to change some column name, and introduce a bug. It might be possible to do stuff with string interpolation, but at that point, I'm thinking about creating my own framework...

"The most common reaction is most likely that I'm doing this all wrong. I'm supposed to use the EF classes as my Domain Model." - Mark Seemann

One of the first things that I was taught on my first job was to never expose my domain model to the outside world. The domain model being EF Core classes... These days, I'm thinking quite the opposite. EF Core classes are DTOs for the database (with some boilerplate in order for the framework to do its magic). I also want to expose my domain model to the outside world. Why not? That's the contract after all. But the problem with this is that it adds another layer of mapping. Since my domain model validation is done in the class constructor, deserialization of requests becomes a problem. Ideally, it should sit in a static method. But in that case I have: jsonDto -> domainModel -> dbDto. The No-ORM approach also still requires me to map domainModel to command parameters manually. All of this is a tedious and very error-prone process. Especially if you have a case like the one Vlad mentioned above.

Minor observation on your code. People rarely map things from DB data to domain models when using EF Core. This is a horrible thing to do. Anyone can run a script against a DB, and corrupt the data. It is something I intend to enforce in future projects, if possible. Thank you F# community.

I can't think of anything more to say at the moment. Thanks again for a fully-fledged-article reply :). I also recommend this video. I haven't had the time to try things he is talking about yet.

Vlad, qfilip, thank you for writing.

I think your comments warrant another article. I'll post an update here later.

Quick update from me again. I've been thinking and experimenting with several approaches to solve the issues I've written about above: how the idealized world works, and where we make compromises. Forgive me for the sample types, I couldn't think of anything else. Let's assume we have this table:

type Sheikh = {
    // db entity data
    Id: Guid
    CreatedAt: DateTime
    ModifiedAt: DateTime
    Deleted: bool
    // domain data
    Name: string
    Email: string // unique constraint here
    // relational data
    Wives: Wife list
    Supercars: Supercar list
}

I've named the first fields "entity data". Why would my domain model contain an ID? It shouldn't care about persistence. I may save it to the DB, write it to a text file, or print it on a piece of paper. Don't care. We put IDs because data usually ends up in a DB. I could have used Email here to serve as an ID, because it should be unique, but we also like to standardize this stuff. All IDs shall be UUIDs.

There are also these "CreatedAt", "ModifiedAt" and "Deleted" columns. This is something I usually do when I want soft-delete functionality: denormalize the data to gain performance. Otherwise, I would need to make... say... an EntityStatus table to keep that data, forcing me to do a JOIN for every read operation and an additional UPDATE EntityStatus for every write operation. So I kinda sidestep "the good practices" to avoid very real complications.

Domain data part is what it is, so I can safely skip that part.

The relational data part is the most interesting bit. I think this is what keeps me falling back to EntityFramework and why using "relational properties" is unavoidable. Either that, or I'm missing something.

Focusing attention on Sheikh table here, with just 2 relations, there are 4 potential scenarios. I don't want to load stuff from the DB, unless they are required, so the scenarios are:

- Load Sheikh without relational data

- Load Sheikh with Wives

- Load Sheikh with Supercars

- Load Sheikh with Wives and Supercars

2^NRelations, I guess? I'm three beers in on this, with only six hours left until corporate clock starts ticking, so my math is probably off.

God forbid if any of these relations have their own "inner relations" you may or may not need to load. This is where the (magic mapping/to SQL translations) really start getting useful. There will be some code repetition, but you'll just need to add ThenInclude(x => ...) and you're done.

Now the flip side. Focusing attention on Supercar table:

type Supercar = {
    // db entity data
    ...
    // domain data
    Vendor: string
    Model: string
    HorsePower: int
    // relational data
    Owner: Sheikh
    OwnerId: Guid
}

Pretty much the same as before. Sometimes I'll need Sheikh info, sometimes I won't. One of the F#-specific problems I'm having is that records require all fields to be populated. What if I need just SheikhID to perform some domain logic?

let tuneSheikhCars (sheikhId) (hpIncrement) (cars) =
    cars
    |> List.filter (fun x -> x.Owner.Id = sheikhId)
    |> List.map (fun x -> { x with HorsePower = x.HorsePower + hpIncrement })

Similar goes for inserting new Supercar. I want to query-check first if Owner/Sheikh exists, before attempting insertion. You can pass it as a separate parameter, but code gets messier and messier.

No matter how I twist and turn things around, in the real world, I'm not only concerned by current steps I need to take to complete a task, but also with possible future steps. Now, I could define a record that only contains relevant data per each request. But, as seen above, I'd be eventually forced to make ~ 2^NRelations of such records, instead of one. A reusable one, that serves like a bucket for a preferred persistence mechanism, allowing me to load relations later on, because nothing lives in memory long term.

I strayed away slightly here from ORM vs no-ORM discussion that I've started earlier. Because, now I realize that this problem isn't just about mapping things from type A to type B.

ValidationResult as a return type, but now we're entering a territory where C#, as a language, is totally unprepared for.

Just my two cents:

Yes, it is possible to map the NaturalNumber object to an E.F class property using ValueConverters. Here are a couple of articles talking about this:

- Andrew Lock: Using strongly-typed entity IDs to avoid primitive obsession.

- Thomas Levesque: Using C# 9 records as strongly-typed ids.

But even though you can use this, you may still encounter other use cases that you cannot tackle. E.F is just a tool with its limitations, and there will be things you can do with simple C# that you can not do with E.F.

I think you need to consider why you want to use E.F, understand its strengths and weaknesses, and then decide if it suits your project.

Do you want to use EF solely as a data access layer, or do you want it to be your domain layer? Maybe for a big project you can use E.F only as a data access layer and use plain old C# files for the domain layer. In a [small | medium | quick & dirty] project, use it as your domain layer.

There are bad things we already know:

- Increased complexity.

- There will be things you can not do. So you must be careful that you will not need something E.F can not give you.

- You need to know how it works. For example, know that accessing myRestaurant.MaitreD implies a new database access (if you have not loaded it previously).

But sometimes E.F shines, for example:

- You are programming against the E.F model, not against a specific database, so it is easier to migrate to another database.

- Maybe migrating to another database is rare, but it is very convenient to run tests against an in-memory SQLite database. Tests against a real database can be run in the CI/CD environment, for example.

- Having a centralized point to process changes (SaveChanges) allows you to easily do interesting things: save "CreatedBy," "CreatedDate," "ModifiedBy," and "ModifiedDate" fields for all tables or create historical tables (if you do not have access to the SQL Server temporal tables).

- Global query filters allow you to make your application multi-tenant with very little code: all tables implement IByClient, a global filter for these tables... and voilà, your application becomes multi-client with just a few lines.

I am not an E.F defender, in fact I have a love & hate relationship with it. But I believe it is a powerful tool for certain projects. As always, the important thing is to think whether it is the right tool for your specific project :)

Thank you, all, for writing. There's more content in your comments than I can address in one piece, but I've written a follow-up article that engages with some of your points: Domain Model first.

Specifically regarding the point of having to hand-write a lot of code to deal with multiple tables joined in various fashions, I grant that while typing isn't a bottleneck, the more code you add, the greater the risk of bugs. I'm not trying to be dismissive of ORMs as a general tool. If you truly, inescapably, have a relational model, then an ORM seems like a good choice. If so, however, I don't see that you can get good encapsulation at the same time.

And indeed, an important responsibility of a software architect is to consider trade-offs to find a good solution for a particular problem. Sometimes such a solution involves an ORM, but sometimes, it doesn't. In my world, it usually doesn't.

Do I breathe rarefied air, dealing with esoteric problems that mere mortals can never hope to encounter? I don't think so. Rather, I offer the interpretation that I sometimes approach problems in a different way. All I really try to do with these articles is to present to the public the ways I think about problems. I hope, then, that it may inspire other people to consider problems from more than one angle.

Finally, from my various customer engagements I get the impression that people also like ORMs because 'entity classes' look strongly typed. As a counter-argument, I suggest that this may be an illusion.

A first stab at the Brainfuck kata

I almost gave up, but persevered and managed to produce something that works.

As I've previously mentioned, a customer hired me to swing by to demonstrate test-driven development and tactical Git. To make things interesting, we agreed that they'd give me a kata at the beginning of the session. I didn't know which problem they'd give me, so I thought it'd be a good idea to come prepared. I decided to seek out katas that I hadn't done before.

The demonstration session was supposed to be two hours in front of a participating audience. In order to make my preparation aligned to that situation, I decided to impose a two-hour time limit to see how far I could get. At the same time, I'd also keep an eye on didactics, so preferably proceeding in an order that would be explainable to an audience.

Some katas are more complicated than others, so I'm under no illusion that I can complete any, to me unknown, kata in under two hours. My success criterion for the time limit is that I'd like to reach a point that would satisfy an audience. Even if, after two hours, I don't reach a complete solution, I should leave a creative and intelligent audience with a good idea of how to proceed.

After a few other katas, I ran into the Brainfuck kata one Thursday. In this article, I'll describe some of the most interesting things that happened along the way. If you want all the details, the code is available on GitHub.

Understanding the problem #

I had heard about Brainfuck before, but never tried to write an interpreter (or a program, for that matter).

The kata description lacks examples, so I decided to search for them elsewhere. The Wikipedia article comes with some examples of small programs (including Hello, World), so ultimately I used that for reference instead of the kata page.

I'm happy I wasn't making my first pass on this problem in front of an audience. I spent the first 45 minutes just trying to understand the examples.

You might find me slow, since the rules of the language aren't that complicated. I was, however, confused by the way the examples were presented.

As the Wikipedia article explains, in order to add two numbers together, one can use this idiom:

[->+<]

The article then proceeds to list a small, complete program that adds two numbers. This program adds numbers this way:

[       Start your loops with your cell pointer on the loop counter (c1 in our case)
< +     Add 1 to c0
> -     Subtract 1 from c1
]       End your loops with the cell pointer on the loop counter

I couldn't understand why this annotated 'walkthrough' explained the idiom in reverse. Several times, I was on the verge of giving up, feeling that I made absolutely no progress. Finally, it dawned on me that the second example is not an explanation of the first example, but rather a separate example that makes use of the same idea, but expresses it in a different way.

Most programming languages have more than one way to do things, and this is also the case here. [->+<] adds two numbers together, but so does [<+>-].
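To make the equivalence concrete, here's a minimal sketch of a loop-only interpreter. It is not the code from the kata repository; the AddIdiomDemo class, its Run method, and the idea of passing in pre-filled cells and a starting pointer are all assumptions made for illustration. It only understands +, -, <, >, [ and ].

```csharp
using System;

public static class AddIdiomDemo
{
    // Hypothetical helper: runs a program made of + - < > [ ] against a copy
    // of 'cells', with the pointer starting at 'start', and returns the result.
    public static byte[] Run(string program, byte[] cells, int start)
    {
        var memory = (byte[])cells.Clone();
        var pointer = start;
        for (var i = 0; i < program.Length; i++)
        {
            switch (program[i])
            {
                case '+': memory[pointer]++; break;
                case '-': memory[pointer]--; break;
                case '>': pointer++; break;
                case '<': pointer--; break;
                case '[':
                    // Skip the loop body when the current cell is zero.
                    if (memory[pointer] == 0)
                        i = FindMatch(program, i, 1);
                    break;
                case ']':
                    // Jump back to the matching '[' while the cell is non-zero.
                    if (memory[pointer] != 0)
                        i = FindMatch(program, i, -1);
                    break;
            }
        }
        return memory;
    }

    // Scans forwards (step 1) or backwards (step -1) for the matching bracket.
    private static int FindMatch(string program, int index, int step)
    {
        var depth = 0;
        for (var i = index; 0 <= i && i < program.Length; i += step)
        {
            if (program[i] == '[') depth++;
            if (program[i] == ']') depth--;
            if (depth == 0) return i;
        }
        throw new ArgumentException("Unbalanced brackets.");
    }
}
```

With cells { 2, 3 }, calling AddIdiomDemo.Run("[->+<]", cells, 0) drains the first cell into the second and yields { 0, 5 }, while AddIdiomDemo.Run("[<+>-]", cells, 1) starts on the second cell, drains it into the first, and yields { 5, 0 }. The sum is the same; only the cell that acts as loop counter differs.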

Once you understand something, it can be difficult to recall why you ever found it confusing. Now that I get this, I'm having trouble explaining what I was originally thinking, and why it confused me.

This experience does, however, drive home a point for educators: When you introduce a concept and then provide examples, the first example should be a direct continuation of the introduction, and not some variation. Variations are fine, too, but should follow later and be clearly labelled.

After 45 minutes I had finally cracked the code and was ready to get programming.

Getting started #

The kata description suggests starting with the +, -, >, and < instructions to manage memory. I briefly considered that, but on the other hand, I wanted to have some test coverage. Usually, I take advantage of test-driven development, and while I wasn't sure how to proceed, I wanted to have some tests.

If I were to exclusively start with memory management, I would need some way to inspect the memory in order to write assertions. This struck me as violating encapsulation.

Instead, I thought that I'd write the simplest program that would produce some output, because if I had output, I would have something to verify.

That, on the other hand, meant that I had to consider how to model input and output. The Wikipedia article describes these as

"two streams of bytes for input and output (most often connected to a keyboard and a monitor respectively, and using the ASCII character encoding)."

Knowing that you can model the console's input and output streams as polymorphic objects, I decided to model the output as a TextWriter. The lowest-valued printable ASCII character is space, which has the byte value 32, so I wrote this test:

[Theory]
[InlineData("++++++++++++++++++++++++++++++++.", " ")] // 32 increments; ASCII 32 is space
public void Run(string program, string expected)
{
    using var output = new StringWriter();
    var sut = new BrainfuckInterpreter(output);
    sut.Run(program);
    var actual = output.ToString();
    Assert.Equal(expected, actual);
}
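One consequence of modelling output as a TextWriter is that the same code can write to a StringWriter in a test and to Console.Out when run for real, since both are TextWriter objects. The following is only a sketch of that idea; the OutputDemo class and its WriteCell and Capture methods are hypothetical names, not part of the kata code.

```csharp
using System;
using System.IO;

public static class OutputDemo
{
    // Hypothetical helper: emits one memory cell as a character,
    // which is roughly what the '.' instruction has to do.
    public static void WriteCell(TextWriter output, byte cell)
    {
        output.Write((char)cell);
    }

    // Captures the output in memory, the way a test would.
    public static string Capture(byte cell)
    {
        using var output = new StringWriter();
        WriteCell(output, cell);
        return output.ToString();
    }
}
```

OutputDemo.Capture(32) returns a single space, while OutputDemo.WriteCell(Console.Out, 32) writes that same character to the console. Neither call needs to know which kind of writer it was given.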