ploeh blog danish software design

The Maybe monad

The Maybe sum type forms a monad. An article for object-oriented programmers.

This article is an instalment in an article series about monads.

The Maybe sum type is a useful data type that forms a functor. Like many other useful functors, it also forms a monad.

It can be useful when querying another data structure (like a list or a tree) for a value that may or may not be present. It's also useful when performing a calculation that may not be possible, such as taking the square root of of a number, or calculating the average of a collection of values. A third common use case is when parsing a value, although usually, the Either sum type is often a better option for that task.

Bind #

A monad must define either a bind or join function. In C#, monadic bind is called SelectMany. Depending on how you've implemented Maybe<T> the details of the implementation may vary, but the observable behaviour must always be the same. In this article I'm going to continue to use the Maybe<T> class first shown in the article about the Maybe functor. (That's not really my favourite implementation, but it doesn't matter.)

In that code base, I chose to implement SelectMany as an extension method. Why I didn't use an instance method I no longer remember. Again, this doesn't matter.

public static Maybe<TResult> SelectMany<T, TResult>( this Maybe<T> source, Func<T, Maybe<TResult>> selector) { if (source.HasItem) return selector(source.Item); else return new Maybe<TResult>(); }

If the source has an item, SelectMany calls selector with the value and returns the result. If not, the result is an empty Maybe<TResult>.

Monadic bind becomes useful when you have more than one function that returns a monadic value. Consider, for example, this variation of Average:

public static Maybe<double> Average(IEnumerable<double> values) { if (values.Any()) return new Maybe<double>(values.Average()); else return new Maybe<double>(); }

You can't calculate the average of an empty collection, so if values is empty, this function returns an empty Maybe<double>.

If you only needed this Average function, then you could use Maybe's functor affordance to map the result. On the other hand, imagine that you'd like to pass the Average result to this Sqrt function:

public static Maybe<double> Sqrt(double d) { var result = Math.Sqrt(d); switch (result) { case double.NaN: case double.PositiveInfinity: return new Maybe<double>(); default: return new Maybe<double>(result); } }

This is another calculation that may not be possible. If you try to compose Average and Sqrt with Select (the functor's map function), you'd get a Maybe<Maybe<double>>. Fortunately, however, monads are functors you can flatten:

[Theory] [InlineData(new double[0], -100)] [InlineData(new double[] { 5, 3 }, 2)] [InlineData(new double[] { 1, -2 }, -100)] public void ComposeAverageAndSqrt(double[] values, double expected) { Maybe<double> actual = Average(values).SelectMany(Sqrt); Assert.Equal(expected, actual.GetValueOrFallback(-100)); }

The SelectMany method flattens as it goes. Viewed in this way, Scala's flatMap seems like a more appropriate name.

The above test demonstrates how SelectMany returns a flattened Maybe<double>. The first test case has zero elements, so the Average method is going to return an empty Maybe<double>. SelectMany handles that gracefully by returning another empty value.

In the second test case Average returns a populated value that contains the number 4. SelectMany passes 4 to Sqrt, which returns 2 wrapped in a Maybe<double>.

In the third test case, the average is -.5, wrapped in a Maybe<double>. SelectMany passes -.5 on to Sqrt, which returns an empty value.

Query syntax #

Monads also enable query syntax in C# (just like they enable other kinds of syntactic sugar in languages like F# and Haskell). As outlined in the monad introduction, however, you must add a special SelectMany overload:

public static Maybe<TResult> SelectMany<T, U, TResult>( this Maybe<T> source, Func<T, Maybe<U>> k, Func<T, U, TResult> s) { return source.SelectMany(x => k(x).Select(y => s(x, y))); }

As I also mentioned in the monad introduction, it seems to me that you can always implement this overload with the above expression. Once this overload is in place, you can rewrite the above composition in query syntax, if that's more to your liking:

[Theory] [InlineData(new double[0], -100)] [InlineData(new double[] { 5, 3 }, 2)] [InlineData(new double[] { 1, -2 }, -100)] public void ComposeAverageAndSqrtWithQuerySyntax(double[] values, double expected) { Maybe<double> actual = from a in Average(values) from s in Sqrt(a) select s; Assert.Equal(expected, actual.GetValueOrFallback(-100)); }

In this case, query syntax is more verbose, but the behaviour is the same. It's just two different ways of writing the same expression. The C# compiler desugars the query-syntax expression to one that composes with SelectMany.

Join #

In the introduction you learned that if you have a Flatten or Join function, you can implement SelectMany, and the other way around. Since we've already defined SelectMany for Maybe<T>, we can use that to implement Join. In this article I use the name Join rather than Flatten. This is an arbitrary choice that doesn't impact behaviour. Perhaps you find it confusing that I'm inconsistent, but I do it in order to demonstrate that the behaviour is the same even if the name is different.

The concept of a monad is universal, but the names used to describe its components differ from language to language. What C# calls SelectMany, Scala calls flatMap, and what Haskell calls join, other languages may call Flatten.

You can always implement Join by using SelectMany with the identity function.

public static Maybe<T> Join<T>(this Maybe<Maybe<T>> source) { return source.SelectMany(x => x); }

Instead of flattening as you go with SelectMany, you could also use Select and Join to compose Average and Sqrt:

[Theory] [InlineData(new double[0], -100)] [InlineData(new double[] { 5, 3 }, 2)] [InlineData(new double[] { 1, -2 }, -100)] public void JoinAverageAndSqrt(double[] values, double expected) { Maybe<double> actual = Average(values).Select(Sqrt).Join(); Assert.Equal(expected, actual.GetValueOrFallback(-100)); }

You'd typically not write the composition like this, as it seems more convenient to use SelectMany, but you could. The behaviour is the same.

Return #

Apart from monadic bind, a monad must also define a way to put a normal value into the monad. Conceptually, I call this function return (because that's the name that Haskell uses). You don't, however, have to define a static method called Return. What's of importance is that the capability exists. For Maybe<T> in C# the idiomatic way would be to use a constructor. This constructor overload does double duty as monadic return:

public Maybe(T item) { if (item == null) throw new ArgumentNullException(nameof(item)); this.HasItem = true; this.Item = item; }

Why is it that overload, and not the other one? Because if you try to use new Maybe<T>() as return, you'll find that it breaks the monad laws. Try it. It's a good exercise.

Left identity #

Now that we're on the topic of the monad laws, let's see what they look like for the Maybe monad, starting with the left identity law.

[Theory] [InlineData(0)] [InlineData(1)] [InlineData(2)] public void LeftIdentity(int a) { Func<int, Maybe<int>> @return = i => new Maybe<int>(i); Func<int, Maybe<double>> h = i => i != 0 ? new Maybe<double>(1.0 / i) : new Maybe<double>(); Assert.Equal(@return(a).SelectMany(h), h(a)); }

To provide some variety, h is another Maybe-valued function - this time one that performs a safe multiplicative inverse. If i is zero, it returns an empty Maybe<double> because you can't devide by zero; otherwise, it returns one divided by i (wrapped in a Maybe<double>).

Right identity #

In a similar manner, we can showcase the right identity law as a test.

[Theory] [InlineData("")] [InlineData("foo")] [InlineData("42")] [InlineData("1337")] public void RightIdentity(string a) { Maybe<int> f(string s) { if (int.TryParse(s, out var i)) return new Maybe<int>(i); else return new Maybe<int>(); } Func<int, Maybe<int>> @return = i => new Maybe<int>(i); Maybe<int> m = f(a); Assert.Equal(m.SelectMany(@return), m); }

Again, for variety's sake, for f I've chosen a function that should probably be named TryParseInt if used in another context.

Associativity #

The last monad law is the associativity law that we can demonstrate like this:

[Theory] [InlineData("bar")] [InlineData("-1")] [InlineData("0")] [InlineData("4")] public void Associativity(string a) { Maybe<int> f(string s) { if (int.TryParse(s, out var i)) return new Maybe<int>(i); else return new Maybe<int>(); } Func<int, Maybe<double>> g = i => Sqrt(i); Func<double, Maybe<double>> h = d => d == 0 ? new Maybe<double>() : new Maybe<double>(1 / d); var m = f(a); Assert.Equal(m.SelectMany(g).SelectMany(h), m.SelectMany(x => g(x).SelectMany(h))); }

Here, I've copy-and-pasted the TryParseInt function I also used in the right identity example, and combined it with the Sqrt function and a safe multiplicative inverse. The law, however, should hold for all pure functions that type-check. The functions shown here are just examples.

As usual, a parametrised test is no proof that the law holds. I provide the tests only as examples of what the laws looks like.

Conclusion #

The Maybe sum type is a versatile and useful data structure that forms a monad. This enables you to compose disparate functions that each may err by failing to return a value.

Next: An Either monad.

The List monad

Lists, collections, deterministic Iterators form a monad. An article for object-oriented programmers.

This article is an instalment in an article series about monads.

In the article series about functors I never included an explicit article about collections. Instead, I wrote:

"Perhaps the most well-known of all functors is List, a.k.a. Sequence. C# query syntax can handle any functor, but most people only think of it as a language feature related to

IEnumerable<T>. Since the combination ofIEnumerable<T>and query syntax is already well-described, I'm not going to cover it explicitly here."

Like many other useful functors, a list also forms a monad. In this article, I call it list. In Haskell the most readily available equivalent is the built-in linked list ([]). Likewise, in F#, the fundamental collection types are linked lists and arrays. In C#, any IEnumerable<T> forms a monad. The .NET IEnumerable<T> interface is an application of the Iterator design pattern. There are many possible names for this monad: list, sequence, collection, iterator, etcetera. I've arbitrarily chosen to mostly use list.

SelectMany #

In the introduction to monads you learned that monads are characterised by having either a join (or flatten) function, or a bind function. While in Haskell, monadic bind is the >>= operator, in C# it's a method called SelectMany. Perhaps you wonder about the name.

The name SelectMany makes most sense in the context of lists. An example would be useful around here.

Imagine that you have to parse a CSV file. You've already read the file from disc and split the contents into lines. Now you need to split each line on commas.

> var lines = new[] { "foo,bar,baz", "qux,quux,quuz" }; > lines.Select(l => l.Split(',')).ToArray() string[2][] { string[3] { "foo", "bar", "baz" }, string[3] { "qux", "quux", "quuz" } }

When you use Select the result is a nested list. You've already learned that a monad is a functor you can flatten. In C#, you can 'flatten as you go' with SelectMany.

The above scenario offers a hint at why the method is named SelectMany. You use it instead of Select when the selector returns many values. In the above example, the Split method returns many values.

So, instead of Select, use SelectMany if you need a flattened list:

> lines.SelectMany(l => l.Split(',')).ToArray()

string[6] { "foo", "bar", "baz", "qux", "quux", "quuz" }

I've never had the opportunity to ask any of the LINQ designers about that naming choice, but this explanation makes sense to me. Even if it turns out that they had something else in mind when they named the method, I think that my explanation at least serves as a mnemonic device.

The name is still unfortunate, since it mostly makes sense for the list monad. Already when you use SelectMany with the Maybe monad, the name makes less sense.

Flatten #

In the introduction you learned that if you have a Flatten or Join function, you can implement SelectMany, and the other way around. In C#, LINQ already comes with SelectMany, but surprisingly, Flatten isn't built in.

You can always implement Flatten by using SelectMany with the identity function.

public static IEnumerable<T> Flatten<T>(this IEnumerable<IEnumerable<T>> source) { return source.SelectMany(x => x); }

Recall that the selector passed to SelectMany must have the type Func<TSource, IEnumerable<TResult>>. There's no rule, however, that says that TSource can't be IEnumerable<TResult>. If x in x => x has the type IEnumerable<TResult>, then x => x has the type Func<IEnumerable<TResult>, IEnumerable<TResult>> and it all type-checks.

Here's a test that demonstrates how Flatten works:

[Fact] public void FlattenExample() { string[][] nested = new[] { new[] { "foo", "bar" }, new[] { "baz" } }; IEnumerable<string> flattened = nested.Flatten(); Assert.Equal(new[] { "foo", "bar", "baz" }, flattened); }

In Haskell Flatten is called join and is implemented this way:

join :: (Monad m) => m (m a) -> m a join x = x >>= id

It's the same implementation as the above C# method: monadic bind (SelectMany in C#; >>= in Haskell) with the identity function. The Haskell implementation works for all monads.

Return #

Apart from monadic bind, a monad must also define a way to put a normal value into the monad. For the list monad, this implies a single value promoted to a list. Surprisingly, the .NET base class library doesn't come with such a built-in function, but you can easily implement it yourself:

public static IEnumerable<T> Return<T>(T x) { yield return x; }

In practice, however, you'd normally instead create a singleton array, like this: new[] { 2112 }. Since arrays implement IEnumerable<T>, this works just as well.

Why is this the correct implementation of return?

Wouldn't this work?

public static IEnumerable<T> ReturnZero<T>(T x) { yield break; }

Or this?

public static IEnumerable<T> ReturnTwo<T>(T x) { yield return x; yield return x; }

None of these are appropriate because they break the monad laws. Try it, as an exercise!

Left identity #

Now that we're on the topic of the monad laws, let's see what they look like for the list monad, starting with the left identity law.

[Theory] [InlineData("")] [InlineData("foo")] [InlineData("foo,bar")] [InlineData("foo,bar,baz")] public void LeftIdentity(string a) { Func<string, IEnumerable<string>> @return = List.Return; Func<string, IEnumerable<string>> h = s => s.Split(','); Assert.Equal(@return(a).SelectMany(h), h(a)); }

As usual, a parametrised test is no proof that the law holds. I provide it only as an example of what the law looks like.

Right identity #

Likewise, we can showcase the right identity law as a test:

[Theory] [InlineData(0)] [InlineData(1)] [InlineData(9)] public void RightIdentity(int a) { Func<int, IEnumerable<string>> f = i => Enumerable.Repeat("foo", i); Func<string, IEnumerable<string>> @return = List.Return; IEnumerable<string> m = f(a); Assert.Equal(m.SelectMany(@return), m); }

These tests all pass.

Associativity #

The last monad law is the associativity law that we can demonstrate like this:

[Theory] [InlineData(0)] [InlineData(3)] [InlineData(8)] public void Associativity(int a) { Func<int, IEnumerable<string>> f = i => Enumerable.Repeat("foo", i); Func<string, IEnumerable<char>> g = s => s; Func<char, IEnumerable<string>> h = c => new[] { c.ToString().ToUpper(), c.ToString().ToLower() }; IEnumerable<string> m = f(a); Assert.Equal(m.SelectMany(g).SelectMany(h), m.SelectMany(x => g(x).SelectMany(h))); }

The three functions f, g, and h are just silly functions I chose for variety's sake.

Conclusion #

Lists, arrays, collections, Iterators are various names for a common idea: that of an ordered sequence of values. In this article, I've called it list for simplicity's sake. It's possible to flatten nested lists in a well-behaved manner, which makes list a monad.

Next: The Maybe monad.

Monad laws

An article for object-oriented programmers.

This article is an instalment in an article series about monads.

Just as functors must obey the functor laws, monads must follow the monad laws. When looked at from the right angle, these are intuitive and reasonable rules: identity and associativity.

That sounds almost like a monoid, but the rules are more relaxed. For monoids, we require a binary operation. That's not the case for functors and monads. Instead of binary operations, for monads we're going to use Kleisli composition.

This article draws heavily on the Haskell wiki on monad laws.

In short, the monad laws comprise three rules:

- Left identity

- Right identity

- Associativity

The article describes each in turn, starting with left identity.

Left identity #

A left identity is an element that, when applied to the left of an operator, doesn't change the other element. When used with the fish operator it looks like this:

id >=> h ≡ h

For monads, id turns out to be return, so more specifically:

return >=> h ≡ h

In C# we can illustrate the law as a parametrised test:

[Theory] [InlineData(-1)] [InlineData(42)] [InlineData(87)] public void LeftIdentityKleisli(int a) { Func<int, Monad<int>> @return = Monad.Return; Func<int, Monad<string>> h = i => Monad.Return(i.ToString()); Func<int, Monad<string>> composed = @return.Compose(h); Assert.Equal(composed(a), h(a)); }

Recall that we can implement the fish operators as Compose overloads. The above test demonstrates the notion that @return composed with h should be indistinguishable from h. Such a test should pass.

Keep in mind, however, that Kleisli composition is derived from the definition of a monad. The direct definition of monads is that they come with a return and bind functions (or, alternatively return and join). If we inline the implementation of Compose, the test instead looks like this:

[Theory] [InlineData(-1)] [InlineData(42)] [InlineData(87)] public void LeftIdentityInlined(int a) { Func<int, Monad<int>> @return = Monad.Return; Func<int, Monad<string>> h = i => Monad.Return(i.ToString()); Func<int, Monad<string>> composed = x => @return(x).SelectMany(h); Assert.Equal(composed(a), h(a)); }

or, more succinctly:

Assert.Equal(@return(a).SelectMany(h), h(a));

This is also the way the left identity law is usually presented in Haskell:

return a >>= h = h a

In Haskell, monadic bind is represented by the >>= operator, while in C# it's the SelectMany method. Thus, the Haskell and the C# representations are equivalent.

Right identity #

Right identity starts out similar to left identity. Using the fish operator, the Haskell wiki describes it as:

f >=> return ≡ f

Translated to a C# test, an example of that law might look like this:

[Theory] [InlineData("foo")] [InlineData("ploeh")] [InlineData("lawful")] public void RightIdentityKleisli(string a) { Func<string, Monad<int>> f = s => Monad.Return(s.Length); Func<int, Monad<int>> @return = Monad.Return; Func<string, Monad<int>> composed = f.Compose(@return); Assert.Equal(composed(a), f(a)); }

Again, if we inline Compose, the test instead looks like this:

[Theory] [InlineData("foo")] [InlineData("ploeh")] [InlineData("lawful")] public void RightIdentityInlined(string a) { Func<string, Monad<int>> f = s => Monad.Return(s.Length); Func<int, Monad<int>> @return = Monad.Return; Func<string, Monad<int>> composed = x => f(x).SelectMany(@return); Assert.Equal(composed(a), f(a)); }

Now explicitly call the f function and assign it to a variable m:

[Theory] [InlineData("foo")] [InlineData("ploeh")] [InlineData("lawful")] public void RightIdentityInlinedIntoAssertion(string a) { Func<string, Monad<int>> f = s => Monad.Return(s.Length); Func<int, Monad<int>> @return = Monad.Return; Monad<int> m = f(a); Assert.Equal(m.SelectMany(@return), m); }

The assertion is now the C# version of the normal Haskell definition of the right identity law:

m >>= return = m

In other words, return is the identity element for monadic composition. It doesn't really 'do' anything in itself.

Associativity #

The third monad law is the associativity law. Keep in mind that an operator like + is associative if it's true that

(x + y) + z = x + (y + z)

Instead of +, the operator in question is the fish operator, and since values are functions, we typically call them f, g, and h rather than x, y, and z:

(f >=> g) >=> h ≡ f >=> (g >=> h)

Translated to a C# test as an example, the law looks like this:

[Theory] [InlineData(0)] [InlineData(1)] [InlineData(2)] public void AssociativityKleisli(double a) { Func<double, Monad<bool>> f = i => Monad.Return(i % 2 == 0); Func<bool, Monad<string>> g = b => Monad.Return(b.ToString()); Func<string, Monad<int>> h = s => Monad.Return(s.Length); Func<double, Monad<int>> left = (f.Compose(g)).Compose(h); Func<double, Monad<int>> right = f.Compose(g.Compose(h)); Assert.Equal(left(a), right(a)); }

The outer brackets around (f.Compose(g)) are actually redundant; we should remove them. Also, inline Compose:

[Theory] [InlineData(0)] [InlineData(1)] [InlineData(2)] public void AssociativityInlined(double a) { Func<double, Monad<bool>> f = i => Monad.Return(i % 2 == 0); Func<bool, Monad<string>> g = b => Monad.Return(b.ToString()); Func<string, Monad<int>> h = s => Monad.Return(s.Length); Func<double, Monad<string>> fg = x => f(x).SelectMany(g); Func<double, Monad<int>> left = x => fg(x).SelectMany(h); Func<bool, Monad<int>> gh = x => g(x).SelectMany(h); Func<double, Monad<int>> right = x => f(x).SelectMany(gh); Assert.Equal(left(a), right(a)); }

If there's a way in C# to inline the local compositions fg and gh directly into left and right, respectively, I don't know how. In any case, the above is just an intermediate step.

For both left and right, notice that the first step involves invoking f with x. If, instead, we call that value m, we can rewrite the test like this:

[Theory] [InlineData(0)] [InlineData(1)] [InlineData(2)] public void AssociativityInlinedIntoAssertion(double a) { Func<double, Monad<bool>> f = i => Monad.Return(i % 2 == 0); Func<bool, Monad<string>> g = b => Monad.Return(b.ToString()); Func<string, Monad<int>> h = s => Monad.Return(s.Length); Monad<bool> m = f(a); Assert.Equal(m.SelectMany(g).SelectMany(h), m.SelectMany(x => g(x).SelectMany(h))); }

The above assertion corresponds to the way the associativity law is usually represented in Haskell:

(m >>= g) >>= h = m >>= (\x -> g x >>= h)

Once more, keep in mind that >>= in Haskell corresponds to SelectMany in C#.

Conclusion #

A proper monad must satisfy the three monad laws: left identity, right identity, and associativity. Together, left identity and right identity are know as simply identity. What's so special about identity and associativity?

As Bartosz Milewski explains, a category must satisfy associativity and identity. When it comes to monads, the category in question is the Kleisli category. Or, as the Haskell wiki puts it:

Monad axioms:

Kleisli composition forms

a category.

Subsequent articles in this article series will now proceed to give examples of concrete monads, and what the laws look like for each of these.

Next: The List monad.

Kleisli composition

A way to compose two monadic functions. An article for object-oriented programmers.

This article is an instalment in an article series about monads.

There's a bit of groundwork which will be useful before we get to the monad laws. There are two ways to present the monad laws. One is quite unintuitive, but uses only the concepts already discussed in the introductory article. The other way to present the laws is much more intuitive, but builds on a derived concept: Kleisli composition.

I find it preferable to take the intuitive route, even if it requires this little detour around the Kleisli category.

Monadic functions #

Imagine some monad - any monad. It could be Maybe (AKA Option), Either (AKA Result), Task, State, etcetera. In Haskell notation we denote it m. (Don't worry if you don't know Haskell. The type notation is fairly intuitive, and I'll explain enough of it along the way.)

You may have a function that takes some input and returns a monadic value. Perhaps it's a parser that takes a string as input and returns a Maybe or Either as output:

String -> Maybe Int

Perhaps it's a database query that takes an ID as input and returns a Task or IO value:

UUID -> IO Reservation

Notice that in Haskell, you write the input to the left of the output. (This is also the case in F#.) Functions looks like arrows that point from the input to the output, which is a common depiction that I've also used before.

If we generalise this notation, we would write a monadic function like this:

a -> m b

a is a generic type that denotes input. It could be String or UUID as shown above, or anything else. Likewise, b is another generic type that could be Int, Reservation, or anything else. And m, as already explained, may be any monad. The corresponding C# method signature would be:

Func<T, Monad<T1>>

In C#, it's idiomatic to name generic type arguments T and T1 rather than a and b, but the idea is the same.

The previous article used Functor<T> as a stand-in for any functor. Likewise, now that you know what a monad is, the type Monad<T> is a similar pretend type. It symbolises 'any monad'.

Kleisli composition #

In Functions as pipes you got a visual illustration of how functions compose. If you have a function that returns a Boolean value, you can use that Boolean value as input to a function that accepts Boolean values as input. Generalised, if one function returns a and another function accepts a as input, you can compose these two functions:

(a -> b) -> (b -> c) -> (a -> c)

A combinator of this type takes two functions as input: a -> b (a function from a to b) and (b -> c), and returns a new function that 'hides' the middle step: a -> c.

If this is possible, it seems intuitive that you can also compose monadic functions:

(>=>) :: Monad m => (a -> m b) -> (b -> m c) -> a -> m c

The >=> operator is known as the fish operator - or more precisely the right fish operator, since there's also a left fish operator <=<.

This is known as Kleisli composition. In C# you could write it like this:

public static Func<T, Monad<T2>> Compose<T, T1, T2>( this Func<T, Monad<T1>> action1, Func<T1, Monad<T2>> action2) { return x => action1(x).SelectMany(action2); }

Notice that Kleisli composition works for any monad. You don't need any new abilities in order to compose a monad in this way. The composition exclusively relies on SelectMany (monadic bind).

Here's a usage example:

Func<string, Monad<int>> f = s => Monad.Return(s.Length); Func<int, Monad<bool>> g = i => Monad.Return(i % 2 == 0); Func<string, Monad<bool>> composed = f.Compose(g);

The Compose method corresponds to the right fish operator, but obviously you could define an overload that flips the arguments. That would correspond to the left fish operator.

Universality #

Kleisli composition seems compelling, but in reality I find that I rarely use it. If it isn't that useful, then why dedicate an entire article to it?

As I wrote in the introduction, it's mostly because it gives us an intuitive way to describe the monad laws.

Kleisli arrows form a category. As such, it's a universal abstraction. I admit that this article series has strayed away from its initial vision of discussing category theory, but I still find it assuring when I encounter some bedrock of theory behind a programming concept.

Conclusion #

Kleisli composition lets you compose two (or more) compatible monadic functions into a single monadic function.

Next: Monad laws.

Monads

A monad is a common abstraction. While typically associated with Haskell, monads also exist in C# and other languages.

This article series is part of a larger series of articles about functors, applicatives, and other mappable containers.

If you understand what a functor is, it should be easy to grasp the idea of a monad. It's a functor you can flatten.

There's a little more to it than that, and you also need to learn what I mean by flatten, but that's the essence of it.

In this article-series-within-an-article series, I'll explain this disproportionally dreaded concept, giving you plenty of examples as well as a more formal definition. Examples will be in both C#, F#, and Haskell, although I plan to emphasise C#. I expect that there's little need of explaining monads to people already familiar with Haskell. I'll show examples as separate articles in this series. These articles are mostly aimed at object-oriented programmers curious about monads.

- Kleisli composition

- Monad laws

- The List monad

- The Maybe monad

- An Either monad

- The Identity monad

- The Lazy monad

- Asynchronous monads

- The State monad

- The Reader monad

- Reactive monad

- The IO monad

- Test Data Generator monad

This list is far from exhaustive; more monads exist.

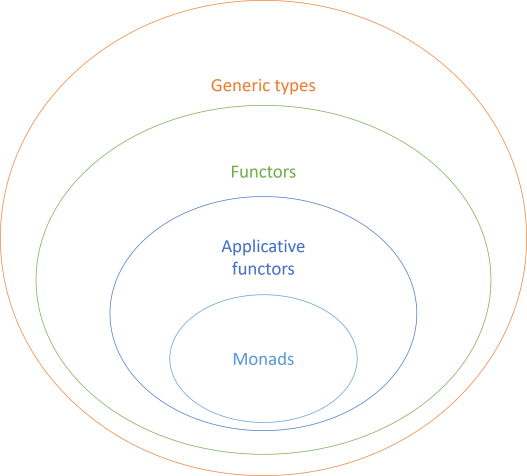

All monads are applicative functors, but not all applicative functors are monads. As the set diagram reiterates, applicative functors are functors, but again: not necessarily the other way around.

Flattening #

A monad is a functor you can flatten. What do I mean by flatten?

As in the article that introduces functors, imagine that you have some Functor<T> container. More concretely, this could be IEnumerable<T>, Maybe<T>, Task<T>, etcetera.

When working with functors, you sometimes run into situations where the containers nest inside each other. Instead of a Functor<decimal>, you may find yourself with a Functor<Functor<decimal>>, or maybe even an object that's thrice or quadruply nested.

If you can flatten such nested functor objects to just Functor<T>, then that functor also forms a monad.

In C#, you need a method with this kind of signature:

public static Functor<T> Flatten<T>(this Functor<Functor<T>> source)

Notice that this method takes a Functor<Functor<T>> as input and returns a Functor<T> as output. I'm not showing the actual implementation, since Functor<T> is really just a 'pretend' functor (and monad). In the example articles that follow, you'll see actual implementation code.

With Flatten you can write code like this:

Functor<decimal> dest = source.Flatten();

Here, source is a Functor<Functor<decimal>>.

This function also exists in Haskell, although there, it's called join:

join :: Monad m => m (m a) -> m a

For any Monad m you can flatten or join a nested monad m (m a) to a 'normal' monad m a.

This is the same function as Flatten, so in C# we could define an alias like this:

public static Functor<T> Join<T>(this Functor<Functor<T>> source) { return source.Flatten(); }

Usually, however, you don't see monads introduced via join or Flatten. It's a shame, really, because it's the most straightforward description.

Bind #

Most languages that come with a notion of monads define them via a function colloquially referred to as monadic bind or just bind. In C# it's called SelectMany, F# computation expressions call it Bind, and Scala calls it flatMap (with a vestige of the concept of flattening). Haskell just defines it as an operator:

(>>=) :: Monad m => m a -> (a -> m b) -> m b

When talking about it, I tend to call the >>= operator bind.

Translated to C# it has this kind of signature:

public static Functor<TResult> SelectMany<T, TResult>( this Functor<T> source, Func<T, Functor<TResult>> selector)

It looks almost like a functor's Select method (AKA map or fmap function). The difference is that this selector returns a functor value (Functor<TResult>) rather than a 'naked' TResult value.

When a Flatten (or Join) method already exists, you can always implement SelectMany like this:

public static Functor<TResult> SelectMany<T, TResult>( this Functor<T> source, Func<T, Functor<TResult>> selector) { Functor<Functor<TResult>> nest = source.Select(selector); return nest.Flatten(); }

I've split the implementation over two lines of code to make it clearer what's going on. That's also the reason I've used an explicit type declaration rather than var.

The Select method exists because the type is already a functor. When you call Select with selector you get a Functor<Functor<TResult>> because selector itself returns a Functor<TResult>. That's a nested functor, which you can Flatten.

You can use SelectMany like this:

Functor<TimeSpan> source = Functor.Return(TimeSpan.FromMinutes(32)); Functor<double> dest = source.SelectMany(x => Functor.Return(x.TotalSeconds + 192));

The lambda expression returns a Functor<double>. Had you called Select with this lambda expression, the result would have been a nested functor. SelectMany, however, flattens while it maps (it flatMaps). (This example is rather inane, since it would be simpler to use Select and omit wrapping the output of the lambda in the functor. The later articles in this series will show some more compelling examples.)

If you already have Flatten (or Join) you can always implement SelectMany like this. Usually, however, languages use monadic bind (e.g. SelectMany) as the foundation. Both C#, Haskell, and F# do that. In that case, it's also possible to go the other way and implement Flatten with SelectMany. You'll see examples of that in the subsequent articles.

Syntactic sugar #

Several languages understand monads well enough to provide some degree of syntactic sugar. While you can always use SelectMany in C# by simply calling the method, there are situations where that becomes awkward. The same is true with bind functions in F# and >>= in Haskell.

In C# you can use query syntax:

Functor<DateTime> fdt = Functor.Return(new DateTime(2001, 8, 3)); Functor<TimeSpan> fts = Functor.Return(TimeSpan.FromDays(4)); Functor<DateTime> dest = from dt in fdt from ts in fts select dt - ts;

In order to support more than a single from expression, however, you must supply a special SelectMany overload, or the code doesn't compile:

public static Functor<TResult> SelectMany<T, U, TResult>( this Functor<T> source, Func<T, Functor<U>> k, Func<T, U, TResult> s) { return source.SelectMany(x => k(x).Select(y => s(x, y))); }

This is an odd quirk of C# that I've yet to encounter in other languages, and I consider it ceremony that I have to perform to satiate the C# compiler. It's not part of the concept of what a monad is. As far as I can tell, you can always implement it like shown here.

Haskell's syntactic sugar is called do notation and works for all monads:

example :: (Monad m, Num a) => m a -> m a -> m a example fdt fts = do dt <- fdt ts <- fts return $ dt - ts

In F#, computation expressions drive syntactic sugar, but still based (among other things) on the concept of a monad:

let example (fdt : Monad<DateTime>) (fts : Monad<TimeSpan>) = monad { let! dt = fdt let! ts = fts return dt - ts }

Here, we again have to pretend that a Monad<'a> type exists, with a companion monad computation expression.

Return #

The ability to flatten nested functors is the essence of what a monad is. There are, however, also some formal requirements. As is the case with functors, there are laws that a monad should obey. I'll dedicate a separate article for those, so we'll skip them for now.

Another requirement is that in addition to SelectMany/bind/flatMap/>>= a monad must also provide a method to 'elevate' a value to the monad. This function is usually called return. It should have a type like this:

public static Functor<T> Return<T>(T x)

You can see it in use in the above code snippet, and with the relevant parts repeated here:

Functor<DateTime> fdt = Functor.Return(new DateTime(2001, 8, 3)); Functor<TimeSpan> fts = Functor.Return(TimeSpan.FromDays(4));

This code example lifts the value DateTime(2001, 8, 3) to a Functor<DateTime> value, and the value TimeSpan.FromDays(4) to a Functor<TimeSpan> value.

Usually, in a language like C# a concrete monad type may either afford a constructor or factory method for this purpose. You'll see examples in the articles that give specific monad examples.

Conclusion #

A monad is a functor you can flatten. That's the simplest way I can put it.

In this article you learned what flattening means. You also learned that a monad must obey some laws and have a return function. The essence, however, is flattening. The laws just ensure that the flattening is well-behaved.

It may not yet be clear to you why some functors would need flattening in the first place, but subsequent articles will show examples.

The word monad has its own mystique in software development culture. If you've ever encountered some abstruse nomenclature about monads, then try to forget about it. The concept is really not hard to grasp, and it usually clicks once you've seen enough examples, and perhaps done some exercises.

Next: Kleisli composition.

Comments

Quoting image in words:

- Every Monad is an applicative functor

- Every applicative functor is a functor

- Every functor is a generic type

As your aricle about Monomorphic functors shows, not every functor is a generic type. However, it is true that every applicative functor is a generic (or polymorphic) functor.

True, the illustration is a simplification.

I guess you might know that already but the reason for the strange SelectMany ceremonial version is compiler performance. The complicated form is needed so that the overload resolution does not take exponential amount of time. See a 2013 article by Eric Lippert covering this.

Petr, thank you for writing. I remember reading about it before, but it's good to have a proper source.

If I understand the article correctly, the problem seems to originate from overload resolution. This would, at least, explain why neither F# nor Haskell needs that extra overload. I wonder if a language like Java would have a similar problem, but I don't think that Java has syntactic sugar for monads.

It still seems a little strange that the C# compiler requires the presence of that overload. Eric Lippert seems to strongly imply that it's always possible to derive that extra overload from any monad's fundamental functions (as is also my conjecture). If this is so, the C# compiler should be able to do that work by itself. Monads that wish to supply a more efficient implement (e.g. IEnumerable<T>) could still do so: The C# compiler could look for the overload and use it if it finds it, and still fall back to the default implementation if none is available.

But I suppose that since the query syntax feature only really exists to support IEnumerable<T> this degree of sophistication wasn't warranted.

Contravariant Dependency Injection

How to use a DI Container in FP... or not.

This article is an instalment in an article series about contravariant functors. It assumes that you've read the introduction, and a few of the examples.

People sometimes ask me how to do Dependency Injection (DI) in Functional Programming (FP). The short answer is that you don't, because dependencies make functions impure. If you're having the discussion on Twitter, you may get some drive-by comments to the effect that DI is just a contravariant functor. (I've seen such interactions take place, but currently can't find the specific examples.)

What might people mean by that?

In this article, I'll explain. You'll also see how this doesn't address the underlying problem that DI makes everything impure. Ultimately, then, it doesn't really matter. It's still not functional.

Partial application as Dependency Injection #

As example code, let's revisit the code from the article Partial application is dependency injection. You may have an impure action like this:

// int -> (DateTimeOffset -> Reservation list) -> (Reservation -> int) -> Reservation // -> int option let tryAccept capacity readReservations createReservation reservation = let reservedSeats = readReservations reservation.Date |> List.sumBy (fun x -> x.Quantity) if reservedSeats + reservation.Quantity <= capacity then createReservation { reservation with IsAccepted = true } |> Some else None

The readReservations and createReservation 'functions' will in reality be impure actions that read from and write to a database. Since they are impure, the entire tryAccept action is impure. That's the result from that article.

In this example the 'dependencies' are pushed to the left. This enables you to partially apply the 'function' with the dependencies:

let sut = tryAccept capacity readReservations createReservation

This code example is from a unit test, which is the reason that I've named the partially applied 'function' sut. It has the type Reservation -> int option. Keep in mind that while it looks like a regular function, in practice it'll be an impure action.

You can invoke sut like another other function:

let actual = sut reservation

When you do this, tryAccept will execute, interact with the partially applied dependencies, and return a result.

Partial application is a standard practice in FP, and in itself it's useful. Using it for Dependency Injection, however, doesn't magically make DI functional. DI remains impure, which also means that the entire sut composition is impure.

Moving dependencies to the right #

Pushing dependencies to the left enables partial application. Dependencies are typically more long-lived than run-time values like reservation. Thus, partially applying the dependencies as shown above makes sense. If all dependencies are thread-safe, you can let an action like sut, above, have Singleton lifetime. In other words, just keep a single, stateless Reservation -> int option action around for the lifetime of the application, and pass it Reservation values as they arrive.

Moving dependencies to the right seems, then, counterproductive.

// Reservation -> int -> (DateTimeOffset -> Reservation list) -> (Reservation -> int) // -> int option let tryAccept reservation capacity readReservations createReservation = let reservedSeats = readReservations reservation.Date |> List.sumBy (fun x -> x.Quantity) if reservedSeats + reservation.Quantity <= capacity then createReservation { reservation with IsAccepted = true } |> Some else None

If you move the dependencies to the right, you can no longer partially apply them, so what would be the point of that?

As given above, it seems that you'll have to supply all arguments in one go:

let actual = tryAccept reservation capacity readReservations createReservation

If, on the other hand, you turn all the dependencies into a tuple, some new options arise:

// Reservation -> (int * (DateTimeOffset -> Reservation list) * (Reservation -> int)) // -> int option let tryAccept reservation (capacity, readReservations, createReservation) = let reservedSeats = readReservations reservation.Date |> List.sumBy (fun x -> x.Quantity) if reservedSeats + reservation.Quantity <= capacity then createReservation { reservation with IsAccepted = true } |> Some else None

I decided to count capacity as a dependency, since this is most likely a value that originates from a configuration setting somewhere. Thus, it'd tend to have a singleton lifetime equivalent to readReservations and createReservation.

It still seems that you have to supply all the arguments, though:

let actual = tryAccept reservation (capacity, readReservations, createReservation)

What's the point, then?

Reader #

You can view all functions as values of the Reader profunctor. This, then, is also true for tryAccept. It's an (impure) function that takes a Reservation as input, and returns a Reader value as output. The 'Reader environment' is a tuple of dependencies, and the output is int option.

First, add an explicit Reader module:

// 'a -> ('a -> 'b) -> 'b let run env rdr = rdr env // ('a -> 'b) -> ('c -> 'd) -> ('b -> 'c) -> 'a -> 'd let dimap f g rdr = fun env -> g (rdr (f env)) // ('a -> 'b) -> ('c -> 'a) -> 'c -> 'b let map g = dimap id g // ('a -> 'b) -> ('b -> 'c) -> 'a -> 'c let contraMap f = dimap f id

The run function is mostly there to make the Reader concept more explicit. The dimap implementation is what makes Reader a profunctor. You can use dimap to implement map and contraMap. In the following, we're not going to need map, but I still included it for good measure.

You can partially apply the last version of tryAccept with a reservation:

// sut : (int * (DateTimeOffset -> Reservation list) * (Reservation -> int)) -> int option let sut = tryAccept reservation

You can view the sut value as a Reader. The 'environment' is the tuple of dependencies, and the output is still int option. Since we can view a tuple as a type-safe DI Container, we've now introduced a DI Container to the 'function'. We can say that the action is a Reader of the DI Container.

You can run the action by supplying a container:

let container = (capacity, readReservations, createReservation) let sut = tryAccept reservation let actual = sut |> Reader.run container

This container, however, is specialised to fit tryAccept. What if other actions require other dependencies?

Contravariant mapping #

In a larger system, you're likely to have more than the three dependencies shown above. Other actions require other dependencies, and may not need capacity, readReservations, or createReservation.

To make the point, perhaps the container looks like this:

let container = (capacity, readReservations, createReservation, someOtherService, yetAnotherService)

If you try to run a partially applied Reader like sut with this action, your code doesn't compile. sut needs a triple of a specific type, but container is now a pentuple. Should you change tryAccept so that it takes the bigger pentuple as a parameter?

That would compile, but would be a leaky abstraction. It would also violate the Dependency Inversion Principle and the Interface Segregation Principle because tryAccept would require callers to supply a bigger argument than is needed.

Instead, you can contraMap the pentuple:

let container = (capacity, readReservations, createReservation, someOtherService, yetAnotherService) let sut = tryAccept reservation |> Reader.contraMap (fun (c, rr, cr, _, _) -> (c, rr, cr)) let actual = sut |> Reader.run container

This turns sut into a Reader of the pentuple, instead of a Reader of the triple. You can now run it with the pentuple container. tryAccept remains a Reader of the triple.

Conclusion #

Keep in mind that tryAccept remains impure. While this may look impressively functional, it really isn't. It doesn't address the original problem that composition of impure actions creates larger impure actions that don't fit in your head.

Why, then, make things more complicated than they have to be?

In FP, I'd look long and hard for a better alternative - one that obeys the functional architecture law. If, for various reasons, I was unable to produce a purely functional design, I'd prefer composing impure actions with partial application. It's simpler.

This article isn't an endorsement of using contravariant DI in FP. It's only meant as an explanation of a phrase like DI is just a contravariant functor.

Next: Profunctors.

Type-safe DI Containers are redundant

Just use Pure DI.

This article is part of a series called Type-safe DI composition. In the previous article, you saw how tuples are adequate DI Containers. In case it's not clear from the article that introduces the series, there's really no point to any of this. My motivation for writing the article is that readers sometimes ask me about topics such as DI Containers versus type safety, or DI Containers in functional programming. The goal of these articles is to make it painfully clear why I find such ideas moot.

Tuples as DI Containers #

In the previous article you saw how tuples make adequate DI Containers. Consider, however, this constructor:

private readonly (IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo) container; public CompositionRoot( (IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo) container) { this.container = container; }

One question, though: Why pass a tuple of three objects as a single argument? Wouldn't it be more idiomatic to use a normal argument list?

Argument list #

Just remove the brackets around the tuple argument and dissolve the elements into separate class fields:

private readonly IRestaurantDatabase rdb; private readonly IClock clock; private readonly IReservationsRepository repo; public CompositionRoot(IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo) { this.rdb = rdb; this.clock = clock; this.repo = repo; }

You no longer need to resolve services from a DI Container. You can simply use the class fields directly:

public object Create(ControllerContext context) { if (context is null) throw new ArgumentNullException(nameof(context)); var t = context.ActionDescriptor.ControllerTypeInfo; if (t == typeof(CalendarController)) return new CalendarController(rdb, repo); else if (t == typeof(HomeController)) return new HomeController(rdb); else if (t == typeof(ReservationsController)) return new ReservationsController(clock, rdb, repo); else if (t == typeof(RestaurantsController)) return new RestaurantsController(rdb); else if (t == typeof(ScheduleController)) return new ScheduleController( rdb, repo, AccessControlList.FromUser(context.HttpContext.User)); else throw new ArgumentException( $"Unexpected controller type: {t}.", nameof(context)); }

Instead of container.rdb, container.repo, and container.clock, you can simply access rdb, repo, and clock.

The registration phase (such as it now remains) also becomes simpler:

var rdb = CompositionRoot.CreateRestaurantDatabase(Configuration); var po = CompositionRoot.CreatePostOffice(Configuration, rdb); var clock = CompositionRoot.CreateClock(); var repo = CompositionRoot.CreateRepository(Configuration, po); var compositionRoot = new CompositionRoot(rdb, clock, repo); services.AddSingleton<IControllerActivator>(compositionRoot);

There's no longer any remnant of a DI Container. This is Pure DI.

Conclusion #

Type-safe DI Containers are redundant. The simplest way to compose dependencies in a type-safe fashion is to write normal code, i.e. Pure DI.

What about 'normal' DI Containers, though? These aren't type-safe, but may have their uses. They represent a trade-off. For sufficiently complicated code bases, they may offer some benefits.

I typically don't consider the trade-off worthwhile. The keywords in the above paragraph are 'sufficiently complicated'. Instead of writing complicated code, I favour simplicity: code that fits in my head.

A type-safe DI Container as a tuple

Tuples are DI Containers. With example code in C#.

This article is part of a series called Type-safe DI composition. In the previous article, you saw a C# example of a type-safe DI Container as a functor. In case it's not clear from the article that introduces the series, there's really no point to any of this. My motivation for writing the article is that readers sometimes ask me about topics such as DI Containers versus type safety, or DI Containers in functional programming. The goal of these articles is to make it painfully clear why I find such ideas moot.

The previous article demonstrated how you can view a type-safe DI Container as a functor. The payload is a registry of services. You could introduce a specialised Parameter Object with each service given a name, but really, since a DI container is little more than a dictionary keyed by type, if you give each element of a tuple a unique type, then a tuple of services is all you need.

Identity #

In the previous article, I asked the question: Does Container<T> and its Select method form a lawful functor? In other words, does it obey the functor laws?

In the article, I just left the question dangling, so you might wonder if there's something fishy going on. In a sense, there is.

The Container functor is simply the Identity functor in disguise. Just imagine renaming Container<T> to Identity<T> and this should be clear. Since the Identity functor is lawful, the Container functor is also lawful.

On the other hand, the Identity functor is redundant. It doesn't offer any additional capabilities over its payload. Anything you can do with the Identity functor's payload, you can do directly to the payload. An even stronger statement is that the Identity functor is isomorphic to its underlying payload. This is true also for Container<T>, which is isomorphic to T. This is clear from the definition of the class:

public sealed class Container<T> { public Container(T item) { Item = item; } public T Item { get; } // More members to follow...

You can convert any T to a Container<T> by calling the constructor, and you can convert any Container<T> to a T value by reading the Item property.

Then why have the Container at all?

Tuple as a DI Container #

In the previous article, we left off with a container defined as Container<(IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo)>. It follows that the type of the Container's Item property is (IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo) - a tuple with three elements.

This code example makes use of C# tuple types, which enables you to give each element in the tuple a name. For the tuple in question, the first element is called rdb, the next element clock, and the third repo. This makes the code that accesses these elements more readable, but is structurally unimportant. If you work in a language without this tuple feature, it doesn't change the conclusion in this article series.

Instead of relying on Container<(IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo)>, the CompositionRoot class can rely on the unwrapped tuple:

private readonly (IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo) container; public CompositionRoot( (IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo) container) { this.container = container; }

Its Create method directly accesses the tuple elements to create Controllers:

public object Create(ControllerContext context) { if (context is null) throw new ArgumentNullException(nameof(context)); var t = context.ActionDescriptor.ControllerTypeInfo; if (t == typeof(CalendarController)) return new CalendarController( container.rdb, container.repo); else if (t == typeof(HomeController)) return new HomeController(container.rdb); else if (t == typeof(ReservationsController)) return new ReservationsController( container.clock, container.rdb, container.repo); else if (t == typeof(RestaurantsController)) return new RestaurantsController(container.rdb); else if (t == typeof(ScheduleController)) return new ScheduleController( container.rdb, container.repo, AccessControlList.FromUser(context.HttpContext.User)); else throw new ArgumentException( $"Unexpected controller type: {t}.", nameof(context)); }

Notice that instead of container.Item.rdb, container.Item.repo, and container.Item.clock, there's no redundant Item property. The Create method can directly access container.rdb, container.repo, and container.clock.

Registration #

Not only did it become easier to resolve services from the Container (i.e. the tuple) - registering services is also simpler:

var rdb = CompositionRoot.CreateRestaurantDatabase(Configuration); var po = CompositionRoot.CreatePostOffice(Configuration, rdb); var clock = CompositionRoot.CreateClock(); var repo = CompositionRoot.CreateRepository(Configuration, po); var container = (rdb, clock, repo); var compositionRoot = new CompositionRoot(container); services.AddSingleton<IControllerActivator>(compositionRoot);

Just create the services and put them in a tuple of the desired shape. No query syntax or other compiler tricks are required. Just write normal code.

Conclusion #

The Container<T> class is just the Identity functor in disguise. It's redundant, so you can delete it and instead use a tuple, a record type, or a Parameter Object as your service registry.

Are we done now, or can we simplify things even further? Read on.

Can one flatten a statically typed list?

What would be the type of a heterogeneously nested list?

Once again, I (inadvertently) managed to start a discussion on Twitter about static versus dynamic typing. I've touched on the topic before, but my agenda is not to fan any flames - quite the contrary. I'm trying (nay, struggling) to understand the other side of the debate.

This post isn't really about that, though, but in one of the threads spawned by my original tweet, Tom Johnson wrote:

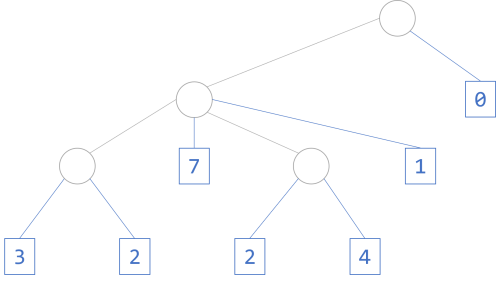

"One of my favourite examples:

"What's the type of [[[3, 2], 7, [2, 4], 1], 0]?

"What's the type of flatten, which takes that value and yields [3, 2, 7, 2, 4, 1, 0]?"

The type, it turns out, is straightforward.

I'd like to be explicit about my agenda when writing this article. I'm not doing this to prove Tom Johnson wrong. In fact, I get the sense that he already knows the answer. Rather, I found it an interesting little exercise.

Syntax #

First, we need to get one thing out of the way. In many languages, [[[3, 2], 7, [2, 4], 1], 0] isn't valid syntax. Even as a 'normal' list, syntax like [3, 2, 7, 2, 4, 1, 0] wouldn't be valid syntax in some languages.

In Haskell [3, 2, 7, 2, 4, 1, 0] would be valid, but it wouldn't be valid syntax in C#. And while it'd be valid syntax in F#, it wouldn't mean what it means in Haskell. The F# equivalent would be [3; 2; 7; 2; 4; 1; 0].

The point is that that I don't consider myself bound by the specific syntax [[[3, 2], 7, [2, 4], 1], 0]. I'm going to answer the question using Haskell, and in Haskell, that code doesn't parse.

Instead, I'll use a data structure that represents the same data, but in a more verbose manner.

Tree #

In one of the Twitter threads, Alexis King already gave the answer:

"`flatten` is perfectly possible in Haskell, you just have to write a rose tree datatype, not use `[a]`."

The standard Tree a in Data.Tree, however, doesn't seem to quite fit. Instead, we can use a rose tree. As Meertens wrote:

"We consider trees whose internal nodes may fork into an arbitrary (natural) number of sub-trees. (If such a node has zero descendants, we still consider it internal.) Each external node carries a data item. No further information is stored in the tree; in particular, internal nodes are unlabelled."

With unlabelled nodes, we might draw Tom Johnson's 'list' like this:

I'll use the Tree data type introduced in the article Picture archivist in Haskell:

data Tree a b = Node a [Tree a b] | Leaf b deriving (Eq, Show, Read)

If you would, you could define a type alias that has unlabelled internal nodes:

type MeertensTree = Tree ()

I'm not really going to bother doing that, though. I'm just going to create a Tree () b value.

Here we go:

> l = Node () [Node () [Node () [Leaf 3, Leaf 2], Leaf 7, Node () [Leaf 2, Leaf 4], Leaf 1], Leaf 0] > :t l l :: Num b => Tree () b

You could probably come up with a more succinct syntax to create trees like this in code, but I'm assuming that in 'reality', if you have the need to flatten nested lists, it'll be a values that arrive at the boundary of the application...

Flattening #

Can you flatten the 'list' l?

Yes, no problem. The Tree a b data type is an instance of various type classes, among these Foldable:

instance Foldable (Tree a) where foldMap = bifoldMap mempty

You can see the full definition of bifoldMap and its dependencies in the article that introduces the type.

One of the functions available via Foldable is toList, which is just what you need:

> toList l [3,2,7,2,4,1,0]

This is the result required by Tom Johnson.

Summary #

In short, the answer to the question: "What's the type of [[[3, 2], 7, [2, 4], 1], 0]?" is: Num b => Tree () b, an example of which might be Tree () Integer. You could also call it MeertensTree Integer.

Likewise, the type of the requested flatten function is toList :: Foldable t => t a -> [a].

Comments

He appears to be using JavaScript and the solution is trivial in TypeScript, so he must not have looked too hard before claiming that statically typed languages cannot handle this scenario. He also kept moving the goalposts (It needs to use list syntax! It needs to support heterogeneous lists!), but even the most naïve attempt in TypeScript handles those as well.

type Nested<T> = (Nested<T> | T)[];

const flatten = <T>(val: Nested<T>): T[] =>

val.reduce(

(r: T[], v) => [...r, ...(Array.isArray(v) ? flatten(v) : [v])],

[]

);

const nested = [[[3, 2], 7, [2, 4], 1], 0];

const result = flatten(nested);

console.log(result); // [3, 2, 7, 2, 4, 1, 0]

const silly: Nested<number | string | { name: string }> = [

[[3, 2], 1],

"cheese",

{ name: "Fred" },

];

const sillyResult = flatten(silly);

console.log(sillyResult); // [3, 2, 1, "cheese", {"name": "Fred"}]

TypeScript types can also maintain the order of the types of elements in the flattened list. How to flatten a tuple type in TypeScript? gives a solution capable of handling that, though it is certainly more complex than the version above.

A type-safe DI Container as a functor

Decoupling the registry of services from the container. An ongoing C# example.

This article is part of a series called Type-safe DI composition. In the previous article, you saw a C# example of a type-safe, nested DI Container. In case it's not clear from the article that introduces the series, there's really no point to any of this. My motivation for writing the article is that readers sometimes ask me about topics such as DI Containers versus type safety, or DI Containers in functional programming. The goal of these articles is to make it painfully clear why I find such ideas moot.

In the previous article, you saw how you can use container nesting to implement a type-safe container of arbitrary arity. You only need these generic containers:

public sealed class Container public sealed class Container<T1> public sealed class Container<T1, T2>

You can achieve arities higher than two by nesting containers. For example, you can register three services by nesting one container inside another: Container<T1, Container<T2, T3>>. Higher arities require more nesting. See the previous article for an example with five services.

Decoupling the container from the registry #

How did I get the idea of nesting the containers?

It started as I was examining the constructor of the CompositionRoot class:

private readonly Container<IConfiguration, Container<Container<Container<IRestaurantDatabase, IPostOffice>, IClock>, IReservationsRepository>> container; public CompositionRoot(Container<IConfiguration, Container<Container<Container<IRestaurantDatabase, IPostOffice>, IClock>, IReservationsRepository>> container) { this.container = container; }

Those are, indeed, wide lines of code. No 80x24 box here. If you ignore all the container nesting, you can tell that the container contains five services:

IConfigurationIRestaurantDatabaseIPostOfficeIClockIReservationsRepository

CompositionRoot:

IRestaurantDatabaseIClockIReservationsRepository

IConfiguration and IPostOffice services are only present because they were required to create some of the other services. CompositionRoot never directly uses these two services. In other words, there's a leaky abstraction somewhere.

It'd be more in spirit with the Dependency Inversion Principle (DIP) and the Interface Segregation Principle (ISP) if the constructor required only the services it needs.

On the other hand, it's easiest to create the container from its constituent elements if you can pass already-registered services along when registering other services:

var container = Container.Empty .Register(Configuration) .Register(CompositionRoot.CreateRestaurantDatabase) .Register(CompositionRoot.CreatePostOffice) .Register(CompositionRoot.CreateClock()) .Register((conf, cont) => CompositionRoot.CreateRepository(conf, cont.Item1.Item2)); var compositionRoot = new CompositionRoot(container); services.AddSingleton<IControllerActivator>(compositionRoot);

I realised that the container needed a way to remove services from the public API. And it hit me that it'd be easier if I separated the container from its registry of services. If I did that, I could define a single generic Container<T> and even make it a functor. The payload could be a custom Parameter Object, but could also just be a tuple of services.

From Thinking with Types I knew that you can reduce all algebraic data types to canonical representations of Eithers and tuples.

A triple, for example, can be modelled as a Tuple<T1, Tuple<T2, T3>> or Tuple<Tuple<T1, T2>, T3>, a quadruple as e.g. Tuple<Tuple<T1, T2>, Tuple<T3, T4>>, etcetera.

Connecting those dots, I also realised that as an intermediary step I could nest the containers. It's really unnecessary, though, so let's proceed to simplify the code.

A Container functor #

Instead of a Container<T1, T2> in addition to a Container<T1>, you only need the single-arity container:

public sealed class Container<T> { public Container(T item) { Item = item; } public T Item { get; } // More members to follow...

Apart from overriding Equals and GetHashCode, the only member is Select:

public Container<TResult> Select<TResult>(Func<T, TResult> selector) { if (selector is null) throw new ArgumentNullException(nameof(selector)); return new Container<TResult>(selector(Item)); }

This is a straightforward Select implementation, making Container<T> a functor.

Usage #

You can use the Select method to incrementally build the desired container:

var container = Container.Empty.Register(Configuration) .Select(conf => (conf, rdb: CompositionRoot.CreateRestaurantDatabase(conf))) .Select(t => (t.conf, t.rdb, po: CompositionRoot.CreatePostOffice(t.conf, t.rdb))) .Select(t => (t.conf, t.rdb, t.po, clock: CompositionRoot.CreateClock())) .Select(t => (t.conf, t.rdb, t.po, t.clock, repo: CompositionRoot.CreateRepository(t.conf, t.po))); var compositionRoot = new CompositionRoot(container); services.AddSingleton<IControllerActivator>(compositionRoot);

The syntax is awkward, since you need to pass a tuple along to the next step, in which you need to access each of the tuple's elements, only to create a bigger tuple, and so on.

While it does get the job done, we can do better.

Query syntax #

Instead of using method-call syntax to chain all these service registrations together, you can take advantage of C#'s query syntax which lights up for any functor:

var container = from conf in Container.Empty.Register(Configuration) let rdb = CompositionRoot.CreateRestaurantDatabase(conf) let po = CompositionRoot.CreatePostOffice(conf, rdb) let clock = CompositionRoot.CreateClock() let repo = CompositionRoot.CreateRepository(conf, po) select (conf, rdb, po, clock, repo); var compositionRoot = new CompositionRoot(container); services.AddSingleton<IControllerActivator>(compositionRoot);

In both cases, the container object has the type Container<(IConfiguration conf, IRestaurantDatabase rdb, IPostOffice po, IClock clock, IReservationsRepository repo)>, which is still quite the mouthful. Now, however, we can do something about it.

DIP and ISP applied #

The CompositionRoot class only needs three services, so its constructor should ask for those, and no more:

private readonly Container<(IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo)> container; public CompositionRoot( Container<(IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo)> container) { this.container = container; }

With that injected container it can implement Create like this:

public object Create(ControllerContext context) { if (context is null) throw new ArgumentNullException(nameof(context)); var t = context.ActionDescriptor.ControllerTypeInfo; if (t == typeof(CalendarController)) return new CalendarController( container.Item.rdb, container.Item.repo); else if (t == typeof(HomeController)) return new HomeController(container.Item.rdb); else if (t == typeof(ReservationsController)) return new ReservationsController( container.Item.clock, container.Item.rdb, container.Item.repo); else if (t == typeof(RestaurantsController)) return new RestaurantsController(container.Item.rdb); else if (t == typeof(ScheduleController)) return new ScheduleController( container.Item.rdb, container.Item.repo, AccessControlList.FromUser(context.HttpContext.User)); else throw new ArgumentException( $"Unexpected controller type: {t}.", nameof(context)); }

That's more readable, although the intermediary Item object doesn't seem to do much work...

You can create a container with the desired type like this:

Container<(IRestaurantDatabase rdb, IClock clock, IReservationsRepository repo)> container = from conf in Container.Empty.Register(Configuration) let rdb = CompositionRoot.CreateRestaurantDatabase(conf) let po = CompositionRoot.CreatePostOffice(conf, rdb) let clock = CompositionRoot.CreateClock() let repo = CompositionRoot.CreateRepository(conf, po) select (rdb, clock, repo); var compositionRoot = new CompositionRoot(container); services.AddSingleton<IControllerActivator>(compositionRoot);

Notice that the only difference compared to earlier is that the select expression only involves the three required services: rdb, clock, and repo.

Conclusion #

This seems cleaner than before, but perhaps you're left with a nagging doubt: Why is the Container<T> class even required? What value does its Item property provide?

And perhaps another question is also in order: Does the Select method shown even define a lawful functor?

Read on.

Comments

I am confused by this paragraph. A monoid requries a binary operation (that is also closed), and Kleisli composition is (closed) binary operation.

As you said in the introductory article to this monad series, there are three pieces to a monad: a generic type

M<>, a function with typea -> M<a>(typically called "return"), and a function with signature(a -> M<b>) -> M<a> -> M<b>(sometimes called "bind"). Then(M<>, return, bind)is a monad if those three items satisfy the monad laws.I think the following is true. Let

M<>be a generic type, and letSbe the set of all functions with signaturea -> M<a>. Then(M<>, return, bind)is a monad if only only if(S, >=>, return)is a monoid.Tyson, thank you for writing. To address the elephant in the room first: A monad is a monoid (in the category of endofunctors), but as far as I can tell, you have to look more abstractly at it than allowed by the static type systems we normally employ.

Bartosz Milewski describes a nested functor as the tensor product of two monadic types:

M ⊗ M. The binary operation in question is thenM ⊗ M -> M, or join/flatten (from here on just join). In this very abstract view, monads are monoids. It does require an abstract view of functors as objects in a category. At that level of abstraction, so many details are lost that join looks like a binary operation.So, to be clear, if you want to look at a monad as a monoid, it's not the monadic bind function that's the binary operation; it's join. (That's another reason I prefer explaining monads in terms of join rather than leading with bind.)

When you zoom in on the details, however, join doesn't look like a regular binary operation:

m (m a) -> m a. A binary operation, on the other hand, should look like this:m a -> m a -> m a.The Kleisli composition operator unfortunately doesn't fix this. Let's look at the C# method signature, since it may offer a way out:

Notice that the types don't match:

action1has a type different fromaction2, which is again different from the return type. Thus, it's not a binary operation, because the elements don't belong to the same set.As Bartosz Milewski wrote, though, if we squint a little, we may make it work. C# allows us to 'squint' because of its object hierarchy:

I'm not sure how helpful that is, though, so I didn't want to get into these weeds in the above article itself. It's fine here in the comments, because I expect only very dedicated readers to consult them. This, thus, serves more like a footnote or appendix.

Thanks very much for you reply. It helped me to see where I was wrong.

(S, >=>, return)is not a monoid because>=>is not total overS, which is another condition the binary operation must satisfy, but I overlooked that. Furthremore, I meant to defineSas the set of all functions with signaturea -> M<b>. The same problem still exists though. The second code block in your comment has this same problem. There is not a natural choice for the implementation unless the types of the objects satisfy the constraints implied by the use of type parameters in the first code block in your comment. I will keep thinking about this.In the meantime, keep up the great work. I am loving your monad content.