ploeh blog danish software design

Favour flat code file folders

How code files are organised is hardly related to sustainability of code bases.

My recent article Folders versus namespaces prompted some reactions. A few kind people shared how they organise code bases, both on Twitter and in the comments. Most reactions, however, carry the (subliminal?) subtext that organising code in file folders is how things are done.

I'd like to challenge that notion.

As is usually my habit, I mostly do this to make you think. I don't insist that I'm universally right in all contexts, and that everyone else is wrong. I only write to suggest that alternatives exist.

The previous article wasn't a recommendation; it was only an exploration of an idea. As I describe in Code That Fits in Your Head, I recommend flat folder structures. Put most code files in the same directory.

Finding files #

People usually dislike that advice. How can I find anything?!

Let's start with a counter-question: How can you find anything if you have a deep file hierarchy? If you've organised code files in subfolders of subfolders of folders, you typically start with a collapsed view of the tree.

Those of my readers who know a little about search algorithms will point out that a search tree is an efficient data structure for locating content. The assumption, however, is that you already know (or can easily construct) the path you should follow.

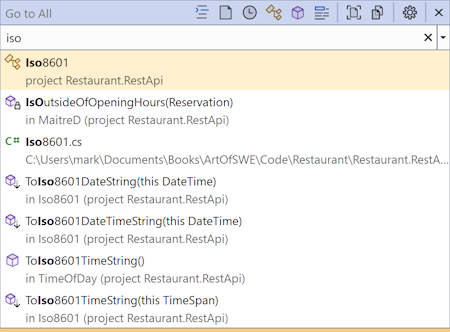

In a view like the above, most files are hidden in one of the collapsed folders. If you want to find, say, the Iso8601.cs file, where do you look for it? Which path through the tree do you take?

Unfair!, you protest. You don't know what the Iso8601.cs file does. Let me enlighten you: That file contains functions that render dates and times in ISO 8601 formats. These are used to transmit dates and times between systems in a platform-neutral way.

So where do you look for it?

It's probably not in the Controllers or DataAccess directories. Could it be in the Dtos folder? Rest? Models?

Unless your first guess is correct, you'll have to open more than one folder before you find what you're looking for. If each of these folders has subfolders of its own, that only exacerbates the problem.

If you're curious, some programmer (me) decided to put the Iso8601.cs file in the Dtos directory, and perhaps you already guessed that. That's not the point, though. The point is this: 'Organising' code files in folders is only efficient if you can unerringly predict the correct path through the tree. You'll have to get it right the first time, every time. If you don't, it's not the most efficient way.

Most modern code editors come with features that help you locate files. In Visual Studio, for example, you just hit Ctrl+, and type a bit of the file name, such as iso.

Then hit Enter to open the file. In Visual Studio Code, the corresponding keyboard shortcut is Ctrl+p, and I'd be highly surprised if other editors didn't have a similar feature.

To conclude, so far: Organising files in a folder hierarchy is at best on par with your editor's built-in search feature, but is likely to be less productive.
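To make the comparison concrete, here's a minimal sketch of what a name-based search does, assuming nothing more than a list of relative paths. The `FileFinder` class and its `Find` method are hypothetical names invented for this illustration; they aren't part of any editor's API. The point is only that the lookup inspects file names and never needs to know which folder a file lives in.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical helper, invented for illustration only.
public static class FileFinder
{
    // Returns every path whose file name contains the fragment,
    // ignoring case and ignoring the folder the file sits in.
    public static IReadOnlyList<string> Find(
        IEnumerable<string> paths,
        string fragment)
    {
        return paths
            .Where(p => Path.GetFileName(p)
                .IndexOf(fragment, StringComparison.OrdinalIgnoreCase) >= 0)
            .ToList();
    }
}
```

Given the paths `Controllers/ReservationsController.cs`, `Dtos/Iso8601.cs`, and `Models/Reservation.cs`, searching for `iso` finds `Dtos/Iso8601.cs` without anyone having to guess that it lives in `Dtos`.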

Navigating a code base #

What if you don't quite know the name of the file you're looking for? In such cases, the file system is even less helpful.

I've seen people work like this:

- Look at some code. Identify another code item they'd like to view. (Examples may include: Looking at a unit test and wanting to see the SUT, or looking at a class and wanting to see the base class.)

- Move focus to the editor's folder view (in Visual Studio called the Solution Explorer).

- Scroll to find the file in question.

- Double-click said file.

Regardless of how the files are organised, you could, instead, go to definition (F12 with my Visual Studio keyboard layout) in a single action. Granted, how well this works varies with editor and language. Still, even when editor support is less optimal (e.g. a code base with a mix of F# and C#, or a Haskell code base), I can often find things faster with a search (Ctrl+Shift+f) than via the file system.

A modern editor has efficient tools that can help you find what you're looking for. Looking through the file system is often the least efficient way to find the code you're looking for.

Large code bases #

Do I recommend that you dump thousands of code files in a single directory, then?

Hardly, but a question like that presupposes that code bases have thousands of code files. Or more, even. And I've seen such code bases.

Likewise, it's a common complaint that Visual Studio is slow when opening solutions with hundreds of projects. And the day Microsoft fixes that problem, people are going to complain that it's slow when opening a solution with thousands of projects.

Again, there's an underlying assumption: That a 'real' code base must be so big.

Consider alternatives: Could you decompose the code base into multiple smaller code bases? Could you extract subsystems of the code base and package them as reusable packages? Yes, you can do all those things.

Usually, I'd pull code bases apart long before they hit a thousand files. Extract modules, libraries, utilities, etc. and put them in separate code bases. Use existing package managers to distribute these smaller pieces of code. Keep the code bases small, and you don't need to organise the files.

Maintenance #

But, if all files are mixed together in a single folder, how do we keep the code maintainable?

Once more, implicit (but false) assumptions underlie such questions. The assumption is that 'neatly' organising files in hierarchies somehow makes the code easier to maintain. Really, though, it's more akin to a teenager who 'cleans' his room by sweeping everything off the floor only to throw it into his cupboard. It does enable hoovering the floor, but it doesn't make it easier to find anything. The benefit is mostly superficial.

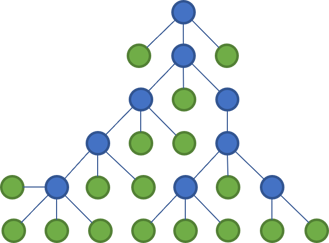

Still, consider a tree.

This may not be the way you're used to seeing files and folders rendered, but this diagram emphasises the tree structure and makes what happens next starker.

The way that most languages work, putting code files in folders makes little difference to the compiler. If the classes in my Controllers folder need some classes from the Dtos folder, you just use them. You may need to import the corresponding namespace, but modern editors make that a breeze.

In the above tree, the two files that now communicate are coloured orange. Notice that they span across two main branches of the tree.

Thus, even though the files are organised in a tree, it has no impact on the maintainability of the code base. Code can reference other code in other parts of the tree. You can easily create cycles in a language like C#, and organising files in trees makes no difference.
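As a small sketch of how easily that happens, consider two classes that live in different folders and namespaces, yet reference each other. The `Example` namespaces, the file paths in the comments, and the members are all hypothetical, made up for this illustration.

```csharp
// File: Dtos/ReservationDto.cs (hypothetical path)
namespace Example.Dtos
{
    public sealed class ReservationDto
    {
        public int Quantity { get; set; }

        // A DTO that knows about a Controller: questionable design,
        // but the compiler accepts it without complaint.
        public string DescribeOrigin() =>
            nameof(Example.Controllers.ReservationsController);
    }
}

// File: Controllers/ReservationsController.cs (hypothetical path)
namespace Example.Controllers
{
    public sealed class ReservationsController
    {
        // The Controller, in turn, depends on the DTO: a cycle.
        public Example.Dtos.ReservationDto Get() =>
            new Example.Dtos.ReservationDto { Quantity = 2 };
    }
}
```

Both types compile as part of the same library, and nothing about the folder layout stands in the way of the cycle.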

Most languages, however, enforce that library dependencies form a directed acyclic graph (i.e. if the data access library references the domain model, the domain model can't reference the data access library). At the library level, the C# compiler (like the compilers of most other languages) enforces what Robert C. Martin calls the Acyclic Dependencies Principle. Preventing cycles prevents spaghetti code, which is key to a maintainable code base.
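To illustrate what 'acyclic' means for a set of libraries, here's a minimal sketch of a cycle check over a dependency map. This is not how any compiler implements the rule; `DependencyGraph` and `HasCycle` are hypothetical names, and the depth-first search is just one straightforward way to detect a cycle.

```csharp
using System.Collections.Generic;

// Hypothetical helper, invented for illustration only.
public static class DependencyGraph
{
    // Each key is a library; its value lists the libraries it references.
    // Returns true if following references can lead back to where you started.
    public static bool HasCycle(
        IReadOnlyDictionary<string, string[]> dependencies)
    {
        // 1 = visit in progress, 2 = fully explored; absent = not yet visited.
        var state = new Dictionary<string, int>();

        bool Visit(string node)
        {
            if (state.TryGetValue(node, out var s))
                return s == 1; // reaching an in-progress node means a cycle

            state[node] = 1;
            if (dependencies.TryGetValue(node, out var targets))
                foreach (var target in targets)
                    if (Visit(target))
                        return true;
            state[node] = 2;
            return false;
        }

        foreach (var node in dependencies.Keys)
            if (Visit(node))
                return true;
        return false;
    }
}
```

A map where the data access library references the domain model, and nothing points back, passes the check. Add a reference from the domain model to the data access library, and `HasCycle` returns `true`.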

(Ironically, one of the more controversial features of F# is actually one of its greatest strengths: It doesn't allow cycles.)

Tidiness #

Even so, I do understand the lure of organising code files in an elaborate hierarchy. It looks so neat.

Previously, I've touched on the related topic of consistency, and while I'm a bit of a neat freak myself, I have to realise that tidiness seems to be largely unrelated to the sustainability of a code base.

As another example in this category, I've seen more than one code base with consistently beautiful documentation. Every method was adorned with formal XML documentation with every input parameter as well as output described.

Every new phase in a method was delineated with another neat comment, nicely adorned with a 'comment frame' and aligned with other comments.

It was glorious.

Alas, that documentation sat on top of 750-line methods with a cyclomatic complexity above 50. The methods were so long that developers had to introduce artificial variable scopes to avoid naming collisions.

The reason I was invited to look at that code in the first place was that the organisation had trouble with maintainability, and they asked me to help.

It was neat, yet unmaintainable.

This discussion about tidiness may seem like a digression, but I think it's important to make the implicit explicit. If I'm not much mistaken, preference for order is a major reason that so many developers want to organise code files into hierarchies.

Organising principles #

What other motivations for file hierarchies could there be? How about the directory structure as an organising principle?

The two most common organising principles are those that I experimented with in the previous article:

- By technical role (Controller, View Model, DTO, etc.)

- By feature

A technical leader might hope that, by presenting a directory structure to team members, it imparts an organising principle on the code to be.

It may even do so, but is that actually a benefit?

It might subtly discourage developers from introducing code that doesn't fit into the predefined structure. If you organise code by technical role, developers might put most code in Controllers, producing mostly procedural Transaction Scripts. If you organise by feature, this might encourage duplication because developers don't have a natural place to put general-purpose code.

You can put truly shared code in the root folder, the counter-argument might be. This is true, but:

- This seems to be implicitly discouraged by the folder structure. After all, the hierarchy is there for a reason, right? Thus, any file you place in the root seems to suggest a failure of organisation.

- On the other hand, if you flout that not-so-subtle hint and put many code files in the root, what advantage does the hierarchy furnish?

In Information Distribution Aspects of Design Methodology, David Parnas writes about documentation standards:

"standards tend to force system structure into a standard mold. A standard [..] makes some assumptions about the system. [...] If those assumptions are violated, the [...] organization fits poorly and the vocabulary must be stretched or misused."

(The above quote is on the surface about documentation standards, and I've deliberately butchered it a bit (clearly marked) to make it easier to spot the more general mechanism.)

In the same paper, Parnas describes the danger of making hard-to-change decisions too early. Applied to directory structure, the lesson is that you should postpone designing a file hierarchy until you know more about the problem. Start with a flat directory structure and add folders later, if at all.

Beyond files? #

My claim is that you don't need much in the way of directory hierarchy. It doesn't follow from this, however, that we may never leverage such options. Even though I left most of the example code for Code That Fits in Your Head in a single folder, I did add a specialised folder as an anti-corruption layer. Folders do have their uses.

"Why not take it to the extreme and place most code in a single file? If we navigate by "namespace view" and search, do we need all those files?"

Following a thought to its extreme end can shed light on a topic. Why not, indeed, put all code in a single file?

Curious thought, but possibly not new. I've never programmed in Smalltalk, but as I understand it, the language came with tooling that was both IDE and execution environment. Programmers would write source code in the editor, but although the code was persisted to disk, it may not have been as text files.

Even if I completely misunderstand how Smalltalk worked, it's not inconceivable that you could have a development environment based directly on a database. Not that I think that this sounds like a good idea, but it sounds technically possible.

Whether we do it one way or another seems mostly to be a question of tooling. What problems would you have if you wrote an entire C# (Java, Python, F#, or similar) code base as a single file? It becomes more difficult to look at two or more parts of the code base at the same time. Still, Visual Studio can actually give you split windows of the same file, but I don't know how it scales if you need multiple views over the same huge file.

Conclusion #

I recommend flat directory structures for code files. Put most code files in the root of a library or app. Of course, if your system is composed from multiple libraries (dependencies), each library has its own directory.

Subfolders aren't prohibited, only generally discouraged. Legitimate reasons to create subfolders may emerge as the code base evolves.

My misgivings about code file directory hierarchies mostly stem from the impact they have on developers' minds. This may manifest as magical thinking or cargo-cult programming: Erect elaborate directory structures to keep out the evil spirits of spaghetti code.

It doesn't work that way.

Visual Studio Code snippet to make URLs relative

Yes, it involves JSON and regular expressions.

Ever since I migrated the blog off dasBlog I've been writing the articles in raw HTML. The reason is mostly a historical artefact: Originally, I used Windows Live Writer, but Jekyll had no support for that, and since I'd been doing web development for more than a decade already, raw HTML seemed like a reliable and durable alternative. I increasingly find that relying on skill and knowledge is a far more durable strategy than relying on technology.

For a decade I used Sublime Text to write articles, but over the years, I found it degrading in quality. I only used Sublime Text to author blog posts, so when I recently repaved my machine, I decided to see if I could do without it.

Since I was already using Visual Studio Code for much of my programming, I decided to give it a go for articles as well. It always takes time when you decide to move off a tool you've used for a decade, but after some initial frustrations, I quickly found a new modus operandi.

One benefit of rocking the boat is that it prompts you to reassess the way you do things. Naturally, this happened here as well.

My quest for relative URLs #

I'd been using a few Sublime Text snippets to automate a few things, like the markup for the section heading you see above this paragraph. Figuring out how to replicate that snippet in Visual Studio Code wasn't too hard, but as I was already perusing the snippet documentation, I started investigating other options.

One little annoyance I'd lived with for years was adding links to other articles on the blog.

While I write an article, I run the site on my local machine. When linking to other articles, I sometimes use the existing page address off the public site, and sometimes I just copy the page address from localhost. In both cases, I want the URL to be relative so that I can navigate the site even if I'm offline. I've written enough articles on planes or while travelling without internet that this is an important use case for me.

For example, if I want to link to the article Adding NuGet packages when offline, I want the URL to be /2023/01/02/adding-nuget-packages-when-offline, but that's not the address I get when I copy from the browser's address bar. Here, I get the full URL, with either http://localhost:4000/ or https://blog.ploeh.dk/ as the origin.

For years, I've been manually stripping the origin away, as well as the trailing /. Looking through the Visual Studio Code snippet documentation, however, I eyed an opportunity to automate that workflow.

Snippet #

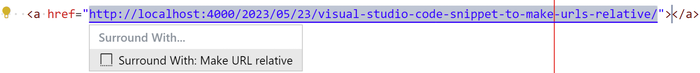

I wanted a piece of editor automation that could modify a URL after I'd pasted it into the article. After a few iterations, I've settled on a surround-with snippet that works pretty well. It looks like this:

    "Make URL relative": {
      "prefix": "urlrel",
      "body": [ "${TM_SELECTED_TEXT/^(?:http(?:s?):\\/\\/(?:[^\\/]+))(.+)\\//$1/}" ],
      "description": "Make URL relative."
    }

Don't you just love regular expressions? Write once, scrutinise forever.

I don't want to go over all the details, because I've already forgotten most of them, but essentially this expression matches the URL origin (http or https, then ://, then everything up to the next slash), captures the rest of the path, and drops the trailing slash /.
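If you'd like to poke at the expression outside the editor, here's a minimal sketch that applies the same pattern with .NET's `Regex` class. The `RelativeUrl` class and its `Make` method are hypothetical names for this illustration. The only difference from the snippet is that the doubled backslashes disappear, since those are JSON escaping rather than part of the regular expression.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper, invented for illustration only.
public static class RelativeUrl
{
    // Same pattern as in the snippet, without the JSON escaping:
    // a non-capturing group for the origin, a capturing group for
    // the path, and a final slash that is matched but not kept.
    private static readonly Regex OriginAndTrailingSlash =
        new Regex(@"^(?:http(?:s?):\/\/(?:[^\/]+))(.+)\/");

    // Replaces the whole match with the captured path only.
    public static string Make(string url) =>
        OriginAndTrailingSlash.Replace(url, "$1");
}
```

With this sketch, both `https://blog.ploeh.dk/2023/01/02/adding-nuget-packages-when-offline/` and the `http://localhost:4000/` variant of the same address come out as `/2023/01/02/adding-nuget-packages-when-offline`. Note that the pattern relies on the pasted URL ending with a slash.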

The thing that makes it useful, though, is the TM_SELECTED_TEXT variable that tells Visual Studio Code that this snippet works on selected text.

When I paste a URL into an a tag, at first nothing happens because no text is selected. I can then use Shift + Alt + → to expand the selection, at which point the Visual Studio Code lightbulb (Code Action) appears:

Running the snippet removes the URL's origin, as well as the trailing slash, and I can move on to write the link text.

Conclusion #

After I started using Visual Studio Code to write blog posts, I've created a few custom snippets to support my authoring workflow. Most of them are fairly mundane, but the make-URLs-relative snippet took me a few iterations to get right.

I'm not expecting many of my readers to have this particular need, but I hope that this outline showcases the capabilities of Visual Studio Code snippets, and perhaps inspires you to look into creating custom snippets for your own purposes.

Folders versus namespaces

What if you allow folder and namespace structure to diverge?

I'm currently writing C# code with some first-year computer-science students. Since most things are new to them, they sometimes do things in ways that are 'not the way we usually do things'. As an example, teachers have instructed them to use namespaces, but apparently no-one has told them that the file folder structure has to mirror the namespace structure.

The compiler doesn't care, but as long as I've been programming in C#, it's been idiomatic to do it that way. There's even a static code analysis rule about it.

The first couple of times they'd introduce a namespace without a corresponding directory, I'd point out that they are supposed to keep those things in sync. One day, however, it struck me: What happens if you flout that convention?

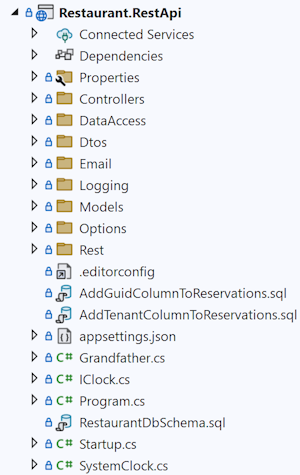

A common way to organise code files #

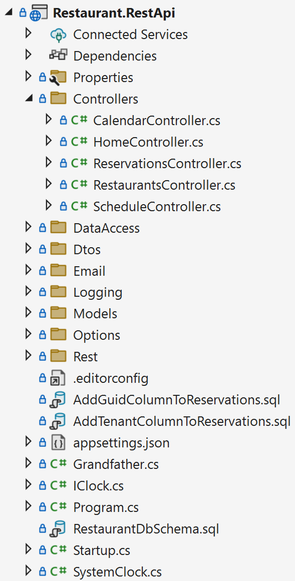

Code scaffolding tools and wizards will often nudge you to organise your code according to technical concerns: Controllers, models, views, etc. I'm sure you've encountered more than one code base organised like this:

You'll put all your Controller classes in the Controllers directory, and make sure that the namespace matches. Thus, in such a code base, the full name of the ReservationsController might be Ploeh.Samples.Restaurants.RestApi.Controllers.ReservationsController.

A common criticism is that this is the wrong way to organise the code.

The problem with trees #

The complaint that this is the wrong way to organise code implies that a correct way exists. I write about this in Code That Fits in Your Head:

Should you create a subdirectory for Controllers, another for Models, one for Filters, and so on? Or should you create a subdirectory for each feature?

Few people like my answer: Just put all files in one directory. Be wary of creating subdirectories just for the sake of 'organising' the code.

File systems are hierarchies; they are trees: a specialised kind of acyclic graph in which any two vertices are connected by exactly one path. Put another way, each vertex can have at most one parent. Even more bluntly: If you put a file in a hypothetical Controllers directory, you can't also put it in a Calendar directory.

But what if you could?

Namespaces disconnected from directory hierarchy #

The code that accompanies Code That Fits in Your Head is organised as advertised: 65 files in a single directory. (Tests go in separate directories, though, as they belong to separate libraries.)

If you decide to ignore the convention that namespace structure should mirror folder structure, however, you now have a second axis of variability.

As an experiment, I decided to try that idea with the book's code base. The above screen shot shows the stereotypical organisation according to technical responsibility, after I moved things around. To be clear: This isn't how the book's example code is organised, but an experiment I only now carried out.

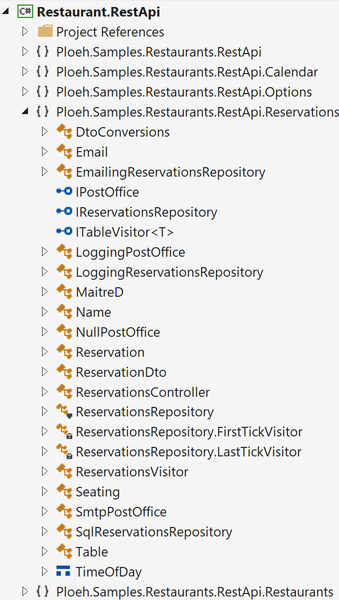

If you open the ReservationsController.cs file, however, I've now declared that it belongs to a namespace called Ploeh.Samples.Restaurants.RestApi.Reservations. Using Visual Studio's Class View, things look different from the Solution Explorer:

Here I've organised the namespaces according to feature, rather than technical role. The screen shot shows the Reservations feature opened, while other features remain closed.
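As a sketch of what that looks like inside a single file: the folder says 'technical role', while the namespace declaration says 'feature'. The file path in the comment follows the experiment described above, and the empty class body is a placeholder rather than the book's actual code.

```csharp
// File: Controllers/ReservationsController.cs
// The folder name and the namespace deliberately disagree.
namespace Ploeh.Samples.Restaurants.RestApi.Reservations
{
    public class ReservationsController
    {
        // Members omitted; placeholder for illustration only.
    }
}
```

The compiler only looks at the namespace declaration, so `typeof(ReservationsController).Namespace` returns `Ploeh.Samples.Restaurants.RestApi.Reservations` regardless of which folder the file sits in.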

Initial reactions #

This article isn't a recommendation. It's nothing but an initial exploration of an idea.

Do I like it? So far, I think I still prefer flat directory structures. Even though this idea gives two axes of variability, you still have to make judgment calls. It's easy enough with Controllers, but where do you put cross-cutting concerns? Where do you put domain logic that seems to encompass everything else?

As an example, the code base that accompanies Code That Fits in Your Head is a multi-tenant system. Each restaurant is a separate tenant, but I've modelled restaurants as part of the domain model, and I've put that 'feature' in its own namespace. Perhaps that's a mistake; at least, I now have the code wart that I have to import the Ploeh.Samples.Restaurants.RestApi.Restaurants namespace to implement the ReservationsController, because its constructor looks like this:

    public ReservationsController(
        IClock clock,
        IRestaurantDatabase restaurantDatabase,
        IReservationsRepository repository)
    {
        Clock = clock;
        RestaurantDatabase = restaurantDatabase;
        Repository = repository;
    }

The IRestaurantDatabase interface is defined in the Restaurants namespace, but the Controller needs it in order to look up the restaurant (i.e. tenant) in question.

You could argue that this isn't a problem with namespaces, but rather a code smell indicating that I should have organised the code in a different way.

That may be so, but then that implies a deeper problem: Assigning files to hierarchies may not, after all, help much. It looks as though things are organised, but if the assignment of things to buckets is done without a predictable system, then what benefit does it provide? Does it make things easier to find, or is the sense of order mostly illusory?

I tend to still believe that this is the case. This isn't a nihilistic or defeatist position, but rather a realisation that order must arise from other origins.

Conclusion #

I was recently repeatedly encountering student code with a disregard for the convention that namespace structure should follow directory structure (or the other way around). Taking a cue from Kent Beck I decided to investigate what happens if you forget about the rules and instead pursue what that new freedom might bring.

In this article, I briefly show an example where I reorganised a code base so that the file structure is according to implementation detail, but the namespace hierarchy is according to feature. Clearly, I could also have done it the other way around.

What if, instead of two, you have three organising principles? I don't know. I can't think of a third kind of hierarchy in a language like C#.

After a few hours reorganising the code, I'm not scared away from this idea. It might be worth revisiting in a larger code base. On the other hand, I'm still not convinced that forcing a hierarchy over a sophisticated software design is particularly beneficial.

P.S. 2023-05-30. This article is only a report on an experiment. For my general recommendation regarding code file organisation, see Favour flat code file folders.

Comments

Hi Mark,

While reading your book "Code That Fits in Your Head", your latest blog entry caught my attention, as I am struggling in software development with similar issues.

I find it hard to put all classes into one project directory, as it feels overwhelming when the number of classes increases.

In the following, I would like to specify possible organising principles in my own words.

Postulations

- Folders should help the programmer (and reader) to keep the code organised

- Namespaces should reflect the hierarchical organisation of the code base

- Cross-cutting concerns should be addressed by modularity.

Definitions

1. Folders

- the allocation of classes in a project with similar technical concerns into folders should help the programmer in the first place, by visualising this similarity

- the benefit lies just in the organisation, i.e. storage of code, not in the expression of hierarchy

2. Namespaces

- expression of hierarchy can be achieved by namespaces, which indicate the relationship between allocated classes

- classes can be organised in folders with same designation

- the namespace designation could vary by concerns, although the classes are placed in same folders, as the technical concern of the class shouldn't affect the hierarchical organisation

3. Cross-cutting concerns

- classes, which aren't related to a single task, could be indicated by a special namespace

- they could be placed in a different folder, to signal different affiliations

- or even placed in a different assembly

Summing up

A hierarchy should come by design. The organisation of code in folders should help the programmer or reader to grasp the file structure, not necessarily the program hierarchy.

Folders should be a means, not an expression of design. Folders and their designations could change (or disappear) over time in development. Thus, explicit connection of namespace to folder designation seems not desirable, but it's not forbidden.

Best regards,

Markus

Markus, thank you for writing. You can, of course, organise code according to various principles, and what works in one case may not be the best fit in another case. The main point of this article was to suggest, as an idea, that folder hierarchy and namespace hierarchy don't have to match.

Based on reader reactions, however, I realised that I may have failed to clearly communicate my fundamental position, so I wrote another article about that. I do, indeed, favour flat folder hierarchies.

That is not to say that you can't have any directories in your code base, but rather that I'm sceptical that any such hierarchy addresses real problems.

For instance, you write that

"Folders should help the programmer (and reader) to keep the code organised"

If I focus on the word should, then I agree: Folders should help the programmer keep the code organised. In my view, then, it follows that if a tree structure does not assist in doing that, then that structure is of no use and should not be implemented (or abandoned if already in place).

I do get the impression from many people that they consider a directory tree vital to be able to navigate and understand a code base. What I've tried to outline in my more recent article is that I don't accept that as an indisputable axiom.

What I do find helpful as an organising principle is focusing on dependencies as a directed acyclic graph. Cyclic dependencies between objects are a main source of complexity. Keep dependency graphs directed and acyclic, and make code easy to delete.

Organising code files in a tree structure doesn't help achieve that goal. This is the reason I consider code folder hierarchies a red herring: Perhaps not explicitly detrimental to sustainability, but usually nothing but a distraction.

How, then, do you organise a large code base? I hope that I answer that question, too, in my more recent article Favour flat code file folders.

Is cyclomatic complexity really related to branch coverage?

A genuine case of doubt and bewilderment.

Regular readers of this blog may be used to its confident and opinionated tone. I write that way, not because I'm always convinced that I'm right, but because prose with too many caveats and qualifications tends to bury the message in verbose and circumlocutory ambiguity.

This time, however, I write to solicit feedback, and because I'm surprised to the edge of bemusement by a recent experience.

Collatz sequence #

Consider the following code:

    public static class Collatz
    {
        public static IReadOnlyCollection<int> Sequence(int n)
        {
            if (n < 1)
                throw new ArgumentOutOfRangeException(
                    nameof(n),
                    $"Only natural numbers allowed, but given {n}.");

            var sequence = new List<int>();
            var current = n;
            while (current != 1)
            {
                sequence.Add(current);
                if (current % 2 == 0)
                    current = current / 2;
                else
                    current = current * 3 + 1;
            }
            sequence.Add(current);
            return sequence;
        }
    }

As the names imply, the Sequence function calculates the Collatz sequence for a given natural number.

Please don't tune out if that sounds mathematical and difficult, because it really isn't. While the Collatz conjecture still evades mathematical proof, the sequence is easy to calculate and understand. Given a number, produce a sequence starting with that number and stop when you arrive at 1. Every new number in the sequence is based on the previous number. If the input is even, divide it by two. If it's odd, multiply it by three and add one. Repeat until you arrive at one.

The conjecture is that any natural number will produce a finite sequence. That's the unproven part, but that doesn't concern us. In this article, I'm only interested in the above code, which computes such sequences.

Here are a few examples:

    > Collatz.Sequence(1)
    List<int>(1) { 1 }
    > Collatz.Sequence(2)
    List<int>(2) { 2, 1 }
    > Collatz.Sequence(3)
    List<int>(8) { 3, 10, 5, 16, 8, 4, 2, 1 }
    > Collatz.Sequence(4)
    List<int>(3) { 4, 2, 1 }

While there seems to be a general tendency for the sequence to grow as the input gets larger, that's clearly not a rule. The examples show that the sequence for 3 is longer than the sequence for 4.

All this, however, just sets the stage. The problem doesn't really have anything to do with Collatz sequences. I only ran into it while working with a Collatz sequence implementation that looked a lot like the above.

Cyclomatic complexity #

What is the cyclomatic complexity of the above Sequence function? If you need a reminder of how to count cyclomatic complexity, this is a good opportunity to take a moment to refresh your memory, count the number, and compare it with my answer.

Apart from the opportunity for exercise, it was a rhetorical question. The answer is 4.
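One common way to arrive at that number, sketched here as an assumption about how the count is done: start at one for the single path through a function without any decisions, then add one for each decision point. The Sequence function has three decision points: the `if` of the Guard Clause, the `while` loop, and the `if`/`else` inside the loop. With $D$ as a symbol introduced here for the number of decision points:

```latex
M = D + 1 = 3 + 1 = 4
```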

This means that we'd need at least four unit tests to cover all branches. Right? Right?

Okay, let's try.

Branch coverage #

Before we start, let's make the ritual denouncement of code coverage as a target metric. The point isn't to reach 100% code coverage as such, but to gain confidence that you've added tests that cover whatever is important to you. Also, the best way to do that is usually with TDD, which isn't the situation I'm discussing here.

The first branch that we might want to cover is the Guard Clause. This is easily addressed with an xUnit.net test:

    [Fact]
    public void ThrowOnInvalidInput()
    {
        Assert.Throws<ArgumentOutOfRangeException>(() => Collatz.Sequence(0));
    }

This test calls the Sequence function with 0, which (in this context, at least) isn't a natural number.

If you measure test coverage (or, in this case, just think it through), there are no surprises yet. One branch is covered, the rest aren't. That's 25%.

(If you use the free code coverage option for .NET, it will surprisingly tell you that you're only at 16% branch coverage. It deems the cyclomatic complexity of the Sequence function to be 6, not 4, and 1/6 is 16.67%. Why it thinks it's 6 is not entirely clear to me, but Visual Studio agrees with me that the cyclomatic complexity is 4. In this particular case, it doesn't matter anyway. The conclusion that follows remains the same.)

Let's add another test case, and perhaps one that gives the algorithm a good exercise.

[Fact]
public void Example()
{
    var actual = Collatz.Sequence(5);
    Assert.Equal(new[] { 5, 16, 8, 4, 2, 1 }, actual);
}

As expected, the test passes. What's the branch coverage now?

Try to think it through instead of relying exclusively on a tool. The algorithm isn't so complicated that you can't emulate its execution in your head, or perhaps with the assistance of a notepad. How many branches does it execute when the input is 5?

Branch coverage is now 100%. (Even the dotnet coverage tool agrees, despite its weird cyclomatic complexity value.) All branches are exercised.

Two tests produce 100% branch coverage of a function with a cyclomatic complexity of 4.
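If you'd rather not trace the execution on a notepad, here's a minimal Haskell sketch that does the bookkeeping. It assumes that the Sequence function consists of a Guard Clause, a loop, and an even/odd test, as the comments below describe. The Outcome labels and the function names are hypothetical, and counting each decision outcome separately is an assumption of this sketch; a coverage tool may count differently.

```haskell
import qualified Data.Set as Set
import Data.Set (Set)

-- Hypothetical labels: two outcomes for each of the three decisions
-- (Guard Clause, loop condition, even/odd test).
data Outcome
  = GuardRejects
  | GuardAccepts
  | LoopEntered
  | LoopExited
  | EvenStep
  | OddStep
  deriving (Eq, Ord, Show, Enum, Bounded)

-- The decision outcomes that a single input exercises.
outcomes :: Integer -> Set Outcome
outcomes n
  | n < 1 = Set.singleton GuardRejects
  | otherwise = Set.insert GuardAccepts (loop n)
  where
    loop 1 = Set.singleton LoopExited
    loop k
      | even k = Set.insert LoopEntered $ Set.insert EvenStep $ loop (k `div` 2)
      | otherwise = Set.insert LoopEntered $ Set.insert OddStep $ loop (3 * k + 1)

-- The outcomes exercised by a whole suite of inputs.
covered :: [Integer] -> Set Outcome
covered = Set.unions . fmap outcomes

-- True when the inputs together exercise every outcome.
coversAll :: [Integer] -> Bool
coversAll inputs = covered inputs == Set.fromList [minBound .. maxBound]
```

In GHCi, coversAll [0, 5] evaluates to True, while coversAll [0, 2] evaluates to False, because the input 2 never takes an odd step.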

Surprise #

That's what befuddles me. I thought that cyclomatic complexity and branch coverage were related. I thought that the number of branches was a good indicator of the number of tests you'd need to cover all branches. I even wrote an article to that effect, and no-one contradicted me.

That, in itself, is no proof of anything, but the notion that the article presents seems to be widely accepted. I never considered it controversial, and the only reason I didn't cite anyone is that this seems to be 'common knowledge'. I wasn't aware of a particular source I could cite.

Now, however, it seems that it's wrong. Is it wrong, or am I missing something?

To be clear, I completely understand why the above two tests are sufficient to fully cover the function. I also believe that I fully understand why the cyclomatic complexity is 4.

I am also painfully aware that the above two tests in no way fully specify the Collatz sequence. That's not the point.

The point is that it's possible to cover this function with only two tests, despite the cyclomatic complexity being 4. That surprises me.

Is this a known thing?

I'm sure it is. I've long since given up discovering anything new in programming.

Conclusion #

I recently encountered a function that performed a Collatz calculation similar to the one I've shown here. It exhibited the same trait, and since it had no Guard Clause, I could fully cover it with a single test case. That function even had a cyclomatic complexity of 6, so you can perhaps imagine my befuddlement.

Is it wrong, then, that cyclomatic complexity suggests a minimum number of test cases in order to cover all branches?

It seems so, but that's new to me. I don't mind being wrong on occasion. It's usually an opportunity to learn something new. If you have any insights, please leave a comment.

Comments

My first thought is that the code looks like an unrolled recursive function, so perhaps if it's refactored into a driver function and a "continuation passing style" it might make the cyclomatic complexity match the covering tests.

So given the following:

public delegate void ResultFunc(IEnumerable<int> result);
public delegate void ContFunc(int n, ResultFunc result, ContFunc cont);

public static void Cont(int n, ResultFunc result, ContFunc cont) {
    if (n == 1) {
        result(new[] { n });
        return;
    }

    void Result(IEnumerable<int> list) => result(list.Prepend(n));

    if (n % 2 == 0)
        cont(n / 2, Result, cont);
    else
        cont(n * 3 + 1, Result, cont);
}

public static IReadOnlyCollection<int> Continuation(int n) {
    if (n < 1)
        throw new ArgumentOutOfRangeException(
            nameof(n),
            $"Only natural numbers allowed, but given {n}.");

    var output = new List<int>();

    void Output(IEnumerable<int> list) => output = list.ToList();

    Cont(n, Output, Cont);

    return output;
}

I calculate the Cyclomatic complexity of Continuation to be 2 and Step to be 3.

And it would seem you need 5 tests to properly cover the code, 3 for Step and 2 for Continuation.

But however you write the "n >=1" case for Continuation you will have to cover some of Step.

There is a relation between cyclomatic complexity and branches to cover, but it's not one of equality. Cyclomatic complexity is an upper bound for the number of test cases needed to cover all branches. The Wikipedia article on cyclomatic complexity has a nice example that illustrates this, as well as the relation with path coverage (for which cyclomatic complexity is a lower bound).

I find cyclomatic complexity to be overly pedantic at times, and you will need four tests if you get really pedantic. First, test the guard clause as you already did. Then, test with 1 in order to test the while loop body not being run. Then, test with 2 in order to test that the while is executed, but we only hit the if part of the if/else. Finally, test with 3 in order to hit the else inside of the while. That's four tests where each test is only testing one of the branches (some tests hit more than one branch, but the "extra branch" is already covered by another test). Again, this is being really pedantic and I wouldn't test this function as laid out above (I'd probably put in the test with 1, since it's an edge case, but otherwise test as you did).

I don't think there's a rigorous relationship between cyclomatic complexity and number of tests. In simple cases, treating things as though the relationship exists can be helpful. But once you start having interrelated branches in a function, things get murky, and you may have to go to pedantic lengths in order to maintain the relationship. The same thing goes for code coverage, which can be 100% even though you haven't actually tested all paths through your code if there are multiple branches in the function that depend on each other.

Thank you, all, for writing. I'm extraordinarily busy at the moment, so it'll take me longer than usual to respond. Rest assured, however, that I haven't forgotten.

If we agree to the definition of cyclomatic complexity as the number of independent paths through a section of code, then the number of tests needed to cover that section must be the same by definition, if those tests are also independent. Independence is crucial here, and is also the main source of confusion. Both the while and if forks depend on the same variable (current), and so they are not independent.

The second test you wrote is similarly not independent, as it ends up tracing multiple paths through the if: odd for 5, and even for 16, 8, etc, and so ends up covering all paths. Had you picked 2 instead of 5 for the test, that would have been more independent, as it would not have traced the else path, requiring one additional test.

The standard way of computing cyclomatic complexity assumes independence, which simply is not possible in this case.

Struan, thank you for writing, and please accept my apologies for the time it took me to respond. I agree with your calculations of cyclomatic complexity of your refactored code.

I agree with what you write, but you can't write a sentence like "however you write the "n >=1" case for [...] you will have to cover some of [..]" and expect me to just ignore it. To be clear, I agree with you in the particular case of the methods you provided, but you inspired me to refactor my code with that rule as a specific constraint. You can see the results in my new article Collatz sequences by function composition.

Thank you for the inspiration.

Jeroen, thank you for writing, and please accept my apologies for the time it took me to respond. I should have read that Wikipedia article more closely, instead of just linking to it.

What still puzzles me is that I've been aware of, and actively used, cyclomatic complexity for more than a decade, and this distinction has never come up, and no-one has called me out on it.

As Cunningham's law says, the best way to get the right answer on the Internet is not to ask a question; it's to post the wrong answer. Even so, I posted Put cyclomatic complexity to good use in 2019, and no-one contradicted it.

I don't mention this as an argument that I'm right. Obviously, I was wrong, but no-one told me. Have I had something in my teeth all these years, too?

Brett, thank you for writing, and please accept my apologies for the time it took me to respond. I suppose that I failed to make my overall motivation clear. When doing proper test-driven development (TDD), one doesn't need cyclomatic complexity in order to think about coverage. When following the red-green-refactor checklist, you only add enough code to pass all tests. With that process, cyclomatic complexity is rarely useful, and I tend to ignore it.

I do, however, often coach programmers in unit testing and TDD, and people new to the technique often struggle with basics. They add too much code, instead of the simplest thing that could possibly work, or they can't think of a good next test case to write.

When teaching TDD I sometimes suggest cyclomatic complexity as a metric to help decision-making. Did we add more code to the System Under Test than warranted by tests? Is it okay to forgo writing a test of a one-liner with cyclomatic complexity of one?

The metric is also useful in hybrid scenarios where you already have production code, and now you want to add characterisation tests: Which test cases should you at least write?

Another way to answer such questions is to run a code-coverage tool, but that often takes time. I find it useful to teach people about cyclomatic complexity, because it's a lightweight heuristic always at hand.

Nikola, thank you for writing. The emphasis on independence is useful; I used compatible thinking in my new article Collatz sequences by function composition. By now, including the other comments to this article, it seems that we've been able to cover the problem better, and I, at least, feel that I've learned something.

I don't think, however, that the standard way of computing cyclomatic complexity assumes independence. You can easily compute the cyclomatic complexity of the above Sequence function, even though its branches aren't independent. Tooling such as Visual Studio seems to agree with me.

Refactoring pure function composition without breaking existing tests

An example modifying a Haskell Gossiping Bus Drivers implementation.

This is an article in a series of articles about the epistemology of interaction testing. In short, this collection of articles discusses how to test the composition of pure functions. While a pure function is intrinsically testable, how do you test the composition of pure functions? As the introductory article outlines, I consider it mostly a matter of establishing confidence. With enough test coverage you can be confident that the composition produces the desired outputs.

Keep in mind that if you compose pure functions into a larger pure function, the composition is still pure. This implies that you can still test it by supplying input and verifying that the output is correct.

Tests that exercise the composition do so by verifying observable behaviour. This makes them more robust to refactoring. You'll see an example of that later in this article.

Gossiping bus drivers #

I recently did the Gossiping Bus Drivers kata in Haskell. At first, I added the tests suggested in the kata description.

{-# OPTIONS_GHC -Wno-type-defaults #-}
module Main where

import GossipingBusDrivers
import Test.HUnit
import Test.Framework.Providers.HUnit (hUnitTestToTests)
import Test.Framework (defaultMain)

main :: IO ()
main = defaultMain $ hUnitTestToTests $ TestList [
  "Kata examples" ~: do
    (routes, expected) <-
      [
        ([[3, 1, 2, 3],
          [3, 2, 3, 1],
          [4, 2, 3, 4, 5]],
         Just 5),
        ([[2, 1, 2],
          [5, 2, 8]],
         Nothing)
      ]
    let actual = drive routes
    return $ expected ~=? actual
  ]

As I prefer them, these tests are parametrised HUnit tests.

The problem with those suggested test cases is that they don't provide enough confidence that an implementation is correct. In fact, I wrote this implementation to pass them:

drive routes = if length routes == 3 then Just 5 else Nothing

This is clearly incorrect. It just looks at the number of routes and returns a fixed value for each count. It doesn't look at the contents of the routes.
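To make the weakness concrete, here's a small sketch that runs the fake implementation against the two suggested examples, as well as against one of the additional examples that appear later in this article. The names cheat, kataExamples, extraExample, and passes are hypothetical, introduced only for illustration.

```haskell
-- The deliberately wrong implementation from the text, under a hypothetical name.
cheat :: [[Int]] -> Maybe Int
cheat routes = if length routes == 3 then Just 5 else Nothing

-- The two examples suggested by the kata description.
kataExamples :: [([[Int]], Maybe Int)]
kataExamples =
  [ ([[3, 1, 2, 3], [3, 2, 3, 1], [4, 2, 3, 4, 5]], Just 5)
  , ([[2, 1, 2], [5, 2, 8]], Nothing)
  ]

-- One of the examples added later in the article.
extraExample :: ([[Int]], Maybe Int)
extraExample = ([[1, 2, 3, 4, 5], [5, 6, 7, 8], [3, 9, 6]], Just 13)

-- Does an implementation produce the expected value for an example?
passes :: ([[Int]] -> Maybe Int) -> ([[Int]], Maybe Int) -> Bool
passes f (routes, expected) = f routes == expected
```

In GHCi, all (passes cheat) kataExamples is True, whereas passes cheat extraExample is False. A single extra example with three routes and a different answer is enough to expose the fake.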

Even if you don't try to deliberately cheat I'm not convinced that these two tests are enough. You could try to write the correct implementation, but how do you know that you've correctly dealt with various edge cases?

Helper function #

The kata description isn't hard to understand, so while the suggested test cases seem insufficient, I knew what was required. Perhaps I could write a proper implementation without additional tests. After all, I was convinced that it'd be possible to do it with a cyclomatic complexity of 1, and since a test function also has a cyclomatic complexity of 1, there's always that tension in test-driven development: Why write test code to exercise code with a cyclomatic complexity of 1?

To be clear: There are often good reasons to write tests even in this case, and this seems like one of them. Cyclomatic complexity indicates a minimum number of test cases, not necessarily a sufficient number.

Even though Haskell's type system is expressive, I soon found myself second-guessing the behaviour of various expressions that I'd experimented with. Sometimes I find GHCi (the Haskell REPL) sufficiently edifying, but in this case I thought that I might want to keep some test cases around for a helper function that I was developing:

import Data.List
import qualified Data.Map.Strict as Map
import Data.Map.Strict ((!))
import qualified Data.Set as Set
import Data.Set (Set)

evaluateStop :: (Functor f, Foldable f, Ord k, Ord a)
             => f (k, Set a) -> f (k, Set a)
evaluateStop stopsAndDrivers =
  let gossip (stop, driver) = Map.insertWith Set.union stop driver
      gossipAtStops = foldl' (flip gossip) Map.empty stopsAndDrivers
  in fmap (\(stop, _) -> (stop, gossipAtStops ! stop)) stopsAndDrivers

I was fairly confident that this function worked as I intended, but I wanted to be sure. I needed some examples, so I added these tests:

"evaluateStop examples" ~: do
  (stopsAndDrivers, expected) <- [
      ([(1, fromList [1]), (2, fromList [2]), (1, fromList [1])],
       [(1, fromList [1]), (2, fromList [2]), (1, fromList [1])]),
      ([(1, fromList [1]), (2, fromList [2]), (1, fromList [2])],
       [(1, fromList [1, 2]), (2, fromList [2]), (1, fromList [1, 2])]),
      ([(1, fromList [1, 2, 3]), (1, fromList [2, 3, 4])],
       [(1, fromList [1, 2, 3, 4]), (1, fromList [1, 2, 3, 4])])
    ]
  let actual = evaluateStop stopsAndDrivers
  return $ fromList expected ~=? fromList actual

They do, indeed, pass.

The idea behind that evaluateStop function is to evaluate the state at each 'minute' of the simulation. The first line of each test case is the state before the drivers meet, and the second line is the expected state after all drivers have gossiped.
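It may help to see the intermediate value that the function builds. The following sketch splits the same logic into two steps, specialised to lists. The names gossipAtStops, afterStop, and example are hypothetical, and this isn't the code I ended up with; it only illustrates the idea of first pooling the gossip per stop and then handing the pool back to each driver.

```haskell
import Data.Foldable (foldl')
import qualified Data.Map.Strict as Map
import Data.Map.Strict (Map)
import qualified Data.Set as Set
import Data.Set (Set)

-- Step 1: pool all gossip per stop.
gossipAtStops :: (Ord k, Ord a) => [(k, Set a)] -> Map k (Set a)
gossipAtStops =
  foldl' (\m (stop, gossip) -> Map.insertWith Set.union stop gossip m) Map.empty

-- Step 2: every driver leaves with everything known at their stop.
afterStop :: (Ord k, Ord a) => [(k, Set a)] -> [(k, Set a)]
afterStop stopsAndDrivers =
  let pool = gossipAtStops stopsAndDrivers
  in fmap (\(stop, _) -> (stop, pool Map.! stop)) stopsAndDrivers

-- The second test case from the examples above.
example :: [(Int, Set Int)]
example =
  [(1, Set.fromList [1]), (2, Set.fromList [2]), (1, Set.fromList [2])]
```

For example, gossipAtStops example is a map where stop 1 holds the set {1, 2} and stop 2 holds {2}. Consequently, afterStop example gives both drivers at stop 1 the set {1, 2}, while the driver at stop 2 learns nothing new.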

My plan was to use some sort of left fold to keep evaluating states until all information has disseminated to all drivers.

Property #

Since I have already extolled the virtues of property-based testing in this article series, I wondered whether I could add some properties instead of relying on examples. Well, I did manage to add one QuickCheck property:

testProperty "drive image" $ \ (routes :: [NonEmptyList Int]) ->
  let actual = drive $ fmap getNonEmpty routes
  in isJust actual ==>
     all (\i -> 0 <= i && i <= 480) actual

There's not much to talk about here. The property only states that the result of the drive function must be between 0 and 480, if it exists.

Such a property could vacuously pass if drive always returns Nothing, so I used the ==> QuickCheck combinator to make sure that the property is actually exercising only the Just cases.
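To see why the property could pass vacuously, consider what all does when you apply it to a Maybe value. The following sketch uses only the Prelude; inImage and alwaysNothing are hypothetical names for illustration.

```haskell
-- The predicate from the property, applied to a Maybe value via Foldable's all.
inImage :: Maybe Int -> Bool
inImage = all (\i -> 0 <= i && i <= 480)

-- A hypothetical, useless implementation that never finds an answer.
alwaysNothing :: [[Int]] -> Maybe Int
alwaysNothing = const Nothing
```

Here, inImage Nothing is True, since there's no value to check, so inImage (alwaysNothing routes) holds for every input. On the other hand, inImage (Just 5) is True and inImage (Just 481) is False. With the ==> combinator, QuickCheck discards the Nothing cases instead of counting them as passes.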

Since the drive function only returns a number, apart from verifying its image I couldn't think of any other general property to add.

You can always come up with more specific properties that explicitly set up more constrained test scenarios, but is it worth it?

It's always worthwhile to stop and think. If you're writing a 'normal' example-based test, consider whether a property would be better. Likewise, if you're about to write a property, consider whether an example would be better.

'Better' can mean more than one thing. Preventing regressions is one thing, but making the code maintainable is another. If you're writing a property that is too complicated, it might be better to write a simpler example-based test.

I could definitely think of some complicated properties, but I found that more examples might make the test code easier to understand.

More examples #

After all that angst and soul-searching, I added a few more examples to the first parametrised test:

"Kata examples" ~: do
  (routes, expected) <-
    [
      ([[3, 1, 2, 3],
        [3, 2, 3, 1],
        [4, 2, 3, 4, 5]],
       Just 5),
      ([[2, 1, 2],
        [5, 2, 8]],
       Nothing),
      ([[1, 2, 3, 4, 5],
        [5, 6, 7, 8],
        [3, 9, 6]],
       Just 13),
      ([[1, 2, 3],
        [2, 1, 3],
        [2, 4, 5, 3]],
       Just 5),
      ([[1, 2],
        [2, 1]],
       Nothing),
      ([[1]],
       Just 0),
      ([[2],
        [2]],
       Just 1)
    ]
  let actual = drive routes
  return $ expected ~=? actual

The first two test cases are the same as before, and the last two are some edge cases I added myself. The middle three I adopted from another page about the kata. Since those examples turned out to be off by one, I did those examples on paper to verify that I understood what the expected value was. Then I adjusted them to my one-indexed results.

Drive #

The drive function now correctly implements the kata, I hope. At least it passes all the tests.

drive :: (Num b, Enum b, Ord a) => [[a]] -> Maybe b
drive routes =
  -- Each driver starts with a single gossip. Any kind of value will do, as
  -- long as each is unique. Here I use the one-based index of each route,
  -- since it fulfills the requirements.
  let drivers = fmap Set.singleton [1 .. length routes]
      goal = Set.unions drivers
      stops = transpose $ fmap (take 480 . cycle) routes
      propagation =
        scanl (\ds ss -> snd <$> evaluateStop (zip ss ds)) drivers stops
  in fmap fst $ find (all (== goal) . snd) $ zip [0 ..] propagation

Haskell code can be information-dense, and if you don't have an integrated development environment (IDE) around, this may be hard to read.

drivers is a list of sets. Each set represents the gossip that a driver knows. At the beginning, each only knows one piece of gossip. The expression initialises each driver with a singleton set. Each piece of gossip is represented by a number, simply going from 1 to the number of routes. Incidentally, this is also the number of drivers, so you can consider the number 1 as a placeholder for the gossip that driver 1 knows, and so on.

The goal is the union of all the gossip. Once every driver's knowledge is equal to the goal the simulation can stop.

Since evaluateStop simulates one stop, the drive function needs a list of stops to fold. That's the stops value. In the very first example, you have three routes: [3, 1, 2, 3], [3, 2, 3, 1], and [4, 2, 3, 4, 5]. The first time the drivers stop (after one minute), the stops are 3, 3, and 4. That is, the first element in stops would be the list [3, 3, 4]. The next one would be [1, 2, 2], then [2, 3, 3], and so on.
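You can try out the stops expression in isolation. In this sketch, stopsPerMinute and firstExample are hypothetical names; the expression itself is the one from the drive function.

```haskell
import Data.List (transpose)

-- One list of stops per minute: repeat each route, cut it off at 480 minutes,
-- and then turn 'stops per driver' into 'drivers' stops per minute'.
stopsPerMinute :: [[a]] -> [[a]]
stopsPerMinute = transpose . fmap (take 480 . cycle)

-- The routes from the first kata example.
firstExample :: [[Int]]
firstExample = [[3, 1, 2, 3], [3, 2, 3, 1], [4, 2, 3, 4, 5]]
```

In GHCi, take 3 (stopsPerMinute firstExample) evaluates to [[3,3,4],[1,2,2],[2,3,3]], and the full list has 480 elements. Be aware that cycle fails on an empty list, so the sketch assumes that no route is empty.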

My plan all along was to use some sort of left fold to repeatedly run evaluateStop over each minute. Since I need to produce a list of states, scanl was an appropriate choice. The lambda expression that I have to pass to it, though, is more complicated than I appreciate. We'll return to that in a moment.

The drive function can now index the propagation list by zipping it with the infinite list [0 ..], find the first element where all sets are equal to the goal set, and then return that index. That produces the correct results.
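The combination of scanl, zip, and find is independent of gossip and bus drivers. As a sketch of the general technique, here's a hypothetical helper function, firstIndexWhere, that returns the index of the first state that satisfies a predicate. It's not part of the code base; it only isolates the pattern.

```haskell
import Data.List (find)

-- Run a left scan over the inputs and return the zero-based index of the
-- first state (including the initial one) that satisfies the predicate.
firstIndexWhere :: (s -> Bool) -> (s -> x -> s) -> s -> [x] -> Maybe Int
firstIndexWhere done step initial inputs =
  fmap fst $ find (done . snd) $ zip [0 ..] $ scanl step initial inputs
```

For example, firstIndexWhere (>= 10) (+) (0 :: Int) [1 ..] is Just 4, because the running sums are 0, 1, 3, 6, 10. Since the initial state has index 0, a predicate that already holds at the outset produces Just 0, and a finite input list that never reaches the goal produces Nothing.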

The need for a better helper function #

As I already warned, I wasn't happy with the lambda expression passed to scanl. It looks complicated and arcane. Is there a better way to express the same behaviour? Usually, when confronted with a nasty lambda expression like that, in Haskell my first instinct is to see if pointfree.io has a better option. Alas, (((snd <$>) . evaluateStop) .) . flip zip hardly seems an improvement. That flip zip expression to the right, however, suggests that it might help flipping the arguments to evaluateStop.
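If the nested composition operators look like line noise, it may help to know that the shape (f .) . g is a common point-free idiom for composing a one-argument function with a two-argument function. The following sketch gives it a hypothetical name, compose2, next to the equivalent lambda expression.

```haskell
-- Point-free: apply g to two arguments, then f to the result.
compose2 :: (c -> d) -> (a -> b -> c) -> a -> b -> d
compose2 f g = (f .) . g

-- The same function written with an explicit lambda expression.
explicit :: (c -> d) -> (a -> b -> c) -> a -> b -> d
explicit f g = \x y -> f (g x y)
```

For example, compose2 negate (+) 2 3 and explicit negate (+) 2 3 both evaluate to -5. Likewise, compose2 (fmap fst) zip [1, 2, 3] "ab" evaluates to [1,2].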

When I developed the evaluateStop helper function, I found it intuitive to define it over a list of tuples, where the first element in the tuple is the stop, and the second element is the set of gossip that the driver at that stop knows.

The tuples don't have to be in that order, though. Perhaps if I flip the tuples that would make the lambda expression more readable. It was worth a try.

Confidence #

Since this article is part of a small series about the epistemology of testing composed functions, let's take a moment to reflect on the confidence we may have in the drive function.

Keep in mind the goal of the kata: Calculate the number of minutes it takes for all gossip to spread to all drivers. There are a few tests that verify that; seven examples and a fairly vacuous QuickCheck property. Is that enough to be confident that the function is correct?

If it isn't, I think the best option you have is to add more examples. For the sake of argument, however, let's assume that the tests are good enough.

When summarising the tests that cover the drive function, I didn't count the three examples that exercise evaluateStop. Do these three test cases improve your confidence in the drive function? A bit, perhaps, but keep in mind that the kata description doesn't mandate that function. It's just a helper function I created in order to decompose the problem.

Granted, having tests that cover a helper function does, to a degree, increase my confidence in the code. I have confidence in the function itself, but that is largely irrelevant, because the problem I'm trying to solve is not implementing this particular function. On the other hand, my confidence in evaluateStop means that I have increased confidence in the code that calls it.

Compared to interaction-based testing, I'm not testing that drive calls evaluateStop, but I can still verify that this happens. I can just look at the code.

The composition is already there in the code. What do I gain from replicating that composition with Stubs and Spies?

It's not a breaking change if I decide to implement drive in a different way.

What gives me confidence when composing pure functions isn't that I've subjected the composition to an interaction-based test. Rather, it's that the function is composed from trustworthy components.

Strangler #

My main grievance with Stubs and Spies is that they break encapsulation. This may sound abstract, but is a real problem. This is the underlying reason that so many tests break when you refactor code.

This example code base, as other functional code that I write, avoids interaction-based testing. This makes it easier to refactor the code, as I will now demonstrate.

My goal is to change the evaluateStop helper function by flipping the tuples. If I just edit it, however, I'm going to (temporarily) break the drive function.

Katas typically result in small code bases where you can get away with a lot of bad practices that wouldn't work in a larger code base. To be honest, the refactoring I have in mind can be completed in a few minutes with a brute-force approach. Imagine, however, that we can't break compatibility of the evaluateStop function for the time being. Perhaps, had we had a larger code base, there would have been other code that depended on this function. At the very least, the tests do.

Instead of brute-force changing the function, I'm going to make use of the Strangler pattern, as I've also described in my book Code That Fits in Your Head.

Leave the existing function alone, and add a new one. You can typically copy and paste the existing code and then make the necessary changes. In that way, you break neither client code nor tests, because there are none.

evaluateStop' :: (Functor f, Foldable f, Ord k, Ord a)
              => f (Set a, k) -> f (Set a, k)
evaluateStop' driversAndStops =
  let gossip (driver, stop) = Map.insertWith Set.union stop driver
      gossipAtStops = foldl' (flip gossip) Map.empty driversAndStops
  in fmap (\(_, stop) -> (gossipAtStops ! stop, stop)) driversAndStops

In a language like C# you can often get away with overloading a method name, but Haskell doesn't have overloading. Since I consider this side-by-side situation to be temporary, I've appended a prime after the function name. This is a fairly normal convention in Haskell, I gather.

The only change this function represents is that I've swapped the tuple order.
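While the two functions live side by side, you can check that claim directly. The following sketch repeats both definitions so that it's self-contained, and adds a hypothetical agrees function that states the relationship: the new function should behave like the old one with the tuples swapped on the way in and on the way out.

```haskell
import Data.Foldable (foldl')
import qualified Data.Map.Strict as Map
import Data.Map.Strict ((!))
import qualified Data.Set as Set
import Data.Set (Set)
import Data.Tuple (swap)

-- The original helper: tuples are (stop, gossip).
evaluateStop :: (Functor f, Foldable f, Ord k, Ord a)
             => f (k, Set a) -> f (k, Set a)
evaluateStop stopsAndDrivers =
  let gossip (stop, driver) = Map.insertWith Set.union stop driver
      gossipAtStops = foldl' (flip gossip) Map.empty stopsAndDrivers
  in fmap (\(stop, _) -> (stop, gossipAtStops ! stop)) stopsAndDrivers

-- The new helper: tuples are (gossip, stop).
evaluateStop' :: (Functor f, Foldable f, Ord k, Ord a)
              => f (Set a, k) -> f (Set a, k)
evaluateStop' driversAndStops =
  let gossip (driver, stop) = Map.insertWith Set.union stop driver
      gossipAtStops = foldl' (flip gossip) Map.empty driversAndStops
  in fmap (\(_, stop) -> (gossipAtStops ! stop, stop)) driversAndStops

-- Hypothetical check: swapping tuples before and after gives the same result.
agrees :: (Ord k, Ord a) => [(k, Set a)] -> Bool
agrees xs = evaluateStop' (fmap swap xs) == fmap swap (evaluateStop xs)
```

You could evaluate agrees for the existing evaluateStop examples in GHCi, or, if you're so inclined, turn it into a QuickCheck property for as long as both functions exist.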

Once you've added the new function, you may want to copy, paste and edit the tests. Or perhaps you want to do the tests first. During this process, make micro-commits so that you can easily suspend your 'refactoring' activity if something more important comes up.

Once everything is in place, you can change the drive function:

drive :: (Num b, Enum b, Ord a) => [[a]] -> Maybe b
drive routes =
  -- Each driver starts with a single gossip. Any kind of value will do, as
  -- long as each is unique. Here I use the one-based index of each route,
  -- since it fulfills the requirements.
  let drivers = fmap Set.singleton [1 .. length routes]
      goal = Set.unions drivers
      stops = transpose $ fmap (take 480 . cycle) routes
      propagation =
        scanl (\ds ss -> fst <$> evaluateStop' (zip ds ss)) drivers stops
  in fmap fst $ find (all (== goal) . snd) $ zip [0 ..] propagation

Notice that the type of drive hasn't changed, and neither has the behaviour. This means that although I've changed the composition (the interaction) no tests broke.

Finally, once I moved all code over, I deleted the old function and renamed the new one to take its place.

Was it all worth it? #

At first glance, it doesn't look as though much was gained. What happens if I eta-reduce the new lambda expression?

drive :: (Num b, Enum b, Ord a) => [[a]] -> Maybe b
drive routes =
  -- Each driver starts with a single gossip. Any kind of value will do, as
  -- long as each is unique. Here I use the one-based index of each route,
  -- since it fulfills the requirements.
  let drivers = fmap Set.singleton [1 .. length routes]
      goal = Set.unions drivers
      stops = transpose $ fmap (take 480 . cycle) routes
      propagation = scanl (((fmap fst . evaluateStop) .) . zip) drivers stops
  in fmap fst $ find (all (== goal) . snd) $ zip [0 ..] propagation

Not much better. I can now fit the propagation expression on a single line of code and still stay within an 80x24 box, but that's about it. Is ((fmap fst . evaluateStop) .) . zip more readable than what we had before?

Hardly, I admit. I might consider reverting, and since I've been using Git tactically, I have that option.

If I hadn't tried, though, I wouldn't have known.

Conclusion #

When composing one pure function with another, how can you test that the outer function correctly calls the inner function?

In the same way that you test any other pure function. The only way you can observe whether a pure function works as intended is to compare its actual output to the output you expect its input to produce. How it arrives at that output is irrelevant. It could be looking up all results in a big table. As long as the result is correct, the function is correct.

In this article, you saw an example of how to test a composed function, as well as how to refactor it without breaking tests.

Next: When is an implementation detail an implementation detail?

Are pull requests bad because they originate from open-source development?

I don't think so, and at least find the argument flawed.

Increasingly I come across a quote that goes like this:

Pull requests were invented for open source projects where you want to gatekeep changes from people you don't know and don't trust to change the code safely.

If you're wondering where that 'quote' comes from, then read on. I'm not trying to stand up a straw man, but I had to do a bit of digging in order to find the source of what almost seems like a meme.

Quote investigation #

The quote is usually attributed to Dave Farley, who is a software luminary that I respect tremendously. Even with the attribution, the source is typically missing, but after asking around, Mitja Bezenšek pointed me in the right direction.

The source is most likely a video, from which I've transcribed a longer passage:

"Pull requests were invented to gatekeep access to open-source projects. In open source, it's very common that not everyone is given free access to changing the code, so contributors will issue a pull request so that a trusted person can then approve the change.

"I think this is really bad way to organise a development team.

"If you can't trust your team mates to make changes carefully, then your version control system is not going to fix that for you."

I've made an effort to transcribe as faithfully as possible, but if you really want to be sure what Dave Farley said, watch the video. The quote comes twelve minutes in.

My biases #

I agree that the argument sounds compelling, but I find it flawed. Before I proceed to put forward my arguments I want to make my own biases clear. Arguing against someone like Dave Farley is not something I take lightly. As far as I can tell, he's worked on systems more impressive than any I can showcase. I also think he has more industry experience than I have.

That doesn't necessarily make him right, but on the other hand, why should you side with me, with my less impressive résumé?

My objective is not to attack Dave Farley, or any other person for that matter. My agenda is the argument itself. I do, however, find it intellectually honest to cite sources, with the associated risk that my argument may look like a personal attack. To steelman my opponent, then, I'll try to put my own biases on display. To the degree I'm aware of them.

I prefer pull requests over pair and ensemble programming. I've tried all three, and I do admit that real-time collaboration has obvious advantages, but I find pairing or ensemble programming exhausting.

Since I read Quiet a decade ago, I've been alert to the introspective side of my personality. Although I agree with Brian Marick that one should be wary of understanding personality traits as destiny, I mostly prefer solo activities.

Increasingly, since I became self-employed, I've arranged my life to maximise the time I can work from home. The exercise regimen I've chosen for myself is independent of other people: I run, and lift weights at home. You may have noticed that I like writing. I like reading as well. And, hardly surprising, I prefer writing code in splendid isolation.

Even so, I find it perfectly possible to have meaningful relationships with other people. After all, I've been married to the same woman for decades, my (mostly) grown kids haven't fled from home, and I have friends that I've known for decades.

In a toot that I can no longer find, Brian Marick asked (and I paraphrase from memory): If you've tried a technique and didn't like it, what would it take to make you like it?

As a self-professed introvert, social interaction does tire me, but I still enjoy hanging out with friends or family. What makes those interactions different? Well, often, there's good food and wine involved. Perhaps ensemble programming would work better for me with a bottle of Champagne.

Other forces influence my preferences as well. I like the flexibility provided by asynchrony, and similarly dislike having to be somewhere at a specific time.

Having to be somewhere also involves transporting myself there, which I also don't appreciate.

In short, I prefer pull requests over pairing and ensemble programming. All of that, however, is just my subjective opinion, and that's not an argument.

Counter-examples #

The above tirade about my biases is not a refutation of Dave Farley's argument. Rather, I wanted to put my own blind spots on display. If you suspect me of motivated reasoning, that just might be the case.

All that said, I want to challenge the argument.

First, it includes an appeal to trust, which is a line of reasoning with which I don't agree. You can't trust your colleagues, just like you can't trust yourself. A code review serves more purposes than keeping malicious actors out of the code base. It also helps catch mistakes, security issues, or misunderstandings. It can also improve shared understanding of common goals and standards. Yes, this is also possible with other means, such as pair or ensemble programming, but from that, it doesn't follow that code reviews can't do that. They can. I've lived that dream.

If you take away the appeal to trust, though, there isn't much left of the argument. What remains is essentially: Pull requests were invented to solve a particular problem in open-source development. Internal software development is not open source. Pull requests are bad for internal software development.

That an invention was made in one context, however, doesn't preclude it from being useful in another. Git was invented to address an open-source problem. Should we stop using Git for internal software development?

Solar panels were originally developed for satellites and space probes. Does that mean that we shouldn't use them on Earth?

GPS was invented for use by the US military. Does that make civilian use wrong?

Are pull requests bad? #

I find the original argument logically flawed, but if I insist on logic, I'm also obliged to admit that my possible-world counter-examples don't prove that pull requests are good.

Dave Farley's claim may still turn out to be true. Not because of the argument he gives, but perhaps for other reasons.

I think I understand where the dislike of pull requests comes from. As they are often practised, pull requests can sit for days with no-one looking at them. This creates unnecessary delays. If this is the only way you know of working with pull requests, no wonder you don't like them.

I advocate a more agile workflow for pull requests. I consider that congruent with my view on agile development.

Conclusion #

Pull requests are often misused, but they don't have to be. On the other hand, that's just my experience and subjective preference.

Dave Farley has argued that pull requests are a bad way to organise a development team. I've argued that the argument is logically flawed.

The question remains unsettled. I've attempted to refute one particular argument, and even if you accept my counter-examples, pull requests may still be bad. Or good.

Comments

Another important angle, for me, is that pull requests are not merely code review. They can also be a way of enforcing a variety of automated checks, e.g. running tests or linting. This enforces quality too - so I'd argue for using pull requests even if you don't do peer review (I do on my hobby projects at least, for the exact reasons you mentioned in On trust in software development - I don't trust myself to be perfect.)

Casper, thank you for writing. Indeed, other readers have made similar observations on other channels (Twitter, Mastodon). That, too, can be a benefit.

In order to once more steel-man 'the other side', they'd probably say that you can run automated checks in your Continuous Delivery pipeline, and halt it if automated checks fail.

When done this way, it's useful to be able to also run the same tests on your dev box. I consider that a good practice anyway.

A restaurant example of refactoring from example-based to property-based testing

A C# example with xUnit.net and FsCheck.

This is the second comprehensive example that accompanies the article Epistemology of interaction testing. In that article, I argue that in a code base that leans toward functional programming (FP), property-based testing is a better fit than interaction-based testing. In this example, I will show how to refactor realistic state-based tests into (state-based) property-based tests.

The previous article showed a minimal and self-contained example that had the advantage of being simple, but the disadvantage of being perhaps too abstract and unrelatable. In this article, then, I will attempt to show a more realistic and concrete example. It actually doesn't start with interaction-based testing, since it's already written in the style of Functional Core, Imperative Shell. On the other hand, it shows how to refactor from concrete example-based tests to property-based tests.

I'll use the online restaurant reservation code base that accompanies my book Code That Fits in Your Head.

Smoke test #

I'll start with a simple test which was, if I remember correctly, the second test I wrote for this code base. It was a smoke test that I wrote to drive a walking skeleton. It verifies that if you post a valid reservation request to the system, you receive an HTTP response in the 200 range.

    [Fact]
    public async Task PostValidReservation()
    {
        using var api = new LegacyApi();
        var expected = new ReservationDto
        {
            At = DateTime.Today.AddDays(778).At(19, 0)
                    .ToIso8601DateTimeString(),
            Email = "katinka@example.com",
            Name = "Katinka Ingabogovinanana",
            Quantity = 2
        };
        var response = await api.PostReservation(expected);

        response.EnsureSuccessStatusCode();
        var actual = await response.ParseJsonContent<ReservationDto>();
        Assert.Equal(expected, actual, new ReservationDtoComparer());
    }

Over the lifetime of the code base, I embellished and edited the test to reflect the evolution of the system as well as my understanding of it. Thus, when I wrote it, it may not have looked exactly like this. Even so, I kept it around even though other, more detailed tests eventually superseded it.

One characteristic of this test is that it's quite concrete. When I originally wrote it, I hard-coded the date and time as well. Later, however, I discovered that I had to make the time relative to the system clock. Thus, as you can see, the At property isn't a literal value, but all other properties (Email, Name, and Quantity) are.

This test is far from abstract or data-driven. Is it possible to turn such a test into a property-based test? Yes, I'll show you how.

A word of warning before we proceed: Tests with concrete, literal, easy-to-understand examples are valuable as programmer documentation. A person new to the code base can peruse such tests and learn about the system. Thus, this test is already quite valuable as it is. In a real, living code base, I'd prefer leaving it as it is, instead of turning it into a property-based test.

Since it's a simple and concrete test, on the other hand, it's easy to understand, and thus also a good place to start. Thus, I'm going to refactor it into a property-based test; not because I think that you should (I don't), but because I think it'll be easy for you, the reader, to follow along. In other words, it's a good introduction to the process of turning a concrete test into a property-based test.

Adding parameters #

This code base already uses FsCheck so it makes sense to stick to that framework for property-based testing. While it's written in F# you can use it from C# as well. The easiest way to use it is as a parametrised test. This is possible with the FsCheck.Xunit glue library. In fact, as I refactor the PostValidReservation test, it'll look much like the AutoFixture-driven tests from the previous article.

When turning concrete examples into properties, it helps to consider whether literal values are representative of an equivalence class. In other words, is that particular value important, or is there a wider set of values that would be just as good? For example, why is the test making a reservation 778 days in the future? Why not 777 or 779? Is the value 778 important? Not really. What's important is that the reservation is in the future. How far in the future actually isn't important. Thus, we can replace the literal value 778 with a parameter:

    [Property]
    public async Task PostValidReservation(PositiveInt days)
    {
        using var api = new LegacyApi();
        var expected = new ReservationDto
        {
            At = DateTime.Today.AddDays((int)days).At(19, 0)
                    .ToIso8601DateTimeString(),
            // The rest of the test...

Notice that I've replaced the literal value 778 with the method parameter days. The PositiveInt type is a type from FsCheck. It's a wrapper around int that guarantees that the value is positive. This is important because we don't want to make a reservation in the past. The PositiveInt type is a good choice because it's a type that's already available with FsCheck, and the framework knows how to generate valid values. Since it's a wrapper, though, the test needs to unwrap the value before using it. This is done with the (int)days cast.

Notice, also, that I've replaced the [Fact] attribute with the [Property] attribute that comes with FsCheck.Xunit. This is what enables FsCheck to automatically generate test cases and feed them to the test method. You can't always do this, as you'll see later, but when you can, it's a nice and succinct way to express a property-based test.

Already, the PostValidReservation test method is 100 test cases (the FsCheck default), rather than one.
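To make the equivalence-class idea concrete, here is a minimal, hand-rolled sketch that uses only System.Random instead of FsCheck. The names FutureDateSketch, ReservationTime, and HoldsForPositiveDays are hypothetical and not part of the restaurant code base; ReservationTime only imitates the DateTime.Today.AddDays(days).At(19, 0) expression from the test, and it takes today as an argument so that the check is deterministic.

```csharp
using System;

public static class FutureDateSketch
{
    // Hypothetical stand-in for DateTime.Today.AddDays(days).At(19, 0):
    // the date 'days' days after 'today', at 19:00.
    public static DateTime ReservationTime(DateTime today, int days)
    {
        return today.Date.AddDays(days).AddHours(19);
    }

    // Samples 'count' members of the equivalence class 'positive number of
    // days' and checks that each of them yields a time after 'today'.
    // A property-based testing framework automates this kind of loop.
    public static bool HoldsForPositiveDays(DateTime today, int seed, int count)
    {
        var rnd = new Random(seed);
        for (int i = 0; i < count; i++)
        {
            int days = rnd.Next(1, 10_000); // 1 to 9,999 inclusive
            if (ReservationTime(today, days) <= today)
                return false;
        }
        return true;
    }
}
```

Under these assumptions, 778 is just one sample among many that all satisfy the same condition, whereas a negative number of days falls outside the class because it produces a time in the past.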

What about Email and Name? Is it important for the test that these values are exactly katinka@example.com and Katinka Ingabogovinanana or might other values do? The answer is that it's not important. What's important is that the values are valid, and essentially any non-null string is. Thus, we can replace the literal values with parameters:

    [Property]
    public async Task PostValidReservation(
        PositiveInt days,
        StringNoNulls email,
        StringNoNulls name)
    {
        using var api = new LegacyApi();
        var expected = new ReservationDto
        {
            At = DateTime.Today.AddDays((int)days).At(19, 0)
                    .ToIso8601DateTimeString(),
            Email = email.Item,
            Name = name.Item,
            Quantity = 2
        };
        var response = await api.PostReservation(expected);

        response.EnsureSuccessStatusCode();
        var actual = await response.ParseJsonContent<ReservationDto>();
        Assert.Equal(expected, actual, new ReservationDtoComparer());
    }

The StringNoNulls type is another FsCheck wrapper, this time around string. It ensures that FsCheck will generate no null strings. This time, however, a cast isn't possible, so instead I had to pull the wrapped string out of the value with the Item property.

That's enough conversion to illustrate the process.

What about the literal values 19, 0, or 2? Shouldn't we parametrise those as well? While we could, that takes a bit more effort. The problem is that with these values, any old positive integer isn't going to work. For example, the number 19 is the hour component of the reservation time; that is, the reservation is for 19:00. Clearly, we can't just let FsCheck generate any positive integer, because most integers aren't going to work. For example, 5 doesn't work because it's in the early morning, and the restaurant isn't open at that time.

Like other property-based testing frameworks, FsCheck has an API that enables you to constrain value generation, but it doesn't work with the type-based approach I've used so far. Unlike PositiveInt, there's no TimeBetween16And21 wrapper type.
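As a rough sketch of what constrained generation can look like, assuming the FsCheck 2.x C# API (Gen.Choose, Arb.From, Prop.ForAll, and QuickCheckThrowOnFailure), the following generator only produces hours from 16 to 21. The class ConstrainedHourSketch and its members are hypothetical, and the range is borrowed from the imaginary TimeBetween16And21 name rather than from the restaurant's actual opening hours.

```csharp
using FsCheck;

public static class ConstrainedHourSketch
{
    // Assumption: 16 to 21 (both inclusive) is an illustrative range only.
    public static Gen<int> Hour
    {
        get { return Gen.Choose(16, 21); }
    }

    // Runs a trivial property against the constrained generator and
    // throws if FsCheck finds a counter-example.
    public static void Check()
    {
        Prop.ForAll(
            Arb.From(Hour),
            (int hour) => 16 <= hour && hour <= 21)
            .QuickCheckThrowOnFailure();
    }
}
```

Instead of asking the framework for a wrapper type in the method signature, the test constructs a generator explicitly and passes it to the property.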

You'll see what you can do to control how FsCheck generates values, but I'll use another test for that.

Parametrised unit test #

The PostValidReservation test is a high-level smoke test that gives you an idea about how the system works. It doesn't, however, reveal much about the possible variations in input. To drive such behaviour, I wrote and evolved the following state-based test:

    [Theory]
    [InlineData(1049, 19, 00, "juliad@example.net", "Julia Domna", 5)]
    [InlineData(1130, 18, 15, "x@example.com", "Xenia Ng", 9)]
    [InlineData( 956, 16, 55, "kite@example.edu", null, 2)]
    [InlineData( 433, 17, 30, "shli@example.org", "Shanghai Li", 5)]
    public async Task PostValidReservationWhenDatabaseIsEmpty(
        int days,
        int hours,
        int minutes,
        string email,
        string name,
        int quantity)
    {
        var at = DateTime.Now.Date + new TimeSpan(days, hours, minutes, 0);
        var db = new FakeDatabase();
        var sut = new ReservationsController(
            new SystemClock(),
            new InMemoryRestaurantDatabase(Grandfather.Restaurant),
            db);
        var expected = new Reservation(
            new Guid("B50DF5B1-F484-4D99-88F9-1915087AF568"),
            at,
            new Email(email),
            new Name(name ?? ""),
            quantity);

        await sut.Post(expected.ToDto());

        Assert.Contains(expected, db.Grandfather);
    }

This test gives more details, without exercising all possible code paths of the system. It's still a Facade Test that covers 'just enough' of the integration with underlying components to provide confidence that things work as they should. All the business logic is implemented by a class called MaitreD, which is covered by its own set of targeted unit tests.

While parametrised, this is still only four test cases, so perhaps you don't have sufficient confidence that everything works as it should. Perhaps, as I've outlined in the introductory article, it would help if we converted it to an FsCheck property.

Parametrised property #

I find it safest to refactor this parametrised test to a property in a series of small steps. This implies that I need to keep the [InlineData] attributes around for a while longer, removing one or two literal values at a time, turning them into randomly generated values.

From the previous test we know that the Email and Name values are almost unconstrained. This means that they are trivial in themselves to have FsCheck generate. That change, in itself, is easy, which is good, because combining an [InlineData]-driven [Theory] with an FsCheck property is enough of a mouthful for one refactoring step: