ploeh blog danish software design

Phased breaking changes

Giving advance warning before breaking client code.

I was recently listening to Jimmy Bogard on .NET Rocks! talking about 14 versions of Automapper. It made me reminisce on how I dealt with versioning of AutFixture, in the approximately ten years I helmed that project.

Jimmy has done open source longer than I have, and it sounds as though he's found a way that works for him. When I led AutoFixture, I did things a bit differently, which I'll outline in this article. In no way do I mean to imply that that way was better than Jimmy's. It may, however, strike a chord with a reader or two, so I present it in the hope that some readers may find the following ideas useful.

Scope #

This article is about versioning a code base. Typically, a code base contains 'modules' of a kind, and client code that relies on those modules. In object-oriented programming, modules are often called classes, but in general, what matters in this context is that some kind of API exists.

The distinction between API and client code is most clear if you're maintaining a reusable library, and you don't know the client developers, but even internal application code has APIs and client code. The following may still be relevant if you're working in a code base together with colleagues.

This article discusses code-level APIs. Examples include C# code that other .NET code can call, but may also apply to Java objects callable from Clojure, Haskell code callable by other Haskell code, etc. It does not discuss versioning of REST APIs or other kinds of online services. I have, in the past, discussed versioning in such a context, and refer you, among other articles, to REST implies Content Negotiation and Retiring old service versions.

Additionally, some of the techniques outlined here are specific to .NET, or even C#. If, as I suspect, JavaScript or other languages don't have those features, then these techniques don't apply. They're hardly universal.

Semantic versioning #

The first few years of AutoFixture, I didn't use a systematic versioning scheme. That changed when I encountered Semantic Versioning: In 2011 I changed AutoFixture versioning to Semantic Versioning. This forced me to think explicitly about breaking changes.

As an aside, in recent years I've encountered the notion that Semantic Versioning is somehow defunct. This is often based on the observation that Semantic Version 2.0.0 was published in 2013. Surely, if no further development has taken place, it's been abandoned by its maintainer? This may or may not be the case. Does it matter?

The original author, Tom Preston-Werner, may have lost interest in Semantic Versioning. Or perhaps it's simply done. Regardless of the underlying reasons, I find Semantic Versioning useful as it is. The fact that it hasn't changed since 2013 may be an indication that it's stable. After all, it's not a piece of software. It's a specification that helps you think about versioning, and in my opinion, it does an excellent job of that.

As I already stated, once I started using Semantic Versioning I began to think explicitly about breaking changes.

Advance warning #

Chapter 10 in Code That Fits in Your Head is about making changes to existing code bases. Unless you're working on a solo project with no other programmers, changes you make impact other people. If you can, avoid breaking other people's code. The chapter discusses some techniques for that, but also briefly covers how to introduce breaking changes. Some of that chapter is based on my experience with AutoFixture.

If your language has a way to retire an API, use it. In Java you can use the @Deprecated annotation, and in C# the equivalent [Obsolete] attribute. In C#, any client code that uses a method with the [Obsolete] attribute will now emit a compiler warning.

By default, this will only be a warning, and there's certainly a risk that people don't look at those. On the other hand, if you follow my advice from Code That Fits in Your Head, you should treat warnings as errors. If you do, however, those warnings emitted by [Obsolete] attributes will prevent your code from compiling. Or, if you're the one who just adorned a method with that attribute, you should understand that you may just have inconvenienced someone else.

Therefore, whenever you add such an attribute, do also add a message that tells client developers how to move on from the API that you've just retired. As an example, here's an (ASP.NET) method that handles GET requests for calendar resources:

[Obsolete("Use Get method with restaurant ID.")] [HttpGet("calendar/{year}/{month}")] public ActionResult LegacyGet(int year, int month)

To be honest, that message may be a bit on the terse side, but the point is that there's another method on the same class that takes an additional restaurantId. While I'm clearly not perfect, and should have written a more detailed message, the point is that you should make it as easy as possible for client developers to deal with the problem that you've just given them. My rules for exception messages also apply here.

It's been more than a decade, but as I remember it, in the AutoFixture code base, I kept a list of APIs that I intended to deprecate at the next major revision. In other words, there were methods I considered fair use in a particular major version, but that I planned to phase out over multiple revisions. There were, however, a few methods that I immediately adorned with the [Obsolete] attribute, because I realized that they created problems for people.

The plan, then, was to take it up a notch when releasing a new major version. To be honest, though, I never got to execute the final steps of the plan.

Escalation #

By default, the [Obsolete] attribute emits a warning, but by supplying true as a second parameter, you can turn the warning into a compiler error.

[Obsolete("Use Get method with restaurant ID.", true)] [HttpGet("calendar/{year}/{month}")] public ActionResult LegacyGet(int year, int month)

You could argue that for people who treat warnings as errors, even a warning is a breaking change, but there can be no discussion that when you flip that bit, this is certainly a breaking change.

Thus, you should only escalate to this level when you publish a new major release.

Code already compiled against previous versions of your deprecated code may still work, but that's it. Code isn't going to compile against an API deprecated like that.

That's the reason it's important to give client developers ample warning.

With AutoFixture, I personally never got to that point, because I'm not sure that I arrived at this deprecation strategy until major version 3, which then had a run from early 2013 to late 2017. In other words, the library had a run of 4½ years without breaking changes. And when major version 4 rolled around, I'd left the project.

Even after setting the error flag to true, code already compiled against earlier versions may still be able to run against newer binaries. Thus, you still need to keep the deprecated API around for a while longer. Completely removing a deprecated method should only happen in yet another major version release.

Conclusion #

To summarize, deprecating an API could be considered a breaking change. If you take that position, imagine that your current Semantic Version is 2.44.2. Deprecating a method would then required that you release version 3.0.0.

In any case, you make some more changes to your code, reaching version 3.5.12. For various reasons, you decide to release version 4.0.0, in which you can also turn the error flag on. EVen so, the deprecated API remains in the library.

Only in version 5.0.0 can you entirely delete it.

Depending on how often you change major versions, this whole process may take years. I find that appropriate.

Appeal to aithority

No, it's not a typo.

A few months ago, I was listening to a semi-serious programme from the Danish public service radio. This is a weekly programme about language that I always listen to as a podcast. The host is the backbone of the show, but in addition to new guests each week, he's flanked by a regular expert who is highly qualified to answer questions about etymology, grammar, semantics, etc.

In the episode I'm describing, the expert got a question that a listener had previously emailed. To answer, (s)he started like this (and I'm paraphrasing): I don't actually know the answer to this question, so I did what everyone does these days, when they don't know the answer: I asked ChatGPT.

(S)he then proceeded to read aloud what ChatGPT had answered, and concluded with some remarks along the lines that that answer sounded quite plausible.

If I used ten to twenty hours of my time re-listening to every episode from the past few months, I could find the particular episode, link to it, transcribe the exact words, and translate them to English to the best of my abilities. I am, however, not going to do that. First, I'm not inclined to use that much time writing an essay on which I make no income. Second, my aim is not to point fingers at anyone in particular, so I'm being deliberately vague. As you may have noticed, I've even masked the person's sex. Not because I don't remember, but to avoid singling out anyone.

The expert in question is a regular of the programme, and I've heard him or her give good and knowledgeable answers to many tricky questions. As far as I could tell, this particular question was unanswerable, along the lines of why is 'table' called 'table' rather than 'griungth'?

The correct answer would have been I don't know, and I don't think anyone else does.

Being a veteran of the programme, (s)he must have realized on beforehand that this wouldn't be good radio, and instead decided to keep it light-hearted.

I get that, and I wouldn't be writing about it now if it doesn't look like an example of an increasing trend.

People are using large language models (LLMs) to advocate for their positions.

Appeal to authority #

Appeal to authority is no new technique in discourse.

"You may also, should it be necessary, not only twist your authorities, but actually falsify them, or quote something which you have invented entirely yourself. As a rule, your opponent has no books at hand, and could not use them if he had."

This seems similar to how people have begun using so-called artificial intelligence (AI) to do their arguing for them. We may, instead, call this appeal to aithority.

Epistemological cul-de-sac #

We've all seen plenty of examples of LLMs being wrong. I'm not going to tire you with any of those here, but I did outline my experience with GitHub Copilot in 2022. While these technologies may have made some advances since then, they still make basic mistakes.

Not only that. They're also non-deterministic. Ask a system a question once, and you get one answer. Ask the same question later, and you may get a variation of the same answer, or perhaps even a contradictory answer. If someone exhibits an answer they got from an LLM as an argument in their favour, consider that they may have been asking it five or six times before they received an answer they liked.

Finally, you can easily ask leading questions. Even if someone shows you a screen shot of a chat with an LLM, they may have clipped prior instructions that nudge the system towards a particular bias.

I've seen people post screen shots that an LLM claims that F# is a better programming language than C#. While I'm sympathetic to that claim, that's not an argument. Just like how you feel about something isn't an argument.

This phenomenon seems to be a new trend. People use answers from LLMs as evidence that they are right. I consider this an epistemological dead end.

Real authority #

Regular readers of this blog may have noticed that I often go to great lengths to track down appropriate sources to cite. I do this for several reasons. One is simply out of respect for the people who figured out things before us. Another reason is to strengthen my own arguments.

It may seem that I, too, appeal to authority. Indeed, I do. When not used in in the way Schopenhauer describes, citing authority is a necessary epistemological shortcut. If someone who knows much about a particular subject has reached a conclusion based on his or her work, we may (tentatively) accept the conclusion without going through all the same work. As Carl Sagan said, "If you wish to make an apple pie from scratch, you must first invent the universe." You can't do all basic research by yourself. At some point, you'll have to take expert assertions at face value, because you don't have the time, the education, or the money to build your own Large Hadron Collider.

Don't blindly accept an argument on the only grounds that someone famous said something, but on the other hand, there's no reason to dismiss out of hand what Albert Einstein had to say about gravity, just because you've heard that you shouldn't accept an argument based on appeal to authority.

Conclusion #

I'm concerned that people increasingly seem to resort to LLMs to argue a case. The LLMs says this, so it must be right.

Sometimes, people will follow up their arguments with of course, it's just an AI, but... and then proceed to unfold their preferred argument. Even if this seems as though the person is making a 'real' argument, starting from an LLM answer establishes a baseline to a discussion. It still lends an aura of truth to something that may be false.

Reactive monad

IObservable<T> is (also) a monad.

This article is an instalment in an article series about monads. While the previous articles showed, in great detail, how to turn various classes into monads, this article mostly serves as a place-holder. The purpose is only to point out that you don't have to create all monads yourself. Sometimes, they come as part of a reusable library.

Rx define such libraries, and IObservable<T> forms a monad. Reactive Extensions for .NET define a SelectMany method for IObservable<T>, so if source is an IObservable<int>, you can translate it to IObservable<char> like this:

IObservable<char> dest = source.SelectMany(i => Observable.Repeat('x', i));

Since the SelectMany method is, indeed, called SelectMany and has the signature

public static IObservable<TResult> SelectMany<TSource, TResult>( this IObservable<TSource> source, Func<TSource, IObservable<TResult>> selector)

you can also use C#'s query syntax:

IObservable<char> dest = from i in source from x in Observable.Repeat('x', i) select x;

In both of the above examples, I've explicitly declared the type of dest instead of using the var keyword. There's no practical reason to do this; I only did it to make the type clear to you.

Left identity #

As I've already written time and again, a few test cases don't prove that any of the monad laws hold, but they can help illustrate what they imply. For example, here's an illustration of the left-identity law, written as a parametrized xUnit.net test:

[Theory] [InlineData(1)] [InlineData(2)] [InlineData(3)] public async Task LeftIdentity(int a) { IObservable<char> h(int i) => Observable.Repeat('x', i); IList<char> left = await Observable.Return(a).SelectMany(h).ToList(); IList<char> right = await h(a).ToList(); Assert.Equal(left, right); }

Not only does the System.Reactive library define monadic bind in the form of SelectMany, but also return, with the aptly named Observable.Return function. .NET APIs often forget to do so explicitly, which means that I often have to go hunting for it, or guessing what the developers may have called it. Not here; thank you, Rx team.

Right identity #

In the same spirit, we may write another test to illustrate the right-identity law:

[Theory] [InlineData("foo")] [InlineData("bar")] [InlineData("baz")] public async Task RightIdentity(string a) { IObservable<char> f(string s) => s.ToObservable(); IObservable<char> m = f(a); IList<char> left = await m.SelectMany(Observable.Return).ToList(); IList<char> right = await m.ToList(); Assert.Equal(left, right); }

In both this and the previous test, you can see that the test has to await the observables in order to verify that the resulting collections are identical. Clearly, if you're instead dealing with infinite streams of data, you can't rely on such a simplifying assumption. For the general case, you must instead turn to other (proof) techniques to convince yourself that the laws hold. That's not my agenda here, so I'll skip that part.

Associativity #

Finally, we may illustrate the associativity law like this:

[Theory] [InlineData("foo")] [InlineData("123")] [InlineData("4t2")] public async Task Associativity(string a) { IObservable<char> f(string s) => s.ToObservable(); IObservable<byte> g(char c) { if (byte.TryParse(c.ToString(), out var b)) return Observable.Return(b); else return Observable.Empty<byte>(); } IObservable<bool> h(byte b) => Observable.Repeat(b % 2 == 0, b); IObservable<char> m = f(a); IList<bool> left = await m.SelectMany(g).SelectMany(h).ToList(); IList<bool> right = await m.SelectMany(x => g(x).SelectMany(h)).ToList(); Assert.Equal(left, right); }

This test composes three observable-producing functions in two different ways, to verify that they produce the same values.

The first function, f, simply turns a string into an observable stream. The string "foo" becomes the stream of characters 'f', 'o', 'o', and so on.

The next function, g, tries to parse the incoming character as a number. I've chosen byte as the data type, since there's no reason trying to parse a value that can, at best, be one of the digits 0 to 9 into a full 32-bit integer. A byte is already too large. If the character can be parsed, it's published as a byte value; if not, an empty stream of data is returned. For example, the character stream 'f', 'o', 'o' results in three empty streams, whereas the stream 4, t, 2 produces one singleton stream containing the byte 4, followed by an empty stream, followed again by a stream containing the single number 2.

The third and final function, h, turns a number into a stream of Boolean values; true if the number is even, and false if it's odd. The number of values is equal to the number itself. Thus, when composed together, "123" becomes the stream false, true, true, false, false, false. This is true for both the left and the right list, even though they're results of two different compositions.

Conclusion #

The point of this article is mostly that monads are commonplace. While you may discover them in your own code, they may also come in a reusable library. If you already know C# LINQ based off IEnumerable<T>, parts of Rx will be easy for you to learn. After all, it's the same abstraction, and familiar abstractions make code readable.

Next: The IO monad.

Easier encapsulation with static types

A metaphor.

While I'm still struggling with the notion that dynamically typed languages may have compelling advantages, I keep coming back to the benefits of statically typed languages. One such benefit is how it enables the communication of contracts, as I recently discussed in Encapsulating rod-cutting.

As usual, I base my treatment of encapsulation on my reading of Bertrand Meyer's seminal Object-Oriented Software Construction. A major aspect of encapsulation is the explicit establishment of contracts. What is expected of client code before it can invoke an operation (preconditions)? What is guaranteed to be true after the operation completes (postconditions)? And what is always true of a particular data structure (invariants)?

Contracts constitute the practical part of encapsulation. A contract can give you a rough sense of how well-encapsulated an API is: The more statements you can utter about the contract, the better encapsulation. You may even be able to take all those assertions about the contract and implement them as property-based tests. In other words, if you can think of many properties to write as tests, the API in question probably has good encapsulation. If, on the other hand, you can't think of a single precondition, postcondition, or invariant, this may indicate that encapsulation is lacking.

Contracts are the practical part of encapsulation. The overall notion provides guidance of how to achieve encapsulation. Specific contracts describe what is possible, and how to successfully interact with an API. Clearly, the what and how.

They don't, however, explain why encapsulation is valuable.

Why encapsulate? #

Successful code bases are big. Such a code base rarely fits in your head in its entirety. And the situation is only exacerbated by multiple programmers working concurrently on the code. Even if you knew most of the code base by heart, your team members are changing it, and you aren't going to be aware of all the modifications.

Encapsulation offers a solution to this problem. Instead of knowing every detail of the entire code base, encapsulation should enable you to interact with an API (originally, an object) without knowing all the implementation details. This is the raison d'être of contracts. Ideally, knowing the contract and the purpose of an object and its methods should be enough.

Imagine that you've designed an API with a strong contract. Is your work done? Not yet. Somehow, you'll have to communicate the contract to all present and future client developers.

How do you convey a contract to potential users? I can think of a few ways. Good names are important, but only skin-deep. You can also publish documentation, or use the type system. The following metaphor explores those two alternatives.

Doing a puzzle #

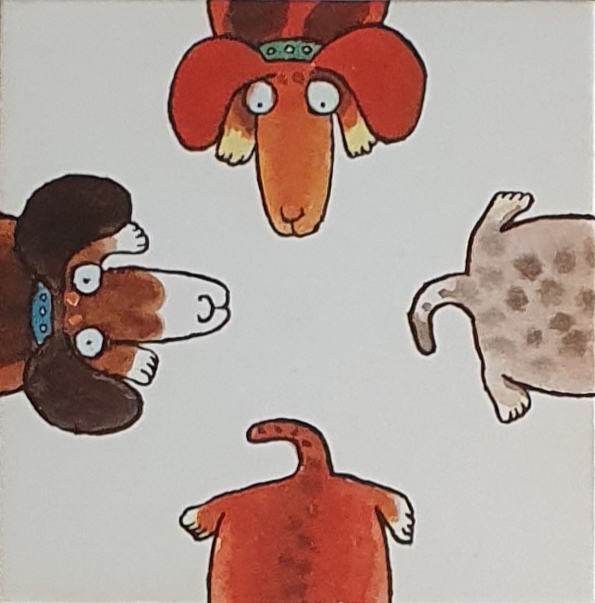

When I was a boy, I had a puzzle called Das verflixte Hunde-Spiel, which roughly translates to the confounded dog game. I've previously described the game and an algorithm for solving it, but that's not my concern here. Rather, I'd like to discuss how one might abstract the information carried by each tile.

As the picture suggests, the game consists of nine square tiles, each with two dog heads and two tails. The objective of the puzzle is to lay all nine tiles in a three-by-three grid such that all the heads fit the opposing tails. The dogs come in four different colour patterns, and each head must fit a tail of the same pattern.

It turns out that there are umpteen variations of this kind of puzzle. This one has cartoon dogs, but you can find similar games with frogs, cola bottles, playing card suits, trains, ladybirds, fast food, flowers, baseball players, owls, etc. This suggests that a generalization may exist. Perhaps an abstraction, even.

"Abstraction is the elimination of the irrelevant and the amplification of the essential"

How to eliminate the irrelevant and amplify the essential of a tile?

To recapitulate, a single tile looks like this:

In a sense, we may regard most of the information on such a tile as 'implementation details'. In a code metaphor, imagine looking at a tile like this as being equivalent to looking at the source code of a method or function (i.e. API). That's not the essence we need to correctly assemble the puzzle.

Imagine that you have to lay down the tiles according to a known solution. Since you already know the solution, this task only involves locating and placing each of the nine tiles. In this case, there are only nine tiles, each with four possible rotations, so if you already know what you're looking for, that is, of course, a tractable endeavour.

Now imagine that you'd like to undertake putting together the tiles without having to navigate by the full information content of each tile. In programming, we often need to do this. We have to identify objects that are likely to perform some subtask for us, and we have to figure out how to interact with such an object to achieve our goals. Preferably, we'd like to be able to do this without having to read all the source code of the candidate object. Encapsulation promises that this should be possible.

The backside of the tiles #

If we want to eliminate the irrelevant, we have to hide the information on each tile. As a first step, consider what happens if we flip the tiles around so that we only see their backs.

Obviously, each backside is entirely devoid of information, which means that we're now flying blind. Even if we know how to solve the puzzle, our only recourse is to blindly pick and rotate each of the nine tiles. As the previous article calculated, when picking at random, the odds of arriving at any valid solution is 1 to 5,945,425,920. Not surprisingly, total absence of information doesn't work.

We already knew that, because, while we want to eliminate the irrelevant, we also must amplify the essential. Thus, we need to figure out what that might be.

Perhaps we could write the essential information on the back of each tile. In the metaphor, this would correspond to writing documentation for an API.

Documentation #

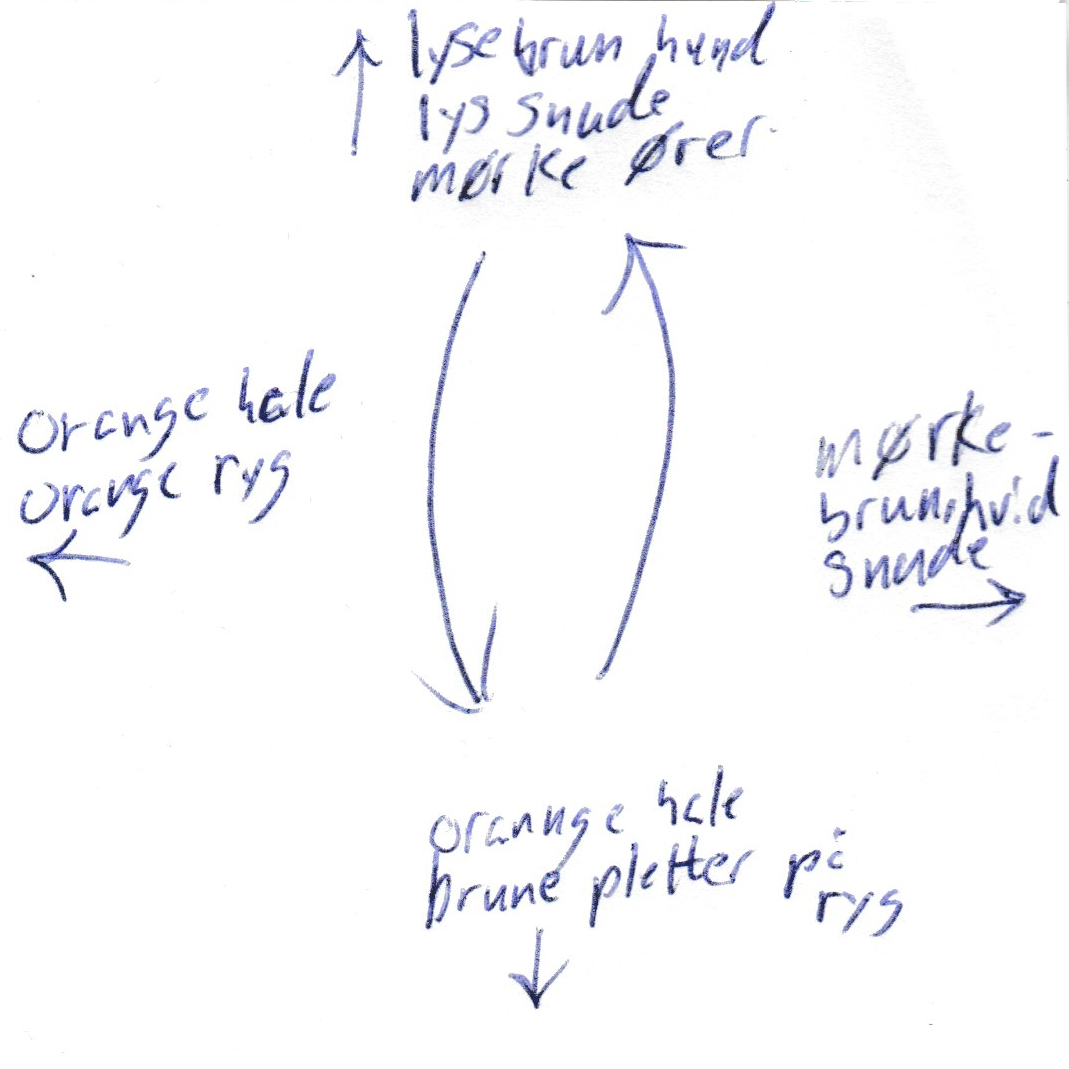

To continue the metaphor, I asked various acquaintances to each 'document' a title. I deliberately only gave them the instruction that they should enable me to assemble the puzzle based on what was on the back of each tile. Some asked for additional directions, as to format, etc., but I refused to give any. People document code in various different ways, and I wanted to capture similar variation. Let's review some of the documentation I received.

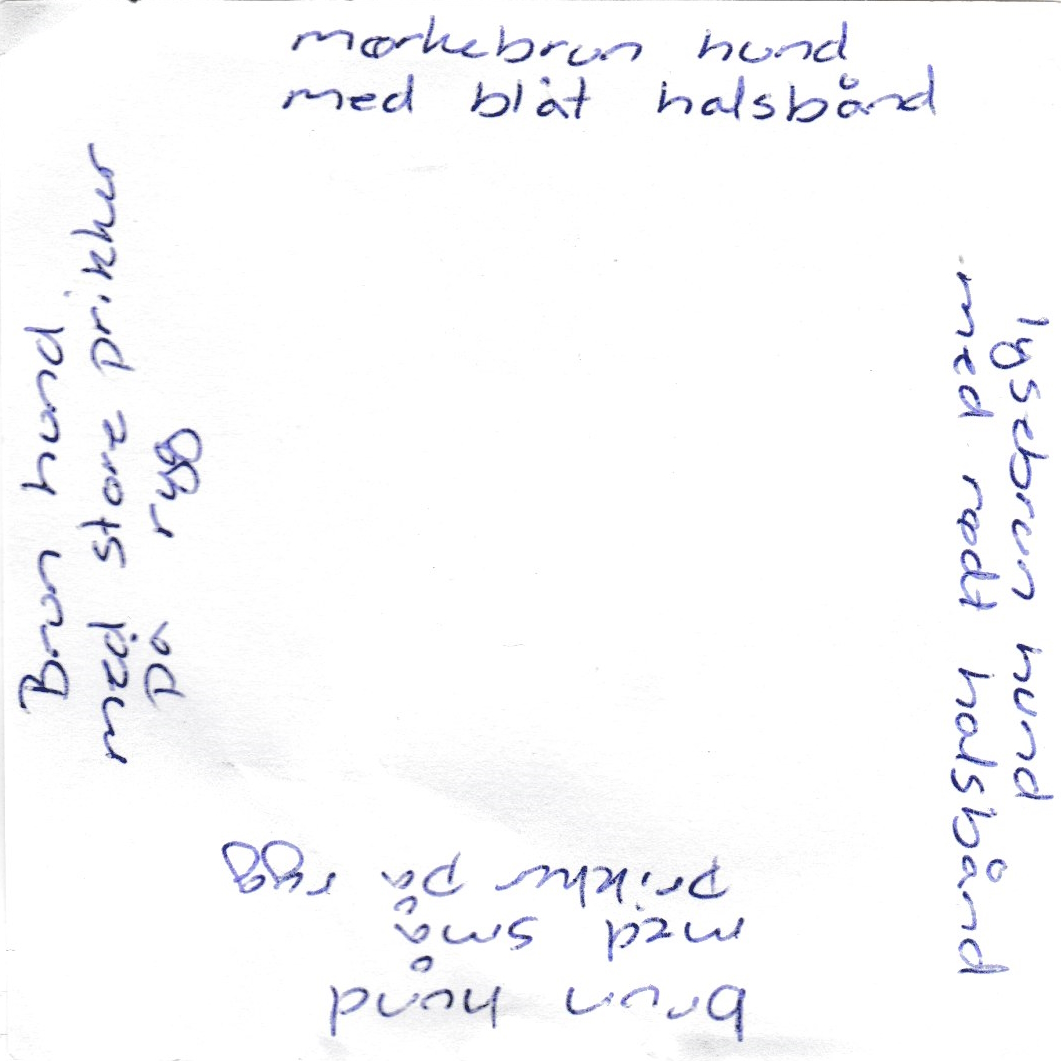

Since I asked around among acquaintances, all respondents were Danes, and some chose to write the documentation in Danish, as is the case with this one.

Unless you have an explicit, enforced policy, you might run into a similar situation in software documentation. I've seen more than one example of software documentation written in Danish, simply because the programmer who wrote it didn't consider anything else than his or her native language. I'm sure most Europeans have similar experiences.

The text on the tile says, from the top and clockwise:

- light brown dog/light snout/dark ears

- dark brown, white/snout

- orange tail/brown spots on/back

- orange tail/orange back

Notice the disregard for capitalization rules or punctuation, a tendency among programmers that I've commented upon in Code That Fits in Your Head.

In addition to the text, the back of the above tile also includes six arrows. Four of them ought to be self-evident, but can you figure out what the two larger arrows indicate?

It turns out that the person writing this piece of documentation eventually realized that the description should be mirrored, because it was on the backside of the tile. To be fair to that person, I'd asked everyone to write with a permanent marker or pen, so correcting a mistake involved either a 'hack' like the two arrows, or starting over from scratch.

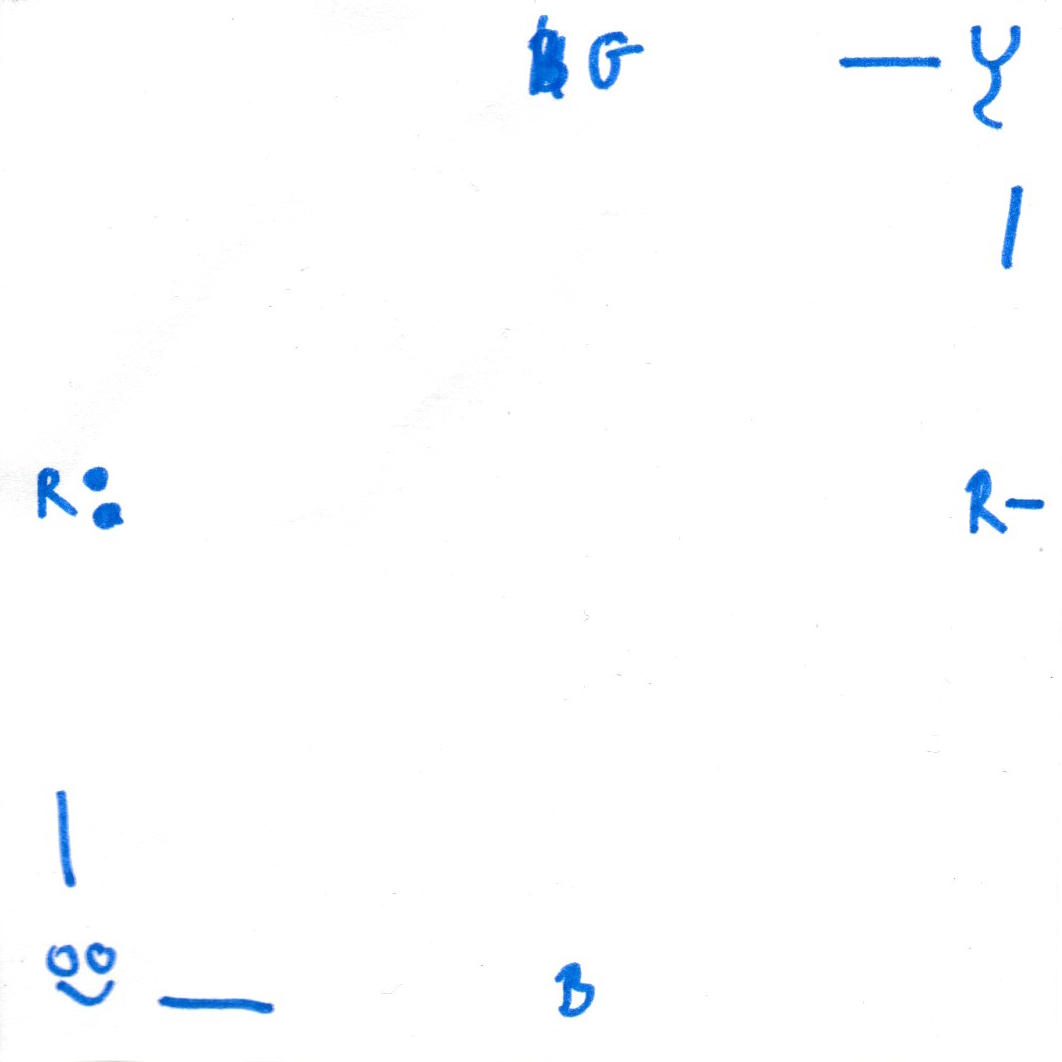

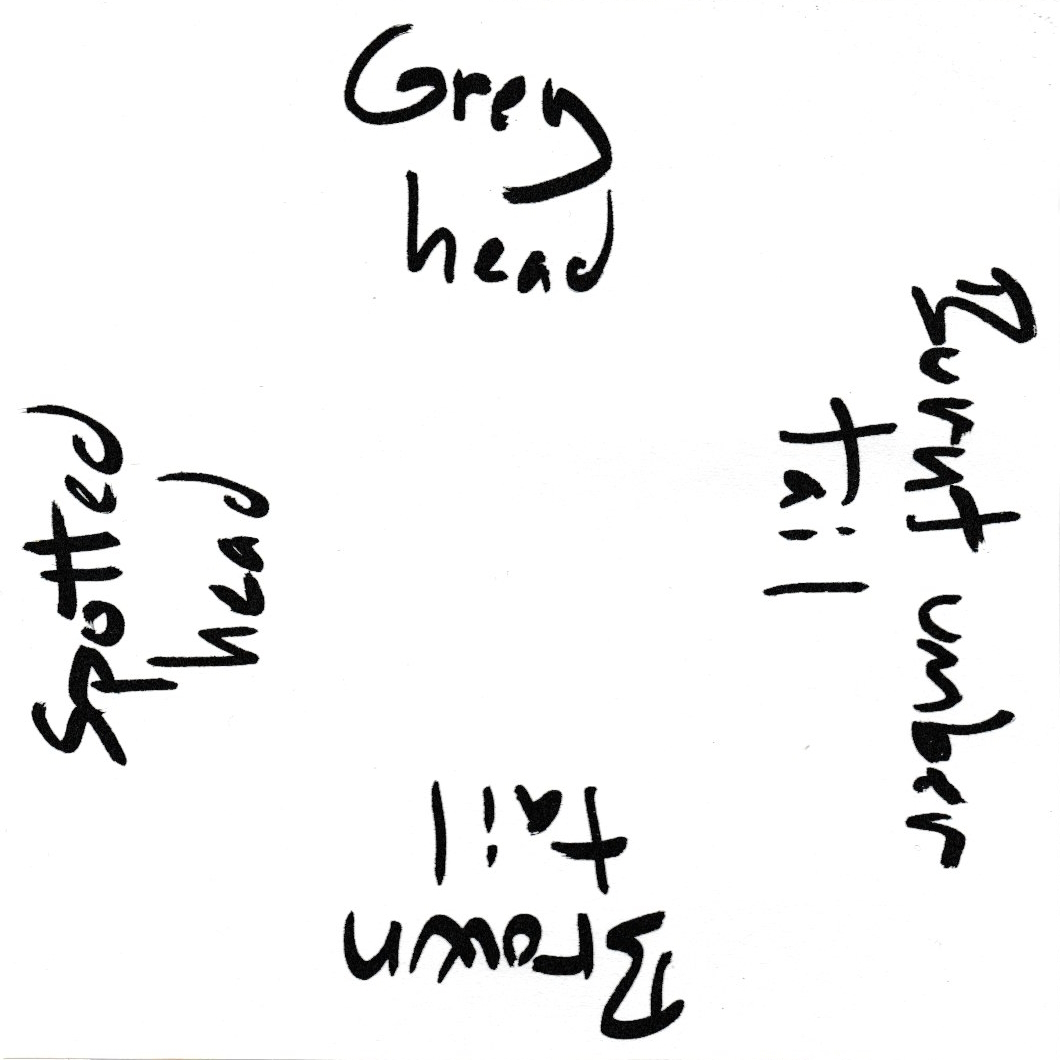

Let's look at some more 'documentation'. Another tile looks like this:

At first glance, I thought those symbols were Greek letters, but once you look at it, you start to realize what's going on. In the upper right corner, you see a stylized back and tail. Likewise, the lower left corner has a stylized face in the form of a smiley. The lines then indicate that the sides indicated by a corner has a head or tail.

Additionally, each side is encoded with a letter. I'll leave it as an exercise for the reader to figure out what G and B indicate, but also notice the two examples of a modified R. The one to the right indicates red with spots, and the other one uses the minus symbol to indicate red without spots.

On the one hand, this example does an admirable job of eliminating the irrelevant, but you may also find that it errs on the side of terseness. At the very least, it demands of the reader that he or she is already intimately familiar with the overall problem domain. You have to know the game well enough to be able to figure out that R- probably means red without spots.

Had this been software documentation, we might have been less than happy with this level of information. It may meet formal requirements, but is perhaps too idiosyncratic or esoteric.

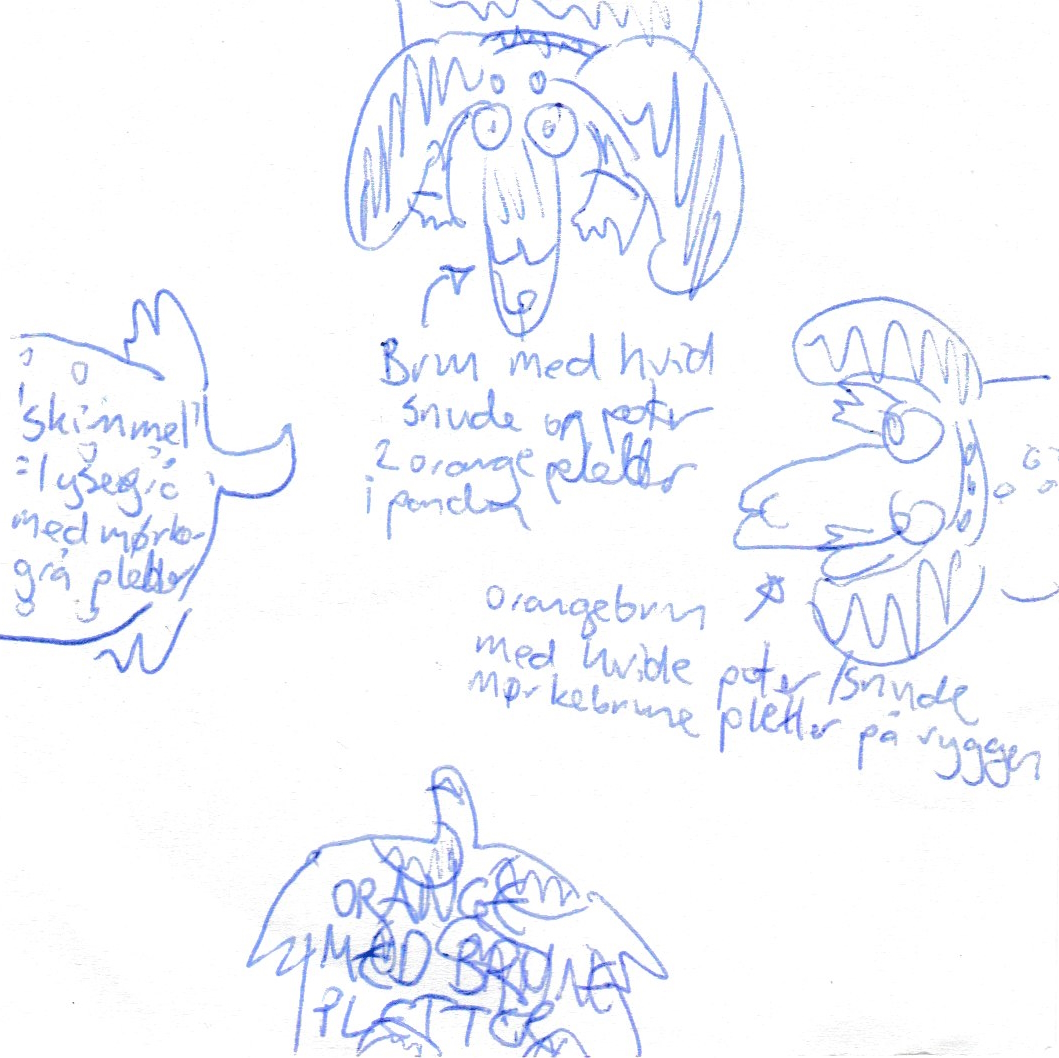

Be that as it may, it's also possible to err on the other side.

In this example, the person writing the documentation essentially copied and described every detail on the front of the tile. Having no colours available, the person instead chose to describe in words the colour of each dog. Metaphorically speaking, the documentation replicates the implementation. It doesn't eliminate any irrelevant detail, and thereby it also fails to amplify the essential.

Here's another interesting example:

The text is in Danish. From the top clockwise, it says:

- dark brown dog with blue collar

- light brown dog with red collar

- brown dog with small spots on back

- Brown dog with big spots on back

Notice how the person writing this were aware that a tile has no natural up or down. Instead, each side is described with letters facing up when that side is up. You have to rotate the documentation in order to read all four sides. You may find that impractical, but I actually consider that to represent something essential about each tile. To me, this is positive.

Even so, an important piece of information is missing. It doesn't say which sides have heads, and which ones have tails.

Finally, here's one that, in my eyes, amplifies the essential and eliminates the irrelevant:

Like the previous example, you have to rotate the documentation in order to read all four sides, but the text is much terser. If you ask me, Grey head, Burnt umber tail, Brown tail, and Spotted head amplifies the essential and eliminates everything else.

Notice, however, how inconsistent the documentation is. People chose various different ways in their attempt to communicate what they found important. Some people inadvertently left out essential information. Other people provided too much information. Some people never came through, so in a few cases, documentation was entirely absent. And finally, I've hinted at this already, most people forgot to 'mirror' the information, but a few did remember.

All of this has direct counterparts in software documentation. The level of detail you get from documentation varies greatly, and often, the information that I actually care about is absent: Can I call this method with a negative number? Does the input string need to be formatted in a particular way? Does the method ever return null? Which exceptions may it throw?

I'm not against documentation, but it has limitations. As far as I can tell, though, that's your only option if you're working in a dynamically typed language.

Static types with limited expression #

Can you think of a way to constrain which puzzle pieces fit together with other pieces?

That's how jigsaw puzzles work. As a first attempt, we may try to cut out out the pieces like this:

This does help some, because when presented with the subtask of having to locate and find the next piece, at least we can't rotate the next piece in four different positions. Instead, we have only two options. Perhaps we'll choose to lay down the next piece like this:

You may also decide to rotate the right piece ninety degrees clockwise, but those are your only two rotation options.

We may decide to encode the shape of the pieces so that, say, the tabs indicate heads and the indentations indicate tails. This, at least, prevents us from joining head with head, or tail with tail.

This strikes me as an apt metaphor for C, or how many programmers use the type systems of C# or Java. It does prevent some easily preventable mistakes, but the types still don't carry enough information to enable you to identify exactly the pieces you need.

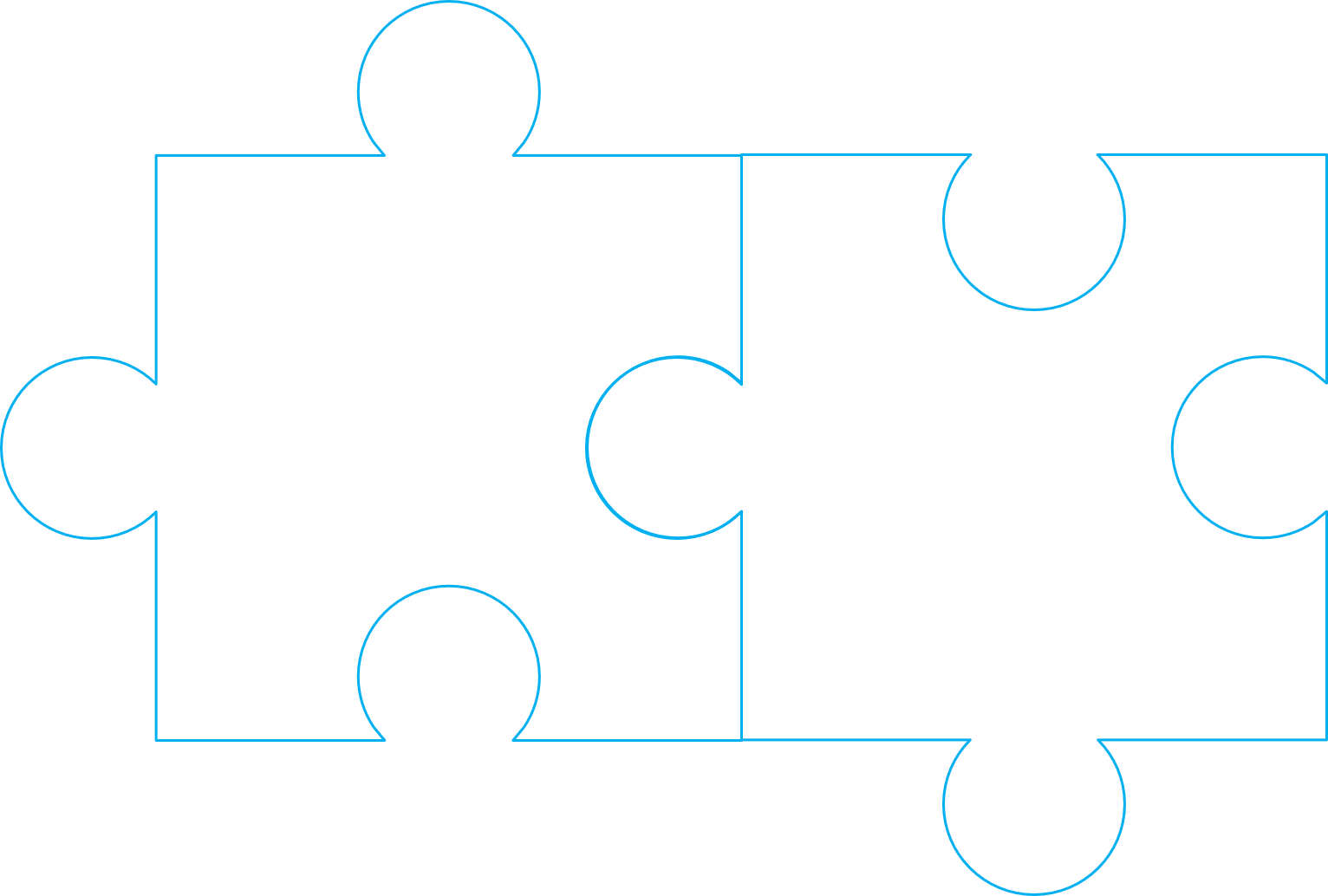

More expressive static types #

Static type systems come in various forms, and some are more expressive than others. To be honest, C#'s type system does come with good expressive powers, although it tends to require much ceremony. As far as I can tell, Java's type system is on par with C#. Let's assume that we either take the time to jump through the hoops that make these type systems expressive, or that we're using a language with a more expressive type system.

In the puzzle metaphor, we may decide to give a tile this shape:

Such a shape encodes all the information that we need, because each tab or indentation has a unique shape. We may not even have to remember exactly what a square indentation represents. If we're presented with the above tile and asked to lay down a compatible tile, we have to find one with a square tab.

Encoding the essential information into tile shapes enables us to not only prevent mistakes, but identify the correct composition of all the tiles.

For years, I've thought about static types as shapes of objects or functions. For practical purposes, static types can't express everything an operation may do, but I find it useful to use a good type system to my advantage.

Code examples #

You may find this a nice metaphor, and still fail to see how it translates to actual code. I'm not going to go into details here, but rather point to existing articles that give some introductions.

One place to start is to refactor from primitive obsession to domain models. Just wrapping a string or an integer in a predicative type helps communicate the purpose and constraints of a data type. Consider a constructor like this:

public Reservation( Guid id, DateTime at, Email email, Name name, NaturalNumber quantity)

While hardly sophisticated, it already communicates much information about preconditions for creating a Reservation object. Some of the constituent types (Guid and DateTime) are built in, so likely well-known to any developer working on a relevant code base. If you're wondering whether you can create a reservation with a negative quantity, the types already answer that.

Languages with native support for sum types let you easily model mutually exclusive, heterogeneous closed type hierarchies, as shown in this example:

type PaymentService = { Name : string; Action : string } type PaymentType = | Individual of PaymentService | Parent of PaymentService | Child of originalTransactionKey : string * paymentService : PaymentService

And if your language doesn't natively support sum types, you can emulate them with the Visitor design pattern.

You can, in fact, do some quite sophisticated tricks even with .NET's type system.

Conclusion #

People often argue about static types with the assumption that their main use is to prevent mistakes. They can help do that, too, but I also find static types an excellent communication medium. The benefits of using a static type system to model contracts is that, when a type system is already part of a language, it's a consistent, formalized framework for communication. Instead of inconsistent and idiosyncratic documentation, you can embed much information about a contract in the types of an API.

And indeed, not only can the types help communicate pre- and postconditions. The type checker also prevents errors.

A sufficiently sophisticated type system carries more information that most people realize. When I write Haskell code, I often need to look up a function that I need. Contrary to other languages, I don't try to search for a function by guessing what name it might have. Rather, the Hoogle search engine enables you to search for a function by type.

Types are shapes, and shapes are like outlines of objects. Used well, they enable you to eliminate the irrelevant, and amplify the essential information about an API.

In defence of multiple WiP

Programming isn't like factory work.

I was recently stuck on a programming problem. Specifically, part two of an Advent of Code puzzle, if you must know. As is my routine, I went for a run, which always helps to get unstuck. During the few hours away from the keyboard, I'd had a new idea. When I returned to the computer, I had my new algorithm implemented in about an hour, and it calculated the correct result in sub-second time.

I'm not writing this to brag. On the contrary, I suck at Advent of Code (which is a major reason that I do it). The point is rather that programming is fundamentally non-linear in effort. Not only are some algorithms orders of magnitudes faster than other algorithms, but it's also the case that the amount of time you put into solving a problem doesn't always correlate with the outcome.

Sometimes, the most productive way to solve a problem is to let it rest and go do something else.

One-piece flow #

Doesn't this conflict with the ideal of one-piece flow? That is, that you should only have one piece of work in progress (WiP).

Yes, it does.

It's not that I don't understand basic queue theory, haven't read The Goal, or that I'm unaware of the compelling explanations given by, among other people, Henrik Kniberg. I do, myself, lean (pun intended) towards lean software development.

I only offer the following as a counterpoint to other voices. As I've described before, when I seem to disagree with the mainstream view on certain topics, the explanation may rather be that I'm concerned with a different problem than other people are. If your problem is a dysfunctional organization where everyone have dozens of tasks in progress, nothing ever gets done because it's considered more important to start new work items than completing ongoing work, where 'utilization' is at 100% because of 'efficiency', then yes, I'd also recommend limiting WiP.

The idea in one-piece flow is that you're only working on one thing at a time.

Perhaps you can divide the task into subtasks, but you're only supposed to start something new when you're done with the current job. Compared to the alternative of starting a lot concurrent tasks in order to deal with wait times in the system, I agree with the argument that this is often better. One-piece flow often prompts you to take a good, hard look at where and how delays occur in your process.

Even so, I find it ironic that most of 'the Lean squad' is so busy blaming Taylorism for everything that's wrong with many software development organizations, only to go advocate for another management style rooted in factory work.

Programming isn't manufacturing.

Urgent or important #

As Eisenhower quoted an unnamed college president:

"I have two kinds of problems, the urgent and the important. The urgent are not important, and the important are never urgent."

It's hard to overstate how liberating it can be to ignore the urgent and focus on the important. Over decades, I keep returning to the realization that you often reach the best solutions to software problems by letting them stew.

I'm sure I've already told the following story elsewhere, but it bears repeating. Back in 2009 I started an open-source project called AutoFixture and also managed to convince my then-employer, Safewhere, to use it in our code base.

Maintaining or evolving AutoFixture wasn't my job, though. It was a work-related hobby, so nothing related to it was urgent. When in the office, I worked on Safewhere code, but biking back and forth between home and work, I thought about AutoFixture problems. Often, these problems would be informed by how we used it in Safewhere. My point is that the problems I was thinking about were real problems that I'd encountered in my day job, not just something I'd dreamt up for fun.

I was mostly thinking about API designs. Given that this was ideally a general-purpose open-source project, I didn't want to solve narrow problems with specific solutions. I wanted to find general designs that would address not only the immediate concerns, but also other problems that I had yet to discover.

Many an evening I spent trying out an idea I'd had on my bicycle. Often, it turned out that the idea wouldn't work. While that might be, in one sense, dismaying, on the other hand, it only meant that I'd learned about yet another way that didn't work.

Because there was no pressure to release a new version of AutoFixture, I could take the time to get it right. (After a fashion. You may disagree with the notion that AutoFixture is well-designed. I designed its APIs to the best of my abilities during the decade I lead the project. And when I discovered property-based testing, I passed on the reins.)

Get there earlier by starting later #

There's a 1944 science fiction short story by A. E. van Vogt called Far Centaurus that I'm now going to spoil.

In it, four astronauts embark on a 500-year journey to Alpha Centauri, using suspended animation. When they arrive, they discover that the system is long settled, from Earth.

During their 500 years en route, humans invented faster space travel. Even though later generations started later, they arrived earlier. They discovered a better way to get from a to b.

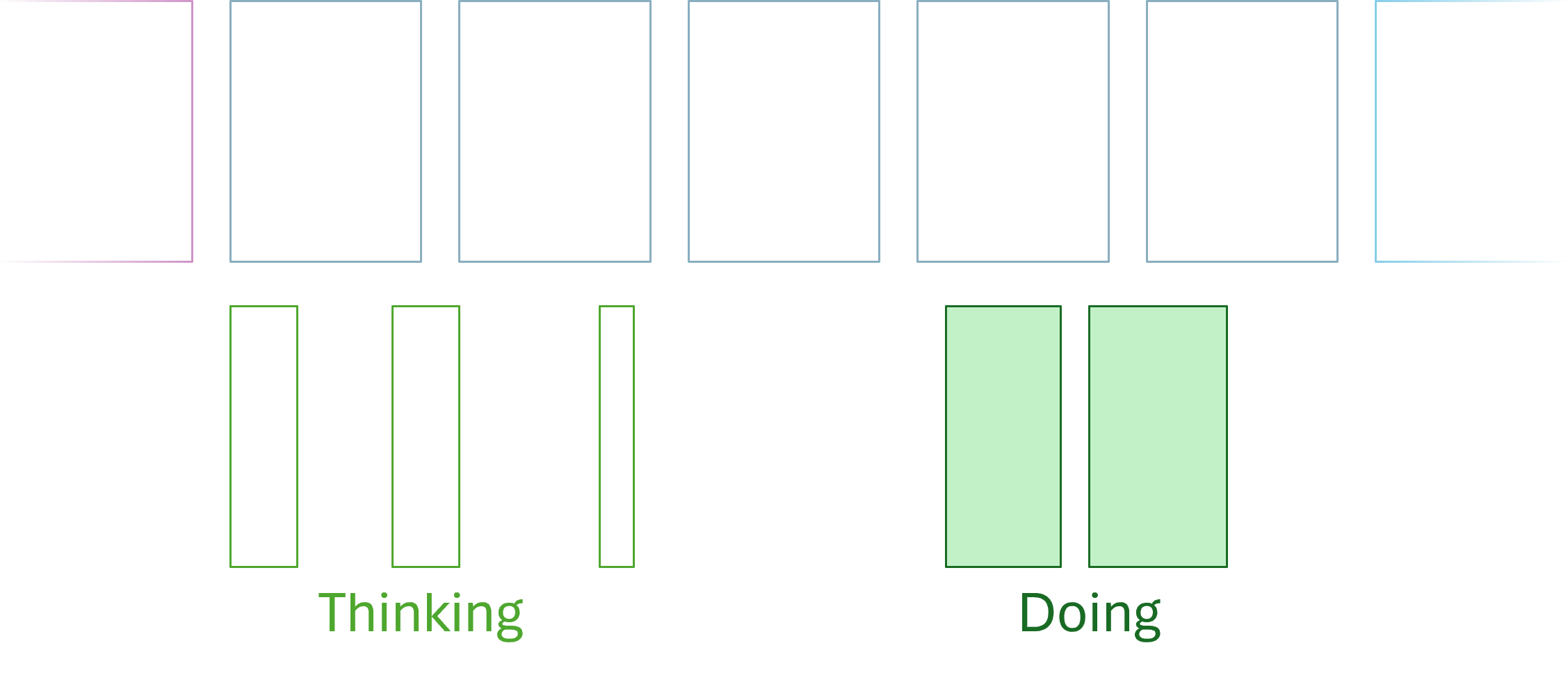

Compared to one-piece flow, we may illustrate this metaphor like this:

When presented with a problem, we don't start working on it right away. Or, we do, but the work we do is thinking rather than typing. We may even do some prototyping at that stage, but if no good solution presents itself, we put away the problem for a while.

We may return to the problem from time to time, and what may happen is that we realize that there's a much better, quicker way of accomplishing the goal than we first believed (as, again, recently happened to me). Once we have that realization, we may initiate the work, and it it may even turn out that we're done earlier than if we'd immediately started hacking at the problem.

By starting later, we've learned more. Like much knowledge work, software development is a profoundly non-linear endeavour. You may find a new way of doing things that are orders of magnitudes faster than what you originally had in mind. Not only in terms of big-O notation, but also in terms of implementation effort.

When doing Advent of Code, I've repeatedly been struck how the more efficient algorithm is often also simpler to implement.

Multiple WiP #

As the above figure suggests, you're probably not going to spend all your time thinking or doing. The figure has plenty of air in between the activities.

This may seem wasteful to efficiency nerds, but again: Knowledge work isn't factory work.

You can't think by command. If you've ever tried meditating, you'll know just how hard it is to empty your mind, or in any way control what goes on in your head. Focus on your breath. Indeed, and a few minutes later you snap out of a reverie about what to make for dinner, only to discover that you were able to focus on your breath for all of ten seconds.

As I already alluded to in the introduction, I regularly exercise during the work day. I also go grocery shopping, or do other chores. I've consistently found that I solve all hard problems when I'm away from the computer, not while I'm at it. I think Rich Hickey calls it hammock-driven development.

When presented with an interesting problem, I usually can't help thinking about it. What often happens, however, is that I'm mulling over multiple interesting problems during my day.

You could say that I actually have multiple pieces of work in progress. Some of them lie dormant for a long time, only to occasionally pop up and be put away again. Even so, I've had problems that I'd essentially given up on, only to resurface later when I'd learned a sufficient amount of new things. At that time, then, I sometimes realize that what I previously thought was impossible is actually quite simple.

It's amazing what you can accomplish when you focus on the things that are important, rather than the things that are urgent.

One size doesn't fit all #

How do I know that this will always work? How can I be sure that an orders-of-magnitude insight will occur if I just wait long enough?

There are no guarantees. My point is rather that this happens with surprising regularity. To me, at least.

Your software organization may include tasks that represent truly menial work. Yet, if you have too much of that, why haven't you automated it away?

Still, I'm not going to tell anyone how to run their development team. I'm only pointing out a weakness with the common one-piece narrative: It treats work as mostly a result of effort, and as if it were somehow interchangeable with other development tasks.

Most crucially, it models the amount of time required to complete a task as being independent of time: Whether you start a job today or in a month, it'll take x days to complete.

What if, instead, the effort was a function of time (as well as other factors)? The later you start, the simpler the work might be.

This of course doesn't happen automatically. Even if I have all my good ideas away from the keyboard, I still spend quite a bit of time at the keyboard. You need to work enough with a problem before inspiration can strike.

I'd recommend more slack time, more walks in the park, more grocery shopping, more doing the dishes.

Conclusion #

Programming is knowledge work. We may even consider it creative work. And while you can nurture creativity, you can't force it.

I find it useful to have multiple things going on at the same time, because concurrent tasks often cross-pollinate. What I learn from engaging with one task may produce a significant insight into another, otherwise unrelated problem. The lack of urgency, the lack of deadlines, foster this approach to problem-solving.

But I'm not going to tell you how to run your software development process. If you want to treat it as an assembly line, that's your decision.

You'll probably get work done anyway. Months of work can save days of thinking.

Geographic hulls

Seven lines of Python code.

Can you tell what this is?

I showed this to both my wife and my son, and they immediately recognized it for what it is. On the other hand, they're also both culturally primed for it.

After all, it's a map of Denmark, although I've transformed each of the major islands, as well as the peninsula of Jutland to their convex hulls.

Here's the original map I used for the transformation:

I had a reason to do this, having to do with the coastline paradox, but my underlying motivation isn't really that important for this article, since I rather want to discuss how I did it.

The short answer is that I used Python. You have to admit that Python has a fabulous ecosystem for all kinds of data crunching, including visualizations. I'd actually geared up to implementing a Graham scan myself, but that turned out not to be necessary.

GeoPandas to the rescue #

I'm a novice Python programmer, but I've used Matplotlib before to visualize data, so I found it natural to start with a few web searches to figure out how to get to grips with the problem.

I quickly found GeoPandas, which works on top of Matplotlib to render and visualize geographical data.

My next problem was to find a data set for Denmark, which I found on SimpleMaps. I chose to download and work with the GeoJSON format.

Originally, I'd envisioned implementing a Graham scan myself. After all, I'd done that before in F#, and it's a compelling exercise. It turned out, however, that this function is already available in the GeoPandas API.

I had trouble separating the data file's multi-part geometry into multiple single geometries. This meant that when I tried to find the convex hull, I got the hull of the entire map, instead of each island individually. The solution was to use the explode function.

Once I figured that out, it turned out that all I needed was seven lines of Python code, including imports and a blank line:

import geopandas as gpd import matplotlib.pyplot as plt map = gpd.read_file('dk.json') map.explode().boundary.plot(edgecolor='green').set_axis_off() map.explode().convex_hull.boundary.plot().set_axis_off() plt.show()

In this script, I display the unmodified map before the convex hulls. This is only an artefact of my process. As I've already admitted, this is new ground for me, and I initially wanted to verify that I could even read in and display a GeoJSON file.

For both maps I use the boundary property to draw only the outline of the map, rather than filled polygons.

Enveloping the map parts #

Mostly for fun, but also to illustrate what a convex hull is, we can layer the two visualizations in a single image. In order to do that, a few changes to the code are required.

import geopandas as gpd import matplotlib.pyplot as plt map = gpd.read_file('dk.json') _, ax = plt.subplots() map.explode().boundary.plot(ax=ax, edgecolor='green').set_axis_off() map.explode().convex_hull.boundary.plot(ax=ax).set_axis_off() plt.show()

This little script now produces this image:

Those readers who know Danish geography may wonder what's going on with Falster. Since it's the sixth-largest Island in Denmark, shouldn't it have its own convex hull? Yes, it should, yet here it's connected to Zealand. Granted, two bridges connect the two, but that's hardly sufficient to consider them one island. There are plenty of bridges in Denmark, so according to that criterion, most of Denmark is connected. In fact, on the above map, only Bornholm, Samsø, Læsø, Ærø, Fanø, and Anholt would then remain as islands.

Rather, this only highlights the quality, or lack thereof, of the data set. I don't want to complain about a free resource, and the data set has served my purposes well enough. I mostly point this out in case readers were puzzled about this. In fact, a similar case applies to Nørrejyske Ø, which in the GeoJSON map is connected to Jutland at Aalborg. Yes, there's a bridge there. No, that shouldn't qualify as a land connection.

Other countries #

As you may have noticed, apart from the hard-coded file name, nothing in the code is specific to Denmark. This means that you can play around with other countries. Here I've downloaded various GeoJSON data sets from GeoJSON Maps of the globe, which seems to be using the same source data set that the Danish data set is also based on. In other words, if I download the file for Denmark from that site, it looks exactly as above.

Can you guess which country this is?

Or this one?

While this is all good fun, not all countries have interesting convex hull:

While I'll let you have a bit of fun guessing, you can hover your cursor over each image to reveal which country it is.

Conclusion #

Your default position when working with Python should probably be: There's already a library for that.

In this article, I've described how I wanted to show Denmark, but only the convex hull of each of the larger islands, as well as the Jutland peninsula. Of course, there was already a library for that, so that I only needed to write seven lines of code to produce the figures I wanted.

Granted, it took a few hours of research to put those seven lines together, but I'm only a novice Python programmer, and I'm sure an old hand could do it much faster.

Modelling data relationships with C# types

A C# example implementation of Ghosts of Departed Proofs.

This article continues where Modelling data relationships with F# types left off. It ports the F# example code to C#. If you don't read F# source code, you may instead want to read Implementing rod-cutting to get a sense of the problem being addressed.

I'm going to assume that you've read enough of the previous articles to get a sense of the example, but in short, this article examines if it's possible to use the type system to model data relationships. Specifically, we have methods that operate on a collection and a number. The precondition for calling these methods is that the number is a valid (one-based) index into the collection.

While you would typically implement such a precondition with a Guard Clause and communicate it via documentation, you can also use the Ghosts of Departed Proofs technique to instead leverage the type system. Please see the previous article for an overview.

That said, I'll repeat one point here: The purpose of these articles is to showcase a technique, using a simple example to make it, I hope, sufficiently clear what's going on. All this machinery is hardly warranted for an example as simple as this. All of this is a demonstration, not a recommendation.

Size proofs #

As in the previous article, we may start by defining what a 'size proof' looks like. In C#, it may idiomatically be a class with an internal constructor.

public sealed class Size<T> { public int Value { get; } internal Size(int value) { Value = value; } // Also override ToString, Equals, and GetHashCode... }

Since the constructor is internal it means that client code can't create Size<T> instances, and thereby client code can't decide a concrete type for the phantom type T.

Issuing size proofs #

How may client code create Size<T> objects? It may ask a PriceList<T> object to issue a proof:

public sealed class PriceList<T> { public IReadOnlyCollection<int> Prices { get; } internal PriceList(IReadOnlyCollection<int> prices) { Prices = prices; } public Size<T>? TryCreateSize(int candidate) { if (0 < candidate && candidate <= Prices.Count) return new Size<T>(candidate); else return null; } // More members go here...

If the requested candidate integer represents a valid (one-indexed) position in the PriceList<T> object, the return value is a Size<T> object that contains the candidate. If, on the other hand, the candidate isn't in the valid range, no object is returned.

Since both PriceList<T> and Size<T> classes are immutable, once a 'size proof' has been issued, it remains valid. As I've previously argued, immutability makes encapsulation simpler.

This kind of API does, however, look like it's turtles all the way down. After all, the PriceList<T> constructor is also internal. Now the question becomes: How does client code create PriceList<T> objects?

The short answer is that it doesn't. Instead, it'll be given an object by the library API. You'll see how that works later, but first, let's review what such an API enables us to express.

Proof-based Cut API #

As described in Encapsulating rod-cutting, returning a collection of 'cut' objects better communicates postconditions than returning a tuple of two arrays, as the original algorithm suggested. In other words, we're going to need a type for that.

public sealed record Cut<T>(int Revenue, Size<T> Size);

In this case we can get by with a simple record type. Since one of the properties is of the type Size<T>, client code can't create Cut<T> instances, just like it can't create Size<T> or PriceList<T> objects. This is what we want, because a Cut<T> object encapsulates a proof that it's valid, related to the original collection of prices.

We can now define the Cut method as an instance method on PriceList<T>. Notice how all the T type arguments line up. As input, the Cut method only accepts Size<T> proofs issued by a compatible price list. This is enforced at compile time, not at run time.

public IReadOnlyCollection<Cut<T>> Cut(Size<T> n) { var p = Prices.Prepend(0).ToArray(); var r = new int[n.Value + 1]; var s = new int[n.Value + 1]; r[0] = 0; for (int j = 1; j <= n.Value; j++) { var q = int.MinValue; for (int i = 1; i <= j; i++) { var candidate = p[i] + r[j - i]; if (q < candidate) { q = candidate; s[j] = i; } } r[j] = q; } var cuts = new List<Cut<T>>(); for (int i = 1; i <= n.Value; i++) { var revenue = r[i]; var size = new Size<T>(s[i]); cuts.Add(new Cut<T>(revenue, size)); } return cuts; }

For good measure, I'm showing the entire implementation, but you only need to pay attention to the method signature. The point is that n is constrained by the type system to be in a valid range.

Proof-based Solve API #

The same technique can be applied to the Solve method. Just align the Ts.

public IReadOnlyCollection<Size<T>> Solve(Size<T> n) { var cuts = Cut(n).ToArray(); var sizes = new List<Size<T>>(); var size = n; while (size.Value > 0) { sizes.Add(cuts[size.Value - 1].Size); size = new Size<T>(size.Value - cuts[size.Value - 1].Size.Value); } return sizes; }

This is another instance method on PriceList<T>, which is where T is defined.

Proof-based revenue API #

Finally, we may also implement a method to calculate the revenue from a given sequence of cuts.

public int CalculateRevenue(IReadOnlyCollection<Size<T>> cuts) { var arr = Prices.ToArray(); return cuts.Sum(c => arr[c.Value - 1]); }

Not surprisingly, I hope, CalculateRevenue is another instance method on PriceList<T>. The cuts will typically come from a call to Solve, but it's entirely possible for client code to create an ad-hoc collection of Size<T> objects by repeatedly calling TryCreateSize.

Running client code #

How does client code use this API? It calls an Accept method with an implementation of this interface:

public interface IPriceListVisitor<TResult> { TResult Visit<T>(PriceList<T> priceList); }

Why 'visitor'? This doesn't quite look like a Visitor, and yet, it still does.

Imagine, for a moment, that we could enumerate all types that T could inhabit.

TResult Visit(PriceList<Type1> priceList); TResult Visit(PriceList<Type2> priceList); TResult Visit(PriceList<Type3> priceList); // ⋮ TResult Visit(PriceList<TypeN> priceList);

Clearly we can't do that, since T is infinite, but if we could, the interface would look like a Visitor.

I find the situation sufficiently similar to name the interface with the Visitor suffix. Now we only need a class with an Accept method.

public sealed class RodCutter(IReadOnlyCollection<int> prices) { public TResult Accept<TResult>(IPriceListVisitor<TResult> visitor) { return visitor.Visit(new PriceList<object>(prices)); } }

Client code may create a RodCutter object, as well as one or more classes that implement IPriceListVisitor<TResult>, and in this way interact with the library API.

Let's see some examples. We'll start with the original CLRS example, written as an xUnit.net test.

[Fact] public void ClrsExample() { var sut = new RodCutter([1, 5, 8, 9, 10, 17, 17, 20, 24, 30]); var actual = sut.Accept(new CutRodVisitor(10)); var expected = new[] { ( 1, 1), ( 5, 2), ( 8, 3), (10, 2), (13, 2), (17, 6), (18, 1), (22, 2), (25, 3), (30, 10) }; Assert.Equal(expected, actual); }

CutRodVisitor is a nested class that implements the IPriceListVisitor<TResult> interface:

private class CutRodVisitor(int i) : IPriceListVisitor<IReadOnlyCollection<(int, int)>> { public IReadOnlyCollection<(int, int)> Visit<T>(PriceList<T> priceList) { var n = priceList.TryCreateSize(i); if (n is null) return []; else { var cuts = priceList.Cut(n); return cuts.Select(c => (c.Revenue, c.Size.Value)).ToArray(); } } }

The CutRodVisitor class returns a collection of tuples. Why doesn't it just return cuts directly?

It can't, because it wouldn't type-check. Think about it for a moment. When you implement the interface, you need to pick a type for TResult. You can't, however, declare it to implement IPriceListVisitor<Cut<T>> (where T would be the T from Visit<T>), because at the class level, you don't know what T is. Neither does the compiler.

Your Visit<T> implementation must work for any T.

Preventing misalignment #

Finally, here's a demonstration of how the phantom type prevents confusing or mixing up two (or more) different price lists. Consider this rather artificial example:

[Fact] public void NestTwoSolutions() { var sut = new RodCutter([1, 2, 2]); var inner = new RodCutter([1]); (int, int)? actual = sut.Accept(new NestedRevenueVisitor(inner)); Assert.Equal((3, 1), actual); }

This unit test creates two price arrays and calls Accept on one of them (the 'outer' one, you may say), while passing the inner one to the Visitor, which at first glance just looks like this:

private class NestedRevenueVisitor(RodCutter inner) : IPriceListVisitor<(int, int)?> { public (int, int)? Visit<T>(PriceList<T> priceList) { return inner.Accept(new InnerRevenueVisitor<T>(priceList)); } // Inner visitor goes here... }

Notice that it only delegates to yet another Visitor, passing the 'outer' priceList as a constructor parameter to the next Visitor. The purpose of this is to bring two PriceList<T> objects in scope at the same time. This will enable us to examine what happens if we make a programming mistake.

First, however, here's the proper, working implementation without mistakes:

private class InnerRevenueVisitor<T>(PriceList<T> priceList1) : IPriceListVisitor<(int, int)?> { public (int, int)? Visit<T1>(PriceList<T1> priceList2) { var n1 = priceList1.TryCreateSize(3); if (n1 is null) return null; else { var cuts1 = priceList1.Solve(n1); var revenue1 = priceList1.CalculateRevenue(cuts1); var n2 = priceList2.TryCreateSize(1); if (n2 is null) return null; else { var cuts2 = priceList2.Solve(n2); var revenue2 = priceList2.CalculateRevenue(cuts2); return (revenue1, revenue2); } } } }

Notice how both priceList1 and priceList2 are now both in scope. So far, they're not mixed up, so the Visit implementation queries first one and then another for the optimal revenue. If all works well (which it does), it returns a tuple with the two revenues.

What happens if I make a mistake? What if, for example, I write priceList2.Solve(n1)? It shouldn't be possible to use n1, which was issued by pricelist1, with priceList2. And indeed this isn't possible. With that mistake, the code doesn't compile. The compiler error is:

Argument 1: cannot convert from 'Ploeh.Samples.RodCutting.Size<T>' to 'Ploeh.Samples.RodCutting.Size<T1>'

When you look at the types, that makes sense. After all, there's no guarantee that T is equal to T1.

You'll run into similar problems if you mix up the two 'contexts' in other ways. The code doesn't compile. Which is what you want.

Conclusion #

This article demonstrates how to use the Ghosts of Departed Proofs technique in C#. In some ways, I find that it comes across as more idiomatic in C# than in F#. I think this is because rank-2 polymorphism is only possible in F# when using its object-oriented features. Since F# is a functional-first programming language, it seems a little out of place there, whereas it looks more at home in C#.

Perhaps I should have designed the F# code to make use of objects to the same degree as I've done here in C#.

I think I actually like how the C# API turned out, although having to define and implement a class every time you need to supply a Visitor may feel a bit cumbersome. Even so, developer experience shouldn't be exclusively about saving a few keystrokes. After all, typing isn't a bottleneck.

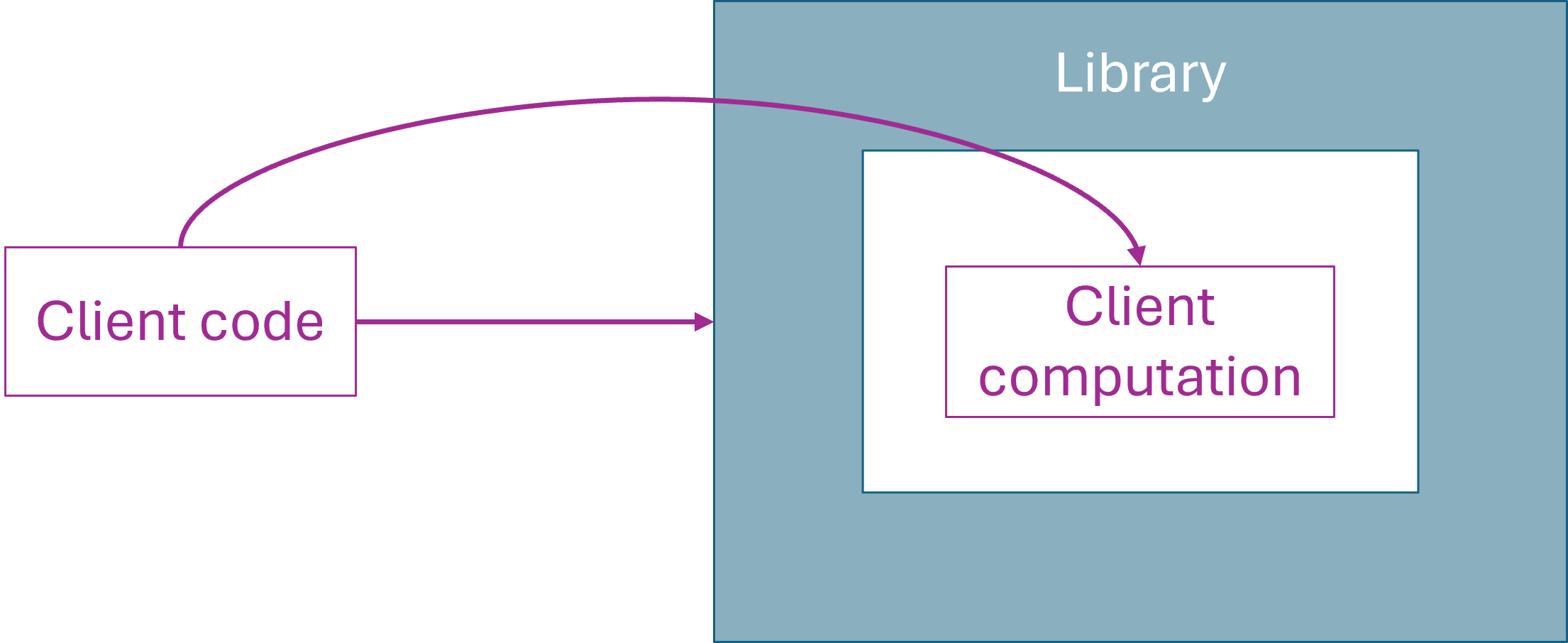

Dependency inversion without inversion of control

Here, have a sandwich.

For years I've been thinking about the Dependency Inversion Principle (DIP) and Inversion of Control (IoC) as two different things. While there's some overlap, they're not the same. To make matters more confusing, most people seem to consider IoC and Dependency Injection (DI) as interchangeable synonyms. As Steven van Deursen and I explain in DIPPP, they're not the same.

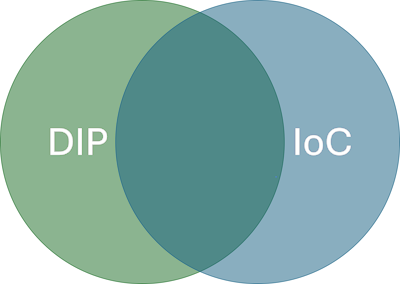

I recently found myself in a discussion on Stack Overflow where I was trying to untangle that confusion for a fellow Stack Overflow user. While I hadn't included a pedagogical Venn diagram, perhaps I should have.

This figure suggests that the sets are of equal size, which doesn't have to be the case. The point, rather, is that while the intersection may be substantial, each relative complement is not only not empty, but richly populated.

In this article, I'm not going to spend more time on the complement IoC without DIP. Rather, I'll expand on how to apply the DIP without IoC.

Appeal to authority? #

While writing the Stack Overflow answer, I'd tried to keep citations to 'original sources'. Sometimes, when a problem is technically concrete, it makes sense for me to link to one of my own works, but I've found that when the discussion is more abstract, that rarely helps convincing people. That's understandable. I'd also be sceptical if I were to run into some rando that immediately proceeded to argue a case by linking to his or her own blog.

This strategy, however elicited this response:

"Are you aware of any DIP-compliant example from Robert Martin that does not utilize polymorphism? The original paper along with some of Martin's lectures certainly seem to imply the DIP requires polymorphism."

That's a fair question, and once I started looking for such examples, I had to admit that I couldn't find any. Eventually, I asked Robert C. Martin directly.

"Does the DIP require polymorphism? I argue that it does't, but I've managed to entangle myself in a debate where original sources count. Could you help us out?"

To which he answered in much detail, but of which the essential response was:

"The DIP does not require polymorphism. Polymorphism is just one of several mechanisms to achieve dependency inversion."

While this was the answer I'd hoped for, it's easy to dismiss this exchange as an appeal to authority. On the other hand, as Carl Sagan said, "If you wish to make an apple pie from scratch, you must first invent the universe," which obviously isn't practical, and so we instead stand on the shoulders of giants.

In this context, asking Robert C. Martin was relevant because he's the original author of works that introduce the DIP. It's reasonable to assume that he has relevant insights on the topic.

It's not that I can't argue my case independently, but rather that I didn't think that the comments section of a Stack Overflow question was the right place to do that. This blog, on the other hand, is mine, I can use all the words I'd like, and I'll now proceed to do so.

Kernel of the idea #

All of Robert C. Martin's treatments of the DIP that I've found starts with the general idea and then proceeds to show examples of implementing it in code. As I've already mentioned, I haven't found a text of Martin's where the example doesn't utilize IoC.

The central idea, however, says nothing about IoC.

"A. High-level modules should not depend on low-level modules. Both should depend on abstractions.

"B. Abstractions should not depend on details. Details should depend upon abstractions."

While only Martin knows what he actually meant, I can attempt a congenial reading of the work. What is most important here, I think, is that the word abstraction doesn't have to denote a particular kind of language construct, such as an abstract class or interface. Rather,

"Abstraction is the elimination of the irrelevant and the amplification of the essential."

The same connotation of abstraction seems to apply to the definition of the DIP. If, for example, we imagine that we consider a Domain Model, the business logic, as the essence we'd like to amplify, we may rightfully consider a particular persistence mechanism a detail. Even more concretely, if you want to take restaurant reservations via a REST API, the business rules that determine whether or not you can accept a reservation shouldn't depend on a particular database technology.

While code examples are useful, there's evidently a risk that if the examples are too much alike, it may constrain readers' thinking. All Martin's examples seem to involve IoC, but for years now, I've mostly been interested in the Dependency Inversion Principle itself. Abstractions should not depend on details. That's the kernel of the idea.

IoC isn't functional #

My thinking was probably helped along by exploring functional programming (FP). A natural question arises when one embarks on learning FP: How does IoC fit with FP? The short answer, it turns out, is that it doesn't. DI, at least, makes everything impure.

Does this mean, then, that FP precludes the DIP? That would be a problem, since the notion that abstractions shouldn't depend on details seems important. Doing FP shouldn't entail giving up on important architectural rules. And fortunately, it turns out not being the case. Quite the contrary, a consistent application of functional architecture seems to lead to Ports and Adapters. It'd go against the grain of FP to have a Domain Model query a relational database. Even if abstracted away, a database exists outside the process space of an application, and is inherently impure. IoC doesn't address that concern.

In FP, there are other ways to address such problems.

DIP sandwich #

While you can always model pure interactions with free monads, it's usually not necessary. In most cases, an Impureim Sandwich suffices.

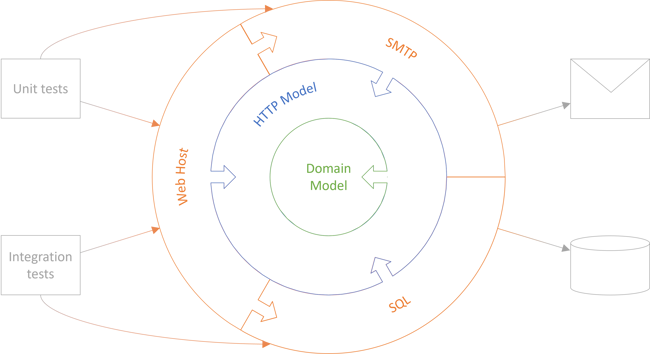

The sample code base that accompanies Code That Fits in Your Head takes a similar approach. While it's possible to refactor it to an explicit Impureim Sandwich, the code presented in the book follows the kindred notion of Functional Core, Imperative Shell.

The code base implements an online restaurant reservation system, and the Domain Model is a set of data structures and pure functions that operate on them. The central and most complex function is the WillAccept method shown here. It decides whether to accept a reservation request, based on restaurant table configurations, existing reservations, business rules related to seating durations, etc. It does this without depending on details. It doesn't know about databases, the application's configuration system, or how to send emails in case it decides to accept a reservation.

All of this is handled by the application's HTTP Model, using the demarcation shown in Decomposing CTFiYH's sample code base. The HTTP Model defines Controllers, Data Transfer Objects (DTOs), middleware, and other building blocks required to drive the actual REST API.

The ReservationsController class contains, among many other methods, this helper method that illustrates the point:

private async Task<ActionResult> TryCreate(Restaurant restaurant, Reservation reservation) { using var scope = new TransactionScope(TransactionScopeAsyncFlowOption.Enabled); var reservations = await Repository.ReadReservations(restaurant.Id, reservation.At); var now = Clock.GetCurrentDateTime(); if (!restaurant.MaitreD.WillAccept(now, reservations, reservation)) return NoTables500InternalServerError(); await Repository.Create(restaurant.Id, reservation); scope.Complete(); return Reservation201Created(restaurant.Id, reservation); }

Notice the call to restaurant.MaitreD.WillAccept. The Controller gathers all data required to call the pure function and subsequently acts on the return value. This keeps the abstraction (MaitreD) free of implementation details.

DI addressing another concern #

You may be wondering what exactly Repository is. If you've bought the book, you also have access to the sample code base, in which case you'd be able to look it up. It turns out that it's an injected dependency. While this may seem a bit contradictory, it also gives me the opportunity to discuss that this isn't an all-or-nothing proposition.

Consider the architecture diagram from Decomposing CTFiYH's sample code base, repeated here for convenience:

In the context of this diagram, the DIP is being applied in two different ways. From the outer Web Host to the HTTP Model, the decomposed code base uses ordinary DI. From the HTTP Model to the Domain Model, there's no inversion of control, but rather the important essence of the DIP: That the Domain Model doesn't depend on any of the details that surrounds it. Even so, the dependencies remain inverted, as indicated by the arrows.

What little DI that's left remains to support automated testing. Injecting Repository and a few other real dependencies enabled me to test-drive the externally visible behaviour of the system with state-based self-hosted tests.

If I hadn't cared about that, I could have hard-coded the SqlReservationsRepository object directly into the Controller and merged the Web Host with the HTTP Module. The Web Host is quite minimal anyway. This would, of course, have meant that the DIP no longer applied at that level, but even so, the interaction between the HTTP Model and the Domain Model would still follow the principle.

One important point about the above figure is that it's not to scale. The Web Host is in reality just six small classes, and the SQL and SMTP libraries each only contain a single class.

Conclusion #

Despite the name similarity, the Dependency Inversion Principle isn't equivalent with Inversion of Control or Dependency Injection. There's a sizeable intersection between the two, but the DIP doesn't require IoC.

I often use the Functional Core, Imperative Shell architecture, or the Impureim Sandwich pattern to invert the dependencies without inverting control. This keeps most of my code more functional, which also means that it fits better in my head and is intrinsically testable.

Modelling data relationships with F# types

An F# example implementation of Ghosts of Departed Proofs.

In a previous article, Encapsulating rod-cutting, I used a code example to discuss how to communicate an API's contract to client developers; that is, users of the API. In the article, I wrote

"All this said, however, it's also possible that I'm missing an obvious design alternative. If you can think of a way to model this relationship in a non-predicative way, please write a comment."

And indeed, a reader helpfully offered an alternative:

"Regarding the relation between the array and the index, you will find the paper called "Ghosts of departed proofs" interesting. Maybe an overkill in this case, maybe not, but a very interesting and useful technique in general."

I wouldn't call it 'an obvious design alternative', but nonetheless find it interesting. In this article, I'll pick up the code from Encapsulating rod-cutting and show how the 'Ghosts of Departed Proofs' (GDP) technique may be applied.

Problem review #

Before we start with the GDP technique, a brief review of the problem is in order. For the complete overview, you should read the Encapsulating rod-cutting article. In the present article, however, we'll focus on one particular problem related to encapsulation:

Ideally, the cut function should take two input arguments. The first argument, p, is an array or list of prices. The second argument, n, is the size of a rod to cut optimally. One precondition states that n must be less than or equal to the length of p. This is because the algorithm needs to look up the price of a rod of size n, and it can't do that if n is greater than the length of p. The implied relationship is that p is indexed by rod size, so that if you want to find the price of a rod of size n, you look at the nth element in p.

How may we model such a relationship in a way that protects the precondition?

An obvious choice, particularly in object-oriented design, is to use a Guard Clause. In the F# code base, it might look like this:

let cut (p : _ array) n = if p.Length <= n then raise (ArgumentOutOfRangeException "n must be less than the length of p") // The rest of the function body...

You might argue that in F# and other functional programming languages, throwing exceptions isn't idiomatic. Instead, you ought to return Result or Option values, here the latter:

let cut (p : _ array) n = if p.Length <= n then None else // The rest of the function body...

To be clear, in most code bases, this is exactly what I would do. What follows is rather exotic, and hardly suitable for all use cases.

Proofs as values #

It's not too hard to model the lower boundary of the n parameter. As is often the case, it turns out that the number must be a natural number. I already covered that in the previous article. It's much harder, however, to model the upper boundary of the value, because it depends on the size of p.

The following is based on the paper Ghosts of Departed Proofs, as well as a helpful Gist also provided by Borar. (The link to the paper is to what I believe is the 'official' page for it, and since it's part of the ACM digital library, it's behind a paywall. Even so, as is the case with most academic papers, it's easy enough to find a PDF of it somewhere else. Not that I endorse content piracy, but it's my impression that academic papers are usually disseminated by the authors themselves.)

The idea is to enable a library to issue a 'proof' about a certain condition. In the example I'm going to use here, the proof is that a certain number is in the valid range for a given list of prices.

We actually can't entirely escape the need for a run-time check, but we do gain two other benefits. The first is that we're now using the type system to communicate a relationship that otherwise would have to be described in written documentation. The second is that once the proof has been issued, there's no need to perform additional run-time checks.

This can help move an API towards a more total, as opposed to partial, definition, which again moves towards what Michael Feathers calls unconditional code. This is particularly useful if the alternative is an API that 'forgets' which run-time guarantees have already been checked. The paper has some examples. I've also recently encountered similar situations when doing Advent of Code 2024. Many days my solution involved immutable maps (like hash tables) that I'd recurse over. In many cases I'd write an algorithm where I with absolute certainty knew that a particular key was in the map (if, for example, I'd just put it there three lines earlier). In such cases, you don't want a total function that returns an option or Maybe value. You want a partial function. Or a type-level guarantee that the value is, indeed, in the map.

For the example in this article, it's overkill, so you may wonder what the point is. On the other hand, a simple example makes it easier to follow what's going on. Hopefully, once you understand the technique, you can extrapolate it to situations where it might be more warranted.

Proof contexts #