ploeh blog danish software design

Encapsulating rod-cutting

Focusing on usage over implementation.

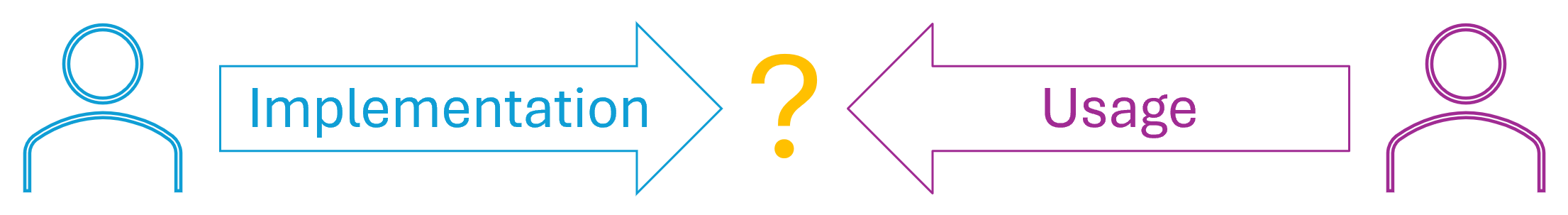

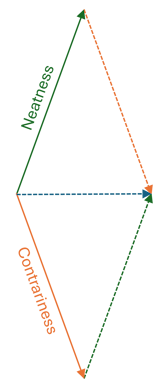

This article is a part of a small article series about implementation and usage mindsets. The hypothesis is that programmers who approach a problem with an implementation mindset may gravitate toward dynamically typed languages, whereas developers concerned with long-term maintenance and sustainability of a code base may be more inclined toward statically typed languages. This could be wrong, and is almost certainly too simplistic, but is still, I hope, worth thinking about. In the previous article you saw examples of an implementation-centric approach to problem-solving. In this article, I'll discuss what a usage-first perspective entails.

A usage perspective indicates that you're first and foremost concerned with how useful a programming interface is. It's what you do when you take advantage of test-driven development (TDD). First, you write a test, which furnishes an example of what a usage scenario looks like. Only then do you figure out how to implement the desired API.

In this article I didn't use TDD since I already had a particular implementation. Even so, while I didn't mention it in the previous article, I did add tests to verify that the code works as intended. In fact, because I wrote a few Hedgehog properties, I have more than 10.000 test cases covering my implementation.

I bring this up because TDD is only one way to focus on sustainability and encapsulation. It's the most scientific methodology that I know of, but you can employ more ad-hoc, ex-post analysis processes. I'll do that here.

Imperative origin #

In the previous article you saw how the Extended-Bottom-Up-Cut-Rod pseudocode was translated to this F# function:

let cut (p : _ array) n = let r = Array.zeroCreate (n + 1) let s = Array.zeroCreate (n + 1) r[0] <- 0 for j = 1 to n do let mutable q = Int32.MinValue for i = 1 to j do if q < p[i] + r[j - i] then q <- p[i] + r[j - i] s[j] <- i r[j] <- q r, s

In case anyone is wondering: This is a bona-fide pure function, even if the implementation is as imperative as can be. Given the same input, cut always returns the same output, and there are no side effects. We may wish to implement the function in a more idiomatic way, but that's not our first concern. My first concern, at least, is to make sure that preconditions, invariants, and postconditions are properly communicated.

The same goal applies to the printSolution action, also repeated here for your convenience.

let printSolution p n = let _, s = cut p n let mutable n = n while n > 0 do printfn "%i" s[n] n <- n - s[n]

Not that I'm not interested in more idiomatic implementations, but after all, they're by definition just implementation details, so first, I'll discuss encapsulation. Or, if you will, the usage perspective.

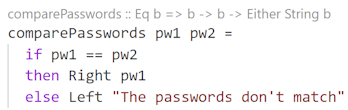

Names and types #

Based on the above two code snippets, we're given two artefacts: cut and printSolution. Since F# is a statically typed language, each operation also has a type.

The type of cut is int array -> int -> int array * int array. If you're not super-comfortable with F# type signatures, this means that cut is a function that takes an integer array and an integer as inputs, and returns a tuple as output. The output tuple is a pair; that is, it contains two elements, and in this particular case, both elements have the same type: They are both integer arrays.

Likewise, the type of printSolution is int array -> int -> unit, which again indicates that inputs must be an integer array and an integer. In this case the output is unit, which, in a sense, corresponds to void in many C-based languages.

Both operations belong to a module called Rod, so their slightly longer, more formal names are Rod.cut and Rod.printSolution. Even so, good names are only skin-deep, and I'm not even convinced that these are particularly good names. To be fair to myself, I adopted the names from the pseudocode from Introduction to Algorithms. Had I been freer to name function and design APIs, I might have chosen different names. As it is, currently, there's no documentation, so the types are the only source of additional information.

Can we infer proper usage from these types? Do they sufficiently well communicate preconditions, invariants, and postconditions? In other words, do the types satisfactorily indicate the contract of each operation? Do the functions exhibit good encapsulation?

We may start with the cut function. It takes as inputs an integer array and an integer. Are empty arrays allowed? Are all integers valid, or perhaps only natural numbers? What about zeroes? Are duplicates allowed? Does the array need to be sorted? Is there a relationship between the array and the integer? Can the single integer parameter be negative?

And what about the return value? Are the two integer arrays related in any way? Can one be empty, but the other large? Can they both be empty? May negative numbers or zeroes be present?

Similar questions apply to the printSolution action.

Not all such questions can be answered by types, but since we already have a type system at our disposal, we might as well use it to address those questions that are easily modelled.

Encapsulating the relationship between price array and rod length #

The first question I decided to answer was this: Is there a relationship between the array and the integer?

The array, you may recall, is an array of prices. The integer is the length of the rod to cut up.

A relationship clearly exists. The length of the rod must not exceed the length of the array. If it does, cut throws an IndexOutOfRangeException. We can't calculate the optimal cuts if we lack price information.

Likewise, we can already infer that the length must be a non-negative number.

While we could choose to enforce this relationship with Guard Clauses, we may also consider a simpler API. Let the function infer the rod length from the array length.

let cut (p : _ array) = let n = p.Length - 1 let r = Array.zeroCreate (n + 1) let s = Array.zeroCreate (n + 1) r[0] <- 0 for j = 1 to n do let mutable q = Int32.MinValue for i = 1 to j do if q < p[i] + r[j - i] then q <- p[i] + r[j - i] s[j] <- i r[j] <- q r, s

You may argue that this API is more implicit, which we generally don't like. The implication is that the rod length is determined by the array length. If you have a (one-indexed) price array of length 10, then how do you calculate the optimal cuts for a rod of length 7?

By shortening the price array:

> let p = [|0; 1; 5; 8; 9; 10; 17; 17; 20; 24; 30|];; val p: int array = [|0; 1; 5; 8; 9; 10; 17; 17; 20; 24; 30|] > cut (p |> Array.take (7 + 1));; val it: int array * int array = ([|0; 1; 5; 8; 10; 13; 17; 18|], [|0; 1; 2; 3; 2; 2; 6; 1|])

This is clearly still sub-optimal. Notice, for example, how you need to add 1 to 7 in order to deal with the prefixed 0. On the other hand, we're not done with the redesign, so it may be worth pursuing this course a little further.

(To be honest, while this is the direction I ultimately choose, I'm not blind to the disadvantages of this implicit design. It makes it less clear to a client developer how to indicate a rod length. An alternative design would keep the price array and the rod length as two separate parameters, but then introduce a Guard Clause to check that the rod length doesn't exceed the length of the price array. Outside of dependent types I can't think of a way to model such a relationship between two values, and I admit to having no practical experience with dependent types. All this said, however, it's also possible that I'm missing an obvious design alternative. If you can think of a way to model this relationship in a non-predicative way, please write a comment.)

I gave the printSolution the same treatment, after first having extracted a solve function in order to separate decisions from effects.

let solve p = let _, s = cut p let l = ResizeArray () let mutable n = p.Length - 1 while n > 0 do l.Add s[n] n <- n - s[n] l |> List.ofSeq let printSolution p = solve p |> List.iter (printfn "%i")

The implementation of the solve function is still imperative, but if you view it as a black box, it's referentially transparent. We'll get back to the implementation later.

Returning a list of cuts #

Let's return to all the questions I enumerated above, particularly the questions about the return value. Are the two integer arrays related?

Indeed they are! In fact, they have the same length.

As explained in the previous article, in the original pseudocode, the r array is supposed to be zero-indexed, but non-empty and containing 0 as the first element. The s array is supposed to be one-indexed, and be exactly one element shorter than the r array. In practice, in all three implementations shown in that article, I made both arrays zero-indexed, non-empty, and of the exact same length. This is also true for the F# implementation.

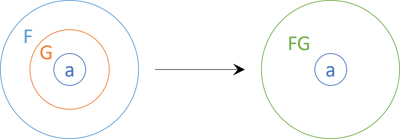

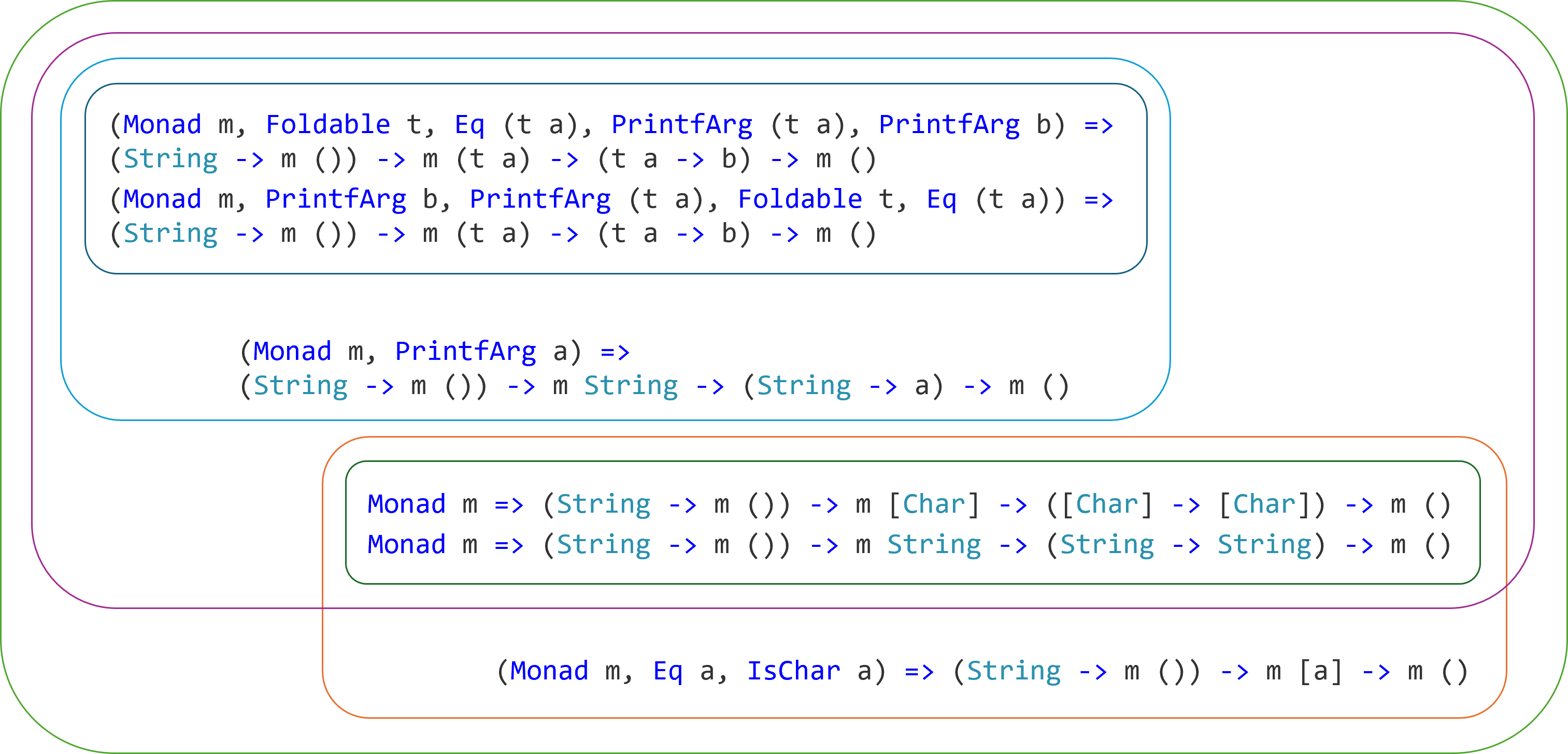

We can communicate this relationship much better to client developers by changing the return type of the cut function. Currently, the return type is int array * int array, indicating a pair of arrays. Instead, we can change the return type to an array of pairs, thereby indicating that the values are related two-and-two.

That would be a decent change, but we can further improve the API. A pair of integers are still implicit, because it isn't clear which integer represents the revenue and which one represents the size. Instead, we introduce a custom type with clear labels:

type Cut = { Revenue : int; Size : int }

Then we change the cut function to return a collection of Cut values:

let cut (p : _ array) = let n = p.Length - 1 let r = Array.zeroCreate (n + 1) let s = Array.zeroCreate (n + 1) r[0] <- 0 for j = 1 to n do let mutable q = Int32.MinValue for i = 1 to j do if q < p[i] + r[j - i] then q <- p[i] + r[j - i] s[j] <- i r[j] <- q let result = ResizeArray () for i = 0 to n do result.Add { Revenue = r[i]; Size = s[i] } result |> List.ofSeq

The type of cut is now int array -> Cut list. Notice that I decided to return a linked list rather than an array. This is mostly because I consider linked lists to be more idiomatic than arrays in a context of functional programming (FP), but to be honest, I'm not sure that it makes much difference as a return value.

In any case, you'll observe that the implementation is still imperative. The main topic of this article is how to give an API good encapsulation, so I treat the actual code as an implementation detail. It's not the most important thing.

Linked list input #

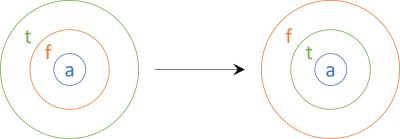

Although I wrote that I'm not sure it makes much difference whether cut returns an array or a list, it does matter when it comes to input values. Currently, cut takes an int array as input.

As the implementation so amply demonstrates, F# arrays are mutable; you can mutate the cells of an array. A client developer may worry, then, whether cut modifies the input array.

From the implementation code we know that it doesn't, but encapsulation is all about sparing client developers the burden of having to read the implementation. Rather, an API should communicate its contract in as succinct a way as possible, either via documentation or the type system.

In this case, we can use the type system to communicate this postcondition. Changing the input type to a linked list effectively communicates to all users of the API that cut doesn't mutate the input. This is because F# linked lists are truly immutable.

let cut prices = let p = prices |> Array.ofList let n = p.Length - 1 let r = Array.zeroCreate (n + 1) let s = Array.zeroCreate (n + 1) r[0] <- 0 for j = 1 to n do let mutable q = Int32.MinValue for i = 1 to j do if q < p[i] + r[j - i] then q <- p[i] + r[j - i] s[j] <- i r[j] <- q let result = ResizeArray () for i = 0 to n do result.Add { Revenue = r[i]; Size = s[i] } result |> List.ofSeq

The type of the cut function is now int list -> Cut list, which informs client developers of an invariant. You can trust that cut will not change the input arguments.

Natural numbers #

You've probably gotten the point by now, so let's move a bit quicker. There are still issues that we'd like to document. Perhaps the worst part of the current API is that client code is required to supply a prices list where the first element must be zero. That's a very specific requirement. It's easy to forget, and if you do, the cut function just silently fails. It doesn't throw an exception; it just gives you a wrong answer.

We may choose to add a Guard Clause, but why are we even putting that responsibility on the client developer? Why can't the cut function add that prefix itself? It can, and it turns out that once you do that, and also remove the initial zero element from the output, you're now working with natural numbers.

First, add a NaturalNumber wrapper of integers:

type NaturalNumber = private NaturalNumber of int with member this.Value = let (NaturalNumber i) = this in i static member tryCreate candidate = if candidate < 1 then None else Some <| NaturalNumber candidate override this.ToString () = let (NaturalNumber i) = this in string i

Since the case constructor is private, external code can only try to create values. Once you have a NaturalNumber value, you know that it's valid, but creation requires a run-time check. In other words, this is what Hillel Wayne calls predicative data.

Armed with this new type, however, we can now strengthen the definition of the Cut record type:

type Cut = { Revenue : int; Size : NaturalNumber } with static member tryCreate revenue size = NaturalNumber.tryCreate size |> Option.map (fun size -> { Revenue = revenue; Size = size })

The Revenue may still be any integer, because it turns out that the algorithm also works with negative prices. (For a book that's very meticulous in its analysis of algorithms, CLRS is surprisingly silent on this topic. Thorough testing with Hedgehog, however, indicates that this is so.) On the other hand, the Size of the Cut must be a NaturalNumber. Since, again, we don't have any constructive way (outside of using refinement types) of modelling this requirement, we also supply a tryCreate function.

This enables us to define the cut function like this:

let cut prices = let p = prices |> List.append [0] |> Array.ofList let n = p.Length - 1 let r = Array.zeroCreate (n + 1) let s = Array.zeroCreate (n + 1) r[0] <- 0 for j = 1 to n do let mutable q = Int32.MinValue for i = 1 to j do if q < p[i] + r[j - i] then q <- p[i] + r[j - i] s[j] <- i r[j] <- q let result = ResizeArray () for i = 1 to n do Cut.tryCreate r[i] s[i] |> Option.iter result.Add result |> List.ofSeq

It still has the type int list -> Cut list, but the Cut type is now more restrictively designed. In other words, we've provided a more conservative definition of what we return, in keeping with Postel's law.

Furthermore, notice that the first line prepends 0 to the p array, so that the client developer doesn't have to do that. Likewise, when returning the result, the for loop goes from 1 to n, which means that it omits the first zero cut.

These changes ripple through and also improves encapsulation of the solve function:

let solve prices = let cuts = cut prices let l = ResizeArray () let mutable n = prices.Length while n > 0 do let idx = n - 1 let s = cuts.[idx].Size l.Add s n <- n - s.Value l |> List.ofSeq

The type of solve is now int list -> NaturalNumber list.

This is about as strong as I can think of making the API using F#'s type system. A type like int list -> NaturalNumber list tells you something about what you're allowed to do, what you're expected to do, and what you can expect in return. You can provide (almost) any list of integers, both positive, zero, or negative. You may also give an empty list. If we had wanted to prevent that, we could have used a NonEmpty list, as seen (among other places) in the article Conservative codomain conjecture.

Okay, to be perfectly honest, there's one more change that might be in order, but this is where I ran out of steam. One remaining precondition that I haven't yet discussed is that the input list must not contain 'too big' numbers. The problem is that the algorithm adds numbers together, and since 32-bit integers are bounded, you could run into overflow situations. Ask me how I know.

Changing the types to use 64-bit integers doesn't solve that problem (it only moves the boundary of where overflow happens), but consistently changing the API to work with BigInteger values might. To be honest, I haven't tried.

Functional programming #

From an encapsulation perspective, we're done now. By using the type system, we've emphasized how to use the API, rather than how it's implemented. Along the way, we even hid away some warts that came with the implementation. If I wanted to take this further, I would seriously consider making the cut function a private helper function, because it doesn't really return a solution. It only returns an intermediary value that makes it easier for the solve function to return the actual solution.

If you're even just a little bit familiar with F# or functional programming, you may have found it painful to read this far. All that imperative code. My eyes! For the love of God, please rewrite the implementation with proper FP idioms and patterns.

Well, the point of the whole article is that the implementation doesn't really matter. It's how client code may use the API that's important.

That is, of course, until you have to go and change the implementation code. In any case, as a little consolation prize for those brave FP readers who've made it all this way, here follows more functional implementations of the functions.

The NaturalNumber and Cut types haven't changed, so the first change comes with the cut function:

let private cons x xs = x :: xs let cut prices = let p = 0 :: prices |> Array.ofList let n = p.Length - 1 let findBestCut revenues j = [1..j] |> List.map (fun i -> p[i] + Map.find (j - i) revenues, i) |> List.maxBy fst let aggregate acc j = let revenues = snd acc let q, i = findBestCut revenues j let cuts = fst acc cuts << (cons (q, i)), Map.add revenues.Count q revenues [1..n] |> List.fold aggregate (id, Map.add 0 0 Map.empty) |> fst <| [] // Evaluate Hughes list |> List.choose (fun (r, i) -> Cut.tryCreate r i)

Even here, however, some implementation choices are dubious at best. For instance, I decided to use a Hughes list or difference list (see Tail Recurse for a detailed explanation of how this works in F#) without measuring whether or not it was better than just using normal list consing followed by List.rev (which is, in fact, often faster). That's one of the advantages of writing code for articles; such things don't really matter that much in that context.

Another choice that may leave you scratching your head is that I decided to model the revenues as a map (that is, an immutable dictionary) rather than an array. I did this because I was concerned that with the move towards immutable code, I'd have n reallocations of arrays. Perhaps, I thought, adding incrementally to a Map structure would be more efficient.

But really, all of that is just wanking, because I haven't measured.

The FP-style implementation of solve is, I believe, less controversial:

let solve prices = let cuts = cut prices let rec imp n = if n <= 0 then [] else let idx = n - 1 let s = cuts[idx].Size s :: imp (n - s.Value) imp prices.Length

This is a fairly standard implementation using a local recursive helper function.

Both cut and solve have the types previously reported. In other words, this final refactoring to functional implementations didn't change their types.

Conclusion #

This article goes through a series of code improvements to illustrate how a static type system can make it easier to use an API. Use it correctly, that is.

There's a common misconception about ease of use that it implies typing fewer characters, or getting instant gratification. That's not my position. Typing is not a bottleneck, and in any case, not much is gained if you make it easier for client developers to get the wrong answers from your API.

Static types gives you a consistent vocabulary you can use to communicate an API's contract to client developers. What must client code do in order to make a valid method or function call? What guarantees can client code rely on? Encapsulation, in other words.

P.S. 2025-01-20:

For a type-level technique for modelling the relationship between rod size and price list, see Modelling data relationships with F# types.

Pytest is fast

One major attraction of Python. A recent realization.

Ever since I became aware of the distinction between statically and dynamically typed languages, I've struggled to understand the attraction of dynamically typed languages. As regular readers may have noticed, this is a bias that doesn't sit well with me. Clearly, there are advantages to dynamic languages that I fail to notice. Is it a question of mindset? Or is it a combination of several small advantages?

In this article, I'll discuss another potential benefit of at least one dynamically typed language, Python.

Fast feedback #

Rapid feedback is a cornerstone of modern software engineering. I've always considered the feedback from the compiler an important mechanism, but I've recently begun to realize that it comes with a price. While a good type system keeps you honest, compilation takes time, too.

Since I've been so entrenched in the camp of statically typed languages (C#, F#, Haskell), I've tended to regard compilation as a mandatory step. And since the compiler needs to run anyway, you might as well take advantage of it. Use the type system to make illegal states unrepresentable, and all that.

Even so, I've noticed that compilation time isn't a fixed size. This observation surely borders on the banal, but with sufficient cognitive bias, it can, apparently, take years to come to even such a trivial conclusion. After initial years with various programming languages, my formative years as a programmer were spent with C#. As it turns out, the C# compiler is relatively fast.

This is probably a combination of factors. Since C# is a one of the most popular languages, it has a big and skilled engineering team, and it's my impression that much effort goes into making it as fast and efficient as possible.

I also speculate that, since the C# type system isn't as powerful as F#'s or Haskell's, there's simply work that it can't do. When you can't expression certain constraints or relationships with the type system, the compiler can't check them, either.

That said, the C# compiler seems to have become slower over the years. This could be a consequence of all the extra language features that accumulate.

The F# compiler, in comparison, has always taken longer than the C# compiler. Again, this may be due to a combination of a smaller engineering team and that it actually can check more things at compile time, since the type system is more expressive.

This, at least, seems to fit with the observation that the Haskell compiler is even slower than F#. The language is incredibly expressive. There's a lot of constraints and relationships that you can model with the type system. Clearly, the compiler has to perform extra work to check that your types line up.

You're often left with the impression that if it compiles, it works. The drawback is that getting Haskell code to compile may be a non-trivial undertaking.

One thing is that you'll have to wait for the compiler. Another is that if you practice test-driven development (TDD), you'll have to compile the test code, too. Only once the tests are compiled can you run them. And TDD test suites should run in 10 seconds or less.

Skipping compilation with pytest #

A few years ago I had to learn a bit of Python, so I decided to try Advent of Code 2022 in Python. As the puzzles got harder, I added unit tests with pytest. When I ran them, I was taken aback at how fast they ran.

There's no compilation step, so the test suite runs immediately. Obviously, if you've made a mistake that a compiler would have caught, the test fails, but if the code makes sense to the interpreter, it just runs.

For various reasons, I ran out of steam, as one does with Advent of Code, but I managed to write a good little test suite. Until day 17, it ran in 0.15-0.20 seconds on my little laptop. To be honest, though, once I added tests for day 17, feedback time jumped to just under two seconds. This is clearly because I'd written some inefficient code for my System Under Test.

I can't really blame a test framework for being slow, when it's really my own code that slows it down.

A counter-argument is that a compiled language is much faster than an interpreted one. Thus, one might think that a faster language would counter poor implementations. Not so.

TDD with Haskell #

As I've already outlined, the Haskell compiler takes more time than C#, and obviously it takes more time than a language that isn't compiled at all. On the other hand, Haskell compiles to native machine code. My experience with it is that once you've compiled your program, it's fast.

In order to compare the two styles, I decided to record compilation and test times while doing Advent of Code 2024 in Haskell. I set up a Haskell code base with Stack and HUnit, as I usually do. As I worked through the puzzles, I'd be adding and running tests. Every time I recorded the time it took, using the time command to measure the time it took for stack test to run.

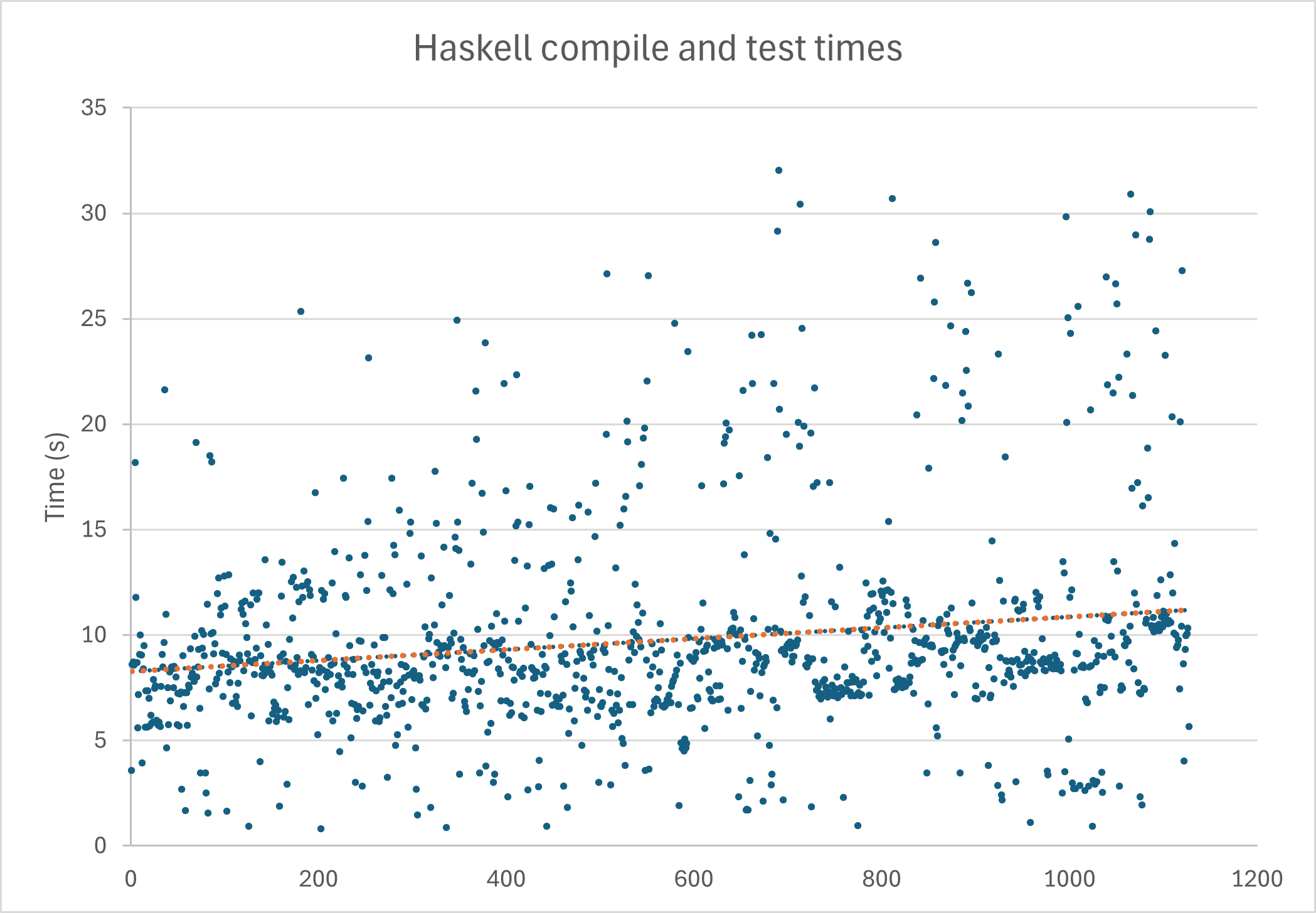

I've plotted the observations in this chart:

The chart shows more than a thousand observations, with the first to the left, and the latest to the right. The times recorded are the entire time it took from I started a test run until I had an answer. For this, I used the time command's real time measurement, rather than user or sys time. What matters is the feedback time; not the CPU time.

Each measurement is in seconds. The dashed orange line indicates the linear trend.

It's not the first time I've written Haskell code, so I knew what to expect. While you get the occasional fast turnaround time, it easily takes around ten seconds to compile even an empty code base. It seems that there's a constant overhead of that size. While there's an upward trend line as I added more and more code, and more tests, actually running the tests takes almost no time. The initial 'average' feedback time was around eight seconds, and 1100 observations later, the trends sits around 11.5 seconds. At this time, I had more than 200 test cases.

You may also notice that the observations vary quite a bit. You occasionally see sub-second times, but also turnaround times over thirty seconds. There's an explanation for both.

The sub-second times usually happen if I run the test suite twice without changing any code. In that case, the Haskell Stack correctly skips recompiling the code and instead just reruns the tests. This only highlights that I'm not waiting for the tests to execute. The tests are fast. It's the compiler that causes over 90% of the delay.

(Why would I rerun a test suite without changing any code? This mostly happens when I take a break from programming, or if I get distracted by another task. In such cases, when I return to the code, I usually run the test suite in order to remind myself of the state in which I left it. Sometimes, it turns out, I'd left the code in a state were the last thing I did was to run all tests.)

The other extremes have a different explanation.

IDE woes #

Why do I have to suffer through those turnaround times over twenty seconds? A few times over thirty?

The short answer is that these represent complete rebuilds. Most of these are caused by problems with the IDE. For Haskell development, I use Visual Studio Code with the Haskell extension.

Perhaps it's only my setup that's messed up, but whenever I change a function in the System Under Test (SUT), I can. not. make. VS Code pick up that the API changed. Even if I correct my tests so that they still compile and run successfully from the command line, VS Code will keep insisting that the code is wrong.

This is, of course, annoying. One of the major selling points of statically type languages is that a good IDE can tell you if you made mistakes. Well, if it operates on an outdated view of what the SUT looks like, this no longer works.

I've tried restarting the Haskell Language Server, but that doesn't work. The only thing that works, as far as I've been able to discover, is to close VS Code, delete .stack-work, recompile, and reopen VS Code. Yes, that takes a minute or two, so not something I like doing too often.

Deleting .stack-work does trigger a full rebuild, which is why we see those long build times.

Striking a good balance #

What bothers me about dynamic languages is that I find discoverability and encapsulation so hard. I can't just look at the type of an operation and deduce what inputs it might take, or what the output might look like.

To be honest, if you give me a plain text file with F# or Haskell, I can't do that either. A static type system doesn't magically surface that kind of information. Instead, you may rely on an IDE to provide such information at your fingertips. The Haskell extension, for example, gives you a little automatic type annotation above your functions, as discussed in the article Pendulum swing: no Haskell type annotation by default, and shown in a figure reprinted here for your convenience:

If this is to work well, this information must be immediate and responsive. On my system it isn't.

It may, again, be that there's some problem with my particular tool chain setup. Or perhaps a four-year-old Lenovo X1 Carbon is just too puny a machine to effectively run such a tool.

On the other hand, I don't have similar issues with C# in Visual Studio (not VS Code). When I make changes, the IDE quickly responds and tells me if I've made a mistake. To be honest, even here, I feel that it was faster and more responsive a decade ago, but compared to Haskell programming, the feedback I get with C# is close to immediate.

My experience with F# is somewhere in between. Visual Studio is quicker to pick up changes in F# code than VS Code is to reflect changes in Haskell, but it's not as fast as C#.

With Python, what little IDE integration is available is usually not trustworthy. Essentially, when suggesting callable operations, the IDE is mostly guessing, based on what it's already seen.

But, good Lord! The tests run fast.

Conclusion #

My recent experiences with both Haskell and Python programming is giving me a better understanding of the balances and trade-offs involved with picking a language. While I still favour statically typed languages, I'm beginning to see some attractive qualities on the other side.

Particularly, if you buy the argument that TDD suites should run in 10 seconds or less, this effectively means that I can't do TDD in Haskell. Not with the hardware I'm running. Python, on the other hand, seems eminently well-suited for TDD.

That doesn't sit too well with me, but on the other hand, I'm glad. I've learned about a benefit of a dynamically typed language. While you may consider all of this ordinary and trite, it feels like a small breakthrough to me. I've been trying hard to see past my own limitations, and it finally feels as though I've found a few chinks in the armour of my biases.

I'll keep pushing those envelopes to see what else I may learn.

Implementing rod-cutting

From pseudocode to implementation in three languages.

This article picks up where Implementation and usage mindsets left off, examining how easy it is to implement an algorithm in three different programming languages.

As an example, I'll use the bottom-up rod-cutting algorithm from Introduction to Algorithms.

Rod-cutting #

The problem is simple:

"Serling Enterprises buys long steel rods and cuts them into shorter rods, which it then sells. Each cut is free. The management of Serling Enterprises wants to know the best way to cut up the rods."

You're given an array of prices, or rather revenues, that each size is worth. The example from the book is given as a table:

| length i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| price pi | 1 | 5 | 8 | 9 | 10 | 17 | 17 | 20 | 24 | 30 |

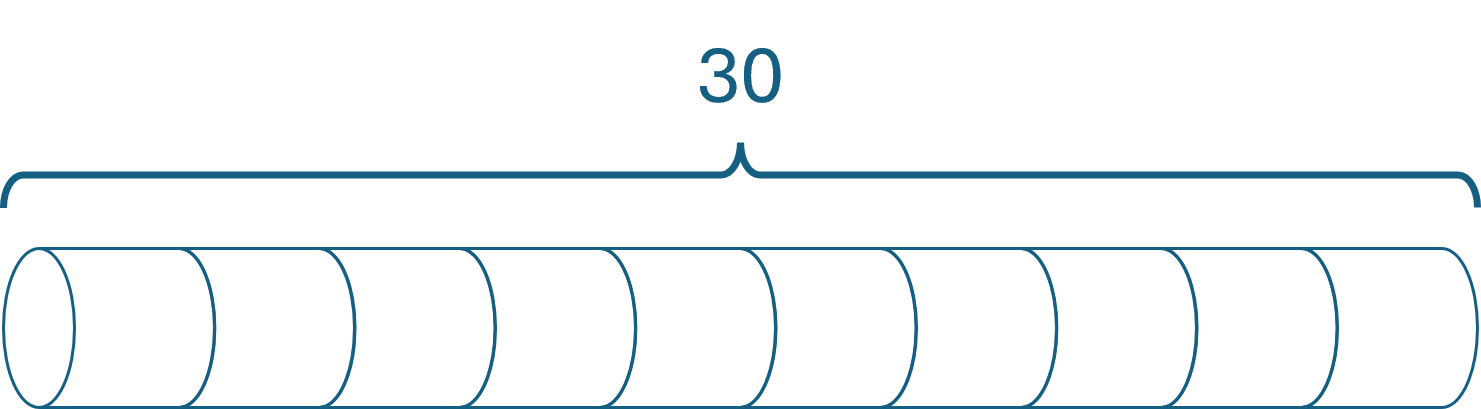

Notice that while this implies an array like [1, 5, 8, 9, 10, 17, 17, 20, 24, 30], the array is understood to be one-indexed, as is the most common case in the book. Most languages, including all three languages in this article, have zero-indexed arrays, but it turns out that we can get around the issue by adding a leading zero to the array: [0, 1, 5, 8, 9, 10, 17, 17, 20, 24, 30].

Thus, given that price array, the best you can do with a rod of length 10 is to leave it uncut, yielding a revenue of 30.

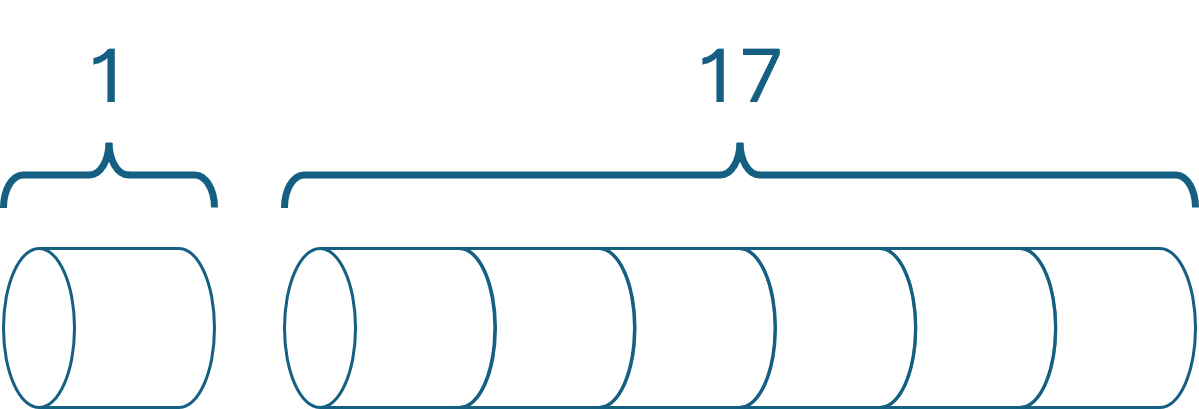

On the other hand, if you have a rod of length 7, you can cut it into two rods of lengths 1 and 6.

Another solution for a rod of length 7 is to cut it into three rods of sizes 2, 2, and 3. Both solutions yield a total revenue of 18. Thus, while more than one optimal solution exists, the algorithm given here only identifies one of them.

Extended-Bottom-Up-Cut-Rod(p, n) 1 let r[0:n] and s[1:n] be new arrays 2 r[0] = 0 3 for j = 1 to n // for increasing rod length j 4 q = -∞ 5 for i = 1 to j // i is the position of the first cut 6 if q < p[i] + r[j - i] 7 q = p[i] + r[j - i] 8 s[j] = i // best cut location so far for length j 9 r[j] = q // remember the solution value for length j 10 return r and s

Which programming language is this? It's no particular language, but rather pseudocode.

The reason that the function is called Extended-Bottom-Up-Cut-Rod is that the book pedagogically goes through a few other algorithms before arriving at this one. Going forward, I don't intend to keep that rather verbose name, but instead just call the function cut_rod, cutRod, or Rod.cut.

The p parameter is a one-indexed price (or revenue) array, as explained above, and n is a rod size (e.g. 10 or 7, reflecting the above examples).

Given the above price array and n = 10, the algorithm returns two arrays, r for maximum possible revenue for a given cut, and s for the size of the maximizing cut.

| i | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| r[i] | 0 | 1 | 5 | 8 | 10 | 13 | 17 | 18 | 22 | 25 | 30 |

| s[i] | 1 | 2 | 3 | 2 | 2 | 6 | 1 | 2 | 3 | 10 |

Such output doesn't really give a solution, but rather the raw data to find a solution. For example, for n = 10 (= i), you consult the table for (one-indexed) index 10, and see that you can get the revenue 30 from making a cut at position 10 (which effectively means no cut). For n = 7, you consult the table for index 7 and observe that you can get the total revenue 18 by making a cut at position 1. This leaves you with two rods, and you again consult the table. For n = 1, you can get the revenue 1 by making a cut at position 1; i.e. no further cut. For n = 7 - 1 = 6 you consult the table and observe that you can get the revenue 17 by making a cut at position 6, again indicating that no further cut is necessary.

Another procedure prints the solution, using the above process:

Print-Cut-Rod-Solution(p, n) 1 (r, s) = Extended-Bottom-Up-Cut-Rod(p, n) 2 while n > 0 3 print s[n] // cut location for length n 4 n = n - s[n] // length of the remainder of the rod

Again, the procedure is given as pseudocode.

How easy is it translate this algorithm into code in a real programming language? Not surprisingly, this depends on the language.

Translation to Python #

The hypothesis of the previous article is that dynamically typed languages may be more suited for implementation tasks. The dynamically typed language that I know best is Python, so let's try that.

def cut_rod(p, n): r = [0] * (n + 1) s = [0] * (n + 1) r[0] = 0 for j in range(1, n + 1): q = float('-inf') for i in range(1, j + 1): if q < p[i] + r[j - i]: q = p[i] + r[j - i] s[j] = i r[j] = q return r, s

That does, indeed, turn out to be straightforward. I had to figure out the syntax for initializing arrays, and how to represent negative infinity, but a combination of GitHub Copilot and a few web searches quickly cleared that up.

The same is true for the Print-Cut-Rod-Solution procedure.

def print_cut_rod_solution(p, n): r, s = cut_rod(p, n) while n > 0: print(s[n]) n = n - s[n]

Apart from minor syntactical differences, the pseudocode translates directly to Python.

So far, the hypothesis seems to hold. This particular dynamically typed language, at least, easily implements that particular algorithm. If we must speculate about underlying reasons, we may argue that a dynamically typed language is low on ceremony. You don't have to get side-tracked by declaring types of parameters, variables, or return values.

That, at least, is a common complaint about statically typed languages that I hear when I discuss with lovers of dynamically typed languages.

Let us, then, try to implement the rod-cutting algorithm in a statically typed language.

Translation to Java #

Together with other C-based languages, Java is infamous for requiring a high amount of ceremony to get anything done. How easy is it to translate the rod-cutting pseudocode to Java? Not surprisingly, it turns out that one has to jump through a few more hoops.

First, of course, one has to set up a code base and choose a build system. I'm not well-versed in Java development, but here I (more or less) arbitrarily chose gradle. When you're new to an ecosystem, this can be a significant barrier, but I know from decades of C# programming that tooling alleviates much of that pain. Still, a single .py file this isn't.

Apart from that, the biggest hurdle turned out to be that, as far as I can tell, Java doesn't have native tuple support. Thus, in order to return two arrays, I would have to either pick a reusable package that implements tuples, or define a custom class for that purpose. Object-oriented programmers often argue that tuples represent poor design, since a tuple doesn't really communicate the role or intent of each element. Given that the rod-cutting algorithm returns two integer arrays, I'd be inclined to agree. You can't even tell them apart based on their types. For that reason, I chose to define a class to hold the result of the algorithm.

public class RodCuttingSolution { private int[] revenues; private int[] sizes; public RodCuttingSolution(int[] revenues, int[] sizes) { this.revenues = revenues; this.sizes = sizes; } public int[] getRevenues() { return revenues; } public int[] getSizes() { return sizes; } }

Armed with this return type, the rest of the translation went smoothly.

public static RodCuttingSolution cutRod(int[] p, int n) { var r = new int[n + 1]; var s = new int[n + 1]; r[0] = 0; for (int j = 1; j <= n; j++) { var q = Integer.MIN_VALUE; for (int i = 1; i <= j; i++) { if (q < p[i] + r[j - i]) { q = p[i] + r[j - i]; s[j] = i; } } r[j] = q; } return new RodCuttingSolution(r, s); }

Granted, there's a bit more ceremony involved compared to the Python code, since one must declare the types of both input parameters and method return type. You also have to declare the type of the arrays when initializing them, and you could argue that the for loop syntax is more complicated than Python's for ... in range ... syntax. One may also complain that all the brackets and parentheses makes it harder to read the code.

While I'm used to such C-like code, I'm not immune to such criticism. I actually do find the Python code more readable.

Translating the Print-Cut-Rod-Solution pseudocode is a bit easier:

public static void printCutRodSolution(int[] p, int n) { var result = cutRod(p, n); while (n > 0) { System.out.println(result.getSizes()[n]); n = n - result.getSizes()[n]; } }

The overall structure of the code remains intact, but again we're burdened with extra ceremony. We have to declare input and output types, and call that awkward getSizes method to retrieve the array of cut sizes.

It's possible that my Java isn't perfectly idiomatic. After all, although I've read many books with Java examples over the years, I rarely write Java code. Additionally, you may argue that static methods exhibit a code smell like Feature Envy. I might agree, but the purpose of the current example is to examine how easy or difficult it is to implement a particular algorithm in various languages. Now that we have an implementation in Java, we might wish to refactor to a more object-oriented design, but that's outside the scope of this article.

Given that the rod-cutting algorithm isn't the most complex algorithm that exists, we may jump to the conclusion that Java isn't that bad compared to Python. Consider, however, how the extra ceremony on display here impacts your work if you have to implement a larger algorithm, or if you need to iterate to find an algorithm on your own.

To be clear, C# would require a similar amount of ceremony, and I don't even want to think about doing this in C.

All that said, it'd be irresponsible to extrapolate from only a few examples. You'd need both more languages and more problems before it even seems reasonable to draw any conclusions. I don't, however, intend the present example to constitute a full argument. Rather, it's an illustration of an idea that I haven't pulled out of thin air.

One of the points of Zone of Ceremony is that the degree of awkwardness isn't necessarily correlated to whether types are dynamically or statically defined. While I'm sure that I miss lots of points made by 'dynamists', this is a point that I often feel is missed by that camp. One language that exemplifies that 'beyond-ceremony' zone is F#.

Translation to F# #

If I'm right, we should be able to translate the rod-cutting pseudocode to F# with approximately the same amount of trouble than when translating to Python. How do we fare?

let cut (p : _ array) n = let r = Array.zeroCreate (n + 1) let s = Array.zeroCreate (n + 1) r[0] <- 0 for j = 1 to n do let mutable q = Int32.MinValue for i = 1 to j do if q < p[i] + r[j - i] then q <- p[i] + r[j - i] s[j] <- i r[j] <- q r, s

Fairly well, as it turns out, although we do have to annotate p by indicating that it's an array. Still, the underscore in front of the array keyword indicates that we're happy to let the compiler infer the type of array (which is int array).

(We can get around that issue by writing Array.item i p instead of p[i], but that's verbose in a different way.)

Had we chosen to instead implement the algorithm based on an input list or map, we wouldn't have needed the type hint. One could therefore argue that the reason that the hint is even required is because arrays aren't the most idiomatic data structure for a functional language like F#.

Otherwise, I don't find that this translation was much harder than translating to Python, and I personally prefer for j = 1 to n do over for j in range(1, n + 1):.

We also need to add the mutable keyword to allow q to change during the loop. You could argue that this is another example of additional ceremony, While I agree, it's not much related to static versus dynamic typing, but more to how values are immutable by default in F#. If I recall correctly, JavaScript similarly distinguishes between let, var, and const.

Translating Print-Cut-Rod-Solution requires, again due to values being immutable by default, a bit more effort than Python, but not much:

let printSolution p n = let _, s = cut p n let mutable n = n while n > 0 do printfn "%i" s[n] n <- n - s[n]

I had to shadow the n parameter with a mutable variable to stay as close to the pseudocode as possible. Again, one may argue that the overall problem here isn't the static type system, but that programming based on mutation isn't idiomatic for F# (or other functional programming languages). As you'll see in the next article, a more idiomatic implementation is even simpler than this one.

Notice, however, that the printSolution action requires no type declarations or annotations.

Let's see it all in use:

> let p = [|0; 1; 5; 8; 9; 10; 17; 17; 20; 24; 30|];; val p: int array = [|0; 1; 5; 8; 9; 10; 17; 17; 20; 24; 30|] > Rod.printSolution p 7;; 1 6

This little interactive session reproduces the example illustrated in the beginning of this article, when given the price array from the book and a rod of size 7, the solution printed indicates cuts at positions 1 and 6.

I find it telling that the translation to F# is on par with the translation to Python, even though the structure of the pseudocode is quite imperative.

Conclusion #

You could, perhaps, say that if your mindset is predominantly imperative, implementing an algorithm using Python is likely easier than both F# or Java. If, on the other hand, you're mostly in an implementation mindset, but not strongly attached to whether the implementation should be imperative, object-oriented, or functional, I'd offer the conjecture that a language like F# is as implementation-friendly as a language like Python.

If, on the other hand, you're more focused on encapsulating and documenting how an existing API works, perhaps that shift of perspective suggests another evaluation of dynamically versus statically typed languages.

In any case, the F# code shown here is hardly idiomatic, so it might be illuminating to see what happens if we refactor it.

Next: Encapsulating rod-cutting.

A restaurant sandwich

An Impureim Sandwich example in C#.

When learning functional programming (FP) people often struggle with how to organize code. How do you discern and maintain purity? How do you do Dependency Injection in FP? What does a functional architecture look like?

A common FP design pattern is the Impureim Sandwich. The entry point of an application is always impure, so you push all impure actions to the boundary of the system. This is also known as Functional Core, Imperative Shell. If you have a micro-operation-based architecture, which includes all web-based systems, you can often get by with a 'sandwich'. Perform impure actions to collect all the data you need. Pass all data to a pure function. Finally, use impure actions to handle the referentially transparent return value from the pure function.

No design pattern applies universally, and neither does this one. In my experience, however, it's surprisingly often possible to apply this architecture. We're far past the Pareto principle's 80 percent.

Examples may help illustrate the pattern, as well as explore its boundaries. In this article you'll see how I refactored an entry point of a REST API, specifically the PUT handler in the sample code base that accompanies Code That Fits in Your Head.

Starting point #

As discussed in the book, the architecture of the sample code base is, in fact, Functional Core, Imperative Shell. This isn't, however, the main theme of the book, and the code doesn't explicitly apply the Impureim Sandwich. In spirit, that's actually what's going on, but it isn't clear from looking at the code. This was a deliberate choice I made, because I wanted to highlight other software engineering practices. This does have the effect, though, that the Impureim Sandwich is invisible.

For example, the book follows the 80/24 rule closely. This was a didactic choice on my part. Most code bases I've seen in the wild have far too big methods, so I wanted to hammer home the message that it's possible to develop and maintain a non-trivial code base with small code blocks. This meant, however, that I had to split up HTTP request handlers (in ASP.NET known as action methods on Controllers).

The most complex HTTP handler is the one that handles PUT requests for reservations. Clients use this action when they want to make changes to a restaurant reservation.

The action method actually invoked by an HTTP request is this Put method:

[HttpPut("restaurants/{restaurantId}/reservations/{id}")] public async Task<ActionResult> Put( int restaurantId, string id, ReservationDto dto) { if (dto is null) throw new ArgumentNullException(nameof(dto)); if (!Guid.TryParse(id, out var rid)) return new NotFoundResult(); Reservation? reservation = dto.Validate(rid); if (reservation is null) return new BadRequestResult(); var restaurant = await RestaurantDatabase .GetRestaurant(restaurantId).ConfigureAwait(false); if (restaurant is null) return new NotFoundResult(); return await TryUpdate(restaurant, reservation).ConfigureAwait(false); }

Since I, for pedagogical reasons, wanted to fit each method inside an 80x24 box, I made a few somewhat unnatural design choices. The above code is one of them. While I don't consider it completely indefensible, this method does a bit of up-front input validation and verification, and then delegates execution to the TryUpdate method.

This may seem all fine and dandy until you realize that the only caller of TryUpdate is that Put method. A similar thing happens in TryUpdate: It calls a method that has only that one caller. We may try to inline those two methods to see if we can spot the Impureim Sandwich.

Inlined Transaction Script #

Inlining those two methods leave us with a larger, Transaction Script-like entry point:

[HttpPut("restaurants/{restaurantId}/reservations/{id}")] public async Task<ActionResult> Put( int restaurantId, string id, ReservationDto dto) { if (dto is null) throw new ArgumentNullException(nameof(dto)); if (!Guid.TryParse(id, out var rid)) return new NotFoundResult(); Reservation? reservation = dto.Validate(rid); if (reservation is null) return new BadRequestResult(); var restaurant = await RestaurantDatabase .GetRestaurant(restaurantId).ConfigureAwait(false); if (restaurant is null) return new NotFoundResult(); using var scope = new TransactionScope( TransactionScopeAsyncFlowOption.Enabled); var existing = await Repository .ReadReservation(restaurant.Id, reservation.Id) .ConfigureAwait(false); if (existing is null) return new NotFoundResult(); var reservations = await Repository .ReadReservations(restaurant.Id, reservation.At) .ConfigureAwait(false); reservations = reservations.Where(r => r.Id != reservation.Id).ToList(); var now = Clock.GetCurrentDateTime(); var ok = restaurant.MaitreD.WillAccept( now, reservations, reservation); if (!ok) return NoTables500InternalServerError(); await Repository.Update(restaurant.Id, reservation) .ConfigureAwait(false); scope.Complete(); return new OkObjectResult(reservation.ToDto()); }

While I've definitely seen longer methods in the wild, this variation is already so big that it no longer fits on my laptop screen. I have to scroll up and down to read the whole thing. When looking at the bottom of the method, I have to remember what was at the top, because I can no longer see it.

A major point of Code That Fits in Your Head is that what limits programmer productivity is human cognition. If you have to scroll your screen because you can't see the whole method at once, does that fit in your brain? Chances are, it doesn't.

Can you spot the Impureim Sandwich now?

If you can't, that's understandable. It's not really clear because there's quite a few small decisions being made in this code. You could argue, for example, that this decision is referentially transparent:

if (existing is null) return new NotFoundResult();

These two lines of code are deterministic and have no side effects. The branch only returns a NotFoundResult when existing is null. Additionally, these two lines of code are surrounded by impure actions both before and after. Is this the Sandwich, then?

No, it's not. This is how idiomatic imperative code looks. To borrow a diagram from another article, pure and impure code is interleaved without discipline:

Even so, the above Put method implements the Functional Core, Imperative Shell architecture. The Put method is the Imperative Shell, but where's the Functional Core?

Shell perspective #

One thing to be aware of is that when looking at the Imperative Shell code, the Functional Core is close to invisible. This is because it's typically only a single function call.

In the above Put method, this is the Functional Core:

var ok = restaurant.MaitreD.WillAccept( now, reservations, reservation); if (!ok) return NoTables500InternalServerError();

It's only a few lines of code, and had I not given myself the constraint of staying within an 80 character line width, I could have instead laid it out like this and inlined the ok flag:

if (!restaurant.MaitreD.WillAccept(now, reservations, reservation)) return NoTables500InternalServerError();

Now that I try this, in fact, it turns out that this actually still stays within 80 characters. To be honest, I don't know exactly why I had that former code instead of this, but perhaps I found the latter alternative too dense. Or perhaps I simply didn't think of it. Code is rarely perfect. Usually when I revisit a piece of code after having been away from it for some time, I find some thing that I want to change.

In any case, that's beside the point. What matters here is that when you're looking through the Imperative Shell code, the Functional Core looks insignificant. Blink and you'll miss it. Even if we ignore all the other small pure decisions (the if statements) and pretend that we already have an Impureim Sandwich, from this viewpoint, the architecture looks like this:

It's tempting to ask, then: What's all the fuss about? Why even bother?

This is a natural experience for a code reader. After all, if you don't know a code base well, you often start at the entry point to try to understand how the application handles a certain stimulus. Such as an HTTP PUT request. When you do that, you see all of the Imperative Shell code before you see the Functional Core code. This could give you the wrong impression about the balance of responsibility.

After all, code like the above Put method has inlined most of the impure code so that it's right in your face. Granted, there's still some code hiding behind, say, Repository.ReadReservations, but a substantial fraction of the imperative code is visible in the method.

On the other hand, the Functional Core is just a single function call. If we inlined all of that code, too, the picture might rather look like this:

This obviously depends on the de-facto ratio of pure to imperative code. In any case, inlining the pure code is a thought experiment only, because the whole point of functional architecture is that a referentially transparent function fits in your head. Regardless of the complexity and amount of code hiding behind that MaitreD.WillAccept function, the return value is equal to the function call. It's the ultimate abstraction.

Standard combinators #

As I've already suggested, the inlined Put method looks like a Transaction Script. The cyclomatic complexity fortunately hovers on the magical number seven, and branching is exclusively organized around Guard Clauses. Apart from that, there are no nested if statements or for loops.

Apart from the Guard Clauses, this mostly looks like a procedure that runs in a straight line from top to bottom. The exception is all those small conditionals that may cause the procedure to exit prematurely. Conditions like this:

if (!Guid.TryParse(id, out var rid)) return new NotFoundResult();

or

if (reservation is null) return new BadRequestResult();

Such checks occur throughout the method. Each of them are actually small pure islands amidst all the imperative code, but each is ad hoc. Each checks if it's possible for the procedure to continue, and returns a kind of error value if it decides that it's not.

Is there a way to model such 'derailments' from the main flow?

If you've ever encountered Scott Wlaschin's Railway Oriented Programming you may already see where this is going. Railway-oriented programming is a fantastic metaphor, because it gives you a way to visualize that you have, indeed, a main track, but then you have a side track that you may shuffle some trains too. And once the train is on the side track, it can't go back to the main track.

That's how the Either monad works. Instead of all those ad-hoc if statements, we should be able to replace them with what we may call standard combinators. The most important of these combinators is monadic bind. Composing a Transaction Script like Put with standard combinators will 'hide away' those small decisions, and make the Sandwich nature more apparent.

If we had had pure code, we could just have composed Either-valued functions. Unfortunately, most of what's going on in the Put method happens in a Task-based context. Thankfully, Either is one of those monads that nest well, implying that we can turn the combination into a composed TaskEither monad. The linked article shows the core TaskEither SelectMany implementations.

The way to encode all those small decisions between 'main track' or 'side track', then, is to wrap 'naked' values in the desired Task<Either<L, R>> container:

Task.FromResult(id.TryParseGuid().OnNull((ActionResult)new NotFoundResult()))

This little code snippet makes use of a few small building blocks that we also need to introduce. First, .NET's standard TryParse APIs don't, compose, but since they're isomorphic to Maybe-valued functions, you can write an adapter like this:

public static Guid? TryParseGuid(this string candidate) { if (Guid.TryParse(candidate, out var guid)) return guid; else return null; }

In this code base, I treat nullable reference types as equivalent to the Maybe monad, but if your language doesn't have that feature, you can use Maybe instead.

To implement the Put method, however, we don't want nullable (or Maybe) values. We need Either values, so we may introduce a natural transformation:

public static Either<L, R> OnNull<L, R>(this R? candidate, L left) where R : struct { if (candidate.HasValue) return Right<L, R>(candidate.Value); return Left<L, R>(left); }

In Haskell one might just make use of the built-in Maybe catamorphism:

ghci> maybe (Left "boo!") Right $ Just 123 Right 123 ghci> maybe (Left "boo!") Right $ Nothing Left "boo!"

Such conversions from Maybe to Either hover just around the Fairbairn threshold, but since we are going to need it more than once, it makes sense to add a specialized OnNull transformation to the C# code base. The one shown here handles nullable value types, but the code base also includes an overload that handles nullable reference types. It's almost identical.

Support for query syntax #

There's more than one way to consume monadic values in C#. While many C# developers like LINQ, most seem to prefer the familiar method call syntax; that is, just call the Select, SelectMany, and Where methods as the normal extension methods they are. Another option, however, is to use query syntax. This is what I'm aiming for here, since it'll make it easier to spot the Impureim Sandwich.

You'll see the entire sandwich later in the article. Before that, I'll highlight details and explain how to implement them. You can always scroll down to see the end result, and then scroll back here, if that's more to your liking.

The sandwich starts by parsing the id into a GUID using the above building blocks:

var sandwich = from rid in Task.FromResult(id.TryParseGuid().OnNull((ActionResult)new NotFoundResult()))

It then immediately proceeds to Validate (parse, really) the dto into a proper Domain Model:

from reservation in dto.Validate(rid).OnNull((ActionResult)new BadRequestResult())

Notice that the second from expression doesn't wrap the result with Task.FromResult. How does that work? Is the return value of dto.Validate already a Task? No, this works because I added 'degenerate' SelectMany overloads:

public static Task<Either<L, R1>> SelectMany<L, R, R1>( this Task<Either<L, R>> source, Func<R, Either<L, R1>> selector) { return source.SelectMany(x => Task.FromResult(selector(x))); } public static Task<Either<L, R1>> SelectMany<L, U, R, R1>( this Task<Either<L, R>> source, Func<R, Either<L, U>> k, Func<R, U, R1> s) { return source.SelectMany(x => k(x).Select(y => s(x, y))); }

Notice that the selector only produces an Either<L, R1> value, rather than Task<Either<L, R1>>. This allows query syntax to 'pick up' the previous value (rid, which is 'really' a Task<Either<ActionResult, Guid>>) and continue with a function that doesn't produce a Task, but rather just an Either value. The first of these two overloads then wraps that Either value and wraps it with Task.FromResult. The second overload is just the usual ceremony that enables query syntax.

Why, then, doesn't the sandwich use the same trick for rid? Why does it explicitly call Task.FromResult?

As far as I can tell, this is because of type inference. It looks as though the C# compiler infers the monad's type from the first expression. If I change the first expression to

from rid in id.TryParseGuid().OnNull((ActionResult)new NotFoundResult())

the compiler thinks that the query expression is based on Either<L, R>, rather than Task<Either<L, R>>. This means that once we run into the first Task value, the entire expression no longer works.

By explicitly wrapping the first expression in a Task, the compiler correctly infers the monad we'd like it to. If there's a more elegant way to do this, I'm not aware of it.

Values that don't fail #

The sandwich proceeds to query various databases, using the now-familiar OnNull combinators to transform nullable values to Either values.

from restaurant in RestaurantDatabase .GetRestaurant(restaurantId) .OnNull((ActionResult)new NotFoundResult()) from existing in Repository .ReadReservation(restaurant.Id, reservation.Id) .OnNull((ActionResult)new NotFoundResult())

This works like before because both GetRestaurant and ReadReservation are queries that may fail to return a value. Here's the interface definition of ReadReservation:

Task<Reservation?> ReadReservation(int restaurantId, Guid id);

Notice the question mark that indicates that the result may be null.

The GetRestaurant method is similar.

The next query that the sandwich has to perform, however, is different. The return type of the ReadReservations method is Task<IReadOnlyCollection<Reservation>>. Notice that the type contained in the Task is not nullable. Barring database connection errors, this query can't fail. If it finds no data, it returns an empty collection.

Since the value isn't nullable, we can't use OnNull to turn it into a Task<Either<L, R>> value. We could try to use the Right creation function for that.

public static Either<L, R> Right<L, R>(R right) { return Either<L, R>.Right(right); }

This works, but is awkward:

from reservations in Repository .ReadReservations(restaurant.Id, reservation.At) .Traverse(rs => Either.Right<ActionResult, IReadOnlyCollection<Reservation>>(rs))

The problem with calling Either.Right is that while the compiler can infer which type to use for R, it doesn't know what the L type is. Thus, we have to tell it, and we can't tell it what L is without also telling it what R is. Even though it already knows that.

In such scenarios, the F# compiler can usually figure it out, and GHC always can (unless you add some exotic language extensions to your code). C# doesn't have any syntax that enables you to tell the compiler about only the type that it doesn't know about, and let it infer the rest.

All is not lost, though, because there's a little trick you can use in cases such as this. You can let the C# compiler infer the R type so that you only have to tell it what L is. It's a two-stage process. First, define an extension method on R:

public static RightBuilder<R> ToRight<R>(this R right) { return new RightBuilder<R>(right); }

The only type argument on this ToRight method is R, and since the right parameter is of the type R, the C# compiler can always infer the type of R from the type of right.

What's RightBuilder<R>? It's this little auxiliary class:

public sealed class RightBuilder<R> { private readonly R right; public RightBuilder(R right) { this.right = right; } public Either<L, R> WithLeft<L>() { return Either.Right<L, R>(right); } }

The code base for Code That Fits in Your Head was written on .NET 3.1, but today you could have made this a record instead. The only purpose of this class is to break the type inference into two steps so that the R type can be automatically inferred. In this way, you only need to tell the compiler what the L type is.

from reservations in Repository .ReadReservations(restaurant.Id, reservation.At) .Traverse(rs => rs.ToRight().WithLeft<ActionResult>())

As indicated, this style of programming isn't language-neutral. Even if you find this little trick neat, I'd much rather have the compiler just figure it out for me. The entire sandwich query expression is already defined as working with Task<Either<ActionResult, R>>, and the L type can't change like the R type can. Functional compilers can figure this out, and while I intend this article to show object-oriented programmers how functional programming sometimes work, I don't wish to pretend that it's a good idea to write code like this in C#. I've covered that ground already.

Not surprisingly, there's a mirror-image ToLeft/WithRight combo, too.

Working with Commands #

The ultimate goal with the Put method is to modify a row in the database. The method to do that has this interface definition:

Task Update(int restaurantId, Reservation reservation);

I usually call that non-generic Task class for 'asynchronous void' when explaining it to non-C# programmers. The Update method is an asynchronous Command.

Task and void aren't legal values for use with LINQ query syntax, so you have to find a way to work around that limitation. In this case I defined a local helper method to make it look like a Query:

async Task<Reservation> RunUpdate(int restaurantId, Reservation reservation, TransactionScope scope) { await Repository.Update(restaurantId, reservation).ConfigureAwait(false); scope.Complete(); return reservation; }

It just echoes back the reservation parameter once the Update has completed. This makes it composable in the larger query expression.

You'll probably not be surprised when I tell you that both F# and Haskell handle this scenario gracefully, without requiring any hoop-jumping.

Full sandwich #

Those are all the building block. Here's the full sandwich definition, colour-coded like the examples in Impureim sandwich.

Task<Either<ActionResult, OkObjectResult>> sandwich = from rid in Task.FromResult( id.TryParseGuid().OnNull((ActionResult)new NotFoundResult())) from reservation in dto.Validate(rid).OnNull( (ActionResult)new BadRequestResult()) from restaurant in RestaurantDatabase .GetRestaurant(restaurantId) .OnNull((ActionResult)new NotFoundResult()) from existing in Repository .ReadReservation(restaurant.Id, reservation.Id) .OnNull((ActionResult)new NotFoundResult()) from reservations in Repository .ReadReservations(restaurant.Id, reservation.At) .Traverse(rs => rs.ToRight().WithLeft<ActionResult>()) let now = Clock.GetCurrentDateTime() let reservations2 = reservations.Where(r => r.Id != reservation.Id) let ok = restaurant.MaitreD.WillAccept( now, reservations2, reservation) from reservation2 in ok ? reservation.ToRight().WithLeft<ActionResult>() : NoTables500InternalServerError().ToLeft().WithRight<Reservation>() from reservation3 in RunUpdate(restaurant.Id, reservation2, scope) .Traverse(r => r.ToRight().WithLeft<ActionResult>()) select new OkObjectResult(reservation3.ToDto());

As is evident from the colour-coding, this isn't quite a sandwich. The structure is honestly more accurately depicted like this:

As I've previously argued, while the metaphor becomes strained, this still works well as a functional-programming architecture.

As defined here, the sandwich value is a Task that must be awaited.

Either<ActionResult, OkObjectResult> either = await sandwich.ConfigureAwait(false); return either.Match(x => x, x => x);

By awaiting the task, we get an Either value. The Put method, on the other hand, must return an ActionResult. How do you turn an Either object into a single object?

By pattern matching on it, as the code snippet shows. The L type is already an ActionResult, so we return it without changing it. If C# had had a built-in identity function, I'd used that, but idiomatically, we instead use the x => x lambda expression.

The same is the case for the R type, because OkObjectResult inherits from ActionResult. The identity expression automatically performs the type conversion for us.

This, by the way, is a recurring pattern with Either values that I run into in all languages. You've essentially computed an Either<T, T>, with the same type on both sides, and now you just want to return whichever T value is contained in the Either value. You'd think this is such a common pattern that Haskell has a nice abstraction for it, but even Hoogle fails to suggest a commonly-accepted function that does this. Apparently, either id id is considered below the Fairbairn threshold, too.

Conclusion #

This article presents an example of a non-trivial Impureim Sandwich. When I introduced the pattern, I gave a few examples. I'd deliberately chosen these examples to be simple so that they highlighted the structure of the idea. The downside of that didactic choice is that some commenters found the examples too simplistic. Therefore, I think that there's value in going through more complex examples.

The code base that accompanies Code That Fits in Your Head is complex enough that it borders on the realistic. It was deliberately written that way, and since I assume that the code base is familiar to readers of the book, I thought it'd be a good resource to show how an Impureim Sandwich might look. I explicitly chose to refactor the Put method, since it's easily the most complicated process in the code base.

The benefit of that code base is that it's written in a programming language that reach a large audience. Thus, for the reader curious about functional programming I thought that this could also be a useful introduction to some intermediate concepts.

As I've commented along the way, however, I wouldn't expect anyone to write production C# code like this. If you're able to do this, you're also able to do it in a language better suited for this programming paradigm.

Implementation and usage mindsets

A one-dimensional take on the enduring static-versus-dynamic debate.

It recently occurred to me that one possible explanation for the standing, and probably never-ending, debate about static versus dynamic types may be that each camp have disjoint perspectives on the kinds of problems their favourite languages help them solve. In short, my hypothesis is that perhaps lovers of dynamically-typed languages often approach a problem from an implementation mindset, whereas proponents of static types emphasize usage.

I'll expand on this idea here, and then provide examples in two subsequent articles.

Background #

For years I've struggled to understand 'the other side'. While I'm firmly in the statically typed camp, I realize that many highly skilled programmers and thought leaders enjoy, or get great use out of, dynamically typed languages. This worries me, because it might indicate that I'm stuck in a local maximum.

In other words, just because I, personally, prefer static types, it doesn't follow that static types are universally better than dynamic types.

In reality, it's probably rather the case that we're dealing with a false dichotomy, and that the problem is really multi-dimensional.

"Let me stop you right there: I don't think there is a real dynamic typing versus static typing debate.

"What such debates normally are is language X vs language Y debates (where X happens to be dynamic and Y happens to be static)."

Even so, I can't help thinking about such things. Am I missing something?

For the past few years, I've dabbled with Python to see what writing in a popular dynamically typed language is like. It's not a bad language, and I can clearly see how it's attractive. Even so, I'm still frustrated every time I return to some Python code after a few weeks or more. The lack of static types makes it hard for me to pick up, or revisit, old code.

A question of perspective? #

Whenever I run into a difference of opinion, I often interpret it as a difference in perspective. Perhaps it's my academic background as an economist, but I consider it a given that people have different motivations, and that incentives influence actions.

A related kind of analysis deals with problem definitions. Are we even trying to solve the same problem?

I've discussed such questions before, but in a different context. Here, it strikes me that perhaps programmers who gravitate toward dynamically typed languages are focused on another problem than the other group.

Again, I'd like to emphasize that I don't consider the world so black and white in reality. Some developers straddle the two camps, and as the above Kevlin Henney quote suggests, there really aren't only two kinds of languages. C and Haskell are both statically typed, but the similarities stop there. Likewise, I don't know if it's fair to put JavaScript and Clojure in the same bucket.

That said, I'd still like to offer the following hypothesis, in the spirit that although all models are wrong, some are useful.

The idea is that if you're trying to solve a problem related to implementation, dynamically typed languages may be more suitable. If you're trying to implement an algorithm, or even trying to invent one, a dynamic language seems useful. One year, I did a good chunk of Advent of Code in Python, and didn't find it harder than in Haskell. (I ultimately ran out of steam for reasons unrelated to Python.)

On the other hand, if your main focus may be usage of your code, perhaps you'll find a statically typed language more useful. At least, I do. I can use the static type system to communicate how my APIs work. How to instantiate my classes. How to call my functions. How return values are shaped. In other words, the preconditions, invariants, and postconditions of my reusable code: Encapsulation.

Examples #

Some examples may be in order. In the next two articles, I'll first examine how easy it is to implement an algorithm in various programming languages. Then I'll discuss how to encapsulate that algorithm.

The articles will both discuss the rod-cutting problem from Introduction to Algorithms, but I'll introduce the problem in the next article.

Conclusion #

I'd be naive if I believed that a single model can fully explain why some people prefer dynamically typed languages, and others rather like statically typed languages. Even so, suggesting a model helps me understand how to analyze problems.

My hypothesis is that dynamically typed languages may be suitable for implementing algorithms, whereas statically typed languages offer better encapsulation.

This may be used as a heuristic for 'picking the right tool for the job'. If I need to suss out an algorithm, perhaps I should do it in Python. If, on the other hand, I need to publish a reusable library, perhaps Haskell is a better choice.

Next: Implementing rod-cutting.

Short-circuiting an asynchronous traversal

Another C# example.

This article is a continuation of an earlier post about refactoring a piece of imperative code to a functional architecture. It all started with a Stack Overflow question, but read the previous article, and you'll be up to speed.

Imperative outset #

To begin, consider this mostly imperative code snippet:

var storedItems = new List<ShoppingListItem>(); var failedItems = new List<ShoppingListItem>(); var state = (storedItems, failedItems, hasError: false); foreach (var item in itemsToUpdate) { OneOf<ShoppingListItem, NotFound, Error> updateResult = await UpdateItem(item, dbContext); state = updateResult.Match<(List<ShoppingListItem>, List<ShoppingListItem>, bool)>( storedItem => { storedItems.Add(storedItem); return state; }, notFound => { failedItems.Add(item); return state; }, error => { state.hasError = true; return state; } ); if (state.hasError) return Results.BadRequest(); } await dbContext.SaveChangesAsync(); return Results.Ok(new BulkUpdateResult([.. storedItems], [.. failedItems]));

I'll recap a few points from the previous article. Apart from one crucial detail, it's similar to the other post. One has to infer most of the types and APIs, since the original post didn't show more code than that. If you're used to engaging with Stack Overflow questions, however, it's not too hard to figure out what most of the moving parts do.

The most non-obvious detail is that the code uses a library called OneOf, which supplies general-purpose, but rather abstract, sum types. Both the container type OneOf, as well as the two indicator types NotFound and Error are defined in that library.

The Match method implements standard Church encoding, which enables the code to pattern-match on the three alternative values that UpdateItem returns.

One more detail also warrants an explicit description: The itemsToUpdate object is an input argument of the type IEnumerable<ShoppingListItem>.

The major difference from before is that now the update process short-circuits on the first Error. If an error occurs, it stops processing the rest of the items. In that case, it now returns Results.BadRequest(), and it doesn't save the changes to dbContext.

The implementation makes use of mutable state and undisciplined I/O. How do you refactor it to a more functional design?

Short-circuiting traversal #

The standard Traverse function isn't lazy, or rather, it does consume the entire input sequence. Even various Haskell data structures I investigated do that. And yes, I even tried to traverse ListT. If there's a data structure that you can traverse with deferred execution of I/O-bound actions, I'm not aware of it.