ploeh blog danish software design

The Reader monad

Normal functions form monads. An article for object-oriented programmers.

This article is an instalment in an article series about monads. A previous article described the Reader functor. As is the case with many (but not all) functors, Readers also form monads.

This article continues where the Reader functor article stopped. It uses the same code base.

Flatten #

A monad must define either a bind or join function, although you can use other names for both of these functions. Flatten is in my opinion a more intuitive name than join, since a monad is really just a functor that you can flatten. Flattening is relevant if you have a nested functor; in this case a Reader within a Reader. You can flatten such a nested Reader with a Flatten function:

public static IReader<R, A> Flatten<R, A>(
    this IReader<R, IReader<R, A>> source)
{
    return new FlattenReader<R, A>(source);
}

private class FlattenReader<R, A> : IReader<R, A>
{
    private readonly IReader<R, IReader<R, A>> source;

    public FlattenReader(IReader<R, IReader<R, A>> source)
    {
        this.source = source;
    }

    public A Run(R environment)
    {
        IReader<R, A> newReader = source.Run(environment);
        return newReader.Run(environment);
    }
}

Since the source Reader is nested, calling its Run method once returns a newReader. You can Run that newReader one more time to get an A value to return.

You could easily chain the two calls to Run together, one after the other. That would make the code terser, but here I chose to do it in two explicit steps in order to show what's going on.

As in the previous article about the State monad, a lot of ceremony is required because this variation of the Reader monad is defined with an interface. You could also define the Reader monad on a 'raw' function of the type Func<R, A>, in which case Flatten would be simpler:

public static Func<R, A> Flatten<R, A>(this Func<R, Func<R, A>> source)
{
    return environment => source(environment)(environment);
}

In this variation source is a function, so you can call it with environment, which returns another function that you can again call with environment. This produces an A value for the function to return.

SelectMany #

When you have Flatten you can always define SelectMany (monadic bind) like this:

public static IReader<R, B> SelectMany<R, A, B>(
    this IReader<R, A> source,
    Func<A, IReader<R, B>> selector)
{
    return source.Select(selector).Flatten();
}

First use functor-based mapping. Since the selector returns a Reader, this mapping produces a Reader within a Reader. That's exactly the situation that Flatten addresses.

The above SelectMany example works with the IReader<R, A> interface, but the 'raw' function version has the exact same implementation:

public static Func<R, B> SelectMany<R, A, B>(
    this Func<R, A> source,
    Func<A, Func<R, B>> selector)
{
    return source.Select(selector).Flatten();
}

Only the method declaration differs.
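To make the 'raw' function variation concrete, here's a minimal, self-contained sketch. The Select function isn't shown in this article (it belongs to the Reader functor article), so the version below is an assumed implementation for Func<R, A>. The FunctionReader and Example class names, as well as the Describe method, are hypothetical and only serve the illustration.

```csharp
using System;

public static class FunctionReader
{
    // Assumed functor map for raw functions: run the Reader,
    // then transform its result.
    public static Func<R, B> Select<R, A, B>(
        this Func<R, A> source,
        Func<A, B> selector)
    {
        return environment => selector(source(environment));
    }

    // Collapse a Reader within a Reader by supplying the same
    // environment to both layers.
    public static Func<R, A> Flatten<R, A>(this Func<R, Func<R, A>> source)
    {
        return environment => source(environment)(environment);
    }

    // Monadic bind: map, then flatten.
    public static Func<R, B> SelectMany<R, A, B>(
        this Func<R, A> source,
        Func<A, Func<R, B>> selector)
    {
        return source.Select(selector).Flatten();
    }
}

public static class Example
{
    // Hypothetical usage: both steps read the same string environment.
    public static string Describe(string name)
    {
        Func<string, int> length = s => s.Length;
        Func<int, Func<string, string>> describe =
            n => s => $"'{s}' has {n} characters";

        return length.SelectMany(describe)(name);
    }
}
```

Calling Example.Describe("Reader") runs both functions against the same string and returns 'Reader' has 6 characters. The second function never receives the environment as an explicit argument from the first; SelectMany threads it through.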

Query syntax #

Monads also enable query syntax in C# (just like they enable other kinds of syntactic sugar in languages like F# and Haskell). As outlined in the monad introduction, however, you must add a special SelectMany overload:

public static IReader<R, T1> SelectMany<R, T, U, T1>(
    this IReader<R, T> source,
    Func<T, IReader<R, U>> k,
    Func<T, U, T1> s)
{
    return source.SelectMany(x => k(x).Select(y => s(x, y)));
}

As already predicted in the monad introduction, this boilerplate overload is always implemented in the same way. Only the signature changes. With it, you could write an expression like this nonsense:

IReader<int, bool> r =
    from dur in new MinutesReader()
    from b in new Thingy(dur)
    select b;

MinutesReader was already shown in the article Reader as a contravariant functor. I couldn't come up with a good name for another reader, so I went with Dan North's naming convention that if you don't yet know what to call a class, method, or function, don't pretend that you know. Be explicit that you don't know.

Here it is, for the sake of completeness:

public sealed class Thingy : IReader<int, bool>
{
    private readonly TimeSpan timeSpan;

    public Thingy(TimeSpan timeSpan)
    {
        this.timeSpan = timeSpan;
    }

    public bool Run(int environment)
    {
        return new TimeSpan(timeSpan.Ticks * environment).TotalDays < 1;
    }
}

I'm not claiming that this class makes sense. These articles are deliberately kept abstract in order to focus on structure and behaviour, rather than on practical application.
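Query syntax isn't tied to the IReader interface. As a sketch under stated assumptions, the following shows the same boilerplate SelectMany overload defined on 'raw' Func<R, A> values. The FunctionReaderQuery and QueryExample classes, the Summarise method, and the Select implementation are all hypothetical stand-ins rather than code from the article's code base.

```csharp
using System;

public static class FunctionReaderQuery
{
    // Assumed functor map for raw functions.
    public static Func<R, B> Select<R, A, B>(
        this Func<R, A> source,
        Func<A, B> selector)
    {
        return environment => selector(source(environment));
    }

    // Monadic bind, written out directly instead of via Flatten.
    public static Func<R, B> SelectMany<R, A, B>(
        this Func<R, A> source,
        Func<A, Func<R, B>> selector)
    {
        return environment => selector(source(environment))(environment);
    }

    // The overload that the C# compiler looks for when it translates
    // a query expression with more than one 'from' clause.
    public static Func<R, T1> SelectMany<R, T, U, T1>(
        this Func<R, T> source,
        Func<T, Func<R, U>> k,
        Func<T, U, T1> s)
    {
        return source.SelectMany(x => k(x).Select(y => s(x, y)));
    }
}

public static class QueryExample
{
    // Hypothetical usage: two Readers over the same string environment,
    // combined with query syntax.
    public static string Summarise(string text)
    {
        Func<string, int> length = s => s.Length;
        Func<string, string> upper = s => s.ToUpperInvariant();

        Func<string, string> query =
            from n in length
            from u in upper
            select $"{u} ({n})";

        return query(text);
    }
}
```

QueryExample.Summarise("abc") returns ABC (3). Both length and upper are run with the same input, which is what makes the environment 'ambient' in a Reader computation.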

Return #

Apart from flattening or monadic bind, a monad must also define a way to put a normal value into the monad. Conceptually, I call this function return (because that's the name that Haskell uses):

public static IReader<R, A> Return<R, A>(A a)
{
    return new ReturnReader<R, A>(a);
}

private class ReturnReader<R, A> : IReader<R, A>
{
    private readonly A a;

    public ReturnReader(A a)
    {
        this.a = a;
    }

    public A Run(R environment)
    {
        return a;
    }
}

This implementation returns the a value and completely ignores the environment. You can do the same with a 'naked' function.
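For a 'naked' function, a minimal sketch of the same idea could look like the following. The NakedReader class name is hypothetical; only the shape of Return matters.

```csharp
using System;

public static class NakedReader
{
    // Put a plain value into the Reader monad. The resulting function
    // ignores whatever environment it's called with.
    public static Func<R, A> Return<R, A>(A a)
    {
        return _ => a;
    }
}
```

With this in place, NakedReader.Return<int, string>("foo") is a function that returns "foo" for any int environment.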

Left identity #

We need to identify the return function in order to examine the monad laws. Now that this is accomplished, let's see what the laws look like for the Reader monad, starting with the left identity law.

[Theory]
[InlineData(UriPartial.Authority, "https://example.com/f?o=o")]
[InlineData(UriPartial.Path, "https://example.net/b?a=r")]
[InlineData(UriPartial.Query, "https://example.org/b?a=z")]
[InlineData(UriPartial.Scheme, "https://example.gov/q?u=x")]
public void LeftIdentity(UriPartial a, string u)
{
    Func<UriPartial, IReader<Uri, UriPartial>> @return =
        up => Reader.Return<Uri, UriPartial>(up);
    Func<UriPartial, IReader<Uri, string>> h =
        up => new UriPartReader(up);

    Assert.Equal(
        @return(a).SelectMany(h).Run(new Uri(u)),
        h(a).Run(new Uri(u)));
}

In order to compare the two Reader values, the test has to Run them and then compare the return values.

This test and the next use a Reader implementation called UriPartReader, which almost makes sense:

public sealed class UriPartReader : IReader<Uri, string>
{
    private readonly UriPartial part;

    public UriPartReader(UriPartial part)
    {
        this.part = part;
    }

    public string Run(Uri environment)
    {
        return environment.GetLeftPart(part);
    }
}

Almost.

Right identity #

In a similar manner, we can showcase the right identity law as a test.

[Theory]
[InlineData(UriPartial.Authority, "https://example.com/q?u=ux")]
[InlineData(UriPartial.Path, "https://example.net/q?u=uuz")]
[InlineData(UriPartial.Query, "https://example.org/c?o=rge")]
[InlineData(UriPartial.Scheme, "https://example.gov/g?a=rply")]
public void RightIdentity(UriPartial a, string u)
{
    Func<UriPartial, IReader<Uri, string>> f =
        up => new UriPartReader(up);
    Func<string, IReader<Uri, string>> @return =
        s => Reader.Return<Uri, string>(s);

    IReader<Uri, string> m = f(a);

    Assert.Equal(
        m.SelectMany(@return).Run(new Uri(u)),
        m.Run(new Uri(u)));
}

As always, even a parametrised test constitutes no proof that the law holds. I show the tests to illustrate what the laws look like in 'real' code.

Associativity #

The last monad law is the associativity law that describes how (at least) three functions compose. We're going to need three functions. For the purpose of demonstrating the law, any three pure functions will do. While the following functions are silly and not at all 'realistic', they have the virtue of being as simple as they can be (while still providing a bit of variety). They don't 'mean' anything, so don't worry too much about their behaviour. It is, as far as I can tell, nonsensical.

public sealed class F : IReader<int, string>
{
    private readonly char c;

    public F(char c)
    {
        this.c = c;
    }

    public string Run(int environment)
    {
        return new string(c, environment);
    }
}

public sealed class G : IReader<int, bool>
{
    private readonly string s;

    public G(string s)
    {
        this.s = s;
    }

    public bool Run(int environment)
    {
        return environment < 42 || s.Contains("a");
    }
}

public sealed class H : IReader<int, TimeSpan>
{
    private readonly bool b;

    public H(bool b)
    {
        this.b = b;
    }

    public TimeSpan Run(int environment)
    {
        return b ?
            TimeSpan.FromMinutes(environment) :
            TimeSpan.FromSeconds(environment);
    }
}

Armed with these three classes, we can now demonstrate the Associativity law:

[Theory]
[InlineData('a', 0)]
[InlineData('b', 1)]
[InlineData('c', 42)]
[InlineData('d', 2112)]
public void Associativity(char a, int i)
{
    Func<char, IReader<int, string>> f = c => new F(c);
    Func<string, IReader<int, bool>> g = s => new G(s);
    Func<bool, IReader<int, TimeSpan>> h = b => new H(b);

    IReader<int, string> m = f(a);

    Assert.Equal(
        m.SelectMany(g).SelectMany(h).Run(i),
        m.SelectMany(x => g(x).SelectMany(h)).Run(i));
}

In case you're wondering, the four test cases produce the outputs 00:00:00, 00:01:00, 00:00:42, and 00:35:12. You can see that reproduced in the Haskell examples below.

Haskell #

In Haskell, normal functions a -> b are already Monad instances, which means that you can easily replicate the functions from the Associativity test:

> f c = \env -> replicate env c
> g s = \env -> env < 42 || 'a' `elem` s
> h b = \env -> if b then secondsToDiffTime (toEnum env * 60) else secondsToDiffTime (toEnum env)

I've chosen to write f, g, and h as functions that return lambda expressions in order to emphasise that each of these functions returns a Reader. Since Haskell functions are already curried, I could also have written them in the more normal function style with two normal parameters, but that might have obscured the Reader aspect of each.

Here's the composition in action:

> f 'a' >>= g >>= h $ 0
0s
> f 'b' >>= g >>= h $ 1
60s
> f 'c' >>= g >>= h $ 42
42s
> f 'd' >>= g >>= h $ 2112
2112s

In case you are wondering, 2,112 seconds is 35 minutes and 12 seconds, so all outputs fit with the results reported for the C# example.

What the above Haskell GHCi (REPL) session demonstrates is that it's possible to compose functions with Haskell's monadic bind operator >>= exactly because all functions are (Reader) monads.

Conclusion #

In Haskell, it can occasionally be useful that a function can be used when a Monad is required. Some Haskell libraries are defined in very general terms. Their APIs may enable you to call functions with any monadic input value. You can, say, pass a Maybe, a List, an Either, a State, but you can also pass a function.

C# and most other languages (F# included) don't come with that level of abstraction, so the fact that a function forms a monad is less useful there. In fact, I can't recall having made explicit use of this knowledge in C#, but one never knows if that day arrives.

In a similar vein, knowing that endomorphisms form monoids (and thereby also semigroups) enabled me to quickly identify the correct design for a validation problem.

Who knows? One day the knowledge that functions are monads may come in handy.

Next: Reactive monad.

Applicative assertions

An exploration.

In a recent Twitter exchange, Lucas DiCioccio made an interesting observation:

"Imho the properties you want of an assertion-framework are really close (the same as?) applicative-validation: one assertion failure with multiple bullet points composed mainly from combinators."

In another branch off my initial tweet Josh McKinney pointed out the short-circuiting nature of standard assertions:

"short circuiting often causes weaker error messages in failing tests than running compound assertions. E.g.

TransferTest {
  a.transfer(b,50);
  a.shouldEqual(50);
  b.shouldEqual(150); // never reached?
}"

Most standard assertion libraries work by throwing exceptions when an assertion fails. Once you throw an exception, remaining code doesn't execute. This means that you only get the first assertion message. Further assertions are not evaluated.
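The following sketch illustrates that short-circuiting behaviour without depending on any particular assertion library. ShouldEqual is a hypothetical stand-in for a typical exception-throwing assertion, and the balances are made-up values that simulate a transfer that never happened.

```csharp
using System;

public static class ShortCircuitDemo
{
    // Hypothetical stand-in for a typical assertion: throws on failure.
    public static void ShouldEqual(int expected, int actual, string name)
    {
        if (expected != actual)
            throw new InvalidOperationException(
                $"{name}: expected {expected}, but was {actual}.");
    }

    // Both assertions would fail, but only the first failure is reported,
    // because the exception prevents the second assertion from running.
    public static string RunTransferTest()
    {
        // Simulates a buggy transfer that left both balances unchanged.
        int a = 100;
        int b = 100;

        try
        {
            ShouldEqual(50, a, "a");
            ShouldEqual(150, b, "b"); // never reached
            return "passed";
        }
        catch (InvalidOperationException e)
        {
            return e.Message;
        }
    }
}
```

RunTransferTest returns only the message about a. The fact that b is also wrong stays hidden until the first problem is fixed and the test is run again.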

Josh McKinney later gave more details about a particular scenario. Although in the general case I don't consider the short-circuiting nature of assertions to be a problem, I grant that there are cases where proper assertion composition would be useful.

Lucas DiCioccio's suggestion seems worthy of investigation.

Ongoing exploration #

I asked Lucas DiCioccio whether he'd done any work with his idea, and the day after he replied with a Haskell proof of concept.

I found the idea so interesting that I also wanted to carry out a few proofs of concept myself, perhaps within a more realistic setting.

As I'm writing this, I've reached some preliminary conclusions, but I'm also aware that they may not hold in more general cases. I'm posting what I have so far, but you should expect this exploration to evolve over time. If I find out more, I'll update this post with more articles.

- An initial proof of concept of applicative assertions in C#

- Error-accumulating composable assertions in C#

- Built-in alternatives to applicative assertions

A preliminary summary is in order. Based on the first two articles, applicative assertions look like overkill. I think, however, that it's because of the degenerate nature of the example. Some assertions are essentially one-stop verifications: Evaluate a predicate, and throw an exception if the result is false. These assertions return unit or void. Examples from xUnit include Assert.Equal, Assert.True, Assert.False, Assert.All, and Assert.DoesNotContain.

These are the kinds of assertions that the initial two articles explore.

There are other kinds of assertions that return a value in case of success. xUnit.net examples include Assert.Throws, Assert.Single, Assert.IsAssignableFrom, and some overloads of Assert.Contains. Assert.Single, for example, verifies that a collection contains only a single element. While it throws an exception if the collection is either empty or has more than one element, in the success case it returns the single value. This can be useful if you want to add more assertions based on that value.

I haven't experimented with this yet, but as far as I can tell, you'll run into the following problem: If you make such an assertion return an applicative functor, you'll need some way to handle the success case. Combining it with another assertion-producing function, such as a -> Asserted e b (pseudocode) is possible with functor mapping, but will leave you with a nested functor.

You'll probably want to flatten the nested functor, which is exactly what monads do. Monads, on the other hand, short circuit, so you don't want to make your applicative assertion type a monad. Instead, you'll need to use an isomorphic monad container (Either should do) to move in and out of. Doable, but is the complexity warranted?

I realise that the above musings are abstract, and that I really should show rather than tell. I'll add some more content here if I ever collect something worthy of an article. If you ask me now, though, I consider that a bit of a toss-up.

The first two examples also suffer from being written in C#, which doesn't have good syntactic support for applicative functors. Perhaps I'll add some articles that use F# or Haskell.

Conclusion #

There's the occasional need for composable assertions. You can achieve that with an applicative functor, but the question is whether it's warranted. Could you make something simpler based on the list monad?

As I'm writing this, I don't consider that question settled. Even so, you may want to read on.

Next: An initial proof of concept of applicative assertions in C#.

A regular grid emerges

The code behind a lecture animation.

If you've seen my presentation Fractal Architecture, you may have wondered how I made the animation where a regular(ish) hexagonal grid emerges from adding more and more blobs to an area.

Like a few previous blog posts, today's article appears on Observable, which is where the animation and the code that creates it live. Go there to read it.

If you have time, watch the animation evolve. Personally I find it quite mesmerising.

Encapsulation in Functional Programming

Encapsulation is only relevant for object-oriented programming, right?

The concept of encapsulation is closely related to object-oriented programming (OOP), and you rarely hear the word in discussions about (statically-typed) functional programming (FP). I will argue, however, that the notion is relevant in FP as well. Typically, it just appears with a different catchphrase.

Contracts #

I base my understanding of encapsulation on Object-Oriented Software Construction. I've tried to distil it in my Pluralsight course Encapsulation and SOLID.

In short, encapsulation denotes the distinction between an object's contract and its implementation. An object should fulfil its contract in such a way that client code doesn't need to know about its implementation.

Contracts, according to Bertrand Meyer, describe three properties of objects:

- Preconditions: What client code must fulfil in order to successfully interact with the object.

- Invariants: Statements about the object that are always true.

- Postconditions: Statements that are guaranteed to be true after a successful interaction between client code and object.

You can replace object with value and I'd argue that the same concerns are relevant in FP.

In OOP invariants often point to the properties of an object that are guaranteed to remain even in the face of state mutation. As you change the state of an object, the object should guarantee that its state remains valid. These are the properties (i.e. qualities, traits, attributes) that don't vary - i.e. are invariant.

An example would be helpful around here.

Table mutation #

Consider an object that models a table in a restaurant. You may, for example, be working on the Maître d' kata. In short, you may decide to model a table as being one of two kinds: Standard tables and communal tables. You can reserve seats at communal tables, but you still share the table with other people.

You may decide to model the problem in such a way that when you reserve the table, you change the state of the object. You may decide to describe the contract of Table objects like this:

- Preconditions

  - To create a Table object, you must supply a type (standard or communal).

  - To create a Table object, you must supply the size of the table, which is a measure of its capacity; i.e. how many people can sit at it.

  - The capacity must be a natural number. One (1) is the smallest valid capacity.

  - When reserving a table, you must supply a valid reservation.

  - When reserving a table, the reservation quantity must be less than or equal to the table's remaining capacity.

- Invariants

  - The table capacity doesn't change.

  - The table type doesn't change.

  - The number of remaining seats is never negative.

  - The number of remaining seats is never greater than the table's capacity.

- Postconditions

  - After reserving a table, the number of remaining seats can't be greater than the previous number of remaining seats minus the reservation quantity.

This list may be incomplete, and if you add more operations, you may have to elaborate on what that means to the contract.

In C# you may implement a Table class like this:

public sealed class Table
{
    private readonly List<Reservation> reservations;

    public Table(int capacity, TableType type)
    {
        if (capacity < 1)
            throw new ArgumentOutOfRangeException(
                nameof(capacity),
                $"Capacity must be greater than zero, but was: {capacity}.");

        reservations = new List<Reservation>();
        Capacity = capacity;
        Type = type;
        RemainingSeats = capacity;
    }

    public int Capacity { get; }
    public TableType Type { get; }
    public int RemainingSeats { get; private set; }

    public void Reserve(Reservation reservation)
    {
        if (RemainingSeats < reservation.Quantity)
            throw new InvalidOperationException(
                "The table has no remaining seats.");

        if (Type == TableType.Communal)
            RemainingSeats -= reservation.Quantity;
        else
            RemainingSeats = 0;

        reservations.Add(reservation);
    }
}

This class has good encapsulation because it makes sure to fulfil the contract. You can't put it in an invalid state.

Immutable Table #

Notice that two of the invariants for the above Table class are that the table can't change type or capacity. While OOP often revolves around state mutation, it seems reasonable that some data is immutable. A table doesn't all of a sudden change size.

In FP data is immutable. Data doesn't change. Thus, data has that invariant property.

If you consider the above contract, it still applies to FP. The specifics change, though. You'll no longer be dealing with Table objects, but rather Table data, and to make reservations, you call a function that returns a new Table value.

In F# you could model a Table like this:

type Table =
    private
    | Standard of int * Reservation list
    | Communal of int * Reservation list

module Table =
    let standard capacity =
        if 0 < capacity
        then Some (Standard (capacity, []))
        else None

    let communal capacity =
        if 0 < capacity
        then Some (Communal (capacity, []))
        else None

    let remainingSeats = function
        | Standard (capacity, []) -> capacity
        | Standard _ -> 0
        | Communal (capacity, rs) -> capacity - List.sumBy (fun r -> r.Quantity) rs

    let reserve r t =
        match t with
        | Standard (capacity, []) when r.Quantity <= remainingSeats t ->
            Some (Standard (capacity, [r]))
        | Communal (capacity, rs) when r.Quantity <= remainingSeats t ->
            Some (Communal (capacity, r :: rs))
        | _ -> None

While you'll often hear fsharpers say that one should make illegal states unrepresentable, in practice you often have to rely on predicative data to enforce contracts. I've done this here by making the Table cases private. Code outside the module can't directly create Table data. Instead, it'll have to use one of two functions: Table.standard or Table.communal. These are functions that return Table option values.

That's the idiomatic way to model predicative data in statically typed FP. In Haskell such functions are called smart constructors.

Statically typed FP typically uses Maybe (Option) or Either (Result) values to communicate failure, rather than throwing exceptions, but apart from that a smart constructor is just an object constructor.

The above F# Table API implements the same contract as the OOP version.

If you want to see a more elaborate example of modelling table and reservations in F#, see An F# implementation of the Maître d' kata.

Functional contracts in OOP languages #

You can adopt many FP concepts in OOP languages. My book Code That Fits in Your Head contains sample code in C# that implements an online restaurant reservation system. It includes a Table class that, at first glance, looks like the above C# class.

While it has the same contract, the book's Table class is implemented with the FP design principles in mind. Thus, it's an immutable class with this API:

public sealed class Table
{
    public static Table Standard(int seats)
    public static Table Communal(int seats)
    public int Capacity { get; }
    public int RemainingSeats { get; }
    public Table Reserve(Reservation reservation)
    public T Accept<T>(ITableVisitor<T> visitor)
    public override bool Equals(object? obj)
    public override int GetHashCode()
}

Notice that the Reserve method returns a Table object. That's the table with the reservation associated. The original Table instance remains unchanged.
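The book's implementation isn't reproduced here, so the following is only a simplified sketch of what an immutable Reserve method could look like. It assumes a communal table only and a hypothetical Reservation class with nothing but a Quantity. It also throws on an invalid reservation, which may differ from how the book handles that case.

```csharp
using System;

// Hypothetical, minimal reservation: only the quantity matters here.
public sealed class Reservation
{
    public Reservation(int quantity)
    {
        Quantity = quantity;
    }

    public int Quantity { get; }
}

public sealed class Table
{
    private Table(int capacity, int remainingSeats)
    {
        Capacity = capacity;
        RemainingSeats = remainingSeats;
    }

    // Factory method that guards the capacity precondition.
    public static Table Communal(int seats)
    {
        if (seats < 1)
            throw new ArgumentOutOfRangeException(
                nameof(seats),
                $"Capacity must be greater than zero, but was: {seats}.");

        return new Table(seats, seats);
    }

    public int Capacity { get; }
    public int RemainingSeats { get; }

    // Returns a new Table; the instance it's called on is left untouched.
    public Table Reserve(Reservation reservation)
    {
        if (RemainingSeats < reservation.Quantity)
            throw new InvalidOperationException(
                "The table doesn't have enough remaining seats.");

        return new Table(Capacity, RemainingSeats - reservation.Quantity);
    }
}
```

If you create a table with Table.Communal(10) and call Reserve with a reservation for four people, the returned Table has six remaining seats, while the original still reports ten. There are no setters, so the invariants only need to be checked where new values are created.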

The entire book is written in the Functional Core, Imperative Shell architecture, so all domain models are immutable objects with pure functions as methods.

The objects still have contracts. They have proper encapsulation.

Conclusion #

Functional programmers may not use the term encapsulation much, but that doesn't mean that they don't share that kind of concern. They often throw around the phrase make illegal states unrepresentable or talk about smart constructors or partial versus total functions. It's clear that they care about data modelling that prevents mistakes.

The object-oriented notion of encapsulation is ultimately about separating the affordances of an API from its implementation details. An object's contract is an abstract description of the properties (i.e. qualities, traits, or attributes) of the object.

Functional programmers care so much about the properties of data and functions that property-based testing is often the preferred way to perform automated testing.

Perhaps you can find a functional programmer who might be slightly offended if you suggest that he or she should consider encapsulation. If so, suggest instead that he or she considers the properties of functions and data.

Comments

I wonder what's the goal of illustrating OOP-ish examples exclusively in C# and FP-ish ones in F# when you could stick to just one language for the reader. It might not always be as effective depending on the topic, but for encapsulation and the examples shown in this article, a C# version would read just as effectively as an F# one. I mean when you get round to making your points in the Immutable Table section of your article, you could demonstrate the ideas with a C# version that's nearly identical to and reads as succinctly as the F# version:

#nullable enable

readonly record struct Reservation(int Quantity);
abstract record Table;
record StandardTable(int Capacity, Reservation? Reservation): Table;
record CommunalTable(int Capacity, ImmutableArray<Reservation> Reservations): Table;

static class TableModule
{
    public static StandardTable? Standard(int capacity) =>
        0 < capacity ? new StandardTable(capacity, null) : null;

    public static CommunalTable? Communal(int capacity) =>
        0 < capacity ? new CommunalTable(capacity, ImmutableArray<Reservation>.Empty) : null;

    public static int RemainingSeats(this Table table) => table switch
    {
        StandardTable { Reservation: null } t => t.Capacity,
        StandardTable => 0,
        CommunalTable t => t.Capacity - t.Reservations.Sum(r => r.Quantity)
    };

    public static Table? Reserve(this Table table, Reservation r) => table switch
    {
        StandardTable t when r.Quantity <= t.RemainingSeats() => t with { Reservation = r },
        CommunalTable t when r.Quantity <= t.RemainingSeats() => t with { Reservations = t.Reservations.Add(r) },
        _ => null,
    };
}

This way, I can just point someone to your article for enlightenment, 😉 but not leave them feeling frustrated that they need F# to (practice and) model around data instead of state mutating objects. It might still be worthwhile to show an F# version to draw the similarities and also call out some differences; like Table being a true discriminated union in F#, and while it appears to be emulated in C#, they desugar to the same thing in terms of CLR types and hierarchies.

By the way, in the C# example above, I modeled the standard table variant differently because if it can hold only one reservation at a time then the model should reflect that.

Atif, thank you for supplying an example of an immutable C# implementation.

I already have an example of an immutable, functional C# implementation in Code That Fits in Your Head, so I wanted to supply something else here. I also tend to find it interesting to compare how to model similar ideas in different languages, and it felt natural to supply an F# example to show how a 'natural' FP implementation might look.

Your point is valid, though, so I'm not insisting that this was the right decision.

I took your idea, Atif, and wrote something that I think is more congruent with the example here. In short, I’m

- using polymorphism to avoid having to switch over the Table type

- hiding subtypes of Table to simplify the interface.

Here's the code:

#nullable enable

using System.Collections.Immutable;

readonly record struct Reservation(int Quantity);

abstract record Table
{
    public abstract Table? Reserve(Reservation r);

    public abstract int RemainingSeats();

    public static Table? Standard(int capacity) =>
        capacity > 0 ? new StandardTable(capacity, null) : null;

    public static Table? Communal(int capacity) =>
        capacity > 0 ? new CommunalTable(
            capacity,
            ImmutableArray<Reservation>.Empty) : null;

    private record StandardTable(int Capacity, Reservation? Reservation) : Table
    {
        public override Table? Reserve(Reservation r) => RemainingSeats() switch
        {
            var seats when seats >= r.Quantity => this with { Reservation = r },
            _ => null,
        };

        public override int RemainingSeats() => Reservation switch
        {
            null => Capacity,
            _ => 0,
        };
    }

    private record CommunalTable(
        int Capacity,
        ImmutableArray<Reservation> Reservations) : Table
    {
        public override Table? Reserve(Reservation r) => RemainingSeats() switch
        {
            var seats when seats >= r.Quantity =>
                this with { Reservations = Reservations.Add(r) },
            _ => null,
        };

        public override int RemainingSeats() =>
            Capacity - Reservations.Sum(r => r.Quantity);
    }
}

I’d love to hear your thoughts on this approach. I think that one of its weaknesses is that calls to Table.Standard() and Table.Communal() will yield two instances of Table that can never be equal. For instance, Table.Standard(4) != Table.Communal(4), even though they’re both of type Table? and have the same number of seats.

Calling GetType() on each of the instances reveals that their types are actually Table+StandardTable and Table+CommunalTable respectively; however, this isn't transparent to callers. Another solution might be to expose the Table subtypes and give them private constructors – I just like the simplicity of not exposing the individual types of tables the same way you’re doing here, Mark.

Mark,

How do you differentiate encapsulation from abstraction?

Here's an excerpt from your book Dependency Injection: Principles, Practices, and Patterns.

Section: 1.3 - What to inject and what not to inject Subsection: 1.3.1 - Stable Dependencies

"Other examples [of libraries that do not require to be injected] may include specialized libraries that encapsulate algorithms relevant to your application".

In that section, you and Steven were giving examples of stable dependencies that do not require to be injected to keep modularity. You define a library that "encapsulates an algorithm" as an example.

Now, to me, encapsulation is "protecting data integrity", plain and simple. A class is encapsulated as long as it's impossible or nearly impossible to bring it to an invalid or inconsistent state.

Protection of invariants, implementation hiding, bundling data and operations together, pre- and postconditions, Postel's Law all come into play to achieve this goal.

Thus, a class, to be "encapsulatable", has to have a state that can be initialized and/or modified by the client code.

Now I ask: most of the time when we say that something is encapsulating another, don't we really mean abstracting?

Why is it relevant to know that the hypothetical algorithm library protects its invariants by using the term "encapsulate"?

Abstraction, under the light of Robert C. Martin's definition of it, makes much more sense in that context: "a specialized library that abstracts algorithms relevant to your application". It amplifies the essential (by providing a clear API), but eliminates the irrelevant (by hiding the algorithm's implementation details).

Granted, there is some overlap between encapsulation and abstraction, especially when you bundle data and operations together (rich domain models), but they are not the same thing; you just use one to achieve another sometimes.

Would it be correct to say that the .NET Framework encapsulates math algorithms in the System.Math class? Is there any state there to be preserved? They're all static methods and constants. On the other hand, they're surely eliminating some pretty irrelevant (from a consumer POV) trigonometric algorithms.

Thanks.

Alexandre, thank you for writing. How do I distinguish between abstraction and encapsulation?

There's much overlap, to be sure.

As I write, my view on encapsulation is influenced by Bertrand Meyer's notion of contract. Likewise, I do use Robert C. Martin's notion of amplifying the essentials while hiding the irrelevant details as a guiding light when discussing abstraction.

While these concepts may seem synonymous, they're not quite the same. I can't say that I've spent too much time considering how these two words relate, but shooting from the hip I think that abstraction is a wider concept.

You don't need to read much of Robert C. Martin before he'll tell you that the Dependency Inversion Principle is an important part of abstraction:

"Abstractions should not depend on details. Details should depend on abstractions."

It's possible to implement a code base where this isn't true, even if classes have good encapsulation. You could imagine a domain model that depends on database details like a particular ORM. I've seen plenty of those in my career, although I grant that most of them have had poor encapsulation as well. It is not, however, impossible to imagine such a system with good encapsulation, but suboptimal abstraction.

Does it go the other way as well? Can we have good abstraction, but poor encapsulation?

An example doesn't come immediately to mind, but as I wrote, it's not an ontology that I've given much thought.

Stubs and mocks break encapsulation

Favour Fakes over dynamic mocks.

For a while now, I've favoured Fakes over Stubs and Mocks. Using Fake Objects over other Test Doubles makes test suites more robust. I wrote the code base for my book Code That Fits in Your Head entirely with Fakes and the occasional Test Spy, and I rarely had to fix broken tests. No Moq, FakeItEasy, NSubstitute, nor Rhino Mocks. Just hand-written Test Doubles.

It recently occurred to me that a way to explain the problem with Mocks and Stubs is that they break encapsulation.

You'll see some examples soon, but first it's important to be explicit about terminology.

Terminology #

Words like Mocks, Stubs, as well as encapsulation, have different meanings to different people. They've fallen victim to semantic diffusion, if ever they were well-defined to begin with.

When I use the words Test Double, Fake, Mock, and Stub, I use them as they are defined in xUnit Test Patterns. I usually try to avoid the terms Mock and Stub since people use them vaguely and inconsistently. The terms Test Double and Fake fare better.

We do need, however, a name for those libraries that generate Test Doubles on the fly. In .NET, they are libraries like Moq, FakeItEasy, and so on, as listed above. Java has Mockito, EasyMock, JMockit, and possibly more like that.

What do we call such libraries? Most people call them mock libraries or dynamic mock libraries. Perhaps dynamic Test Double library would be more consistent with the xUnit Test Patterns vocabulary, but nobody calls them that. I'll call them dynamic mock libraries to at least emphasise the dynamic, on-the-fly object generation these libraries typically use.

Finally, it's important to define encapsulation. This is another concept where people may use the same word and yet mean different things.

I base my understanding of encapsulation on Object-Oriented Software Construction. I've tried to distil it in my Pluralsight course Encapsulation and SOLID.

In short, encapsulation denotes the distinction between an object's contract and its implementation. An object should fulfil its contract in such a way that client code doesn't need to know about its implementation.

Contracts, according to Meyer, describe three properties of objects:

- Preconditions: What client code must fulfil in order to successfully interact with the object.

- Invariants: Statements about the object that are always true.

- Postconditions: Statements that are guaranteed to be true after a successful interaction between client code and object.

As I'll demonstrate in this article, objects generated by dynamic mock libraries often break their contracts.
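As a rough illustration of how the three contract properties can show up in C# code, consider the following sketch. The SimpleReservation class is hypothetical and deliberately cut down; it is not the Reservation class from the book, and the guard clause is only one of several ways to express a precondition.

```csharp
using System;

// Hypothetical, simplified domain object; an assumption made for this sketch.
public sealed class SimpleReservation
{
    public SimpleReservation(string name, int quantity)
    {
        // Precondition: client code must supply a name and a positive quantity.
        if (quantity < 1)
            throw new ArgumentOutOfRangeException(
                nameof(quantity), "The quantity must be a positive number.");

        Name = name ?? throw new ArgumentNullException(nameof(name));
        Quantity = quantity;
    }

    // Invariant: Name is never null and Quantity is always at least 1,
    // because the constructor is the only place where they are set.
    public string Name { get; }
    public int Quantity { get; }

    // Postcondition: the returned object has the new quantity and the
    // same name. The original object is left unchanged.
    public SimpleReservation WithQuantity(int newQuantity)
    {
        return new SimpleReservation(Name, newQuantity);
    }
}

public static class ContractDemo
{
    public static void Main()
    {
        var r = new SimpleReservation("Enigma", 2).WithQuantity(4);
        Console.WriteLine($"{r.Name}: {r.Quantity}"); // Enigma: 4
    }
}
```

Client code only needs to know the contract (supply a positive quantity, get a valid object back), not how the class stores its data.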

Create-and-read round-trip #

Consider the IReservationsRepository interface from Code That Fits in Your Head:

public interface IReservationsRepository
{
    Task Create(int restaurantId, Reservation reservation);
    Task<IReadOnlyCollection<Reservation>> ReadReservations(
        int restaurantId, DateTime min, DateTime max);
    Task<Reservation?> ReadReservation(int restaurantId, Guid id);
    Task Update(int restaurantId, Reservation reservation);
    Task Delete(int restaurantId, Guid id);
}

I already discussed some of the contract properties of this interface in an earlier article. Here, I want to highlight a certain interaction.

What is the contract of the Create method?

There are a few preconditions:

- The client must have a properly initialised IReservationsRepository object.

- The client must have a valid restaurantId.

- The client must have a valid reservation.

A client that fulfils these preconditions can successfully call and await the Create method. What are the invariants and postconditions?

I'll skip the invariants because they aren't relevant to the line of reasoning that I'm pursuing. One postcondition, however, is that the reservation passed to Create must now be 'in' the repository.

How does that manifest as part of the object's contract?

This implies that a client should be able to retrieve the reservation, either with ReadReservation or ReadReservations. This suggests a kind of property that Scott Wlaschin calls There and back again.

Picking ReadReservation for the verification step, we now have a property: If client code successfully calls and awaits Create, it should be able to use ReadReservation to retrieve the reservation it just saved. That's implied by the IReservationsRepository contract.

SQL implementation #

The 'real' implementation of IReservationsRepository used in production is an implementation that stores reservations in SQL Server. This class should obey the contract.

While it might be possible to write a true property-based test, running hundreds of randomly generated test cases against a real database is going to take time. Instead, I chose to only write a parametrised test:

[Theory]
[InlineData(Grandfather.Id, "2022-06-29 12:00", "e@example.gov", "Enigma", 1)]
[InlineData(Grandfather.Id, "2022-07-27 11:40", "c@example.com", "Carlie", 2)]
[InlineData(2, "2021-09-03 14:32", "bon@example.edu", "Jovi", 4)]
public async Task CreateAndReadRoundTrip(
    int restaurantId,
    string at,
    string email,
    string name,
    int quantity)
{
    var expected = new Reservation(
        Guid.NewGuid(),
        DateTime.Parse(at, CultureInfo.InvariantCulture),
        new Email(email),
        new Name(name),
        quantity);
    var connectionString = ConnectionStrings.Reservations;
    var sut = new SqlReservationsRepository(connectionString);
    await sut.Create(restaurantId, expected);
    var actual = await sut.ReadReservation(restaurantId, expected.Id);
    Assert.Equal(expected, actual);
}

The part that we care about is the three last lines:

await sut.Create(restaurantId, expected);
var actual = await sut.ReadReservation(restaurantId, expected.Id);
Assert.Equal(expected, actual);

First call Create and subsequently ReadReservation. The value created should equal the value retrieved, which is also the case. All tests pass.

Fake #

The Fake implementation is effectively an in-memory database, so we expect it to also fulfil the same contract. We can test it with an almost identical test:

[Theory]
[InlineData(RestApi.Grandfather.Id, "2022-06-29 12:00", "e@example.gov", "Enigma", 1)]
[InlineData(RestApi.Grandfather.Id, "2022-07-27 11:40", "c@example.com", "Carlie", 2)]
[InlineData(2, "2021-09-03 14:32", "bon@example.edu", "Jovi", 4)]
public async Task CreateAndReadRoundTrip(
    int restaurantId,
    string at,
    string email,
    string name,
    int quantity)
{
    var expected = new Reservation(
        Guid.NewGuid(),
        DateTime.Parse(at, CultureInfo.InvariantCulture),
        new Email(email),
        new Name(name),
        quantity);
    var sut = new FakeDatabase();
    await sut.Create(restaurantId, expected);
    var actual = await sut.ReadReservation(restaurantId, expected.Id);
    Assert.Equal(expected, actual);
}

The only difference is that the sut is a different class instance. These test cases also all pass.

How is FakeDatabase implemented? That's not important, because it obeys the contract. FakeDatabase has good encapsulation, which makes it possible to use it without knowing anything about its internal implementation details. That, after all, is the point of encapsulation.
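For readers who nonetheless want a picture of what an in-memory Fake can look like, here is a minimal sketch. It is an assumption made for illustration, not the FakeDatabase from the book: the Reservation record, the cut-down interface (without Update and Delete), and the InMemoryReservations name are all hypothetical.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical, cut-down versions of the article's types, so that the sketch compiles on its own.
public sealed record Reservation(Guid Id, DateTime At, string Name, int Quantity);

public interface IReservationsRepository
{
    Task Create(int restaurantId, Reservation reservation);
    Task<IReadOnlyCollection<Reservation>> ReadReservations(
        int restaurantId, DateTime min, DateTime max);
    Task<Reservation?> ReadReservation(int restaurantId, Guid id);
}

// One possible in-memory Fake: reservations are kept in lists, keyed by restaurant ID.
public sealed class InMemoryReservations : IReservationsRepository
{
    private readonly Dictionary<int, List<Reservation>> store =
        new Dictionary<int, List<Reservation>>();

    public Task Create(int restaurantId, Reservation reservation)
    {
        if (!store.TryGetValue(restaurantId, out var reservations))
        {
            reservations = new List<Reservation>();
            store[restaurantId] = reservations;
        }
        reservations.Add(reservation);
        return Task.CompletedTask;
    }

    public Task<IReadOnlyCollection<Reservation>> ReadReservations(
        int restaurantId, DateTime min, DateTime max)
    {
        IReadOnlyCollection<Reservation> result = Find(restaurantId)
            .Where(r => min <= r.At && r.At <= max)
            .ToList();
        return Task.FromResult(result);
    }

    public Task<Reservation?> ReadReservation(int restaurantId, Guid id)
    {
        return Task.FromResult<Reservation?>(
            Find(restaurantId).FirstOrDefault(r => r.Id == id));
    }

    private IEnumerable<Reservation> Find(int restaurantId)
    {
        return store.TryGetValue(restaurantId, out var reservations)
            ? reservations
            : Enumerable.Empty<Reservation>();
    }
}

public static class FakeDemo
{
    public static async Task Main()
    {
        var sut = new InMemoryReservations();
        var expected = new Reservation(
            Guid.NewGuid(), new DateTime(2022, 6, 29, 12, 0, 0), "Enigma", 1);
        await sut.Create(1, expected);
        var actual = await sut.ReadReservation(1, expected.Id);
        Console.WriteLine(expected.Equals(actual)); // True
    }
}
```

What you Create is what you can later read, via either query method. That behaviour is what lets such a class stand in for the SQL-backed implementation.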

Dynamic mock #

How does a dynamic mock fare if subjected to the same test? Let's try with Moq 4.18.2 (and I'm not choosing Moq to single it out - I chose Moq because it's the dynamic mock library I used to love the most):

[Theory]
[InlineData(RestApi.Grandfather.Id, "2022-06-29 12:00", "e@example.gov", "Enigma", 1)]
[InlineData(RestApi.Grandfather.Id, "2022-07-27 11:40", "c@example.com", "Carlie", 2)]
[InlineData(2, "2021-09-03 14:32", "bon@example.edu", "Jovi", 4)]
public async Task CreateAndReadRoundTrip(
    int restaurantId,
    string at,
    string email,
    string name,
    int quantity)
{
    var expected = new Reservation(
        Guid.NewGuid(),
        DateTime.Parse(at, CultureInfo.InvariantCulture),
        new Email(email),
        new Name(name),
        quantity);
    var sut = new Mock<IReservationsRepository>().Object;
    await sut.Create(restaurantId, expected);
    var actual = await sut.ReadReservation(restaurantId, expected.Id);
    Assert.Equal(expected, actual);
}

If you've worked a little with dynamic mock libraries, you will not be surprised to learn that all three tests fail. Here's one of the failure messages:

Ploeh.Samples.Restaurants.RestApi.Tests.MoqRepositoryTests.CreateAndReadRoundTrip(↩
    restaurantId: 1, at: "2022-06-29 12:00", email: "e@example.gov", name: "Enigma", quantity: 1)
  Source: MoqRepositoryTests.cs line 17
  Duration: 1 ms
  Message:
    Assert.Equal() Failure
    Expected: Reservation↩
              {↩
                At = 2022-06-29T12:00:00.0000000,↩
                Email = e@example.gov,↩
                Id = c9de4f95-3255-4e1f-a1d6-63591b58ff0c,↩
                Name = Enigma,↩
                Quantity = 1↩
              }
    Actual:   (null)
  Stack Trace:
    MoqRepositoryTests.CreateAndReadRoundTrip(↩
      Int32 restaurantId, String at, String email, String name, Int32 quantity) line 35
    --- End of stack trace from previous location where exception was thrown ---

(I've introduced line breaks and indicated them with the ↩ symbol to make the output more readable. I'll do that again later in the article.)

Not surprisingly, the return value of ReadReservation is null. You typically have to configure a dynamic mock in order to give it any sort of behaviour, and I didn't do that here. In that case, the dynamic mock returns the default value for the return type, which here is, correctly, null.
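To make that default behaviour concrete, the following hand-written class approximates what an unconfigured dynamic mock amounts to for this interaction. It is a sketch under assumptions: the interface is cut down to two members, the Reservation record is simplified, and DoNothingRepository is a hypothetical name, not something that Moq generates or exposes.

```csharp
#nullable enable
using System;
using System.Threading.Tasks;

// Hypothetical, simplified stand-ins for the article's types.
public sealed record Reservation(Guid Id, string Name, int Quantity);

public interface IReservationsRepository
{
    Task Create(int restaurantId, Reservation reservation);
    Task<Reservation?> ReadReservation(int restaurantId, Guid id);
}

// Hand-written approximation of an unconfigured dynamic mock:
// every member 'succeeds', but nothing is ever stored.
public sealed class DoNothingRepository : IReservationsRepository
{
    public Task Create(int restaurantId, Reservation reservation)
    {
        // Accepts the reservation and immediately forgets it.
        return Task.CompletedTask;
    }

    public Task<Reservation?> ReadReservation(int restaurantId, Guid id)
    {
        // The default value for the Reservation reference type is null.
        return Task.FromResult<Reservation?>(null);
    }
}

public static class DefaultBehaviourDemo
{
    public static async Task Main()
    {
        IReservationsRepository sut = new DoNothingRepository();
        var expected = new Reservation(Guid.NewGuid(), "Enigma", 1);
        await sut.Create(1, expected);
        var actual = await sut.ReadReservation(1, expected.Id);
        Console.WriteLine(actual is null); // True: the round-trip fails.
    }
}
```

Seen this way, the failing test is no mystery: Create completes successfully, yet the postcondition that the reservation is 'in' the repository never holds.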

You may object that the above example is unfair. How can a dynamic mock know what to do? You have to configure it. That's the whole point of it.

Retrieval without creation #

Okay, let's set up the dynamic mock:

var dm = new Mock<IReservationsRepository>();
dm.Setup(r => r.ReadReservation(restaurantId, expected.Id)).ReturnsAsync(expected);
var sut = dm.Object;

These are the only lines I've changed from the previous listing of the test, which now passes.

A common criticism of dynamic-mock-heavy tests is that they mostly 'just test the mocks', and this is exactly what happens here.

You can make that more explicit by deleting the Create method call:

var dm = new Mock<IReservationsRepository>();
dm.Setup(r => r.ReadReservation(restaurantId, expected.Id)).ReturnsAsync(expected);
var sut = dm.Object;
var actual = await sut.ReadReservation(restaurantId, expected.Id);
Assert.Equal(expected, actual);

The test still passes. Clearly it only tests the dynamic mock.

You may, again, demur that this is expected, and it doesn't demonstrate that dynamic mocks break encapsulation. Keep in mind, however, the nature of the contract: Upon successful completion of Create, the reservation is 'in' the repository and can later be retrieved, either with ReadReservation or ReadReservations.

This variation of the test no longer calls Create, yet ReadReservation still returns the expected value.

Do SqlReservationsRepository or FakeDatabase behave like that? No, they don't.

Try to delete the Create call from the test that exercises SqlReservationsRepository:

var sut = new SqlReservationsRepository(connectionString);
var actual = await sut.ReadReservation(restaurantId, expected.Id);
Assert.Equal(expected, actual);

Unsurprisingly, the test now fails because actual is null. The same happens if you delete the Create call from the test that exercises FakeDatabase:

var sut = new FakeDatabase();
var actual = await sut.ReadReservation(restaurantId, expected.Id);
Assert.Equal(expected, actual);

Again, the assertion fails because actual is null.

The classes SqlReservationsRepository and FakeDatabase behave according to contract, while the dynamic mock doesn't.
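The difference can be sketched without any library. The following hand-written class approximates a dynamic mock that has been configured with a single canned answer; the cut-down interface, the simplified Reservation record, and the CannedRepository name are assumptions made for this illustration.

```csharp
#nullable enable
using System;
using System.Threading.Tasks;

// Hypothetical, simplified stand-ins for the article's types.
public sealed record Reservation(Guid Id, string Name, int Quantity);

public interface IReservationsRepository
{
    Task Create(int restaurantId, Reservation reservation);
    Task<Reservation?> ReadReservation(int restaurantId, Guid id);
}

// Approximates a dynamic mock with one Setup: it answers a specific
// query with a canned value, regardless of what was (or wasn't) created.
public sealed class CannedRepository : IReservationsRepository
{
    private readonly int restaurantId;
    private readonly Reservation canned;

    public CannedRepository(int restaurantId, Reservation canned)
    {
        this.restaurantId = restaurantId;
        this.canned = canned;
    }

    public Task Create(int restaurantId, Reservation reservation)
    {
        return Task.CompletedTask; // Has no effect on later reads.
    }

    public Task<Reservation?> ReadReservation(int restaurantId, Guid id)
    {
        var isMatch = restaurantId == this.restaurantId && id == canned.Id;
        return Task.FromResult<Reservation?>(isMatch ? canned : null);
    }
}

public static class CannedDemo
{
    public static async Task Main()
    {
        var expected = new Reservation(Guid.NewGuid(), "Enigma", 1);
        var sut = new CannedRepository(1, expected);

        // Note: no call to Create.
        var actual = await sut.ReadReservation(1, expected.Id);
        Console.WriteLine(expected.Equals(actual)); // True, although nothing was created.
    }
}
```

A reservation that was never created can still be read, which neither the SQL-backed implementation nor the Fake would allow.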

Alternative retrieval #

There's another way in which the dynamic mock breaks encapsulation. Recall what the contract states: Upon successful completion of Create, the reservation is 'in' the repository and can later be retrieved, either with ReadReservation or ReadReservations.

In other words, it should be possible to change the interaction from Create followed by ReadReservation to Create followed by ReadReservations.

First, try it with SqlReservationsRepository:

await sut.Create(restaurantId, expected);
var min = expected.At.Date;
var max = min.AddDays(1);
var actual = await sut.ReadReservations(restaurantId, min, max);
Assert.Contains(expected, actual);

The test still passes, as expected.

Second, try the same change with FakeDatabase:

await sut.Create(restaurantId, expected);
var min = expected.At.Date;
var max = min.AddDays(1);
var actual = await sut.ReadReservations(restaurantId, min, max);
Assert.Contains(expected, actual);

Notice that this is the exact same code as in the SqlReservationsRepository test. That test also passes, as expected.

Third, try it with the dynamic mock:

await sut.Create(restaurantId, expected);
var min = expected.At.Date;
var max = min.AddDays(1);
var actual = await sut.ReadReservations(restaurantId, min, max);
Assert.Contains(expected, actual);

Same code, different sut, and the test fails. The dynamic mock breaks encapsulation. You'll have to go and fix the Setup of it to make the test pass again. That's not the case with SqlReservationsRepository or FakeDatabase.

Dynamic mocks break the SUT, not the tests #

Perhaps you're still not convinced that this is of practical interest. After all, Bertrand Meyer had limited success getting mainstream adoption of his thought on contract-based programming.

That dynamic mocks break encapsulation does, however, have real implications.

What if, instead of using FakeDatabase, I'd used dynamic mocks when testing my online restaurant reservation system? A test might have looked like this:

[Theory]
[InlineData(1049, 19, 00, "juliad@example.net", "Julia Domna", 5)]
[InlineData(1130, 18, 15, "x@example.com", "Xenia Ng", 9)]
[InlineData( 956, 16, 55, "kite@example.edu", null, 2)]
[InlineData( 433, 17, 30, "shli@example.org", "Shanghai Li", 5)]
public async Task PostValidReservationWhenDatabaseIsEmpty(
    int days,
    int hours,
    int minutes,
    string email,
    string name,
    int quantity)
{
    var at = DateTime.Now.Date + new TimeSpan(days, hours, minutes, 0);
    var dm = new Mock<IReservationsRepository>();
    dm.Setup(r => r.ReadReservations(Grandfather.Id, at.Date, at.Date.AddDays(1).AddTicks(-1)))
        .ReturnsAsync(Array.Empty<Reservation>());
    var sut = new ReservationsController(
        new SystemClock(),
        new InMemoryRestaurantDatabase(Grandfather.Restaurant),
        dm.Object);
    var expected = new Reservation(
        new Guid("B50DF5B1-F484-4D99-88F9-1915087AF568"),
        at,
        new Email(email),
        new Name(name ?? ""),
        quantity);
    await sut.Post(expected.ToDto());
    dm.Verify(r => r.Create(Grandfather.Id, expected));
}

This is yet another riff on the PostValidReservationWhenDatabaseIsEmpty test - the gift that keeps giving. I've previously discussed this test in other articles:

- Branching tests

- Waiting to happen

- Parametrised test primitive obsession code smell

- The Equivalence contravariant functor

Here I've replaced the FakeDatabase Test Double with a dynamic mock. (I am, again, using Moq, but keep in mind that the fallout of using a dynamic mock is unrelated to specific libraries.)

To go 'full dynamic mock' I should also have replaced SystemClock and InMemoryRestaurantDatabase with dynamic mocks, but that's not necessary to illustrate the point I wish to make.

This, and other tests, describe the desired outcome of making a reservation against the REST API. It's an interaction that looks like this:

POST /restaurants/90125/reservations?sig=aco7VV%2Bh5sA3RBtrN8zI8Y9kLKGC60Gm3SioZGosXVE%3D HTTP/1.1
content-type: application/json

{
  "at": "2022-12-12T20:00",
  "name": "Pearl Yvonne Gates",
  "email": "pearlygates@example.net",
  "quantity": 4
}

HTTP/1.1 201 Created
Content-Length: 151
Content-Type: application/json; charset=utf-8
Location: [...]/restaurants/90125/reservations/82e550b1690742368ea62d76e103b232?sig=fPY1fSr[...]

{
  "id": "82e550b1690742368ea62d76e103b232",
  "at": "2022-12-12T20:00:00.0000000",
  "email": "pearlygates@example.net",
  "name": "Pearl Yvonne Gates",
  "quantity": 4
}

What's of interest here is that the response includes the JSON representation of the resource that the interaction created. It's mostly a copy of the posted data, but enriched with a server-generated ID.

The code responsible for the database interaction looks like this:

private async Task<ActionResult> TryCreate(Restaurant restaurant, Reservation reservation)
{
    using var scope = new TransactionScope(TransactionScopeAsyncFlowOption.Enabled);
    var reservations = await Repository
        .ReadReservations(restaurant.Id, reservation.At)
        .ConfigureAwait(false);
    var now = Clock.GetCurrentDateTime();
    if (!restaurant.MaitreD.WillAccept(now, reservations, reservation))
        return NoTables500InternalServerError();
    await Repository.Create(restaurant.Id, reservation).ConfigureAwait(false);
    scope.Complete();
    return Reservation201Created(restaurant.Id, reservation);
}

The last line of code creates a 201 Created response with the reservation as content. Not shown in this snippet is the origin of the reservation parameter, but it's the input JSON document parsed to a Reservation object. Each Reservation object has an ID that the server creates when it's not supplied by the client.

The above TryCreate helper method contains all the database interaction code related to creating a new reservation. It first calls ReadReservations to retrieve the existing reservations. Subsequently, it calls Create if it decides to accept the reservation. The ReadReservations method is actually an internal extension method:

internal static Task<IReadOnlyCollection<Reservation>> ReadReservations(
    this IReservationsRepository repository,
    int restaurantId,
    DateTime date)
{
    var min = date.Date;
    var max = min.AddDays(1).AddTicks(-1);
    return repository.ReadReservations(restaurantId, min, max);
}

Notice how the dynamic-mock-based test has to replicate this internal implementation detail to the tick. If I ever decide to change this just one tick, the test is going to fail. That's already bad enough (and something that FakeDatabase gracefully handles), but not what I'm driving towards.
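To see how tight that coupling is, here is the same date arithmetic in isolation. The DayRange class and its Of method are hypothetical names introduced for this sketch; the calculation mirrors the extension method. As far as I understand Moq's argument matching, a Setup with plain values only matches when the arguments are equal, so a range that differs by a single tick no longer triggers the configured return value.

```csharp
using System;
using System.Globalization;

public static class DayRange
{
    // Same calculation as in the ReadReservations extension method.
    public static (DateTime Min, DateTime Max) Of(DateTime date)
    {
        var min = date.Date;
        var max = min.AddDays(1).AddTicks(-1);
        return (min, max);
    }

    public static void Main()
    {
        var at = new DateTime(2022, 12, 12, 20, 0, 0);
        var (min, max) = Of(at);

        Console.WriteLine(min.ToString("o", CultureInfo.InvariantCulture));
        // 2022-12-12T00:00:00.0000000
        Console.WriteLine(max.ToString("o", CultureInfo.InvariantCulture));
        // 2022-12-12T23:59:59.9999999

        // A hypothetical alternative upper bound, exactly one tick later.
        var alternativeMax = min.AddDays(1);
        Console.WriteLine(max == alternativeMax); // False
    }
}
```

An in-memory Fake that filters on the range returns the same reservations for either upper bound in almost all cases, which is why such a change doesn't disturb tests that use it.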

At the moment the TryCreate method echoes back the reservation. What if, however, you instead want to query the database and return the record that you got from the database? In this particular case, there's no reason to do that, but perhaps in other cases, something happens in the data layer that either enriches or normalises the data. So you make an innocuous change:

private async Task<ActionResult> TryCreate(Restaurant restaurant, Reservation reservation)
{
    using var scope = new TransactionScope(TransactionScopeAsyncFlowOption.Enabled);
    var reservations = await Repository
        .ReadReservations(restaurant.Id, reservation.At)
        .ConfigureAwait(false);
    var now = Clock.GetCurrentDateTime();
    if (!restaurant.MaitreD.WillAccept(now, reservations, reservation))
        return NoTables500InternalServerError();
    await Repository.Create(restaurant.Id, reservation).ConfigureAwait(false);
    var storedReservation = await Repository
        .ReadReservation(restaurant.Id, reservation.Id)
        .ConfigureAwait(false);
    scope.Complete();
    return Reservation201Created(restaurant.Id, storedReservation!);
}

Now, instead of echoing back reservation, the method calls ReadReservation to retrieve the (possibly enriched or normalised) storedReservation and returns that value. Since this value could, conceivably, be null, for now the method uses the ! operator to insist that this is not the case. A new test case might be warranted to cover the scenario where the query returns null.

This is perhaps a little less efficient because it implies an extra round-trip to the database, but it shouldn't change the behaviour of the system!

But when you run the test suite, that PostValidReservationWhenDatabaseIsEmpty test fails:

Ploeh.Samples.Restaurants.RestApi.Tests.ReservationsTests.PostValidReservationWhenDatabaseIsEmpty(↩
  days: 433, hours: 17, minutes: 30, email: "shli@example.org", name: "Shanghai Li", quantity: 5)↩
  [FAIL]
  System.NullReferenceException : Object reference not set to an instance of an object.
  Stack Trace:
    [...]\Restaurant.RestApi\ReservationsController.cs(94,0): at↩
      [...].RestApi.ReservationsController.Reservation201Created↩
      (Int32 restaurantId, Reservation r)
    [...]\Restaurant.RestApi\ReservationsController.cs(79,0): at↩
      [...].RestApi.ReservationsController.TryCreate↩
      (Restaurant restaurant, Reservation reservation)
    [...]\Restaurant.RestApi\ReservationsController.cs(57,0): at↩
      [...].RestApi.ReservationsController.Post↩
      (Int32 restaurantId, ReservationDto dto)
    [...]\Restaurant.RestApi.Tests\ReservationsTests.cs(73,0): at↩
      [...].RestApi.Tests.ReservationsTests.PostValidReservationWhenDatabaseIsEmpty↩
      (Int32 days, Int32 hours, Int32 minutes, String email, String name, Int32 quantity)
    --- End of stack trace from previous location where exception was thrown ---

Oh, the dreaded NullReferenceException! This happens because ReadReservation returns null, since the dynamic mock isn't configured.

The typical reaction that most people have is: Oh no, the tests broke!

I think, though, that this is the wrong perspective. The dynamic mock broke the System Under Test (SUT) because it passed an implementation of IReservationsRepository that breaks the contract. The test didn't 'break', because it was never correct from the outset.

Shotgun surgery #

When a test code base uses dynamic mocks, it tends to do so pervasively. Most tests create one or more dynamic mocks that they pass to their SUT. Most of these dynamic mocks break encapsulation, so when you refactor, the dynamic mocks break the SUT.

You'll typically need to revisit and 'fix' all the failing tests to accommodate the refactoring:

[Theory]
[InlineData(1049, 19, 00, "juliad@example.net", "Julia Domna", 5)]
[InlineData(1130, 18, 15, "x@example.com", "Xenia Ng", 9)]
[InlineData( 956, 16, 55, "kite@example.edu", null, 2)]
[InlineData( 433, 17, 30, "shli@example.org", "Shanghai Li", 5)]
public async Task PostValidReservationWhenDatabaseIsEmpty(
    int days,
    int hours,
    int minutes,
    string email,
    string name,
    int quantity)
{
    var at = DateTime.Now.Date + new TimeSpan(days, hours, minutes, 0);
    var expected = new Reservation(
        new Guid("B50DF5B1-F484-4D99-88F9-1915087AF568"),
        at,
        new Email(email),
        new Name(name ?? ""),
        quantity);
    var dm = new Mock<IReservationsRepository>();
    dm.Setup(r => r.ReadReservations(Grandfather.Id, at.Date, at.Date.AddDays(1).AddTicks(-1)))
        .ReturnsAsync(Array.Empty<Reservation>());
    dm.Setup(r => r.ReadReservation(Grandfather.Id, expected.Id)).ReturnsAsync(expected);
    var sut = new ReservationsController(
        new SystemClock(),
        new InMemoryRestaurantDatabase(Grandfather.Restaurant),
        dm.Object);
    await sut.Post(expected.ToDto());
    dm.Verify(r => r.Create(Grandfather.Id, expected));
}

The test now passes (until the next change in the SUT), but notice how top-heavy it becomes. That's a test code smell when using dynamic mocks. Everything has to happen in the Arrange phase.

You typically have many such tests that you need to edit. The name of this antipattern is Shotgun Surgery.

The implication is that refactoring by definition is impossible:

"to refactor, the essential precondition is [...] solid tests"

You need tests that don't break when you refactor. When you use dynamic mocks, tests tend to fail whenever you make changes in SUTs. Even though you have tests, they don't enable refactoring.

To add spite to injury, every time you edit existing tests, they become less trustworthy.

To address these problems, use Fakes instead of Mocks and Stubs. With the FakeDatabase the entire sample test suite for the online restaurant reservation system gracefully handles the change described above. No tests fail.

Spies #

If you spelunk the test code base for the book, you may also find this Test Double:

internal sealed class SpyPostOffice :
    Collection<SpyPostOffice.Observation>, IPostOffice
{
    public Task EmailReservationCreated(
        int restaurantId,
        Reservation reservation)
    {
        Add(new Observation(Event.Created, restaurantId, reservation));
        return Task.CompletedTask;
    }
    public Task EmailReservationDeleted(
        int restaurantId,
        Reservation reservation)
    {
        Add(new Observation(Event.Deleted, restaurantId, reservation));
        return Task.CompletedTask;
    }
    public Task EmailReservationUpdating(
        int restaurantId,
        Reservation reservation)
    {
        Add(new Observation(Event.Updating, restaurantId, reservation));
        return Task.CompletedTask;
    }
    public Task EmailReservationUpdated(
        int restaurantId,
        Reservation reservation)
    {
        Add(new Observation(Event.Updated, restaurantId, reservation));
        return Task.CompletedTask;
    }
    internal enum Event
    {
        Created = 0,
        Updating,
        Updated,
        Deleted
    }
    internal sealed class Observation
    {
        public Observation(
            Event @event,
            int restaurantId,
            Reservation reservation)
        {
            Event = @event;
            RestaurantId = restaurantId;
            Reservation = reservation;
        }
        public Event Event { get; }
        public int RestaurantId { get; }
        public Reservation Reservation { get; }
        public override bool Equals(object? obj)
        {
            return obj is Observation observation &&
                   Event == observation.Event &&
                   RestaurantId == observation.RestaurantId &&
                   EqualityComparer<Reservation>.Default.Equals(Reservation, observation.Reservation);
        }
        public override int GetHashCode()
        {
            return HashCode.Combine(Event, RestaurantId, Reservation);
        }
    }
}

As you can see, I've chosen to name this class with the Spy prefix, indicating that this is a Test Spy rather than a Fake Object. A Spy is a Test Double whose main purpose is to observe and record interactions. Does that break or realise encapsulation?

While I favour Fakes whenever possible, consider the interface that SpyPostOffice implements:

public interface IPostOffice
{
    Task EmailReservationCreated(int restaurantId, Reservation reservation);
    Task EmailReservationDeleted(int restaurantId, Reservation reservation);
    Task EmailReservationUpdating(int restaurantId, Reservation reservation);
    Task EmailReservationUpdated(int restaurantId, Reservation reservation);
}

This interface consists entirely of Commands. There's no way to query the interface to examine the state of the object. Thus, you can't check that postconditions hold exclusively via the interface. Instead, you need an additional retrieval interface to examine the posterior state of the object. The SpyPostOffice concrete class exposes such an interface.

In a sense, you can view SpyPostOffice as an in-memory message sink. It fulfils the contract.
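A test can then use the collection members as the retrieval interface. The following is a minimal sketch of that idea, with assumptions throughout: ISimplePostOffice is cut down to a single Command, SimpleSpyPostOffice records plain tuples rather than Observation objects, and ReservationService is a hypothetical stand-in for the System Under Test.

```csharp
using System;
using System.Collections.ObjectModel;
using System.Threading.Tasks;

// Hypothetical, cut-down Command interface.
public interface ISimplePostOffice
{
    Task EmailReservationCreated(int restaurantId, string reservationName);
}

// The Spy is a message sink: each Command adds an entry that a test can inspect later.
public sealed class SimpleSpyPostOffice :
    Collection<(int RestaurantId, string Name)>, ISimplePostOffice
{
    public Task EmailReservationCreated(int restaurantId, string reservationName)
    {
        Add((restaurantId, reservationName));
        return Task.CompletedTask;
    }
}

// Hypothetical SUT that sends an email when it accepts a reservation.
public sealed class ReservationService
{
    private readonly ISimplePostOffice postOffice;

    public ReservationService(ISimplePostOffice postOffice)
    {
        this.postOffice = postOffice;
    }

    public Task Accept(int restaurantId, string reservationName)
    {
        return postOffice.EmailReservationCreated(restaurantId, reservationName);
    }
}

public static class SpyDemo
{
    public static async Task Main()
    {
        var spy = new SimpleSpyPostOffice();
        var sut = new ReservationService(spy);

        await sut.Accept(1, "Enigma");

        // Verify the postcondition via the Spy's collection members.
        Console.WriteLine(spy.Contains((1, "Enigma"))); // True
    }
}
```

The assertion happens after the interaction, against recorded state, so the test doesn't need to predict in advance exactly how the SUT will call its dependency.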

Concurrency #

Perhaps you're still not convinced. You may argue, for example, that the (partial) contract that I stated is naive. Consider, again, the implications expressed as code:

await sut.Create(restaurantId, expected);
var actual = await sut.ReadReservation(restaurantId, expected.Id);
Assert.Equal(expected, actual);

You may argue that in the face of concurrency, another thread or process could be making changes to the reservation after Create, but before ReadReservation. Thus, you may argue, the contract I've stipulated is false. In a real system, we can't expect that to be the case.

I agree.

Concurrency makes things much harder. Even in that light, I think the above line of reasoning is appropriate, for two reasons.

First, I chose to model IReservationsRepository like I did because I didn't expect high contention on individual reservations. In other words, I don't expect two or more concurrent processes to attempt to modify the same reservation at the same time. Thus, I found it appropriate to model the Repository as

"a collection-like interface for accessing domain objects."

A collection-like interface implies both data retrieval and collection manipulation members. In low-contention scenarios like the reservation system, this turns out to be a useful model. As the aphorism goes, all models are wrong, but some models are useful. Treating IReservationsRepository as a collection accessed in a non-concurrent manner turned out to be useful in this code base.

Had I been more worried about data contention, a move towards CQRS seems promising. This leads to another object model, with different contracts.

Second, even in the face of concurrency, most unit test cases are implicitly running on a single thread. While they may run in parallel, each unit test exercises the SUT on a single thread. This implies that reads and writes against Test Doubles are serialised.

Even if concurrency is a real concern, you'd still expect that if only one thread is manipulating the Repository object, then what you Create you should be able to retrieve. The contract may be a little looser, but it'd still be a violation of the principle of least surprise if it was any different.

Conclusion #

In object-oriented programming, encapsulation is the notion of separating the affordances of an object from its implementation details. I find it most practical to think about this in terms of contracts, which again can be subdivided into sets of preconditions, invariants, and postconditions.

Polymorphic objects (like interfaces and base classes) come with contracts as well. When you replace 'real' implementations with Test Doubles, the Test Doubles should also fulfil the contracts. Fake objects do that; Test Spies may also fit that description.

When Test Doubles obey their contracts, you can refactor your SUT without breaking your test suite.

By default, however, dynamic mocks break encapsulation because they don't fulfil the objects' contracts. This leads to fragile tests.

Favour Fakes over dynamic mocks. You can read more about this way to write tests by following many of the links in this article, or by reading my book Code That Fits in Your Head.

Comments

Excellent article exploring the nuances of encapsulation as it relates to testing. That said, the examples here left me with one big question: what exactly is covered by the tests using `FakeDatabase`?

This line in particular is confusing me (as to its practical use in a "real-world" setting): `var sut = new FakeDatabase();`

How can I claim to have tested the real system's implementation when the "system under test" is, in this approach, explicitly _not_ my real system? It appears the same criticism of dynamic mocks surfaces: "you're only testing the fake database". Does this approach align with any claim you are testing the "real database"?

When testing the data-layer, I have historically written (heavier) tests that integrate with a real database to exercise a system's data-layer (as you describe with `SqlReservationsRepository`). I find myself reaching for dynamic mocks in the context of exercising an application's domain layer -- where the data-layer is a dependency providing indirect input/output. Does this use of mocks violate encapsulation in the way this article describes? I _think_ not, because in that case a dynamic mock is used to represent states that are valid "according to the contract", but I'm hoping you could shed a bit more light on the topic. Am I putting the pieces together correctly?

Rephrasing the question using your Reservations example code, I would typically inject `IReservationsRepository` into `MaitreD` (which you opt not to do) and outline the possible database return values (or commands) using dynamic mocks in a test suite of `MaitreD`. What drawbacks, if any, would that approach lead to with respect to encapsulation and test fragility?

Matthew, thank you for writing. I apologise if the article is unclear about this, but nowhere in the real code base do I have a test of FakeDatabase. I only wrote the tests that exercise the Test Doubles to illustrate the point I was trying to make. These tests only exist for the benefit of this article.

The first CreateAndReadRoundTrip test in the article shows a real integration test. The System Under Test (SUT) is the SqlReservationsRepository class, which is part of the production code - not a Test Double.

That class implements the IReservationsRepository interface. The point I was trying to make is that the CreateAndReadRoundTrip test already exercises a particular subset of the contract of the interface. Thus, if one replaces one implementation of the interface with another implementation, according to the Liskov Substitution Principle (LSP) the test should still pass.

This is true for FakeDatabase. While the behaviour is different (it doesn't persist data), it still fulfils the contract. Dynamic mocks, on the other hand, don't automatically follow the LSP. Unless one is careful and explicit, dynamic mocks tend to weaken postconditions. For example, a dynamic mock doesn't automatically return the added reservation when you call ReadReservation.

This is an essential flaw of dynamic mock objects that is independent of where you use them. My article already describes how a fairly innocuous change in the production code will cause a dynamic mock to break the test.

I no longer inject dependencies into domain models, since doing so makes the domain model impure. Even if I did, however, I'd still have the same problem with dynamic mocks breaking encapsulation.

Refactoring a saga from the State pattern to the State monad

A slightly less unrealistic example in C#.

This article is one of the examples that I promised in the earlier article The State pattern and the State monad. That article examines the relationship between the State design pattern and the State monad. It's deliberately abstract, so one or more examples are in order.

In the previous example you saw how to refactor Design Patterns' TCP connection example. That example is, unfortunately, hardly illuminating due to its nature, so a second example is warranted.

This second example shows how to refactor a stateful asynchronous message handler from the State pattern to the State monad.

Shipping policy #

Instead of inventing an example from scratch, I decided to use an NServiceBus saga tutorial as a foundation. Read on even if you don't know NServiceBus. You don't have to know anything about NServiceBus in order to follow along. I just thought that I'd embed the example code in a context that actually executes and does something, instead of faking it with a bunch of unit tests. Hopefully this will help make the example a bit more realistic and relatable.

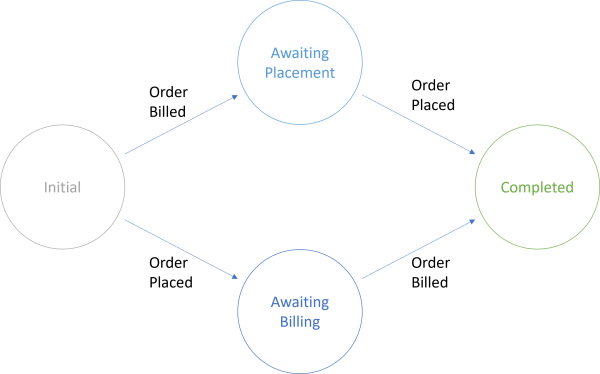

The example is a simple demo of asynchronous message handling. In a web store shipping department, you should only ship an item once you've received the order and a billing confirmation. When working with asynchronous messaging, you can't, however, rely on message ordering, so perhaps the OrderBilled message arrives before the OrderPlaced message, and sometimes it's the other way around.

Only when you've received both messages may you ship the item.
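To make the rule concrete before looking at the NServiceBus code, here is a minimal, dependency-free sketch of it. The `ShippingEvent` enum and the `ShippingRule` class are hypothetical names invented for this illustration; they aren't part of the tutorial or of NServiceBus.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, dependency-free model of the shipping rule; not NServiceBus code.
public enum ShippingEvent { OrderPlaced, OrderBilled }

public static class ShippingRule
{
    // Returns true once both kinds of event have been observed, in any order.
    public static bool MayShip(IEnumerable<ShippingEvent> received)
    {
        var placed = false;
        var billed = false;
        foreach (var e in received)
        {
            if (e == ShippingEvent.OrderPlaced) placed = true;
            if (e == ShippingEvent.OrderBilled) billed = true;
        }
        return placed && billed;
    }
}
```

The order of the events doesn't influence the result, which is the property that the rest of the example has to preserve.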

It's a simple workflow, and you don't really need the State pattern. So much is clear from the sample code implementation:

    public class ShippingPolicy : Saga<ShippingPolicyData>,
        IAmStartedByMessages<OrderBilled>,
        IAmStartedByMessages<OrderPlaced>
    {
        static ILog log = LogManager.GetLogger<ShippingPolicy>();

        protected override void ConfigureHowToFindSaga(SagaPropertyMapper<ShippingPolicyData> mapper)
        {
            mapper.MapSaga(sagaData => sagaData.OrderId)
                .ToMessage<OrderPlaced>(message => message.OrderId)
                .ToMessage<OrderBilled>(message => message.OrderId);
        }

        public Task Handle(OrderPlaced message, IMessageHandlerContext context)
        {
            log.Info($"OrderPlaced message received.");
            Data.IsOrderPlaced = true;
            return ProcessOrder(context);
        }

        public Task Handle(OrderBilled message, IMessageHandlerContext context)
        {
            log.Info($"OrderBilled message received.");
            Data.IsOrderBilled = true;
            return ProcessOrder(context);
        }

        private async Task ProcessOrder(IMessageHandlerContext context)
        {
            if (Data.IsOrderPlaced && Data.IsOrderBilled)
            {
                await context.SendLocal(new ShipOrder() { OrderId = Data.OrderId });
                MarkAsComplete();
            }
        }
    }

I don't expect you to be familiar with the NServiceBus API, so don't worry about the base class, the interfaces, or the ConfigureHowToFindSaga method. What you need to know is that this class handles two types of messages: OrderPlaced and OrderBilled. The base class and the framework handle message correlation, hydration and dehydration, and so on.

For the purposes of this demo, all you need to know about the context object is that it enables you to send and publish messages. The code sample uses context.SendLocal to send a new ShipOrder Command.

Messages arrive asynchronously and conceptually with long wait times between them. You can't just rely on in-memory object state because a ShippingPolicy instance may receive one message and then risk that the server it's running on shuts down before the next message arrives. The NServiceBus framework handles message correlation and hydration and dehydration of state data. The latter is modelled by the ShippingPolicyData class:

    public class ShippingPolicyData : ContainSagaData
    {
        public string OrderId { get; set; }
        public bool IsOrderPlaced { get; set; }
        public bool IsOrderBilled { get; set; }
    }

Notice that the above sample code inspects and manipulates the Data property defined by the Saga<ShippingPolicyData> base class.

When the ShippingPolicy methods are called by the NServiceBus framework, the Data is automatically populated. When you modify the Data, the state data is automatically persisted when the message handler shuts down to wait for the next message.

Characterisation tests #

While you can draw an explicit state diagram like the one above, the sample code doesn't explicitly model the various states as objects. Instead, it relies on reading and writing two Boolean values.

There's nothing wrong with this implementation. It's the simplest thing that could possibly work, so why make it more complicated?

In this article, I am going to make it more complicated. First, I'm going to refactor the above sample code to use the State design pattern, and then I'm going to refactor that code to use the State monad. From a perspective of maintainability, this isn't warranted, but on the other hand, I hope it's educational. The sample code is just complex enough to showcase the structures of the State pattern and the State monad, yet simple enough that the implementation logic doesn't get in the way.

Simplicity can be deceiving, however, and no refactoring is without risk.

"to refactor, the essential precondition is [...] solid tests"

I found it safest to first add a few Characterisation Tests to make sure I didn't introduce any errors as I changed the code. It did catch a few copy-paste goofs that I made, so adding tests turned out to be a good idea.

Testing NServiceBus message handlers isn't too hard. All the tests I wrote look similar, so one should be enough to give you an idea.

    [Theory]
    [InlineData("1337")]
    [InlineData("baz")]
    public async Task OrderPlacedAndBilled(string orderId)
    {
        var sut = new ShippingPolicy
        {
            Data = new ShippingPolicyData { OrderId = orderId }
        };
        var ctx = new TestableMessageHandlerContext();

        await sut.Handle(new OrderPlaced { OrderId = orderId }, ctx);
        await sut.Handle(new OrderBilled { OrderId = orderId }, ctx);

        Assert.True(sut.Completed);
        var msg = Assert.Single(ctx.SentMessages.Containing<ShipOrder>());
        Assert.Equal(orderId, msg.Message.OrderId);
    }

The tests use xUnit.net 2.4.2. When I downloaded the NServiceBus saga sample code it targeted .NET Framework 4.8, and I didn't bother to change the version.

While the NServiceBus framework will automatically hydrate and populate Data, in a unit test you have to remember to explicitly populate it. The TestableMessageHandlerContext class is a Test Spy that is part of the NServiceBus testing API.
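If the term Test Spy is new to you, the following hand-written sketch may help. It only illustrates the general idea and says nothing about how TestableMessageHandlerContext is implemented. Both `IMessageSender` and `SpyMessageSender` are hypothetical types made up for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction for illustration only; the real
// IMessageHandlerContext in NServiceBus is much richer.
public interface IMessageSender
{
    void SendLocal(object message);
}

// A hand-written Test Spy: it does no real work, but records every
// message so that a test can inspect what was sent afterwards.
public sealed class SpyMessageSender : IMessageSender
{
    private readonly List<object> sentMessages = new List<object>();

    public IReadOnlyList<object> SentMessages => sentMessages;

    public void SendLocal(object message)
    {
        sentMessages.Add(message);
    }
}
```

A test would pass the spy to the code being exercised and, once that code has run, assert against SentMessages, much like the test above asserts against ctx.SentMessages.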

You'd think I was paid by Particular Software to write this article, but I'm not. All this is really just the introduction. You're excused if you've forgotten the topic of this article, but my goal is to show a State pattern example. Only now can we begin in earnest.

State pattern implementation #

Refactoring to the State pattern, I chose to let the ShippingPolicy class fill the role of the pattern's Context. Instead of a base class with virtual methods, I used an interface to define the State object, as that's more idiomatic in C#:

    public interface IShippingState
    {
        Task OrderPlaced(OrderPlaced message, IMessageHandlerContext context, ShippingPolicy policy);

        Task OrderBilled(OrderBilled message, IMessageHandlerContext context, ShippingPolicy policy);
    }

The State pattern's description only shows examples where the State methods take a single argument: the Context. In this case, that's the ShippingPolicy, passed as the policy parameter. Careful! There's also a parameter called context! That's the NServiceBus context, and it's an artefact of the original example. The two other parameters, message and context, are run-time values passed on from the ShippingPolicy's Handle methods:

    public IShippingState State { get; internal set; }

    public async Task Handle(OrderPlaced message, IMessageHandlerContext context)
    {
        log.Info($"OrderPlaced message received.");
        Hydrate();
        await State.OrderPlaced(message, context, this);
        Dehydrate();
    }

    public async Task Handle(OrderBilled message, IMessageHandlerContext context)
    {
        log.Info($"OrderBilled message received.");
        Hydrate();
        await State.OrderBilled(message, context, this);
        Dehydrate();
    }

The Hydrate method isn't part of the State pattern, but finds an appropriate state based on Data:

    private void Hydrate()
    {
        if (!Data.IsOrderPlaced && !Data.IsOrderBilled)
            State = InitialShippingState.Instance;
        else if (Data.IsOrderPlaced && !Data.IsOrderBilled)
            State = AwaitingBillingState.Instance;
        else if (!Data.IsOrderPlaced && Data.IsOrderBilled)
            State = AwaitingPlacementState.Instance;
        else
            State = CompletedShippingState.Instance;
    }

In more recent versions of C# you'd be able to use more succinct pattern matching, but since this code base is on .NET Framework 4.8 I'm constrained to C# 7.3, and this is as good as I cared to make it. It's not important to the topic of the State pattern, but I'm showing it in case you were wondering. It's typical that you need to translate between data that exists in the 'external world' and your object-oriented, polymorphic code, since at the boundaries, applications aren't object-oriented.
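As a rough sketch of what that more succinct pattern matching might look like on C# 8 or later, the same four-way decision can be written as a switch expression over a tuple. To keep the example self-contained, it returns a hypothetical `ShippingStateKind` enum instead of the State Singletons, and it takes the two flags as parameters instead of reading Data. Neither the enum nor the `HydrationSketch` class exists in the actual code base.

```csharp
using System;

// Hypothetical stand-in for the four State objects; not part of the code base.
public enum ShippingStateKind
{
    Initial,
    AwaitingBilling,
    AwaitingPlacement,
    Completed
}

public static class HydrationSketch
{
    // Requires C# 8 or later: a switch expression over a tuple of the two flags.
    public static ShippingStateKind Hydrate(bool isOrderPlaced, bool isOrderBilled) =>
        (isOrderPlaced, isOrderBilled) switch
        {
            (false, false) => ShippingStateKind.Initial,
            (true, false) => ShippingStateKind.AwaitingBilling,
            (false, true) => ShippingStateKind.AwaitingPlacement,
            (true, true) => ShippingStateKind.Completed
        };
}
```

Each arm corresponds to one branch of the if/else chain above, so the two versions should select the same state for any combination of flags.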

Likewise, the Dehydrate method translates the other way:

    private void Dehydrate()
    {
        if (State is AwaitingBillingState)
        {
            Data.IsOrderPlaced = true;
            Data.IsOrderBilled = false;
            return;
        }

        if (State is AwaitingPlacementState)
        {
            Data.IsOrderPlaced = false;
            Data.IsOrderBilled = true;
            return;
        }

        if (State is CompletedShippingState)
        {
            Data.IsOrderPlaced = true;
            Data.IsOrderBilled = true;
            return;
        }

        Data.IsOrderPlaced = false;
        Data.IsOrderBilled = false;
    }
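One way to convince yourself that the two translations agree is to view them as a pair of functions that should round-trip. The following sketch does that with hypothetical stand-ins: a `SagaState` enum replaces the State Singletons, and the `StateTranslation` class replaces the two private methods. It stays within C# 7.3.

```csharp
using System;

// Hypothetical stand-in for the four State Singletons; not the real classes.
public enum SagaState { Initial, AwaitingBilling, AwaitingPlacement, Completed }

public static class StateTranslation
{
    // Mirrors Hydrate: the two persisted flags select one of four states.
    public static SagaState FromFlags(bool isOrderPlaced, bool isOrderBilled)
    {
        if (!isOrderPlaced && !isOrderBilled) return SagaState.Initial;
        if (isOrderPlaced && !isOrderBilled) return SagaState.AwaitingBilling;
        if (!isOrderPlaced && isOrderBilled) return SagaState.AwaitingPlacement;
        return SagaState.Completed;
    }

    // Mirrors Dehydrate: each state maps back to the two flags,
    // with the initial state as the fall-through case.
    public static (bool IsOrderPlaced, bool IsOrderBilled) ToFlags(SagaState state)
    {
        switch (state)
        {
            case SagaState.AwaitingBilling: return (true, false);
            case SagaState.AwaitingPlacement: return (false, true);
            case SagaState.Completed: return (true, true);
            default: return (false, false);
        }
    }
}
```

Translating any of the four states to flags and back again should yield the state you started with, which is a property you could pin down with a small parametrised test.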

In any case, Hydrate and Dehydrate are distractions. The important part is that the ShippingPolicy (the State Context) now delegates execution to its State, which performs the actual work and updates the State.

Initial state #

The first time the saga runs, both Data.IsOrderPlaced and Data.IsOrderBilled are false, which means that the State is InitialShippingState:

    public sealed class InitialShippingState : IShippingState
    {
        public readonly static InitialShippingState Instance =
            new InitialShippingState();

        private InitialShippingState()
        {
        }

        public Task OrderPlaced(
            OrderPlaced message,
            IMessageHandlerContext context,
            ShippingPolicy policy)
        {
            policy.State = AwaitingBillingState.Instance;
            return Task.CompletedTask;
        }

        public Task OrderBilled(
            OrderBilled message,
            IMessageHandlerContext context,
            ShippingPolicy policy)
        {
            policy.State = AwaitingPlacementState.Instance;
            return Task.CompletedTask;
        }
    }

As the above state transition diagram indicates, the only thing each of the methods does is transition to the next appropriate state: AwaitingBillingState if the first event was OrderPlaced, and AwaitingPlacementState if it was OrderBilled.

"State objects are often Singletons"

Like in the previous example I've made all the State objects Singletons. It's not that important, but since they are all stateless, we might as well. At least, it's in the spirit of the book.

Awaiting billing #

AwaitingBillingState is another IShippingState implementation:

    public sealed class AwaitingBillingState : IShippingState
    {
        public readonly static IShippingState Instance =
            new AwaitingBillingState();

        private AwaitingBillingState()
        {
        }

        public Task OrderPlaced(
            OrderPlaced message,
            IMessageHandlerContext context,
            ShippingPolicy policy)
        {
            return Task.CompletedTask;
        }

        public async Task OrderBilled(
            OrderBilled message,
            IMessageHandlerContext context,
            ShippingPolicy policy)
        {
            await context.SendLocal(
                new ShipOrder() { OrderId = policy.Data.OrderId });

            policy.Complete();

            policy.State = CompletedShippingState.Instance;
        }
    }