ploeh blog danish software design

Three data architectures for the server

A comparison, for educational purposes.

Use the right tool for the job. How often have you encountered that phrase when discussing software architecture?

There's nothing wrong with the sentiment per se, but it's almost devoid of meaning. It doesn't pass the 'not test'. Try to negate it and imagine if anyone would seriously hold that belief: Don't use the right tool for the job, said no-one ever.

Even so, the underlying idea is that there are better and worse ways to solve problems. In software architecture too. It follows that you should choose the better solution.

How to do that requires skill and experience. When planning a good software architecture, an important consideration is how it'll handle future requirements. This seems to indicate that an architect should be able to predict the future in order to pick the best architecture. Which is, in general, not possible. Predicting the future is not the topic of this article.

There is, however, a more practical issue related to the notion of using the right tool for the job. One that we can address.

Choice #

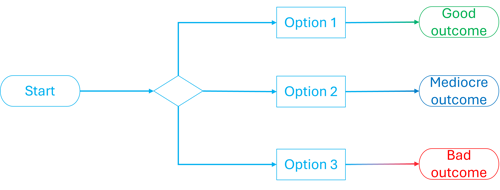

In order to choose the better solution, you need to be aware of alternatives. You can't choose if there's nothing to choose from. This seems obvious, but a flowchart may drive home the point in an even stronger fashion.

On the other hand, if you have options, you're now in a position to choose.

In order to make a decision, you must be able to identify alternatives. This is hardly earth-shattering, but perhaps a bit abstract. To make it concrete, in this article, I'll look at a particular example.

Default data architecture #

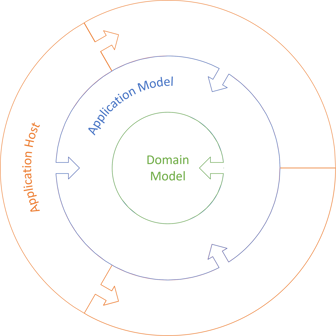

Many applications need some sort of persistent storage. Particularly when it comes to (relational) database-based systems, I've seen more than one organization defaulting to a single data architecture: A presentation layer with View Models, a business logic layer with Domain Models, and a data access layer with ORM objects. A few decades ago, you'd typically see that model illustrated with horizontal layers. This is no longer en vogue. Today, most organizations that I consult with will tell me that they've decided on Ports and Adapters. Even so, if you do it right, it's the same architecture.

Reusing a diagram from a recent article, we may draw it like this:

The architect or senior developer who made that decision is obviously aware of some of the lore in the industry. He or she can often name that data architecture as either Ports and Adapters, Hexagonal Architecture, Clean Architecture, or, more rarely, Onion Architecture.

I still get the impression that this way of arranging code was chosen by default, without much deliberation. I see it so often that it strikes me as a 'default architecture'. Are architects aware of alternatives? Can they compare the benefits and drawbacks of each alternative?

Three alternatives #

As an example, I'll explore three alternative data architectures, one of them being Ports and Adapters. My goal with this is only to raise awareness. Since I rarely (if ever) see my customers use anything other than Ports and Adapters, I think some readers may benefit from seeing some alternatives.

I'll show three ways to organize data with code, but that doesn't imply that these are the only three options. At the very least, some hybrid combinations are also possible. It's also possible that a fourth or fifth alternative exists, and I'm just not aware of it.

In three articles, you'll see each data architecture explored in more detail.

- Using Ports and Adapters to persist restaurant table configurations

- Using a Shared Data Model to persist restaurant table configurations

- Using only a Domain Model to persist restaurant table configurations

As the titles suggest, all three examples will attempt to address the same problem: How to persist restaurant table configuration for a restaurant. The scenario is the same as already outlined in the article Serialization with and without Reflection, and the example code base also attempts to follow the external data format of those articles.

Data formats #

In JSON, a table may be represented like this:

{

"singleTable": {

"capacity": 16,

"minimalReservation": 10

}

}

Or like this:

{ "communalTable": { "capacity": 10 } }

But I'll also explore what happens if you need to support multiple external formats, such as XML. Generally speaking, a given XML specification may lean towards favouring a verbose style based on elements, or a terser style based on attributes. An example of the former could be:

<communal-table> <capacity>12</capacity> </communal-table>

or

<single-table> <capacity>4</capacity> <minimal-reservation>3</minimal-reservation> </single-table>

while examples of the latter style include

<communal-table capacity="12" />

and

<single-table capacity="4" minimal-reservation="3" />

As it turns out, only one of the three data architectures is flexible enough to fully address such requirements.

Comparisons #

A REST API is the kind of application where data representation flexibility is most likely to be an issue. Thus, that only one of the three alternative architectures is able to exhibit enough expressive power in that dimension doesn't disqualify the other two. Each come with their own benefits and drawbacks.

| Ports and Adapters | Shared Data Model | Domain Model only | |

| Advantages |

|

|

|

| Disadvantages |

|

|

|

I'll discuss each alternative's benefits and drawbacks in their individual articles.

An important point of all this is that none of these articles are meant to be prescriptive. While I do have favourites, my biases are shaped by the kind of work I typically do. In other contexts, another alternative may prevail.

Example code #

As usual, example code is in C#. Of the three languages in which I'm most proficient (the other two being F# and Haskell), this is the most easily digestible for a larger audience.

All three alternatives are written with ASP.NET 8.0, and it's unavoidable that there will be some framework-specific details. In Code That Fits in Your Head, I made it an explicit point that while the examples in the book are in C#, the book (and the code in it) should be understandable by developers who normally use Java, C++, TypeScript, or similar C-based languages.

The book is, for that reason, light on .NET-specific details. Instead, I published an article that collects all the interesting .NET things I ran into while writing the book.

Not so here. The three articles cover enough ASP.NET particulars that readers who don't care about that framework are encouraged to skim-read.

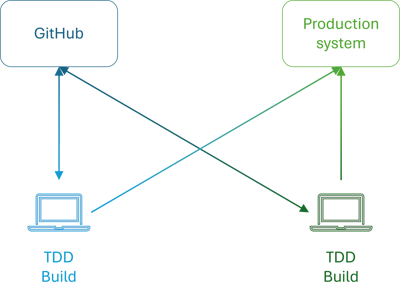

I've developed the three examples as three branches of the same Git repository. The code is available upon request against a small support donation of 10 USD (or more). If you're one of my regular supporters, you have my gratitude and can get the code without further donation. Send me an email in both cases.

Conclusion #

There's more than one way to organize a code base to deal with data. Depending on context, one may be a better choice than another. Thus, it pays to be aware of alternatives.

In the remaining articles in this series, you'll see three examples of how to deal with persistent data from a database. In order to establish a baseline, the first covers the well-known Ports and Adapters architecture.

Next: Using Ports and Adapters to persist restaurant table configurations.

The end of trust?

Software development in a globalized, hostile world.

Imagine that you're perusing the thriller section in an airport book store and come across a book with the following back cover blurb:

Programmers are dying.

Holly-Ann Kerr works as a data scientist for an NGO that fights workplace discrimination. While scrubbing input, she discovers an unusual pattern in the data. Some employees seem to have an unusually high fatal accident rate. Programmers are dying in traffic accidents, falling on stairs, defect electrical wiring, smoking in bed. They work for a variety of companies. Some for Big Tech, others for specialized component vendors, some for IT-related NGOs, others again for utility companies. The deaths seem to have nothing in common, until H-A uncovers a disturbing pattern.

All victims had recently started in a new position. And all were of Iranian descent.

Is a racist killer on the loose? But if so, why is he only targeting new hires? And why only software developers?

When H-A shares her discovery with the wrong people, she soon discovers that she'll be the next victim.

Okay, I'm not a professional editor, so this could probably do with a bit of polish. Does it sound like an exiting piece of fiction, though?

I'm going to spoil the plot, since the book doesn't exist anyway.

An international plot #

(Apologies to Iranian readers. I have nothing against Iranians, but find the regime despicable. In any case, nothing in the following hinges on the ICC. You can replace it with another adversarial intelligence agency that you don't like, including, but not limited to RGB, FSB, or a clandestine Chinese intelligence organization. You could probably even swap the roles and make CIA, MI5, or Mossad be the bad guys, if your loyalties lie elsewhere.)

In the story, it turns out that clandestine Iranian special operations are attempting to recruit moles in software organizations that constitute the supply chain of Western digital infrastructure.

Intelligence bureaus and software organizations that directly develop sensitive software tend to have good security measures. Planting a mole in such an organization is difficult. The entire supply chain of software dependencies, on the other hand, is much more vulnerable. If you can get an employee to install a backdoor in left-pad, chances are that you may attain remote execution capabilities on an ostensibly secure system.

In my hypothetical thriller, the Iranians kill those software developers that they fail to recruit. After all, one can't run a clandestine operation if people notify the police that they've been approached by a foreign power.

Long game #

Does that plot sound far-fetched?

I admit that I did turn to 11 some plot elements. This is, after all, supposed to be a thriller.

The story is, however, 'loosely based on real events'. Earlier this year, a Microsoft developer revealed a backdoor that someone had intentionally planted in xz Utils. That version of the software was close to being merged into Debian and Red Hat Linux distributions. It would have enabled an attacker to execute arbitrary code on an infected machine.

The attack was singularly sophisticated. It also looks as though it was initiated years ago by one or more persons who contributed real, useful work to an open-source project, apparently (in hindsight) with the sole intention of gaining the trust of the rest of the community.

This is such a long game that it reeks of an adversarial state actor. The linked article speculates on which foreign power may be behind the attack. No, not the Iranians, after all.

If you think about it, it's an entirely rational gambit for a foreign intelligence agency to make. It's not that the NSA hasn't already tried something comparable. If anything, the xz hack mostly seems far-fetched because it's so unnecessarily sophisticated.

Usually, the most effective hacking techniques utilize human trust or gullibility. Why spend enormous effort developing sophisticated buffer overrun exploits if you can get a (perhaps unwitting) insider to run arbitrary code for you?

It'd be much cheaper, and much more reliable, to recruit moles on the inside of software companies, and get them to add the backdoors you need. It doesn't necessary have to be new hires, but perhaps (I'm speculating) it's easier to recruit people before they've developed any loyalty to their new team mates.

The soft underbelly #

Which software organizations are the most promising targets? If it were me, I'd particularly try to go after various component vendors. One category may be companies that produce RAD tools such as grid GUIs, but also service providers that offer free SDKs to, say, send email, generate invoices, send SMS, charge credit cards, etc.

I'm not implying that any such company has ill intent, but since such software run on many machines, it's a juicy target if you can sneak a backdoor into one.

Why not open-source software (OSS)? Many OSS libraries run on even more machines, so wouldn't that be an even more attractive target for an adversary? Yes, but on the other hand, most popular open-source code is also scrutinized by many independent agents, so it's harder to sneak in a backdoor. As the attempted xz hack demonstrates, even a year-long sophisticated attack is at risk of being discovered.

Doesn't commercial or closed-source code receive the same level of scrutiny?

In my experience, not always. Of course, some development organizations use proper shared-code-ownership techniques like code reviews or pair programming, but others rely on siloed solo development. Programmers just check in code that no-one else ever looks at.

In such an organization, imagine how easy it'd be for a mole to add a backdoor to a widely-distributed library. He or she wouldn't even have to resort to sophisticated ways to obscure the backdoor, because no colleague would be likely to look at the code. Particularly not if you bury it in seven levels of nested for loops and call the class MonitorManager or similar. As long as the reusable library ships as compiled code, it's unlikely that customers will discover the backdoor before its too late.

Trust #

Last year I published an article on trust in software development. The point of that piece wasn't that you should suspect your colleagues of ill intent, but rather that you can trust neither yourself nor your co-workers for the simple reason that people make mistakes.

Since then, I've been doing some work in the digital security space, and I've been forced to think about concerns like supply-chain attacks. The implications are, unfortunately, that you can't automatically trust that your colleague has benign intentions.

This, obviously, will vary with context. If you're only writing a small web site for your HR department to use, it's hard to imagine how an adversarial state actor could take advantage of a backdoor in your code. If so, it's unlikely that anyone will go to the trouble of planting a mole in your organization.

On the other hand, if you're writing any kind of reusable library or framework, you just might be an interesting target. If so, you can no longer entirely trust your team mates.

As a Dane, that bothers me deeply. Denmark, along with the other Nordic countries, exhibit the highest levels of inter-societal trust in the world. I was raised to trust strangers, and so far, it's worked well enough for me. A business transaction in Denmark is often just a short email exchange. It's a great benefit to the business environment, and the economy in general, that we don't have to waste a lot of resources filling out formulas, contracts, agreements, etc. Trust is grease that makes society run smoother.

Even so, Scandinavians aren't naive. We don't believe that we can trust everyone. To a large degree, we rely on a lot of subtle social cues to assess a given situation. Some people shouldn't be trusted, and we're able to identify those situations, too.

What remains is that insisting that you can trust your colleague, just because he or she is your colleague, would be descending into teleology. I'm not a proponent of wishful thinking if good arguments suggest the contrary.

Shared code ownership #

Perhaps you shouldn't trust your colleagues. How does that impact software development?

The good news is that this is yet another argument to practice the beneficial practices of shared code ownership. Crucially, what this should entail is not just that everyone is allowed to edit any line of code, but rather that all team members take responsibility for the entire code base. No-one should be allowed to write code in splendid isolation.

There are several ways to address this concern. I often phrase it as follows: There should be at least two pair of eyes on every line of code before a merge to master.

As I describe in Code That Fits in Your Head, you can achieve that goal with pair programming, ensemble programming, or code reviews (including agile pull request reviews). That's a broad enough palette that it should be possible for every developer in every organization to find a modus vivendi that fits any personality and context.

Just looking at each others' code could significantly raise the bar for a would-be mole to add a backdoor to the code base. As an added benefit, it might also raise the general code quality.

What this does suggest to me, however, is that a too simplistic notion of running on trunk may be dangerous. Letting everyone commit to master and trusting that everyone means well no longer strikes me as a good idea (again, given the context, and all that).

Or, if you do, you should consider having some sort of systematic posterior post mortem review process. I've read of organizations that do that, but specific sources escape me at the moment. With Git, however, it's absolutely within the realms of the possible to make a diff of all change since the last ex-post review, and then go through those changes.

Conclusion #

The world is changed. I feel it in the OWASP top 10. I sense it in the shifting geopolitical climate. I smell it on the code I review.

Much that once was, is lost. The dream of a global computer network with boundless trust is no more. There are countries whose interests no longer align with ours. Who pay full-time salaries to people whose job it is to wage 'cyber warfare' against us. We can't rule out that parts of such campaigns include planting moles in our midsts. Moles whose task it is to weaken the foundations of our digital infrastructure.

In that light, should you always trust your colleagues?

Despite the depressing thought that I probably shouldn't, I'm likely to bounce back to my usual Danish most-people-are-to-be-trusted attitude tomorrow. On the other hand, I'll still insist that more than one person is involved with every line of code. Not only because every other person may be a foreign agent, but mostly, still, because humans are fallible, and two brains think better than one.

Should interfaces be asynchronous?

Async and await are notorious for being contagious. Must all interfaces be Task-based, just in case?

I recently came across this question on Mastodon:

"To async or not to async?

"How would you define a library interface for a service that probably will be implemented with an in memory procedure - let's say returning a mapped value to a key you registered programmatically - and a user of your API might want to implement a decorator that needs a 'long running task' - for example you want to log a msg into your db or load additional mapping from a file?

"Would you define the interface to return a Task<string> or just a string?"

While seemingly a simple question, it's both fundamental and turns out to have deeper implications than you may at first realize.

Interpretation #

Before I proceed, I'll make my interpretation of the question more concrete. This is just how I interpret the question, so doesn't necessarily reflect the original poster's views.

The post itself doesn't explicitly mention a particular language, and since several languages now have async and await features, the question may be of more general interest that a question constrained to a single language. On the other hand, in order to have something concrete to discuss, it'll be useful with some real code examples. From perusing the discussion surrounding the original post, I get the impression that the language in question may be C#. That suits me well, since it's one of the languages with which I'm most familiar, and is also a language where programmers of other C-based languages should still be able to follow along.

My interpretation of the implementation, then, is this:

public sealed class NameMap { private readonly Dictionary<Guid, string> knownIds = new() { { new Guid("4778CA3D-FB1B-4665-AAC1-6649CEFA4F05"), "Bob" }, { new Guid("8D3B9093-7D43-4DD2-B317-DCEE4C72D845"), "Alice" } }; public string GetName(Guid guid) { return knownIds.TryGetValue(guid, out var name) ? name : "Trudy"; } }

Nothing fancy, but, as Fandermill writes in a follow-up post:

"Used examples that first came into mind, but it could be anything really."

The point, as I understand it, is that the intended implementation doesn't require asynchrony. A Decorator, on the other hand, may.

Should we, then, declare an interface like the following?

public interface INameMap { Task<string> GetName(Guid guid); }

If we do, the NameMap class can't automatically implement that interface because the return types of the two GetName methods don't match. What are the options?

Conform #

While the following may not be the 'best' answer, let's get the obvious solution out of the way first. Let the implementation conform to the interface:

public sealed class NameMap : INameMap { private readonly Dictionary<Guid, string> knownIds = new() { { new Guid("4778CA3D-FB1B-4665-AAC1-6649CEFA4F05"), "Bob" }, { new Guid("8D3B9093-7D43-4DD2-B317-DCEE4C72D845"), "Alice" } }; public Task<string> GetName(Guid guid) { return Task.FromResult( knownIds.TryGetValue(guid, out var name) ? name : "Trudy"); } }

This variation of the NameMap class conforms to the interface by making the GetName method look asynchronous.

We may even keep the synchronous implementation around as a public method if some client code might need it:

public sealed class NameMap : INameMap { private readonly Dictionary<Guid, string> knownIds = new() { { new Guid("4778CA3D-FB1B-4665-AAC1-6649CEFA4F05"), "Bob" }, { new Guid("8D3B9093-7D43-4DD2-B317-DCEE4C72D845"), "Alice" } }; public Task<string> GetName(Guid guid) { return Task.FromResult(GetNameSync(guid)); } public string GetNameSync(Guid guid) { return knownIds.TryGetValue(guid, out var name) ? name : "Trudy"; } }

Since C# doesn't support return-type-based overloading, we need to distinguish these two methods by giving them different names. In C# it might be more idiomatic to name the asynchronous method GetNameAsync and the synchronous method just GetName, but for reasons that would be too much of a digression now, I've never much liked that naming convention. In any case, I'm not going to go in this direction for much longer, so it hardly matters how we name these two methods.

Kinds of interfaces #

Another digression is, however, quite important. Before we can look at some more code, I'm afraid that we have to perform a bit of practical ontology, as it were. It starts with the question: Why do we even need interfaces?

I should also make clear, as a digression within a digression, that by 'interface' in this context, I'm really interested in any kind of mechanism that enables you to achieve polymorphism. In languages like C# or Java, we may in fact avail ourselves of the interface keyword, as in the above INameMap example, but we may equally well use a base class or perhaps just what C# calls a delegate. In other languages, we may use function or action types, or even function pointers.

Regardless of specific language constructs, there are, as far as I can tell, two kinds of interfaces:

- Interfaces that enable variability or extensibility in behaviour.

- Interfaces that mostly or exclusively exist to support automated testing.

While there may be some overlap between these two kinds, in my experience, the intersection between the two tends to be surprisingly small. Interfaces tend to mostly belong to one of those two categories.

Strategies and higher-order functions #

In design-patterns parlance, examples of the first kind are Builder, State, Chain of Responsibility, Template Method, and perhaps most starkly represented by the Strategy pattern. A Strategy is an encapsulated piece of behaviour that you pass around as a single 'thing' (an object).

And granted, you could also use a Strategy to access a database or make a web-service call, but that's not how the pattern was originally described. We'll return to that use case in the next section.

Rather, the first kind of interface exists to enable extensibility or variability in algorithms. Typical examples (from Design Patterns) include page layout, user interface component rendering, building a maze, finding the most appropriate help topic for a given application context, and so on. If we wish to relate this kind of interface to the SOLID principles, it mostly exists to support the Open-closed principle.

A good heuristics for identifying such interfaces is to consider the Reused Abstractions Principle (Jason Gorman, 2010, I'd link to it, but the page has fallen off the internet. Use your favourite web archive to read it.). If your code base contains multiple production-ready implementations of the same interface, you're reusing the interface, most likely to vary the behaviour of a general-purpose data structure.

And before the functional-programming (FP) crowd becomes too smug: FP uses this kind of interface all the time. In the FP jargon, however, we rather talk about higher-order functions and the interfaces we use to modify behaviour are typically modelled as functions and passed as lambda expressions. So when you write Cata((_, xs) => xs.Sum(), _ => 1) (as one does), you might as well just have passed a Visitor implementation to an Accept method.

This hints at a more quantifiable distinction: If the interface models something that's intended to be a pure function, it'd typically be part of a higher-order API in FP, while we in object-oriented design (once again) lack the terminology to distinguish these interfaces from the other kind.

These days, in C# I mostly use these kinds of interfaces for the Visitor pattern.

Seams #

The other kind of interface exists to afford automated testing. In Working Effectively with Legacy Code, Michael Feathers calls such interfaces Seams. Modern object-oriented code bases often use Dependency Injection (DI) to control which Strategies are in use in a given context. The production system may use an object that communicates with a relational database, while an automated test environment might replace that with a Test Double.

Yes, I wrote Strategies. As I suggested above, a Strategy is really a replaceable object in its purest form. When you use DI you may call all those interfaces IUserRepository, ICommandHandler, IEmailGateway, and so on, but they're really all Strategies.

Contrary to the first kind of interface, you typically only find a single production implementation of each of these interfaces. If you find more that one, the rest are usually Decorators (one that logs, one that caches, one that works as a Circuit Breaker, etc.). All other implementations will be defined in the test code as dynamic mocks or Fakes.

Code bases that rely heavily on DI in order to support testing rub many people the wrong way. In 2014 David Heinemeier Hansson published a serious criticism of such test-induced damage. For the record, I agree with the criticism, but not with the conclusion. While I still practice test-driven development, I only define interfaces for true architectural dependencies. So, yes, my code bases may have an IReservationsRepository or IEmailGateway, but no ICommandHandler or IUserManager.

The bottom line, though, is that some interfaces exist to support testing. If there's a better way to make inherently non-deterministic systems behave deterministically in a test context, I've yet to discover it.

(As an aside, it's worth looking into tests that adopt non-deterministic behaviour as a driving principle, or at least an unavoidable state of affairs. Property-based testing is one such approach, but I also found the article When I'm done, I don't clean up by Arialdo Martini interesting. You may also want to refer to my article Waiting to happen for a discussion of how to make tests independent of system time.)

Where to define interfaces #

The reason the above distinction is important is that it fundamentally determines where interfaces should be defined. In short, the first kind of interface is part of an object model's API, and should be defined together with that API. The second kind, on the other hand, is part of a particular application's architecture, and should be defined by the client code that talks to the interface.

As an example of the first kind, consider this recent example, where the IPriorityEditor<T> interface is part of the PriorityCollection<T> API. You must ship the interface together with the class, because the Edit method takes an interface implementation as an argument. It's how client code interacts with the API.

Another example is this Table class that comes with an ITableVisitor<T> interface. In both cases, we'd expect interface implementations to be deterministic. These interfaces don't exist to support automated testing, but rather to afford a flexible programming model.

For the sake of argument, imagine that you package such APIs in reusable libraries that you publish via a package manager. In that case, it's obvious that the interface is as much part of the package as the class.

Contrast this with the other kind of interface, as described in the article Decomposing CTFiYH's sample code base or showcased in the article An example of state-based testing in C#. In the latter example, the interfaces IUserReader and IUserRepository are not part of any pre-packaged library. Rather, they are defined by the application code to support application-specific needs.

This may be even more evident if you contemplate the diagram in Decomposing CTFiYH's sample code base. Interfaces like IPostOffice and IReservationsRepository only exist to support the application. Following the Dependency Inversion Principle

"clients [...] own the abstract interfaces"

In these code bases, only the Controllers (or rather the tests that exercise them) need these interfaces, so the Controllers get to define them.

Should it be asynchronous, then? #

Okay, so should INameMap.GetName return string or Task<string>, then?

Hopefully, at this point, it should be clear that the answer depends on what kind of interface it is.

If it's the first kind, the return type should support the requirements of the API. If the object model doesn't need the return type to be asynchronous, it shouldn't be.

If it's the second kind of interface, the application code decides what it needs, and defines the interface accordingly.

In neither case, however, is it the concrete class' responsibility to second-guess what client code might need.

But client code may need the method to be asynchronous. What's the harm of returning Task<string>, just in case?

The problem, as you may well be aware, is that the asynchronous programming model is contagious. Once you've made an API asynchronous, you can't easily make it synchronous, whereas if you have a synchronous API, you can easily make it asynchronous. This follows from Postel's law, in this case: Be conservative with what you send.

Library API #

Imagine, for the sake of argument, that the NameMap class is defined in a reusable library, wrapped in a package and imported into your code base via a package manager (NuGet, Maven, pip, NPM, Hackage, RubyGems, etc.).

Clearly it shouldn't implement any interface in order to 'support unit testing', since such interfaces should be defined by application code.

It could implement one or more 'extensibility' interfaces, if such interfaces are part of the wider API offered by the library. In the case of the NameMap class, we don't really know if that's the case. To complete this part of the argument, then, I'd just leave it as shown in the first code example, shown above. It doesn't need to implement any interface, and GetName can just return string.

Domain Model #

What if, instead of an external library, the NameMap class is part of an application's Domain Model?

In that case, you could define application-level interfaces as part of the Domain Model. In fact, most people do. Even so, I'd recommend that you don't, at least if you're aiming for a Functional Core, Imperative Shell architecture, a functional architecture, or even a Ports and Adapters or, if you will, Clean Architecture. The interfaces that exist only to support testing are application concerns, so keep them out of the Domain Model and instead define them in the Application Model.

You don't have to follow my advice. If you want to define interfaces in the Domain Model, I can't stop you. But what if, as I recommend, you define application-specific interfaces in the Application Model? If you do that, your NameMap Domain Model can't implement your INameMap interface, because the dependencies point the other way, and most languages will not allow circular dependencies.

In that case, what do you do if, as the original toot suggested, you need to Decorate the GetName method with some asynchronous behaviour?

You can always introduce an Adapter:

public sealed class NameMapAdapter : INameMap { private readonly NameMap imp; public NameMapAdapter(NameMap imp) { this.imp = imp; } public Task<string> GetName(Guid guid) { return Task.FromResult(imp.GetName(guid)); } }

Now any NameMap object can look like an INameMap. This is exactly the kind of problem that the Adapter pattern addresses.

But, you say, that's too much trouble! I don't want to have to maintain two classes that are almost identical.

I understand the concern, and it may even be appropriate. Maybe you're right. As usual, I don't really intend this article to be prescriptive. Rather, I'm offering ideas for your consideration, and you can choose to adopt them or ignore them as it best fits your context.

When it comes to whether or not an Adapter is an unwarranted extra complication, I'll return to that topic later in this article.

Application Model #

The final architectural option is when the concrete NameMap class is part of the Application Model, where you'd also define the application-specific INameMap interface. In that case, we must assume that the NameMap class implements some application-specific concern. If you want it to implement an interface so that you can wrap it in a Decorator, then do that. This means that the GetName method must conform to the interface, and if that means that it must be asynchronous, then so be it.

As Kent Beck wrote in a Facebook article that used to be accessible without a Facebook account (but isn't any longer):

"Things that change at the same rate belong together. Things that change at different rates belong apart."

If the concrete NameMap class and the INameMap interface are both part of the application model, it's not unreasonable to guess that they may change together. (Do be mindful of Shotgun Surgery, though. If you expect the interface and the concrete class to frequently change, then perhaps another design might be more appropriate.)

Easier Adapters #

Before concluding this article, let's revisit the topic of introducing an Adapter for the sole purpose of 'architectural purity'. Should you really go to such lengths only to 'do it right'? You decide, but

You can only be pragmatic if you know how to be dogmatic.

I'm presenting a dogmatic solution for your consideration, so that you know what it might look like. Would I follow my own 'dogmatic' advice? Yes, I usually do, but then, I wouldn't log the return value of a pure function, so I wouldn't introduce an interface for that purpose, at least. To be fair to Fandermill, he or she also wrote: "or load additional mapping from a file", which could be an appropriate motivation for introducing an interface. I'd probably go with an Adapter in that case.

Whether or not an Adapter is an unwarranted complication depends, however, on language specifics. In high-ceremony languages like C#, Java, or C++, adding an Adapter involves at least one new file, and dozens of lines of code.

Consider, on the other hand, a low-ceremony language like Haskell. The corresponding getName function might close over a statically defined map and have the type getName :: UUID -> String.

How do you adapt such a pure function to an API that returns IO (which is roughly comparable to task-based programming)? Trivially:

getNameM :: Monad m => UUID -> m String getNameM = return . getName

For didactic purposes I have here shown the 'Adapter' as an explicit function, but in idiomatic Haskell I'd consider this below the Fairbairn threshold; I'd usually just inline the composition return . getName if I needed to adapt the getName function to the Kleisli category.

You can do the same in F#, where the composition would be getName >> Task.FromResult. F# compositions usually go in the (for Westerners) intuitive left-to-right directions, whereas Haskell compositions follow the mathematical right-to-left convention.

The point, however, is that there's nothing conceptually complicated about an Adapter. Unfortunately, however, some languages require substantial ceremony to implement them.

Conclusion #

Should an API return a Task-based (asynchronous) value 'just in case'? In general: No.

You can't predict all possible use cases, so don't make an API more complicated than it has to be. If you need to implement an application-specific interface, use the Adapter design pattern.

A possible exception to this rule is if the entire API (the concrete implementation and the interface) only exists to support a specific application. If the interface and its concrete implementation are both part of the Application Model, you may as well skip the Adapter step and consider the concrete implementation as its own Adapter.

An immutable priority collection

With examples in C# and F#.

This article is part of a series about encapsulation and immutability. After two attempts at an object-oriented, mutable implementation, I now turn toward immutability. As already suggested in the introductory article, immutability makes it easier to maintain invariants.

In the introductory article, I described the example problem in more details, but in short, the exercise is to develop a class that holds a collection of prioritized items, with the invariant that the priorities must always sum to 100. It should be impossible to leave the object in a state where that's not true. It's quite an illuminating exercise, so if you have the time, you should try it for yourself before reading on.

Initialization #

Once again, I begin by figuring out how to initialize the object, and how to model it. Since it's a kind of collection, and since I now plan to keep it immutable, it seems natural to implement IReadOnlyCollection<T>.

In this, the third attempt, I'll reintroduce Prioritized<T>, with one important difference. It's now an immutable record:

public sealed record Prioritized<T>(T Item, byte Priority);

If you're not on a version of C# that supports records (which is also trivially true if you're not using C# at all), you can always define an immutable class by hand. It just requires more boilerplate code.

Prioritized<T> is going to be the T in the IReadOnlyCollection<T> implementation:

public sealed class PriorityCollection<T> : IReadOnlyCollection<Prioritized<T>>

Since an invariant should always hold, it should also hold at initialization, so the PriorityCollection<T> constructor must check that all is as it should be:

private readonly Prioritized<T>[] priorities; public PriorityCollection(params Prioritized<T>[] priorities) { if (priorities.Sum(p => p.Priority) != 100) throw new ArgumentException( "The sum of all priorities must be 100.", nameof(priorities)); this.priorities = priorities; }

The rest of the class is just the IReadOnlyCollection<T> implementation, which just delegates to the priorities field.

That's it, really. That's the API. We're done.

Projection #

But, you may ask, how does one edit such a collection?

(Comic originally by John Muellerleile.)

Humour aside, you don't edit an immutable object, but rather make a new object from a previous one. Most modern languages now come with built-in collection-projection APIs; in .NET, it's called LINQ. Here's an example. You begin with a collection with two items:

var pc = new PriorityCollection<string>( new Prioritized<string>("foo", 60), new Prioritized<string>("bar", 40));

You'd now like to add a third item with priority 20:

var newPriority = new Prioritized<string>("baz", 20);

How should you make room for this new item? One option is to evenly reduce each of the existing priorities:

var reduction = newPriority.Priority / pc.Count; IEnumerable<Prioritized<string>> reduced = pc .Select(p => p with { Priority = (byte)(p.Priority - reduction) });

Notice that while the sum of priorities in reduced no longer sum to 100, it's okay, because reduced isn't a PriorityCollection object. It's just an IEnumerable<Prioritized<string>>.

You can now Append the newPriority to the reduced sequence and repackage that in a PriorityCollection:

var adjusted = new PriorityCollection<string>(reduced.Append(newPriority).ToArray());

Like the original pc object, the adjusted object is valid upon construction, and since its immutable, it'll remain valid.

Edit #

If you think this process of unwrapping and rewrapping seems cumbersome, we can make it a bit more palatable by defining a wrapping Edit function, similar to the one in the previous article:

public PriorityCollection<T> Edit( Func<IReadOnlyCollection<Prioritized<T>>, IEnumerable<Prioritized<T>>> edit) { return new PriorityCollection<T>(edit(this).ToArray()); }

You can now write code equivalent to the above example like this:

var adjusted = pc.Edit(col => { var reduced = col.Select(p => p with { Priority = (byte)(p.Priority - reduction) }); return reduced.Append(newPriority); });

I'm not sure it's much of an improvement, though.

Using the right tool for the job #

While C# over the years has gained some functional-programming features, it's originally an object-oriented language, and working with immutable values may still seem a bit cumbersome. If so, consider using a language natively designed for this style of programming. On .NET, F# is the obvious choice.

First, you define the required types:

type Prioritized<'a> = { Item: 'a; Priority: byte } type PriorityList = private PriorityList of Prioritized<string> list

Notice that PriorityList has a private constructor, so that client code can't just create any value. The type should protect its invariants, since encapsulation is also relevant in functional programming. Since client code can't directly create PriorityList objects, you instead supply a function for that purpose:

module PriorityList = let tryCreate priorities = if priorities |> List.sumBy (_.Priority) = 100uy then Some (PriorityList priorities) else None

That's really it, although you also need a way to work with the data. We supply two alternatives that correspond to the above C#:

let edit f (PriorityList priorities) = f priorities |> tryCreate let toList (PriorityList priorities) = priorities

These functions are also defined on the PriorityList module.

Here's the same adjustment example as shown above in C#:

let pl = [ { Item = "foo"; Priority = 60uy }; { Item = "bar"; Priority = 40uy } ] |> PriorityList.tryCreate let newPriority = { Item = "baz"; Priority = 20uy } let adjusted = pl |> Option.bind (PriorityList.edit (fun l -> l |> List.map (fun p -> { p with Priority = p.Priority - (newPriority.Priority / byte l.Length) }) |> List.append [ newPriority ]))

The entire F# definition is 15 lines of code, including namespace declaration and blank lines.

Conclusion #

With an immutable data structure, you only need to check the invariants upon creation. Invariants therefore become preconditions. Once a value is created in a valid state, it stays valid because it never changes state.

If you're having trouble maintaining invariants in an object-oriented design, try making the object immutable. It's likely to make it easier to attain good encapsulation.

Comments

First, it's a nice series of articles.

I see that nowadays C# has a generic projection, which is a sort of wither in Java parlance. I should be usable instead of having to define the `Edit` one.

A way to make it more palatable would be to have a `tryAddAndRedistrube(Prioritized

But, it's usually not possible to anticipate all the ways the clients wants to add elements to something, so I think I prefer the open-ended way this API lets clients choose.

Thank you for writing. Whether or not a wither works in this case depends on language implementation details. For example, the F# example code doesn't allow copy-and-update expressions because the record constructor is private. This is as it should be, since otherwise, client code would be able to circumvent the encapsulation.

I haven't tried to refactor the C# class to a record, and I don't recall whether C# with expressions respect custom constructors. That's a good exercise for any reader to try out; unfortunately, I don't have time for that at the moment.

As to your other point, it's definitely conceivable that a library developer could add more convenient methods to the PriorityCollection<T> class, including one that uses a simple formula to redistribute existing priorities to make way for the new one. As far as I can tell, though, you'd be able to implement such more convenient APIs as extension methods that are implemented using the basic affordances already on display here. If so, we may consider the constructor and the IReadOnlyCollection<Prioritized<T>> interface as the fundamental API. Everything else, including the Edit method, could build off that.

A mutable priority collection

An encapsulated, albeit overly complicated, implementation.

This is the second in a series of articles about encapsulation and immutability. In the next article, you'll see how immutability makes encapsulation easier, but in order to appreciate that, you should see the alternative. This article, then, shows a working, albeit overly complicated, implementation that does maintain its invariants.

In the introductory article, I described the example problem in more details, but in short, the exercise is to develop a class that holds a collection of prioritized items, with the invariant that the priorities must always sum to 100. It should be impossible to leave the object in a state where that's not true. It's quite an illuminating exercise, so if you have the time, you should try it for yourself before reading on.

Initialization #

As the previous article demonstrated, inheriting directly from a base class seems like a dead end. Once you see the direction that I go in this article, you may argue that it'd be possible to also make that design work with an inherited collection. It may be, but I'm not convinced that it would improve anything. Thus, for this iteration, I decided to eschew inheritance.

On the other hand, we need an API to query the object about its state, and I found that it made sense to implement the IReadOnlyDictionary interface.

As before, invariants are statements that are always true about an object, and that includes a newly initialized object. Thus, the PriorityCollection<T> class should require enough information to safely initialize.

public sealed class PriorityCollection<T> : IReadOnlyDictionary<T, byte> where T : notnull { private readonly Dictionary<T, byte> dict; public PriorityCollection(T initial) { dict = new Dictionary<T, byte> { { initial, 100 } }; } // IReadOnlyDictionary implemented by delegating to dict field... }

Several design decisions are different from the previous article. This design has no Prioritized<T> class. Instead it treats the item (of type T) as a dictionary key, and the priority as the value. The most important motivation for this design decision was that this enables me to avoid the 'leaf node mutation' problem that I demonstrated in the previous article. Notice how, while the general design in this iteration will be object-oriented and mutable, I already take advantage of a bit of immutability to make the design simpler and safer.

Another difference is that you can't initialize a PriorityCollection<T> object with a list. Instead, you only need to tell the constructor what the initial item is. The constructor will then infer that, since this is the only item so far, its priority must be 100. It can't be anything else, because that would violate the invariant. Thus, no assertion is required in the constructor.

Mutation API #

So far, the code only implements the IReadOnlyDictionary API, so we need to add some methods that will enable us to add new items and so on. As a start, we can add methods to add, remove, or update items:

public void Add(T key, byte value) { AssertInvariants(dict.Append(KeyValuePair.Create(key, value))); dict.Add(key, value); } public void Remove(T key) { AssertInvariants(dict.Where(kvp => !kvp.Key.Equals(key))); dict.Remove(key); } public byte this[T key] { get { return dict[key]; } set { var l = dict.ToDictionary(kvp => kvp.Key, kvp => kvp.Value); l[key] = value; AssertInvariants(l); dict[key] = value; } }

I'm not going to show the AssertInvariants helper method yet, since it's going to change anyway.

At this point, the implementation suffers from the same problem as the example in the previous article. While you can add new items, you can only add an item with priority 0. You can only remove items if they have priority 0. And you can only 'update' an item if you set the priority to the same value as it already had.

We need to be able to add new items, change their priorities, and so on. How do we get around the above problem, without breaking the invariant?

Edit mode #

One way out of this conundrum is introduce a kind of 'edit mode'. The idea is to temporarily turn off the maintenance of the invariant for long enough to allow edits.

Af first glance, such an idea seems to go against the very definition of an invariant. After all, an invariant is a statement about the object that is always true. If you allow a client developer to turn off that guarantee, then, clearly, the guarantee is gone. Guarantees only work if you can trust them, and you can't trust them if they can be cancelled.

That idea in itself doesn't work, but if we can somehow encapsulate such an 'edit action' in an isolated scope that either succeeds or fails in its entirety, we may be getting somewhere. It's an idea similar to Unit of Work, although here we're not involving an actual database. Still, an 'edit action' is a kind of in-memory transaction.

For didactic reasons, I'll move toward that design in a series of step, where the intermediate steps fail to maintain the invariant. We'll get there eventually. The first step is to introduce 'edit mode'.

private bool isEditing;

While I could have made that flag public, I found it more natural to wrap access to it in two methods:

public void BeginEdit() { isEditing = true; } public void EndEdit() { isEditing = false; }

This still doesn't accomplishes anything in itself, but the final change in this step is to change the assertion so that it respects the flag:

private void AssertInvariants(IEnumerable<KeyValuePair<T, byte>> candidate) { if (!isEditing && candidate.Sum(kvp => kvp.Value) != 100) throw new InvalidOperationException( "The sum of all values must be 100."); }

Finally, you can add or change priorities, as this little F# example shows:

sut.BeginEdit () sut["foo"] <- 50uy sut["bar"] <- 50uy sut.EndEdit ()

Even if you nominally 'don't read F#', this little example is almost like C# without semicolons. The <- arrow is F#'s mutation or assignment operator, which in C# would be =, and the uy suffix is the F# way of stating that the literal is a byte.

The above example is well-behaved because the final state of the object is valid. The priorities sum to 100. Even so, no code in PriorityCollection<T> actually checks that, so we could trivially leave the object in an invalid state.

Assert invariant at end of edit #

The first step toward remedying that problem is to add a check to the EndEdit method:

public void EndEdit() { isEditing = false; AssertInvariants(dict); }

The class is still not effectively protecting its invariants, because a client developer could forget to call EndEdit, or client code might pass around a collection in edit mode. Other code, receiving such an object as an argument, may not know whether or not it's in edit mode, so again, doesn't know if it can trust it.

We'll return to that problem shortly, but first, there's another, perhaps more pressing issue that we should attend to.

Edit dictionary #

The current implementation directly edits the collection, and even if a client developer remembers to call EndEdit, other code, higher up in the call stack could circumvent the check and leave the object in an invalid state. Not that I expect client developers to be deliberately malicious, but the notion that someone might wrap a method call in a try-catch block seems realistic.

The following F# unit test demonstrates the issue:

[<Fact>] let ``Attempt to circumvent`` () = let sut = PriorityCollection<string> "foo" try sut.BeginEdit () sut["foo"] <- 50uy sut["bar"] <- 48uy sut.EndEdit () with _ -> () 100uy =! sut["foo"] test <@ sut.ContainsKey "bar" |> not @>

Again, let me walk you through it in case you're unfamiliar with F#.

The try-with block works just like C# try-catch blocks. Inside of that try-with block, the test enters edit mode, changes the values in such a way that the sum of them is 98, and then calls EndEdit. While EndEdit throws an exception, those four lines of code are wrapped in a try-with block that suppresses all exceptions.

The test attempts to verify that, since the edit failed, the "foo" value should be 100, and there should be no "bar" value. This turns out not to be the case. The test fails. The edits persist, even though EndEdit throws an exception, because there's no roll-back.

You could probably resolve that defect in various ways, but I chose to address it by introducing two, instead of one, backing dictionaries. One holds the data that always maintains the invariant, and the other is a temporary dictionary for editing.

private Dictionary<T, byte> current; private readonly Dictionary<T, byte> encapsulated; private readonly Dictionary<T, byte> editable; private bool isEditing; public PriorityCollection(T initial) { encapsulated = new Dictionary<T, byte> { { initial, 100 } }; editable = []; current = encapsulated; }

There are two dictionaries: encapsulated holds the always-valid list of priorities, while editable is the dictionary that client code will be editing when in edit mode. Finally, current is either of these: editable when the object is in edit mode, and encapsulated when it's not. Most of the existing code shown so far now uses current, which before was called dict. The important changes are in BeginEdit and EndEdit.

public void BeginEdit() { isEditing = true; editable.Clear(); foreach (var kvp in current) editable.Add(kvp.Key, kvp.Value); current = editable; }

Besides setting the isEditing flag, BeginEdit now copies all data from current to editable, and then sets current to editable. Keep in mind that encapsulated still holds the original, valid values.

Now that I'm writing this, I'm not even sure if this method is re-entrant, in the following sense: What happens if client code calls BeginEdit, makes some changes, and then calls BeginEdit again? It's questions like these that I don't feel intelligent enough to feel safe that I always answer correctly. That's why I like functional programming better. I don't have to think so hard.

Anyway, this will soon become irrelevant, since BeginEdit and EndEdit will eventually become private methods.

The EndEdit method performs the inverse manoeuvre:

public void EndEdit() { isEditing = false; try { AssertInvariants(current); encapsulated.Clear(); foreach (var kvp in current) encapsulated.Add(kvp.Key, kvp.Value); current = encapsulated; } catch { current = encapsulated; throw; } }

It first checks the invariant, and only copies the edited values to the encapsulated dictionary if the invariant still holds. Otherwise, it restores the original encapsulated values and rethrows the exception.

This helps to make the nature of editing 'transactional' in nature, but it doesn't address the issue that the collection is in an invalid state during editing, or that a client developer may forget to call EndEdit.

Edit action #

As the next step towards addressing that problem, we may now introduce a 'wrapper method' for that little object protocol:

public void Edit(Action<PriorityCollection<T>> editAction) { BeginEdit(); editAction(this); EndEdit(); }

As you can see, it just wraps that little call sequence so that you don't have to remember to call BeginEdit and EndEdit. My F# test code comes with this example:

sut.Edit (fun col -> col["bar"] <- 55uy col["baz"] <- 45uy col.Remove "foo" )

The fun col -> part is just F# syntax for a lambda expression. In C#, you'd write it as col =>.

We're close to a solution. What remains is to make BeginEdit and EndEdit private. This means that client code can only edit a PriorityCollection<T> object through the Edit method.

Replace action with interface #

You may complain that this solution isn't properly object-oriented, since it makes use of Action<T> and requires that client code uses lambda expressions.

We can easily fix that.

Instead of the action, you can introduce a Command interface with the same signature:

public interface IPriorityEditor<T> where T : notnull { void EditPriorities(PriorityCollection<T> priorities); }

Next, change the Edit method:

public void Edit(IPriorityEditor<T> editor) { BeginEdit(); editor.EditPriorities(this); EndEdit(); }

Now you have a nice, object-oriented design, with no lambda expressions in sight.

Full code dump #

The final code is complex enough that it's easy to lose track of what it looks like, as I walk through my process. To make it easer, here's the full code for the collection class:

public sealed class PriorityCollection<T> : IReadOnlyDictionary<T, byte> where T : notnull { private Dictionary<T, byte> current; private readonly Dictionary<T, byte> encapsulated; private readonly Dictionary<T, byte> editable; private bool isEditing; public PriorityCollection(T initial) { encapsulated = new Dictionary<T, byte> { { initial, 100 } }; editable = []; current = encapsulated; } public void Add(T key, byte value) { AssertInvariants(current.Append(KeyValuePair.Create(key, value))); current.Add(key, value); } public void Remove(T key) { AssertInvariants(current.Where(kvp => !kvp.Key.Equals(key))); current.Remove(key); } public byte this[T key] { get { return current[key]; } set { var l = current.ToDictionary(kvp => kvp.Key, kvp => kvp.Value); l[key] = value; AssertInvariants(l); current[key] = value; } } public void Edit(IPriorityEditor<T> editor) { BeginEdit(); editor.EditPriorities(this); EndEdit(); } private void BeginEdit() { isEditing = true; editable.Clear(); foreach (var kvp in current) editable.Add(kvp.Key, kvp.Value); current = editable; } private void EndEdit() { isEditing = false; try { AssertInvariants(current); encapsulated.Clear(); foreach (var kvp in current) encapsulated.Add(kvp.Key, kvp.Value); current = encapsulated; } catch { current = encapsulated; throw; } } private void AssertInvariants(IEnumerable<KeyValuePair<T, byte>> candidate) { if (!isEditing && candidate.Sum(kvp => kvp.Value) != 100) throw new InvalidOperationException( "The sum of all values must be 100."); } public IEnumerable<T> Keys { get { return current.Keys; } } public IEnumerable<byte> Values { get { return current.Values; } } public int Count { get { return current.Count; } } public bool ContainsKey(T key) { return current.ContainsKey(key); } public IEnumerator<KeyValuePair<T, byte>> GetEnumerator() { return current.GetEnumerator(); } public bool TryGetValue(T key, [MaybeNullWhen(false)] out byte value) { return current.TryGetValue(key, out value); } IEnumerator IEnumerable.GetEnumerator() { return GetEnumerator(); } }

The IPriorityEditor<T> interface remains as shown above.

Conclusion #

Given how simple the problem is, this solution is surprisingly complicated, and I'm fairly sure that it's not even thread-safe.

At least it does, as far as I can tell, protect the invariant that the sum of priorities must always be exactly 100. Even so, it's just complicated enough that I wouldn't be surprised if a bug is lurking somewhere. It'd be nice if a simpler design existed.

Comments

Where does the notion come that a data structure invariant has to be true at all times? I am fairly certain that it's only required to be true at "quiescent" points of executions. That is, just as the loop invariant is only required to hold before and after each loop step but not inside the loop step, so is the data structure invariant is only required to hold before and after each invocation of its public methods.

This definition actually has an interesting quirk which is absent in the loop invariant: a data structure's method can't, generally speaking, call other public methods of the very same data structure because the invariant might not hold at this particular point of execution! I've been personally bitten by this a couple of times, and I've seen others tripping over this subtle point as well. You yourself notice it when you muse about the re-entrancy of the BeginEdit method.

Now, this particular problem is quite similar to the problem with inner iteration, and can be solved the same way, with the outer editor, as you've done, although I would have probably provided each editor with its own, separate editable dictionary because right now, the editors cannot nest/compose... but that'd complicate implementation even further.

Thank you for writing. As so many other areas of knowledge, the wider field of software development suffers from the problem of overlapping or overloaded terminology. The word invariant is just one of them. In this context, invariant doesn't refer to loop invariants, or any other kind of invariants used in algorithmic analysis.

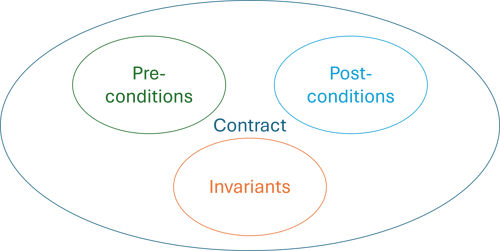

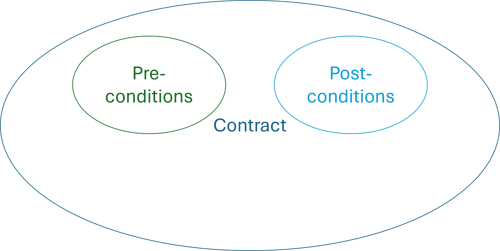

As outlined in the introduction article, when discussing encapsulation, I follow Object-Oriented Software Construction (OOSC). In that seminal work, Bertrand Meyer proposes the notion of design-by-contract, and specifically decomposes a contract into three parts: preconditions, invariants, and postconditions.

Having actually read the book, I'm well aware that it uses Eiffel as an exemplar of the concept. This has led many readers to conflate design-by-contract with Eiffel, and (in yet another logical derailment) conclude that it doesn't apply to, say, Java or C# programming.

It turns out, however, to transfer easily to other languages, and it's a concept with much practical potential.

A major problem with object-oriented design is that most ideas about good design are too 'fluffy' to be of immediate use to most developers. Take the Single Responsibility Principle (SRP) as an example. It's seductively easy to grasp the overall idea, but turns out to be hard to apply. Being able to identify reasons to change requires more programming experience than most people have. Or rather, the SRP is mostly useful to programmers who already have that experience. Being too 'fluffy', it's not a good learning tool.

I've spent quite some time with development organizations and individual programmers eager to learn, but struggling to find useful, concrete design rules. The decomposition of encapsulation into preconditions, invariants, and postconditions works well as a concrete, almost quantifiable heuristic.

Does it encompass everything that encapsulation means? Probably not, but it's by far the most effective heuristic that I've found so far.

Since I'm currently travelling, I don't have my copy of OOSC with me, but as far as I remember, the notion that an invariant should be true at all times originates there.

In any case, if an invariant doesn't always hold, then of what value is it? The whole idea behind encapsulation (as I read Meyer) is that client developers should be able to use 'objects' without having intimate knowledge of their implementation details. The use of contracts proposes to achieve that ideal by decoupling affordances from implementation details by condensing the legal protocol between object and client code into a contract. This means that a client developer, when making programming decisions, should be able to trust that certain guarantees stipulated by a contract always hold. If a client developer can't trust those guarantees, they aren't really guarantees.

"the data structure invariant is only required to hold before and after each invocation of its public methods"

I can see how a literal reading of OOSC may leave one with that impression. One must keep in mind, however, that the book was written in the eighties, at a time when multithreading wasn't much of a concern. (Incidentally, this is an omission that also mars a much later book on API design, the first edition of the .NET Framework Design Guidelines.)

In modern code, concurrent execution is a real possibility, so is at least worth keeping in mind. I'm still most familiar with the .NET ecosystem, and in it, there are plenty of classes that are documented as not being thread-safe. You could say that such a statement is part of the contract, in which case what you wrote is true: The invariant is only required to hold before and after each method invocation.

If, on the other hand, you want to make the code thread-safe, you must be more rigorous than that. Then an invariant must truly always hold.

This is, of course, a design decision one may take. Just don't bother with thread-safety if it's not important.

Still, the overall thrust of this article series is that immutability makes encapsulation much simpler. This is also true when it comes to concurrency. Immutable data structures are automatically thread-safe.

A failed attempt at priority collection with inheritance

An instructive dead end.

This article is part of a short series on encapsulation and immutability. As the introductory article claims, object mutation makes it difficult to maintain invariants. In order to demonstrate the problem, I deliberately set out to do it wrong, and report on the result.

In subsequent articles in this series I will then show one way you can maintain the invariants in the face of mutation, as well as how much easier everything becomes if you choose an immutable design.

For now, however, I'll pretend to be naive and see how far I can get with that.

In the first article, I described the example problem in more details, but in short, the exercise is to develop a class that holds a collection of prioritized items, with the invariant that the priorities must always sum to 100. It should be impossible to leave the object in a state where that's not true. It's quite an illuminating exercise, so if you have the time, you should try it for yourself before reading on.

Initialization #

In object-oriented design it's common to inherit from a base class. Since I'll try to implement a collection of prioritized items, it seems natural to inherit from Collection<T>:

public sealed class PriorityCollection<T> : Collection<Prioritized<T>>

Of course, I also had to define Prioritized<T>:

public sealed class Prioritized<T> { public Prioritized(T item, byte priority) { Item = item; Priority = priority; } public T Item { get; set; } public byte Priority { get; set; } }

Since Prioritized<T> is generic, it can be used to prioritize any kind of object. In the tests I wrote, however, I exclusively used strings.

A priority is a number between 0 and 100, so I chose to represent that with a byte. Not that this strongly protects invariants, because values can still exceed 100, but on the other hand, there's no reason to use a 32-bit integer to model a number between 0 and 100.

Now that I write this text, I realize that I could have added a Guard Clause to the Prioritized<T> constructor to enforce that precondition, but as you can tell, I didn't think of doing that. This omission, however, doesn't change the conclusion, because the problems that we'll run into stems from another source.

In any case, just inheriting from Collection<Prioritized<T>> isn't enough to guarantee the invariant that the sum of priorities must be 100. An invariant must always hold, even for a newly initialized object. Thus, we need something like this ensure that this is the case:

public sealed class PriorityCollection<T> : Collection<Prioritized<T>> { public PriorityCollection(params Prioritized<T>[] priorities) : base(priorities) { AssertSumIsOneHundred(); } private void AssertSumIsOneHundred() { if (this.Sum(p => p.Priority) != 100) throw new InvalidOperationException( "The sum of all priorities must be 100."); } }

So far, there's no real need to have a separate AssertSumIsOneHundred helper method; I could have kept that check in the constructor, and that would have been simpler. I did, however, anticipate that I'd need the helper method in other parts of the code base. As it turned out, I did, but not without having to change it.

Protecting overrides #

The Collection<T> base class offers normal collection methods like Add, Insert, Remove and so on. The default implementation allows client code to make arbitrary changes to the collection, including clearing it. The PriorityCollection<T> class can't allow that, because such edits could easily violate the invariants.

Collection<T> is explicitly designed to be a base class, so it offers various virtual methods that inheritors can override to change the behaviour. In this case, this is necessary.

As it turned out, I quickly realized that I had to change my assertion helper method to check the invariant in various cases:

private static void AssertSumIsOneHundred(IEnumerable<Prioritized<T>> priorities) { if (priorities.Sum(p => p.Priority) != 100) throw new InvalidOperationException( "The sum of all priorities must be 100."); }

By taking the sequence of priorities as an input argument, this enables me to simulate what would happen if I make a change to the actual collection, for example when adding an item to the collection:

protected override void InsertItem(int index, Prioritized<T> item) { AssertSumIsOneHundred(this.Append(item)); base.InsertItem(index, item); }

By using Append, the InsertItem method creates a sequence of values that simulates what the collection would look like if we add the candidate item. The Append function returns a new collection, so this operation doesn't change the actual PriorityCollection<T>. This only happens if we get past the assertion and call InsertItem.

Likewise, I can protect the invariant in the other overrides:

protected override void RemoveItem(int index) { var l = this.ToList(); l.RemoveAt(index); AssertSumIsOneHundred(l); base.RemoveItem(index); } protected override void SetItem(int index, Prioritized<T> item) { var l = this.ToList(); l[index] = item; AssertSumIsOneHundred(l); base.SetItem(index, item); }

I can even use it in the implementation of ClearItems, although that may seem a tad redundant:

protected override void ClearItems() { AssertSumIsOneHundred([]); }

I could also just have thrown an exception directly from this method, since it's never okay to clear the collection. This would violate the invariant, because the sum of an empty collection of priorities is zero.

As far as I recall, the entire API of Collection<T> is (transitively) based on those four virtual methods, so now that I've protected the invariant in all four, the PriorityCollection<T> class maintains the invariant, right?

Not yet. See if you can spot the problem.

There are, in fact, at least two remaining problems. One that we can recover from, and one that is insurmountable with this design. I'll get back to the serious problem later, but see if you can spot it already.

Leaf mutation #

In the introductory article I wrote:

"If the mutation happens on a leaf node in an object graph, the leaf may have to notify its parent, so that the parent can recheck the invariants."

I realize that this may sound abstract, but the current code presents a simple example. What happens if you change the Priority of an item after you've initialized the collection?

Consider the following example. For various reasons, I wrote the examples (that is, the unit tests) for this exercise in F#, but even if you're not an F# developer, you can probably understand what's going on. First, we create a Prioritized<string> object and use it to initialize a PriorityCollection<string> object named sut:

let item = Prioritized<string> ("foo", 40uy) let sut = PriorityCollection<string> (item, Prioritized<string> ("bar", 60uy))

The item has a priority of 40 (the uy suffix is the F# way of stating that the literal is a byte), and the other unnamed value has a priority of 60, so all is good so far; the sum is 100.

Since, however, item is a mutable object, we can now change its Priority:

item.Priority <- 50uy

This changes item.Priority to 50, but since none of the four virtual base class methods of Collection<T> are involved, the sut never notices, the assertion never runs, and the object is now in an invalid state.

That's what I meant when I discussed mutations in leaf nodes. You can think of a collection as a rather flat and boring tree. The collection object itself is the root, and each of the items are leaves, and no further nesting is allowed.

When you edit a leaf, the root isn't automatically aware of such an event. You explicitly have to wire the object graph up so that this happens.

Event propagation #

One possible way to address this issue is to take advantage of .NET's event system. If you're reading along, but you normally write in another language, you can also use the Observer pattern, or even ReactiveX.

We need to have Prioritized<T> raise events, and one option is to let it implement INotifyPropertyChanging:

public sealed class Prioritized<T> : INotifyPropertyChanging

A Prioritized<T> object can now raise its PropertyChanging event before accepting an edit:

public byte Priority { get => priority; set { if (PropertyChanging is { }) PropertyChanging( this, new PriorityChangingEventArgs(value)); priority = value; } }

where PriorityChangingEventArgs is a little helper class that carries the proposed value around:

public class PriorityChangingEventArgs(byte proposal) : PropertyChangingEventArgs(nameof(Priority)) { public byte Proposal { get; } = proposal; }

A PriorityCollection<T> object can now subscribe to that event on each of the values it keeps track of, so that it can protect the invariant against leaf node mutations.

private void Priority_PropertyChanging(object? sender, PropertyChangingEventArgs e) { if (sender is Prioritized<T> p && e is Prioritized<T>.PriorityChangingEventArgs pcea) { var l = this.ToList(); l[l.IndexOf(p)] = new Prioritized<T>(p.Item, pcea.Proposal); AssertSumIsOneHundred(l); } }

Such a solution comes with its own built-in complexity, because the PriorityCollection<T> class must be careful to subscribe to the PropertyChanging event in various different places. A new Prioritized<T> object may be added to the collection during initialization, or via the InsertItem or SetItem methods. Furthermore, the collection should make sure to unsubscribe from the event if an item is removed from the collection.

To be honest, I didn't bother to implement these extra checks, because the point is moot anyway.

Fatal flaw #

The design shown here comes with a fatal flaw. Can you tell what it is?

Since the invariant is that the priorities must always sum to exactly 100, it's impossible to add, remove, or change any items after initialization.

Or, rather, you can add new Prioritized<T> objects as long as their Priority is 0. Any other value breaks the invariant.

Likewise, the only item you can remove is one with a Priority of 0. Again, if you remove an item with any other Priority, you'd be violating the invariant.

A similar situation arises with editing an existing item. While you can change the Priority of an item, you can only 'change' it to the same value. So you can change 0 to 0, 42 to 42, or 100 to 100, but that's it.

But, I can hear you say, I'll only change 60 to 40 because I intend to add a new item with a 20 priority! In the end, the sum will be 100!

Yes, but this design doesn't know that, and you have no way of telling it.

While we may be able to rectify the situation, I consider this design so compromised that I think it better to start afresh with this realization. Thus, I'll abandon this version of PriorityCollection<T> in favour of a fresh start in the next article.

Conclusion #

While I've titled this article "A failed attempt", the actual purpose was to demonstrate how 'aggregate' requirements make it difficult to maintain class invariants.

I've seen many code bases with poor encapsulation. As far as I can tell, a major reason for that is that the usual 'small-scale' object-oriented design techniques like Guard Clauses fall short when an invariant involves the interplay of multiple objects. And in real business logic, that's the rule rather than the exception.

Not all is lost, however. In the next article, I'll develop an alternative object-oriented solution to the priority collection problem.

Comments